Quick human movement identification method oriented to human-computer interaction

A human action recognition and human-computer interaction technology, applied to input/output arrangements for user/computer interaction, character and pattern recognition, computer components, and related fields. It addresses the problem of weak robustness, with the effects of faster action recognition, better quick-control performance, and easier subsequent recognition.

Embodiment

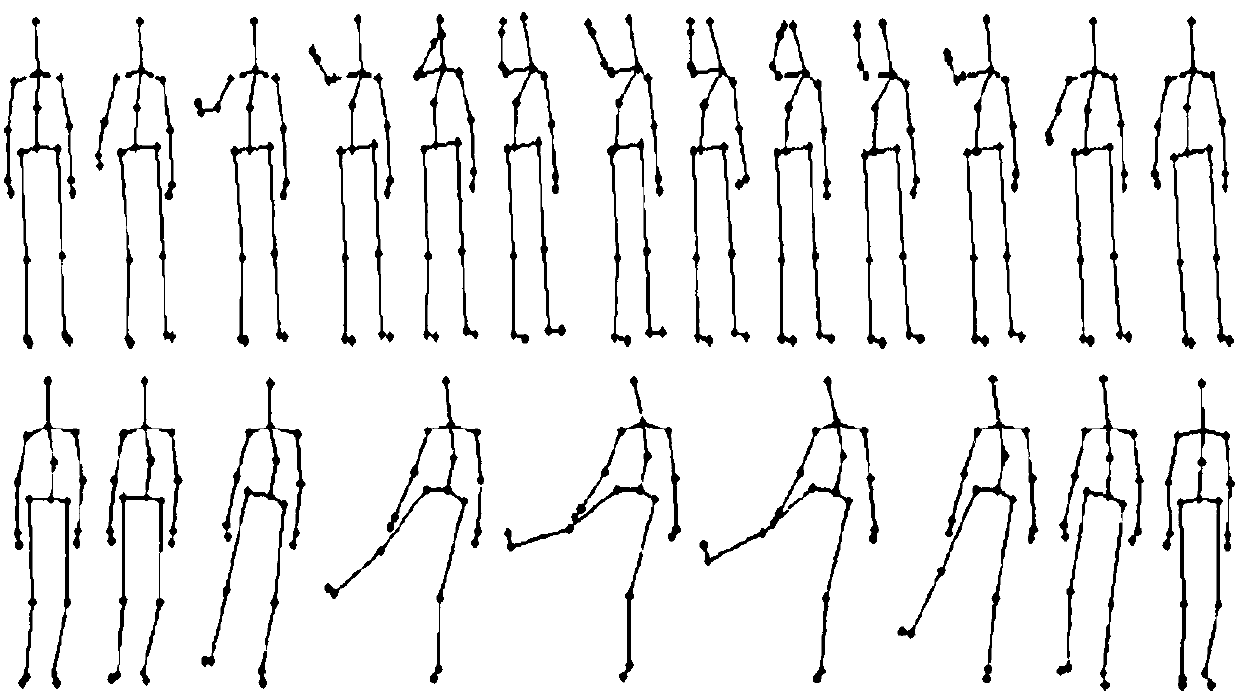

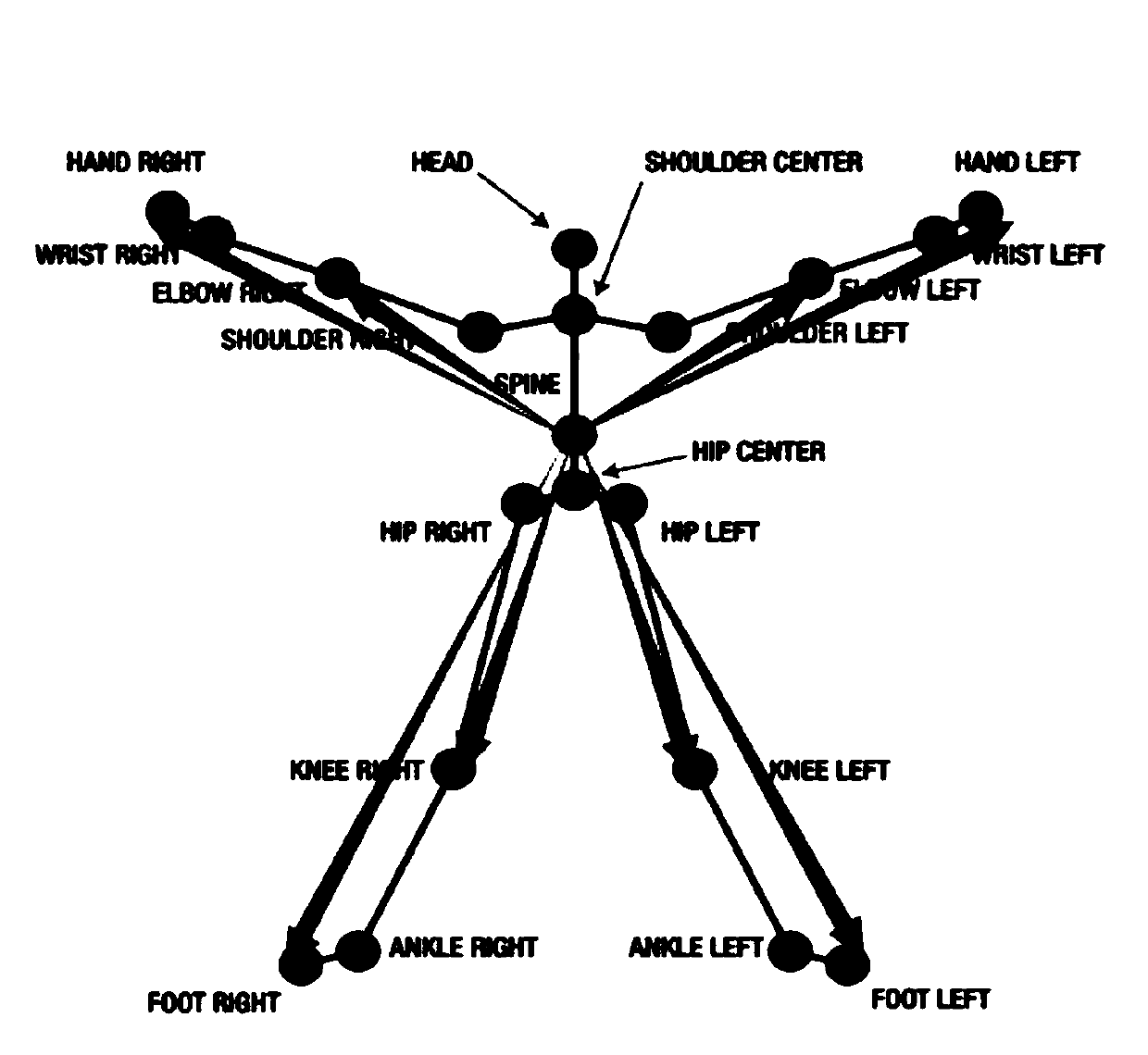

[0070] The present invention uses Microsoft's Kinect 3D depth camera as the action acquisition device. The camera does not depend on ambient light and works even in complete darkness. When collecting actions, the Kinect is placed 1 m above the ground, and the subject stands facing it about 1 to 2 m away, with the body parallel to the camera plane and no obstacle between the subject and the camera. Following the experimental requirements, five people in the laboratory were selected for action recording and testing, and the following three action template libraries were built:

[0071] (1) Define 20 custom actions, select one of the five people to record each of the 20 actions once, and save these recordings as the action templates.

[0072] (2) Define 20 custom actions; each of the five people records each of the 20 actions 10 times, and for each action the average of the resulting 50 recordings is saved as its template.

[0073] (3) Use the second templ...
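Library (2) stores, for each action, the mean of 50 recordings (5 people × 10 repetitions). The following is a minimal Python sketch of that averaging step, not the patent's own implementation. It assumes each recording is a list of skeleton frames, each frame a flat list of joint coordinates, and that all recordings of one action have already been resampled to the same number of frames; the source does not say how length differences are handled. The names `average_template` and `build_library` are hypothetical.

```python
from typing import Dict, List, Sequence

# A frame is a flat list of joint coordinates (e.g. x, y, z for each tracked joint).
Frame = List[float]
Recording = List[Frame]


def average_template(recordings: Sequence[Recording]) -> Recording:
    """Average several recordings of one action, frame by frame and coordinate by coordinate.

    Assumption (not from the source): every recording has already been resampled
    to the same number of frames and uses the same joint layout.
    """
    if not recordings or not recordings[0]:
        raise ValueError("need at least one non-empty recording")
    n_frames = len(recordings[0])
    n_coords = len(recordings[0][0])
    # Reject recordings whose shape differs from the first one.
    for rec in recordings:
        if len(rec) != n_frames or any(len(frame) != n_coords for frame in rec):
            raise ValueError("all recordings must share the same shape")
    count = len(recordings)
    # Element-wise mean across all recordings of the action.
    return [
        [sum(rec[t][c] for rec in recordings) / count for c in range(n_coords)]
        for t in range(n_frames)
    ]


def build_library(recordings_by_action: Dict[str, Sequence[Recording]]) -> Dict[str, Recording]:
    """Hypothetical helper: map each action name to its averaged template."""
    return {action: average_template(recs) for action, recs in recordings_by_action.items()}


if __name__ == "__main__":
    # Two one-frame recordings of a two-coordinate action average to [[1.0, 2.0]].
    print(average_template([[[0.0, 1.0]], [[2.0, 3.0]]]))
```

For library (2), `build_library` would receive 20 action names, each mapped to its 50 recordings. A plain element-wise mean is used here only because the source says "average value"; any temporal alignment performed before averaging is not described in this excerpt.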
