Word sense disambiguation method based on hidden Markov model

A word sense disambiguation technology based on a hidden Markov model, applied in semantic analysis, character and pattern recognition, special data processing applications, etc. It addresses the problems of heavy manpower and material costs, sparse model parameter data, and insufficient support for large numbers of disambiguation tasks, and improves disambiguation accuracy (correct rate).


Embodiment Construction

[0023] Embodiments of the present invention are described in detail below with reference to the flow charts above. The embodiments described here are only examples; equivalent changes made on the basis of the technical essence of the present invention still fall within its scope of protection.

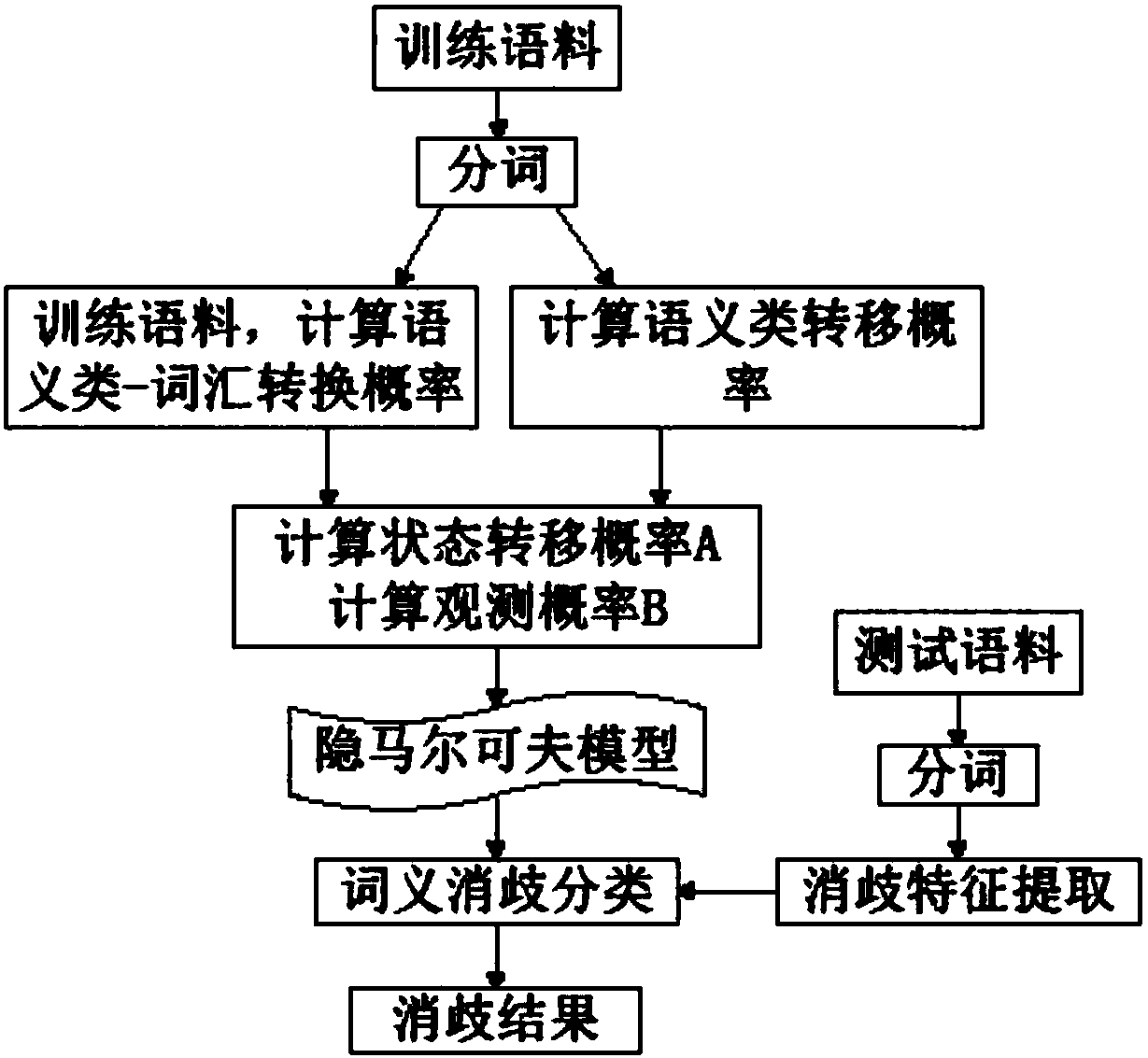

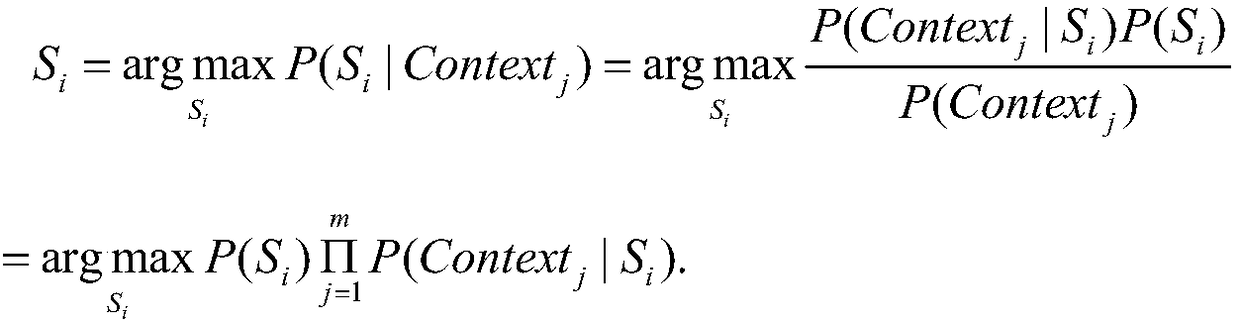

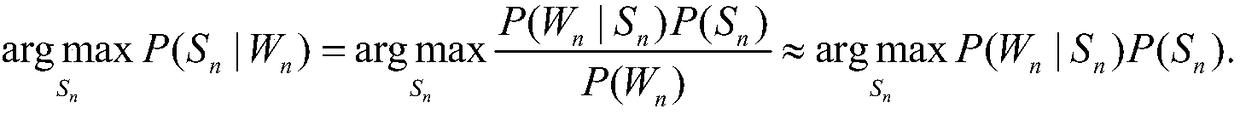

[0024] Solution framework for the prediction problem: given a hidden Markov model $\lambda=(A,B,\pi)$, where $A$ is the transition probability matrix between semantic classes, $\pi$ is the initial probability distribution over semantic classes, and $B$ is the emission probability matrix from semantic classes to words in the vocabulary, and given an observation sequence of words $O=(o_1,o_2,\ldots,o_n)$, find the hidden state sequence $Q=(q_1,q_2,\ldots,q_n)$ that maximizes the conditional probability $P(Q\mid O)$, i.e. $\hat{Q}=\arg\max_{Q} P(Q\mid O)$. This hidden state sequence is the semantic class sequence. The model probabilities are obtained by training on a corpus, and the hidden sequence is generally found with the Viterbi algorithm, i.e. the hidden Markov model prediction problem is solved by dynamic programming.
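The Viterbi decoding described in [0024] can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the dictionary-based model format, the function name `viterbi`, the log-space scoring, and the probability floor used for unseen words are all assumptions. The semantic classes `FIN` and `GEO` and all probabilities in the usage part are hypothetical toy values.

```python
import math
from typing import Dict, List, Sequence, Tuple


def viterbi(
    observations: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
    floor: float = 1e-12,  # assumption: stand-in for unseen (zero) probabilities
) -> Tuple[List[str], float]:
    """Return the most probable semantic-class sequence and its log-probability."""
    if not observations:
        return [], 0.0

    def log(p: float) -> float:
        # Floor zero probabilities so the logarithm is always defined
        return math.log(max(p, floor))

    # delta[t][s]: best log-probability of any path ending in state s at time t
    delta: List[Dict[str, float]] = [{}]
    # backptr[t][s]: predecessor of s on that best path
    backptr: List[Dict[str, str]] = [{}]

    # Initialization: delta_1(s) = log pi_s + log b_s(o_1)
    for s in states:
        delta[0][s] = log(start_p.get(s, 0.0)) + log(emit_p.get(s, {}).get(observations[0], 0.0))

    # Recursion: delta_t(s) = max_r [delta_{t-1}(r) + log a_{rs}] + log b_s(o_t)
    for t in range(1, len(observations)):
        delta.append({})
        backptr.append({})
        for s in states:
            best_prev, best_score = max(
                ((r, delta[t - 1][r] + log(trans_p.get(r, {}).get(s, 0.0))) for r in states),
                key=lambda item: item[1],
            )
            delta[t][s] = best_score + log(emit_p.get(s, {}).get(observations[t], 0.0))
            backptr[t][s] = best_prev

    # Termination: pick the best final state, then follow back-pointers
    last = max(delta[-1], key=delta[-1].get)
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(backptr[t][path[-1]])
    path.reverse()
    return path, delta[-1][last]


# Hypothetical toy model: two semantic classes, three words
STATES = ["FIN", "GEO"]
START_P = {"FIN": 0.5, "GEO": 0.5}
TRANS_P = {"FIN": {"FIN": 0.8, "GEO": 0.2}, "GEO": {"FIN": 0.2, "GEO": 0.8}}
EMIT_P = {
    "FIN": {"deposit": 0.4, "bank": 0.3, "river": 0.01},
    "GEO": {"deposit": 0.01, "bank": 0.3, "river": 0.4},
}

print(viterbi(["river", "bank"], STATES, START_P, TRANS_P, EMIT_P)[0])  # ['GEO', 'GEO']
```

The ambiguous word "bank" receives the class favored by its context word, which is the behavior the prediction step relies on. Scoring in log space avoids floating-point underflow on long sentences; the fixed floor is only a placeholder, since the source does not say how unseen words are handled.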

[0025] Step 1 uses the (Harbin Institute of Technology) artificial semantic annotatio...
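Paragraph [0024] states that the model probabilities are obtained through corpus training, and Step 1 starts from a manually semantically annotated corpus (the rest of that step is truncated here). A minimal sketch of supervised estimation by counting is shown below. The corpus format (sentences as lists of `(word, semantic_class)` pairs), the function name `train_hmm`, and the use of additive (Laplace) smoothing with parameter `alpha` are all assumptions, not details from the source; smoothing is included only because sparse parameter data is one of the problems the abstract names.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Assumed corpus format: each sentence is a list of (word, semantic_class) pairs
Sentence = List[Tuple[str, str]]


def train_hmm(corpus: List[Sentence], alpha: float = 1.0):
    """Estimate (states, pi, A, B) by counting, with additive smoothing."""
    start_counts: Counter = Counter()
    trans_counts: Dict[str, Counter] = defaultdict(Counter)
    emit_counts: Dict[str, Counter] = defaultdict(Counter)
    states, vocab = set(), set()

    for sentence in corpus:
        prev = None
        for word, cls in sentence:
            states.add(cls)
            vocab.add(word)
            emit_counts[cls][word] += 1  # count for b_cls(word)
            if prev is None:
                start_counts[cls] += 1  # count for pi_cls
            else:
                trans_counts[prev][cls] += 1  # count for a_{prev,cls}
            prev = cls

    S, V = sorted(states), sorted(vocab)
    n_sent = sum(start_counts.values())
    trans_tot = {r: sum(trans_counts[r].values()) for r in S}
    emit_tot = {s: sum(emit_counts[s].values()) for s in S}

    # Additive smoothing keeps every probability non-zero and each row summing to 1
    pi = {s: (start_counts[s] + alpha) / (n_sent + alpha * len(S)) for s in S}
    A = {r: {s: (trans_counts[r][s] + alpha) / (trans_tot[r] + alpha * len(S)) for s in S} for r in S}
    B = {s: {w: (emit_counts[s][w] + alpha) / (emit_tot[s] + alpha * len(V)) for w in V} for s in S}
    return S, pi, A, B


# Hypothetical toy annotated corpus
CORPUS = [
    [("river", "GEO"), ("bank", "GEO")],
    [("deposit", "FIN"), ("bank", "FIN")],
]

S, pi, A, B = train_hmm(CORPUS)
print(pi)  # {'FIN': 0.5, 'GEO': 0.5}
```

The resulting `pi`, `A`, and `B` dictionaries correspond to the parameters of $\lambda=(A,B,\pi)$ in [0024] and would then be used for Viterbi decoding.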

