Hadoop load balance task scheduling method based on hybrid metaheuristic algorithm

The method combines a metaheuristic algorithm with task scheduling technology and is applied in computing, resource allocation, program control design, and related fields. It addresses the tendency of heuristic algorithms to settle on local optima, their unstable performance, and their cumbersome solution process, with the effects of overcoming cluster load imbalance, increasing revenue, and optimizing search capability.

Embodiment Construction

[0034] In order to illustrate the present invention more clearly, the present invention will be further described below in conjunction with preferred embodiments and accompanying drawings. Similar parts in the figures are denoted by the same reference numerals. Those skilled in the art should understand that the content specifically described below is illustrative rather than restrictive, and should not limit the protection scope of the present invention.

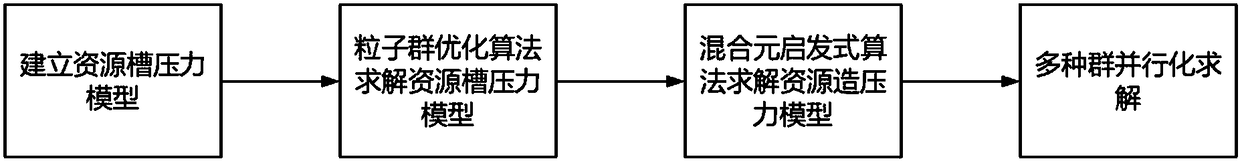

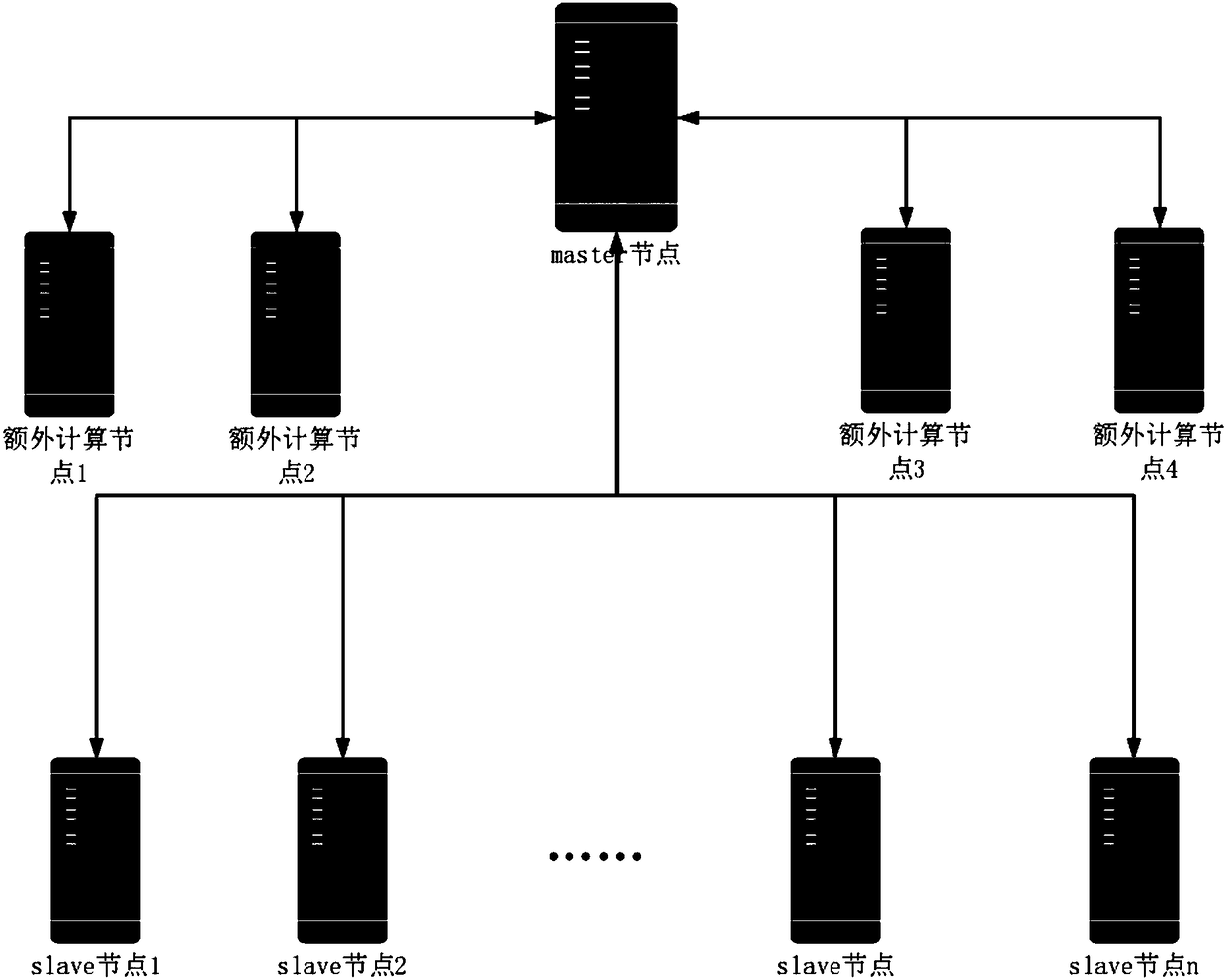

[0035] As shown in Figure 1 and Figure 2, the Hadoop load balancing task scheduling method based on a hybrid metaheuristic algorithm disclosed by the present invention comprises the following steps:

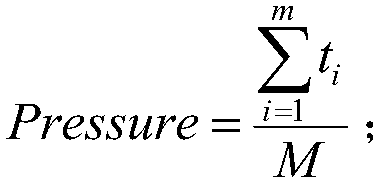

[0036] S1. A resource slot pressure model is established, based on the principle of resource slots, to balance the computing pressure of the tasks processed on each task processing node.

[0037] The main goal of the above resource slot pressure model is to make the computing pressure of the tasks to be ex...
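The text available here does not give the formula of the resource slot pressure model, so the following is only an illustrative sketch of the general idea of balancing per-node computing pressure. Everything in it is an assumption made for illustration: the definition of pressure as total assigned task load divided by slot count, the use of the standard deviation as an imbalance measure, and the names `node_pressure`, `cluster_imbalance`, and `greedy_assign`. The greedy placement is a simple baseline, not the hybrid metaheuristic of the invention.

```python
from statistics import pstdev


def node_pressure(task_loads, slots):
    """Hypothetical pressure: total assigned load divided by the node's slot count."""
    if slots <= 0:
        raise ValueError("a node needs at least one resource slot")
    return sum(task_loads) / slots


def cluster_imbalance(assignment, slots):
    """Population standard deviation of per-node pressure (0.0 means perfectly even)."""
    return pstdev([node_pressure(assignment[n], slots[n]) for n in slots])


def greedy_assign(task_loads, slots):
    """Baseline only: place each task, largest first, on the node whose
    pressure would be lowest after receiving it."""
    assignment = {n: [] for n in slots}
    for load in sorted(task_loads, reverse=True):
        best = min(
            slots,
            key=lambda n: node_pressure(assignment[n] + [load], slots[n]),
        )
        assignment[best].append(load)
    return assignment


if __name__ == "__main__":
    # Hypothetical cluster: node "a" has 2 slots, node "b" has 4 slots.
    slots = {"a": 2, "b": 4}
    plan = greedy_assign([4, 2, 2, 2, 2], slots)
    print(plan, cluster_imbalance(plan, slots))
```

Under these assumptions, a scheduler that searches over assignments could use `cluster_imbalance` as the objective to minimize, with a node holding more slots expected to absorb proportionally more load.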
