142 results about "Parallel programing" patented technology

Parallel programming is a programming technique in which an application's execution flow is broken up into pieces that are executed at the same time (concurrently) by multiple cores, processors, or computers in order to improve performance.

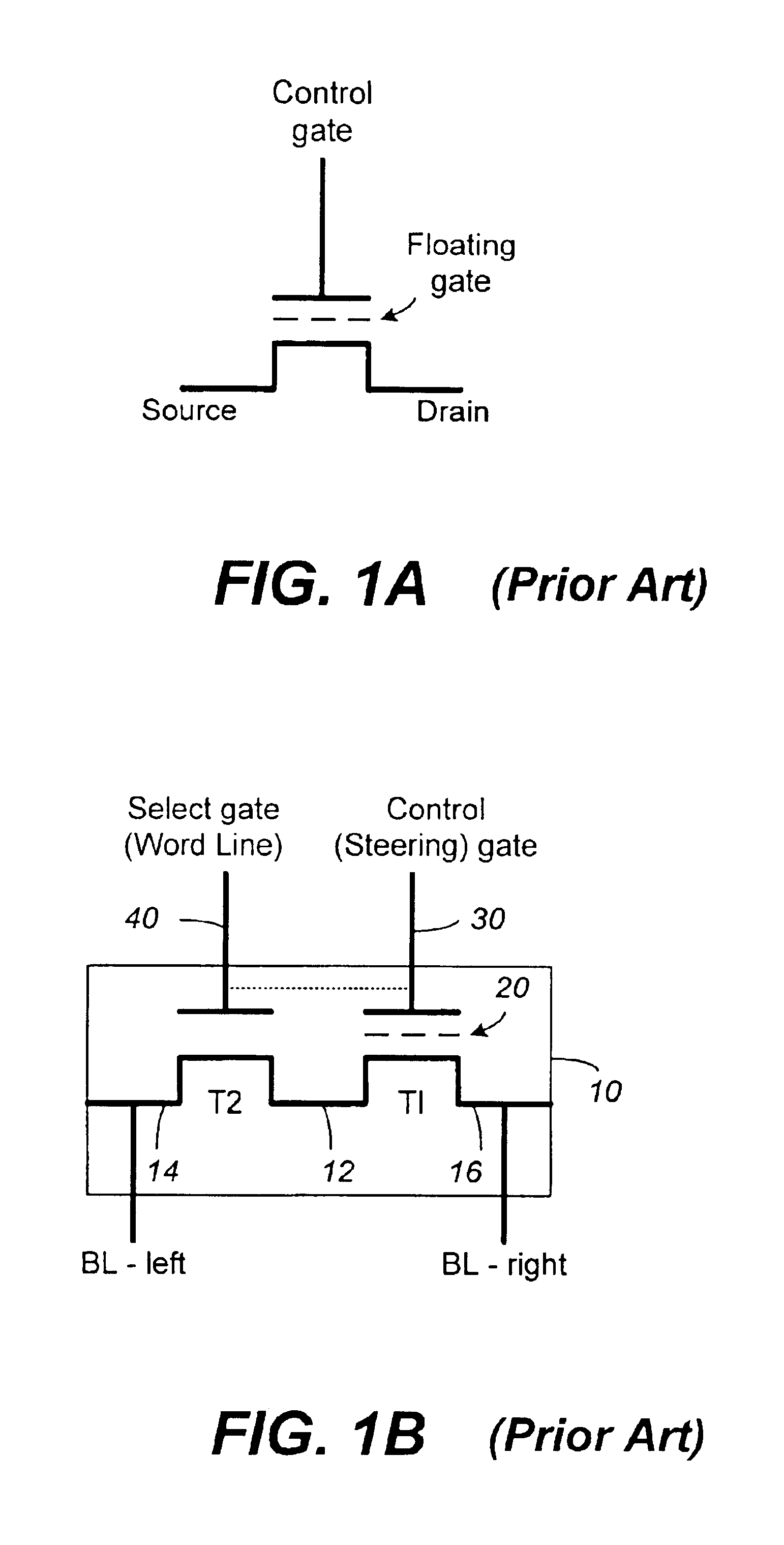

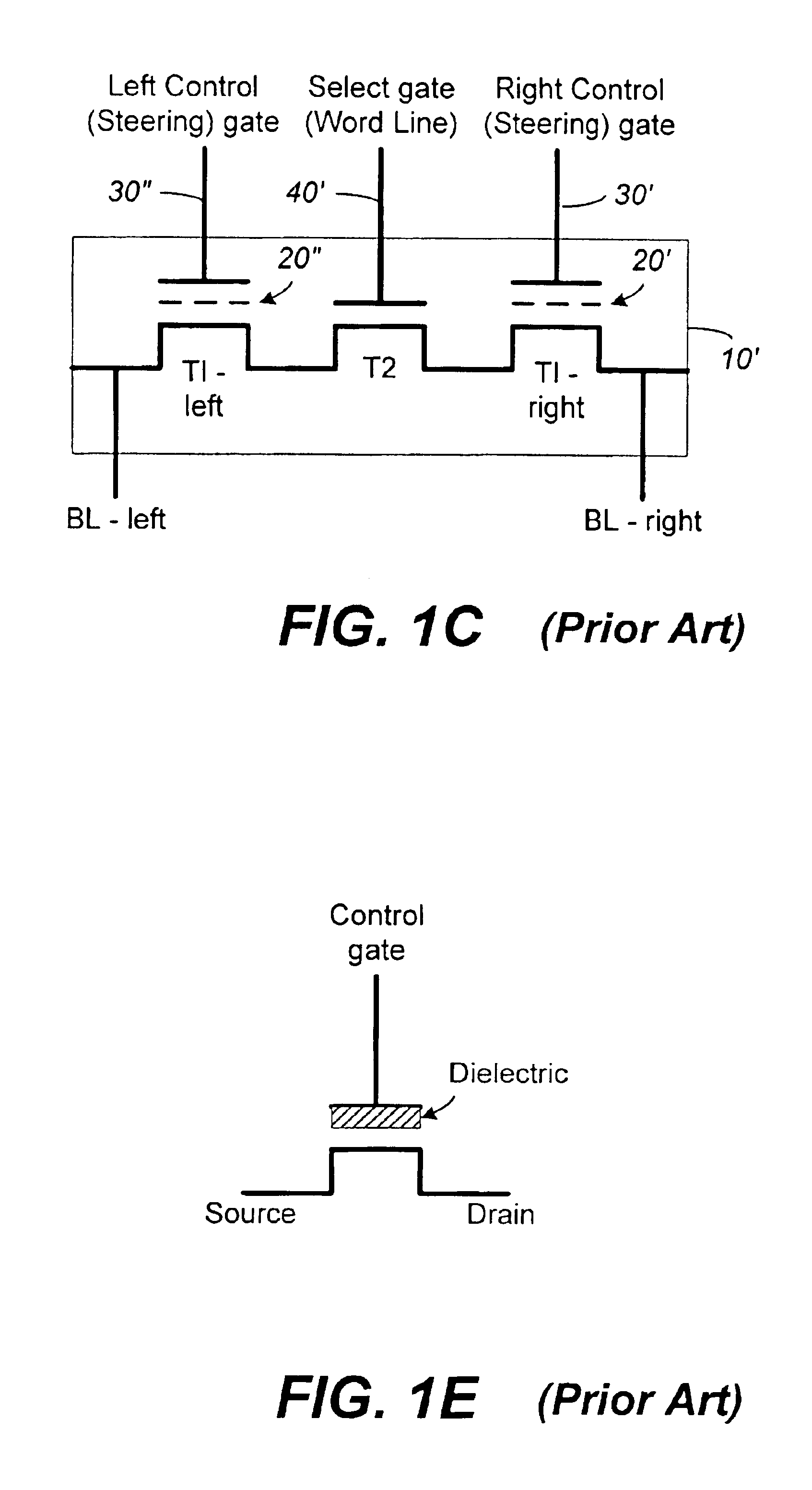

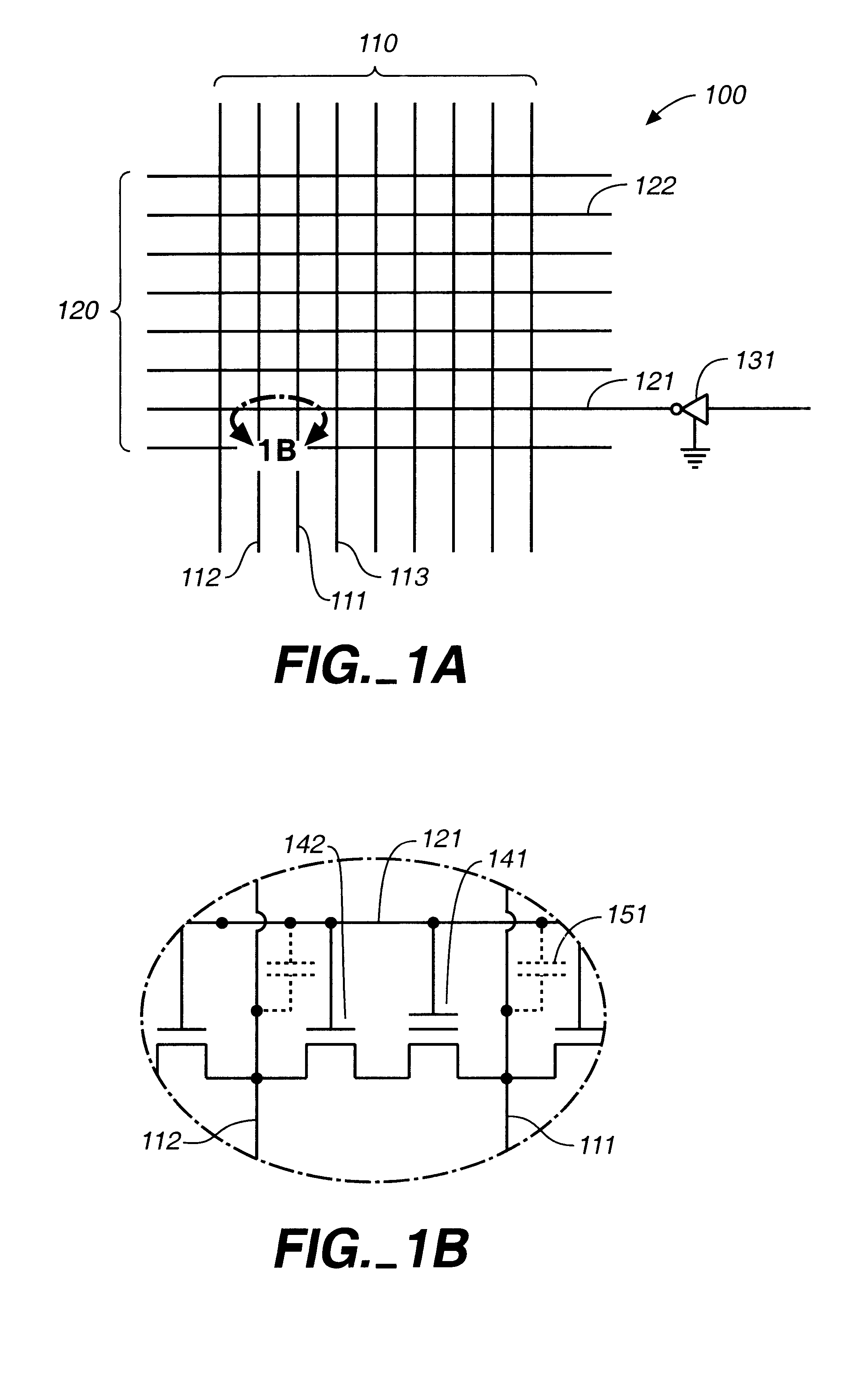

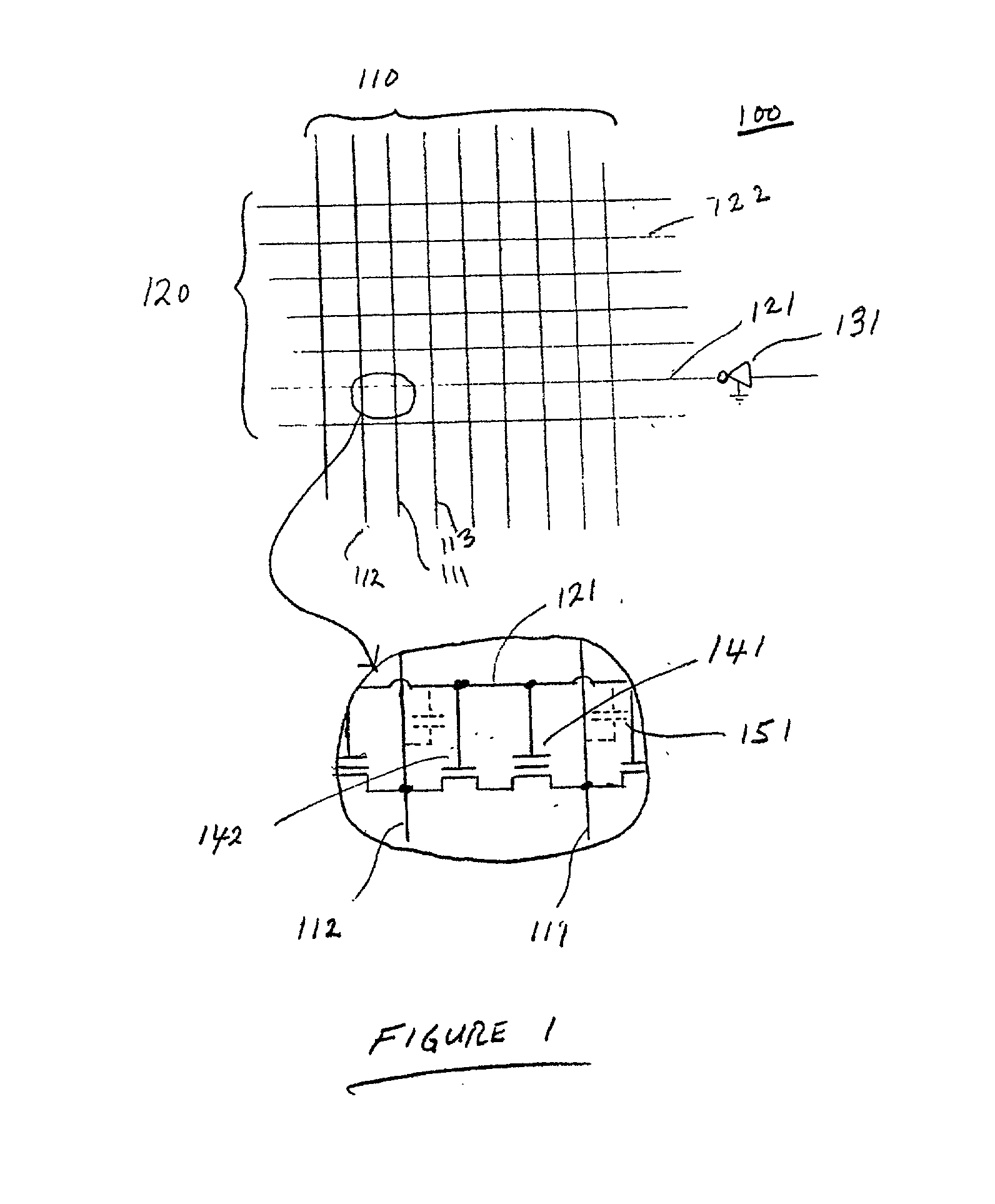

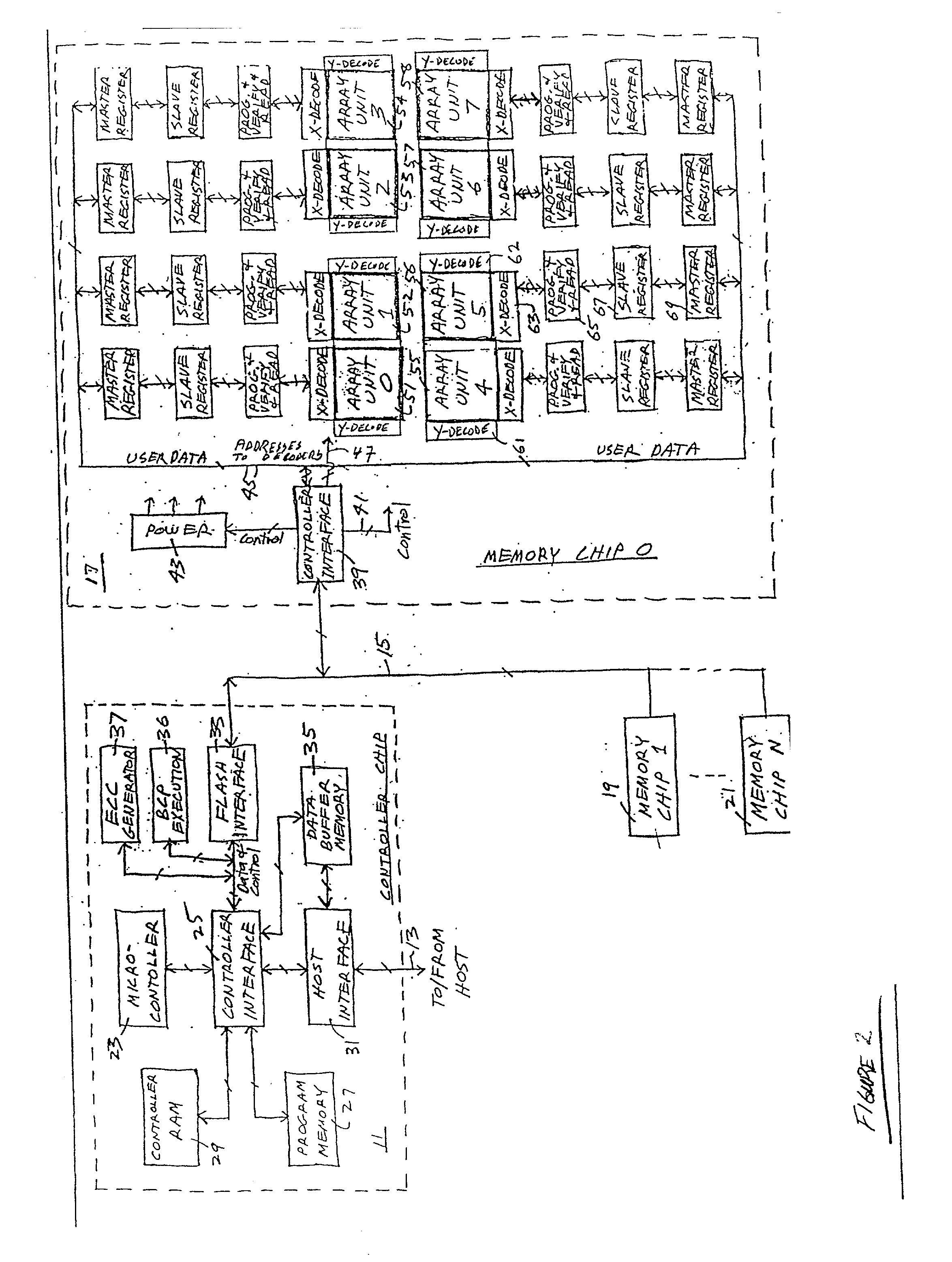

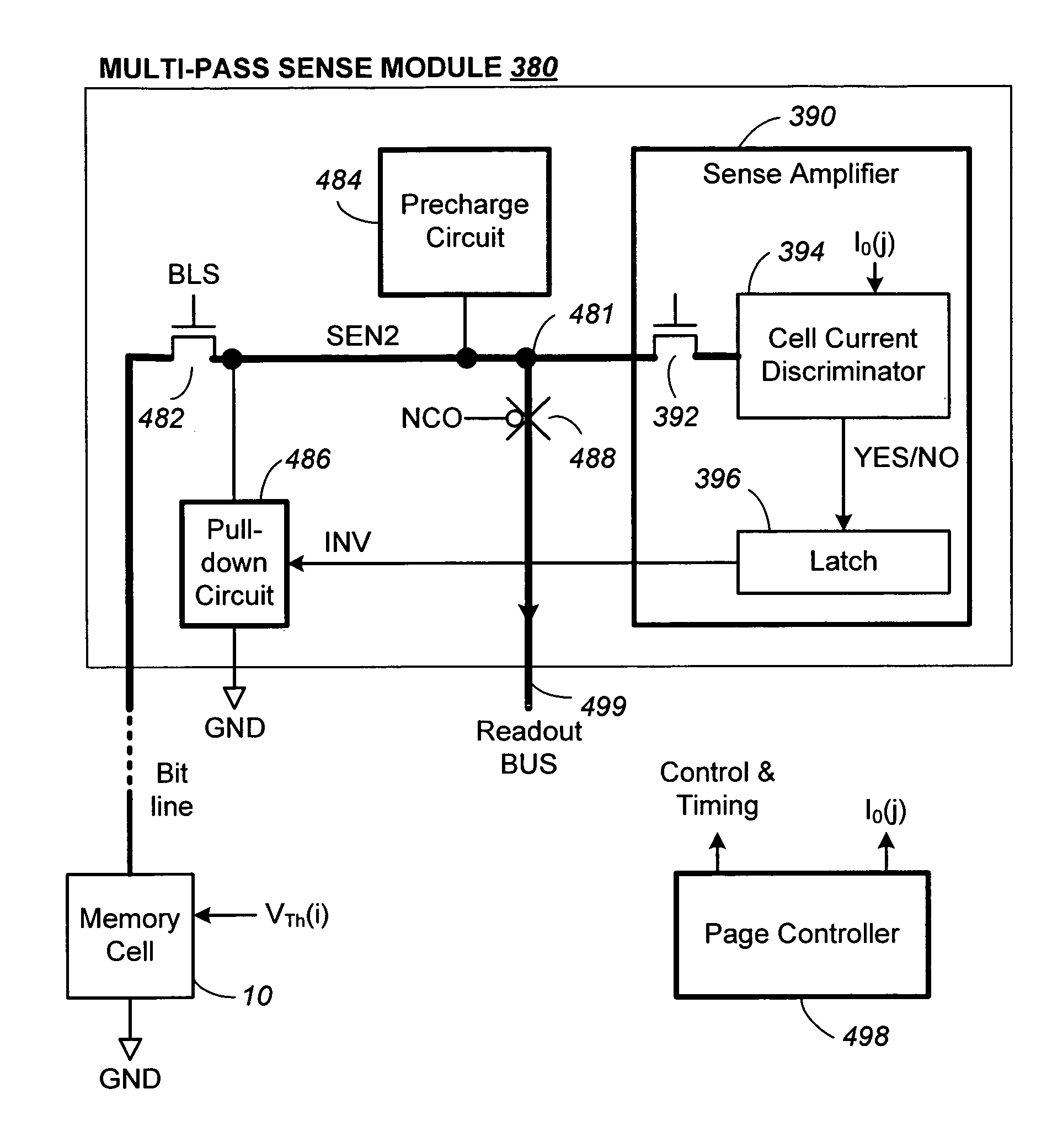

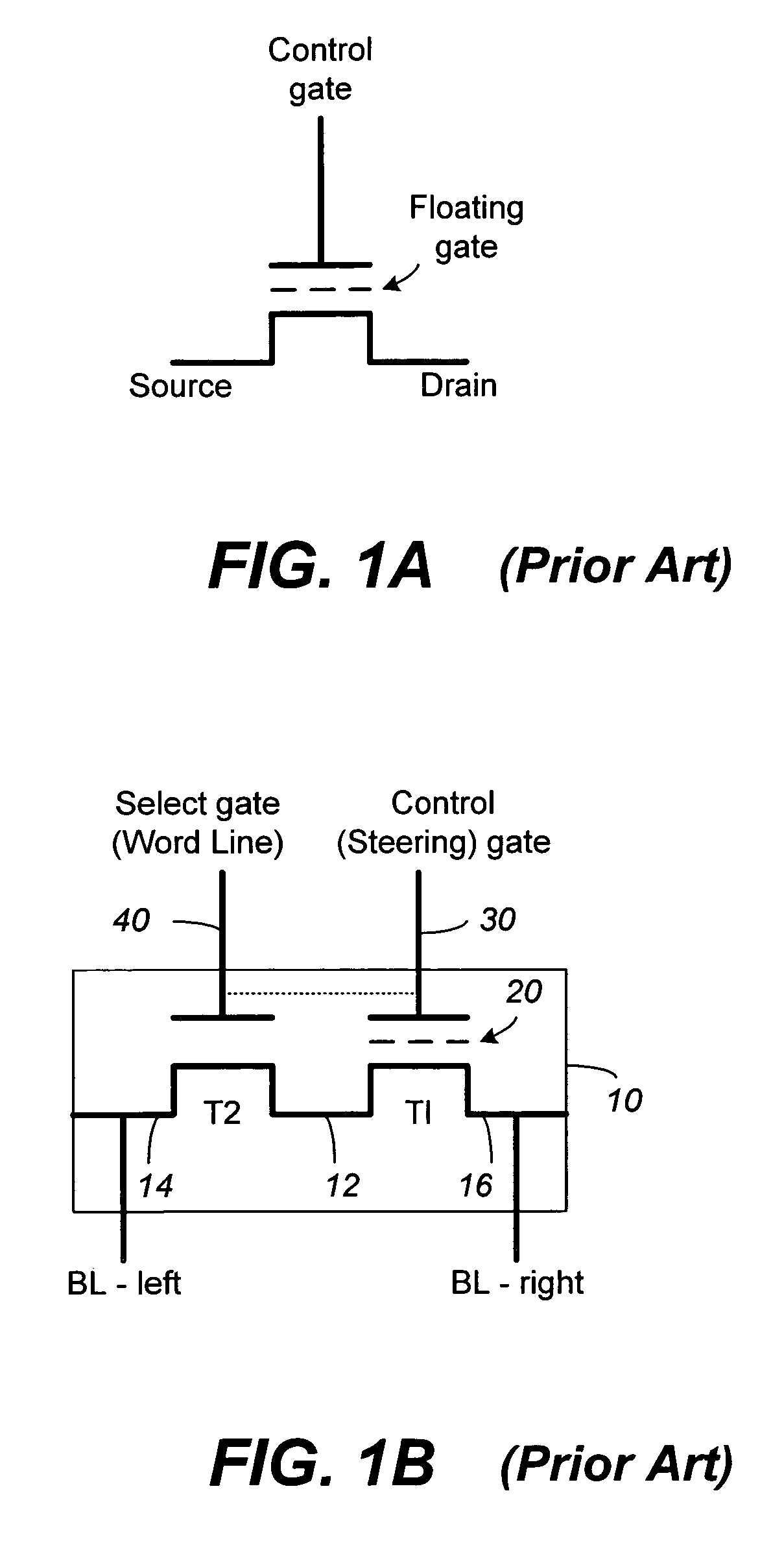

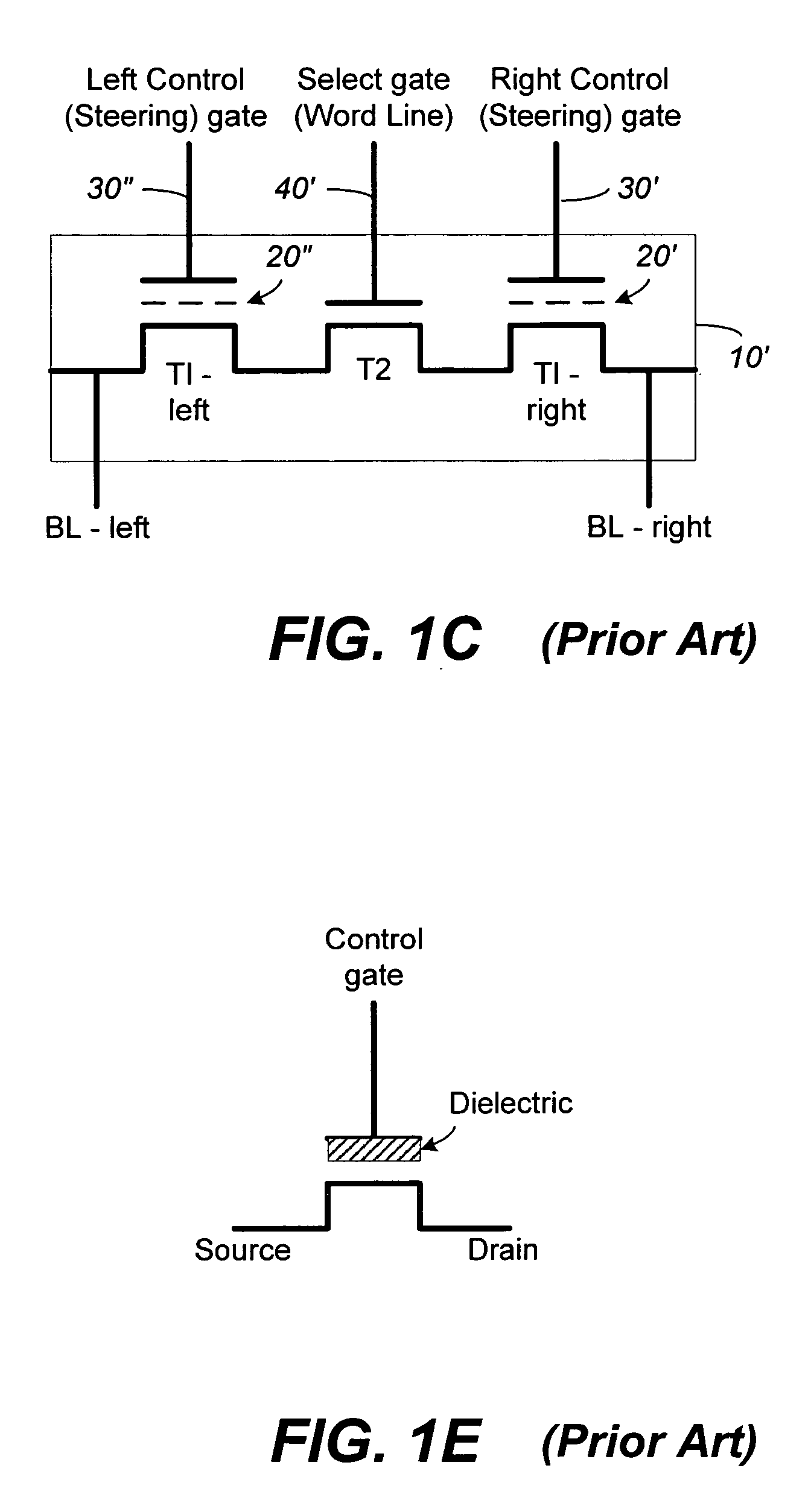

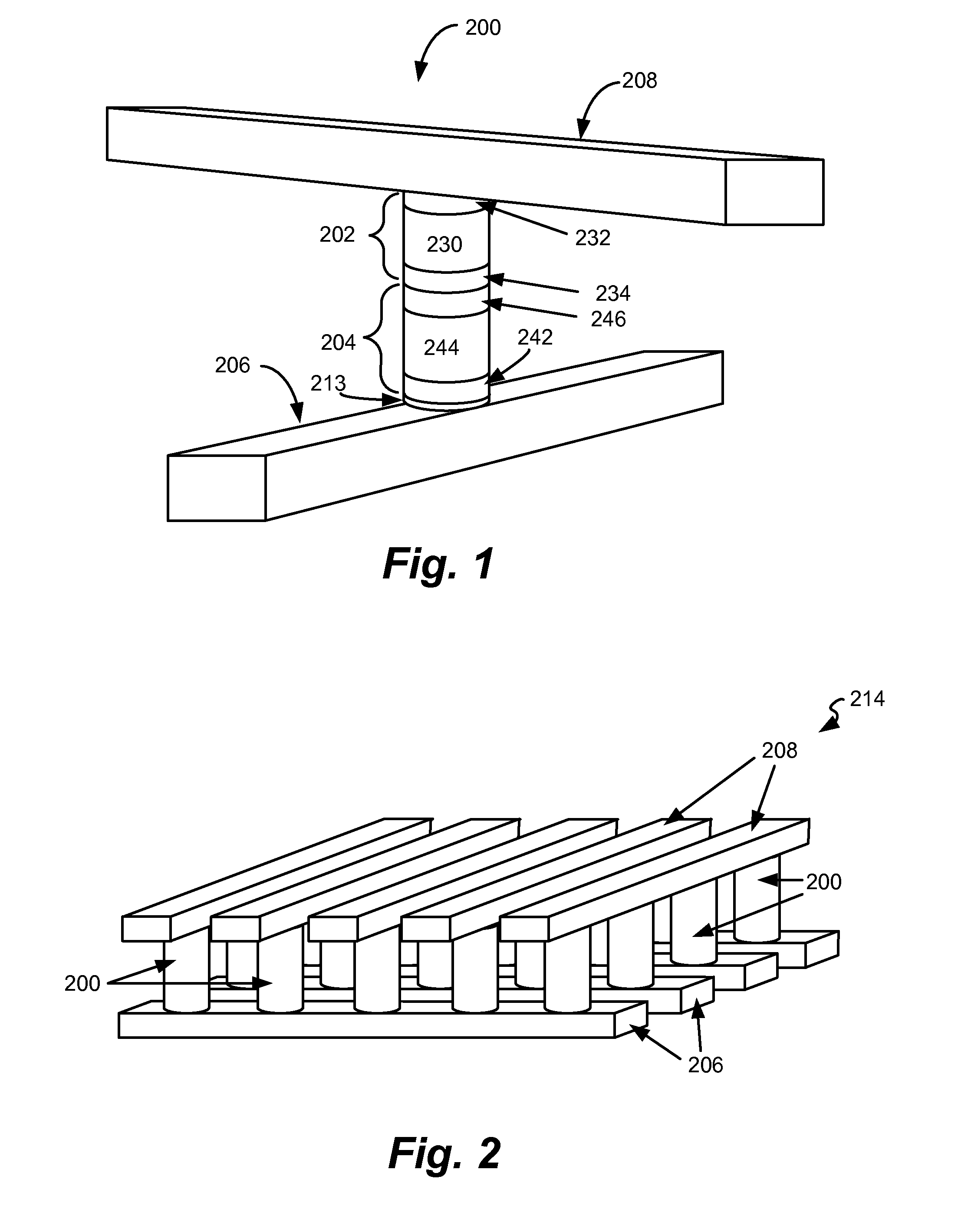

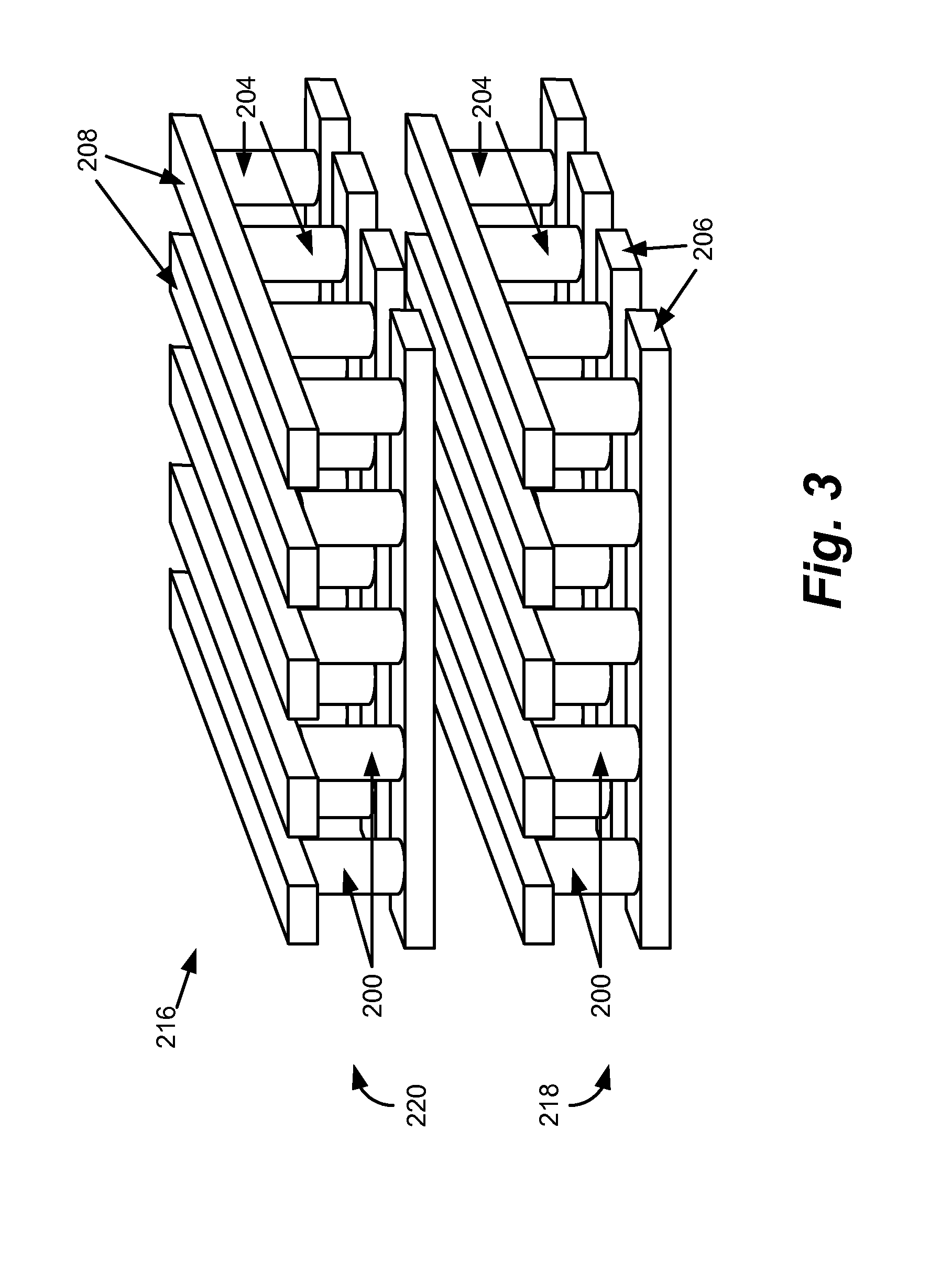

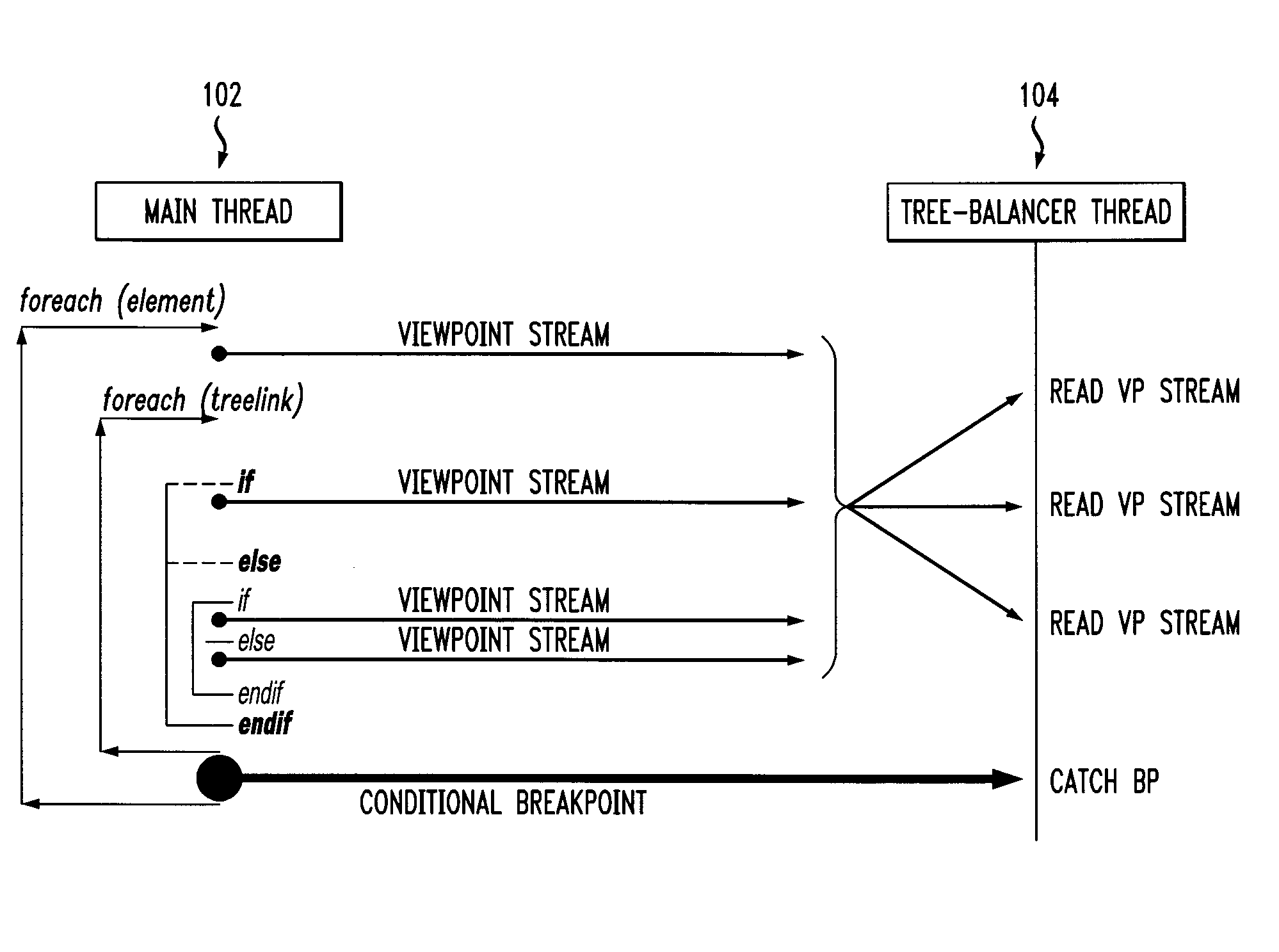

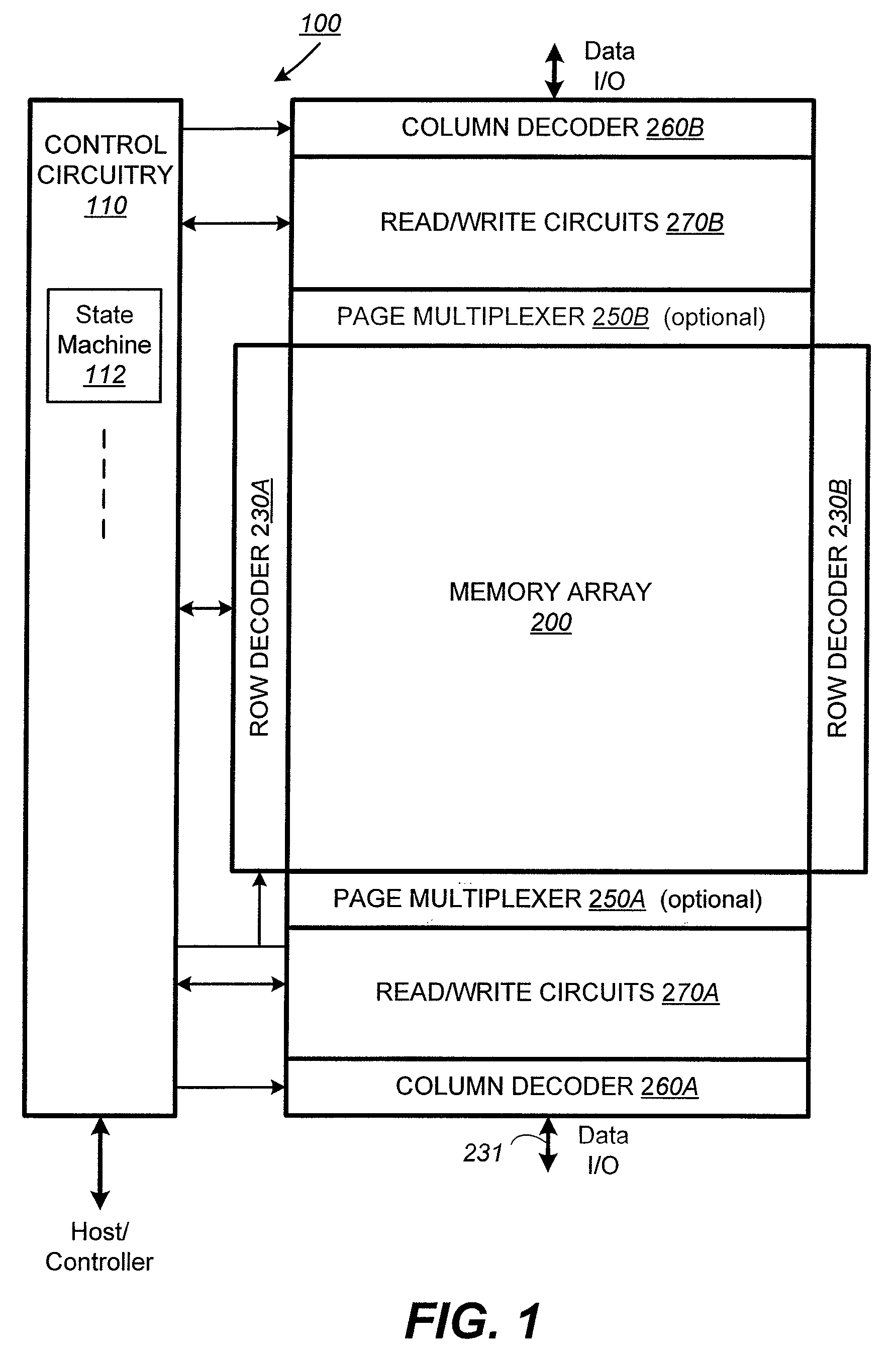

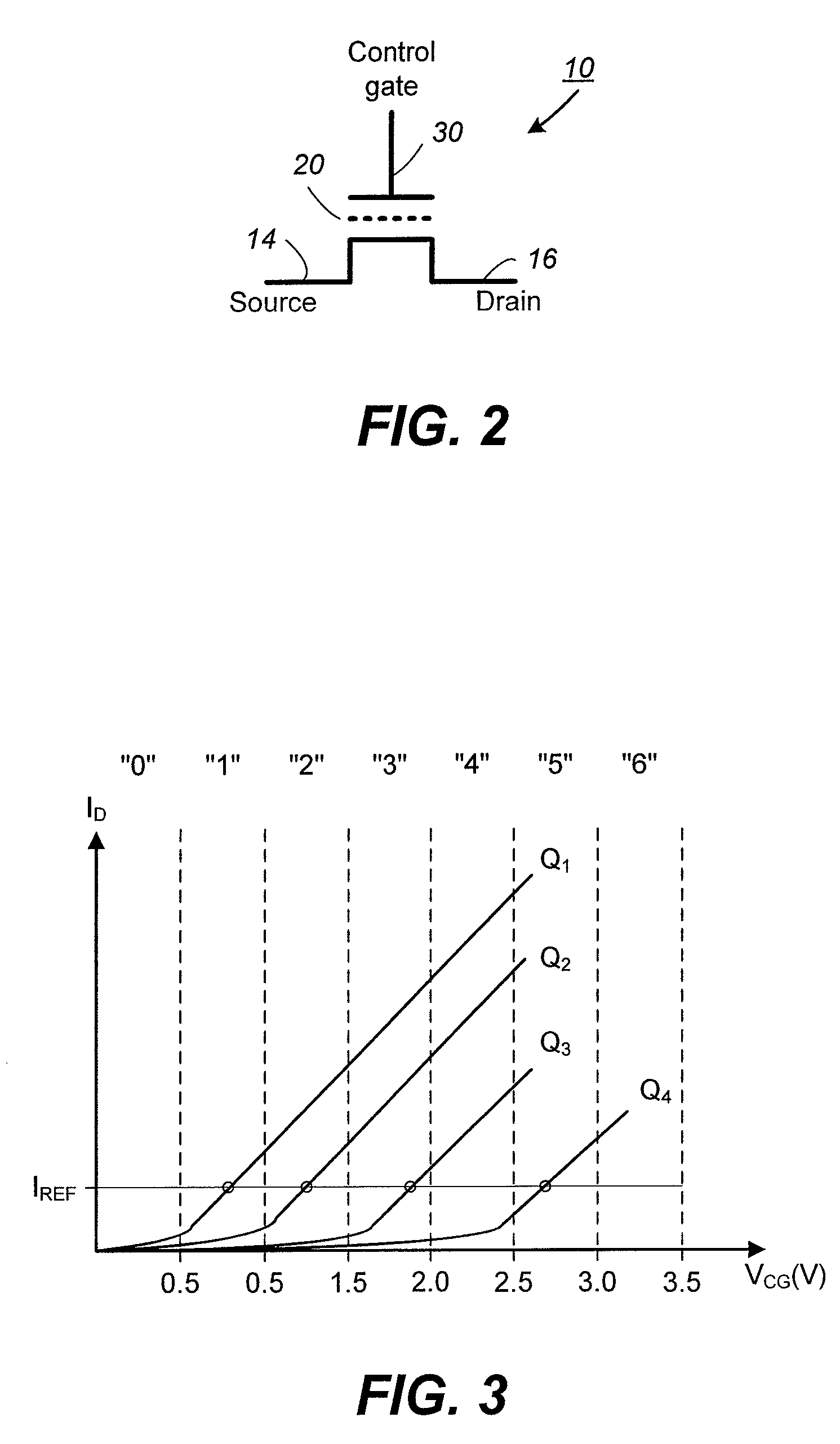

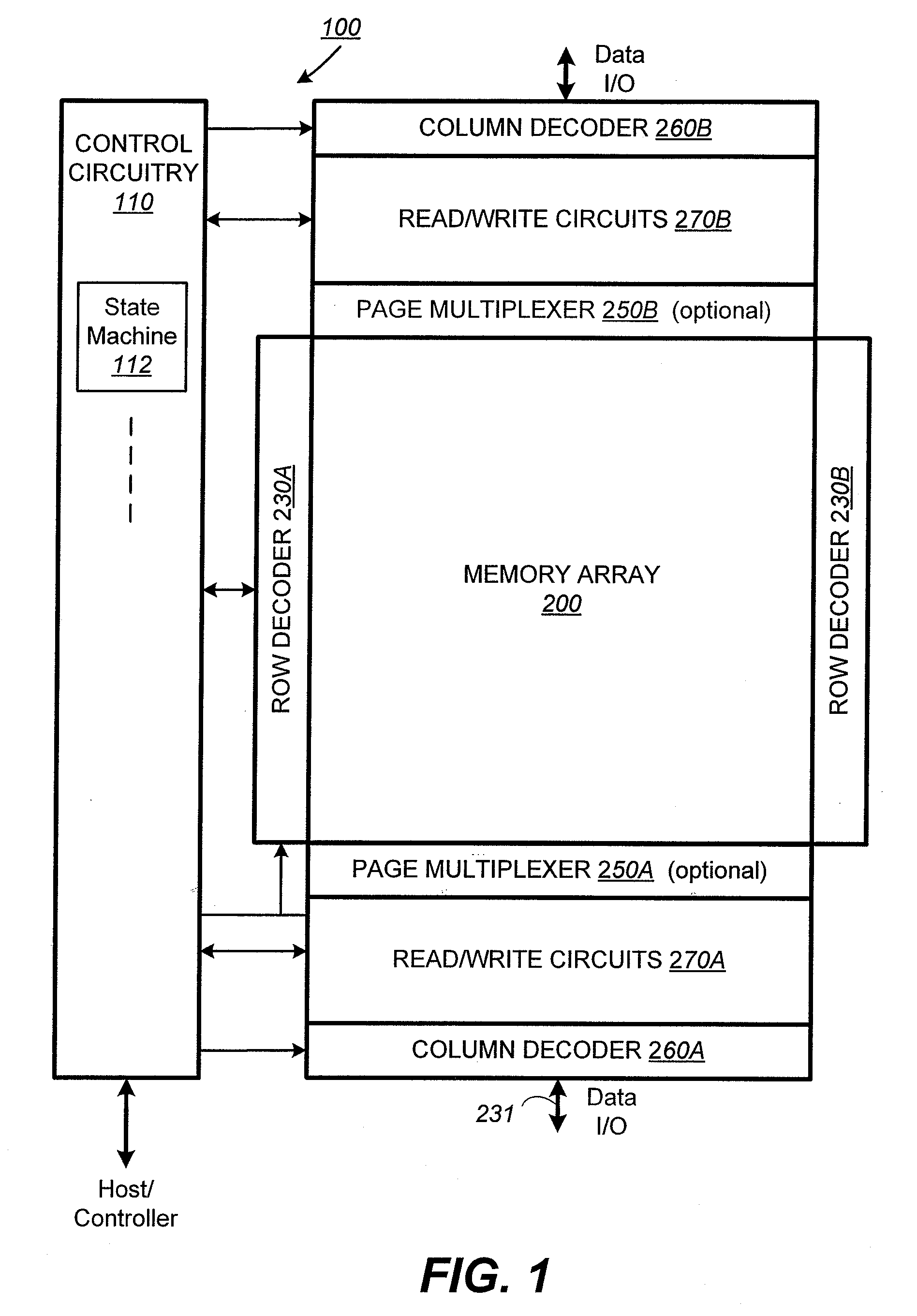

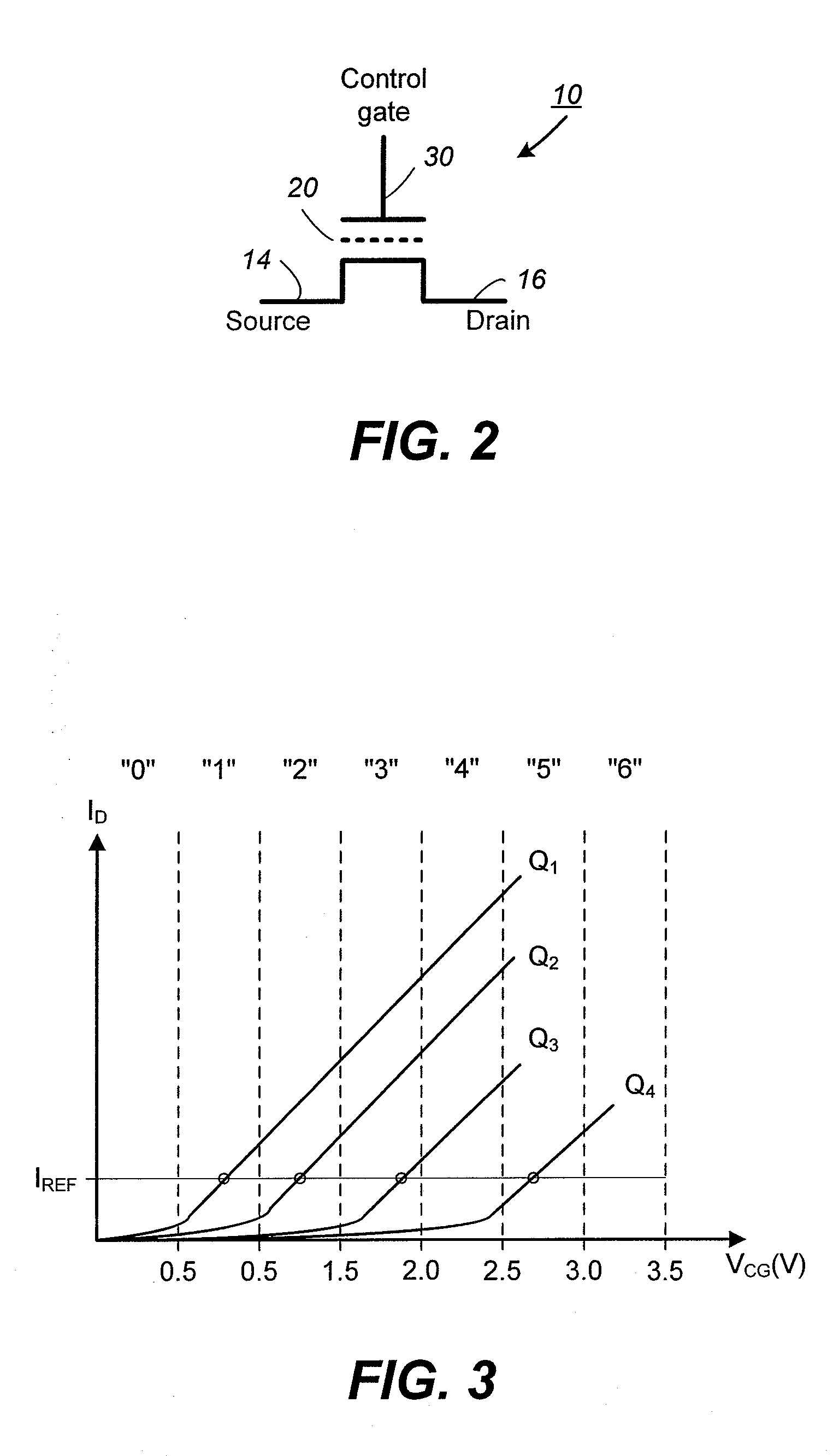

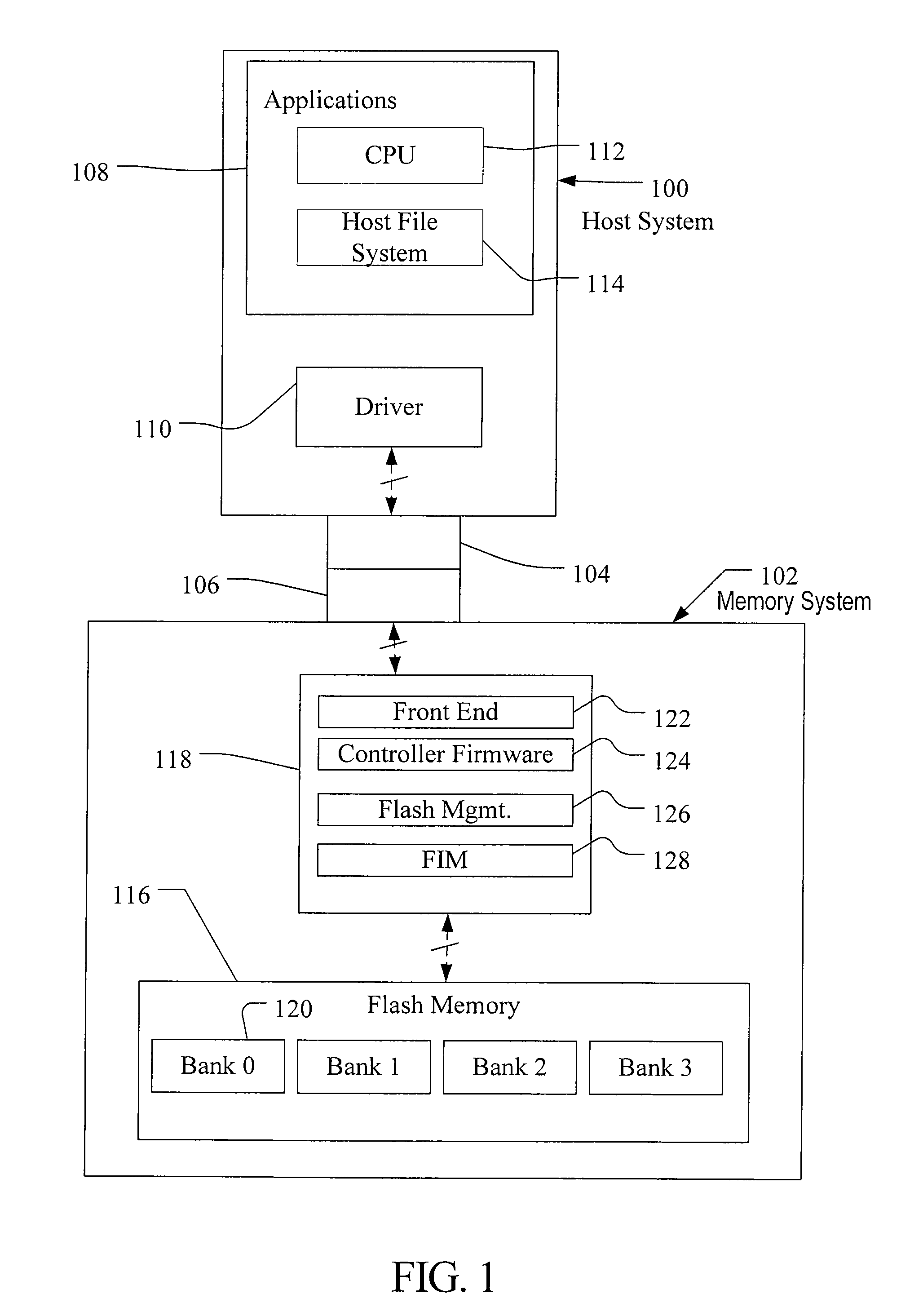

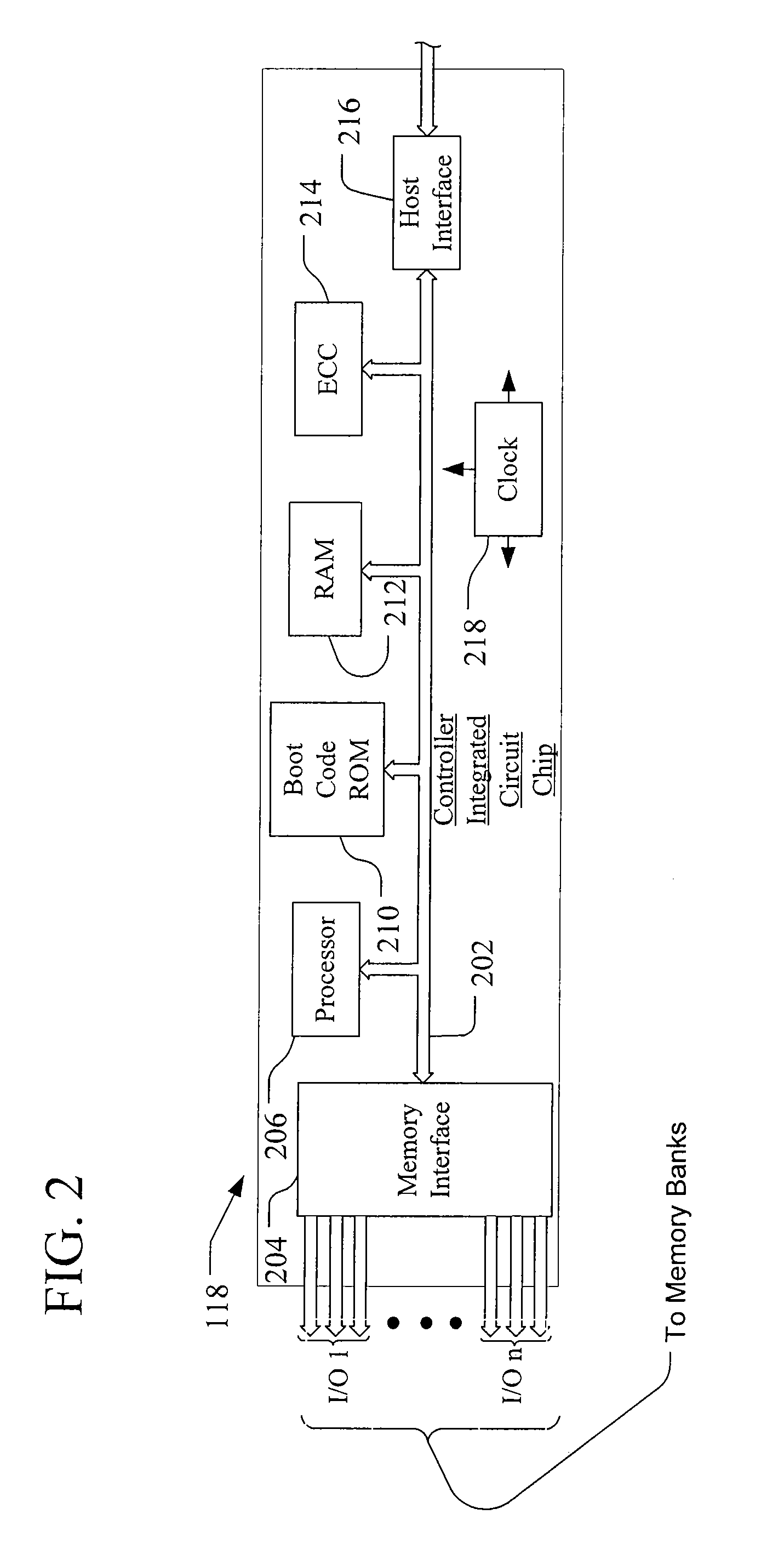

Non-volatile memory and method with reduced neighboring field errors

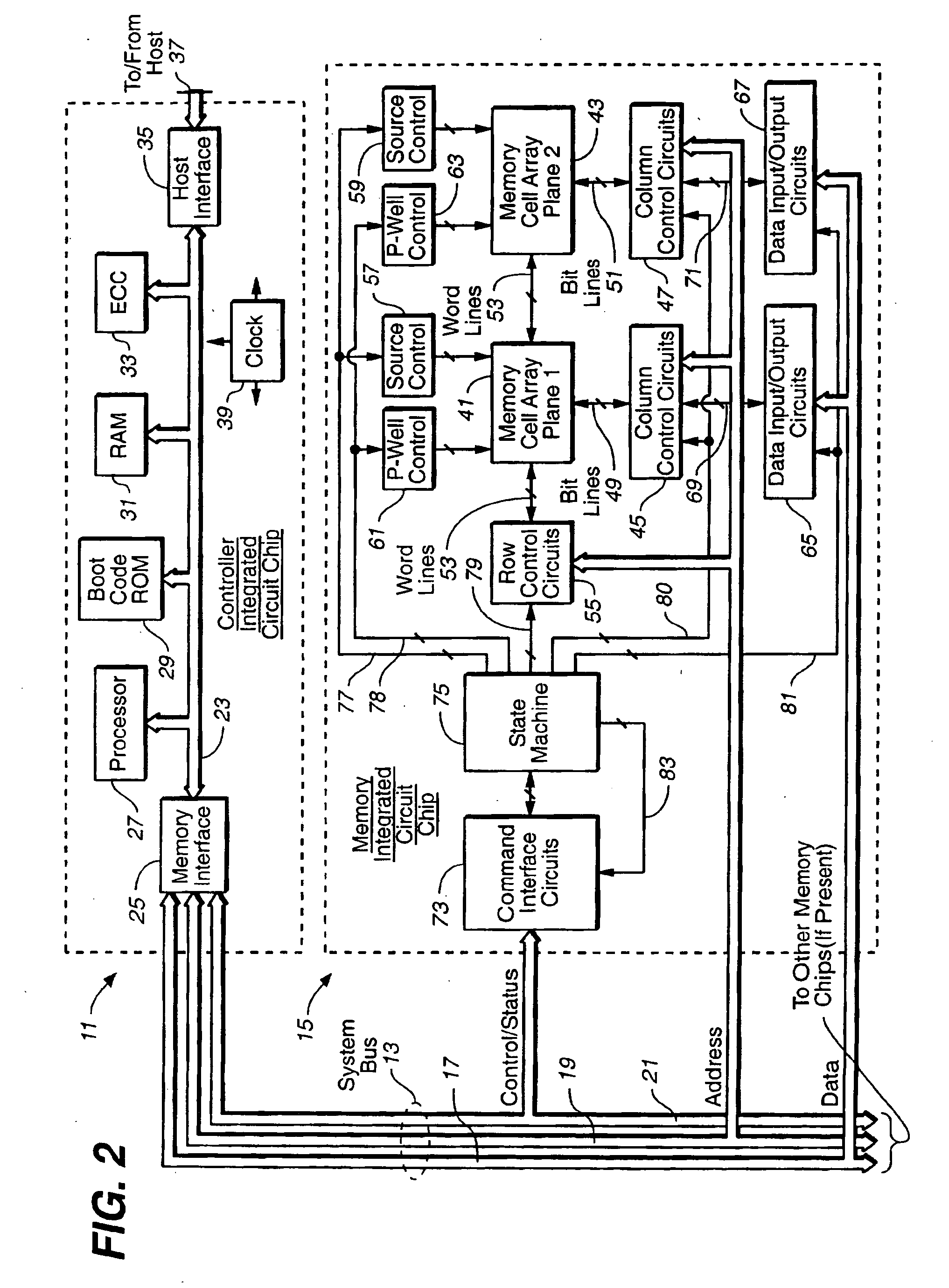

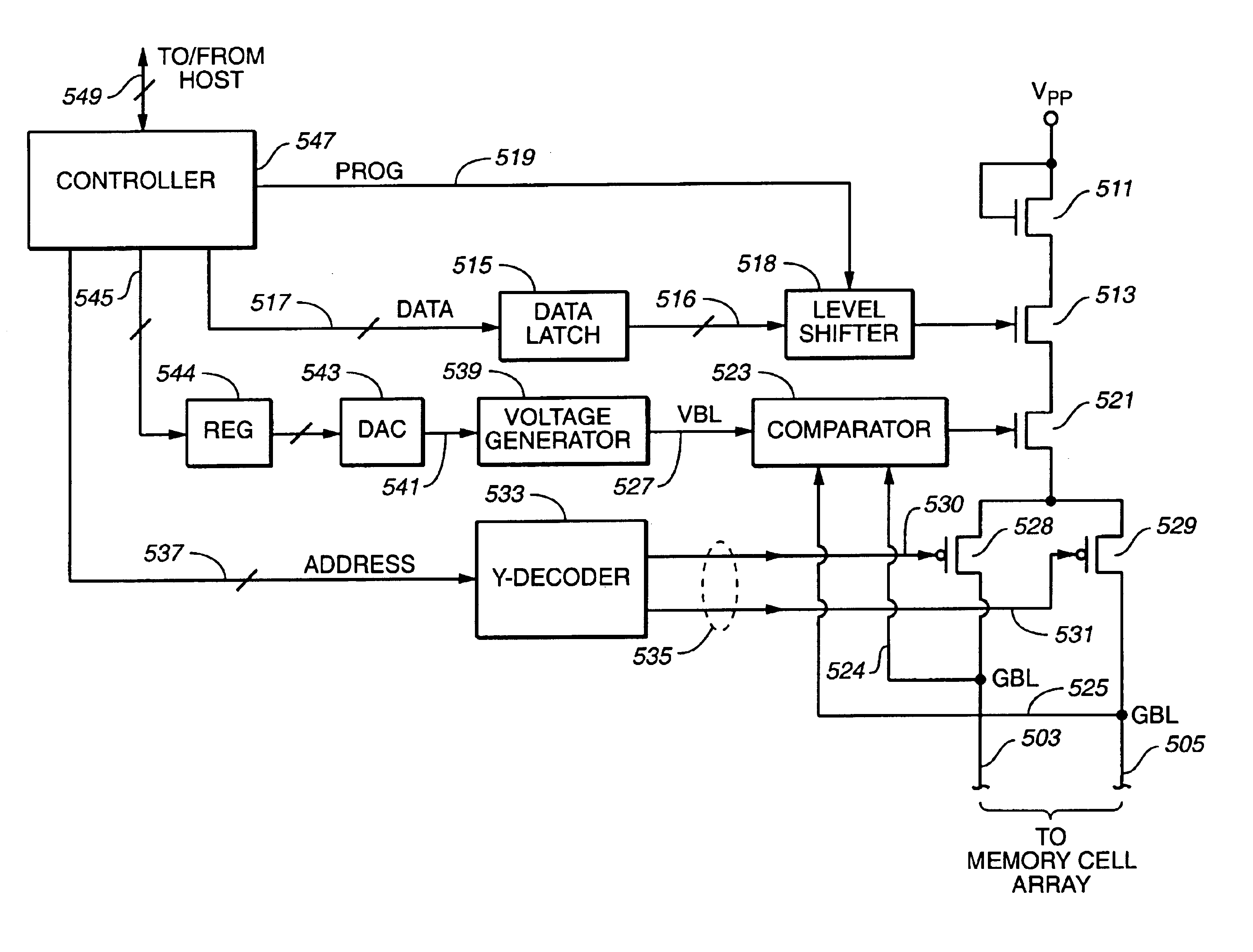

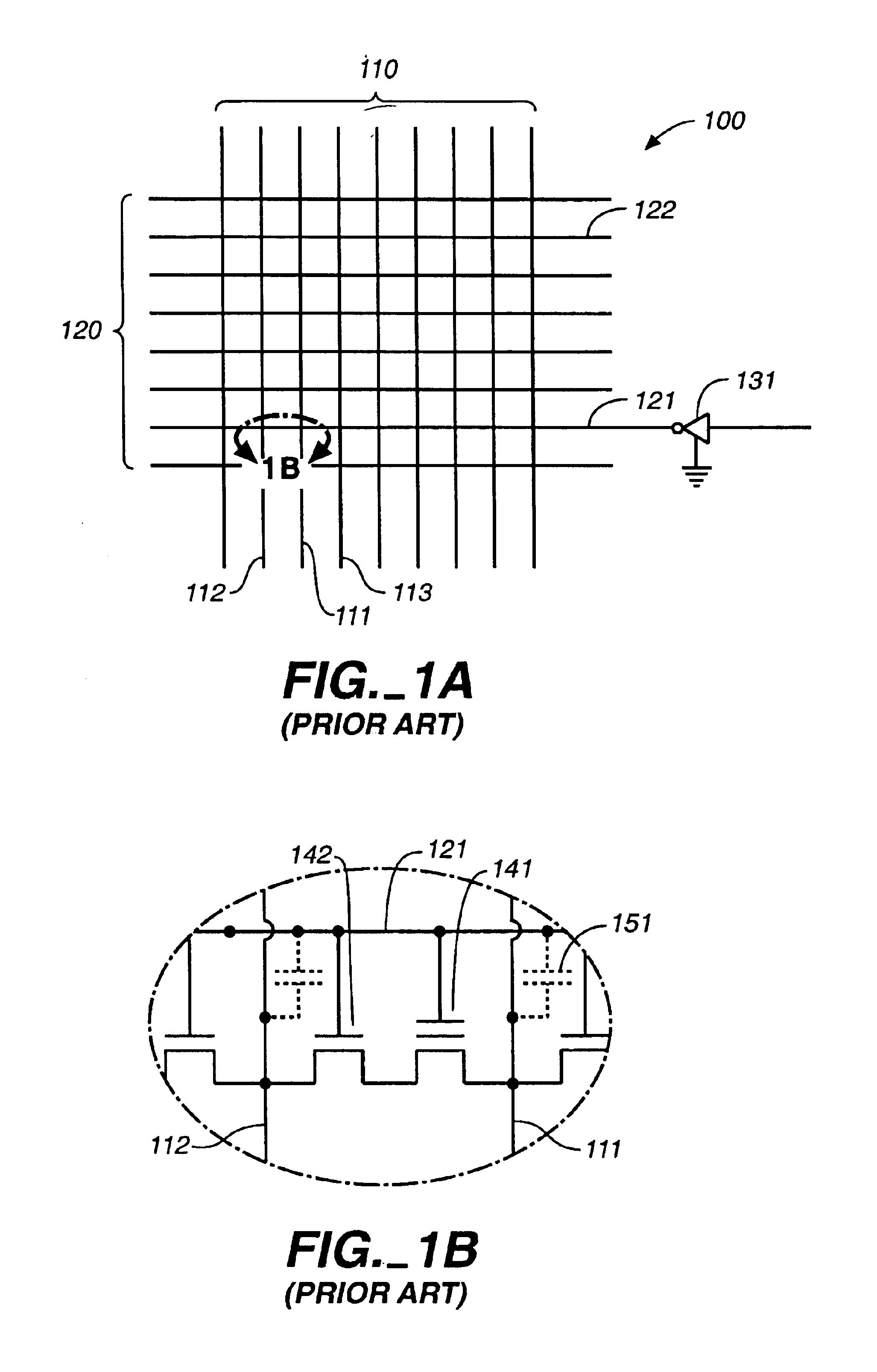

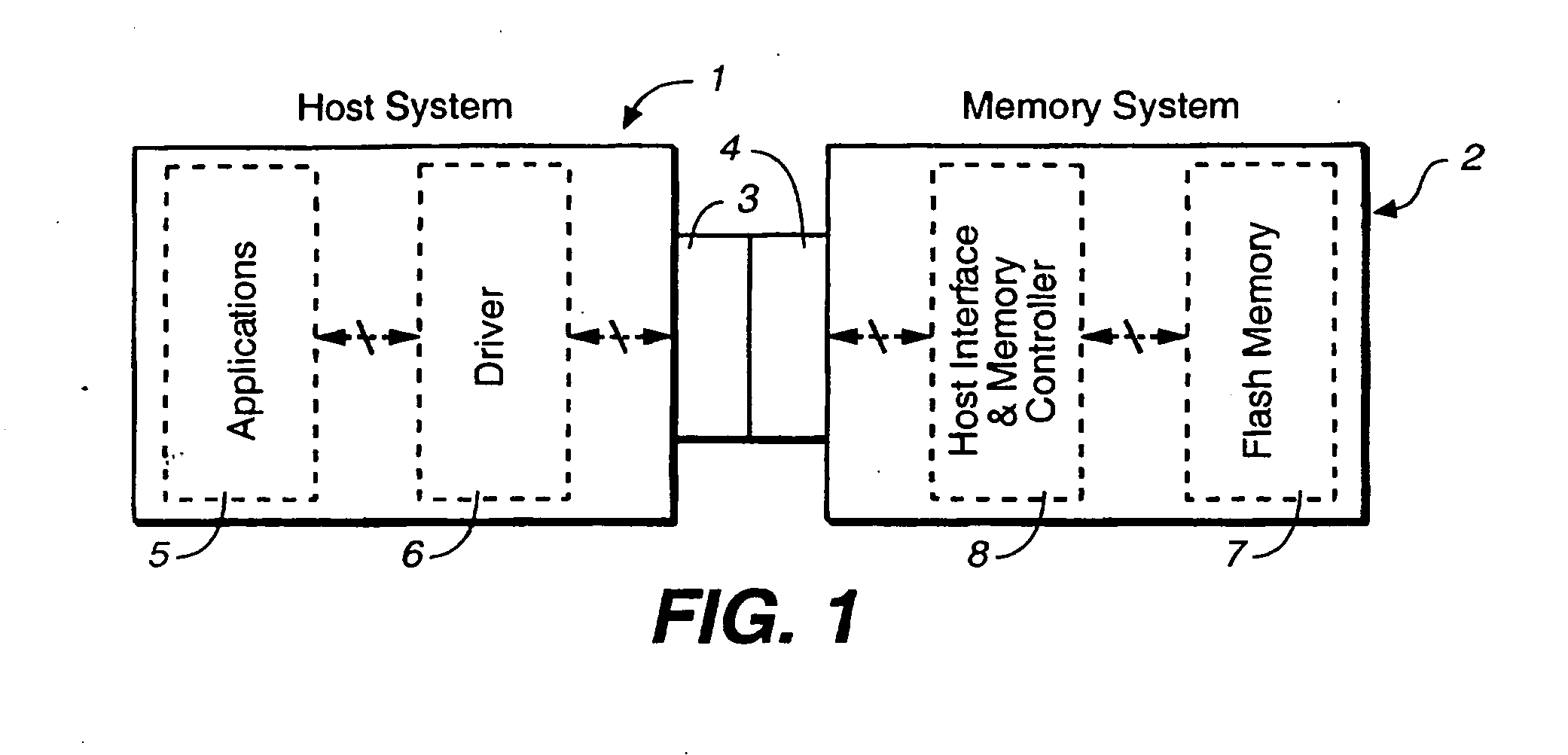

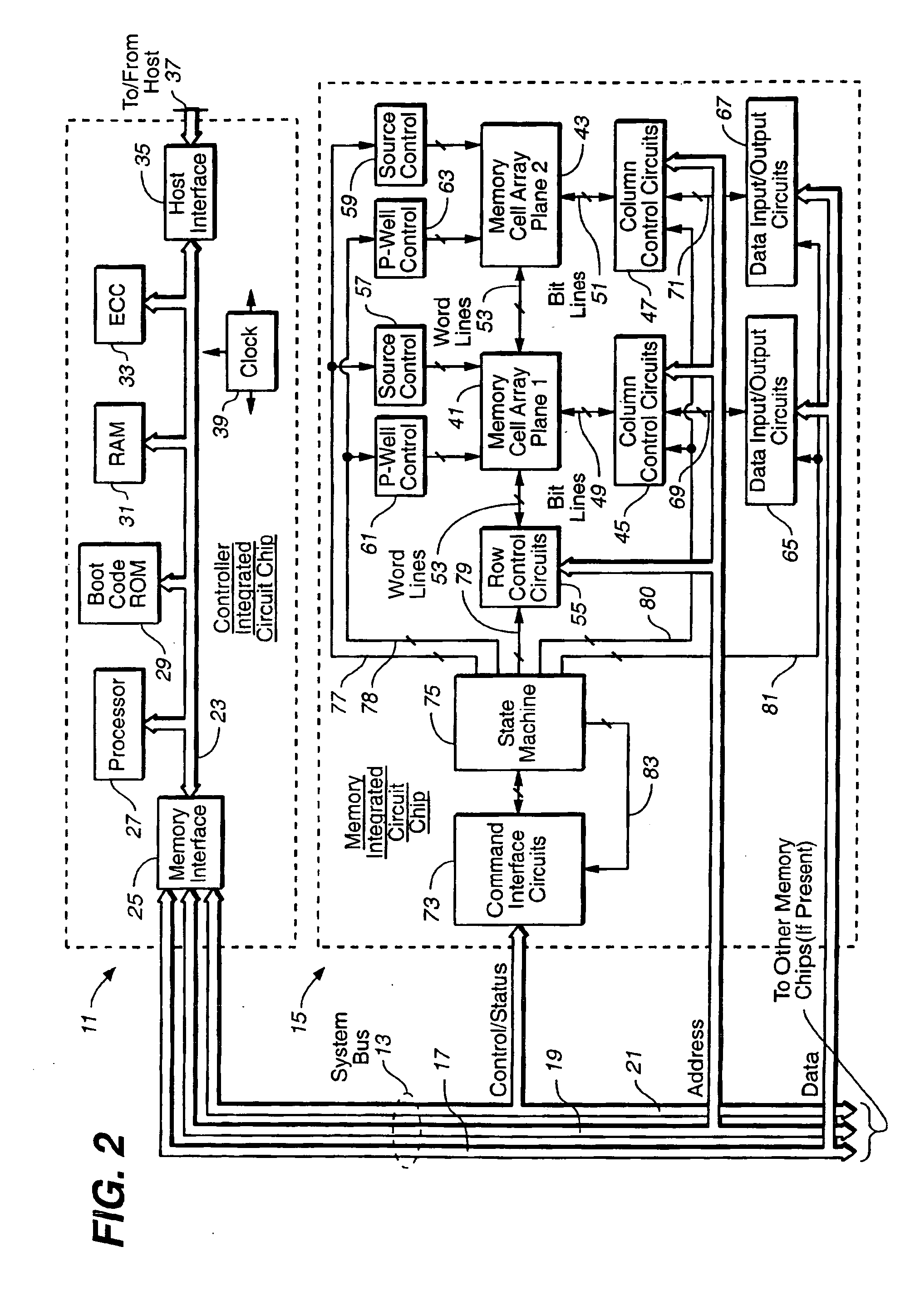

Inactive | US6987693B2 | Large capacity | Improve performance | Read-only memories | Digital storage | Non-volatile memory | Parallel programing

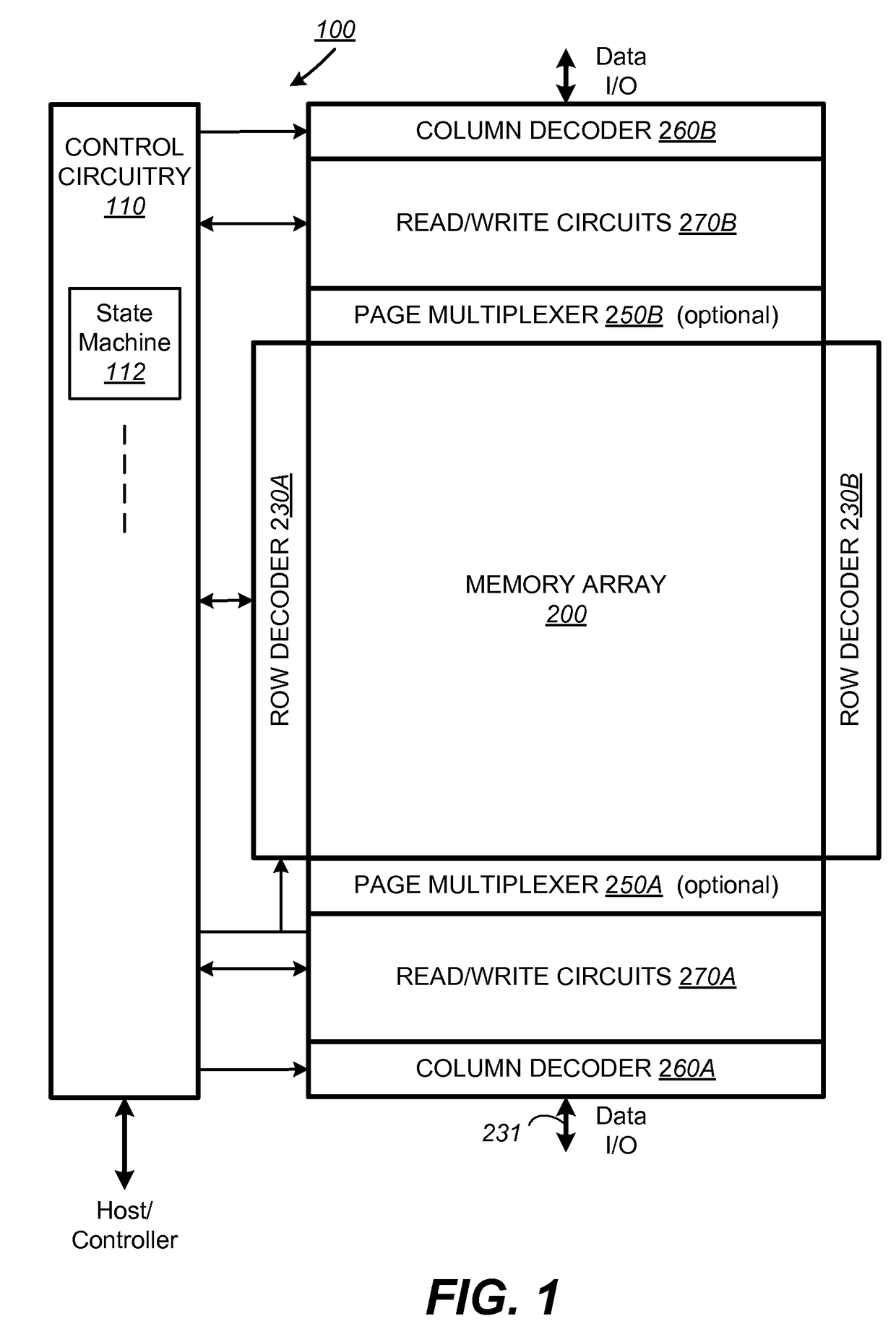

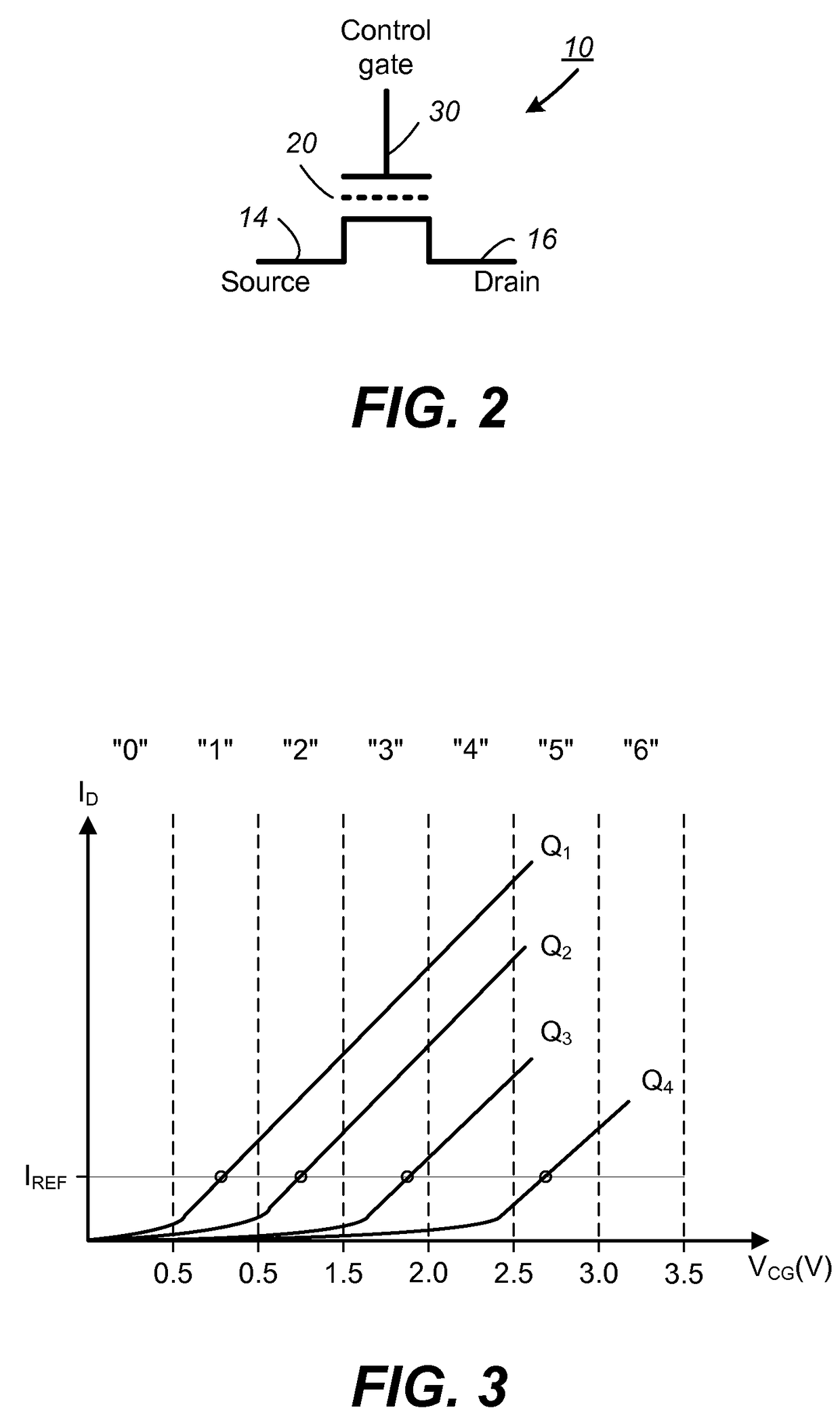

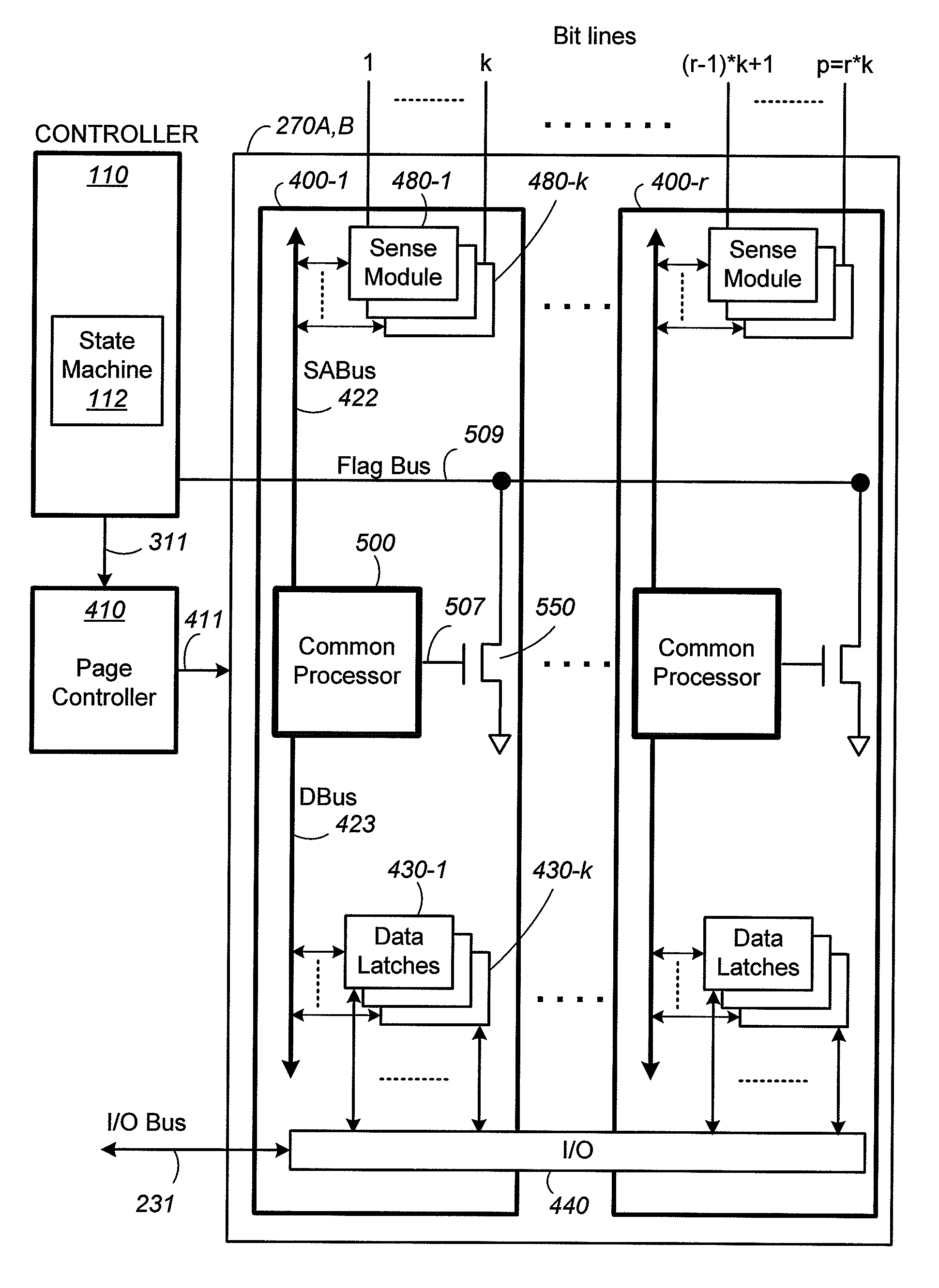

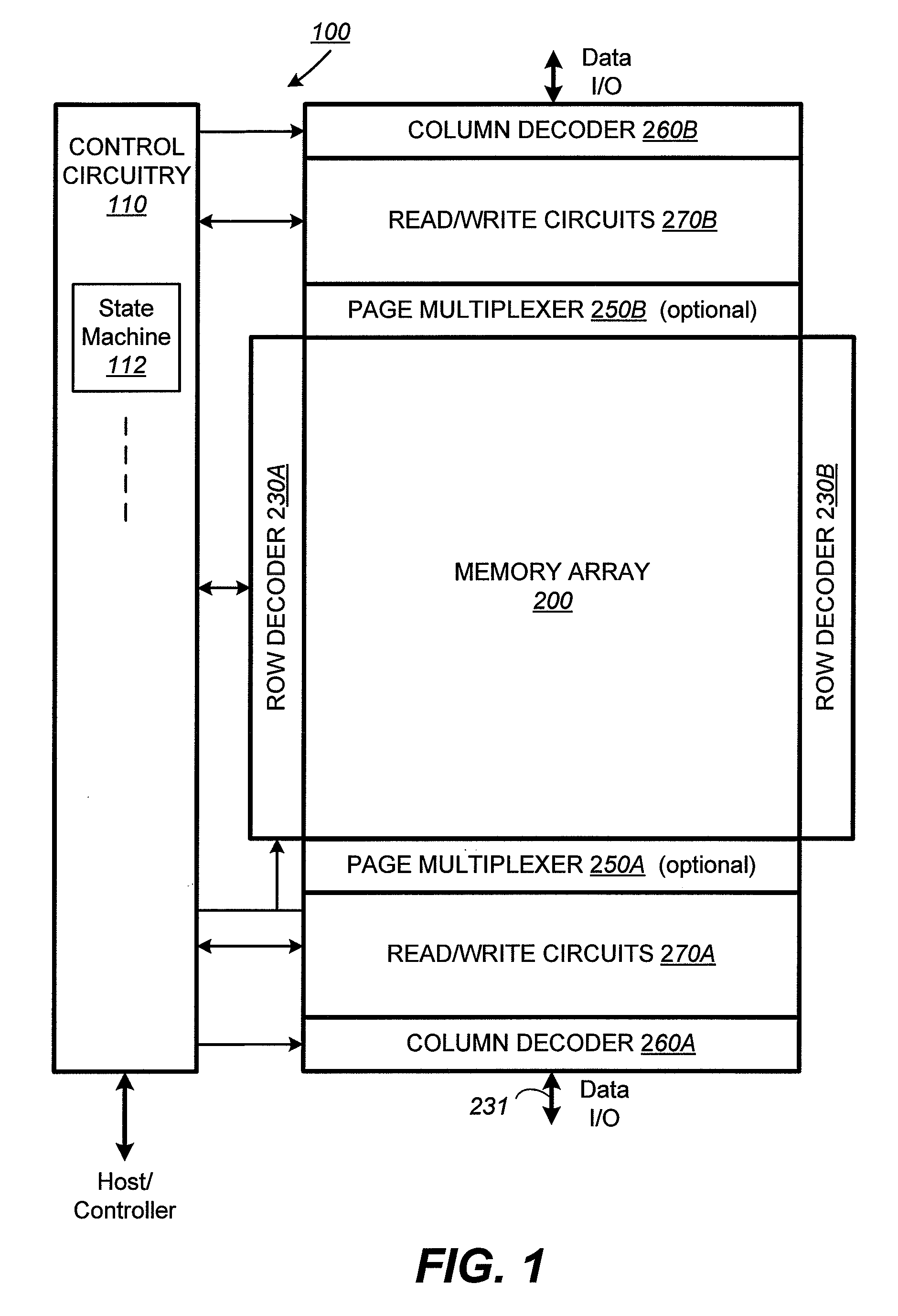

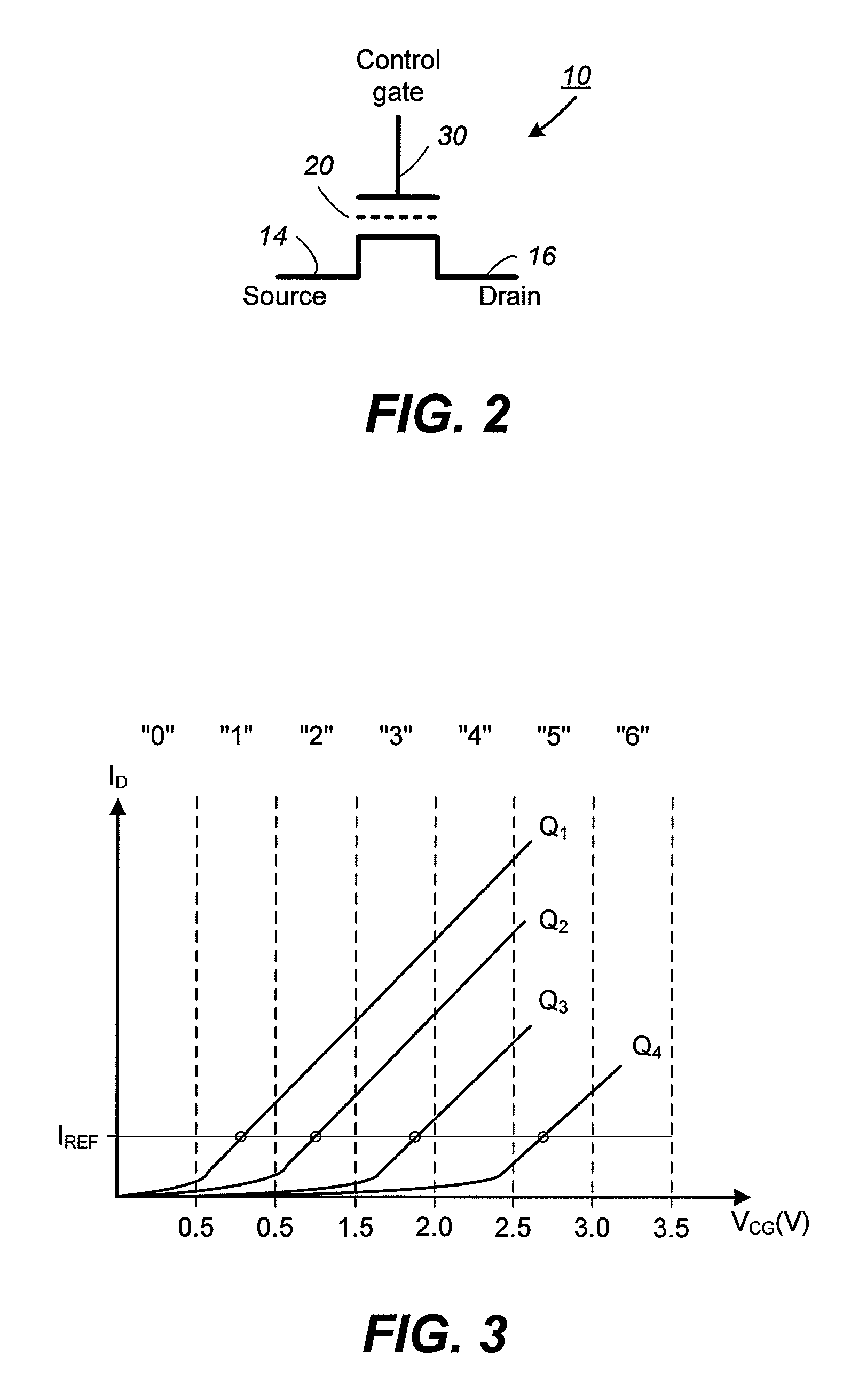

A memory device and a method thereof allow programming and sensing a plurality of memory cells in parallel in order to minimize errors caused by coupling from fields of neighboring cells and to improve performance. The plurality of memory cells are linked by the same word line, and a read/write circuit is coupled to each memory cell in a contiguous manner. Thus, a memory cell and its neighbors are programmed together, and the field environment for each memory cell relative to its neighbors varies less during programming and subsequent reading. This improves performance and reduces errors caused by coupling from fields of neighboring cells, as compared to conventional architectures and methods in which cells on even columns are programmed independently of cells in odd columns.

Owner:SANDISK TECH LLC

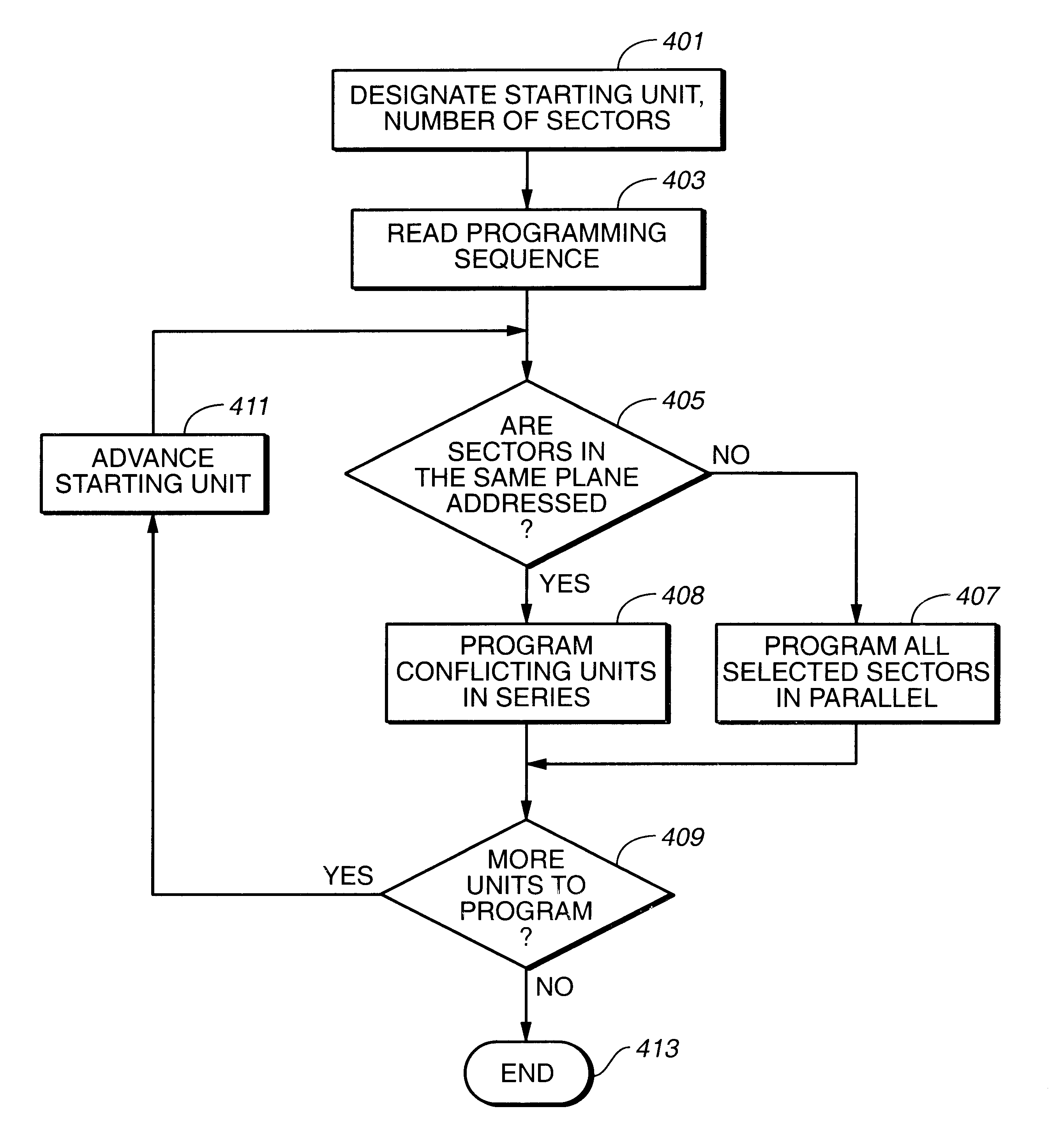

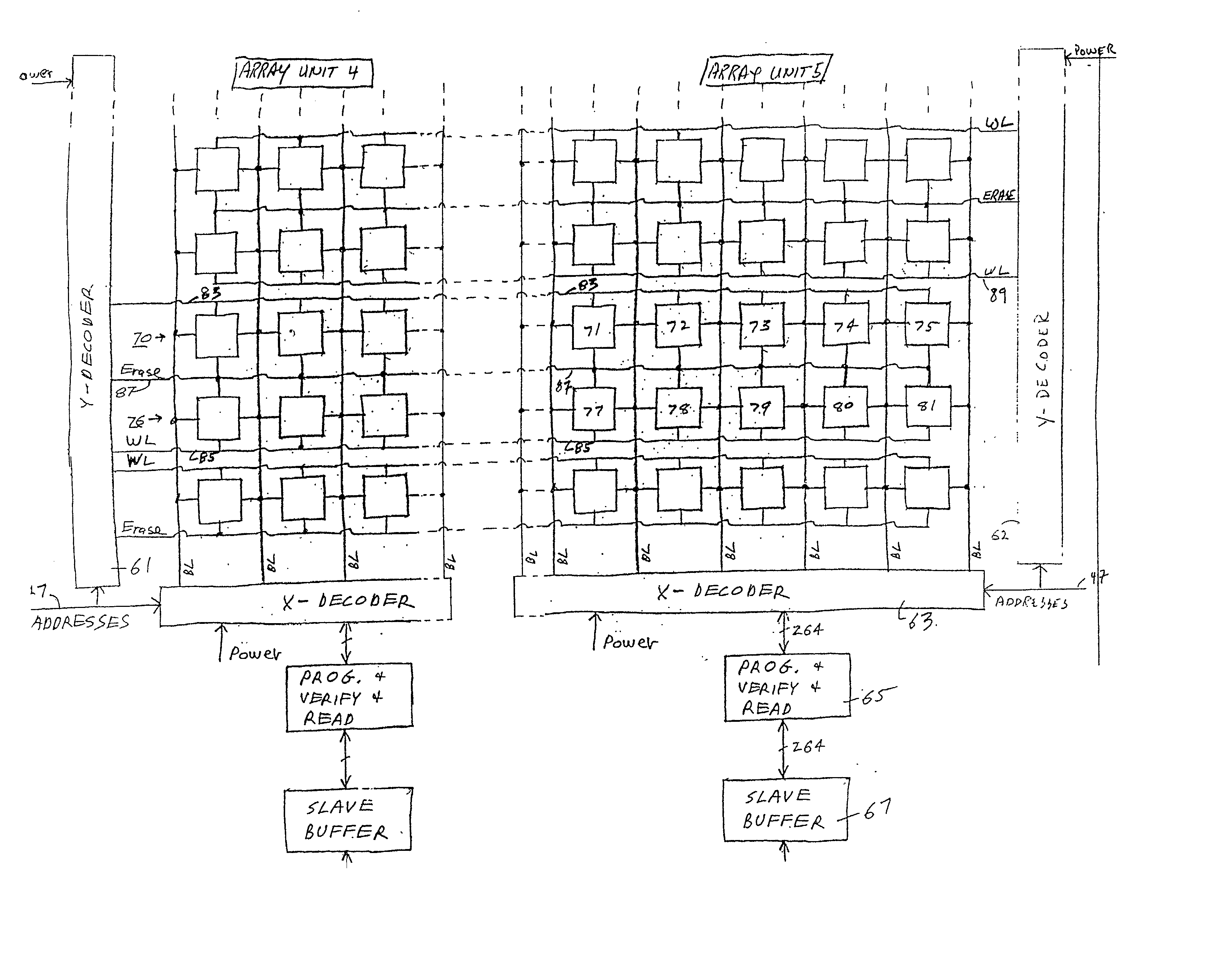

Method of reducing disturbs in non-volatile memory

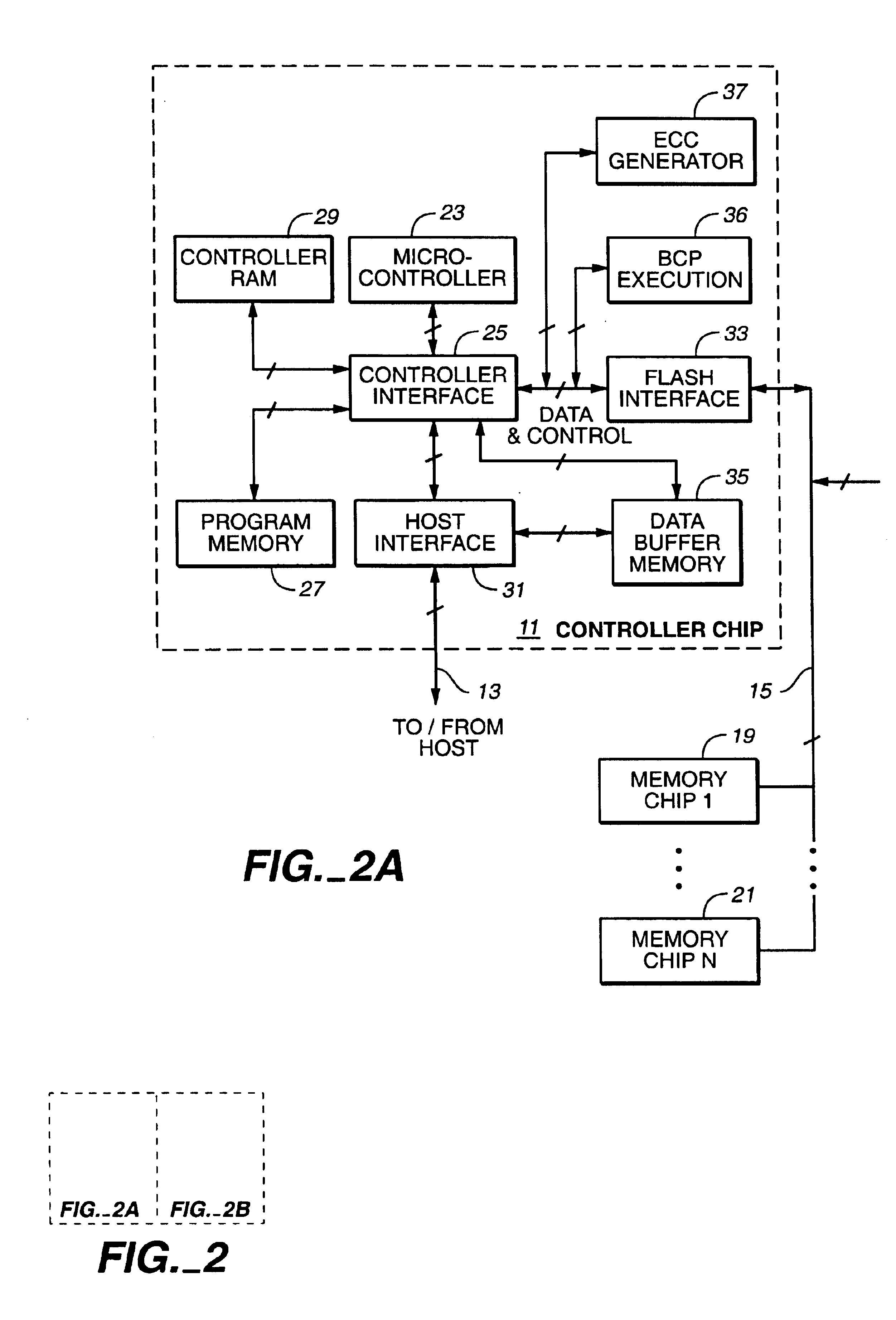

In a non-volatile memory, the displacement current generated in non-selected word lines that results when the voltage levels on an array's bit lines are changed can result in disturbs. Techniques for reducing these currents are presented. In a first aspect, the number of cells being simultaneously programmed on a word line is reduced. In a non-volatile memory where an array of memory cells is composed of a number of units, and the units are combined into planes that share common word lines, the simultaneous programming of units within the same plane is avoided. Multiple units may be programmed in parallel, but these are arranged to be in separate planes. This is done by selecting the number of units to be programmed in parallel and their order such that all the units programmed together are from distinct planes, by comparing the units to be programmed to see if any are from the same plane, or a combination of these. In a second, complementary aspect, the rate at which the voltage levels on the bit lines are changed is adjustable. By monitoring the frequency of disturbs, or based upon the device's application, the rate at which the bit line drivers change the bit line voltage is adjusted. This can be implemented by setting the rate externally, or by the controller based upon device performance and the amount of data error being generated.

Owner:SANDISK TECH LLC
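
The distinct-plane selection described in the abstract above can be illustrated with a small scheduling sketch. It is not the patented controller logic: the greedy first-fit strategy, the function name `batches_with_distinct_planes`, and the unit-to-plane table are all assumptions made for illustration.

```python
from typing import Dict, Iterable, List


def batches_with_distinct_planes(
    units: Iterable[int], plane_of: Dict[int, int], max_parallel: int
) -> List[List[int]]:
    """Group units into batches so that no batch holds two units of one plane.

    Greedy first-fit (an assumption, not taken from the source): each unit
    joins the first batch that has room and no unit from the same plane.
    """
    if max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    batches: List[List[int]] = []
    for unit in units:
        plane = plane_of[unit]
        for batch in batches:
            has_room = len(batch) < max_parallel
            if has_room and all(plane_of[u] != plane for u in batch):
                batch.append(unit)
                break
        else:
            # No existing batch can take this unit without a plane clash.
            batches.append([unit])
    return batches


# Hypothetical layout: six units spread over three planes.
plane_of = {0: 0, 1: 0, 2: 1, 3: 1, 4: 2, 5: 2}
print(batches_with_distinct_planes(range(6), plane_of, max_parallel=3))
# [[0, 2, 4], [1, 3, 5]]
```

Each inner list is a set of units that could be programmed together, because no two of them share word lines through a common plane.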

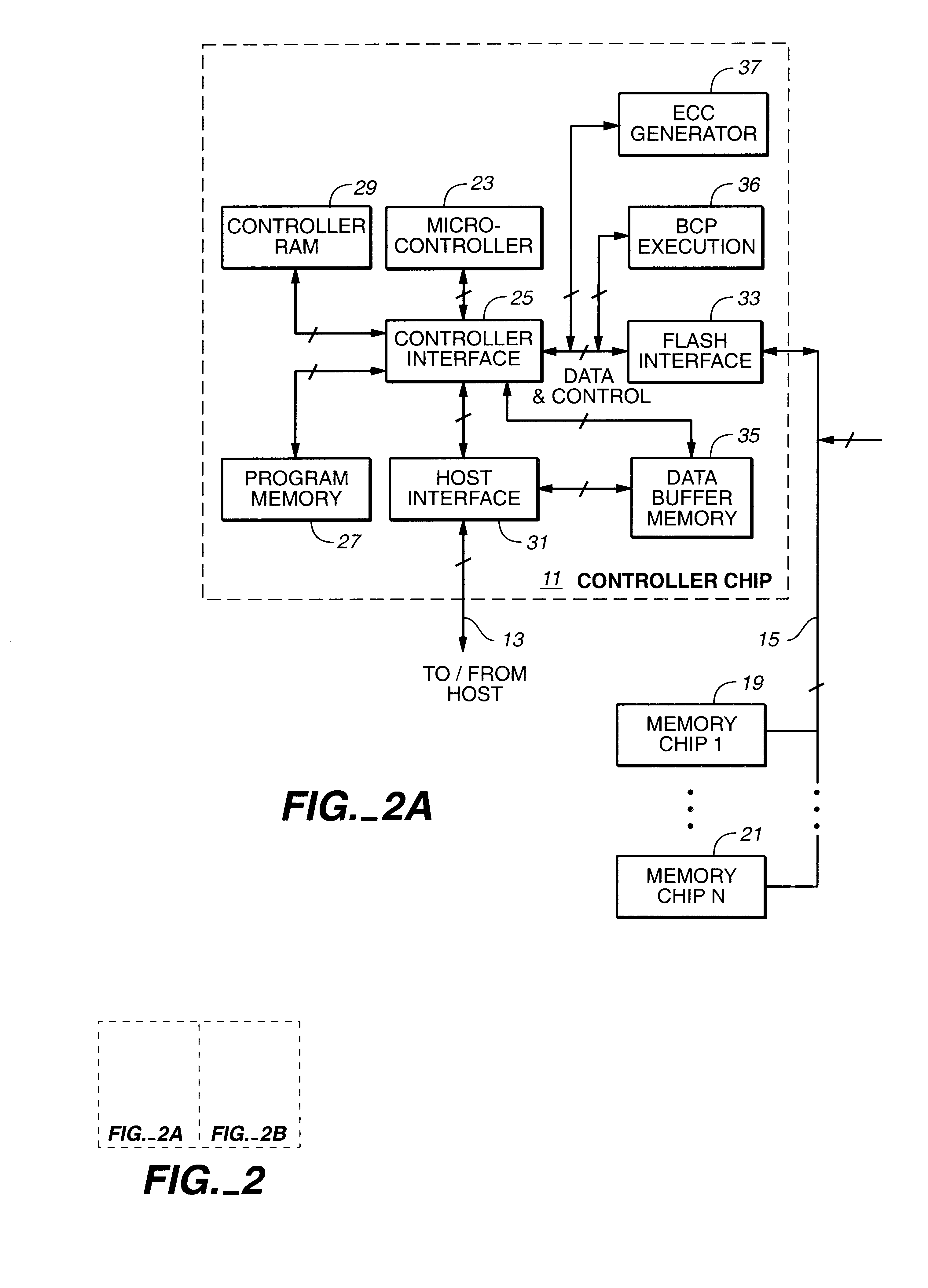

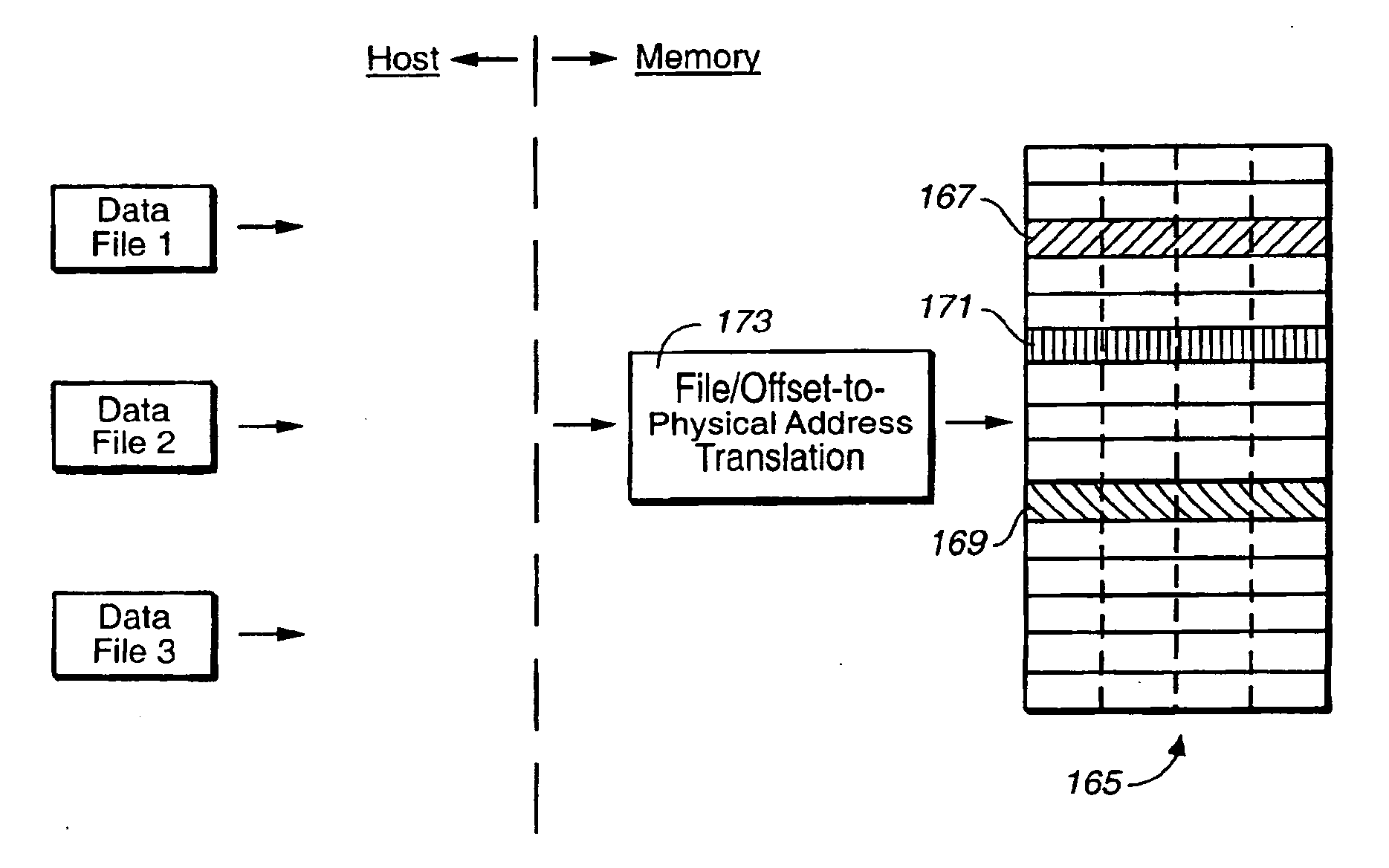

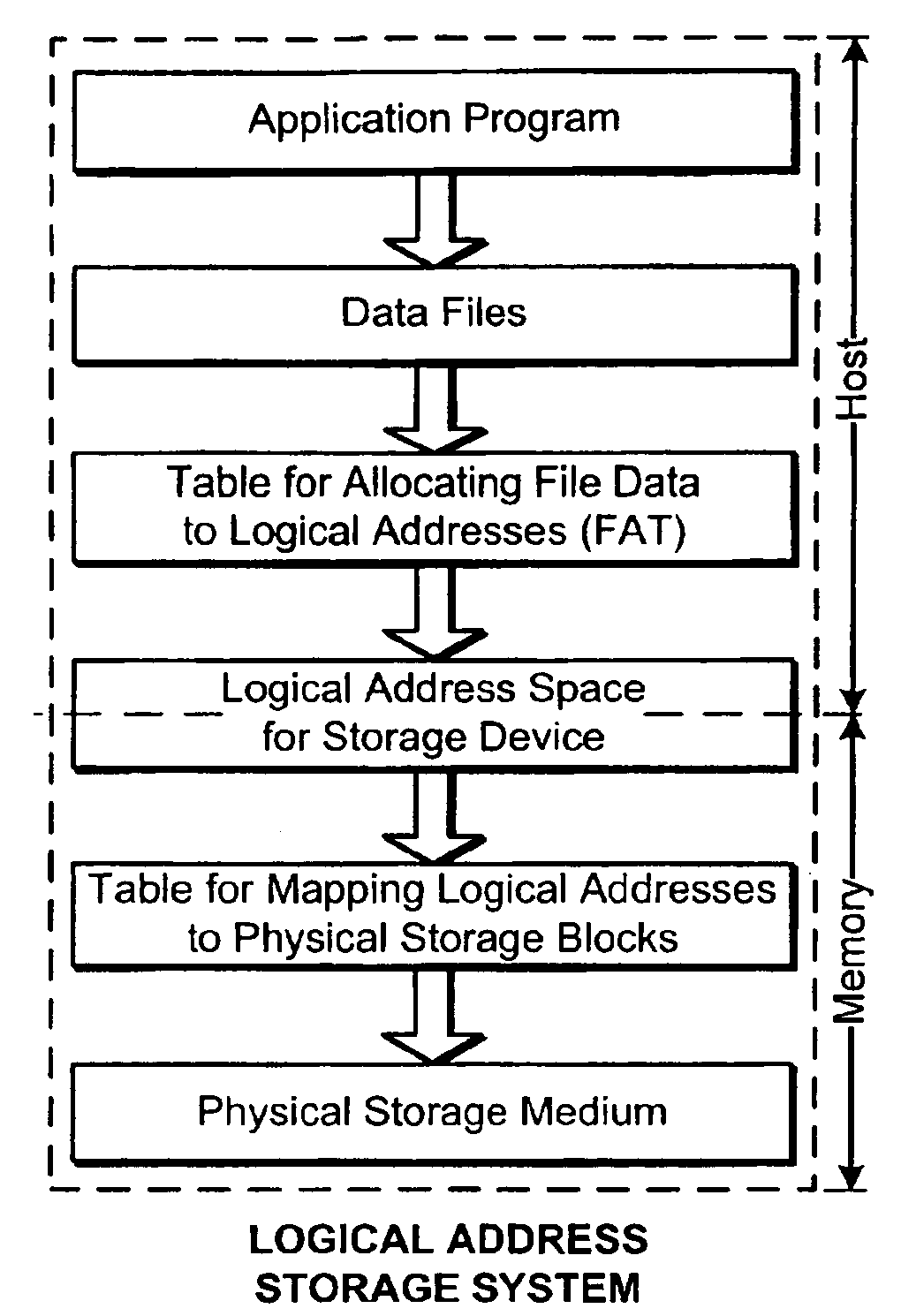

Methods for data alignment in non-volatile memories with a directly mapped file storage system

Inactive | US20070143567A1 | Promote high performance | Improve performance | Memory architecture accessing/allocation | Memory systems | Audio power amplifier | Data file

In the file storage system, each portion belonging to a data file is identified by its file ID and an offset along the data file, where the offset is a constant for the file and every file data portion is always kept at the same position within a memory page to be read or programmed in parallel. In this way, every time a page containing a file portion is read and copied to another page, the data in it is always page-aligned, and each bit within the file portion can always be manipulated by the same sense amplifier and the same set of data latches within the same memory column. In a preferred implementation, the page alignment is such that (offset within a page) = (data offset within a file) MOD (page size). Any gaps that may exist in a page can be padded with any existing page-aligned valid data.

Owner:SANDISK TECH LLC
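
The alignment rule in the abstract above, (offset within a page) = (data offset within a file) MOD (page size), is simple arithmetic. The following is a minimal sketch of it; the function names and the 2048-byte page size are hypothetical and not taken from the source.

```python
def offset_in_page(file_offset: int, page_size: int) -> int:
    """Return where data at `file_offset` sits inside a page (the MOD rule)."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    if file_offset < 0:
        raise ValueError("file_offset must be non-negative")
    return file_offset % page_size


def is_page_aligned(file_offset: int, position_in_page: int, page_size: int) -> bool:
    """Check that a stored portion still sits at its page-aligned position."""
    return position_in_page == offset_in_page(file_offset, page_size)


PAGE_SIZE = 2048  # hypothetical page size in bytes
print(offset_in_page(5000, PAGE_SIZE))        # 904, since 5000 - 2 * 2048 = 904
print(is_page_aligned(5000, 904, PAGE_SIZE))  # True
```

Because the result depends only on the file offset and the page size, copying the portion to any other page leaves it in the same column position.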

Method of reducing disturbs in non-volatile memory

Owner:SANDISK TECH LLC

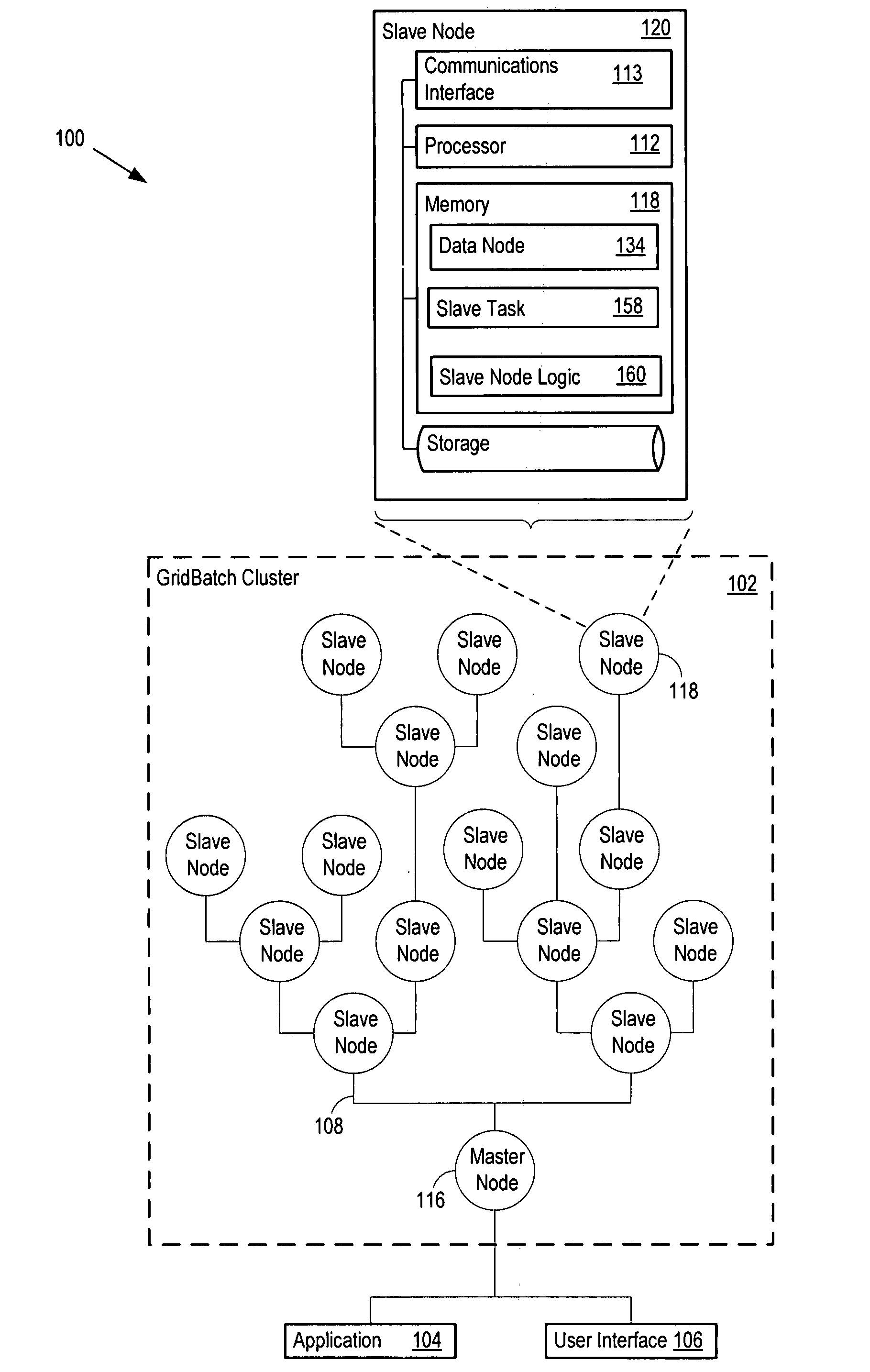

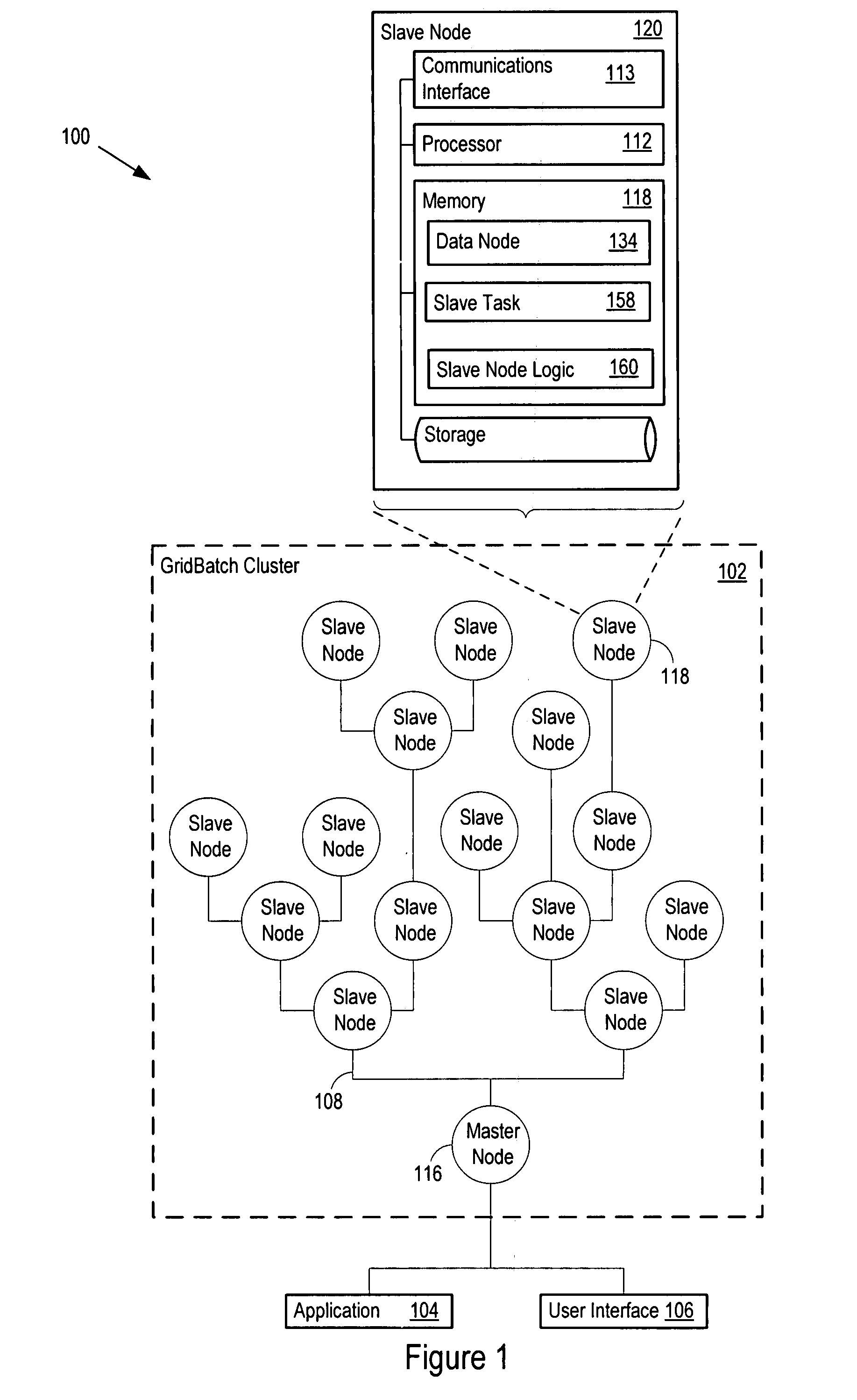

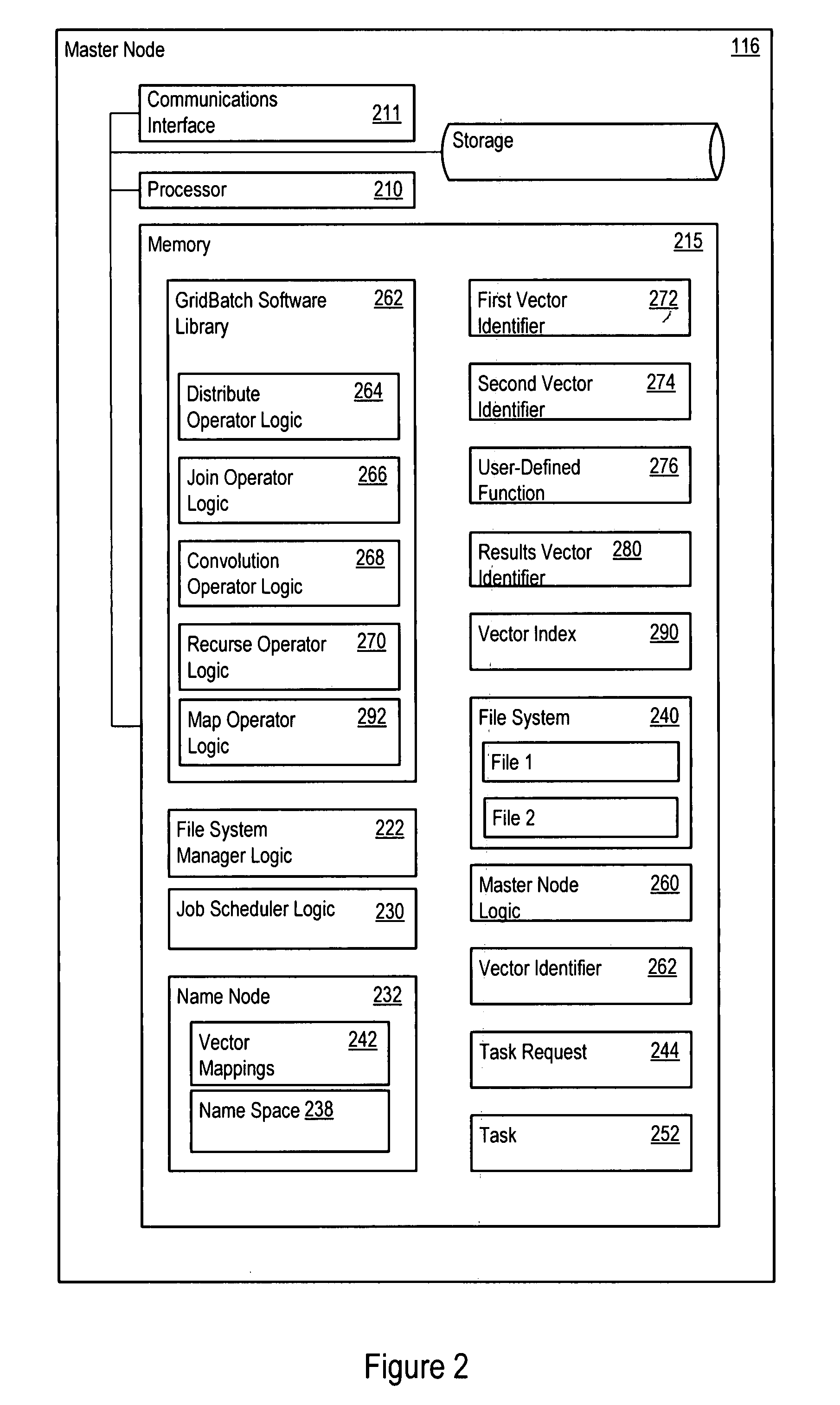

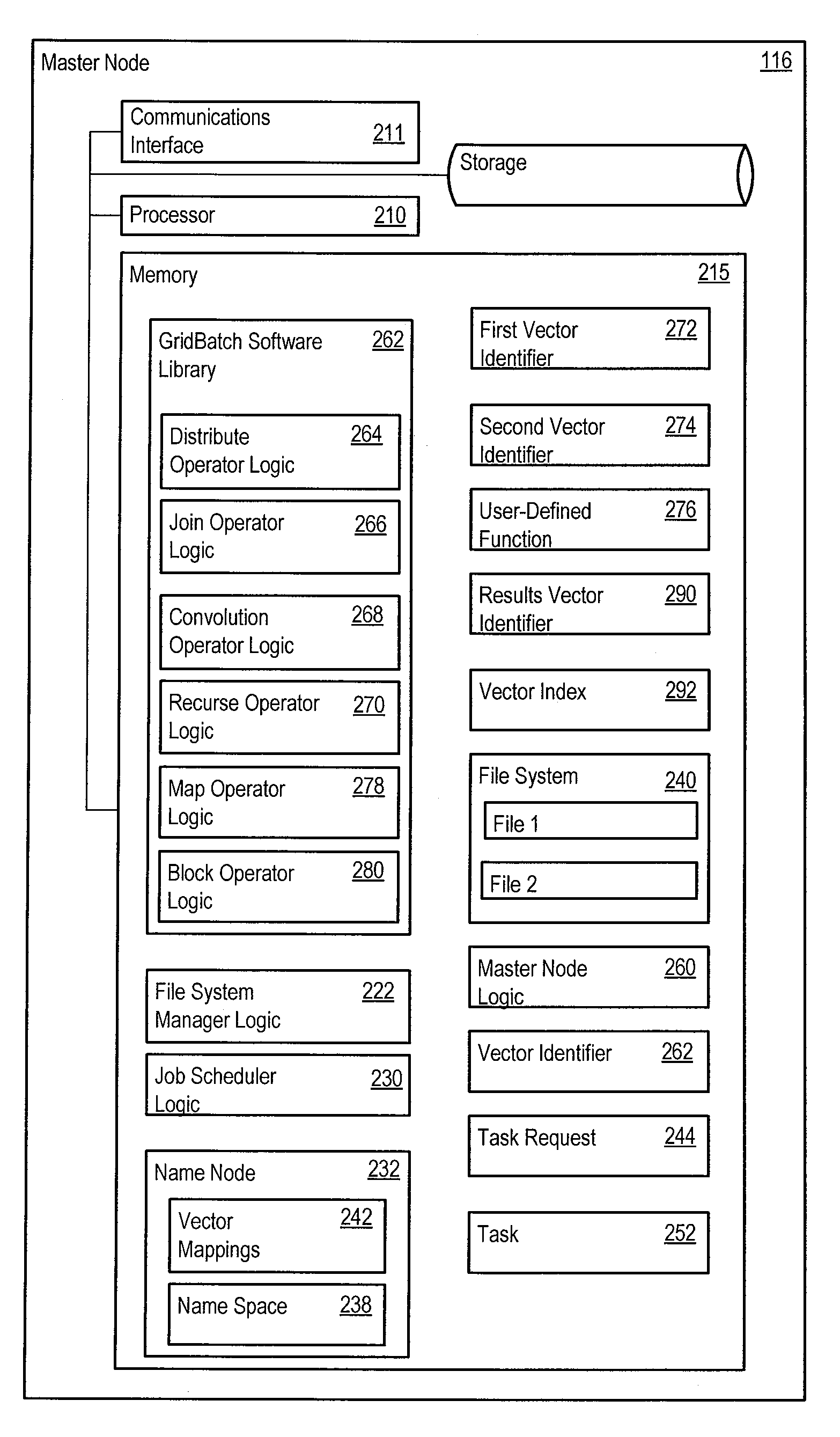

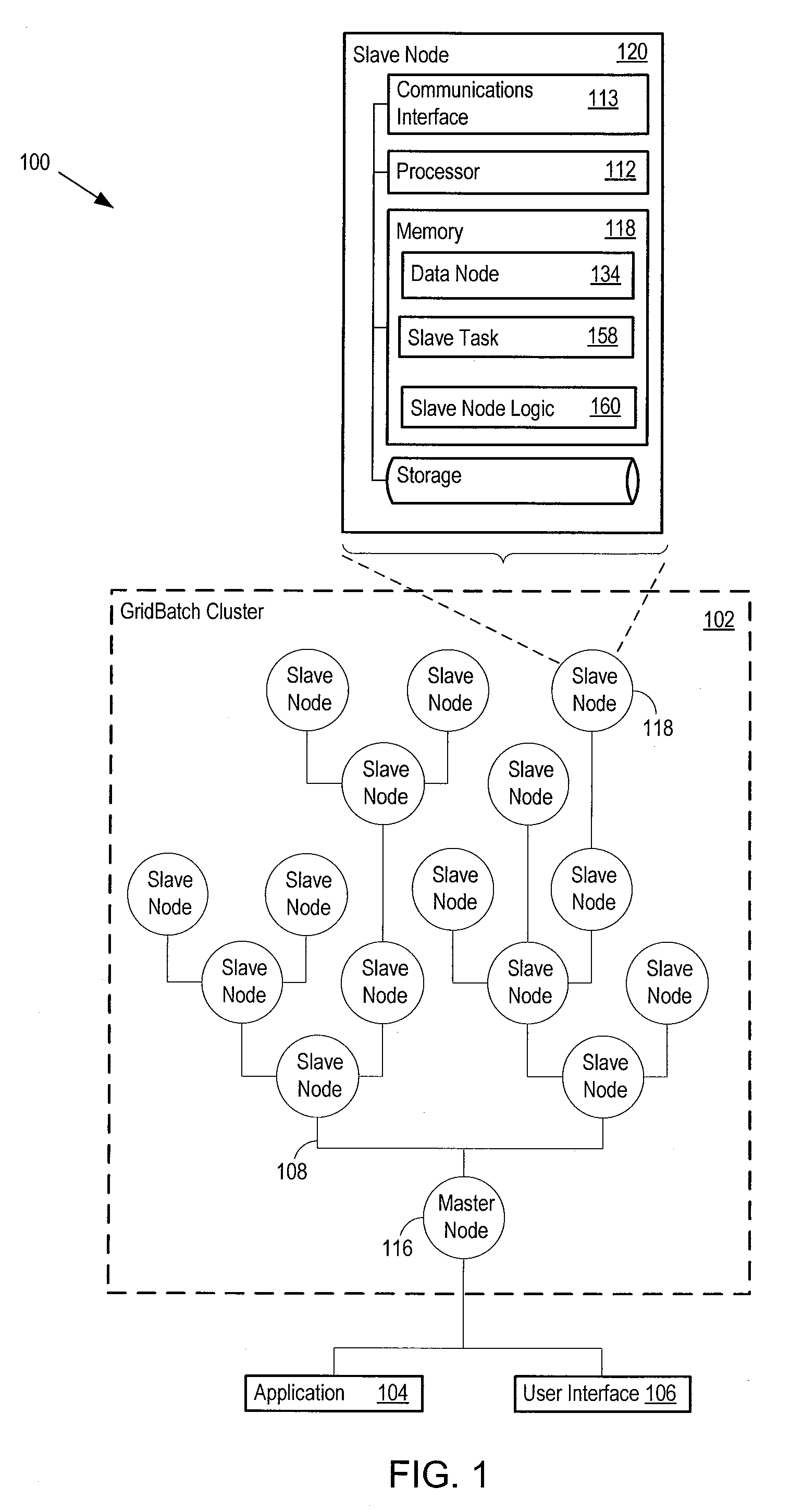

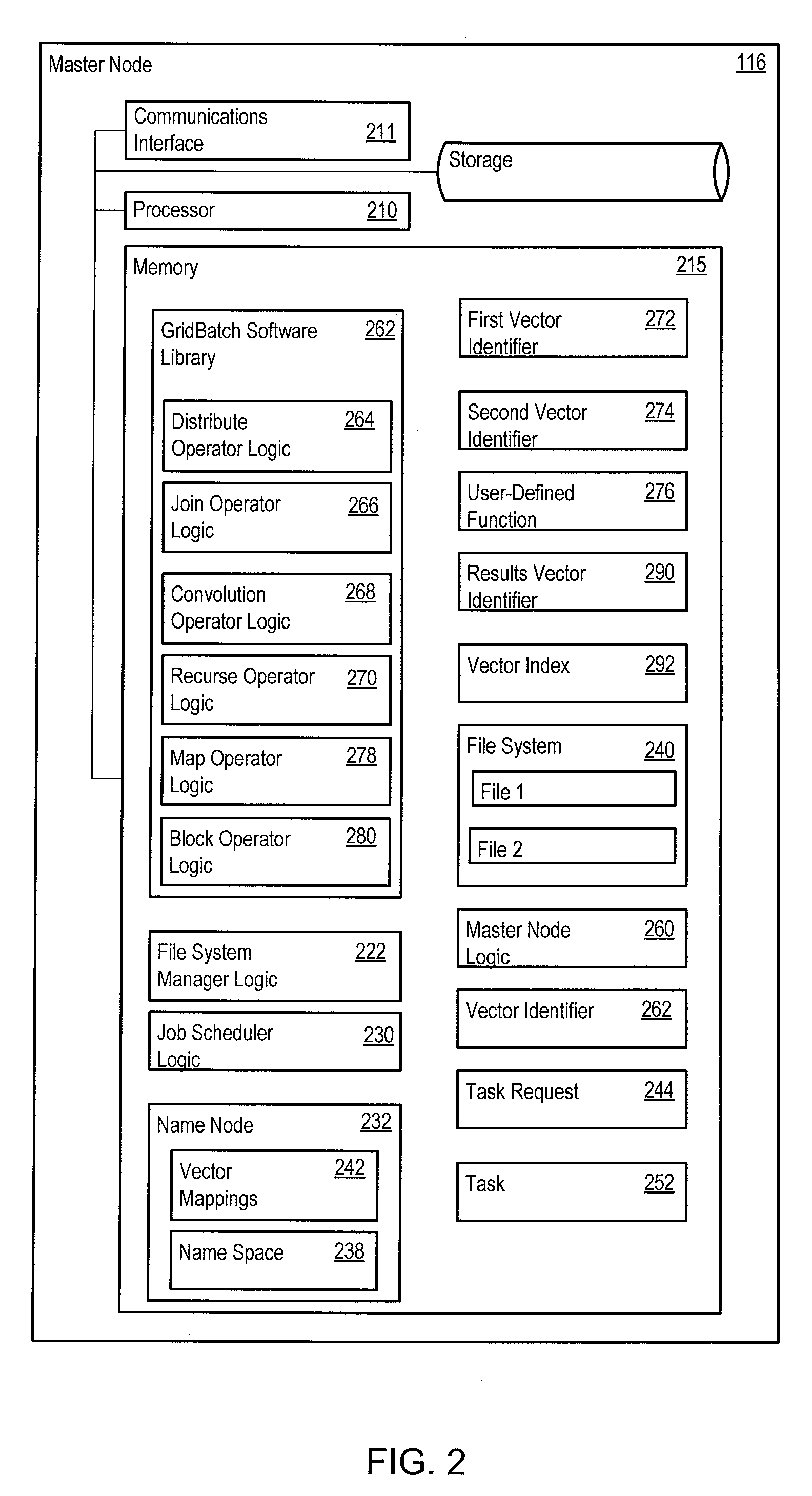

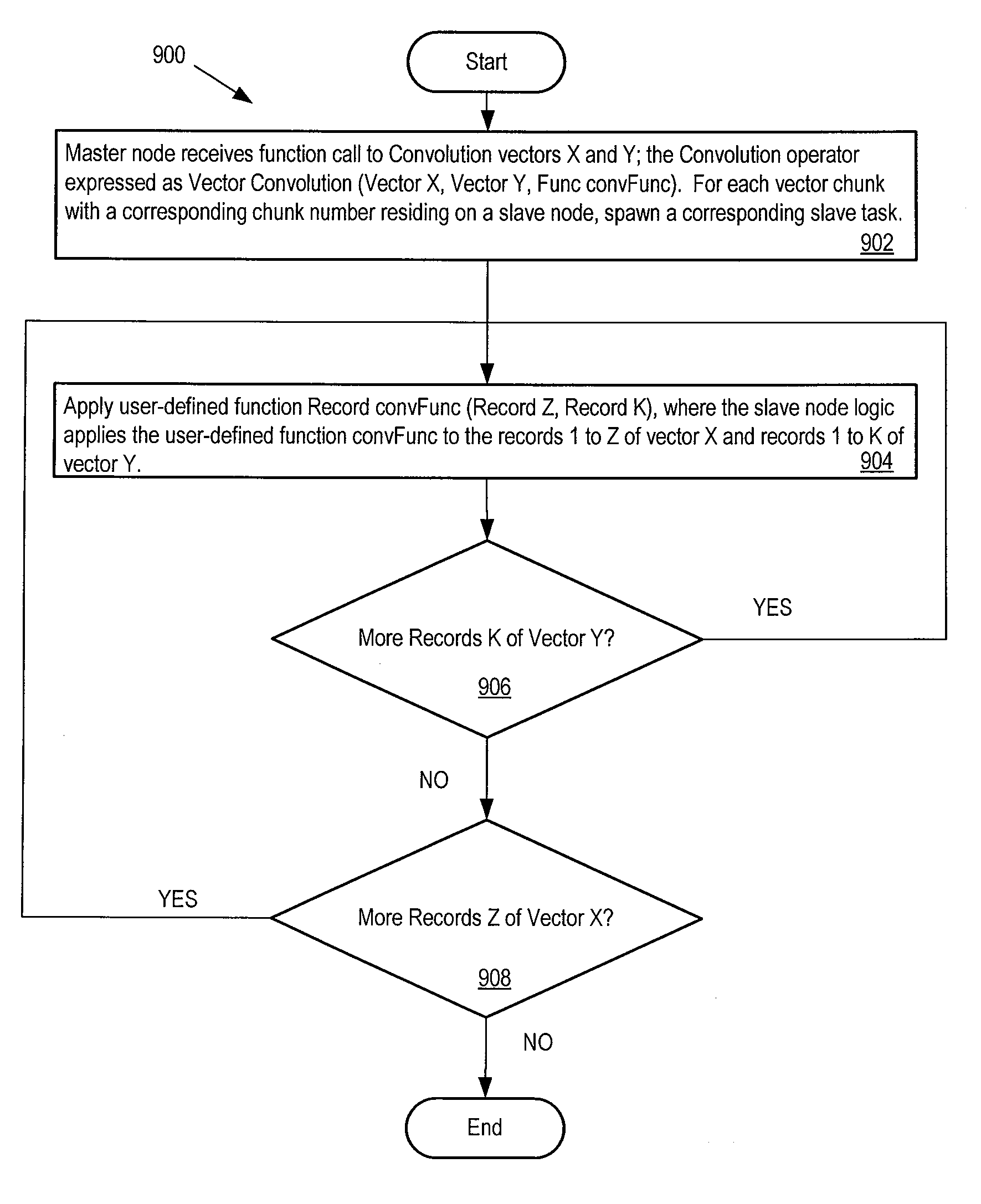

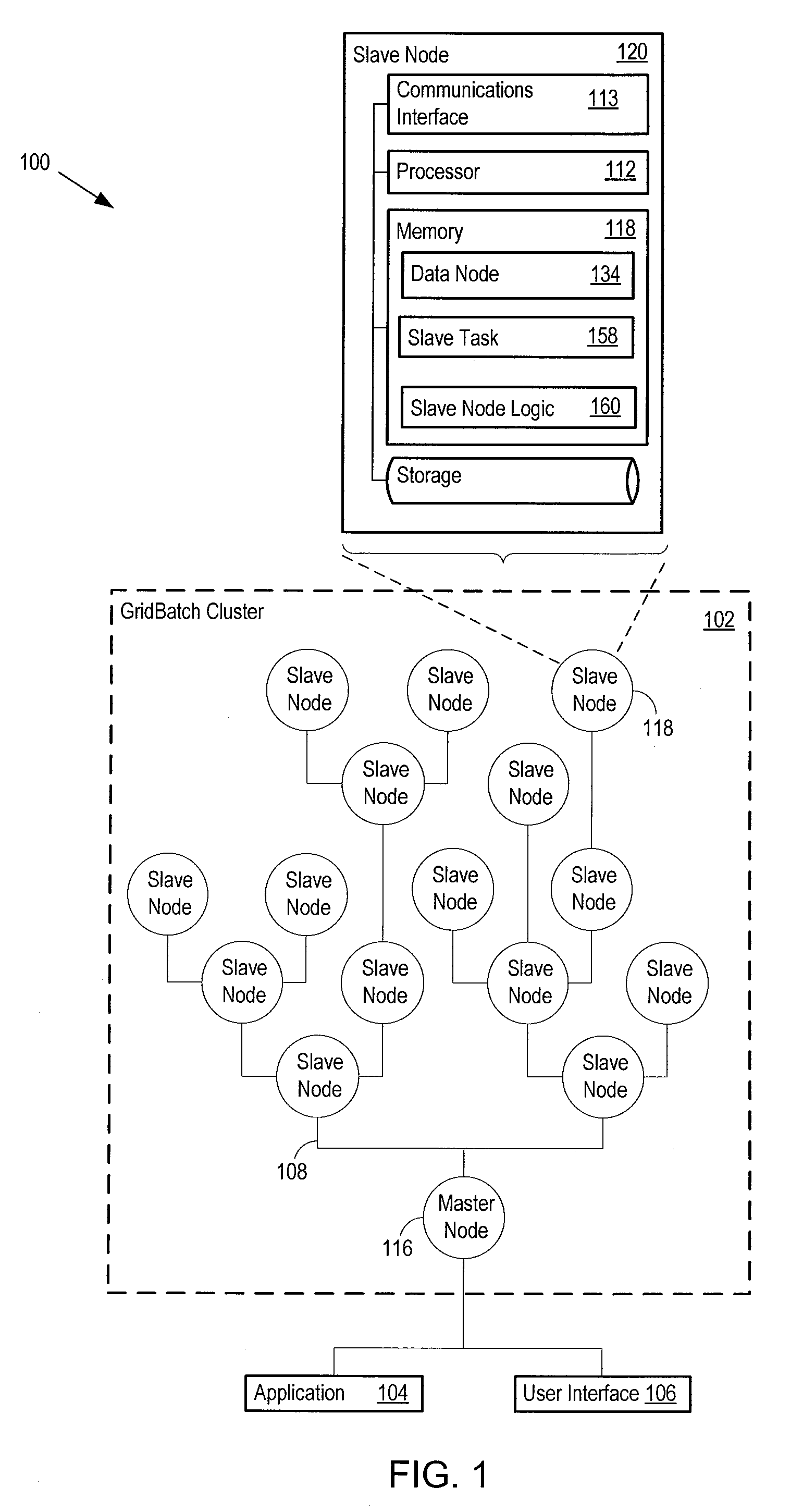

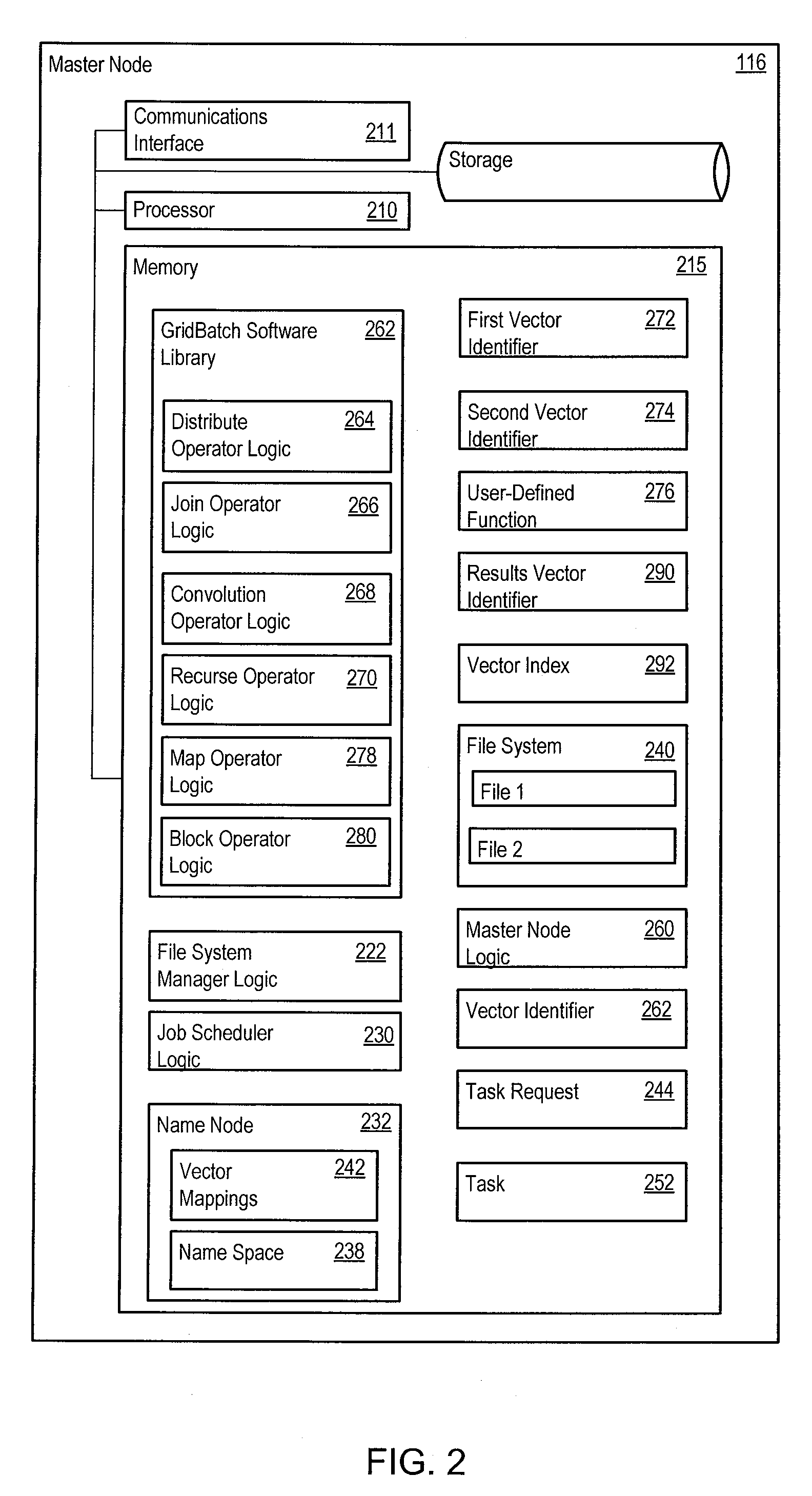

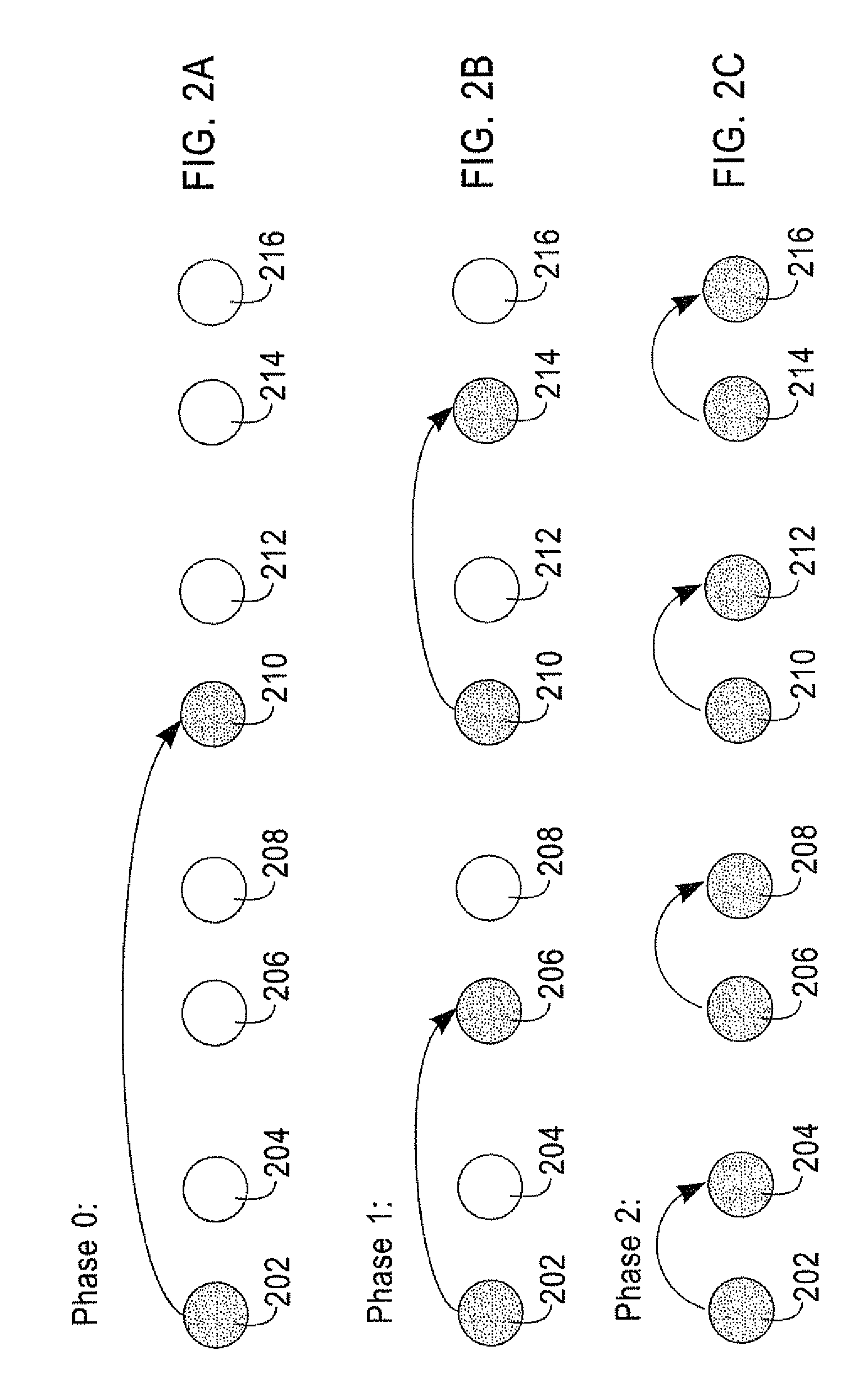

Infrastructure for parallel programming of clusters of machines

Active | US20090089544A1 | Easy to convert | Improve performance | Digital data information retrieval | General purpose stored program computer | Parallel programming model | Multi processor

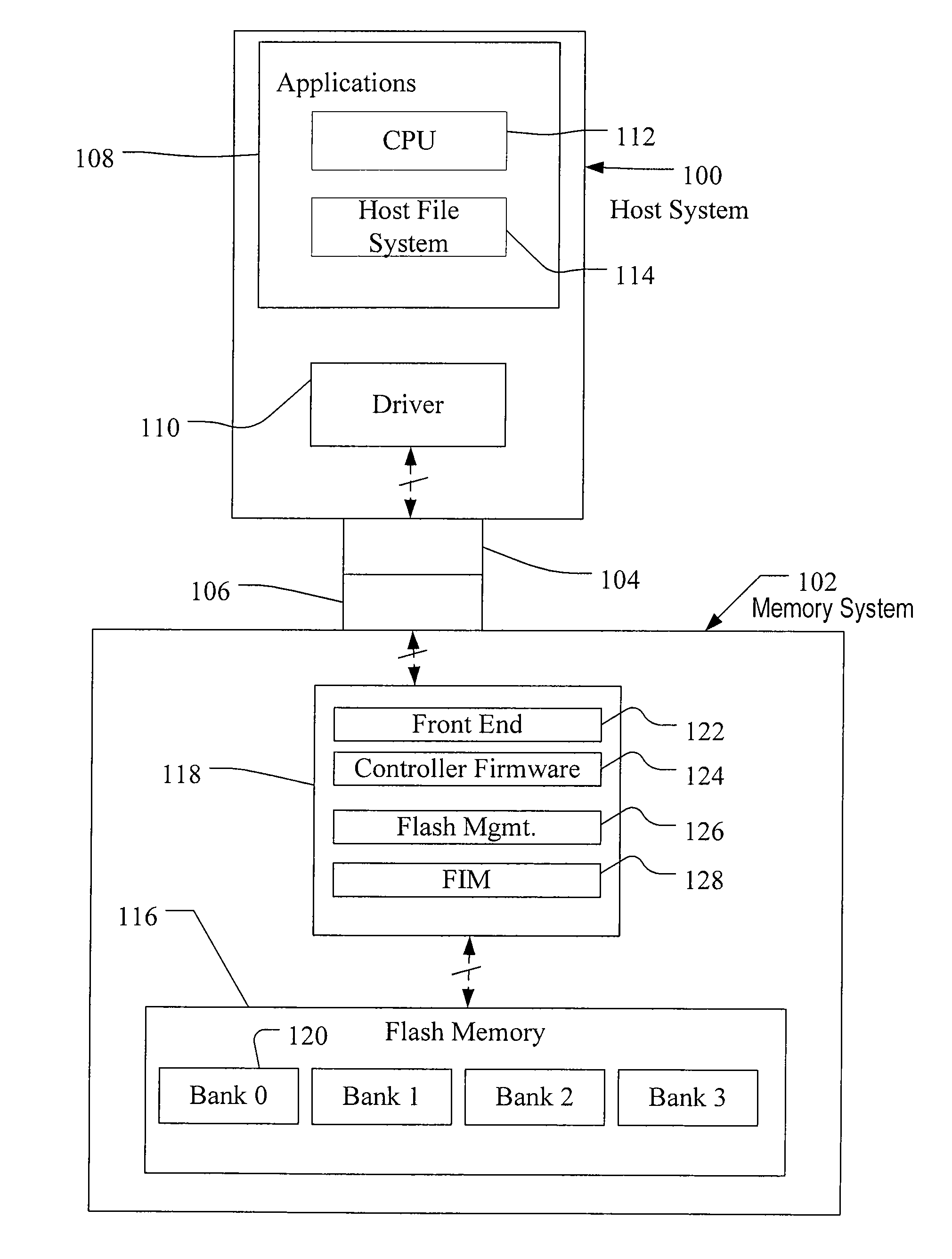

GridBatch provides an infrastructure framework that hides from programmers the complexities and burdens of developing the logic and programming of applications that implement detailed parallelized computations. A programmer may use GridBatch to implement parallelized computational operations that minimize network bandwidth requirements and efficiently partition and coordinate computational processing in a multiprocessor configuration. GridBatch provides an effective and lightweight approach to rapidly build parallelized applications using economically viable multiprocessor configurations that achieve the highest performance results.

Owner:ACCENTURE GLOBAL SERVICES LTD

Non-volatile memories with data alignment in a directly mapped file storage system

Inactive | US20070143566A1 | Improve performance | Efficient packaging | Memory architecture accessing/allocation | Memory systems | Audio power amplifier | Data file

In the file storage system, each portion belonging to a data file is identified by its file ID and an offset along the data file, where the offset is a constant for the file and every file data portion is always kept at the same position within a memory page to be read or programmed in parallel. In this way, every time a page containing a file portion is read and copied to another page, the data in it is always page-aligned, and each bit within the file portion can always be manipulated by the same sense amplifier and the same set of data latches within the same memory column. In a preferred implementation, the page alignment is such that (offset within a page) = (data offset within a file) MOD (page size). Any gaps that may exist in a page can be padded with any existing page-aligned valid data.

Owner:SANDISK TECH LLC

Method of reducing disturbs in non-volatile memory

In a non-volatile memory, the displacement current generated in non-selected word lines that results when the voltage levels on an array's bit lines are changed can result in disturbs. Techniques for reducing these currents are presented. In a first aspect, the number of cells being simultaneously programmed on a word line is reduced. In a non-volatile memory where an array of memory cells is composed of a number of units, and the units are combined into planes that share common word lines, the simultaneous programming of units within the same plane is avoided. Multiple units may be programmed in parallel, but these are arranged to be in separate planes. This is done by selecting the number of units to be programmed in parallel and their order such that all the units programmed together are from distinct planes, by comparing the units to be programmed to see if any are from the same plane, or a combination of these. In a second, complementary aspect, the rate at which the voltage levels on the bit lines are changed is adjustable. By monitoring the frequency of disturbs, or based upon the device's application, the rate at which the bit line drivers change the bit line voltage can be adjusted. This can be implemented by setting the rate externally, or by the controller based upon device performance and the amount of data error being generated.

Owner:SANDISK TECH LLC

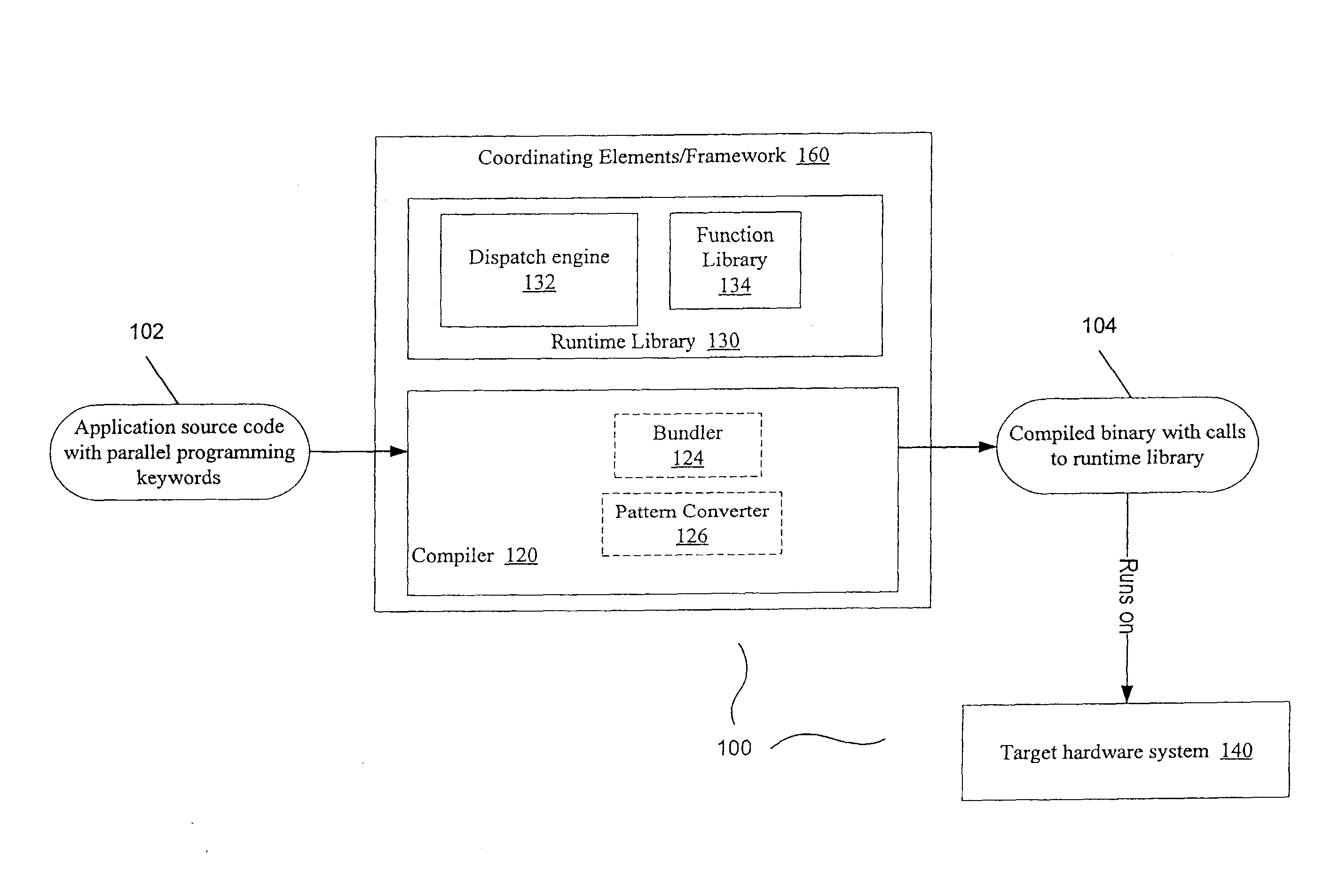

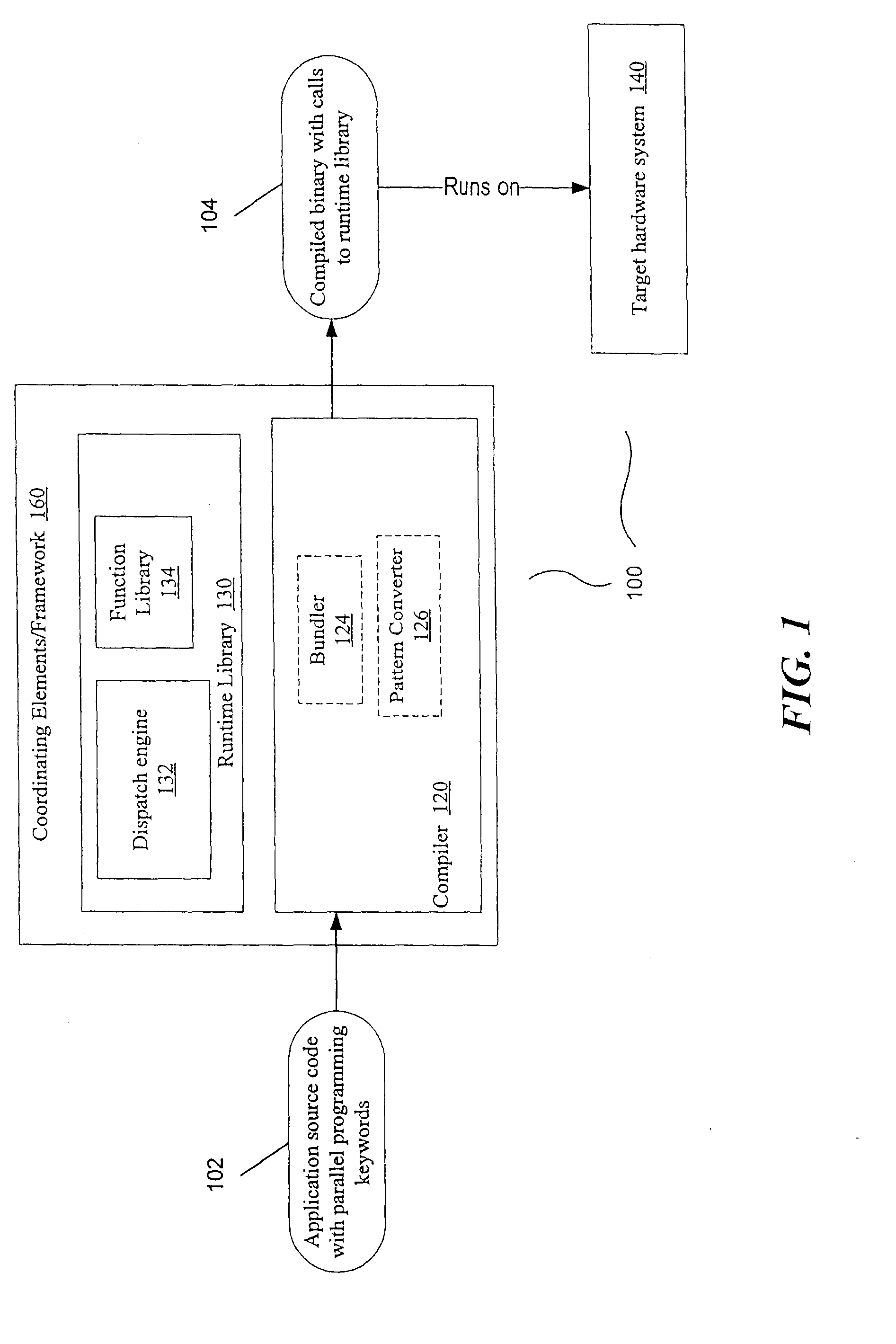

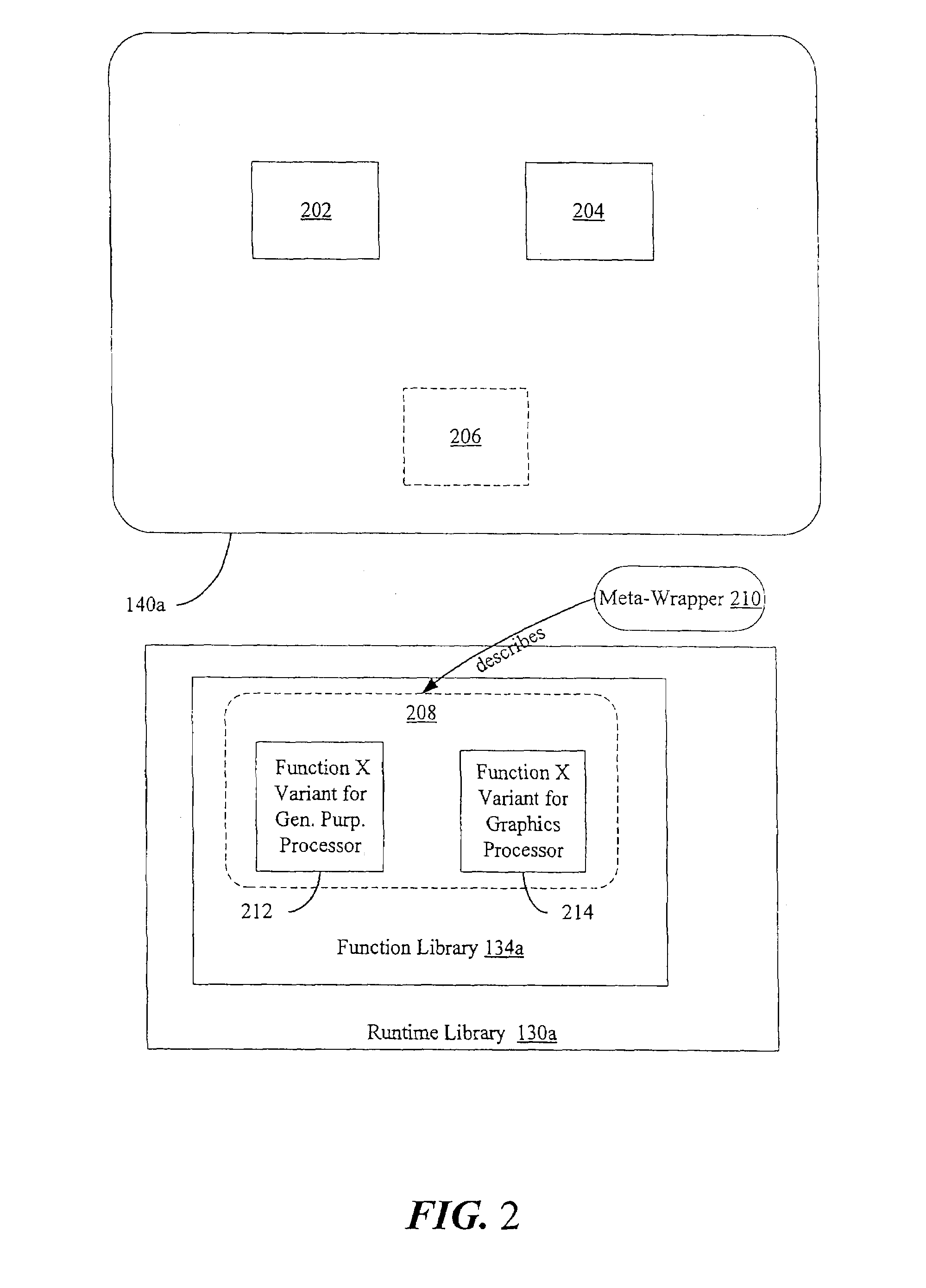

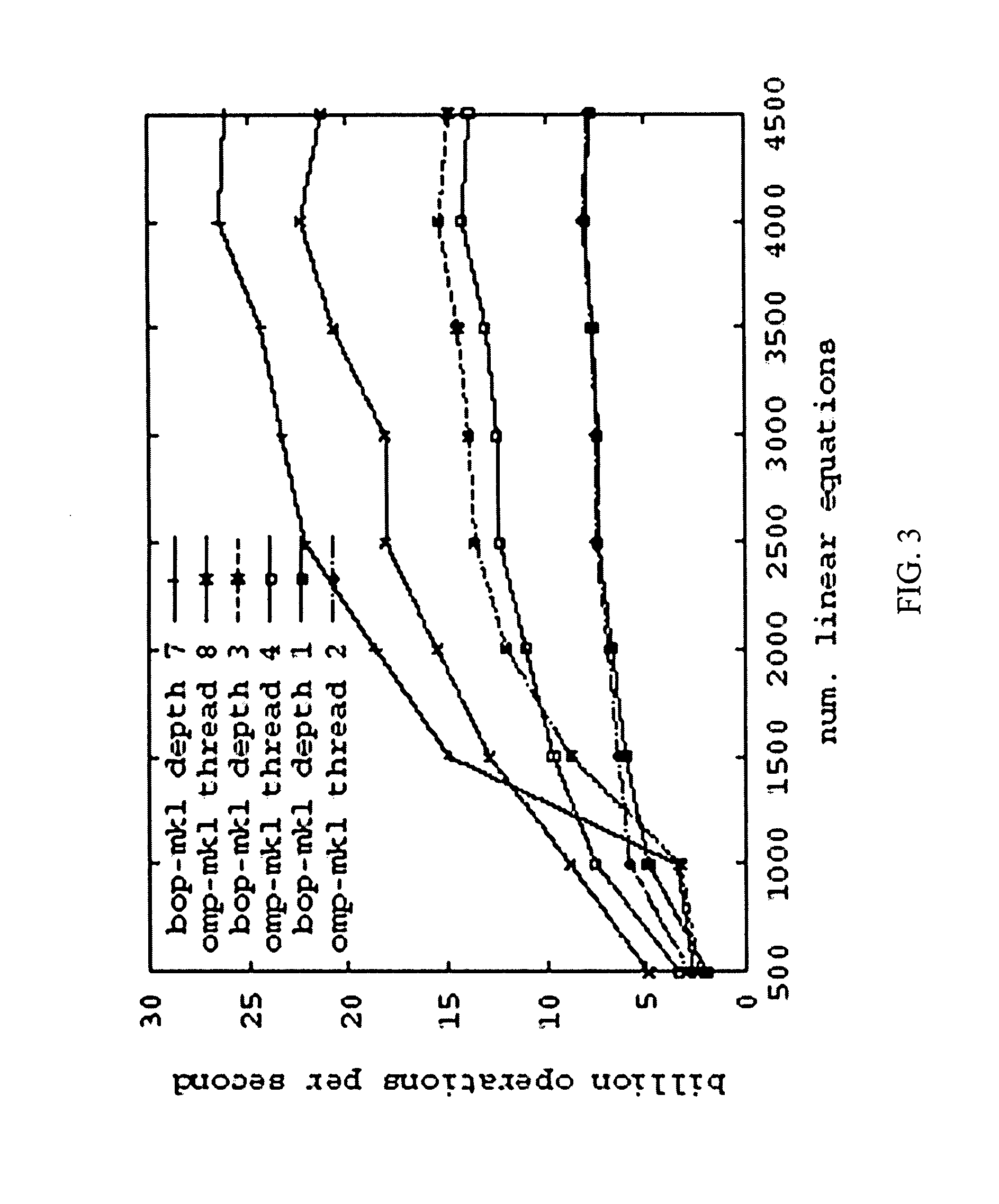

Compiler and runtime for heterogeneous multiprocessor systems

Active | US8296743B2 | Specific program execution arrangements | Memory systems | Theoretical computer science | Generic function

Owner:INTEL CORP

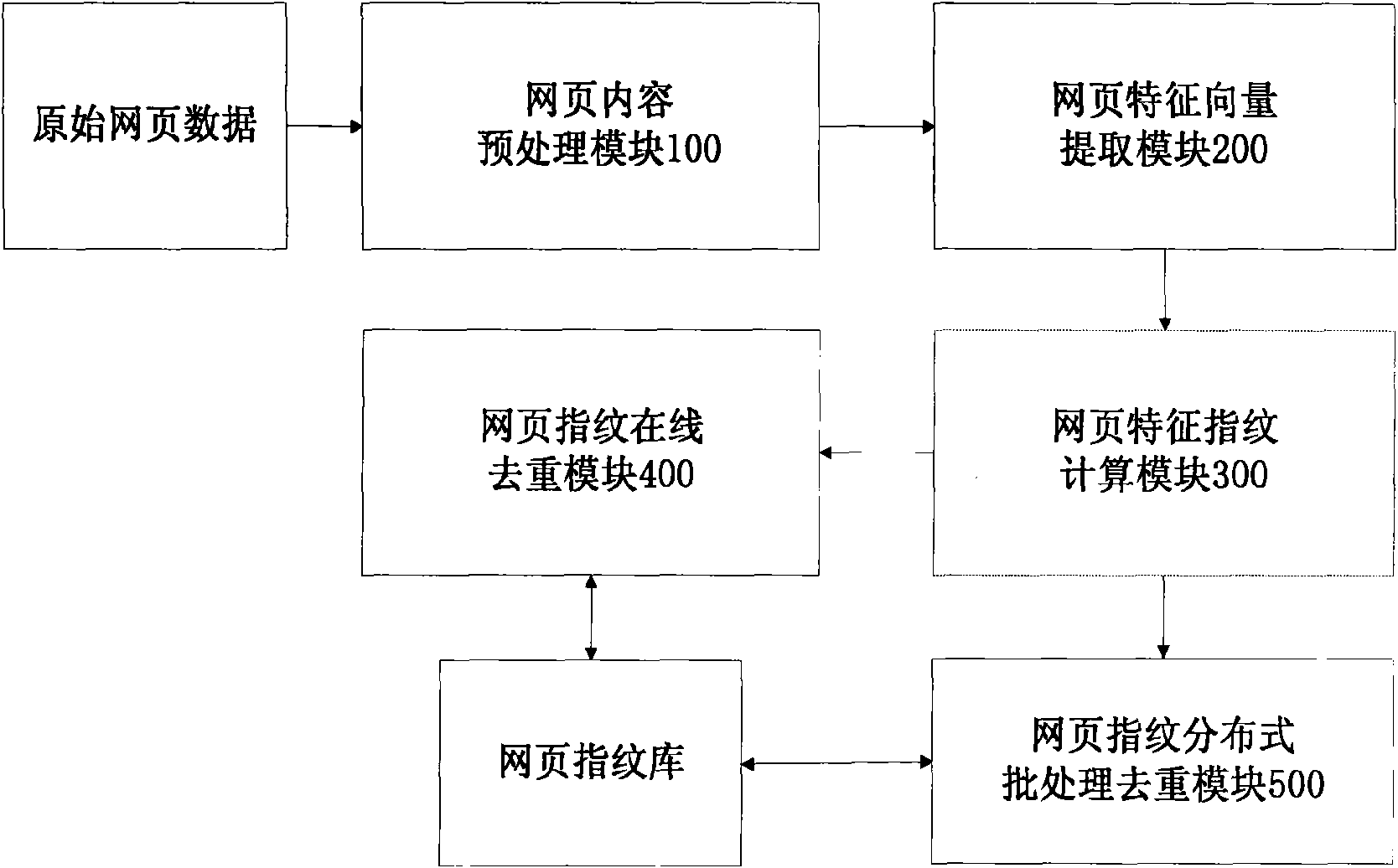

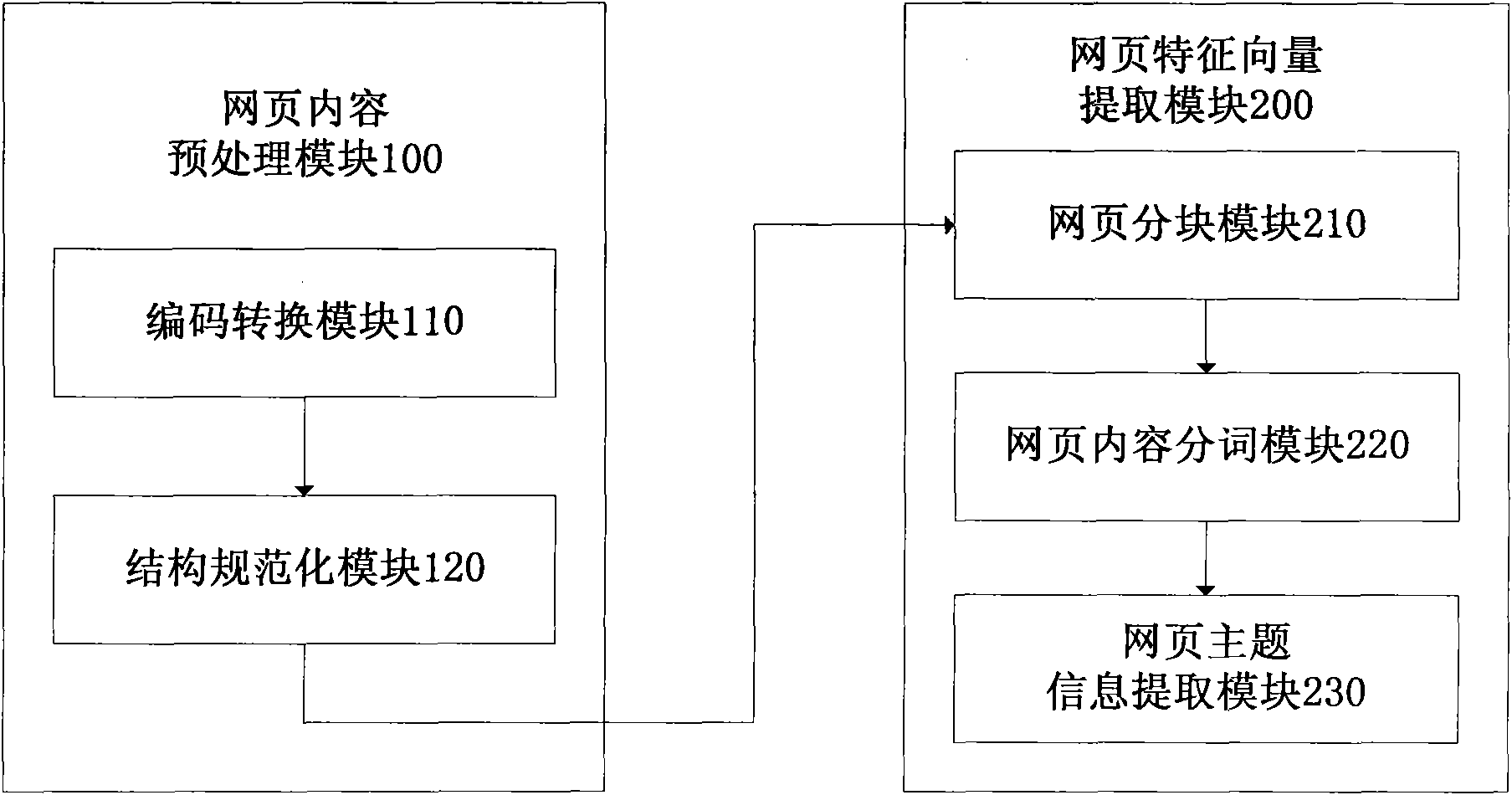

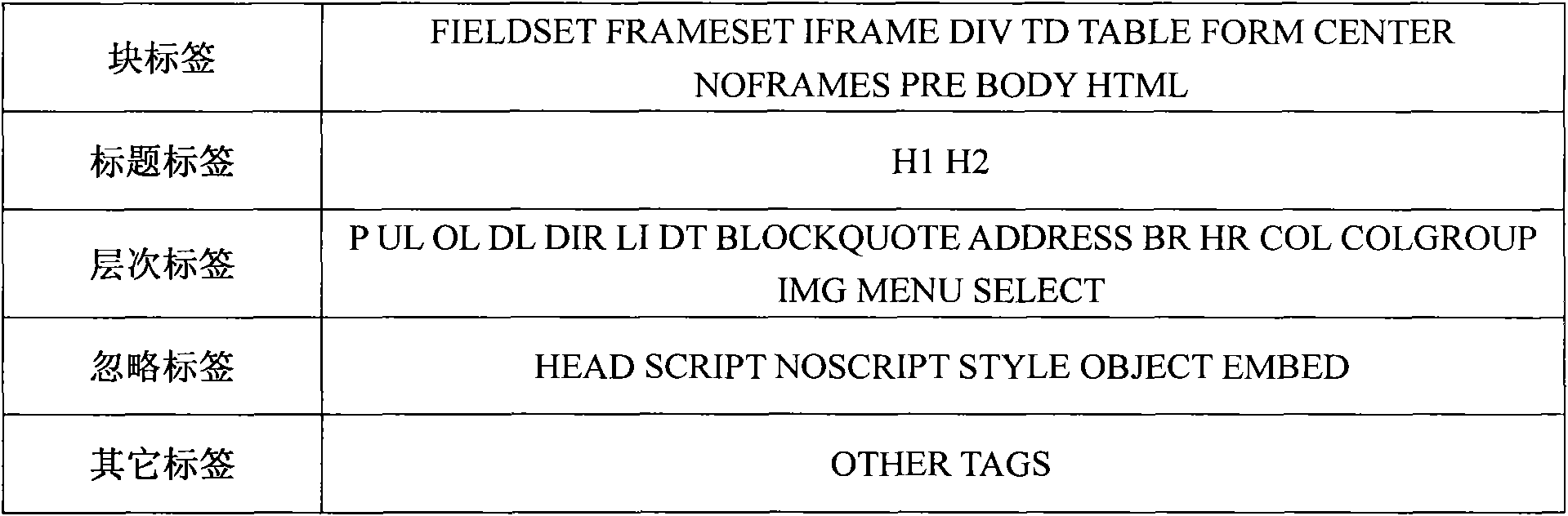

Similar web page duplicate-removing system based on parallel programming mode

Inactive | CN101645082A | Avoid judgment bias | Improve efficiency | Special data processing applications | Document preparation | Web crawler

The invention provides a similar web page duplicate-removing system based on a parallel programming mode, comprising a web page content pre-processing module, a web page eigenvector extracting module, a web page feature fingerprint calculation module, a web page fingerprint on-line duplicate-removing module, a web page fingerprint distributed batch duplicate-removing module, and a distributed computing platform. For web pages obtained by web crawlers, the system performs unified conversion of text content encoding, standardization of document structure, removal of web page noise content, analysis and identification of thematic content, lexical segmentation of continuous text content, and the like, thereby forming eigenvectors that represent the web pages. Relevant algorithms can then be applied to these vectors to obtain web page fingerprints that represent web page characteristics. On Internet-scale volumes of data, the system accurately and quickly detects fully or approximately repeated web page content caused by site mirroring, reposting of web documents, and the like, and removes the duplicates, thereby enhancing the storage efficiency of search engines and giving search engine users a better experience.

Owner:HUAZHONG UNIV OF SCI & TECH
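
The abstract above does not name the fingerprint algorithm it uses. As one plausible illustration of the pipeline (feature vector, then fingerprint, then duplicate test), the sketch below substitutes a SimHash-style fingerprint; the tokenizer, the 64-bit width, the MD5 token hash, and the distance threshold of 3 are all assumptions, not details from the patent.

```python
import hashlib
import re
from collections import Counter


def feature_vector(text: str) -> Counter:
    """Term-frequency vector of lowercase word tokens (stand-in feature extraction)."""
    return Counter(re.findall(r"\w+", text.lower()))


def simhash(features: Counter, bits: int = 64) -> int:
    """Fold weighted token hashes into a single `bits`-wide fingerprint."""
    weights = [0] * bits
    for token, weight in features.items():
        digest = hashlib.md5(token.encode("utf-8")).digest()
        token_hash = int.from_bytes(digest[: bits // 8], "big")
        for i in range(bits):
            # Each token votes +weight or -weight on every bit position.
            weights[i] += weight if (token_hash >> i) & 1 else -weight
    return sum(1 << i for i in range(bits) if weights[i] > 0)


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def is_near_duplicate(page_a: str, page_b: str, max_distance: int = 3) -> bool:
    """Treat two pages as duplicates when their fingerprints are close."""
    fp_a = simhash(feature_vector(page_a))
    fp_b = simhash(feature_vector(page_b))
    return hamming(fp_a, fp_b) <= max_distance


# Pages that differ only in case and spacing yield identical feature vectors.
print(is_near_duplicate("Parallel programming models", "parallel   PROGRAMMING models"))
# True
```

In a batch setting, fingerprints could be computed independently per page, which is what makes this step easy to distribute across workers.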

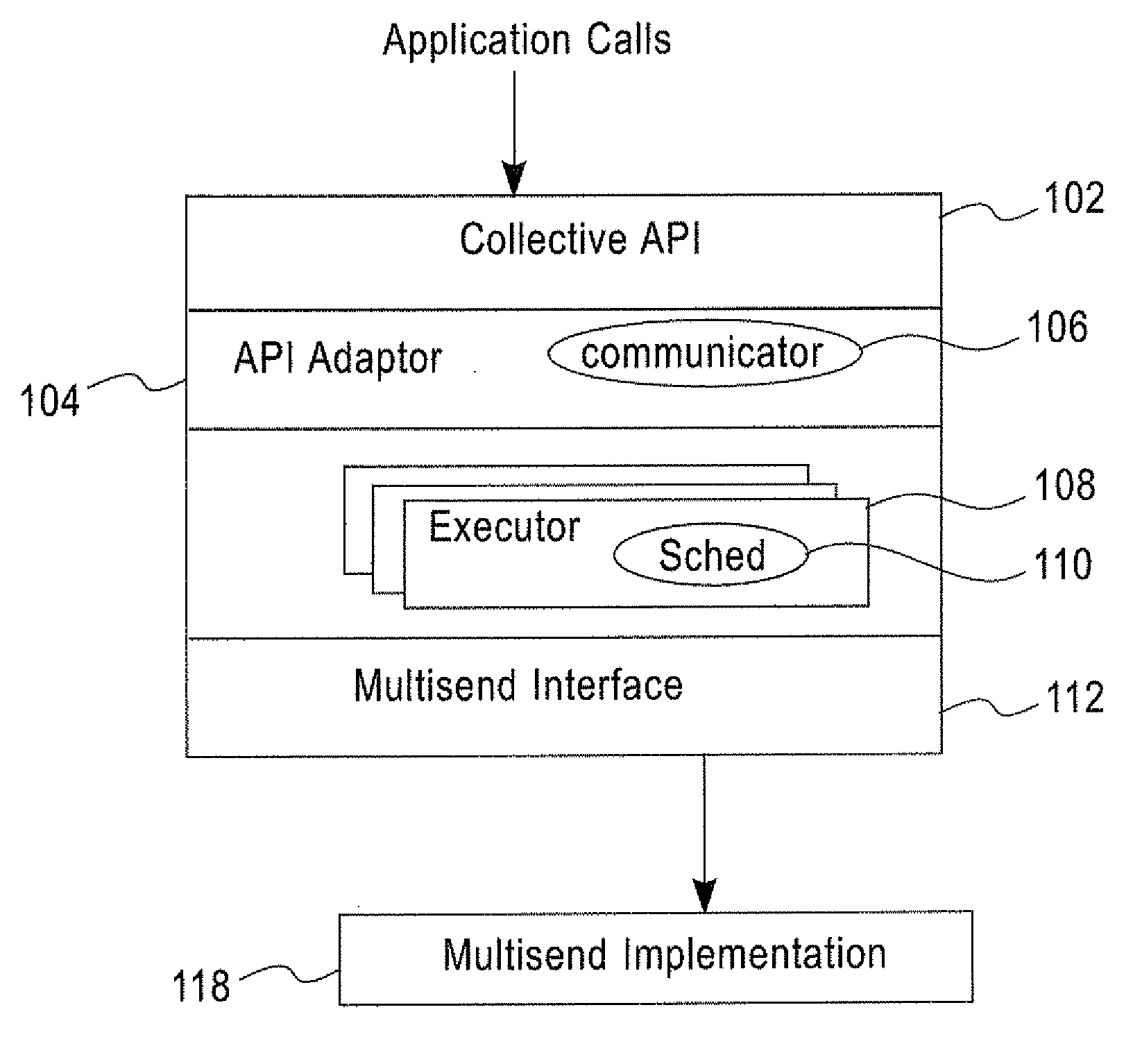

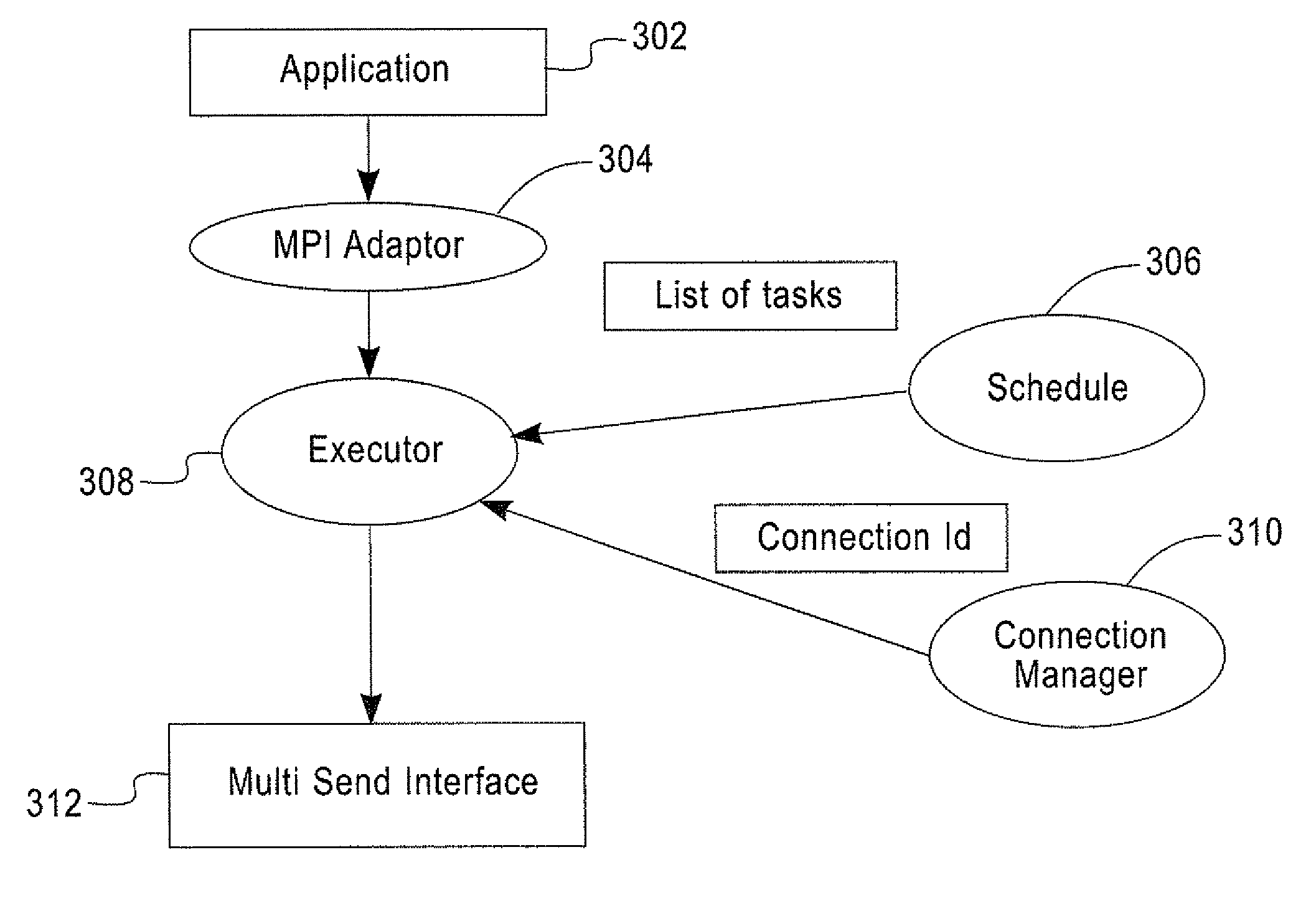

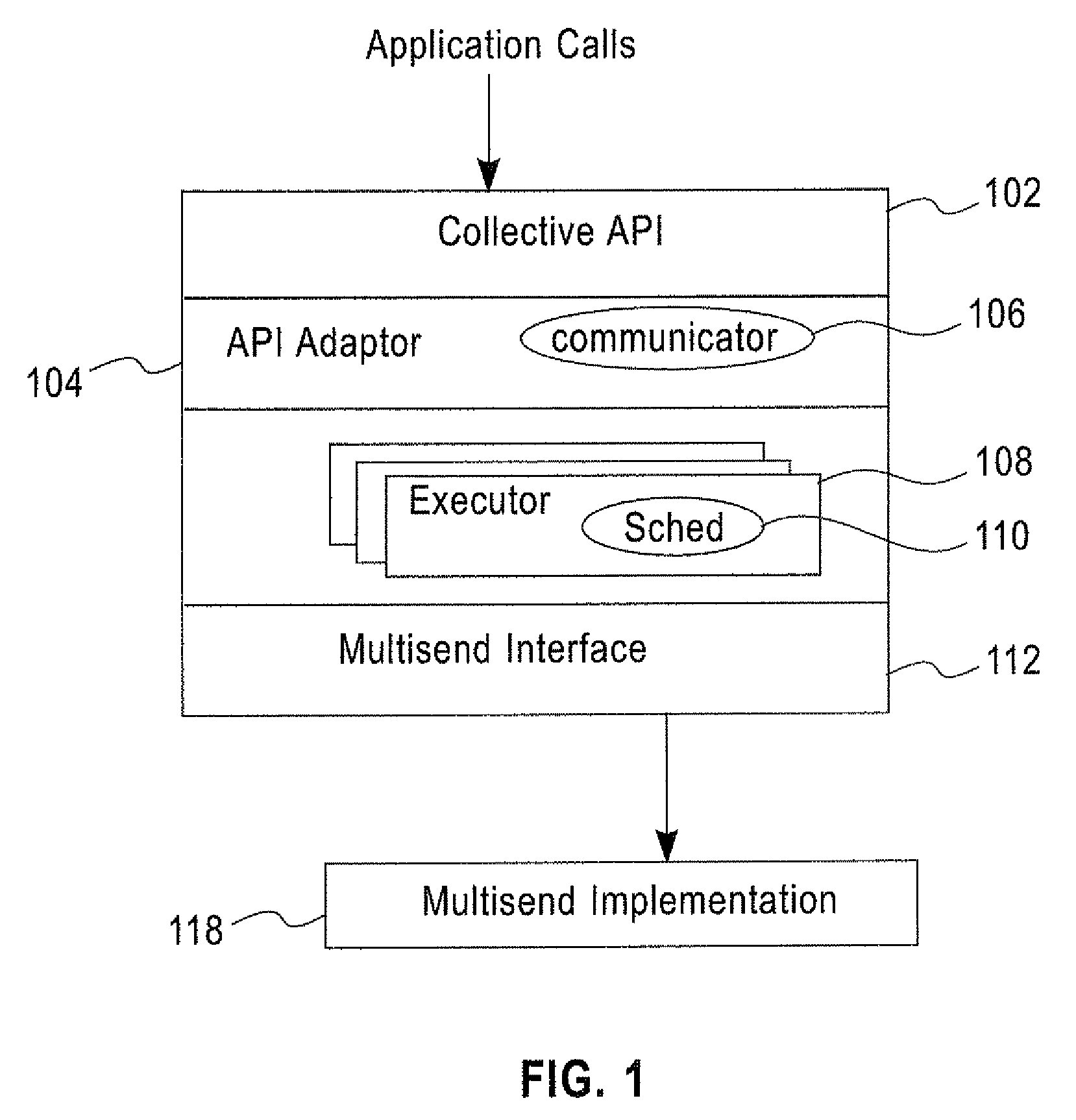

Mechanism to support generic collective communication across a variety of programming models

Inactive | US20090006810A1 | Interprogram communication | General purpose stored program computer | Collective communication | Time schedule

A system and method for supporting collective communications on a plurality of processors that use different parallel programming paradigms, in one aspect, may comprise a schedule defining one or more tasks in a collective operation, an executor that executes the task, a multisend module to perform one or more data transfer functions associated with the tasks, and a connection manager that controls one or more connections and identifies an available connection. The multisend module uses the available connection in performing the one or more data transfer functions. A plurality of processors that use different parallel programming paradigms can use a common implementation of the schedule module, the executor module, the connection manager and the multisend module via a language adaptor specific to a parallel programming paradigm implemented on a processor.

Owner:IBM CORP

Compiler and Runtime for Heterogeneous Multiprocessor Systems

Active | US20090158248A1 | Specific program execution arrangements | Memory systems | Theoretical computer science | Generic function

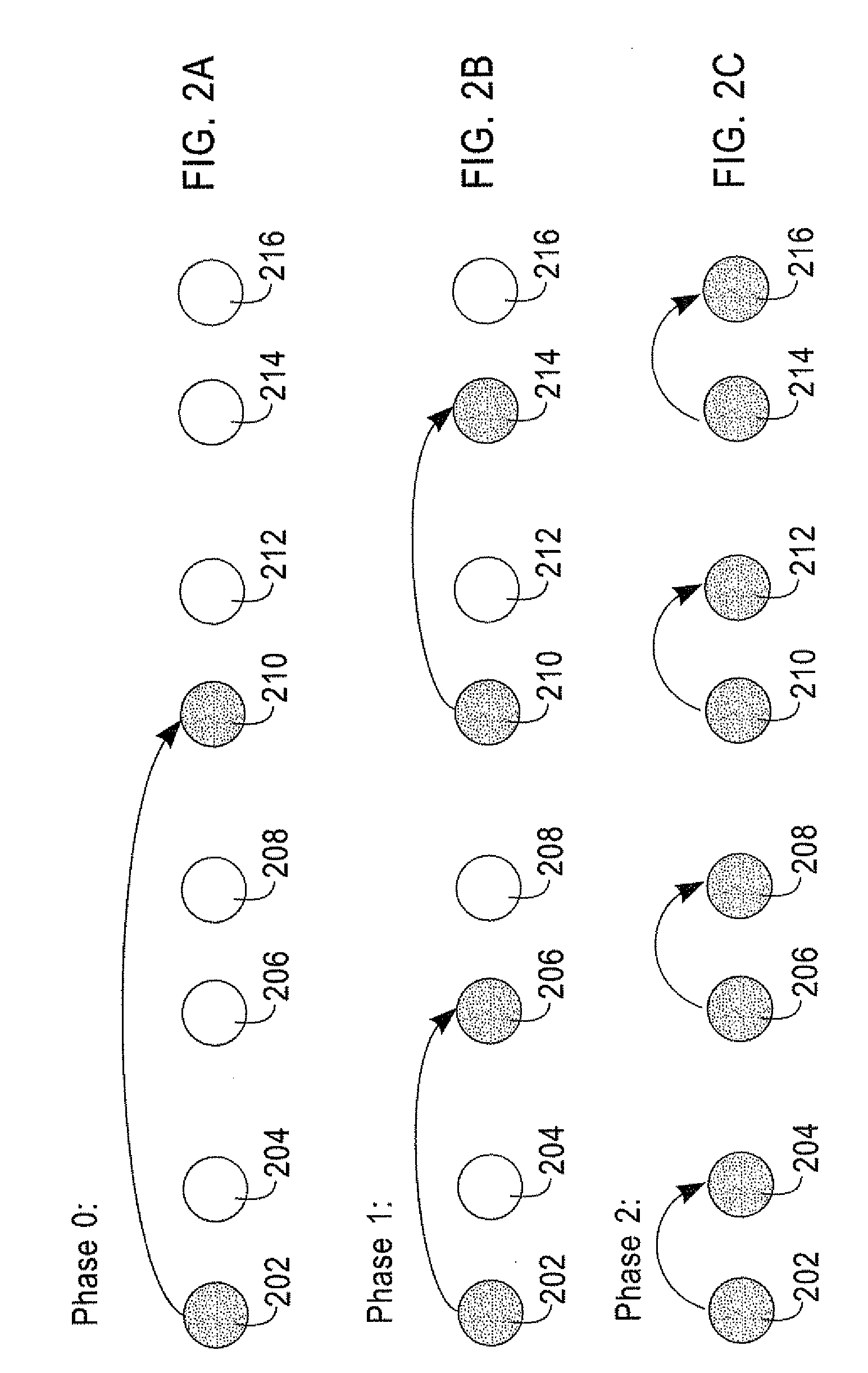

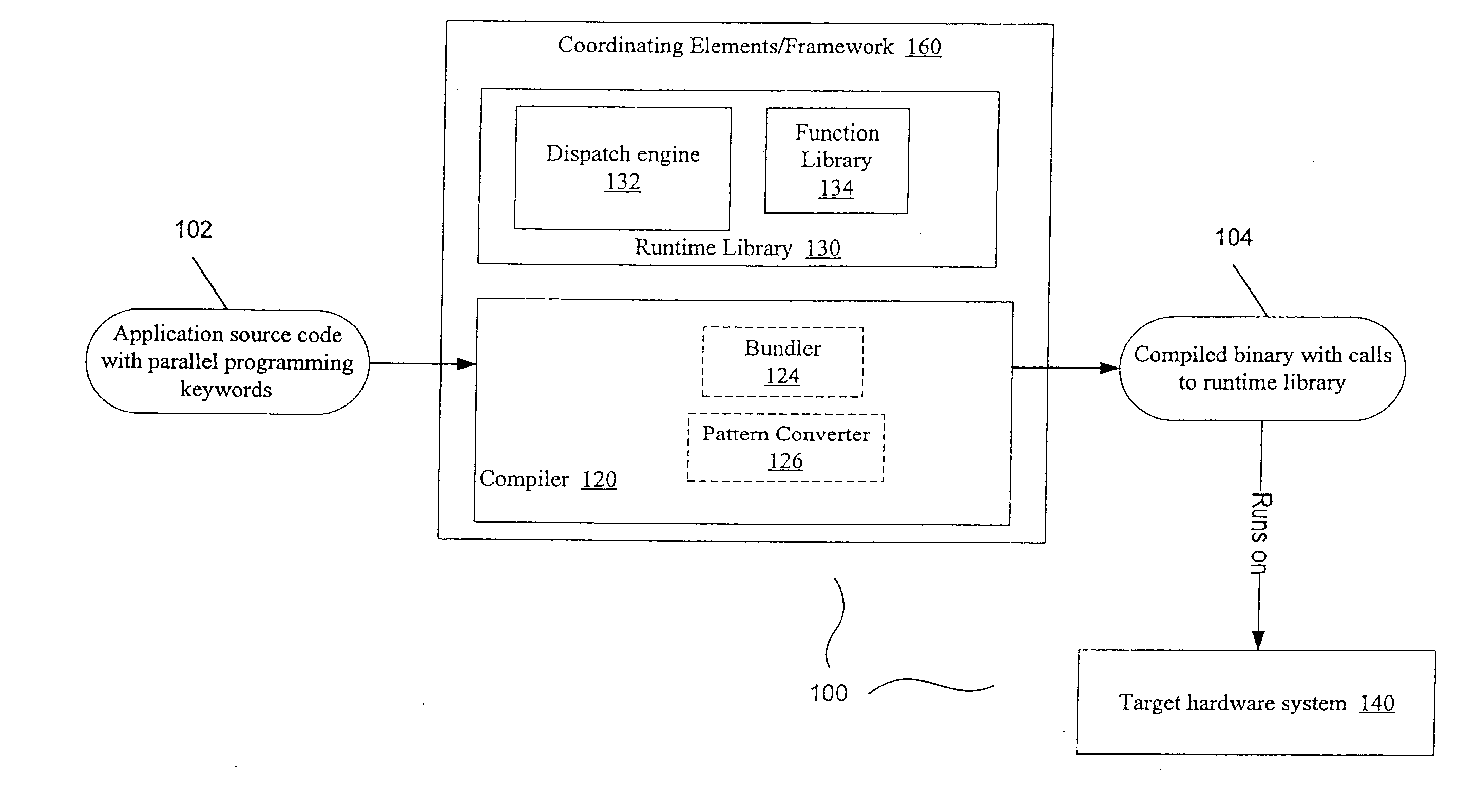

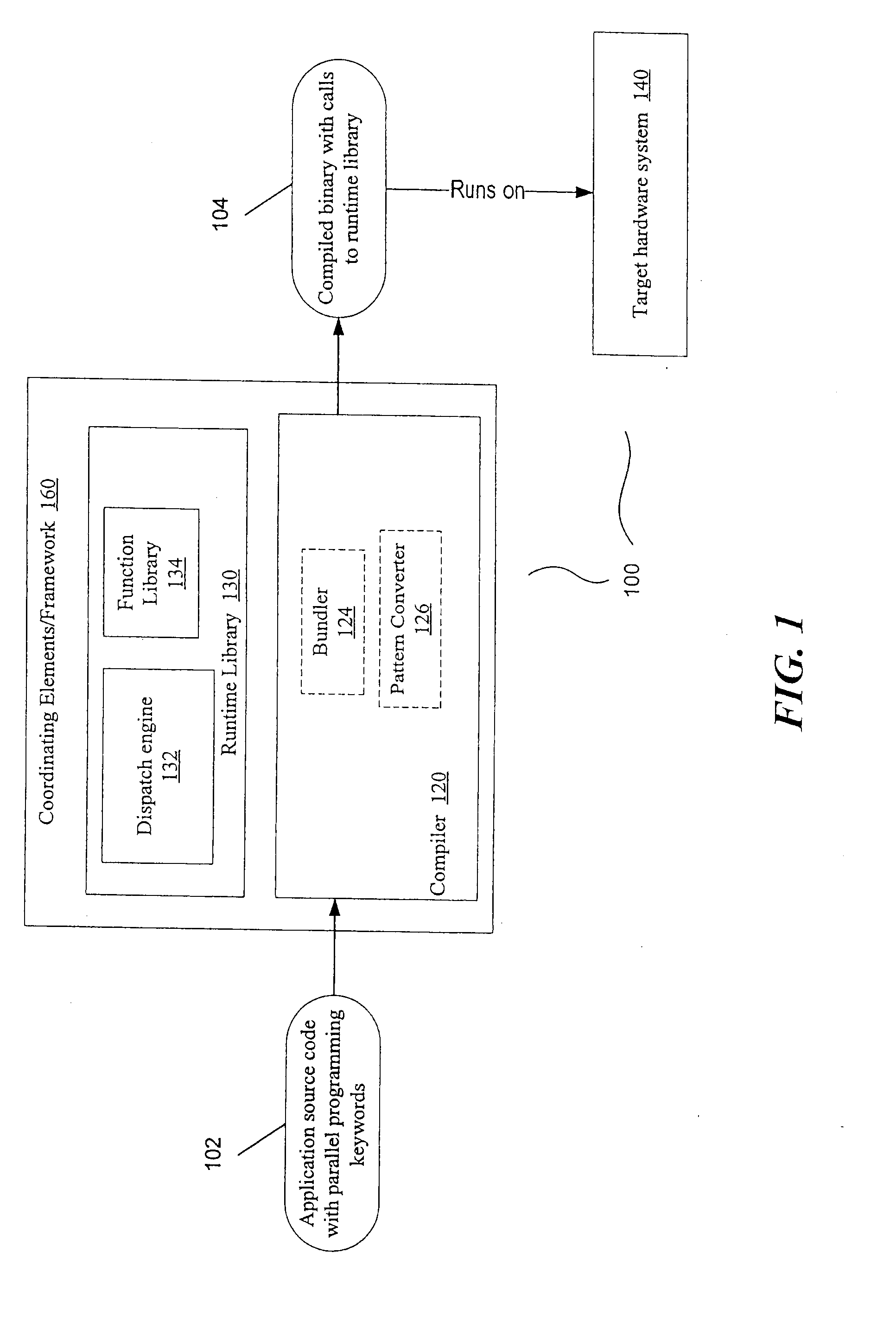

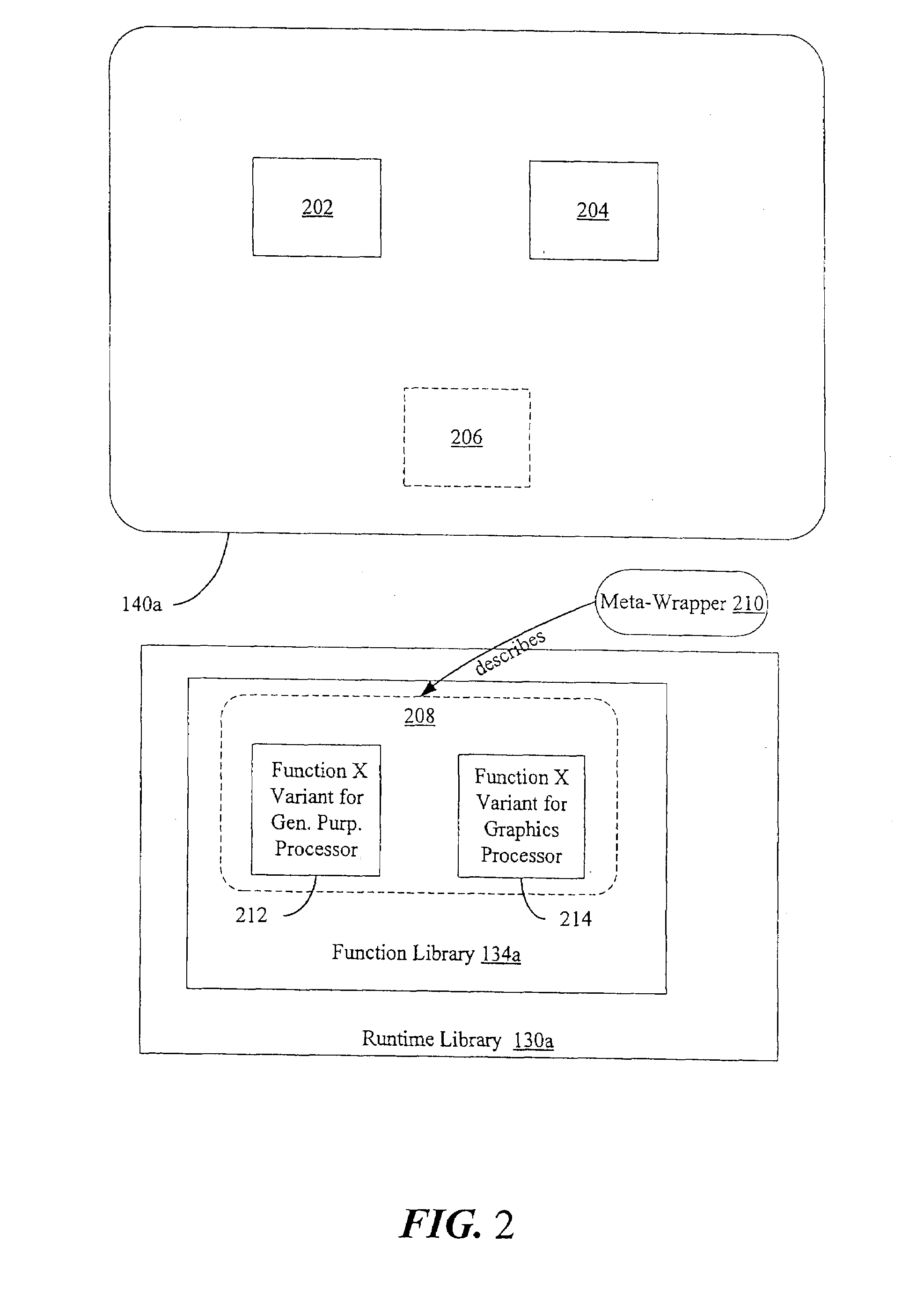

Presented are embodiments of methods and systems for library-based compilation and dispatch to automatically spread computations of a program across heterogeneous cores in a processing system. The source program contains a parallel-programming keyword, such as mapreduce, from a high-level, library-oriented parallel programming language. The compiler inserts one or more calls for a generic function, associated with the parallel-programming keyword, into the compiled code. A runtime library provides a predicate-based library system that includes multiple hardware specific implementations (“variants”) of the generic function. A runtime dispatch engine dynamically selects the best-available (e.g., most specific) variant, from a bundle of hardware-specific variants, for a given input and machine configuration. That is, the dispatch engine may take into account run-time availability of processing elements, choose one of them, and then select for dispatch an appropriate variant to be executed on the selected processing element. Other embodiments are also described and claimed.

Owner:INTEL CORP
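
The run-time selection of a "most specific" variant described in the abstract above can be sketched as predicate-based dispatch. This is an illustration only: the `Variant` class, the `dispatch` function, the `gpu_free` flag, and the rule that specificity equals the number of satisfied predicates are all assumptions, and the "GPU" variant here is an ordinary Python function standing in for a hardware-specific implementation.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Predicate = Callable[[Any, Dict[str, Any]], bool]


@dataclass
class Variant:
    """One implementation of a generic function, guarded by predicates."""
    name: str
    predicates: List[Predicate]
    impl: Callable[[Any], Any]


def dispatch(variants: List[Variant], data: Any, machine: Dict[str, Any]) -> Tuple[str, Any]:
    """Run the applicable variant with the most predicates (assumed specificity)."""
    applicable = [
        v for v in variants if all(pred(data, machine) for pred in v.predicates)
    ]
    if not applicable:
        raise LookupError("no applicable variant for this input and machine")
    best = max(applicable, key=lambda v: len(v.predicates))
    return best.name, best.impl(data)


variants = [
    # Fallback with no predicates: always applicable, least specific.
    Variant("generic_cpu", [], sum),
    # Hypothetical accelerator variant: needs a free device and enough data.
    Variant(
        "gpu",
        [lambda d, m: m.get("gpu_free", False), lambda d, m: len(d) >= 4],
        lambda d: sum(d),
    ),
]

print(dispatch(variants, [1, 2, 3, 4], {"gpu_free": True}))   # ('gpu', 10)
print(dispatch(variants, [1, 2, 3, 4], {"gpu_free": False}))  # ('generic_cpu', 10)
```

Keeping a predicate-free fallback in the bundle guarantees that dispatch always finds at least one variant.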

Infrastructure for parallel programming of clusters of machines

Inactive | US20090089560A1 | Easy to convert | Improve performance | Analogue secrecy/subscription systems | Multiple digital computer combinations | Multi processor | Parallel programming model

GridBatch provides an infrastructure framework that hides from programmers the complexities and burdens of developing the logic and programming of applications that implement detailed parallelized computations. A programmer may use GridBatch to implement parallelized computational operations that minimize network bandwidth requirements and efficiently partition and coordinate computational processing in a multiprocessor configuration. GridBatch provides an effective and lightweight approach to rapidly build parallelized applications using economically viable multiprocessor configurations that achieve the highest performance results.

Owner:ACCENTURE GLOBAL SERVICES LTD

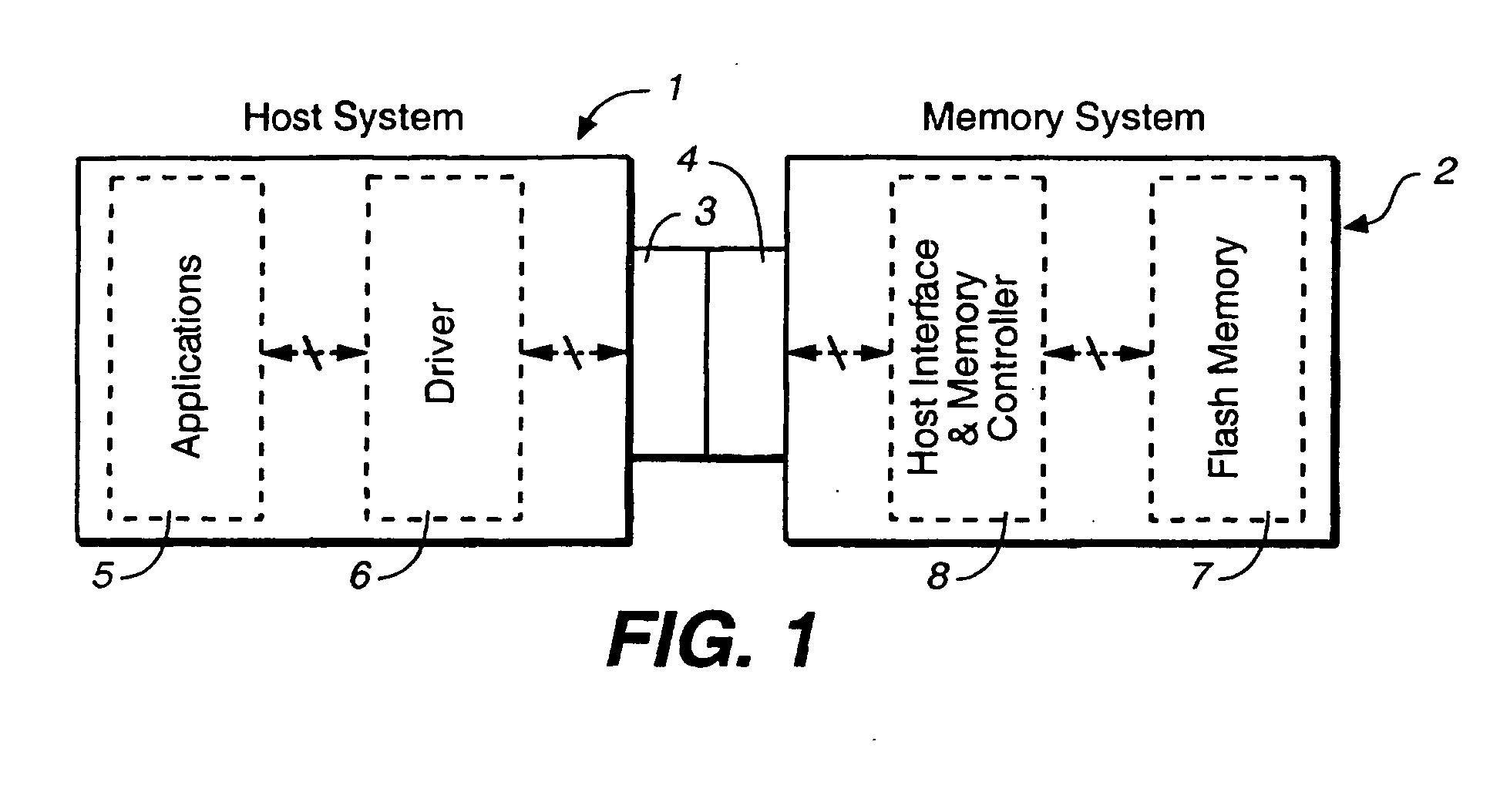

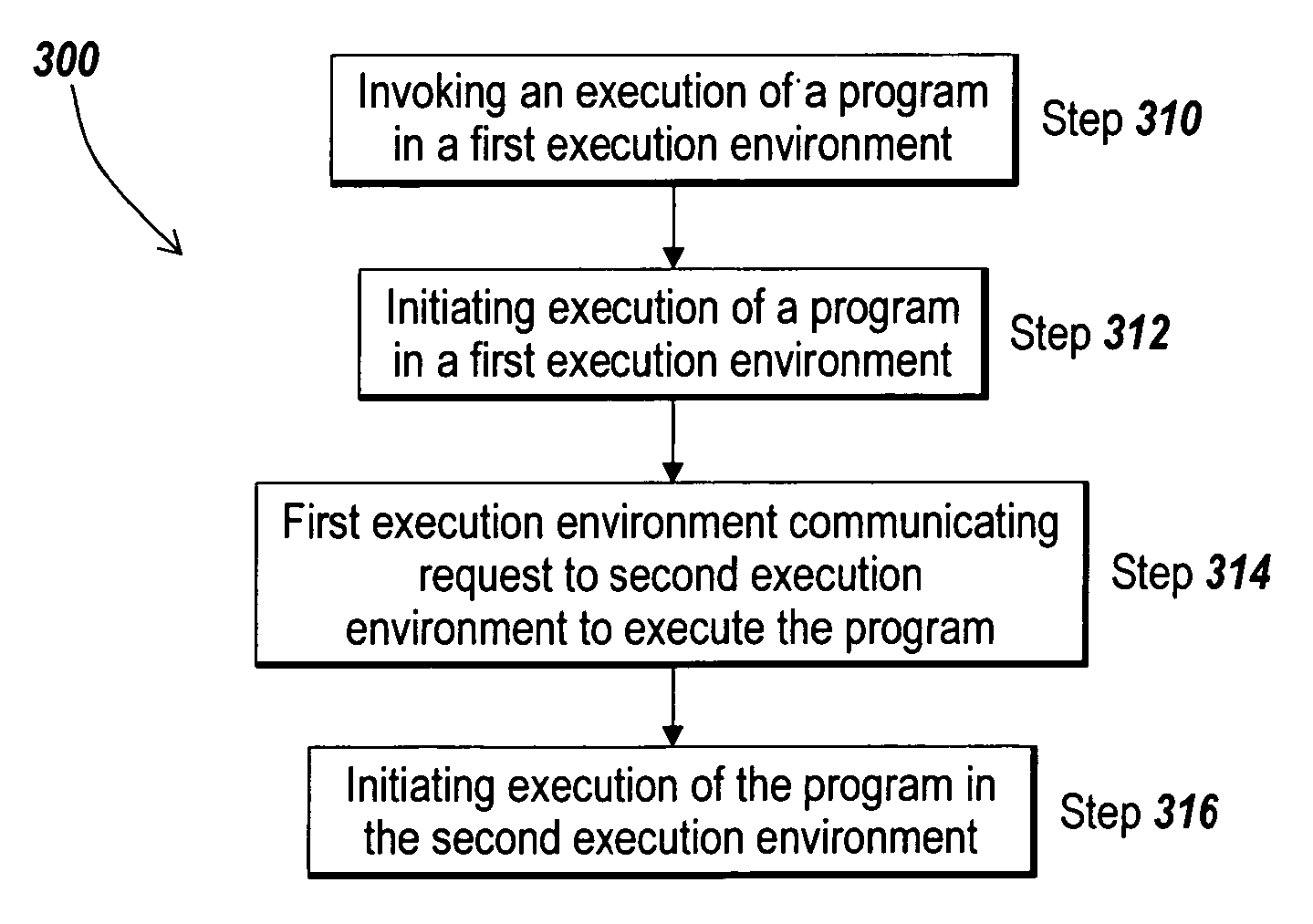

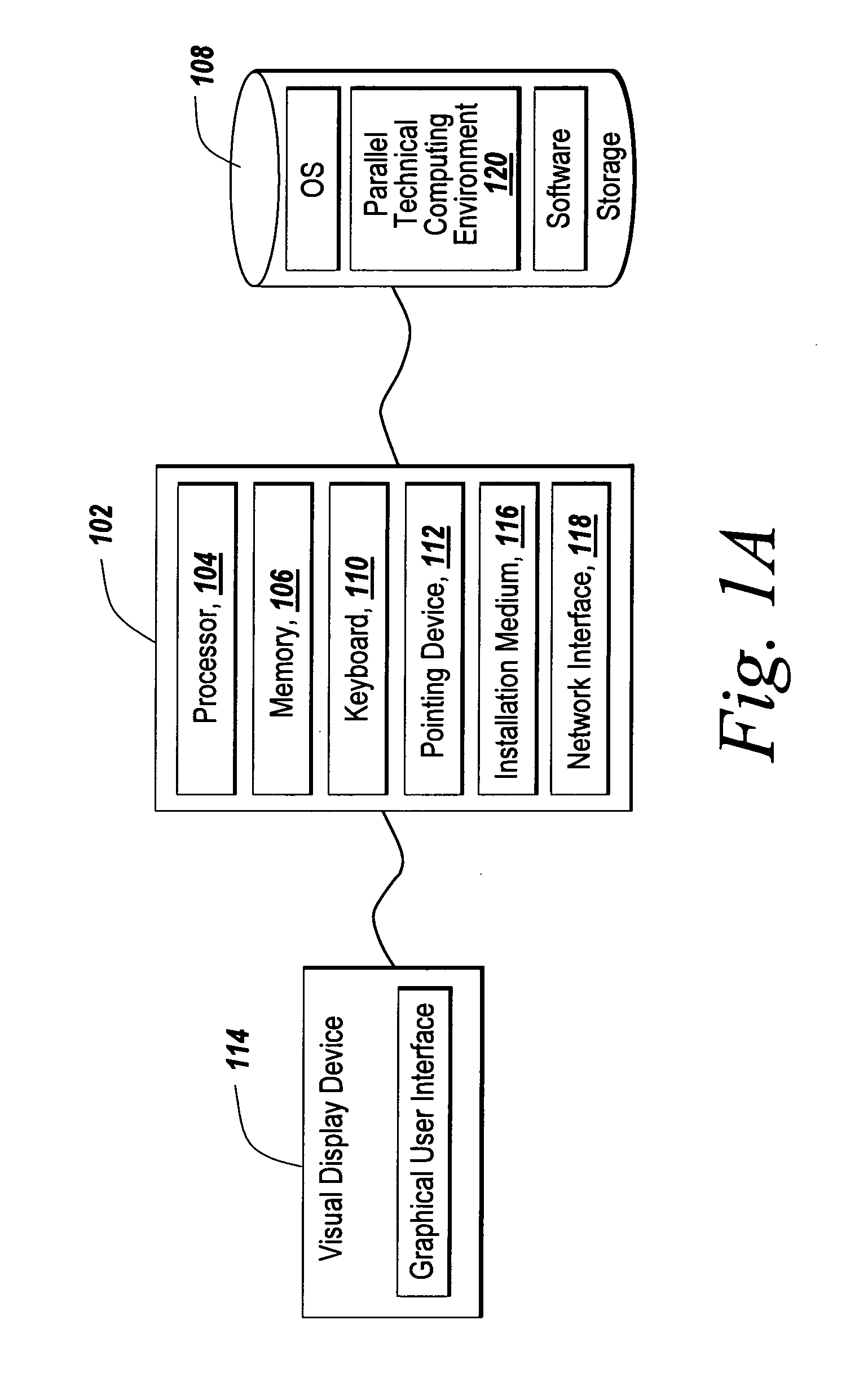

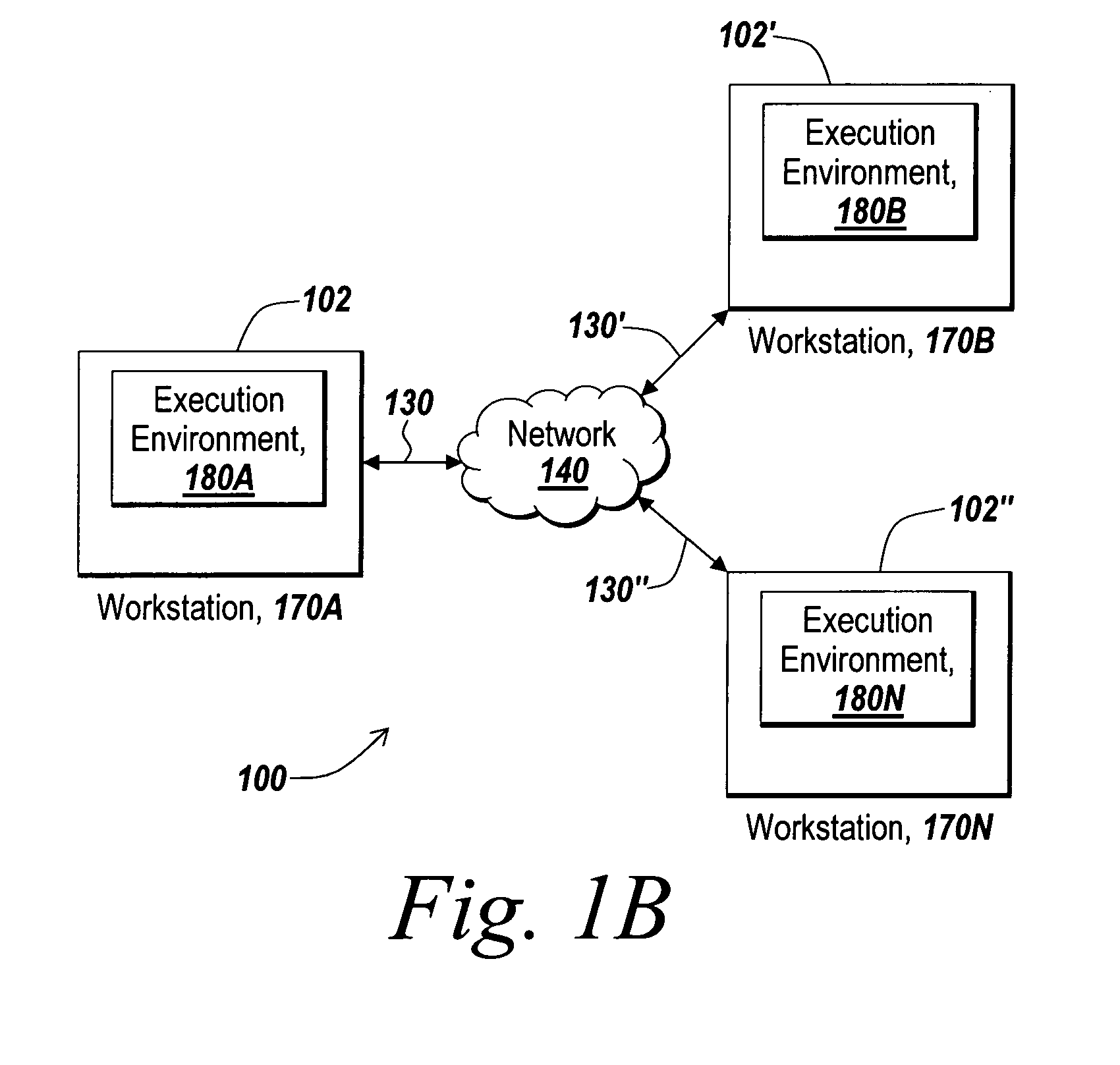

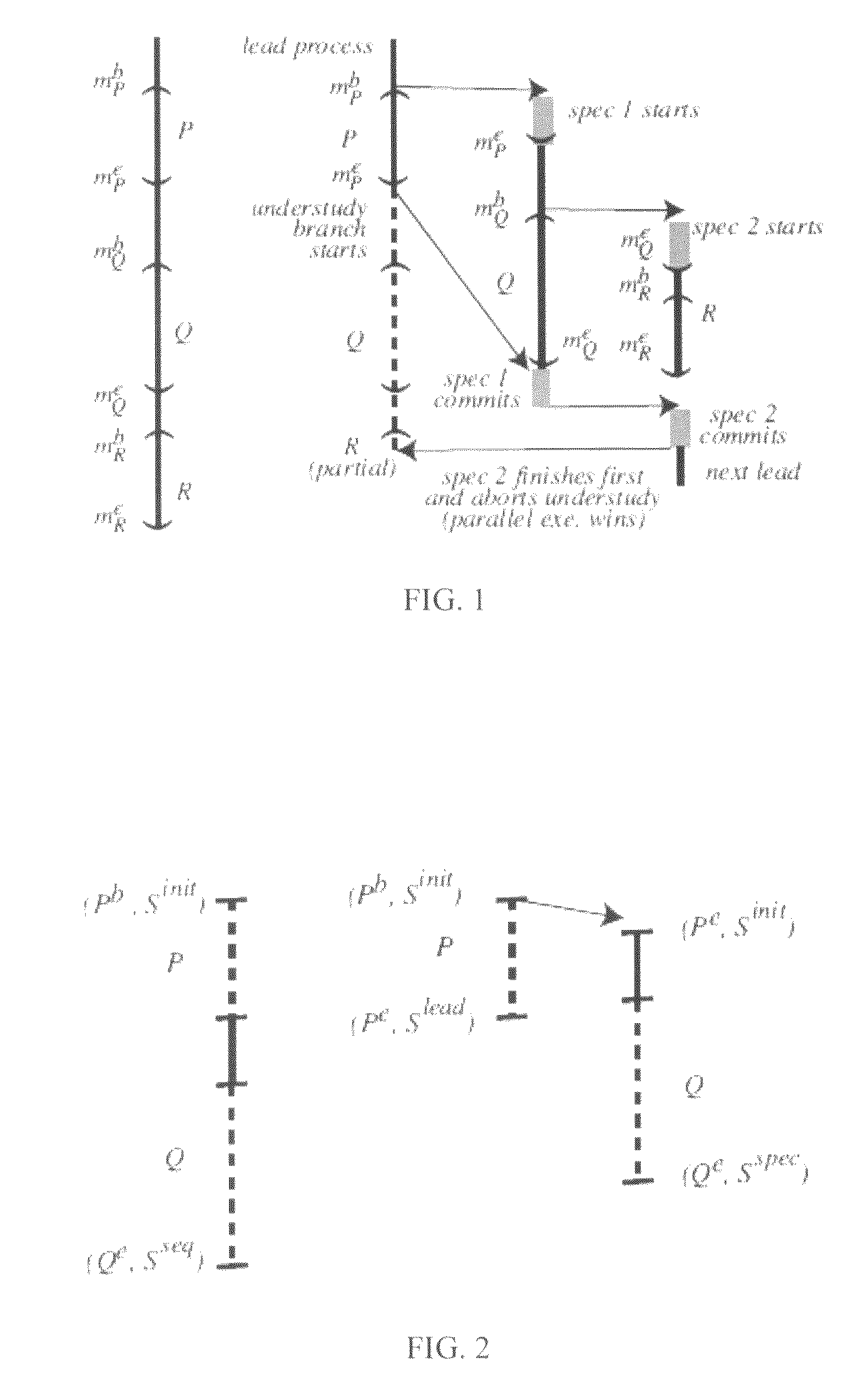

Methods and system for executing a program in multiple execution environments

Active | US20060059473A1 | Easy to switch | Specific program execution arrangements | Memory systems | Operation mode | Human language

A system and methods are disclosed for executing a technical computing program in parallel in multiple execution environments. A program is invoked for execution in a first execution environment, and from that invocation the program is executed in the first execution environment and one or more additional execution environments to provide for parallel execution of the program. New constructs in a technical computing programming language are disclosed for parallel programming of a technical computing program for execution in multiple execution environments. Also disclosed are a system and method for changing the mode of operation of an execution environment from a sequential mode to a parallel mode of operation and vice versa.

Owner:THE MATHWORKS INC

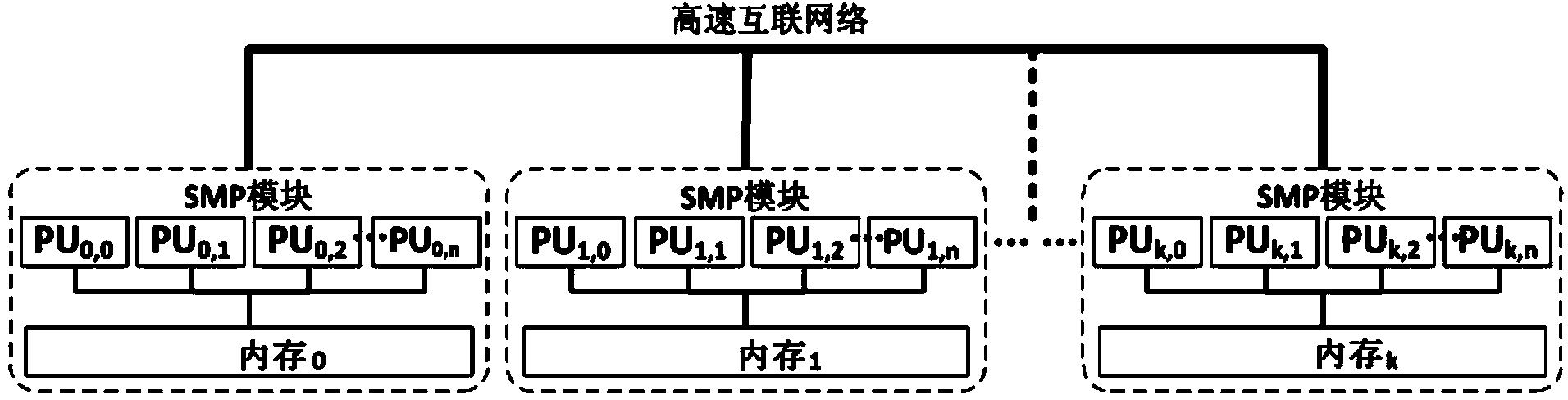

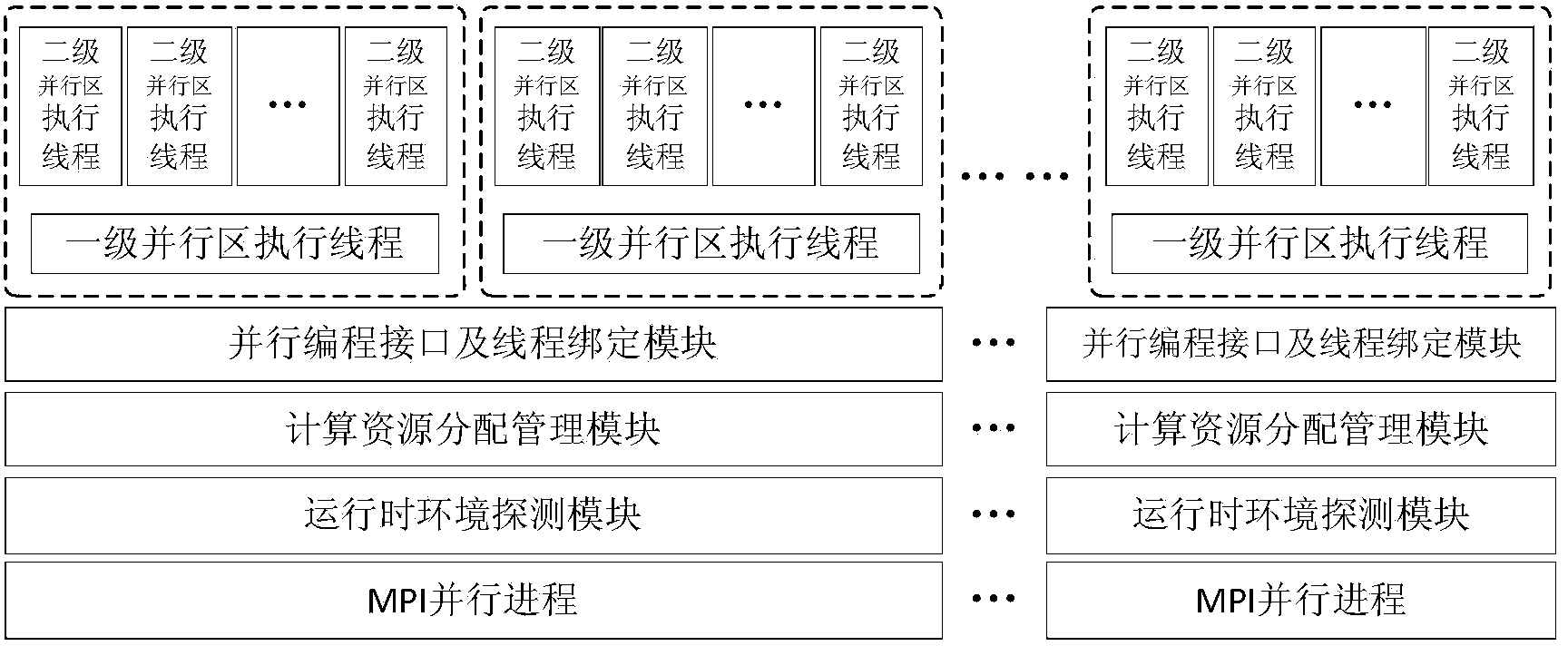

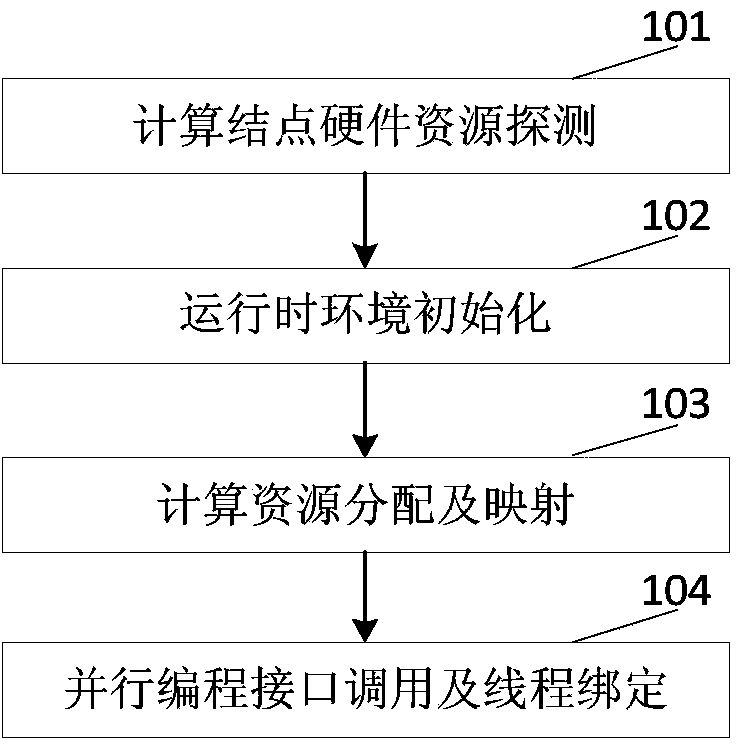

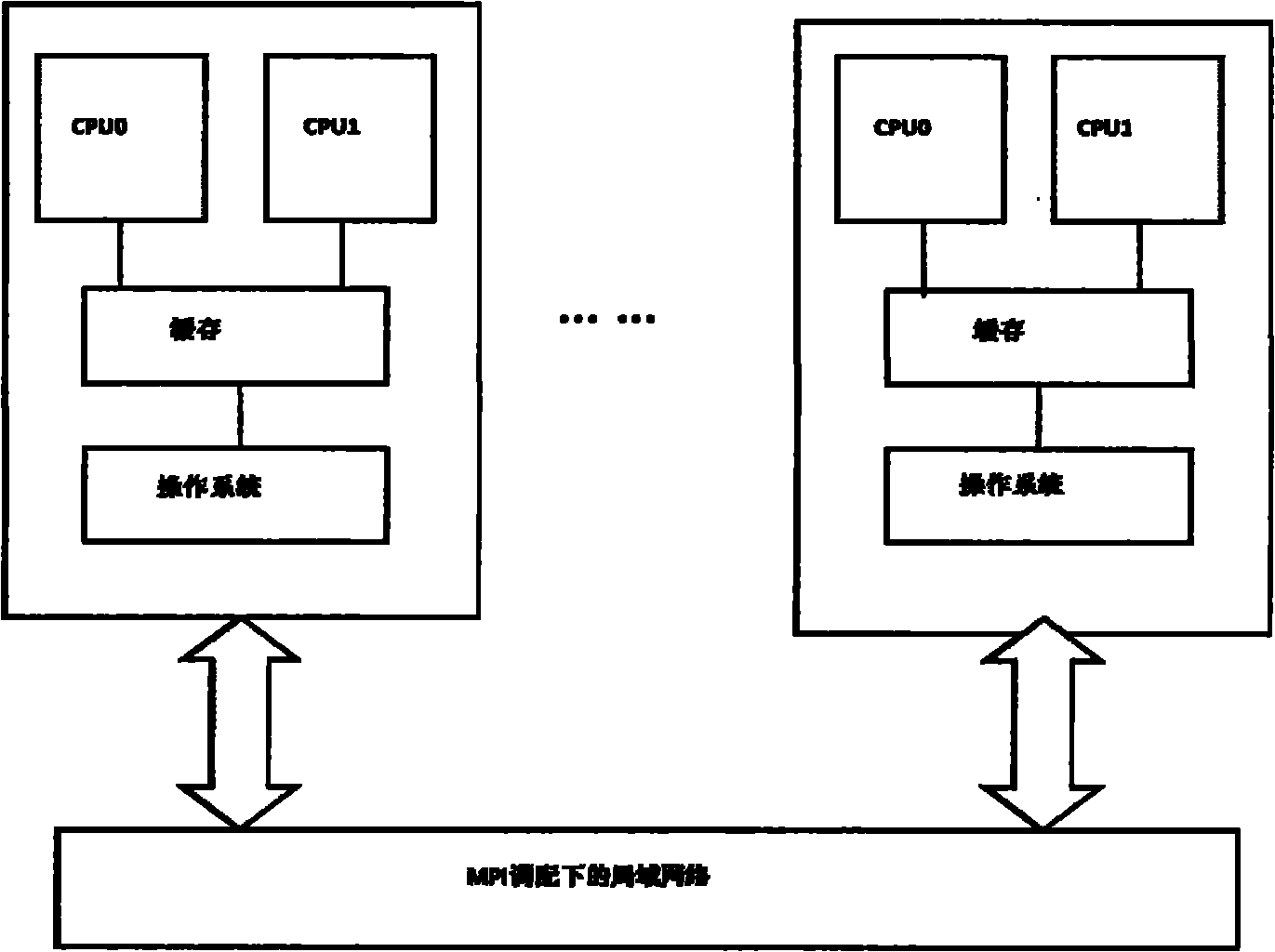

Thread for high-performance computer NUMA perception and memory resource optimizing method and system

Active | CN104375899A | Solve the problem of excessive granularity of memory management | Solve fine-grained memory access requirements | Resource allocation | Computer architecture | Performance computing

The invention discloses a NUMA-aware thread and memory resource optimization method and system for high-performance computers. The system comprises a runtime environment detection module for detecting the hardware resources and the number of parallel processes of a compute node; a compute resource allocation and management module for allocating compute resources to parallel processes and building the mapping from the parallel processes and threads to processor cores and physical memory; a parallel programming interface; and a thread binding module, which provides the parallel programming interface, obtains the binding position mask of a thread according to the mapping relations, and binds the executing thread to the corresponding CPU core. The invention further discloses a NUMA-aware multi-thread memory manager and its multi-thread memory management method. The manager comprises a DSM memory management module and an SMP module memory pool, which respectively manage the SMP modules to which the MPI processes belong and the allocation and release of memory within a single SMP module. As a result, the frequency of system calls for memory operations is reduced, memory management performance is improved, remote-node memory accesses by application programs are reduced, and application performance is improved.

Owner:INST OF APPLIED PHYSICS & COMPUTATIONAL MATHEMATICS

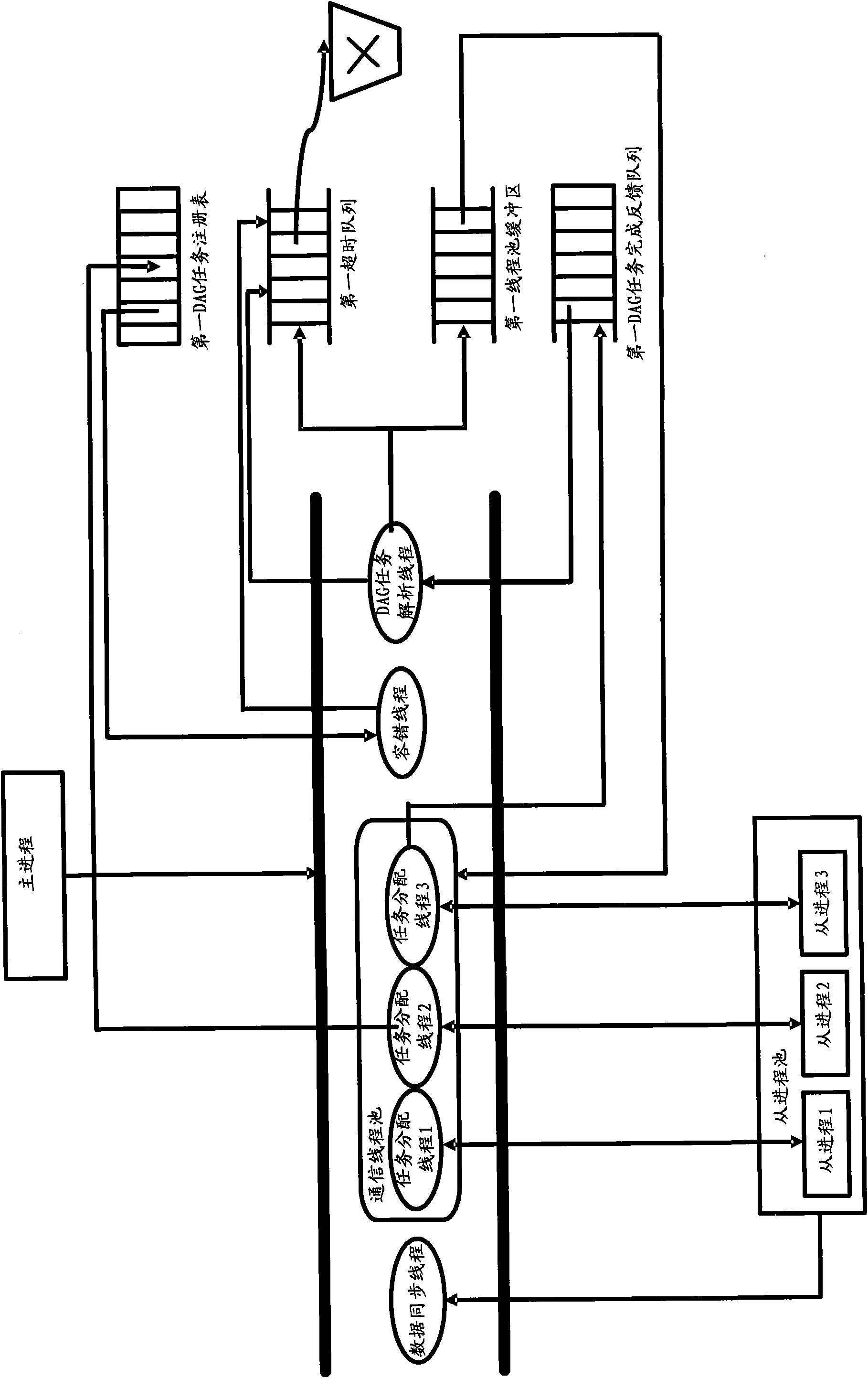

Multi-level parallel programming method

Active | CN101887367A | Increase profit | Reduce complexity | Multiprogramming arrangements | Specific program execution arrangements | Fault tolerance | Hardware architecture

The invention discloses a multi-level parallel programming method and relates to the field of parallel programming models, modes and methods. The method fully exploits the characteristics of a hybrid hardware architecture, noticeably improves the utilization rate of a cluster hardware environment, helps developers simplify parallel programming and reuse existing parallel code, reduces programming complexity and the error rate through inter-process and intra-process processing, and further reduces the error rate by taking fault tolerance fully into account.

Owner:TIANJIN UNIV

Non-volatile memory and method with reduced neighboring field errors

Inactive | US20060023502A1 | Large capacity | Improve performance | Read-only memories | Digital storage | Non-volatile memory | Parallel programing

A memory device and a method thereof allow programming and sensing a plurality of memory cells in parallel in order to minimize errors caused by coupling from fields of neighboring cells and to improve performance. The plurality of memory cells are linked by the same word line, and a read/write circuit is coupled to each memory cell in a contiguous manner. Thus, a memory cell and its neighbors are programmed together, and the field environment for each memory cell relative to its neighbors varies less during programming and subsequent reading. This improves performance and reduces errors caused by coupling from fields of neighboring cells, as compared to conventional architectures and methods in which cells on even columns are programmed independently of cells in odd columns.

Owner:SANDISK TECH LLC

Programming reversible resistance switching elements

Active | US20100321977A1 | Shorten the time | Save power | Read-only memories | Digital storage | Electrical resistance and conductance | Time efficient

A storage system and method for operating the storage system that uses reversible resistance-switching elements is described. Techniques are disclosed herein for varying programming conditions to account for different resistances that memory cells have. These techniques can program memory cells in fewer attempts, which can save time and/or power. Techniques are disclosed herein for achieving a high programming bandwidth while reducing the worst case current and/or power consumption. In one embodiment, a page mapping scheme is provided that programs multiple memory cells in parallel in a way that reduces the worst case current and/or power consumption.

Owner:SANDISK TECH LLC

Aspect-oriented parallel programming language extensions

Inactive | US20100281464A1 | Software engineering | Specific program execution arrangements | Multi-core processor | Human language

Owner:IBM CORP

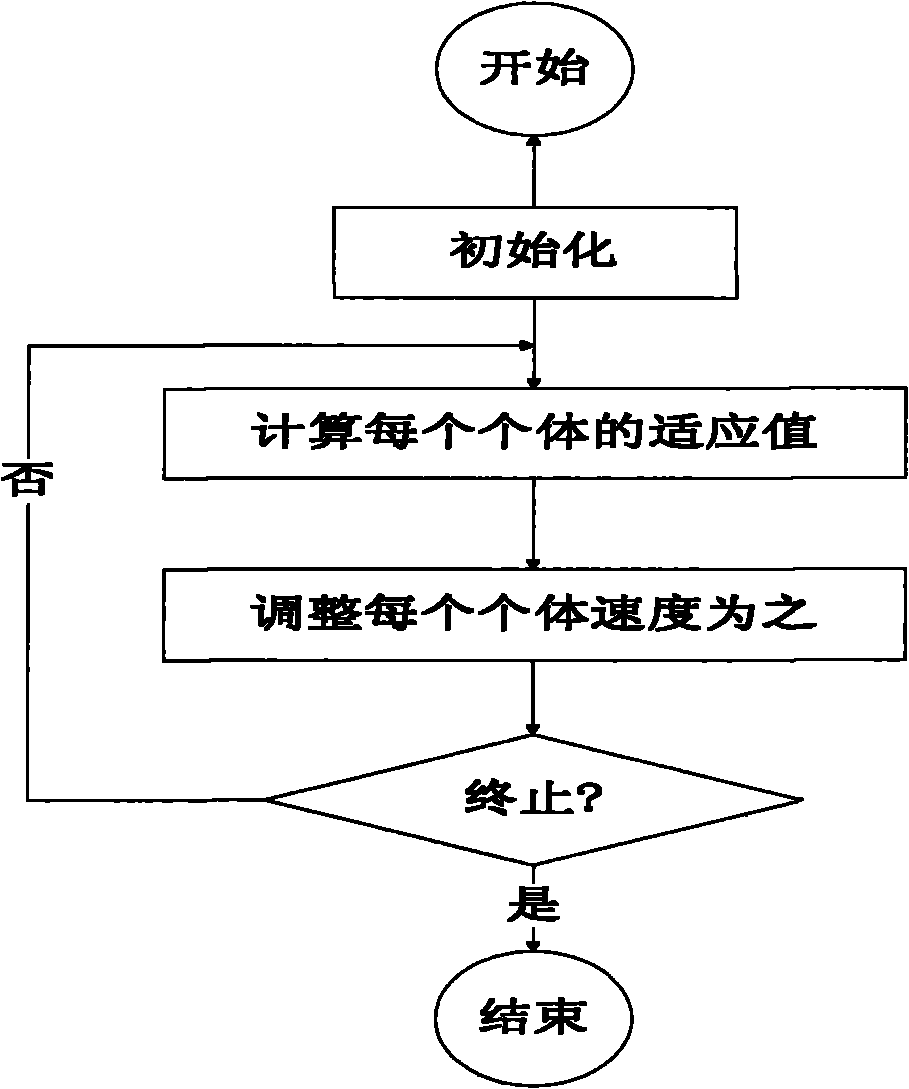

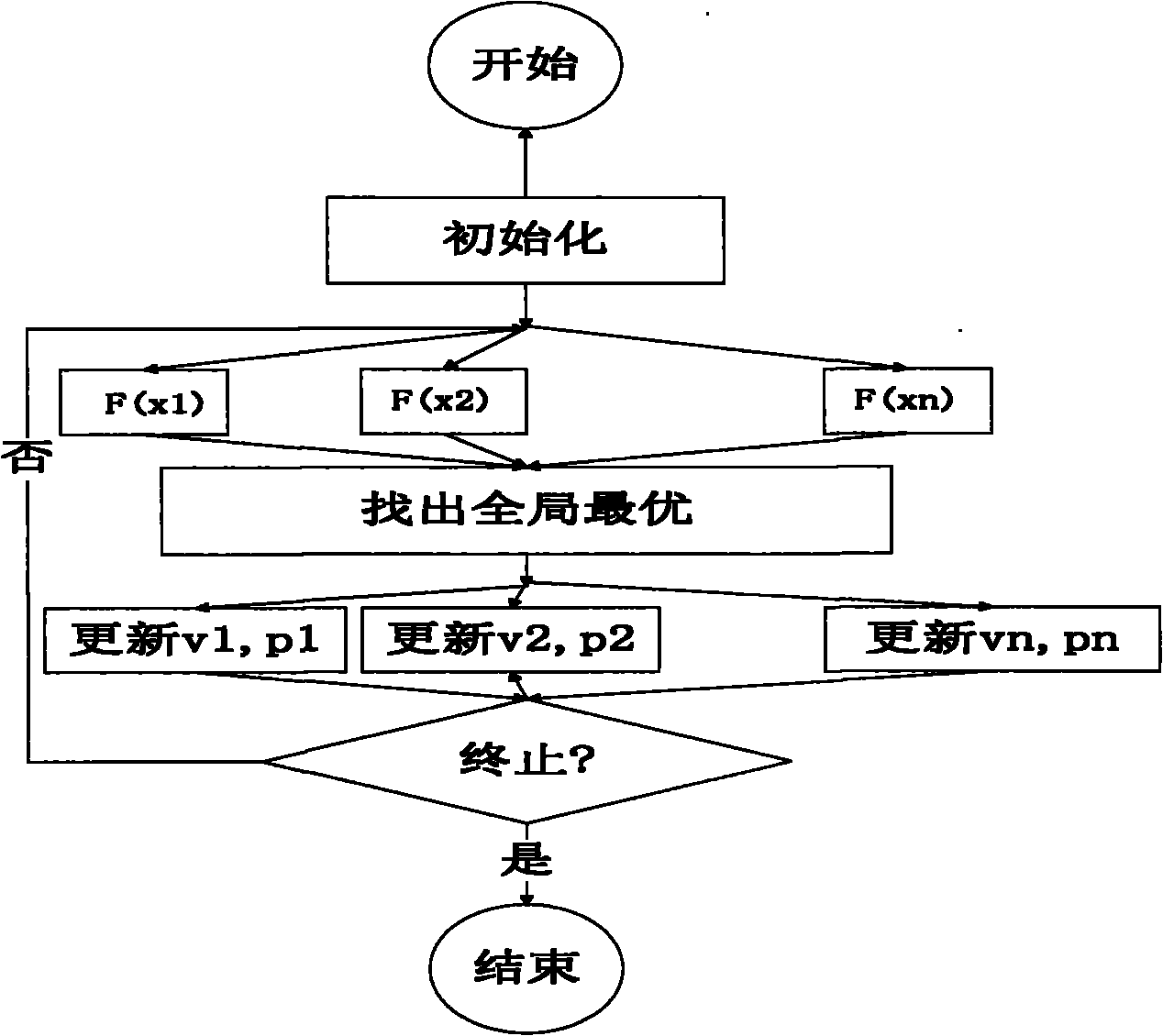

Method for parallel execution of particle swarm optimization algorithm on multiple computers

Inactive | CN101819651A | Solve the problem of long calculation time | Reduce computing time | Resource allocation | Biological models | Multi core programming | Computer programming

The invention discloses a method for parallel execution of a particle swarm optimization algorithm on multiple computers. The method comprises an initialization step, an evaluation and adjustment step, a step of judging termination conditions, and a termination and output step, wherein the evaluation and adjustment step is the part that realizes parallel computation through parallel programming with MPI plus OpenMP. Exploiting the independence between particle updates in the particle swarm optimization algorithm, the method parallelizes the operations of updating particles and evaluating particles using the existing MPI plus OpenMP multi-core programming method. The invention adopts a master-slave parallel programming mode to solve the problem that the particle swarm optimization algorithm previously ran too slowly on a single computer, speeding up the algorithm and thereby greatly expanding its application value and application field.

Owner:ZHEJIANG UNIV
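
The four steps named in the abstract above (initialization, evaluation and adjustment, termination check, output) can be sketched in a few lines. This is not the patented MPI plus OpenMP implementation: it swaps in Python's `concurrent.futures` thread pool as the set of workers, parallelizes only the fitness evaluation, and uses a hypothetical `sphere` objective and commonly seen coefficient values (0.7 and 1.5) chosen for illustration.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def sphere(x: List[float]) -> float:
    """Hypothetical objective: sum of squares, minimized at the origin."""
    return sum(v * v for v in x)


def pso(fitness: Callable[[List[float]], float], dim: int = 2,
        n_particles: int = 20, iterations: int = 50,
        workers: int = 4, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    # Initialization step: random positions, zero velocities.
    pos = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]
    best_val = [float("inf")] * n_particles
    g_pos, g_val = pos[0][:], float("inf")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Termination condition in this sketch: a fixed iteration count.
        for _ in range(iterations):
            # Evaluation: the master hands particle positions to the workers.
            values = list(pool.map(fitness, pos))
            for i, val in enumerate(values):
                if val < best_val[i]:
                    best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
            # Adjustment: done on the master here to keep the run reproducible.
            for i in range(n_particles):
                for d in range(dim):
                    r1, r2 = rng.random(), rng.random()
                    vel[i][d] = (0.7 * vel[i][d]
                                 + 1.5 * r1 * (best_pos[i][d] - pos[i][d])
                                 + 1.5 * r2 * (g_pos[d] - pos[i][d]))
                    pos[i][d] += vel[i][d]
    # Output step: best position found and its fitness.
    return g_pos, g_val


best_position, best_value = pso(sphere)
print(best_position, best_value)
```

Threads keep the sketch portable, but for CPU-bound pure-Python fitness functions they will not give a real speedup; a process pool or an MPI binding such as mpi4py would be the closer analogue to the multi-computer setting the abstract describes.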

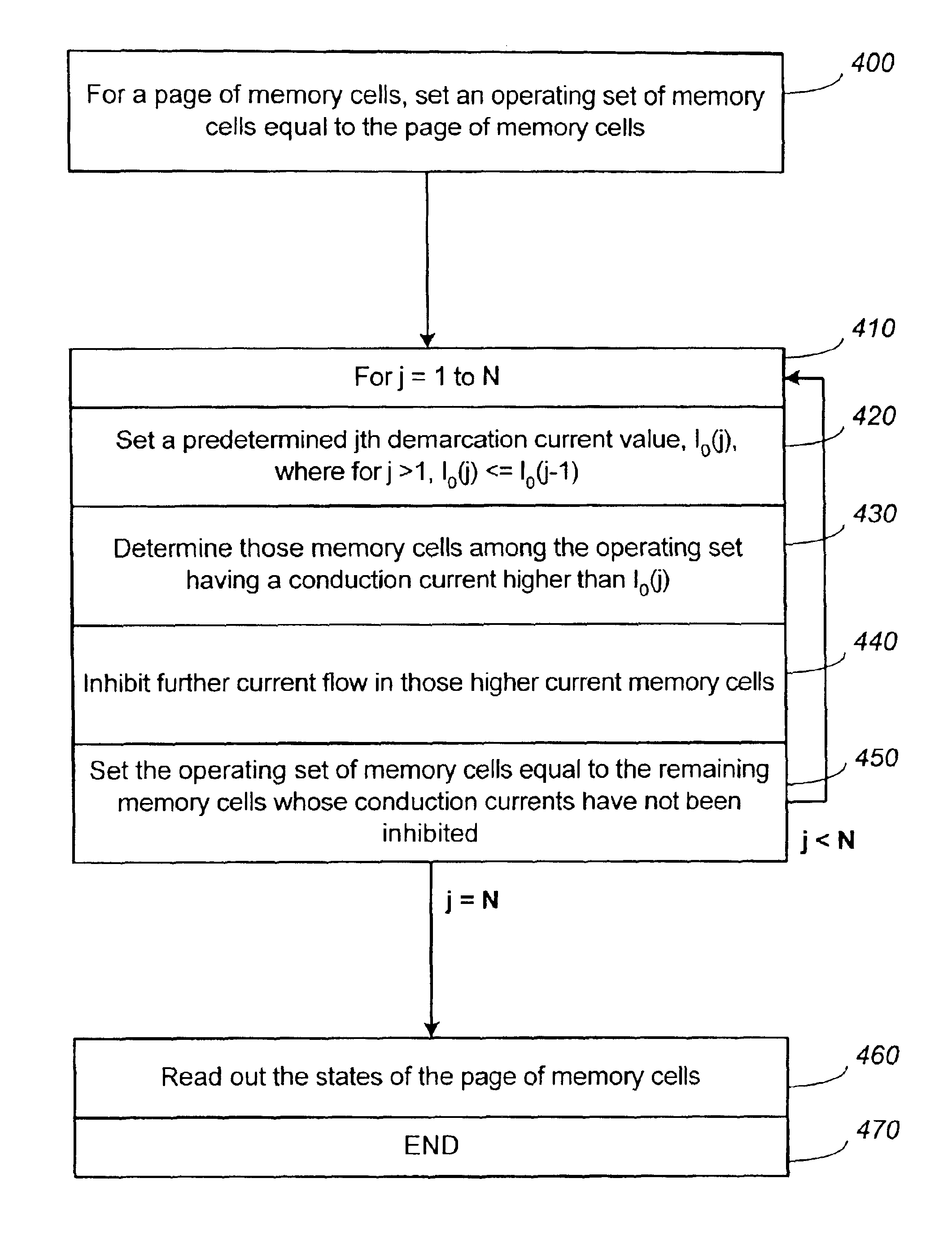

Nonvolatile memory and method for improved programming with reduced verify

Active | US8472257B2 | Tighter threshold distribution | Reduce steps | Read-only memories | Digital storage | Non-volatile memory | Parallel programing

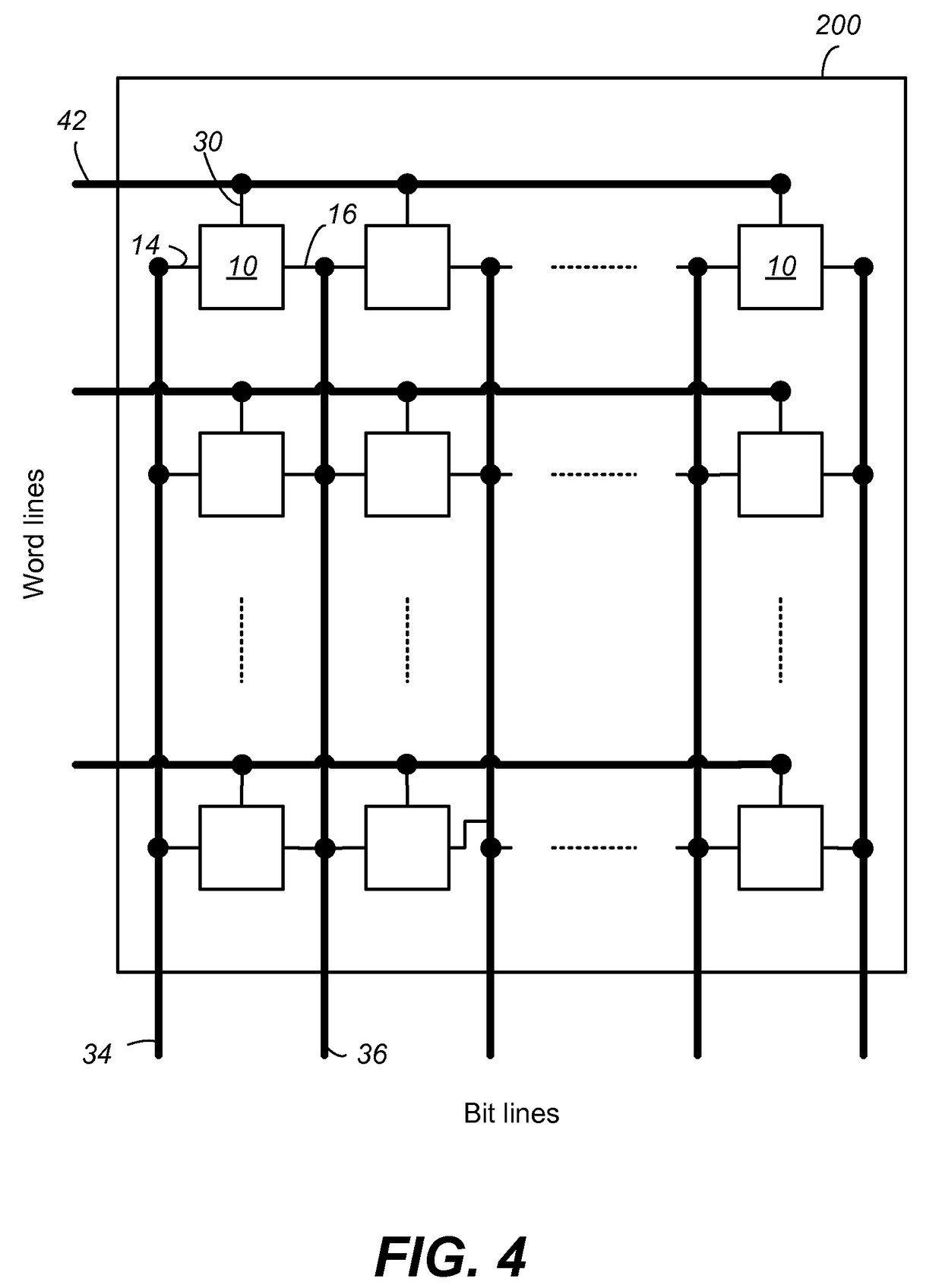

A group of memory cells of a nonvolatile memory is programmed in parallel in a programming pass with a minimum of verify steps from an erased state to respective target states by a staircase waveform. The memory states are demarcated by a set of increasing demarcation threshold values (V1, ..., VN). Initially in the programming pass, the memory cells are verified relative to a test reference threshold value. This test reference threshold has a value offset past a designated demarcation threshold value Vi among the set by a predetermined margin. Whether the overshoot of each memory cell, when programmed past Vi, is more or less than the margin can thus be determined. Accordingly, memory cells found to have an overshoot greater than the margin are counteracted by having their programming rate slowed down in a subsequent portion of the programming pass so as to maintain a tighter threshold distribution.

Owner:SANDISK TECH LLC

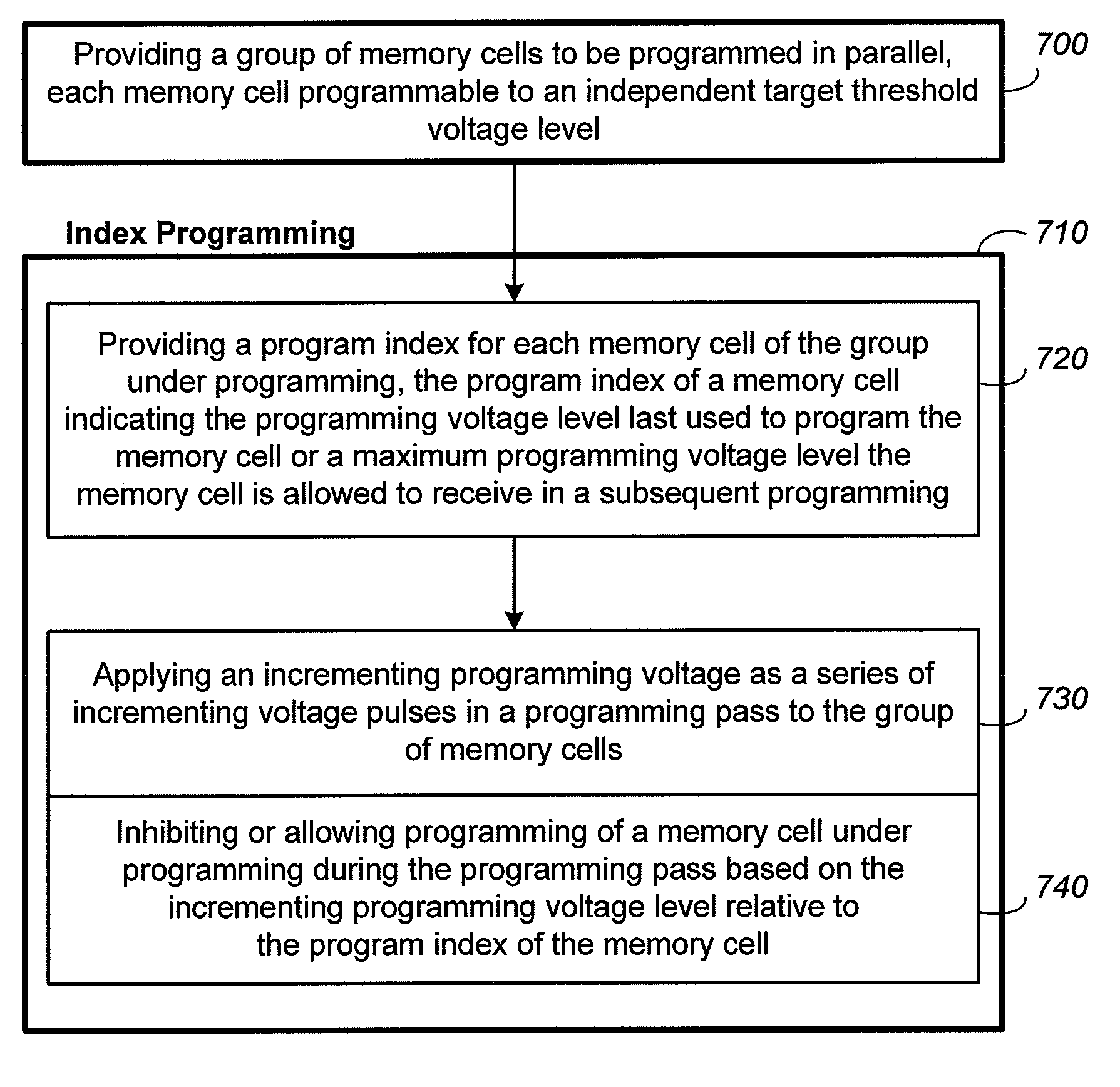

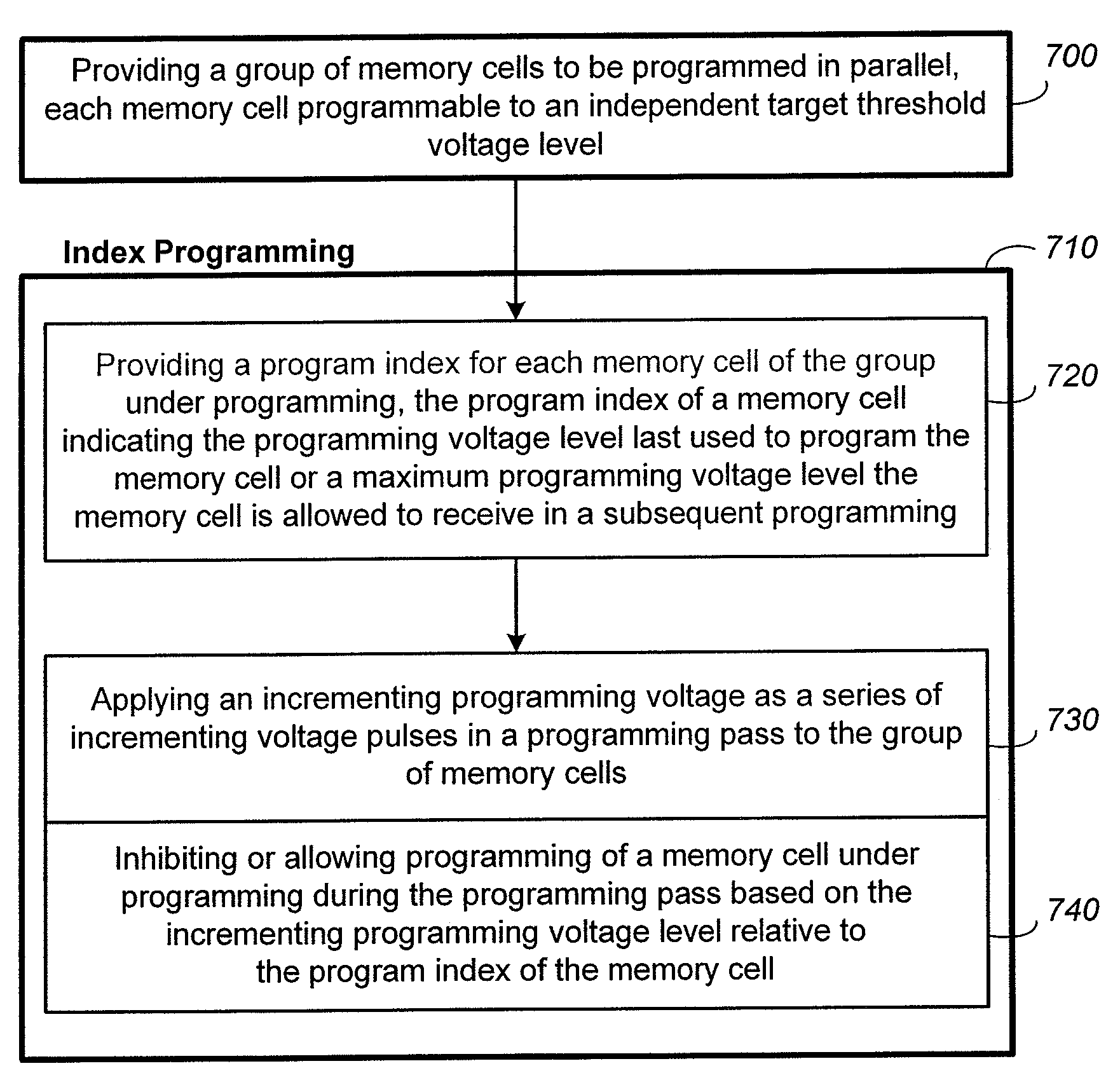

Method for index programming and reduced verify in nonvolatile memory

Active | US7800945B2 | Save steps | Tighten distribution | Read-only memories | Digital storage | Inductive programming | Multiple pass

Owner:SANDISK TECH LLC

Infrastructure for parallel programming of clusters of machines

Inactive | US7970872B2 | Easy to convert | Improve performance | Analogue secrecy/subscription systems | Multiple digital computer combinations | Parallel programming model | Application software

GridBatch provides an infrastructure framework that hides from programmers the complexities and burdens of developing the logic and programming of applications that implement detailed parallelized computations. A programmer may use GridBatch to implement parallelized computational operations that minimize network bandwidth requirements and efficiently partition and coordinate computational processing in a multiprocessor configuration. GridBatch provides an effective and lightweight approach to rapidly build parallelized applications using economically viable multiprocessor configurations that achieve the highest performance results.

Owner:ACCENTURE GLOBAL SERVICES LTD

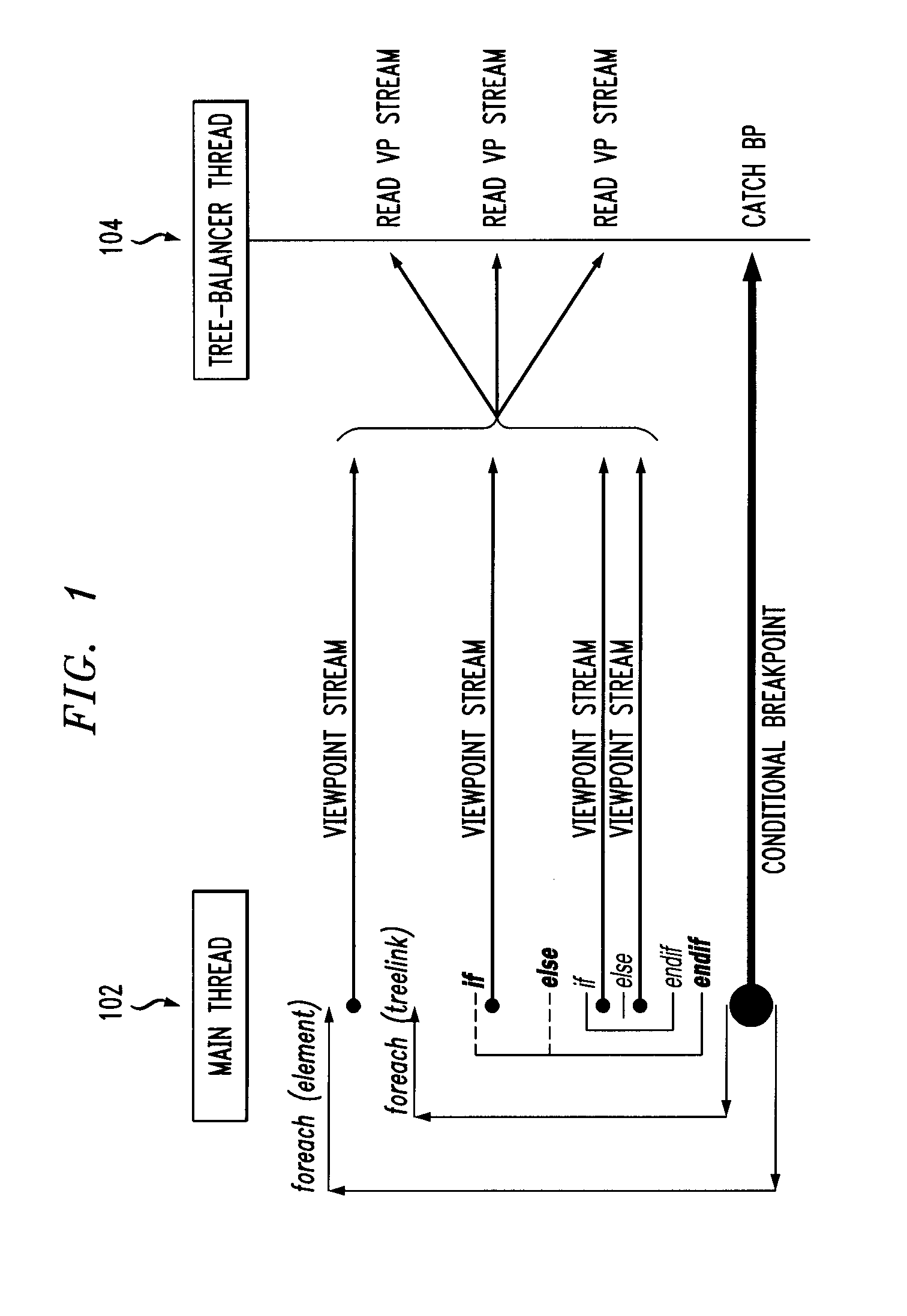

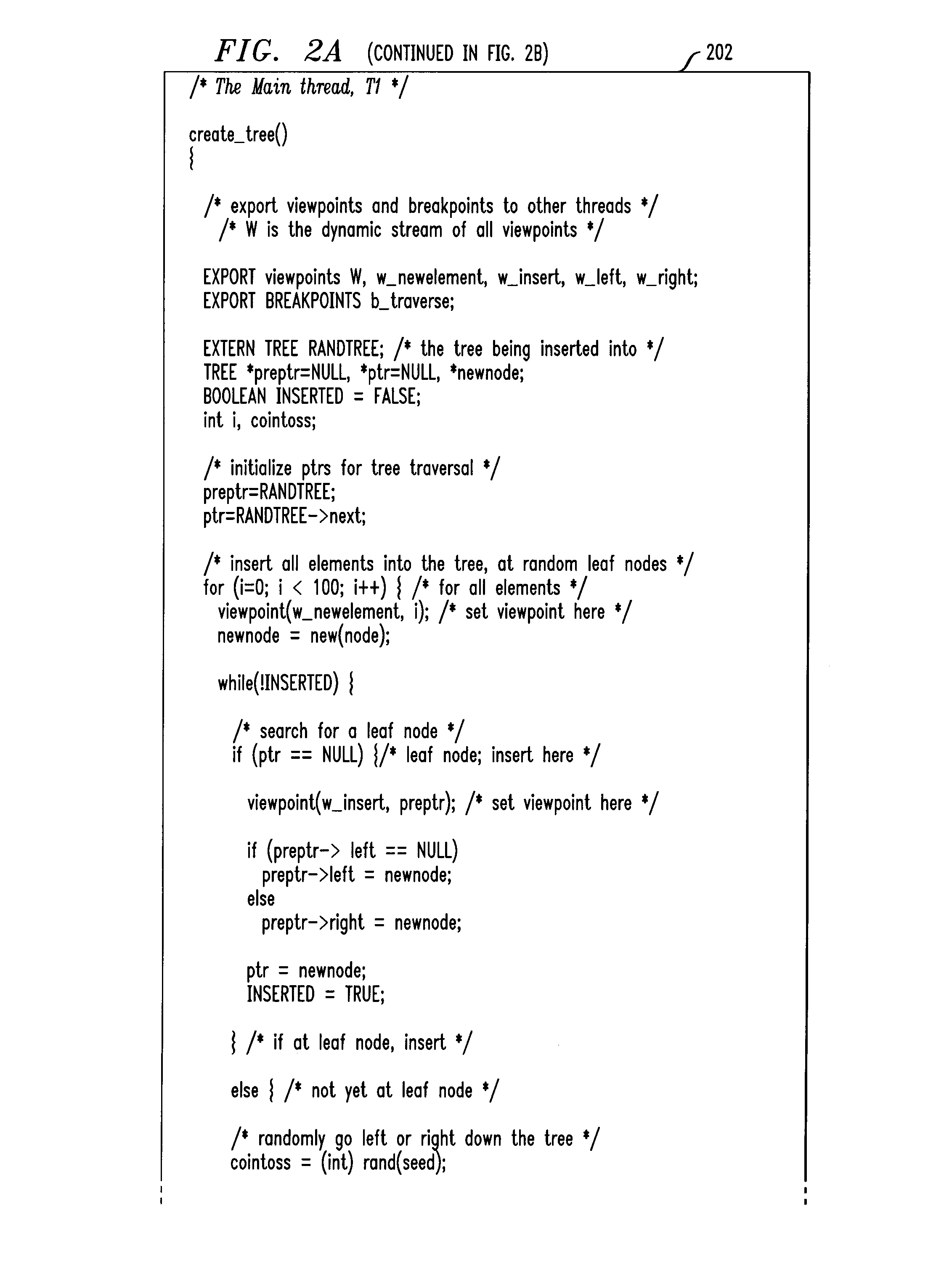

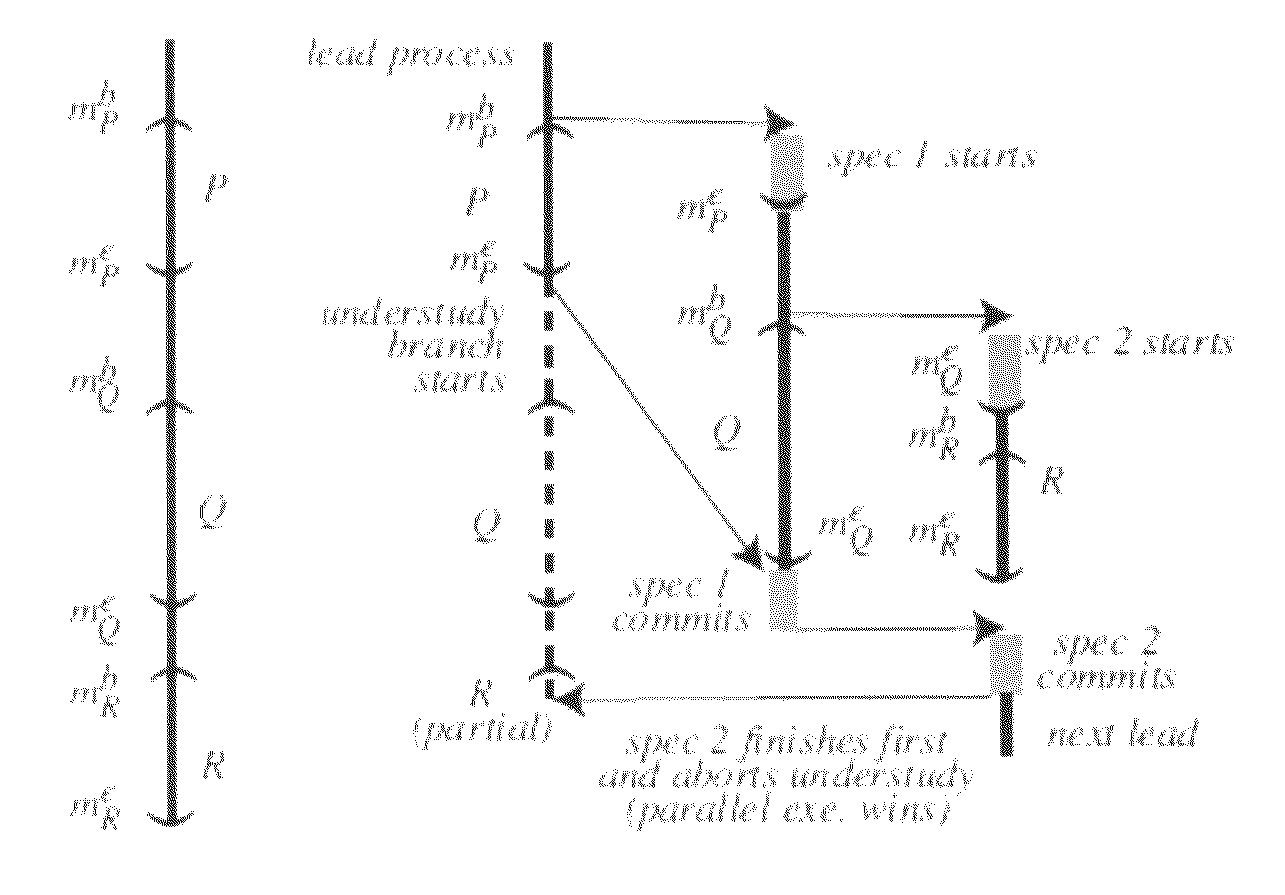

Parallel programming using possible parallel regions and its language profiling compiler, run-time system and debugging support

Inactive | US8549499B1 | Ensure performance | Improve programming performance | Software engineering | Digital computer details | Parallel programming model | Running time

A method of dynamic parallelization for programs in systems having at least two processors includes examining computer code of a program to be performed by the system, determining a largest possible parallel region in the computer code, classifying data to be used by the program based on a usage pattern and initiating multiple, concurrent processes to perform the program. The multiple, concurrent processes ensure a baseline performance that is at least as efficient as a sequential performance of the computer code.

Owner:UNIVERSITY OF ROCHESTER

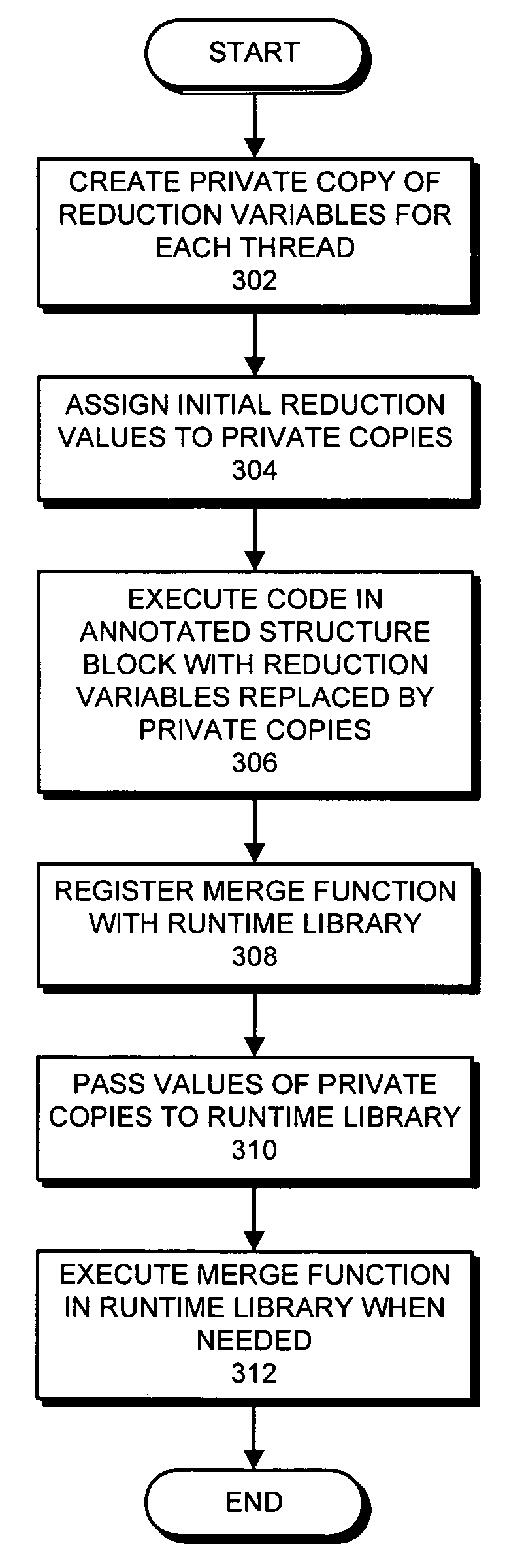

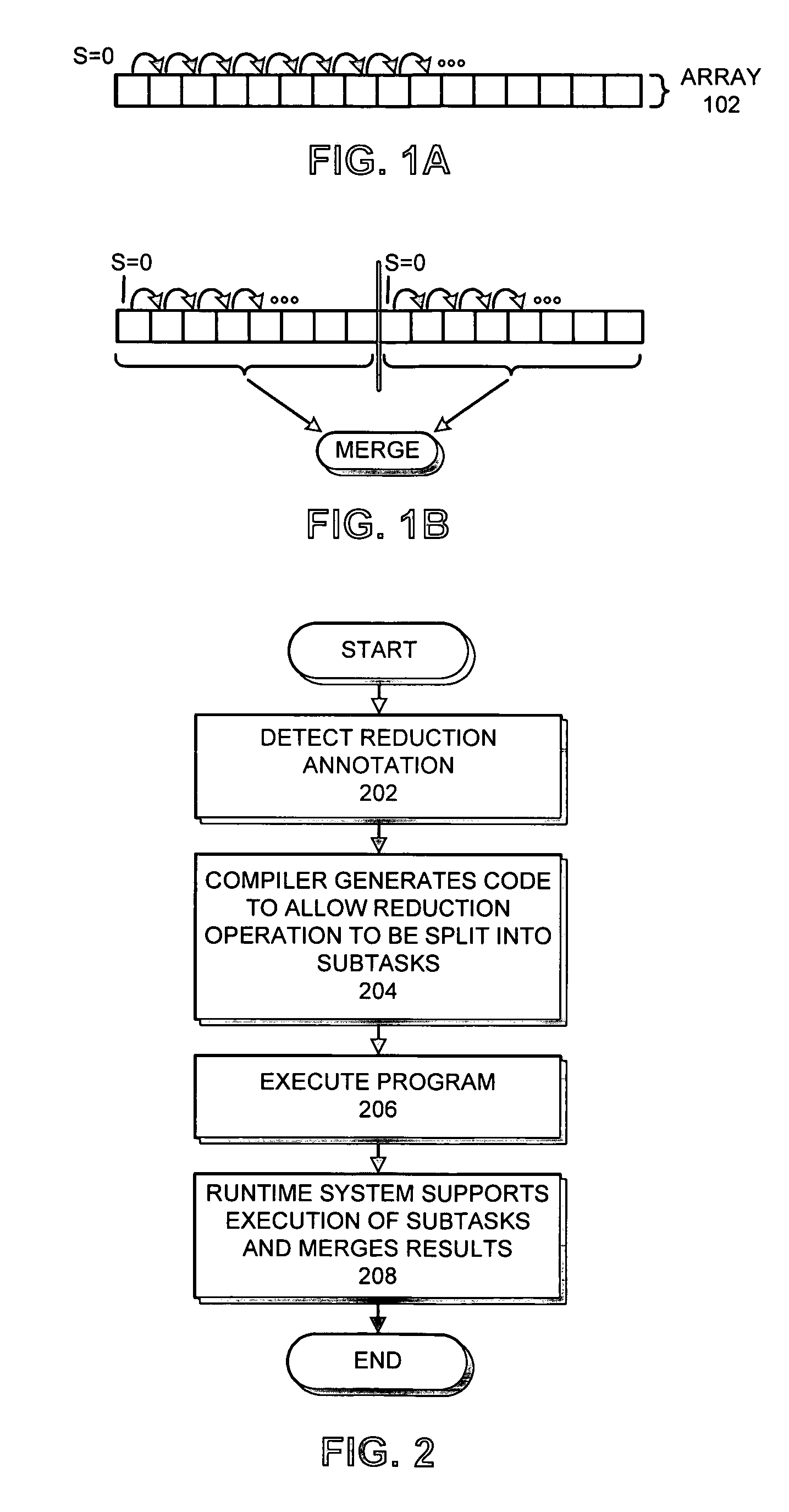

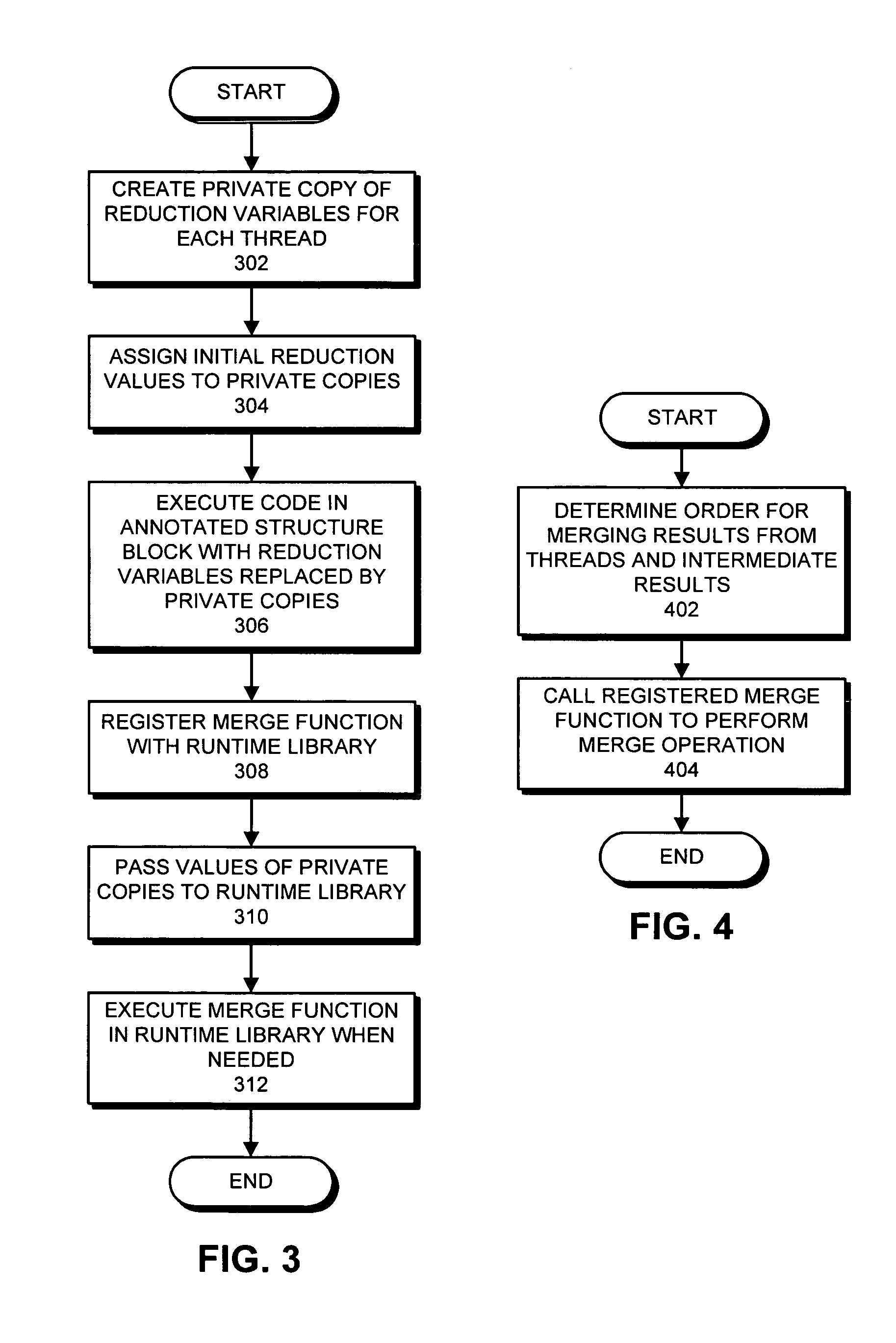

Parallelization scheme for generic reduction

One embodiment of the present invention provides a system that supports parallelized generic reduction operations in a parallel programming language, wherein a reduction operation is an associative operation that can be divided into a group of sub-operations that can execute in parallel. During operation, the system detects generic reduction operations in source code. In doing so, the system identifies a set of reduction variables upon which the generic reduction operation will operate, along with a set of initial values for the variables. The system additionally identifies a merge operation that merges partial results from the parallel generic reduction operations into a final result. The system then compiles the program's source code into a form which facilitates executing the generic reduction operations in parallel. By supporting the parallel execution of such generic reduction operations in this way, the present invention extends parallel execution for reduction operations beyond basic commutative and associative operations such as addition and multiplication.

Owner:SUN MICROSYSTEMS INC
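
The three ingredients the abstract above identifies (reduction variables, their initial values, and a merge operation for partial results) can be shown in a library-level sketch. The patent describes compiler support; this example only imitates the resulting execution pattern with a thread pool, and the names `parallel_reduce`, `init`, `step`, and `merge` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
A = TypeVar("A")


def parallel_reduce(items: Iterable[T], init: Callable[[], A],
                    step: Callable[[A, T], A], merge: Callable[[A, A], A],
                    chunks: int = 4) -> A:
    """Reduce each chunk from `init()`, then merge the partial results in order."""
    data: List[T] = list(items)
    size = max(1, -(-len(data) // chunks))  # ceiling division, at least 1
    parts = [data[i:i + size] for i in range(0, len(data), size)]

    def reduce_part(part: List[T]) -> A:
        acc = init()  # fresh initial values for this partial reduction
        for item in part:
            acc = step(acc, item)
        return acc

    with ThreadPoolExecutor(max_workers=chunks) as pool:
        partials = list(pool.map(reduce_part, parts))  # order is preserved

    result = init()
    for partial in partials:
        result = merge(result, partial)
    return result


# Two reduction variables at once: running minimum and maximum.
lo_hi = parallel_reduce(
    [5, 3, 9, 1, 7],
    init=lambda: (float("inf"), float("-inf")),
    step=lambda acc, x: (min(acc[0], x), max(acc[1], x)),
    merge=lambda a, b: (min(a[0], b[0]), max(a[1], b[1])),
)
print(lo_hi)  # (1, 9)
```

Because partial results are merged in chunk order, the sketch also works for reductions that are associative but not commutative, such as string concatenation.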

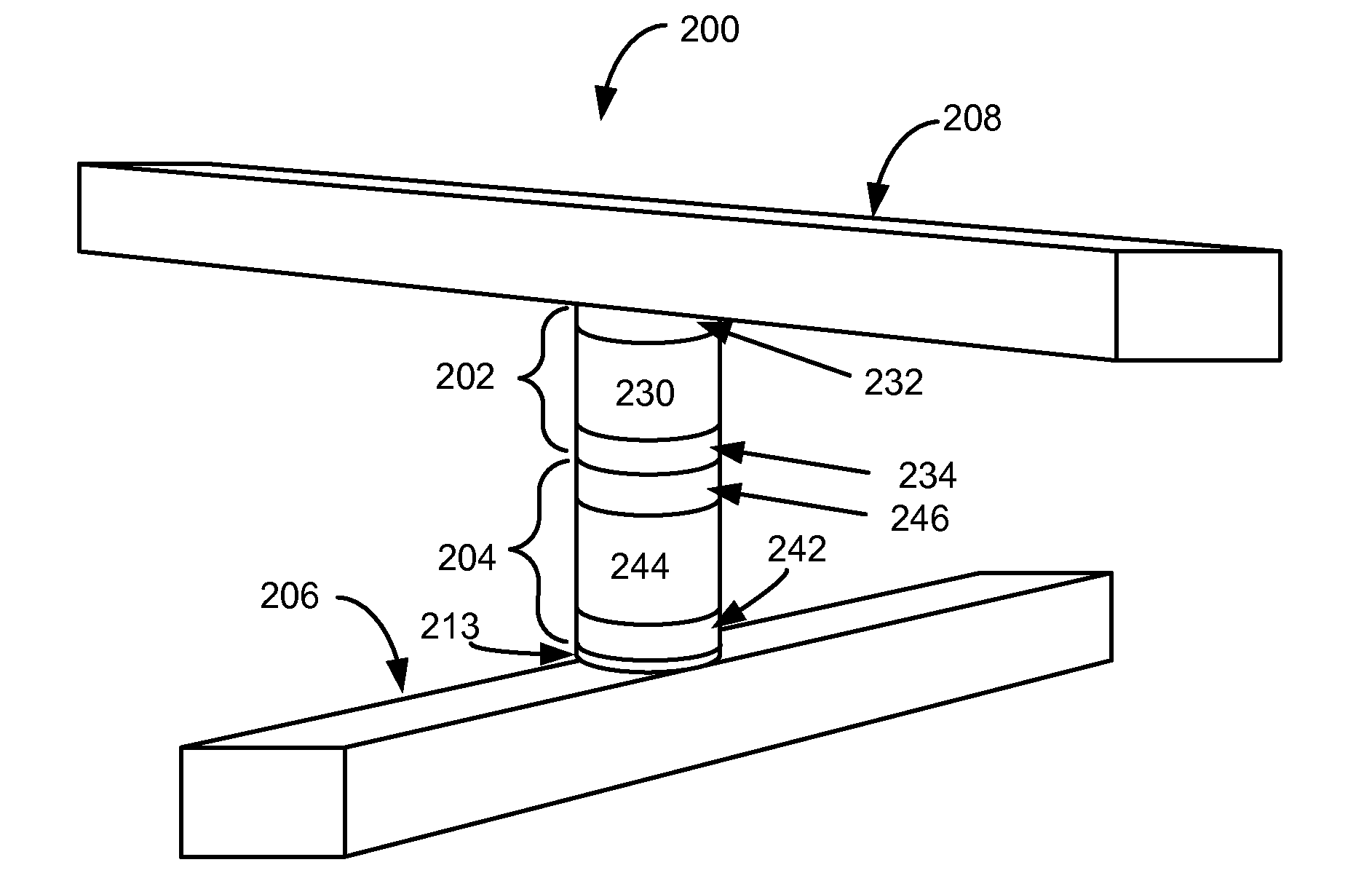

Nonvolatile Memory with Correlated Multiple Pass Programming

Active | US20090310421A1 | Fine step size | Improve programming performance | Read-only memories | Digital storage | Image resolution | Engineering

A group of memory cells is programmed respectively to their target states in parallel using a multiple-pass programming method in which the programming voltages in the multiple passes are correlated. Each programming pass employs a programming voltage in the form of a staircase pulse train with a common step size, and each successive pass has the staircase pulse train offset from that of the previous pass by a predetermined offset level. The predetermined offset level is less than the common step size and may be less than or equal to the predetermined offset level of the previous pass. Thus, the same programming resolution can be achieved over multiple passes using fewer programming pulses than a conventional method in which each successive pass uses a programming staircase pulse train with a finer step size. The multiple-pass programming serves to tighten the distribution of the programmed thresholds while reducing the overall number of programming pulses.

Owner:SANDISK TECH LLC
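
The relationship between passes in the abstract above (same step size, each pass shifted by an offset smaller than the step and no larger than the previous offset) can be made concrete by listing the pulse levels. The sketch below only generates voltage levels; it does not model cell behavior. The millivolt values, the upward direction of the offset, and the function name `staircase_passes` are assumptions for illustration.

```python
from typing import List, Sequence


def staircase_passes(start_mv: int, step_mv: int, pulses: int,
                     offsets_mv: Sequence[int]) -> List[List[int]]:
    """Pulse levels (in mV) for a first pass plus one pass per offset."""
    if any(not 0 < off < step_mv for off in offsets_mv):
        raise ValueError("each offset must be positive and smaller than the step")
    if any(later > earlier for earlier, later in zip(offsets_mv, offsets_mv[1:])):
        raise ValueError("offsets must not grow from one pass to the next")
    base = start_mv
    passes = [[base + k * step_mv for k in range(pulses)]]
    for off in offsets_mv:
        base += off  # shift the whole staircase relative to the previous pass
        passes.append([base + k * step_mv for k in range(pulses)])
    return passes


for levels in staircase_passes(10000, 1000, 3, offsets_mv=[500, 250]):
    print(levels)
# [10000, 11000, 12000]
# [10500, 11500, 12500]
# [10750, 11750, 12750]
```

Every pass keeps the same 1000 mV step, yet taken together the three passes cover levels as fine as 250 mV apart, which is the resolution gain the abstract attributes to correlating the passes.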

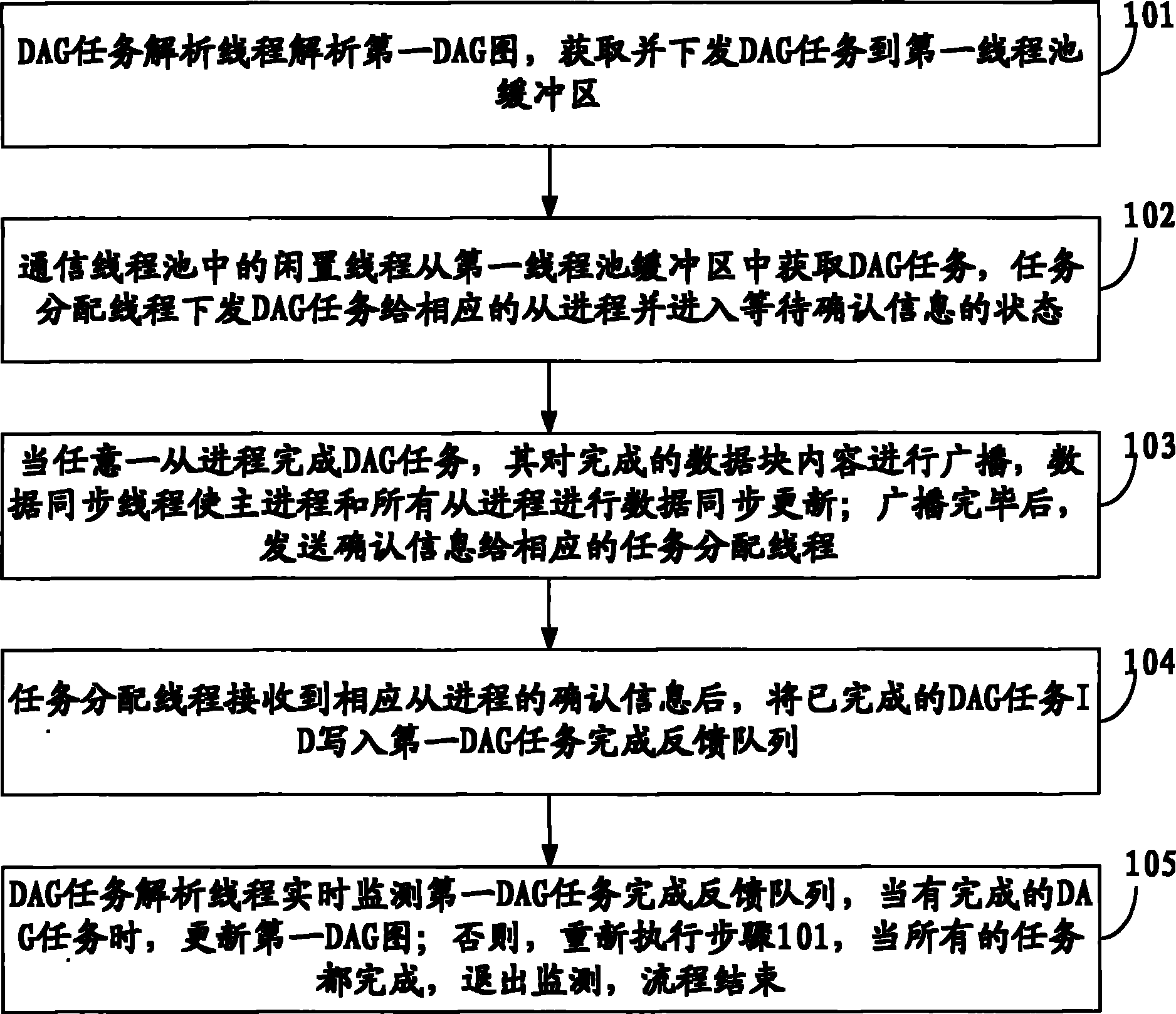

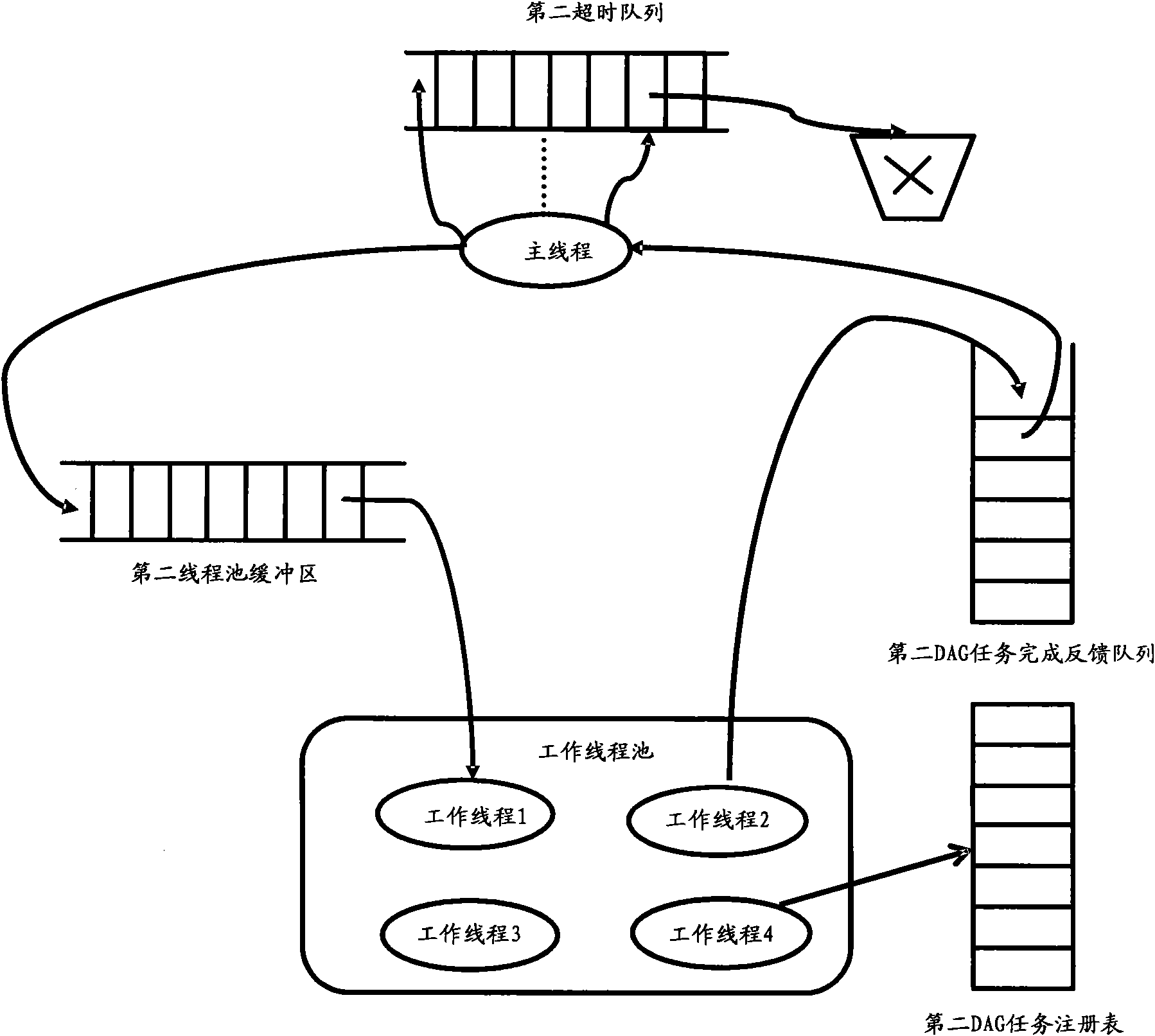

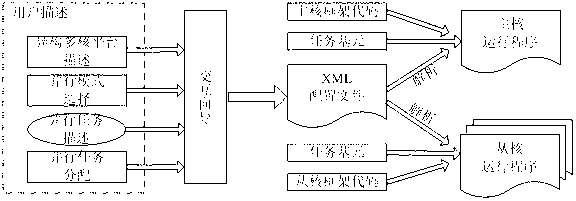

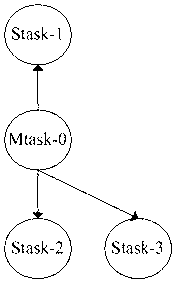

User description based programming design method on embedded heterogeneous multi-core processor

Inactive | CN102707952A | Outstanding Features | Highlight significant progress | Specific program execution arrangements | Performance computing | Parallel programming model

The invention relates to a user description based programming design method on an embedded heterogeneous multi-core processor. In the method, a user follows a configuration wizard in a graphical interface to describe the heterogeneous multi-core processor platform and the task, set a parallel mode, establish and register element tasks, and generate a task relation graph (a directed acyclic graph, DAG); the element tasks are statically assigned on the heterogeneous multi-core processor; and the processor platform characteristics, parallel demands and task assignment are expressed as a configuration file in extensible markup language (XML). After the configuration file undergoes parallel analysis, the element tasks are embedded at the positions of the task labels in the heterogeneous multi-core framework code, a corresponding serial source program is constructed, a serial compiler is invoked, and finally executable code for the heterogeneous multi-core processor is generated. The method effectively avoids parallel programming practices such as developing a parallel compiler on a general personal computer (PC) or a high-performance computing platform, establishing a parallel programming language and porting a parallel library; it greatly reduces the difficulty of developing a parallel program on a heterogeneous multi-core processor platform in the embedded field; and it achieves parallel programming based on user description and interactive parallelization guidance.

Owner:SHANGHAI UNIV

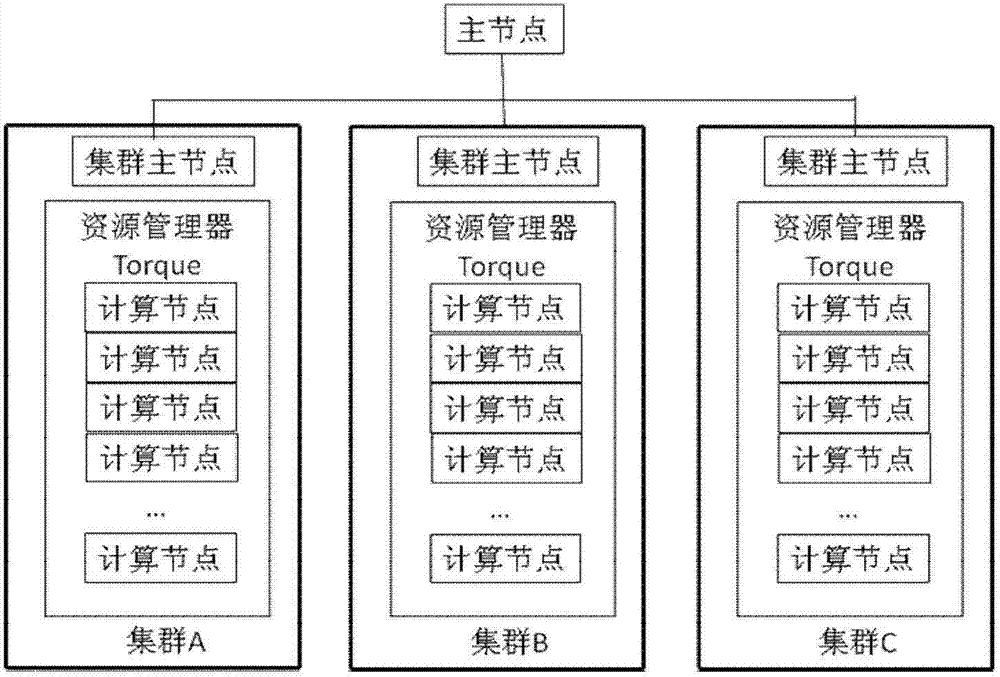

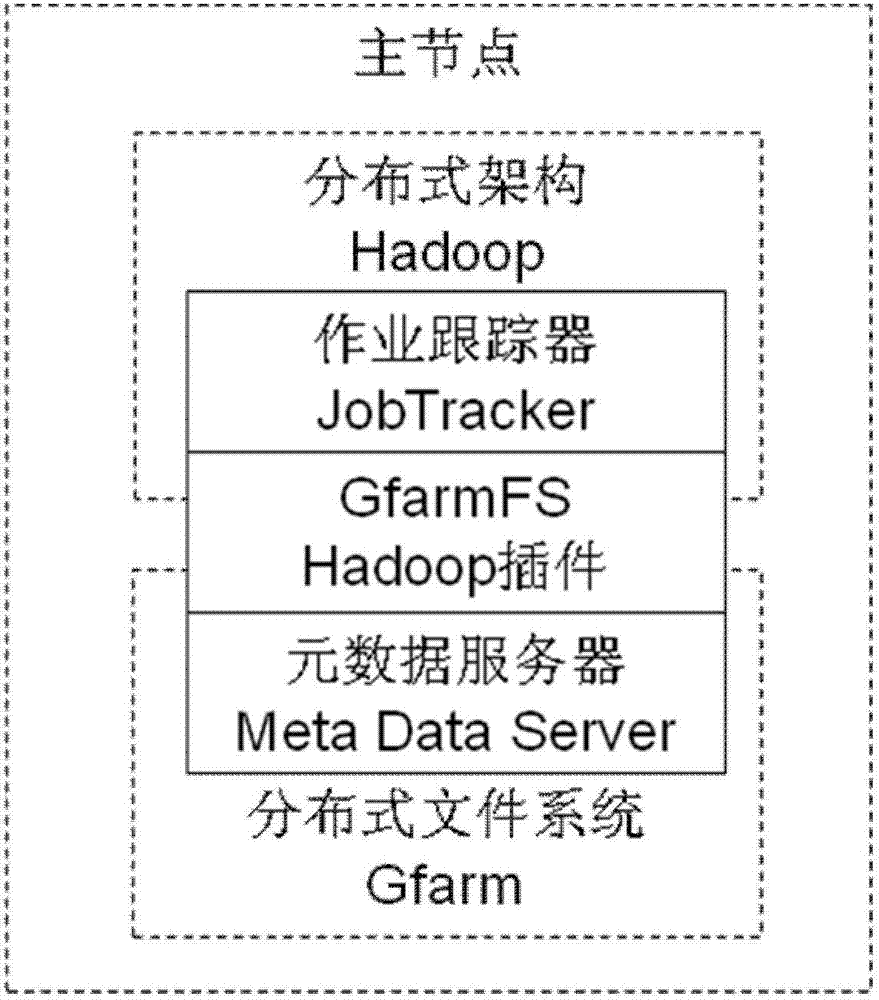

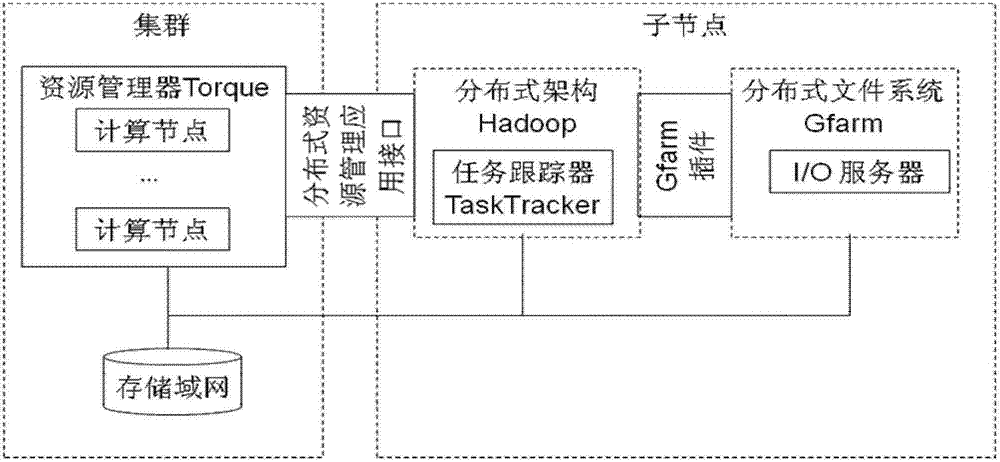

Parallel programming method oriented to data intensive application based on multiple data architecture centers

Inactive | CN102880510A | Easy to handle | Improve parallel efficiency | Resource allocation | Collocation | Cluster systems

The invention relates to a parallel programming method oriented to data intensive application based on multiple data architecture centers. The method comprises the following steps: constructing a main node of the system architecture, constructing a sub node of the system architecture, performing loading, performing execution and the like. The parallel programming method has the following advantages: a practitioner in the field of large-scale data intensive scientific data does not need to be familiar with the parallel computing mode based on multiple data centers, nor with the MapReduce and Message Passing Interface (MPI) parallel programming technologies of high-performance computing; a plurality of distributed clusters are simply configured, and a MapReduce computing task is loaded onto them; the hardware and software configuration of the existing cluster system does not need to be changed, and the architecture can quickly parallelize a data intensive application based on the MapReduce programming model across multiple data centers. Therefore, relatively high parallelization efficiency is achieved, and the capacity to process large-scale distributed data intensive scientific data can be greatly improved.

Owner:CENT FOR EARTH OBSERVATION & DIGITAL EARTH CHINESE ACADEMY OF SCI

Nonvolatile memory with index programming and reduced verify

Active | US7826271B2 | Save steps | Tighten distribution | Read-only memories | Digital storage | Theoretical computer science | Inductive programming

In a non-volatile memory, a group of memory cells is programmed respectively to their target states in parallel using a multiple-pass index programming method which reduces the number of verify steps. For each cell, a program index is maintained that stores the last programming voltage applied to the cell. Each cell is indexed during a first programming pass with the application of a series of incrementing programming pulses. The first programming pass is followed by verification and one or more subsequent programming passes to trim any shortfalls relative to the respective target states. If a cell fails to verify to its target state, its program index is incremented, which allows the cell to be programmed by the next pulse after the last one it received. The verify and programming passes are repeated until all the cells in the group are verified to their respective target states. No verify operations between pulses are necessary.

Owner:SANDISK TECH LLC

Flushed data alignment with physical structures

Active | US20130042067A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory systems | Physical structure

A method and system are disclosed herein for performing operations on a parallel programming unit in a memory system. The parallel programming unit includes multiple physical structures (such as memory cells in a row) in the memory system that are configured to be operated on in parallel. The method and system perform a first operation on the parallel programming unit, the first operation operating on only part of the parallel programming unit and not operating on a remainder of the parallel programming unit, set a pointer to indicate at least one physical structure in the remainder of the parallel programming unit, and perform a second operation using the pointer to operate on no more than the remainder of the parallel programming unit. In this way, the method and system may realign programming to the parallel programming unit when partial writes to the parallel programming unit occur.

Owner:SANDISK TECH LLC
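
The pointer mechanism in the abstract above (a partial first operation, a pointer into the remainder, and a second operation limited to that remainder) can be modelled with a toy data structure. The class name `ProgrammingUnit`, the list-of-cells representation, and the eight-cell size are hypothetical; real devices operate on physical structures, not Python lists.

```python
from typing import List, Optional, Sequence


class ProgrammingUnit:
    """Toy model of one parallel programming unit with a remainder pointer."""

    def __init__(self, size: int) -> None:
        self.cells: List[Optional[int]] = [None] * size
        self.pointer = 0  # index of the first cell not yet written

    def remaining(self) -> int:
        return len(self.cells) - self.pointer

    def write(self, data: Sequence[int]) -> List[int]:
        """Write no more than the remainder; return data that did not fit."""
        count = min(len(data), self.remaining())
        self.cells[self.pointer:self.pointer + count] = data[:count]
        self.pointer += count  # the pointer now marks the new remainder
        return list(data[count:])


unit = ProgrammingUnit(size=8)
print(unit.write([1, 2, 3]))   # []  (partial write, pointer moves to 3)
print(unit.write(list(range(4, 11))))  # [9, 10]  (only the remainder is filled)
print(unit.cells)              # [1, 2, 3, 4, 5, 6, 7, 8]
```

The leftover values `[9, 10]` would start the next unit at its first cell, so later writes line up with unit boundaries again after the partial write.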

Mechanism to support generic collective communication across a variety of programming models

Inactive | US7984448B2 | Interprogram communication | General purpose stored program computer | Collective communication | Connection manager

A system and method for supporting collective communications on a plurality of processors that use different parallel programming paradigms, in one aspect, may comprise a schedule defining one or more tasks in a collective operation, an executor that executes the task, a multisend module to perform one or more data transfer functions associated with the tasks, and a connection manager that controls one or more connections and identifies an available connection. The multisend module uses the available connection in performing the one or more data transfer functions. A plurality of processors that use different parallel programming paradigms can use a common implementation of the schedule module, the executor module, the connection manager and the multisend module via a language adaptor specific to a parallel programming paradigm implemented on a processor.

Owner:INT BUSINESS MASCH CORP

© 2025 PatSnap. All rights reserved.