141 results about "Collective operation" patented technology

A collective operation is a concept in parallel computing in which data is simultaneously sent to or received from many nodes. Common examples of collective operations are gather (in which data is collected from all nodes), scatter (in which a set of data is broken up into pieces, and a different piece is sent...
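
The gather and scatter operations named in the definition above can be illustrated with a minimal single-process sketch. It models nodes as list entries rather than real processes, and the function names `scatter` and `gather` are illustrative, not taken from any particular library.

```python
from typing import List, Sequence


def scatter(data: Sequence[int], num_nodes: int) -> List[List[int]]:
    """Split data into num_nodes contiguous pieces, one piece per node."""
    if num_nodes <= 0:
        raise ValueError("num_nodes must be positive")
    base, extra = divmod(len(data), num_nodes)
    pieces, start = [], 0
    for rank in range(num_nodes):
        # The first `extra` ranks take one additional element.
        size = base + (1 if rank < extra else 0)
        pieces.append(list(data[start:start + size]))
        start += size
    return pieces


def gather(pieces: Sequence[Sequence[int]]) -> List[int]:
    """Collect every node's piece at the root, in rank order."""
    return [item for piece in pieces for item in piece]
```

Gathering the result of a scatter returns the original data, which is a convenient sanity check for either operation.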

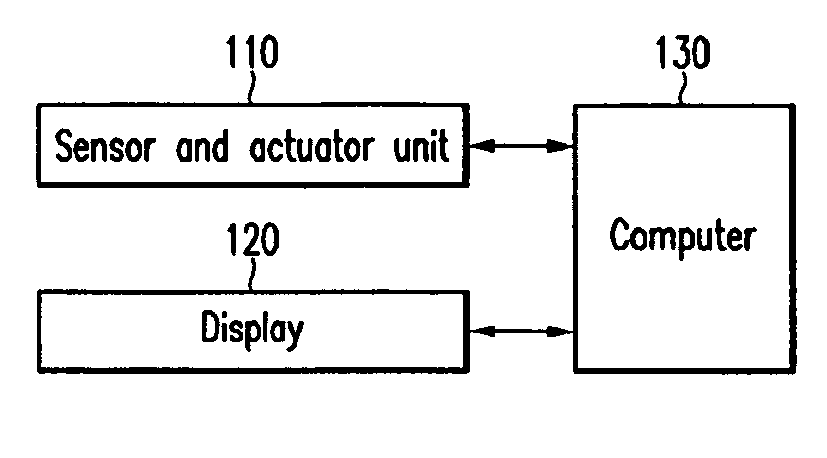

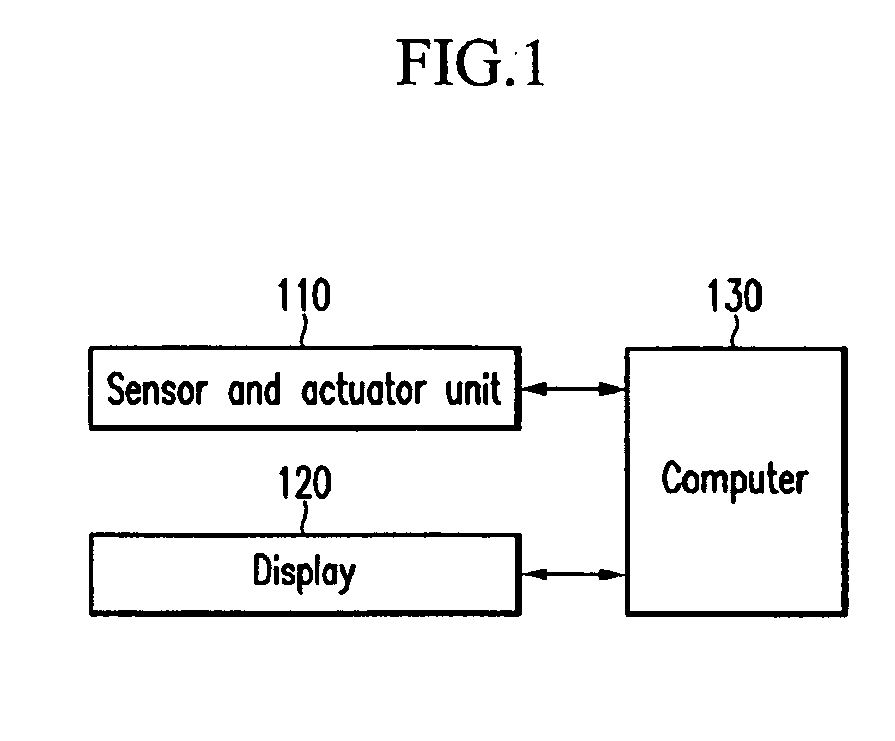

Smart digital modules and smart digital wall surfaces combining the same, and context aware interactive multimedia system using the same and operation method thereof

Active | US20050254505A1 | Easy to reconfigure | Easy maintenance | Digital computer details | Data switching by path configuration | State variation | Context awareness

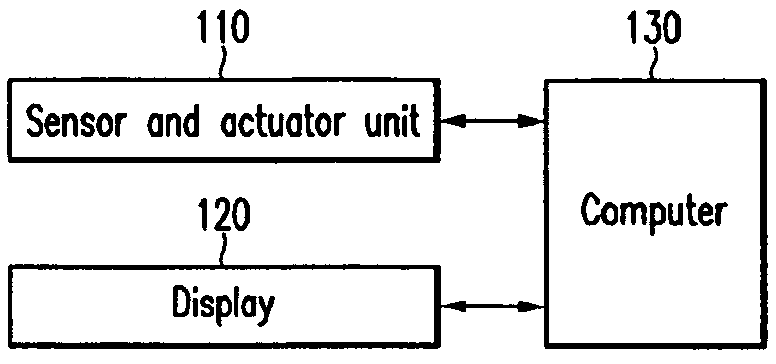

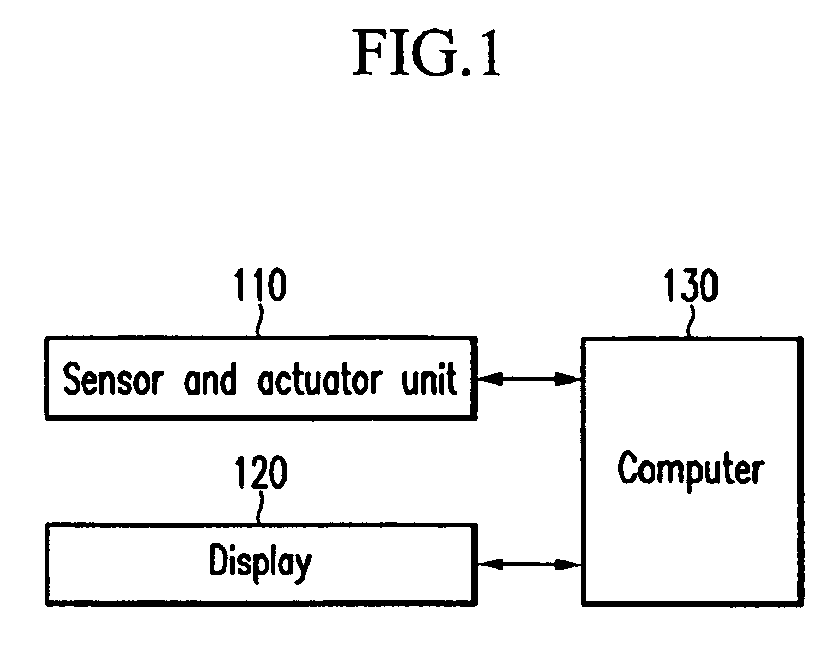

Disclosed are smart digital modules, a smart digital wall combining the smart digital modules, a context-aware interactive multimedia system, and an operating method thereof. The smart digital wall includes local coordinators for controlling the smart digital modules, and a coordination process for controlling the smart digital modules. The smart digital modules sense ambient states and changes of states and independently display corresponding actuations. The coordination process is connected to the smart digital modules via radio communication, and combines smart digital modules to control collective operations of the smart digital modules.

Owner:INFORMATION & COMM UNIV RES & INDAL COOPERATION GROUP +1

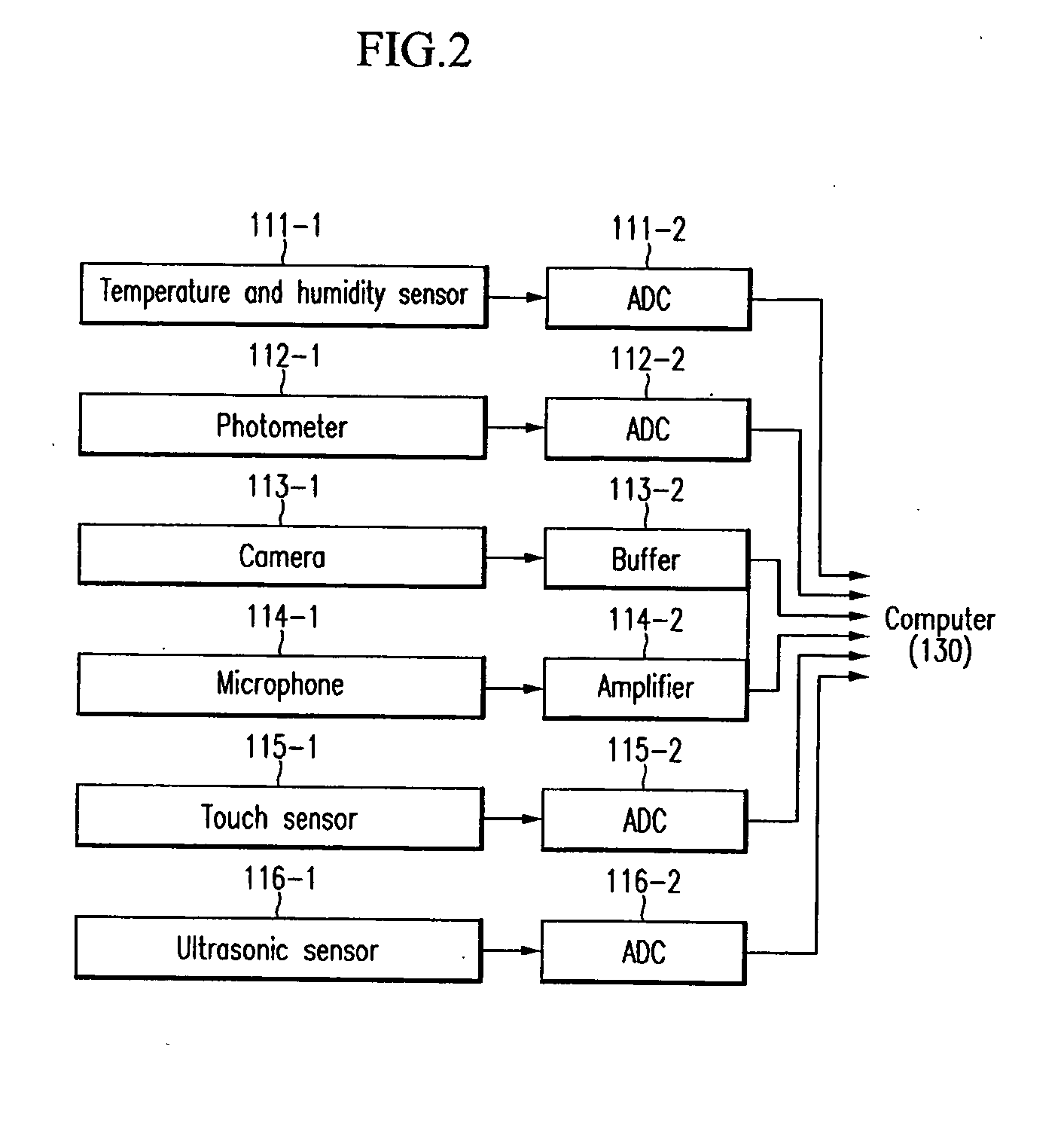

Parallel-aware, dedicated job co-scheduling method and system

Inactive | US20050131865A1 | Improve performance | Improve scalability | Program initiation/switching | Special data processing applications | Extensibility | Operational system

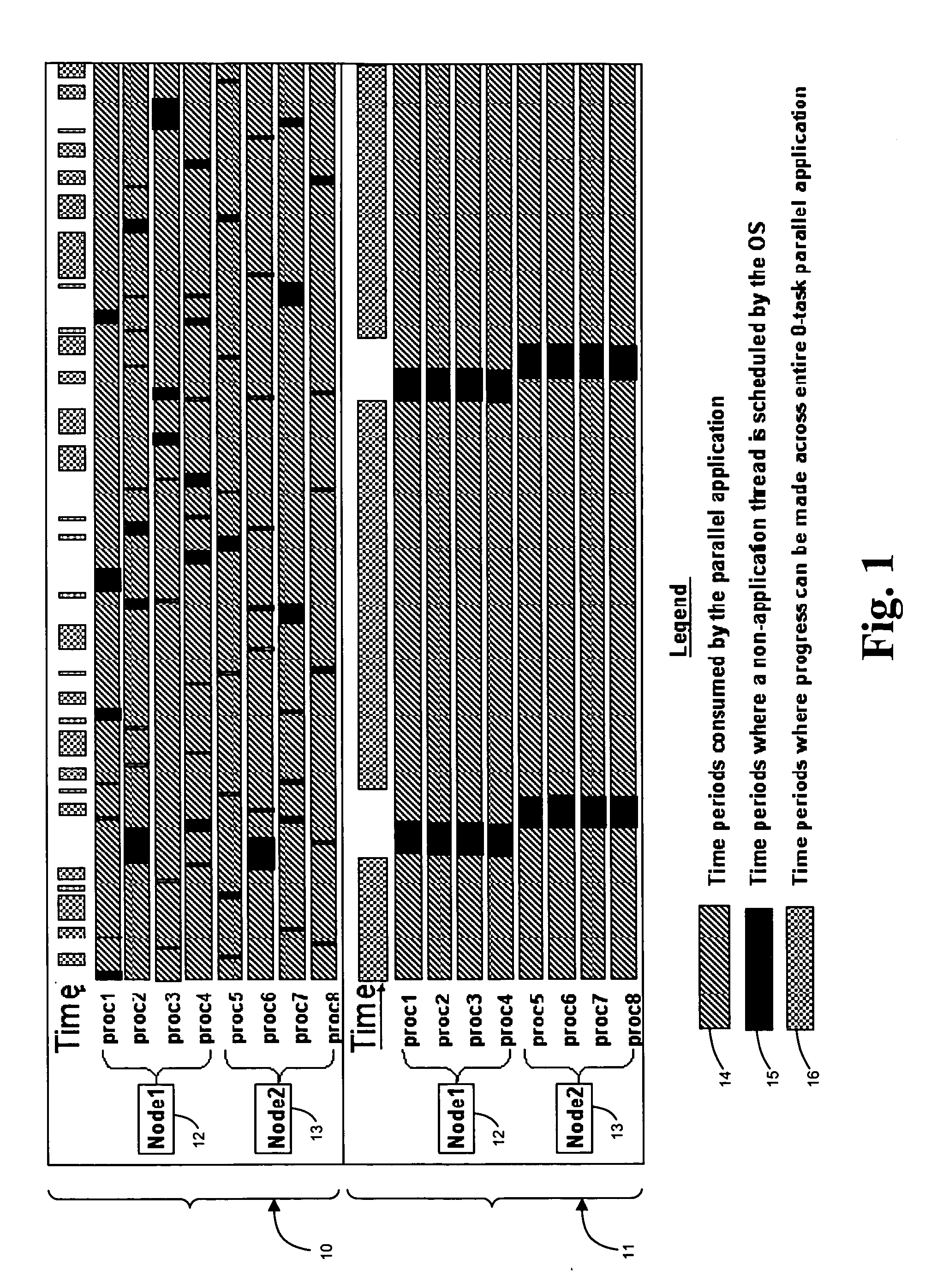

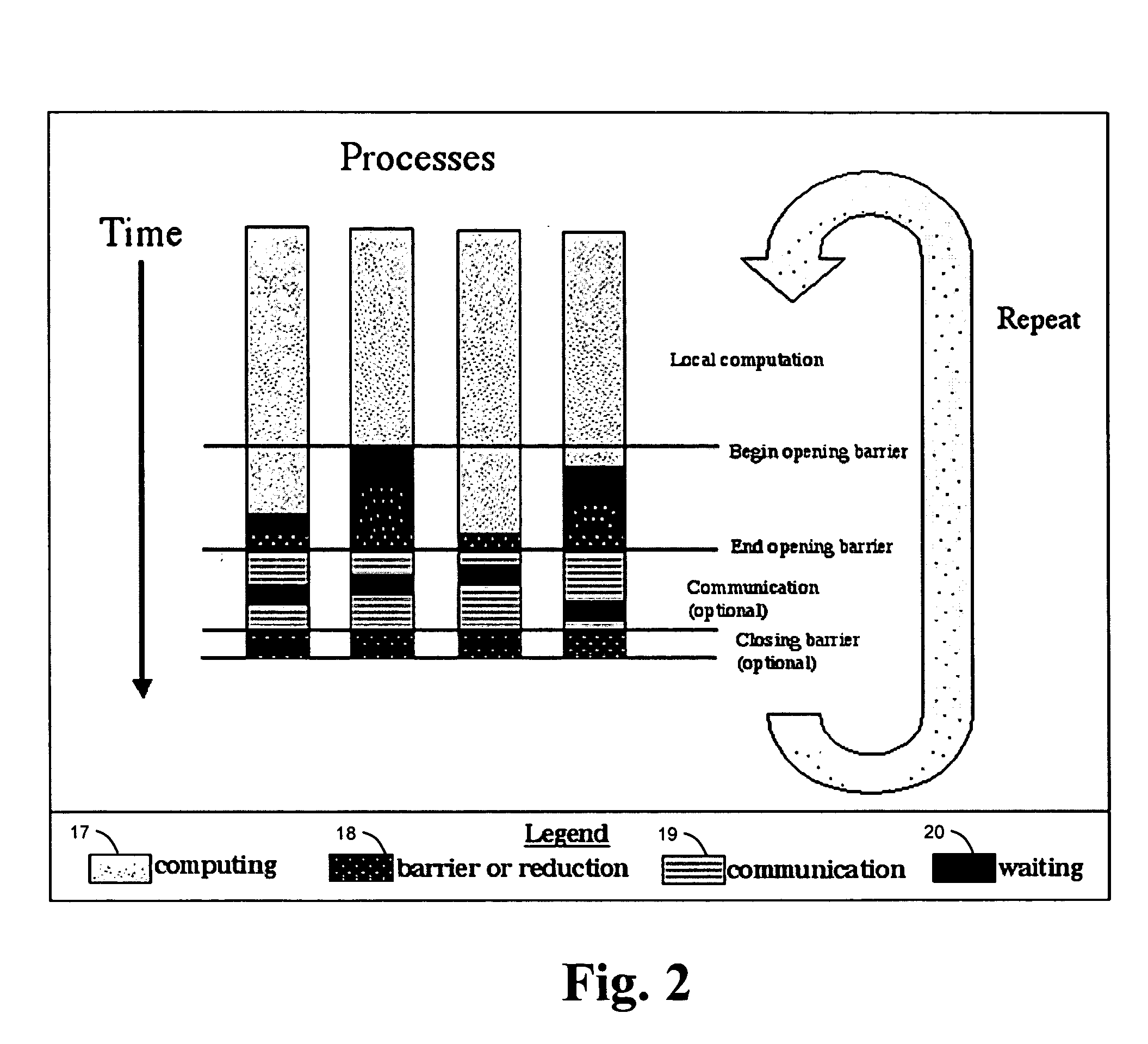

In a parallel computing environment comprising a network of SMP nodes each having at least one processor, a parallel-aware co-scheduling method and system for improving the performance and scalability of a dedicated parallel job having synchronizing collective operations. The method and system use a global co-scheduler and an operating system kernel dispatcher adapted to coordinate interfering system and daemon activities on a node and across nodes to promote intra-node and inter-node overlap of said interfering system and daemon activities as well as intra-node and inter-node overlap of said synchronizing collective operations. In this manner, the impact of random short-lived interruptions, such as timer-decrement processing and periodic daemon activity, on synchronizing collective operations is minimized on large processor-count SPMD bulk-synchronous programming styles.

Owner:LAWRENCE LIVERMORE NAT SECURITY LLC

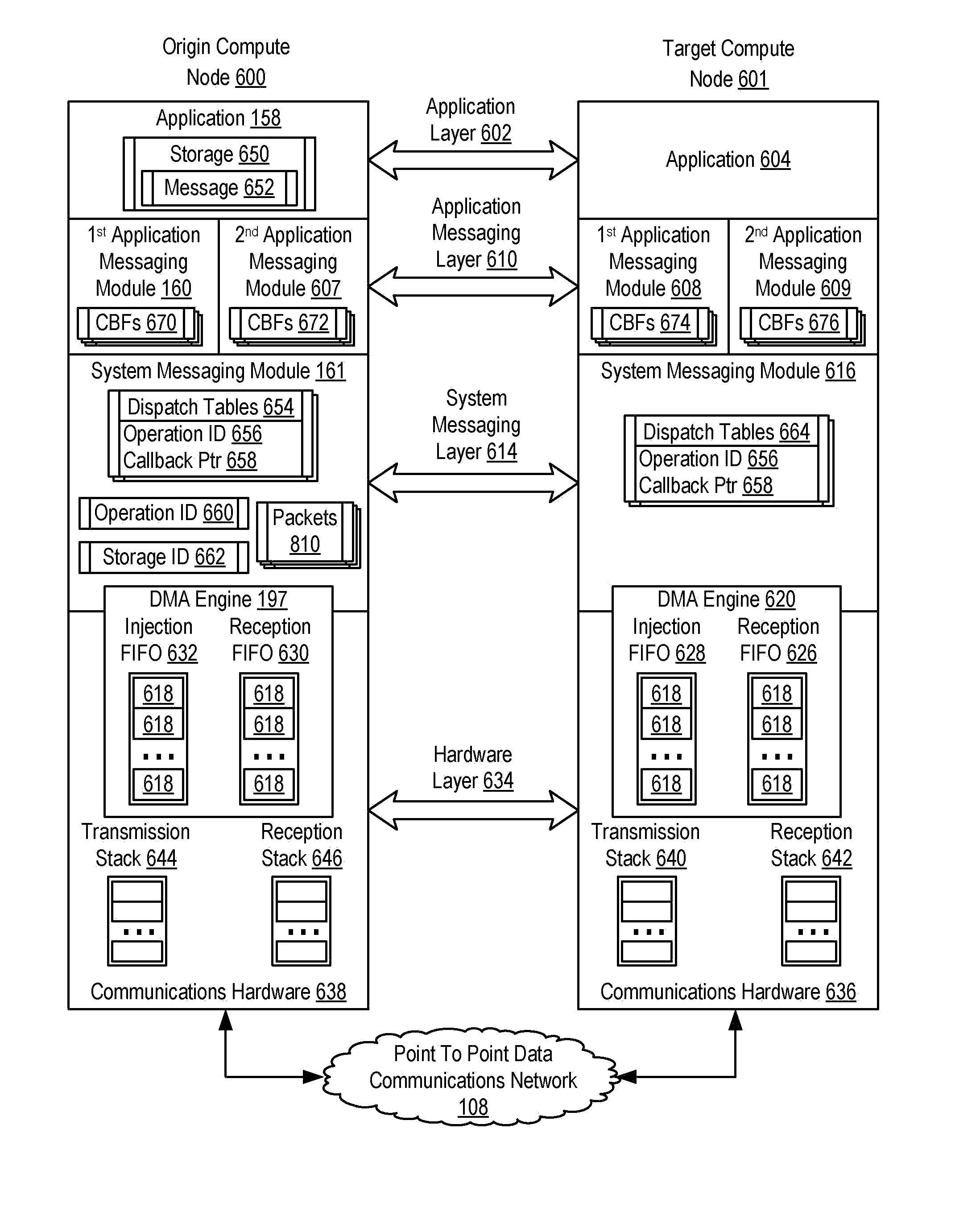

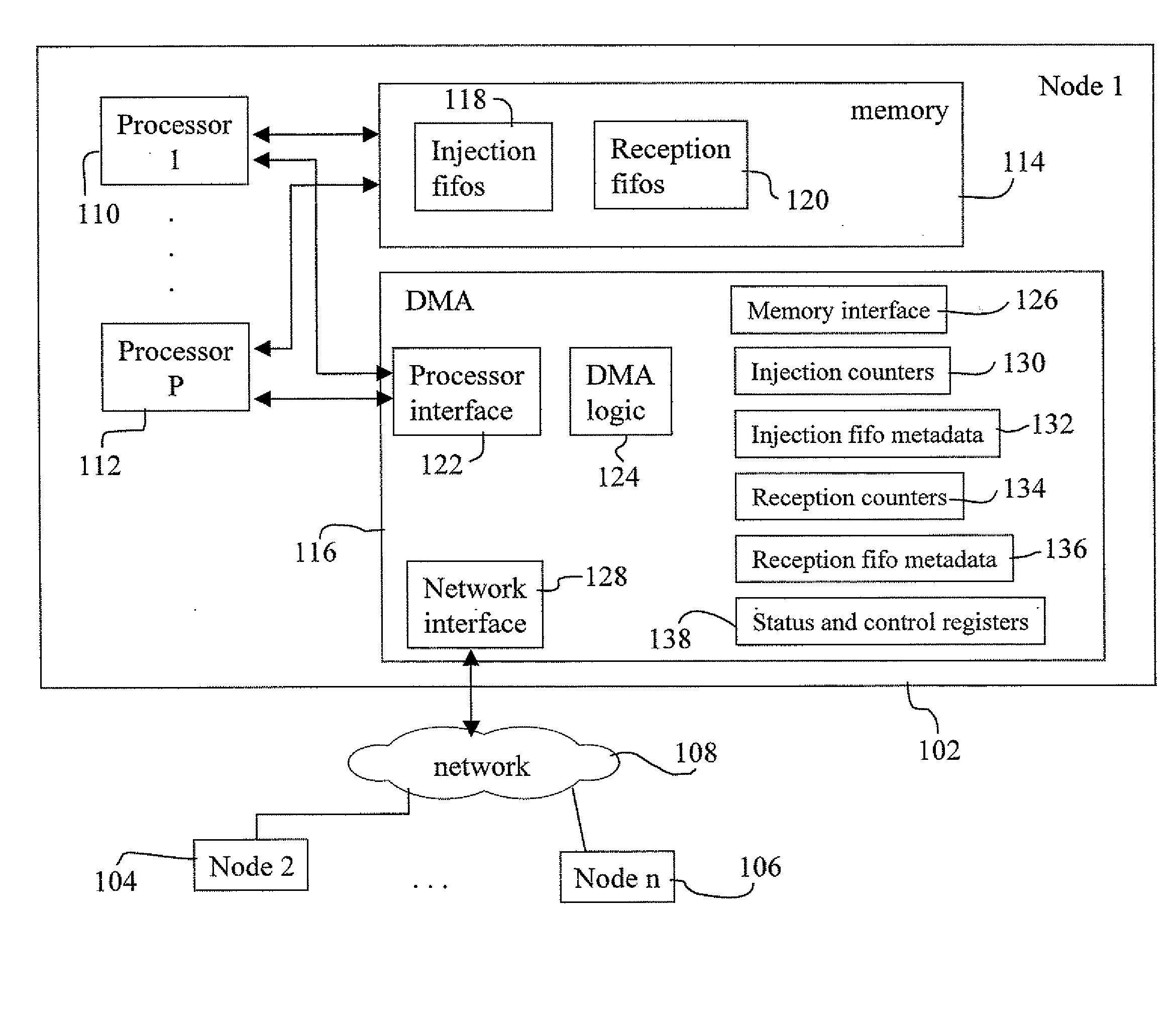

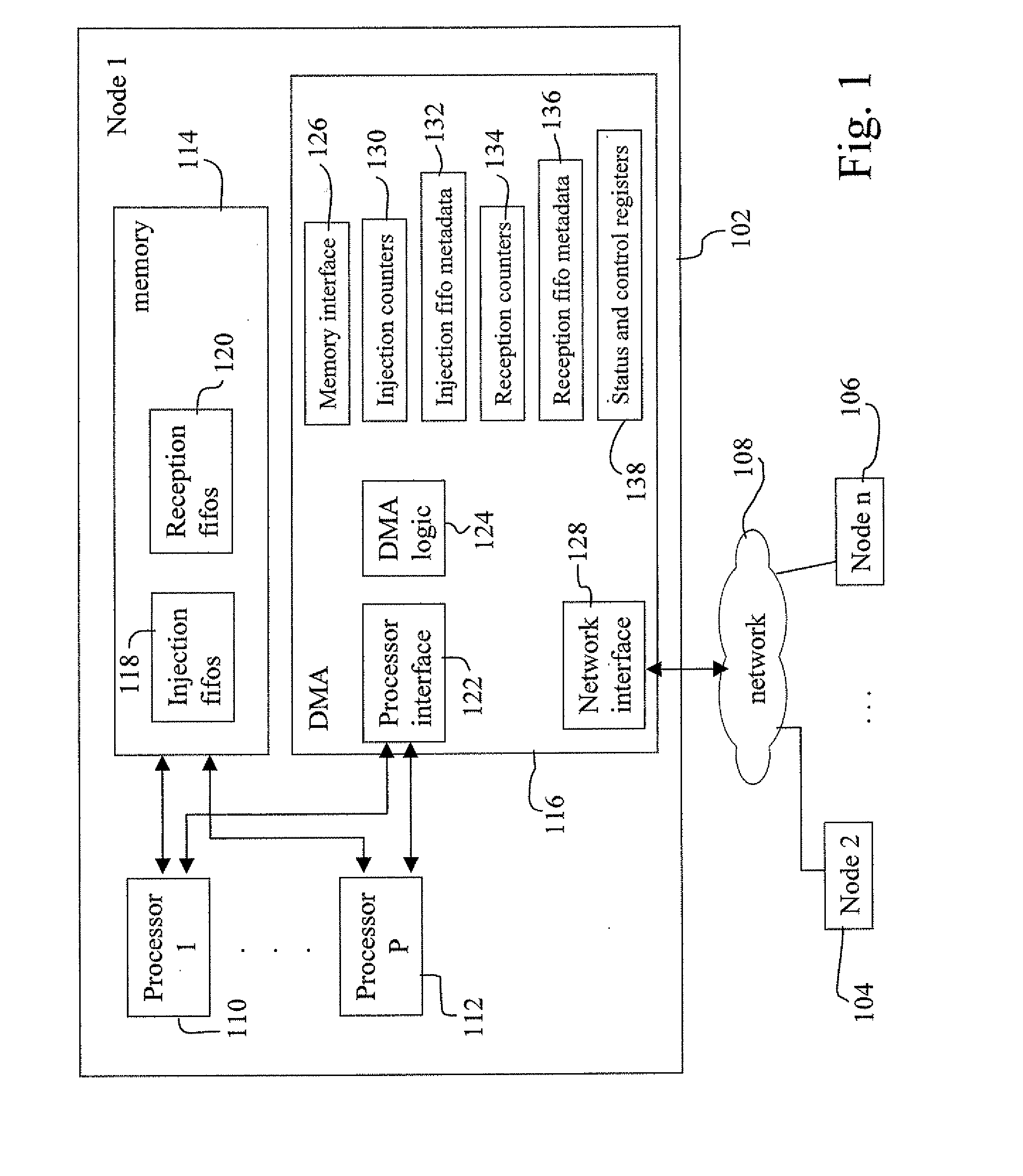

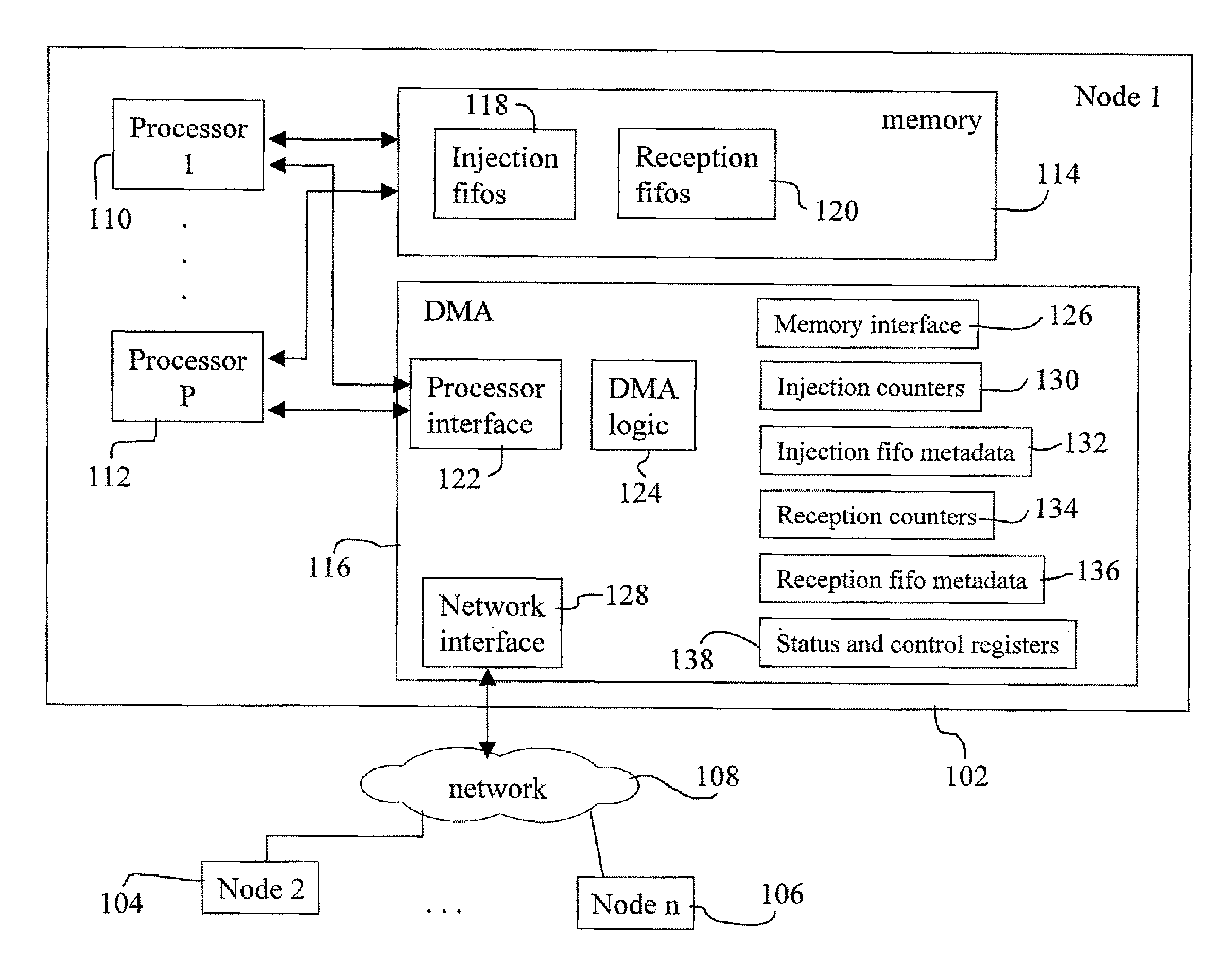

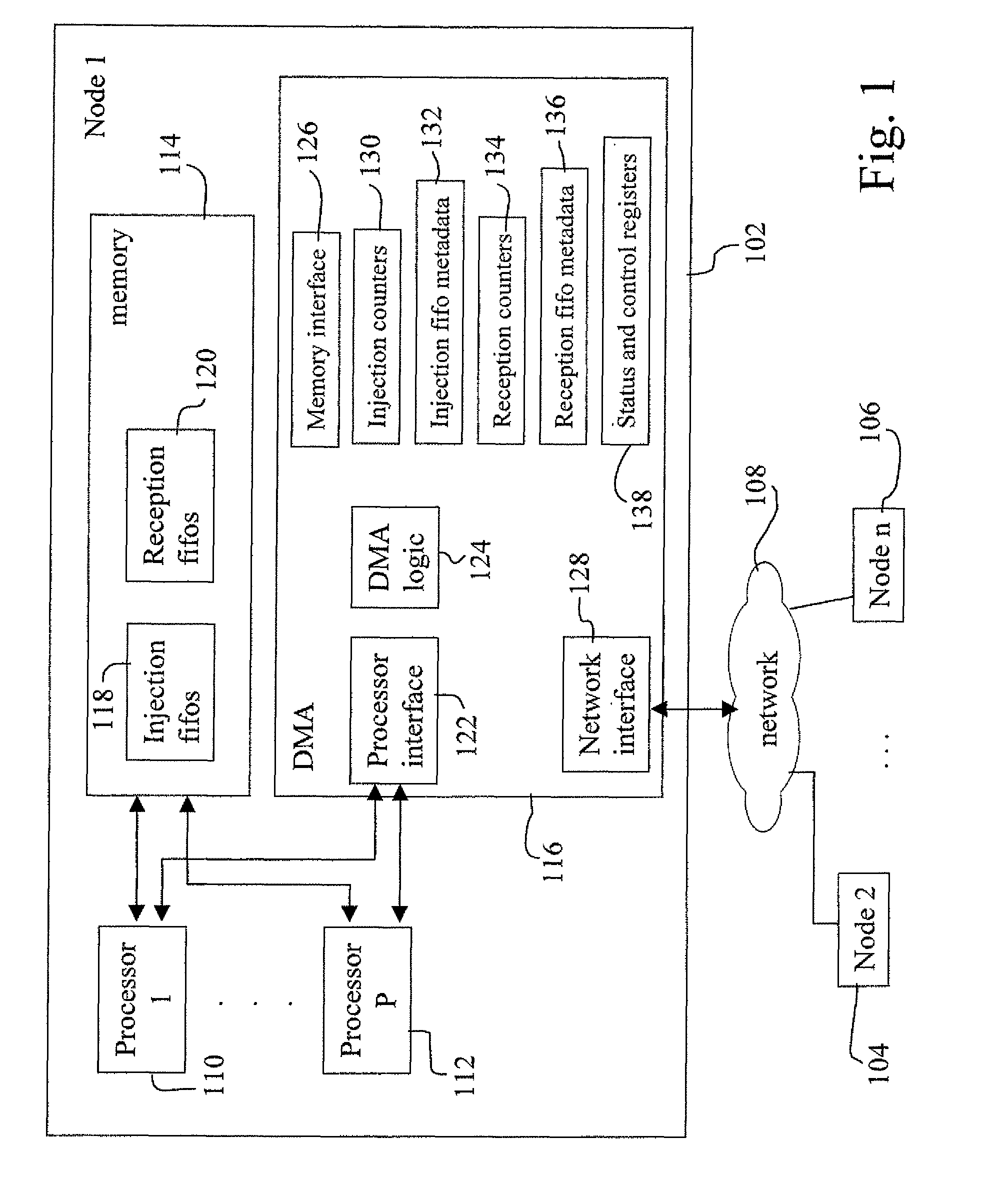

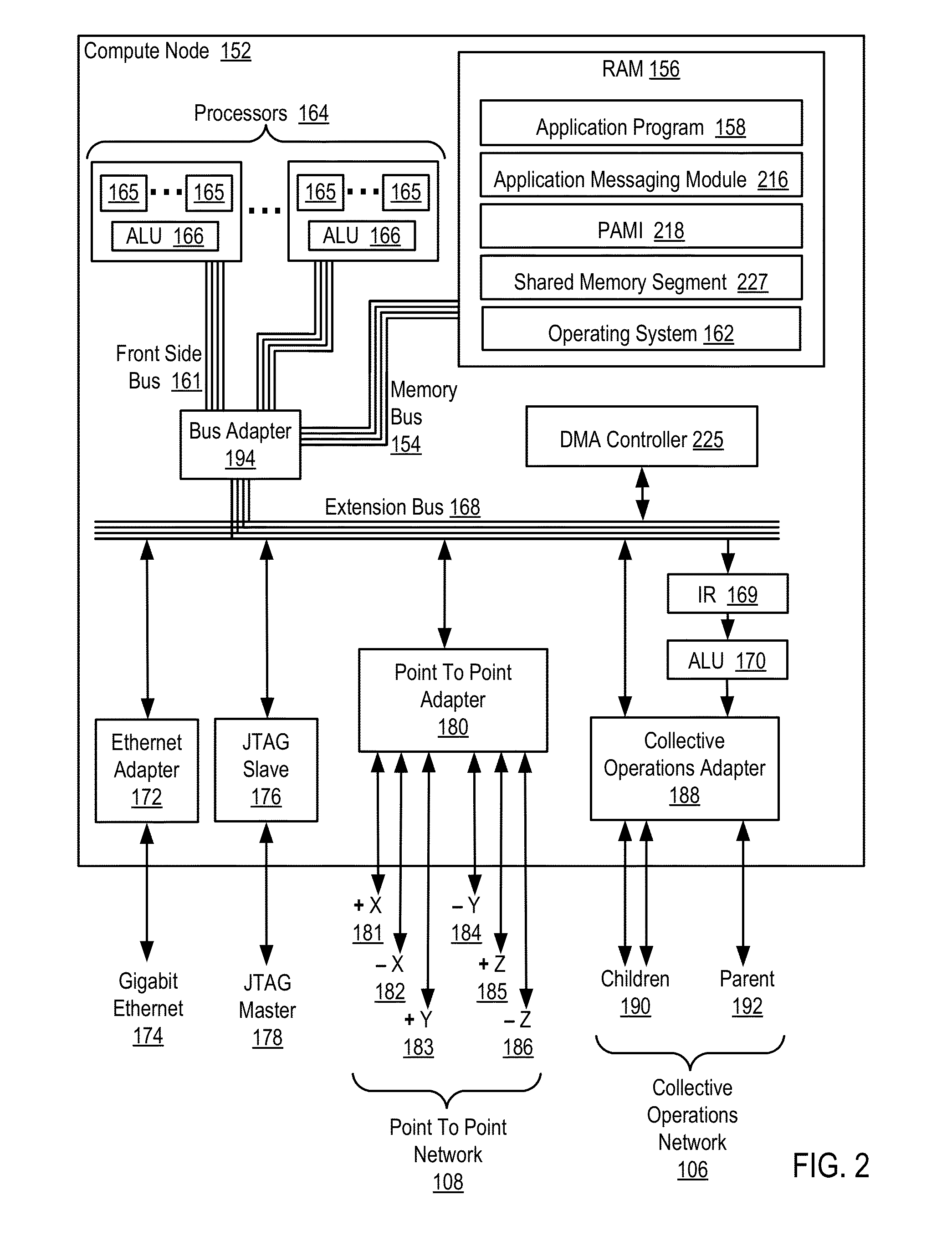

Remote DMA systems and methods for supporting synchronization of distributed processes in a multi-processor system using collective operations

Inactive | US20080109569A1 | Memory architecture accessing/allocation | Multiple digital computer combinations | Multi processor | Remote system

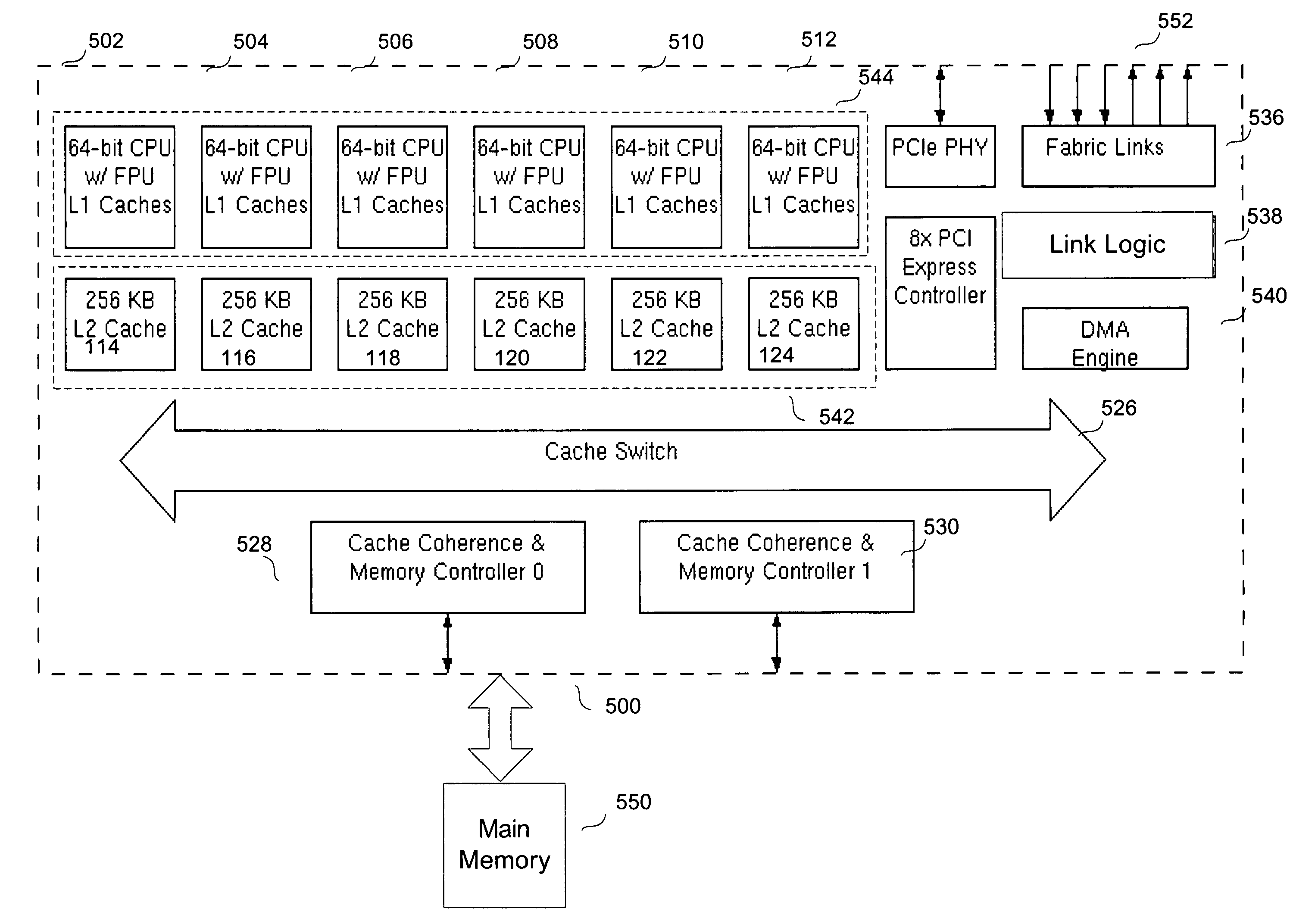

The invention relates to a remote DMA system, and methods for supporting synchronization of distributed processes in a multiprocessor system using collective operations. One aspect of the invention is a multi-node computer system having a plurality of interconnected processing nodes. This system uses DMA engines to perform collective operations synchronizing processes executing on a set of nodes. Each process in the set of processes causes the DMA engine on the node on which the process executes to transmit a collective operation command to the master node when the process reaches a synchronization point in its execution. The DMA engine on the master node receives and executes the collective operations from the processes and, in response to receiving a pre-established number of the collective operations, conditionally executes the set of associated commands.

Owner:SICORTEX
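
The counting behavior described in the remote DMA abstract above can be sketched as a simple arrival counter. This is a single-process illustration under stated assumptions: `MasterCounter` is a hypothetical stand-in for the master node's DMA engine, and plain Python callables stand in for the associated commands.

```python
from typing import Callable, List


class MasterCounter:
    """Hypothetical stand-in for the master node's DMA engine."""

    def __init__(self, expected: int, commands: List[Callable[[], None]]):
        if expected <= 0:
            raise ValueError("expected must be positive")
        self.expected = expected   # pre-established number of arrivals
        self.commands = commands   # commands to run once all have arrived
        self.received = 0

    def receive(self) -> bool:
        """Record one arrival; run the commands when the count is reached."""
        self.received += 1
        if self.received < self.expected:
            return False
        self.received = 0          # reset so the barrier can be reused
        for command in self.commands:
            command()
        return True
```

Each call to `receive` models one process reaching its synchronization point; only the call that completes the count triggers the commands.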

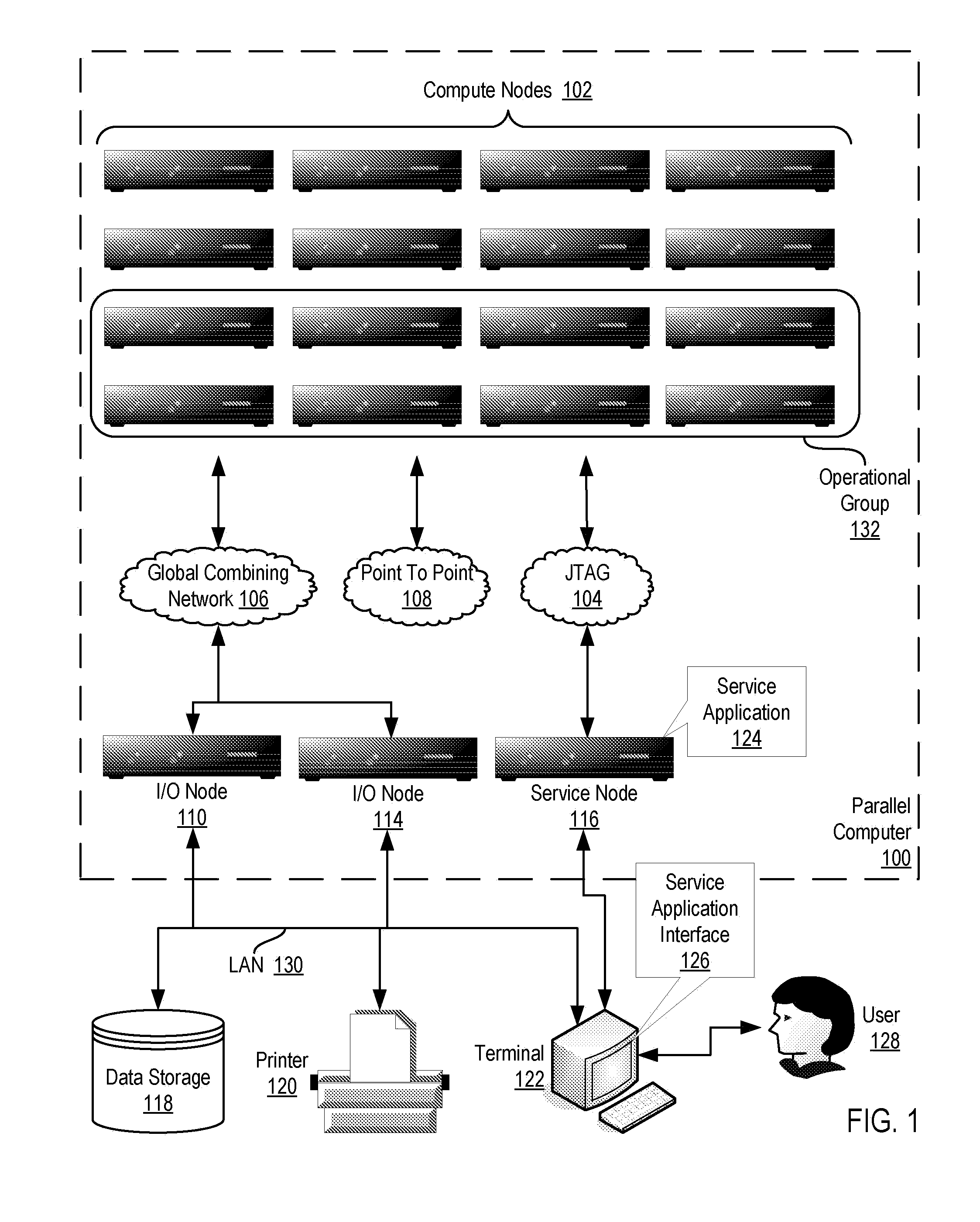

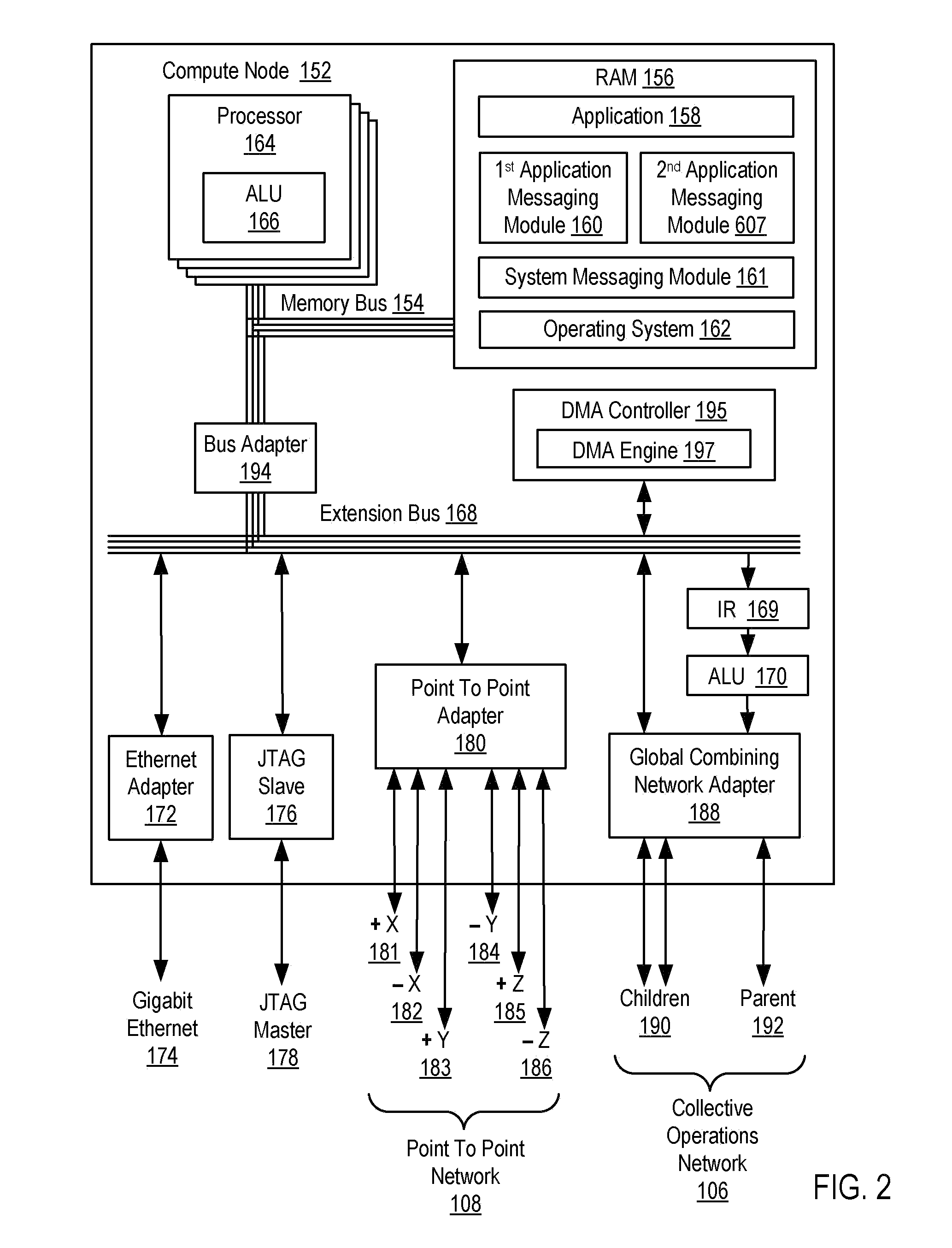

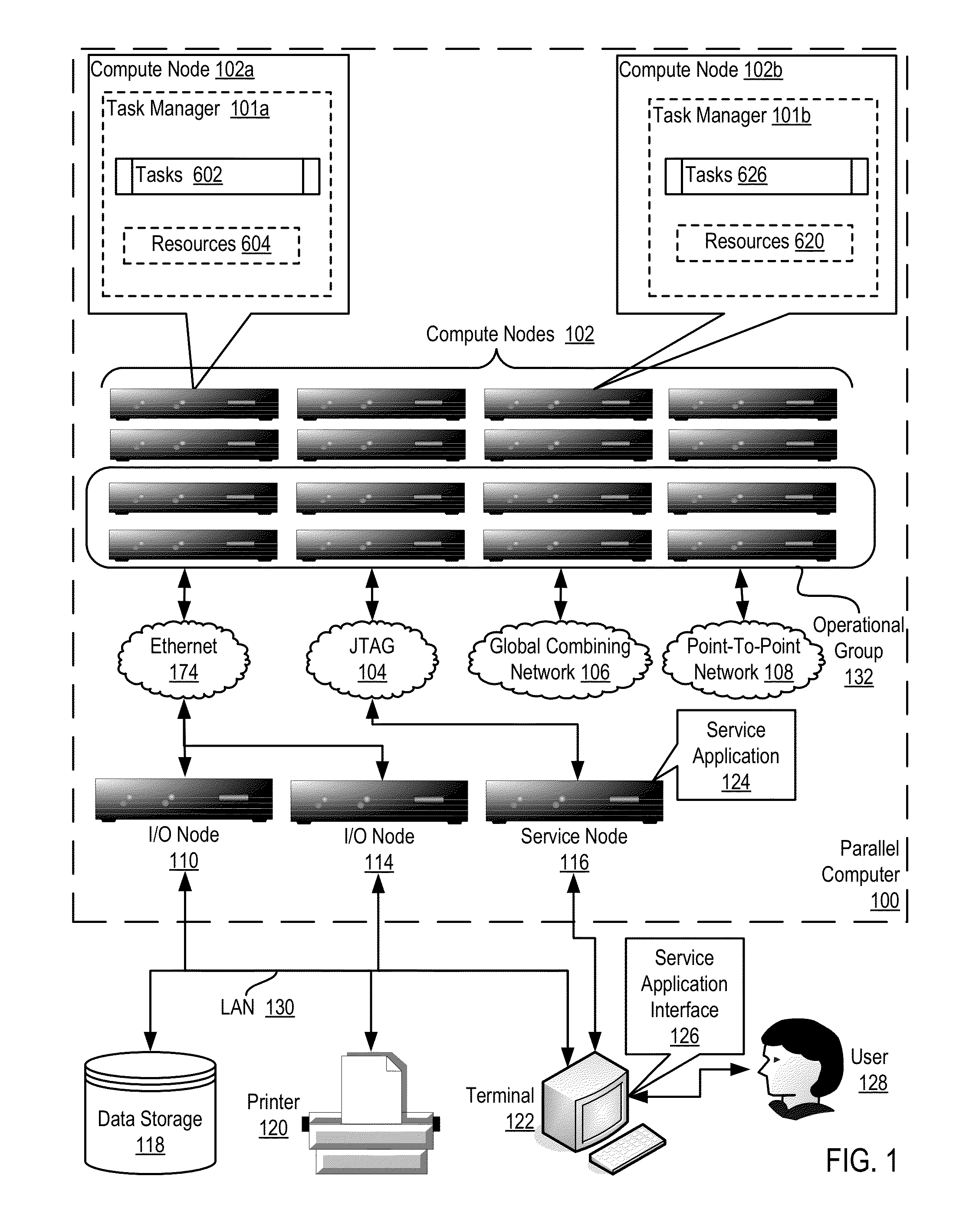

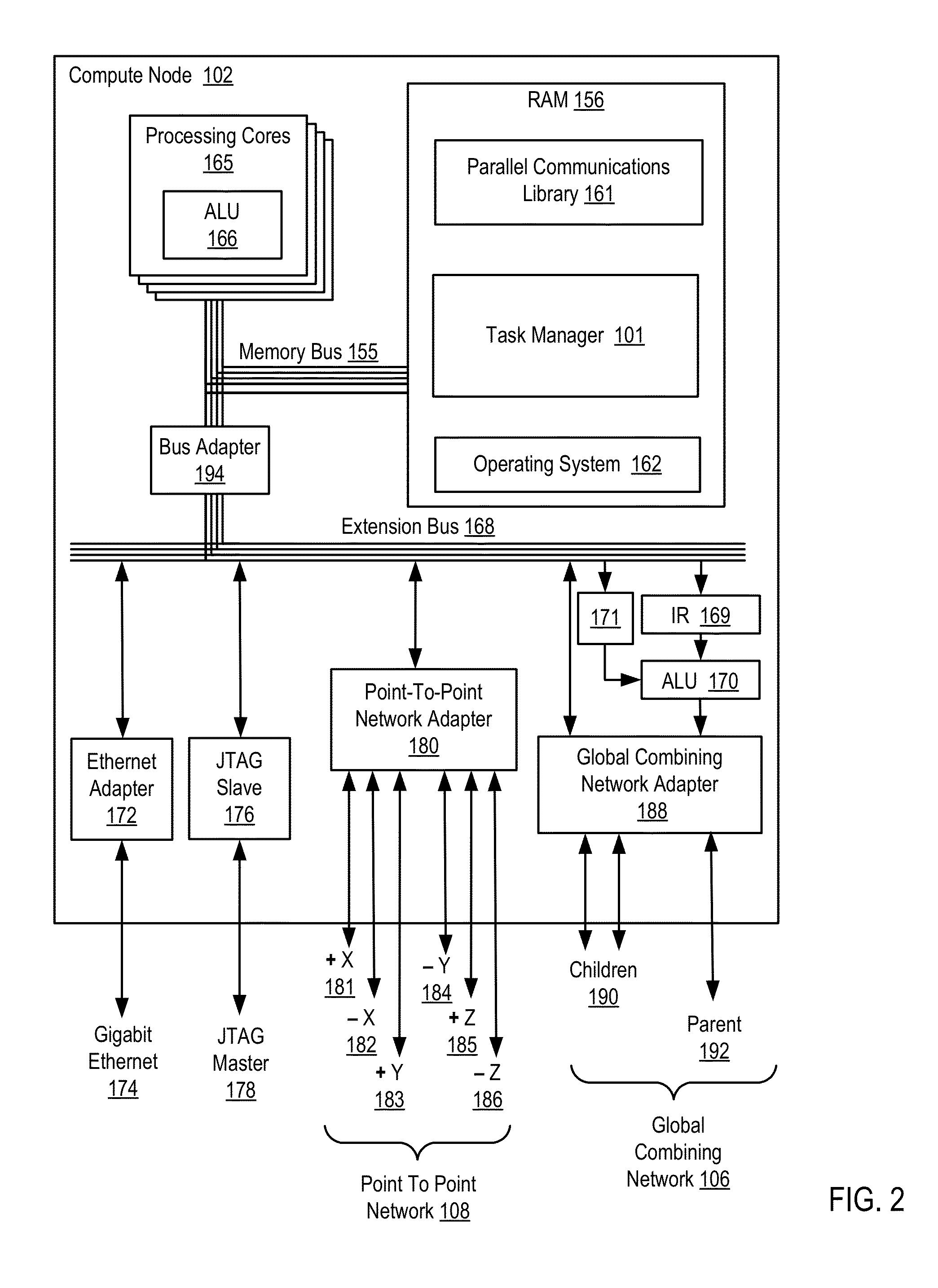

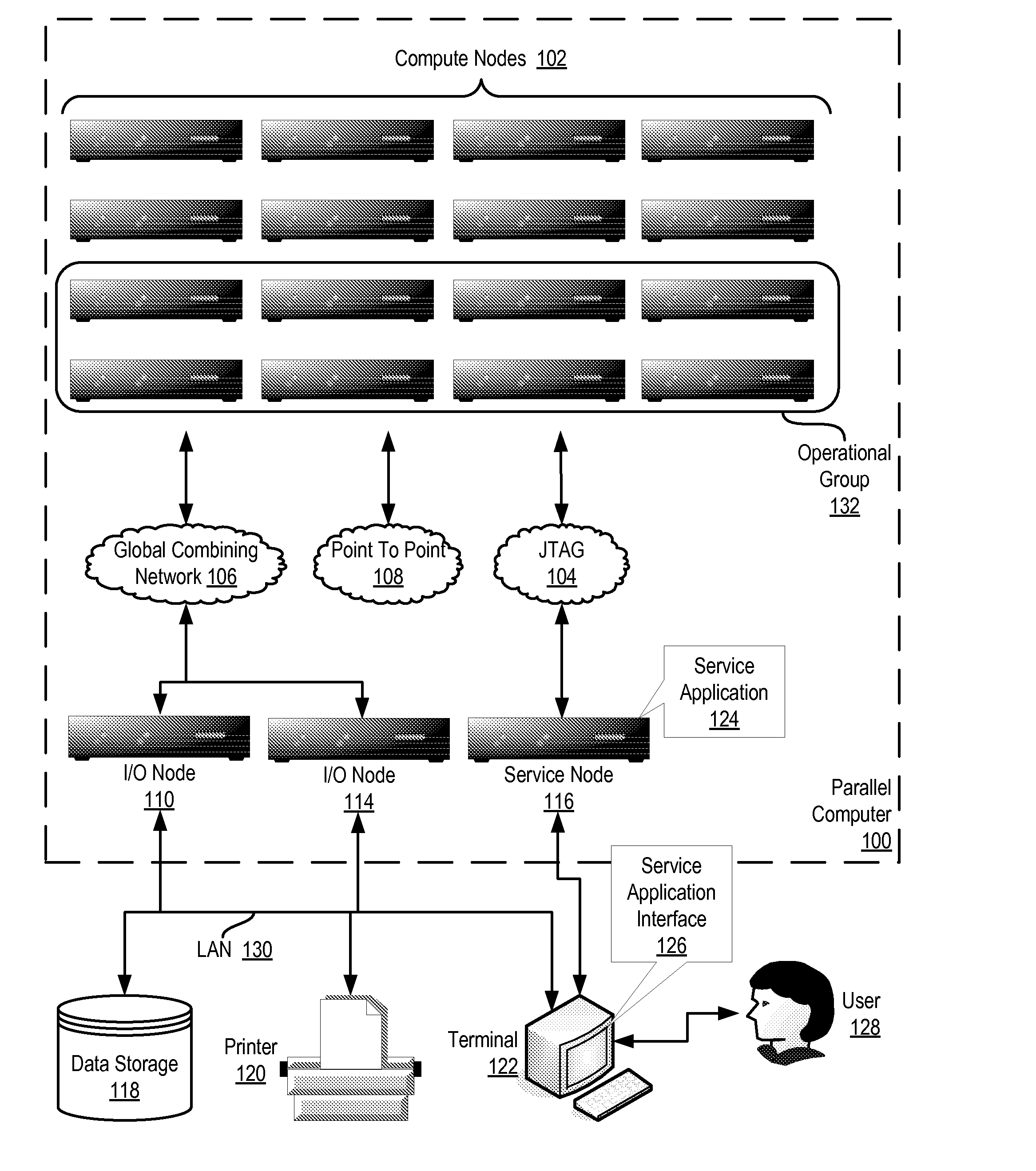

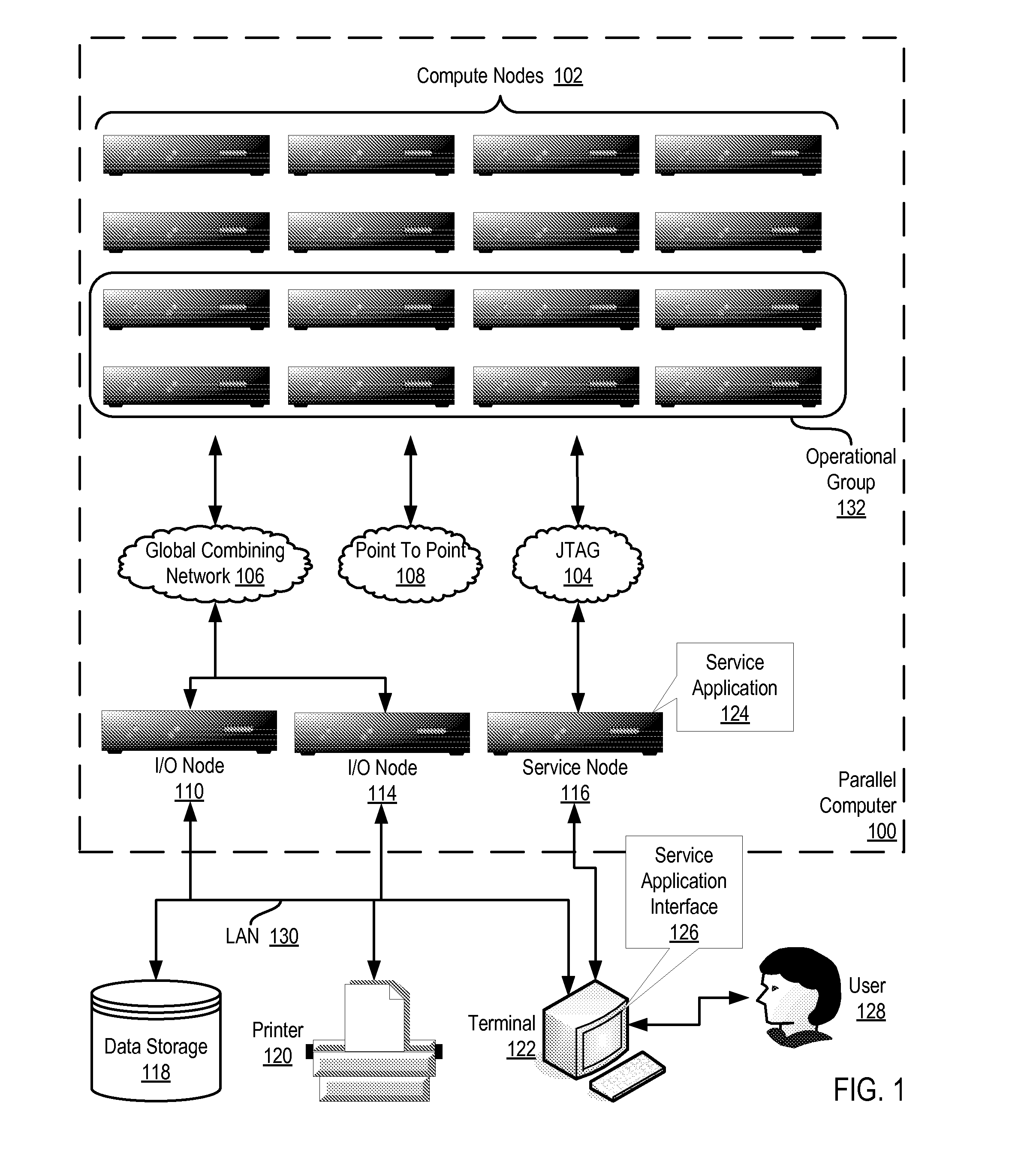

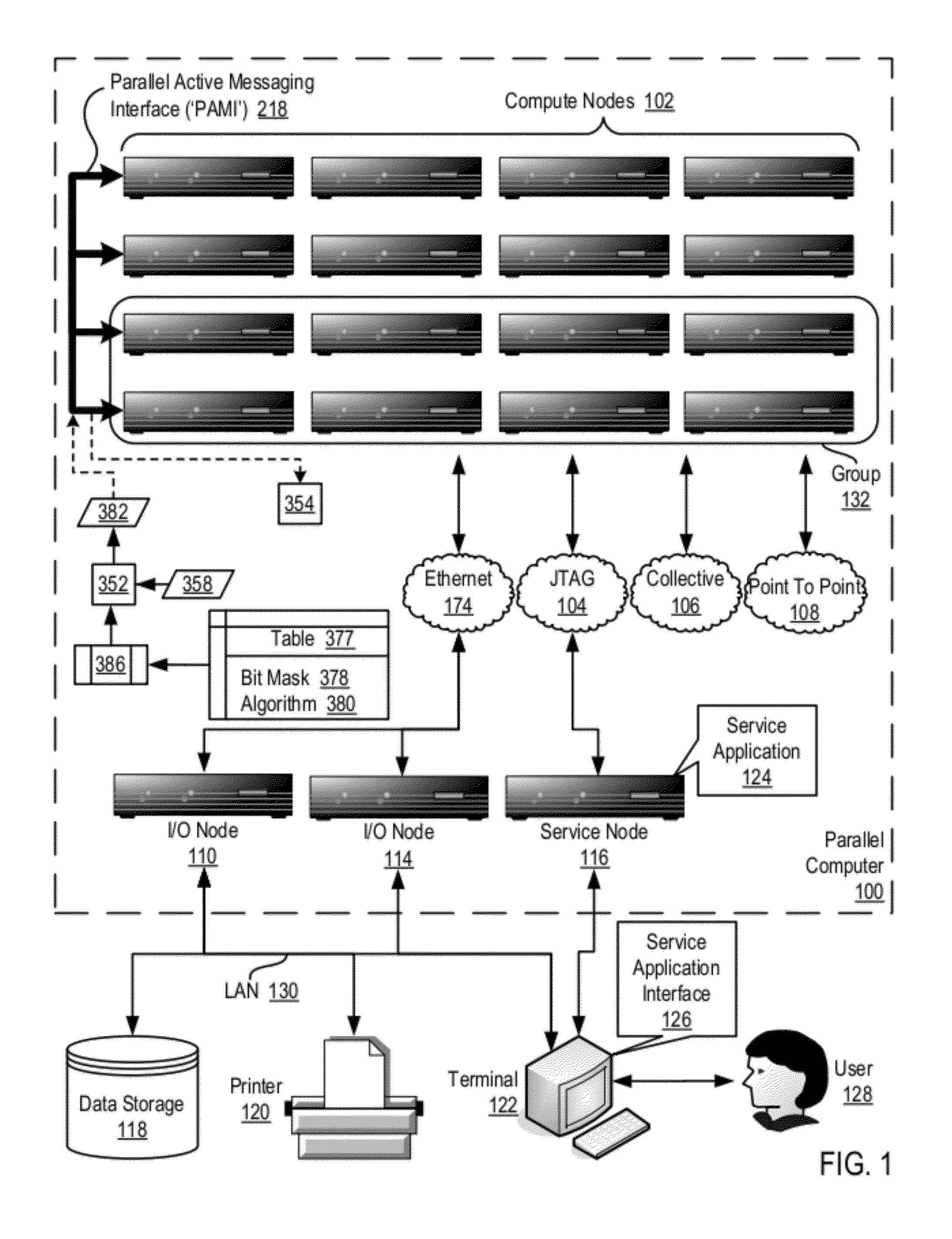

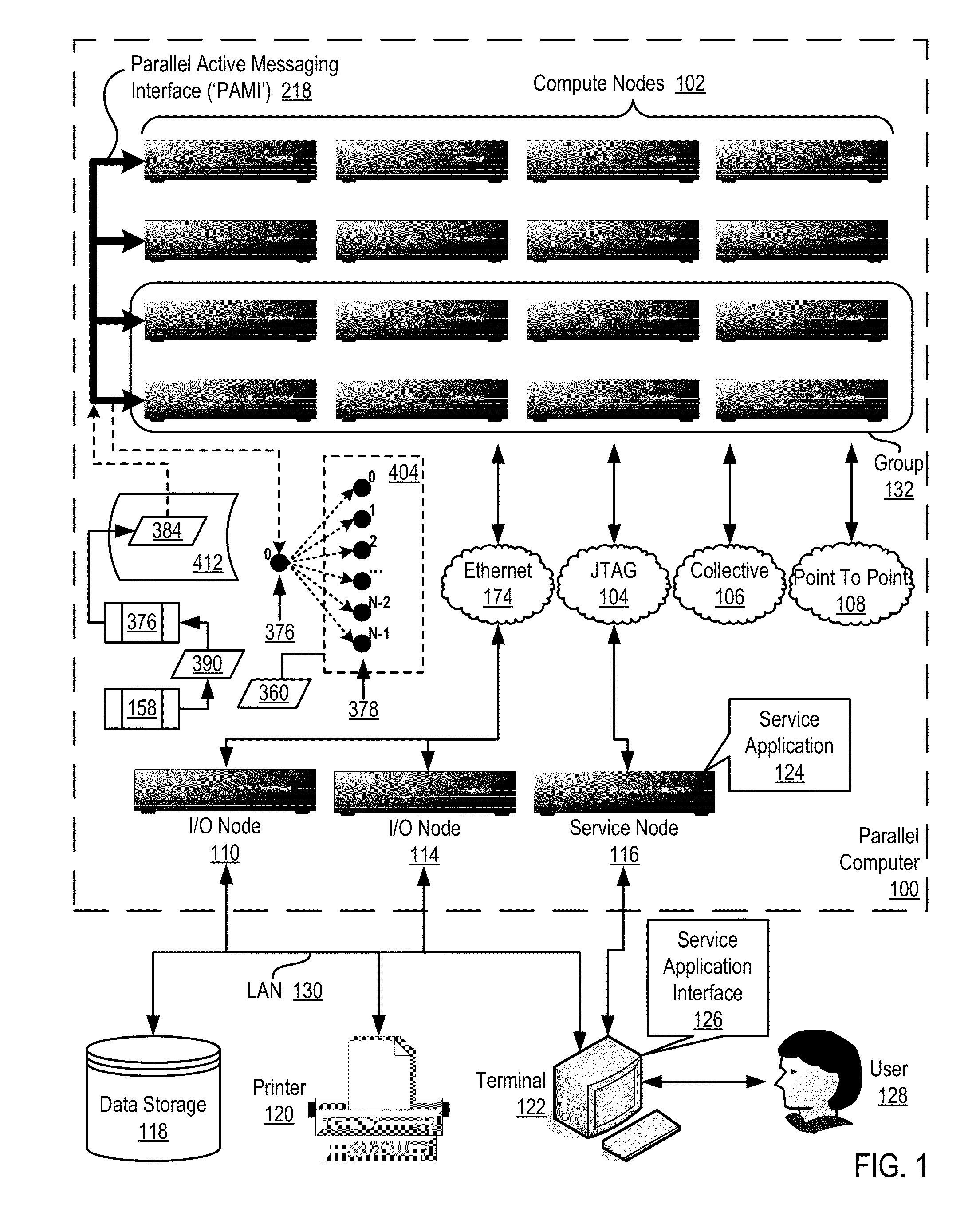

Dispatching Packets on a Global Combining Network of a Parallel Computer

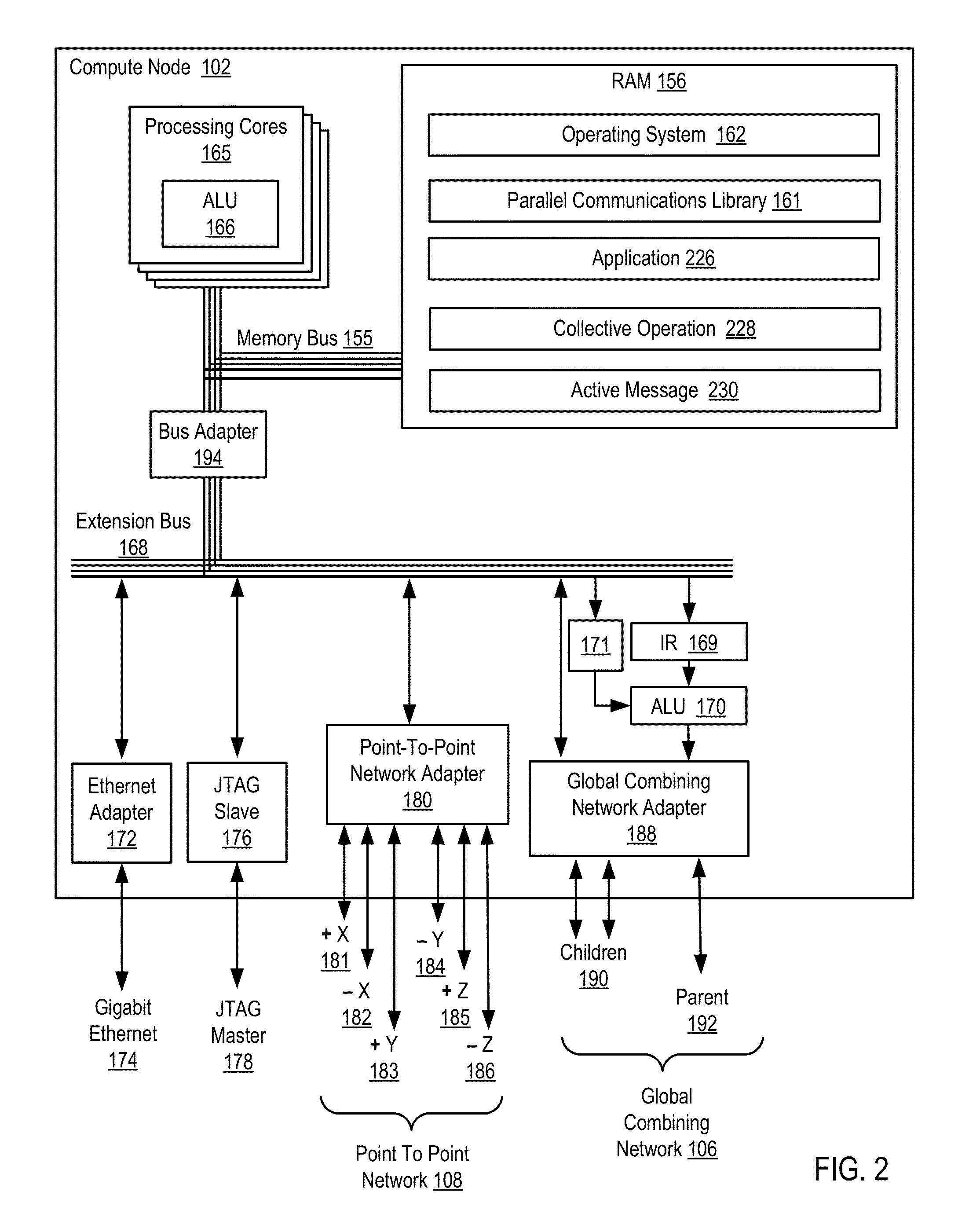

Methods, apparatus, and products are disclosed for dispatching packets on a global combining network of a parallel computer comprising a plurality of nodes connected for data communications using the network capable of performing collective operations and point to point operations that include: receiving, by an origin system messaging module on an origin node from an origin application messaging module on the origin node, a storage identifier and an operation identifier, the storage identifier specifying storage containing an application message for transmission to a target node, and the operation identifier specifying a message passing operation; packetizing, by the origin system messaging module, the application message into network packets for transmission to the target node, each network packet specifying the operation identifier and an operation type for the message passing operation specified by the operation identifier; and transmitting, by the origin system messaging module, the network packets to the target node.

Owner:IBM CORP
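
The packetizing step in the abstract above, where every network packet carries the operation identifier and an operation type, might look roughly like the following. The `Packet` fields, the `max_payload` parameter, and the type strings are assumptions made for illustration and do not come from the patent.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Packet:
    operation_id: int      # identifies the message passing operation
    operation_type: str    # e.g. "collective" or "point_to_point" (assumed labels)
    payload: bytes


def packetize(message: bytes, operation_id: int, operation_type: str,
              max_payload: int = 4) -> List[Packet]:
    """Split a message into packets that each repeat the operation metadata."""
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    return [
        Packet(operation_id, operation_type, message[i:i + max_payload])
        for i in range(0, len(message), max_payload)
    ]
```

Because each packet repeats the identifier and type, a receiver can dispatch any packet without first seeing the others.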

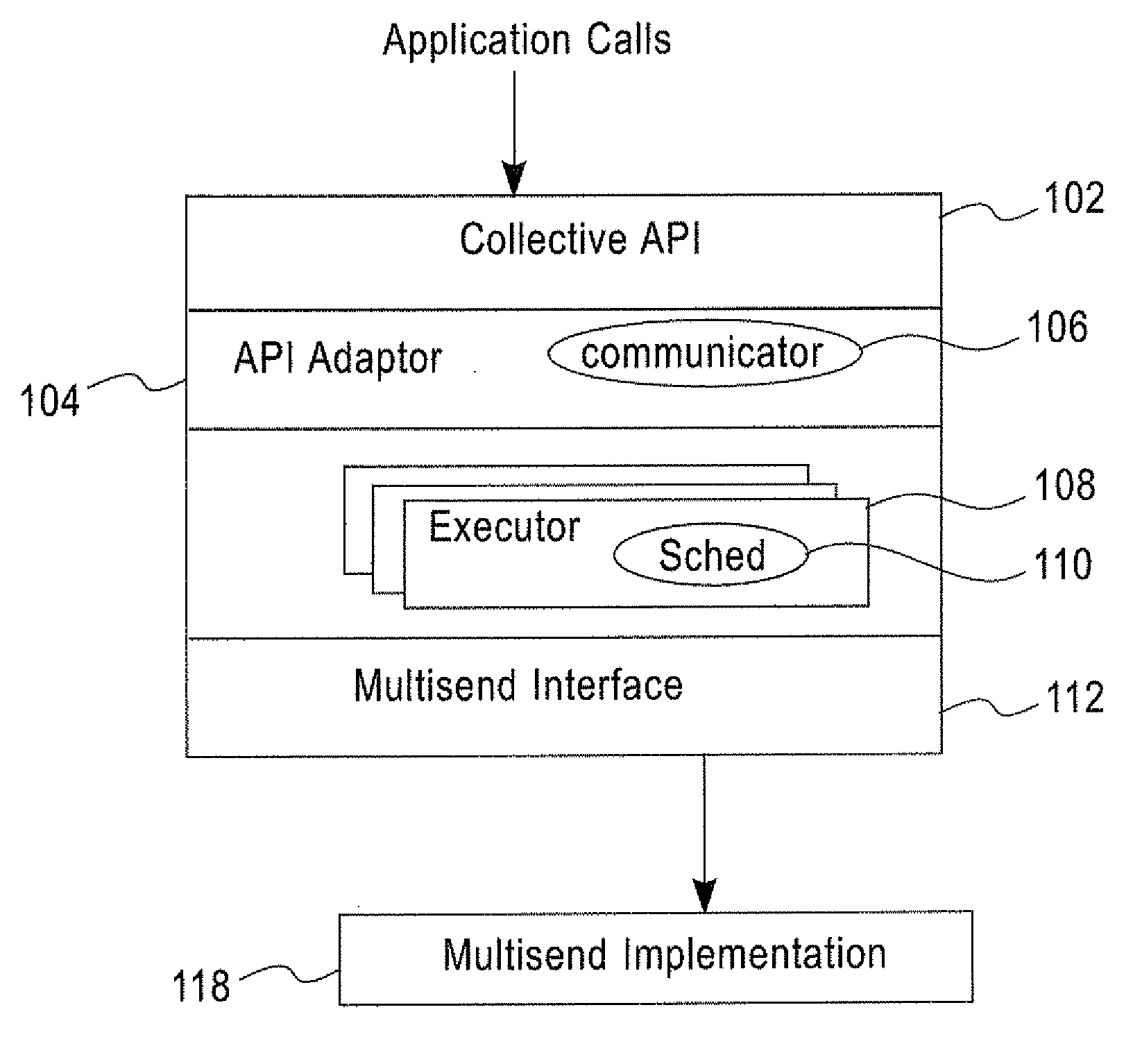

Mechanism to support generic collective communication across a variety of programming models

Inactive | US20090006810A1 | Interprogram communication | General purpose stored program computer | Collective communication | Time schedule

A system and method for supporting collective communications on a plurality of processors that use different parallel programming paradigms, in one aspect, may comprise a schedule defining one or more tasks in a collective operation, an executor that executes the task, a multisend module to perform one or more data transfer functions associated with the tasks, and a connection manager that controls one or more connections and identifies an available connection. The multisend module uses the available connection in performing the one or more data transfer functions. A plurality of processors that use different parallel programming paradigms can use a common implementation of the schedule module, the executor module, the connection manager and the multisend module via a language adaptor specific to a parallel programming paradigm implemented on a processor.

Owner:IBM CORP

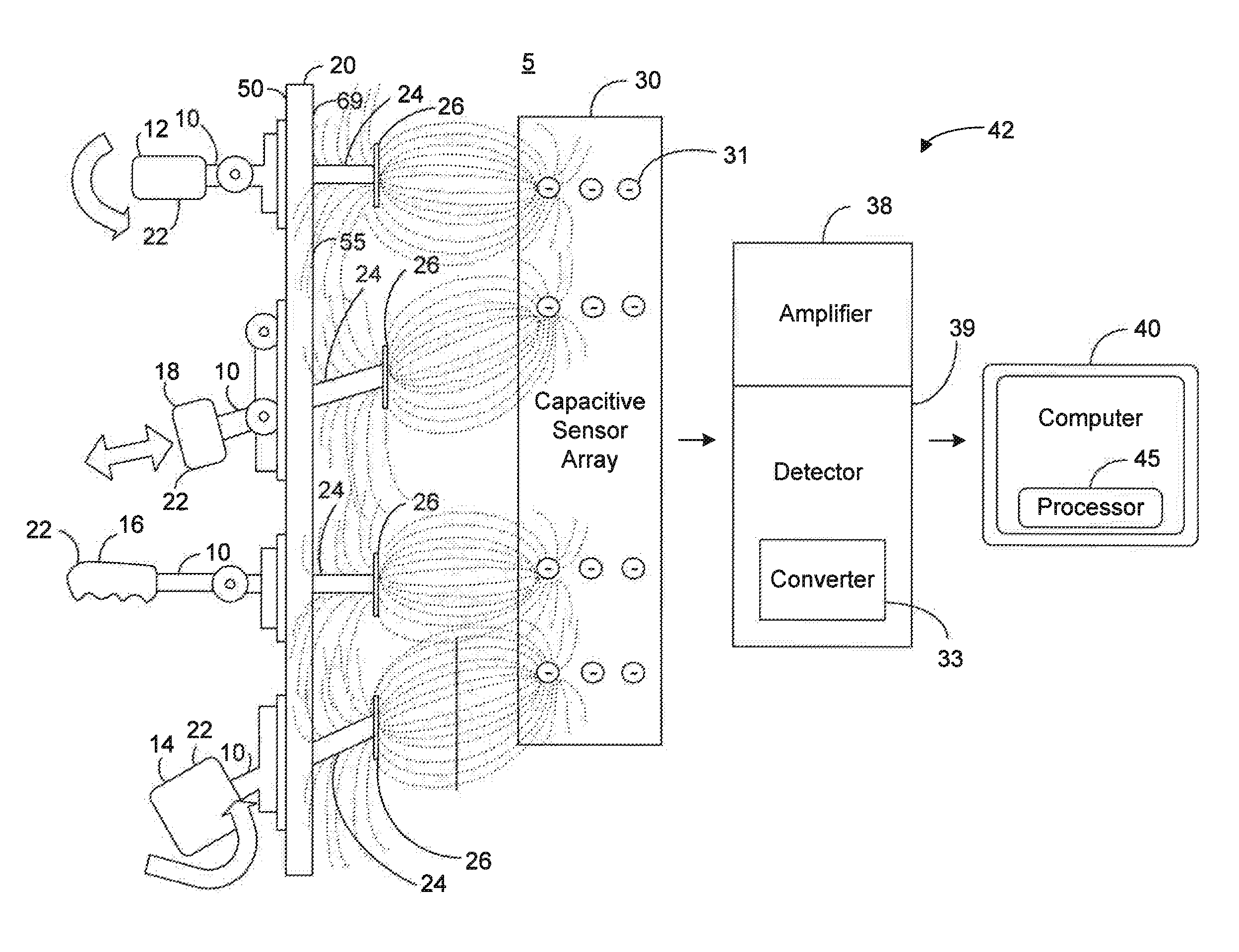

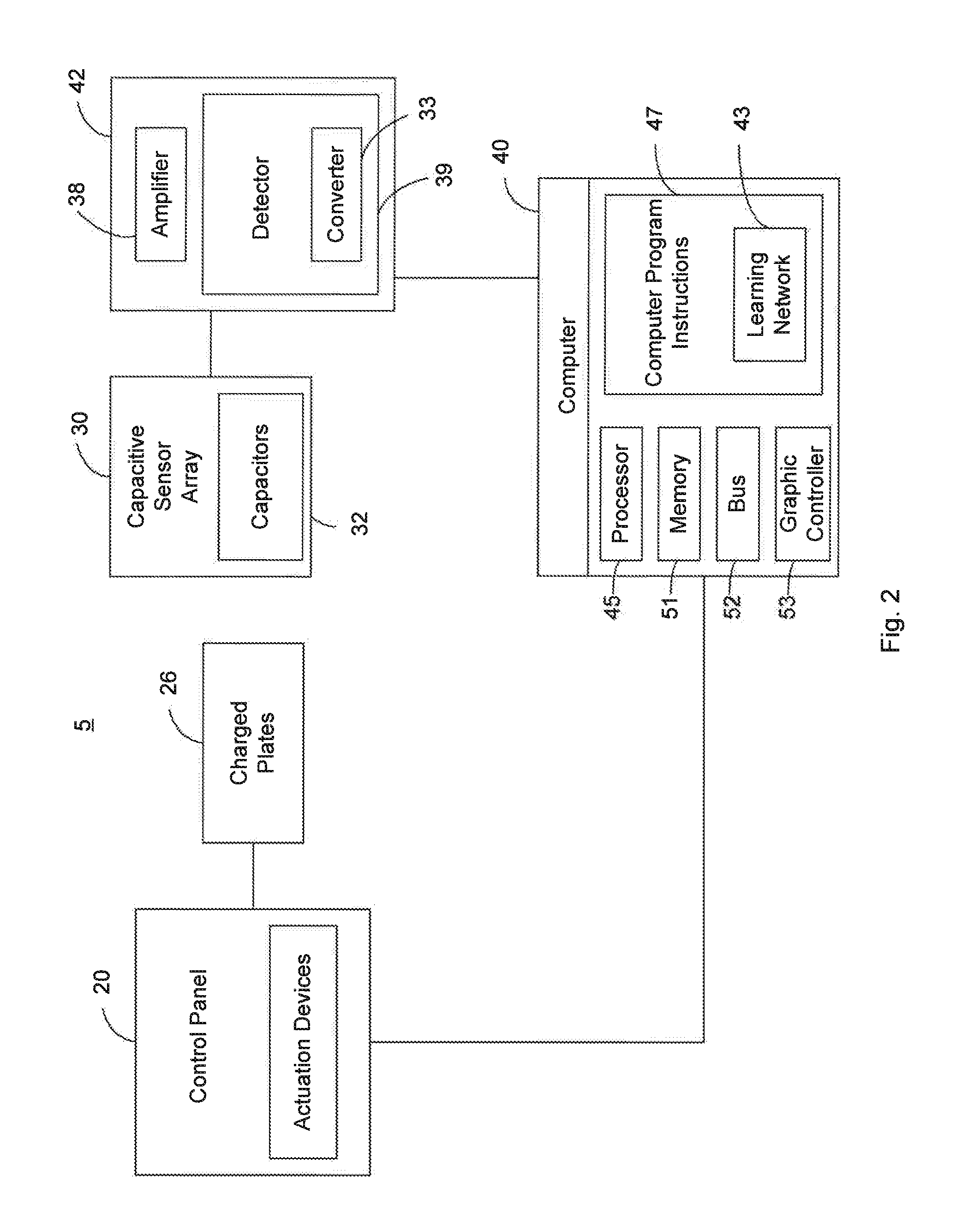

System, method, and computer program product to provide wireless sensing based on an aggregate magnetic field reading

Active | US9329020B1 | Using electrical means | Electrical/magnetic solid deformation measurement | Sensor array | Engineering

A system including a plurality of actuation devices with each actuation device configured to have a magnetized part which moves to a designated position representative of a designated manipulation, when at least one actuation device is manipulated, a magnetic sensor array configured to measure a composite magnetic field produced by the magnetized parts of the plurality of actuation devices, an acquisition device configured to acquire magnetic field data from the sensor array indicative of the measured composite magnetic field, a converter configured to convert the acquired composite magnetic field data into analog values, and a processor configured to evaluate the analog values to determine which of the at least one actuation device is manipulated, how the manipulation reflects collective operation of the plurality of actuation devices, and/or to provide a response indicative of the manipulation. A method and a computer program product are also disclosed.

Owner:LOCKHEED MARTIN CORP

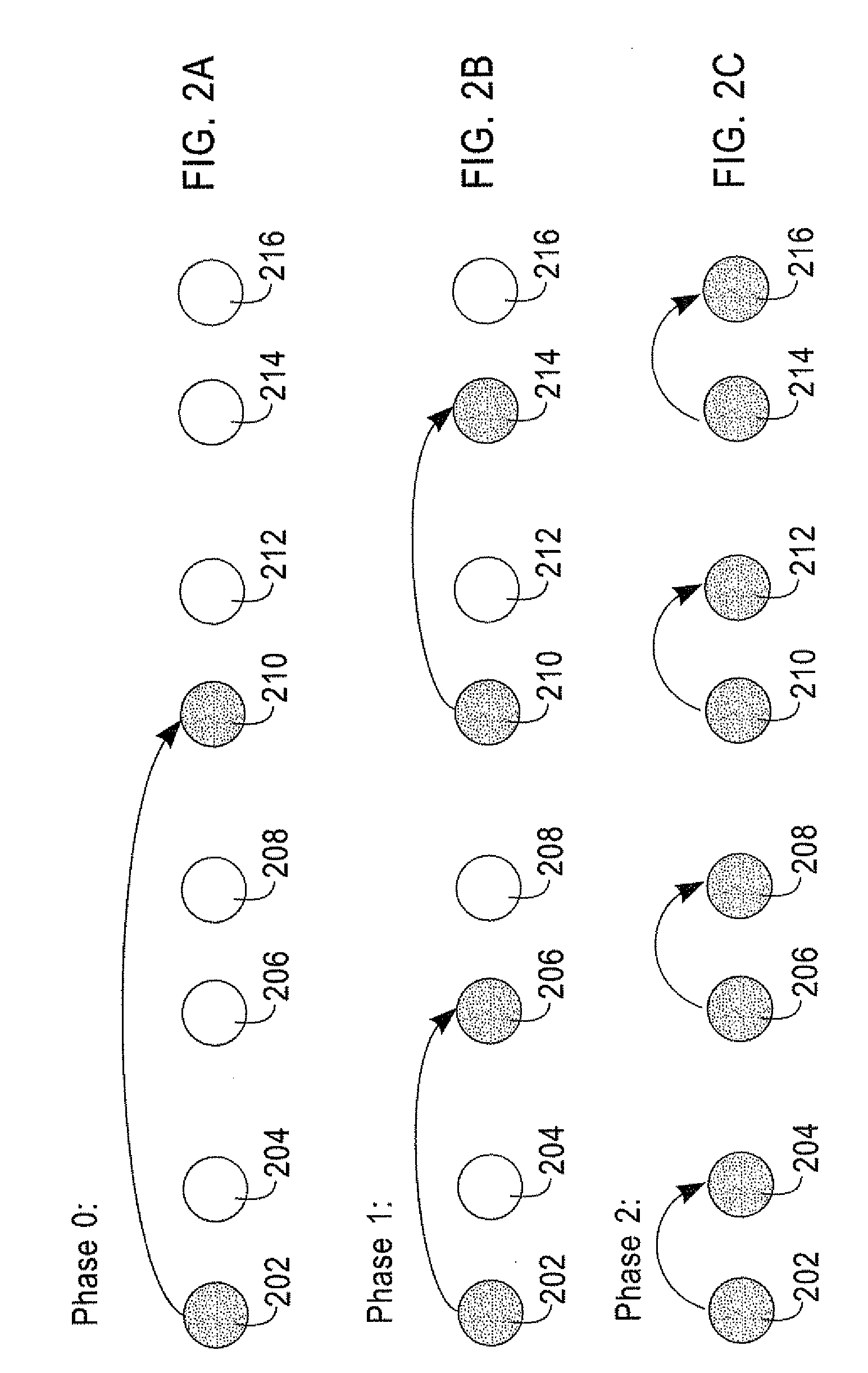

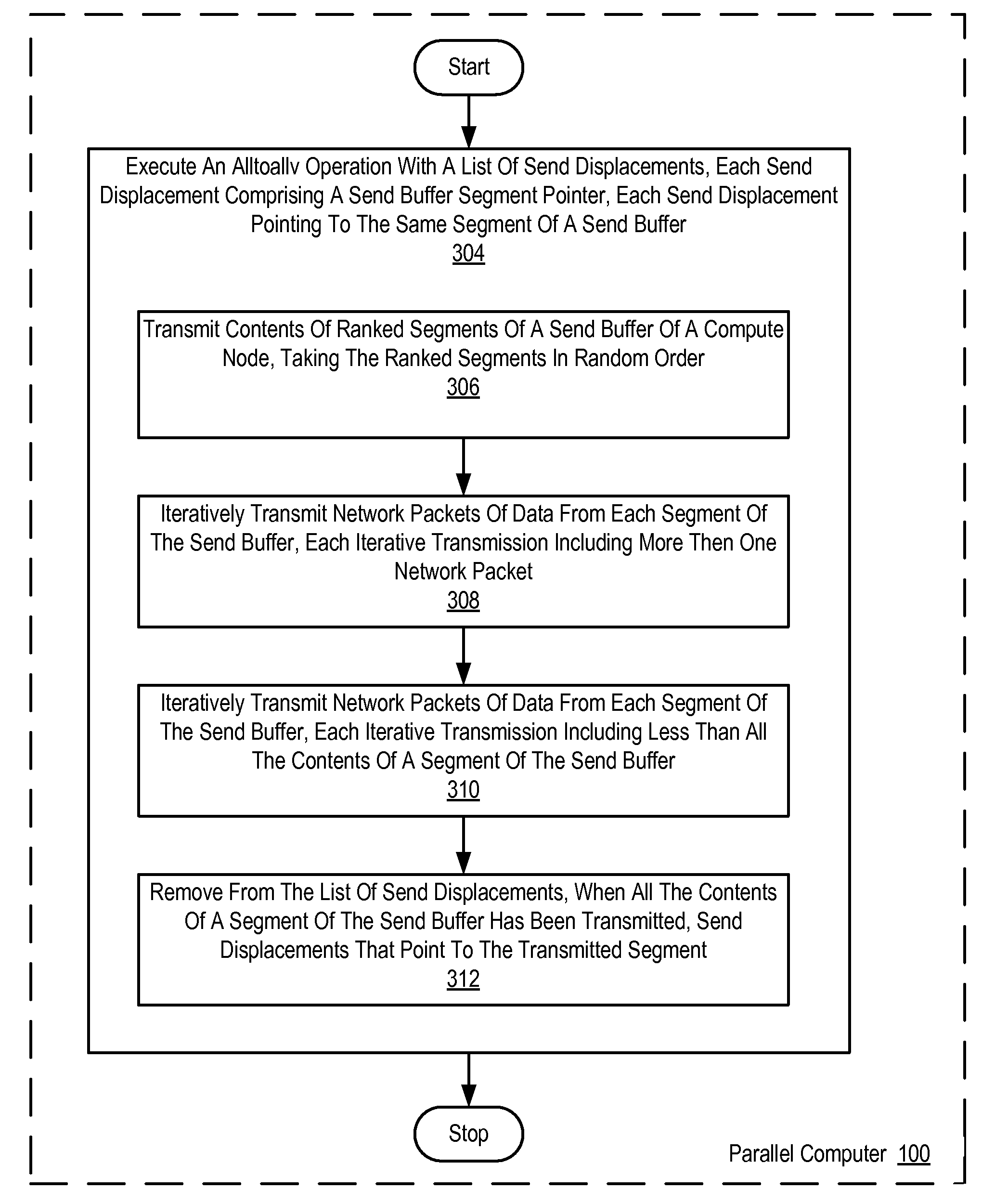

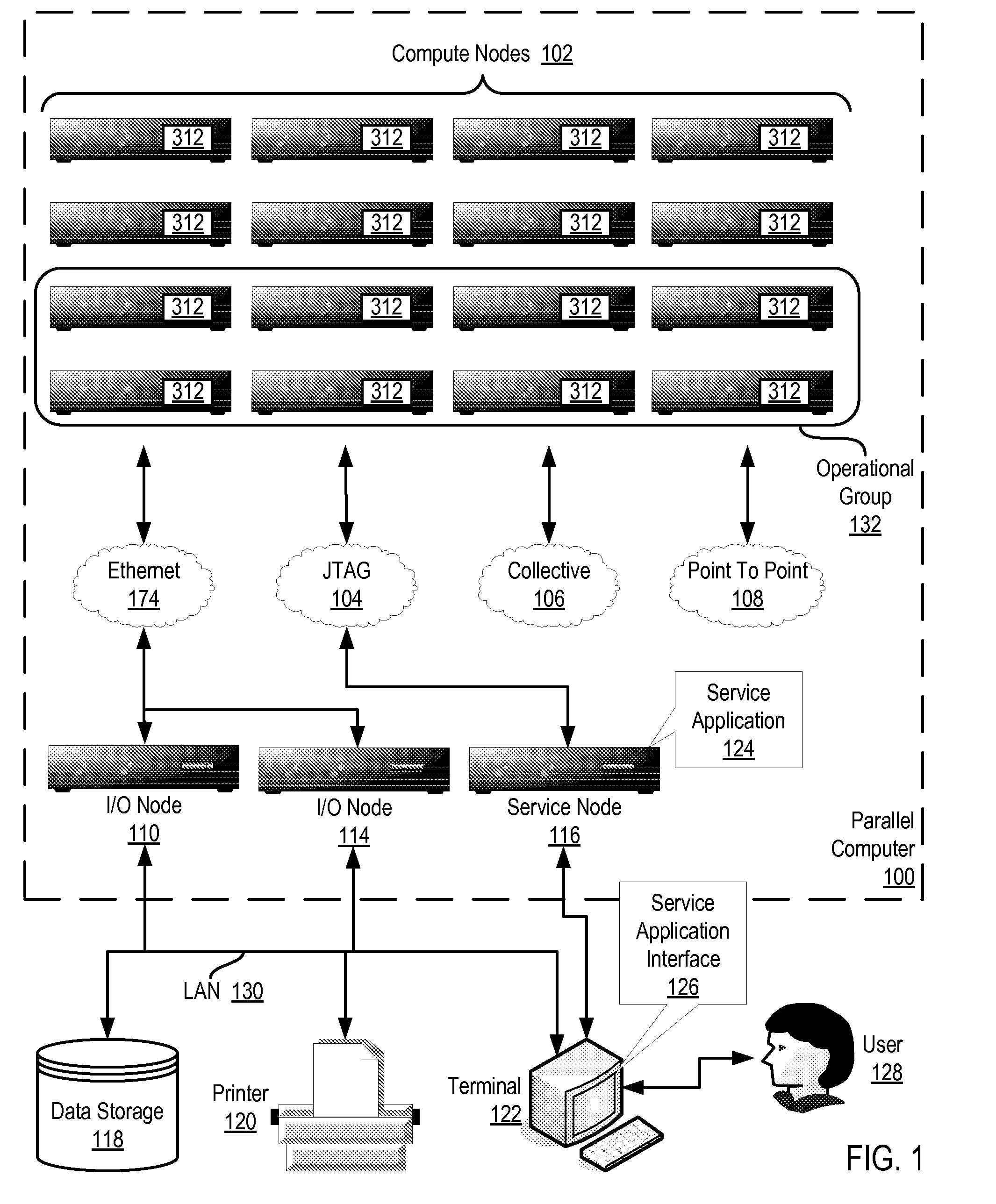

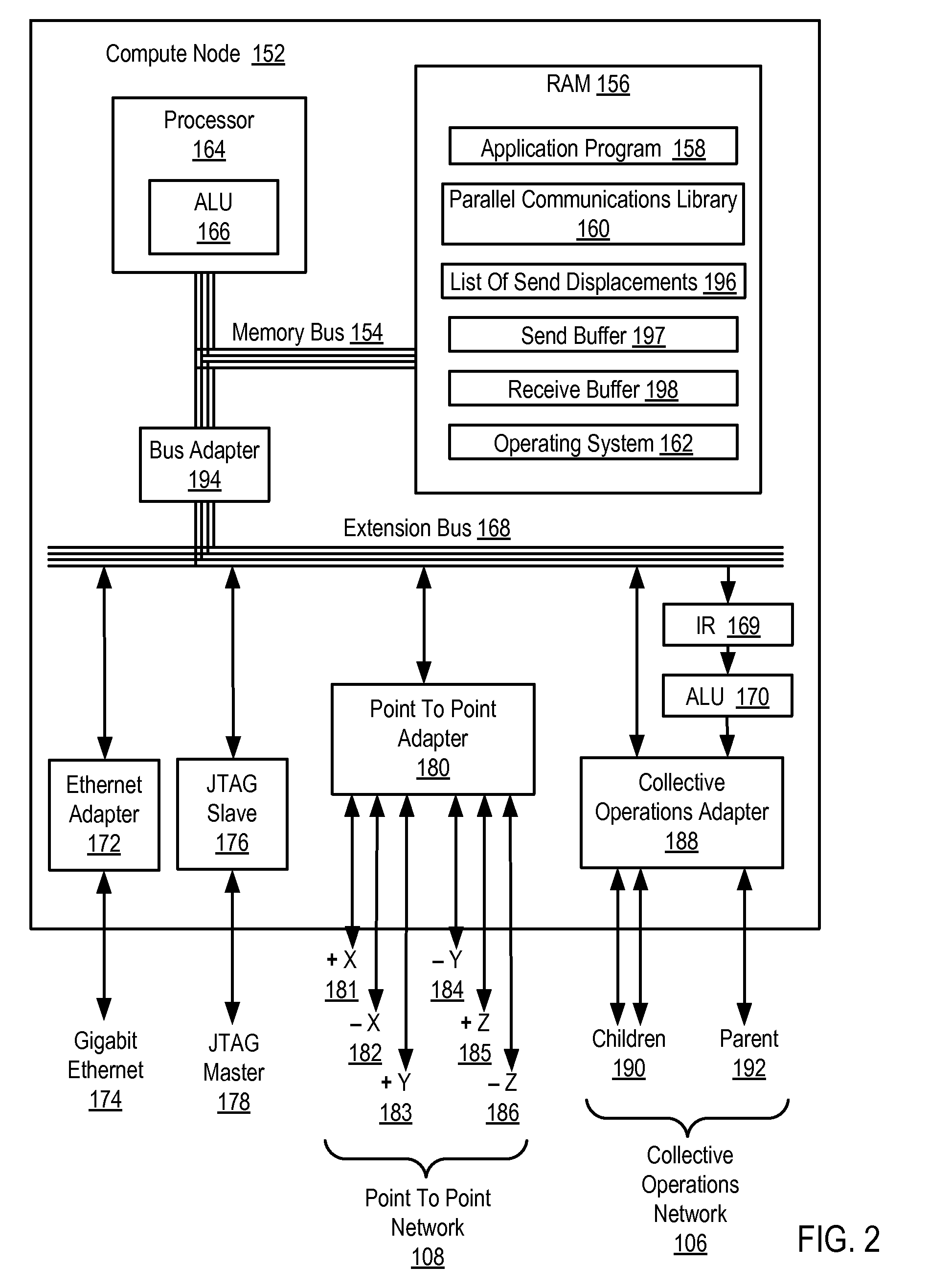

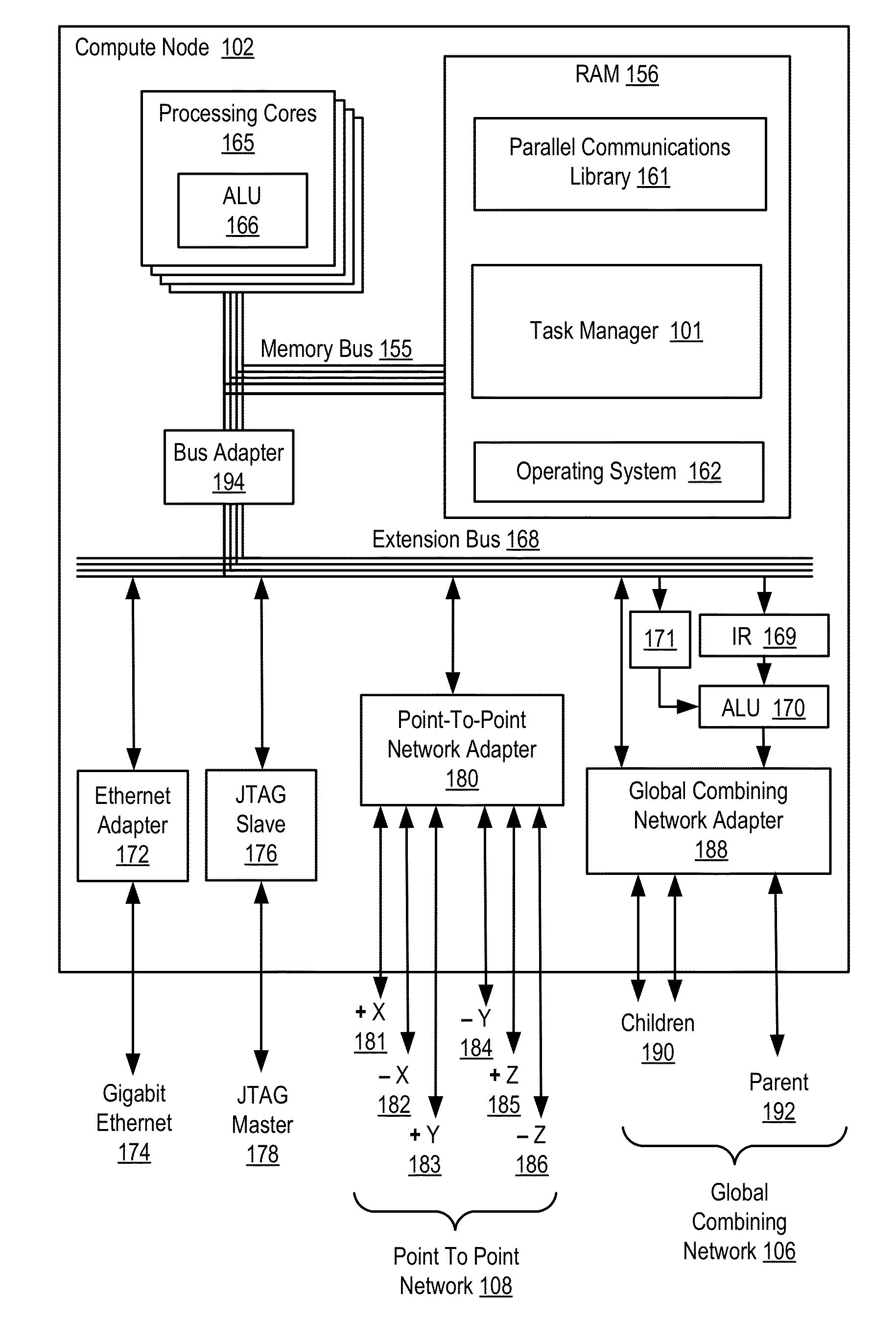

Executing an allgather operation with an alltoallv operation in a parallel computer

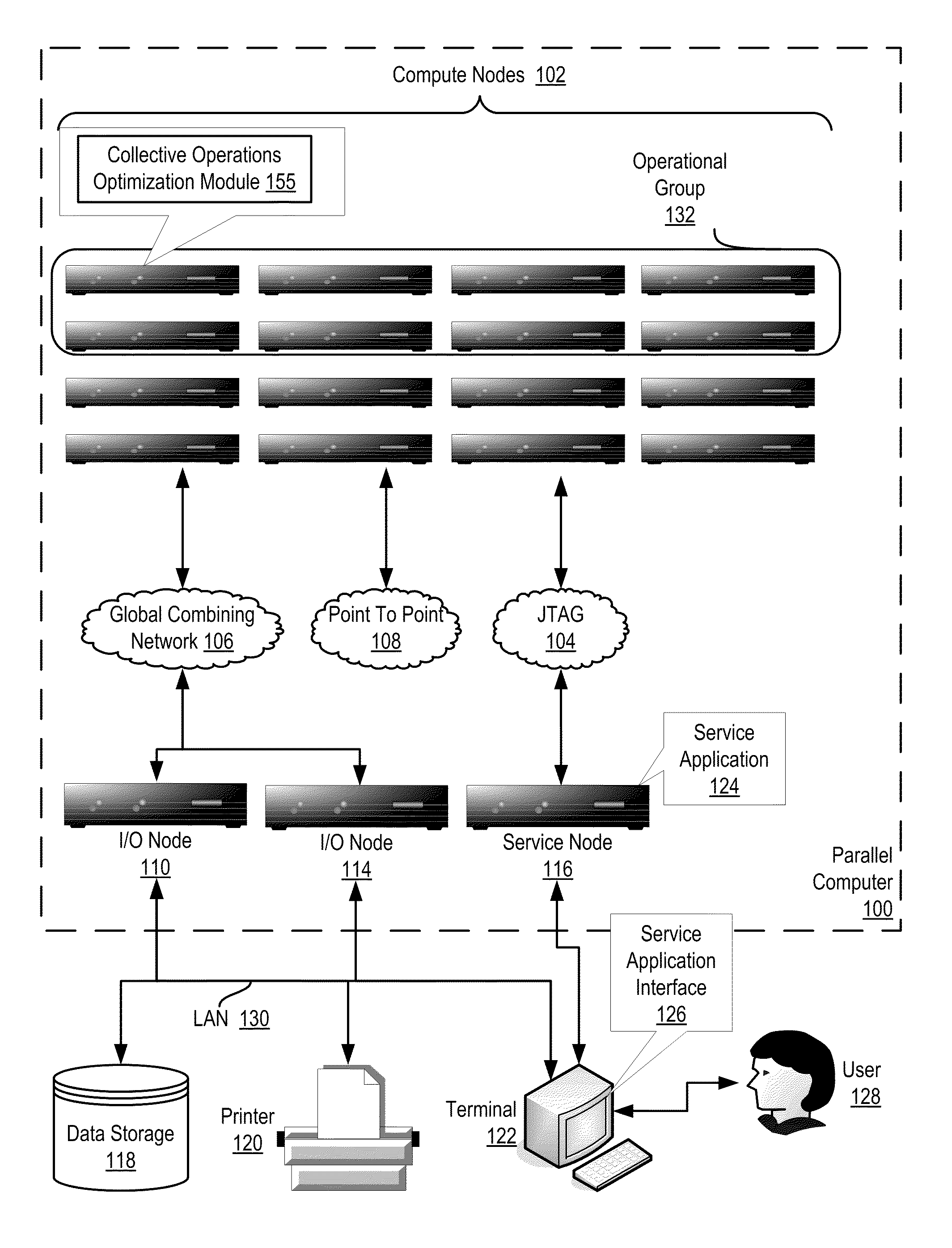

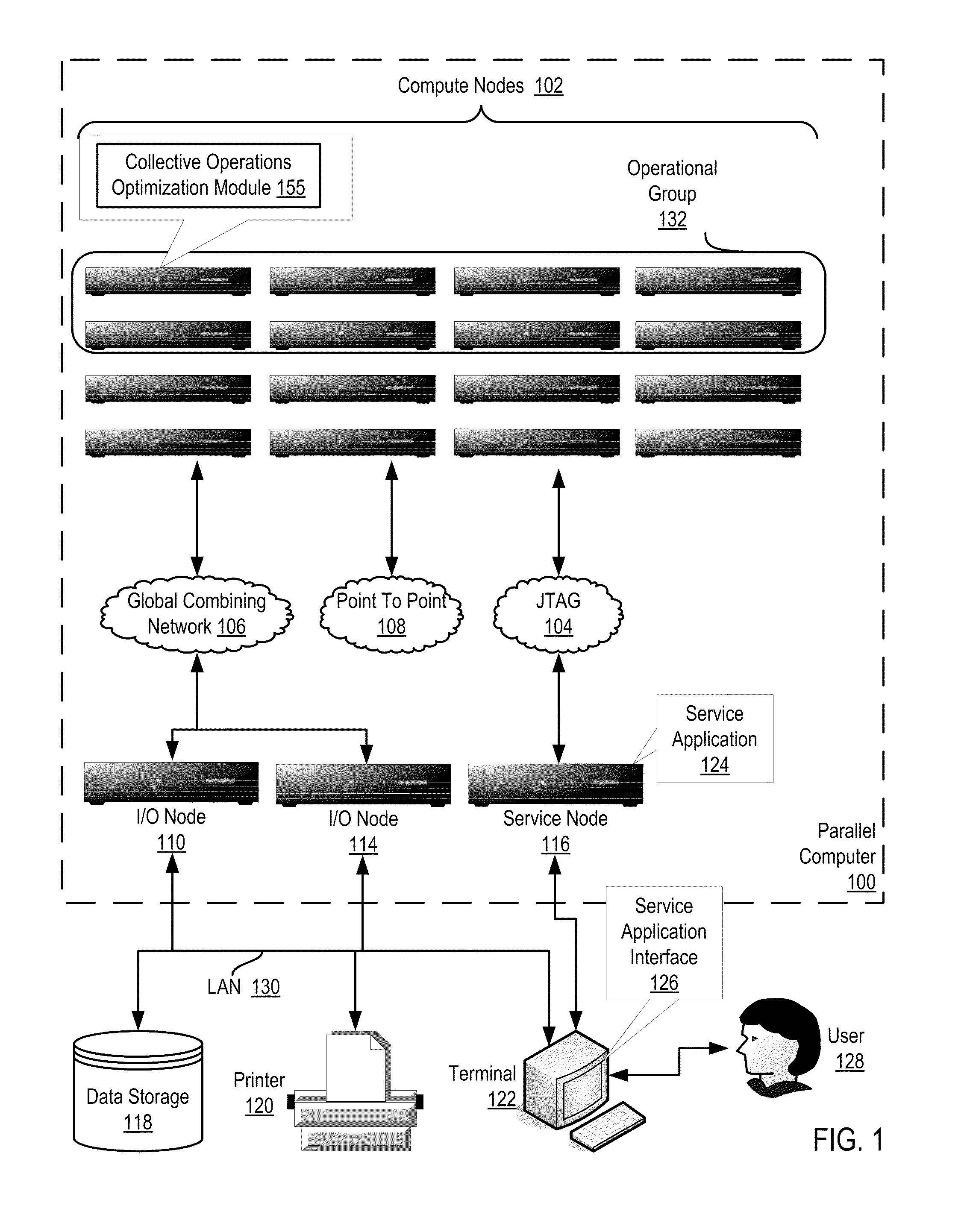

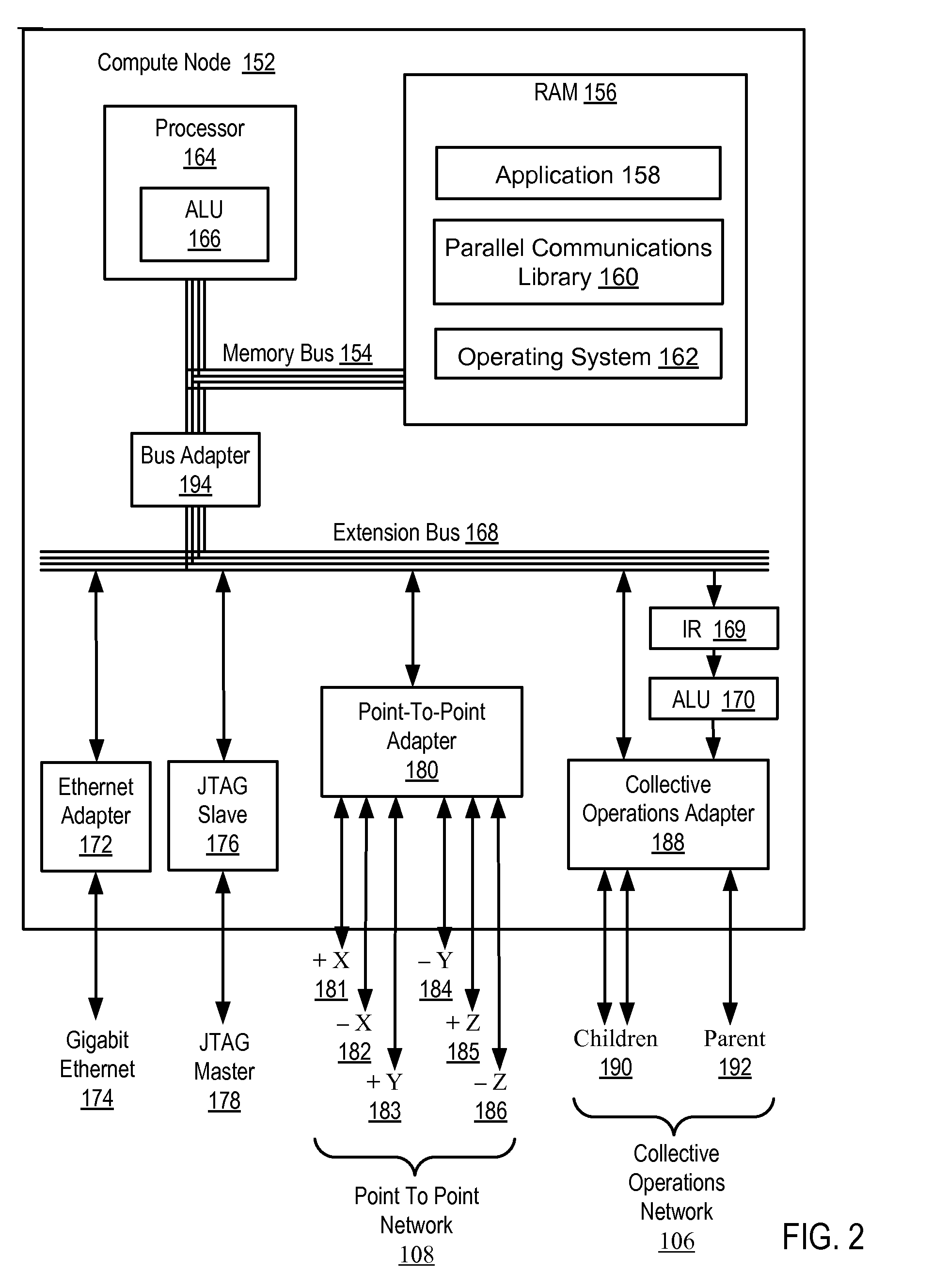

Executing an allgather operation on a parallel computer, including executing an alltoallv operation with a list of send displacements, where each send displacement is a send buffer segment pointer, each send displacement points to the same segment of a send buffer, the parallel computer includes a plurality of compute nodes, each compute node includes a send buffer, the compute nodes are organized into at least one operational group of compute nodes for collective operations, each compute node in the operational group is assigned a unique rank, and each send buffer is segmented according to the ranks.

Owner:IBM CORP
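
The idea in the allgather abstract above, expressing allgather as an alltoallv in which every send displacement of a rank points at the same segment, can be sketched in a single process. This is a simplification: ranks are list indices, each send buffer holds only that rank's contribution (so the shared displacement is 0), and the helper names are illustrative rather than MPI calls.

```python
from typing import List


def alltoallv(send_bufs: List[List[int]],
              send_counts: List[List[int]],
              send_displs: List[List[int]]) -> List[List[int]]:
    """Rank src sends send_bufs[src][displ:displ + count] to each rank dest."""
    n = len(send_bufs)
    recv_bufs = []
    for dest in range(n):
        buf: List[int] = []
        for src in range(n):   # receive segments in source-rank order
            start = send_displs[src][dest]
            buf.extend(send_bufs[src][start:start + send_counts[src][dest]])
        recv_bufs.append(buf)
    return recv_bufs


def allgather(contributions: List[List[int]]) -> List[List[int]]:
    """Allgather built on alltoallv: every displacement of a rank is identical."""
    n = len(contributions)
    send_bufs = [list(c) for c in contributions]
    send_counts = [[len(c)] * n for c in contributions]
    send_displs = [[0] * n for _ in contributions]   # same segment for all targets
    return alltoallv(send_bufs, send_counts, send_displs)
```

After the call, every rank holds the concatenation of all contributions in rank order, which is the defining property of allgather.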

Performing Collective Operations In A Distributed Processing System

Inactive | US20130018947A1 | Multiple digital computer combinations | Program control | Handling system | Network topology

Methods, apparatuses, and computer program products for performing collective operations on a hybrid distributed processing system are provided. The hybrid distributed processing system includes a plurality of compute nodes where each compute node has a plurality of tasks, each task is assigned a unique rank, and each compute node is coupled for data communications by at least one data communications network implementing at least two different networking topologies. At least one of the two networking topologies is a tiered tree topology having a root task and at least two child tasks and the at least two child tasks are peers of one another in the same tier. Embodiments include for each task, sending at least a portion of data corresponding to the task to all child tasks of the task through the tree topology; and sending at least a portion of the data corresponding to the task to all peers of the task at the same tier in the tree topology through the second topology.

Owner:IBM CORP

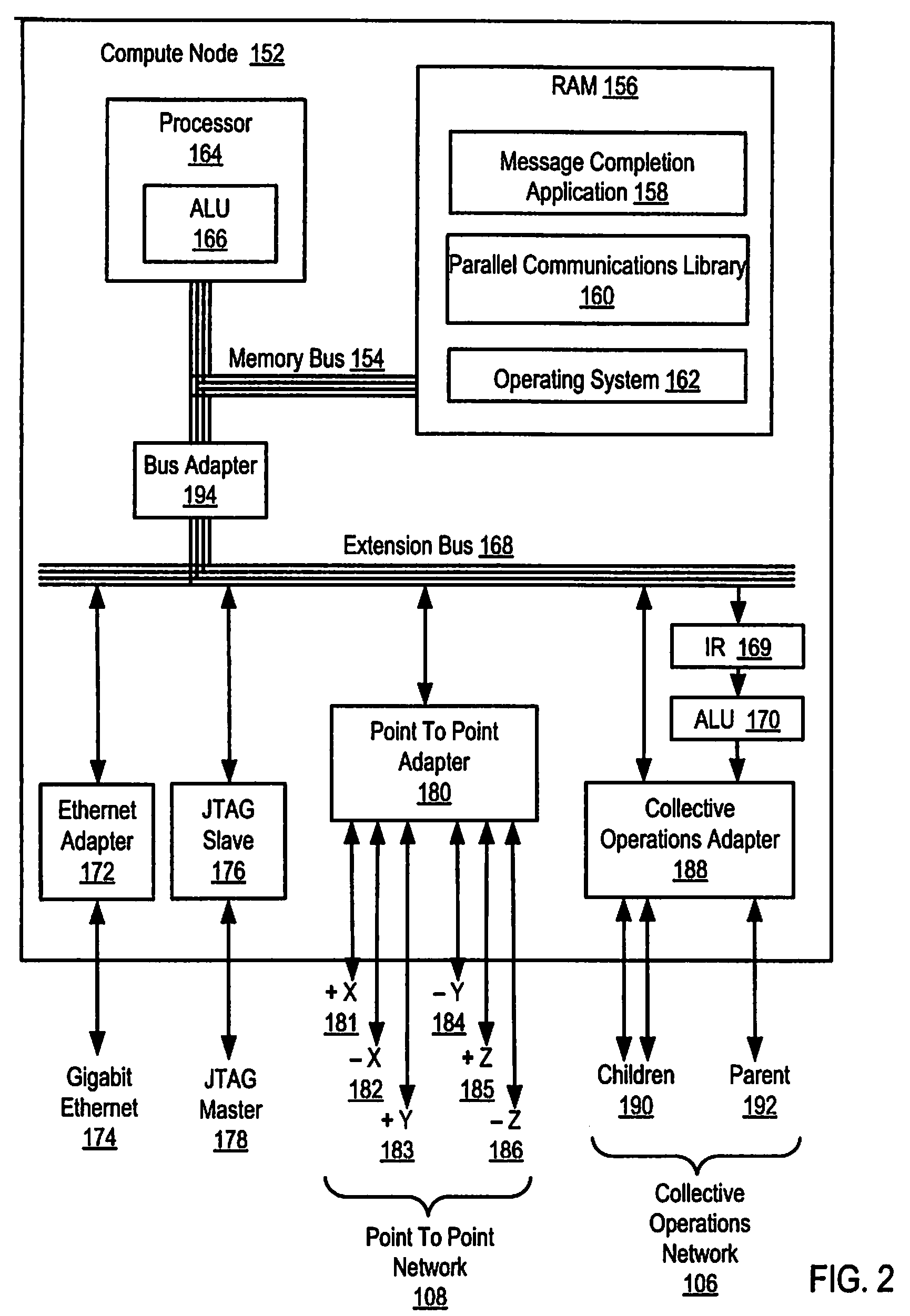

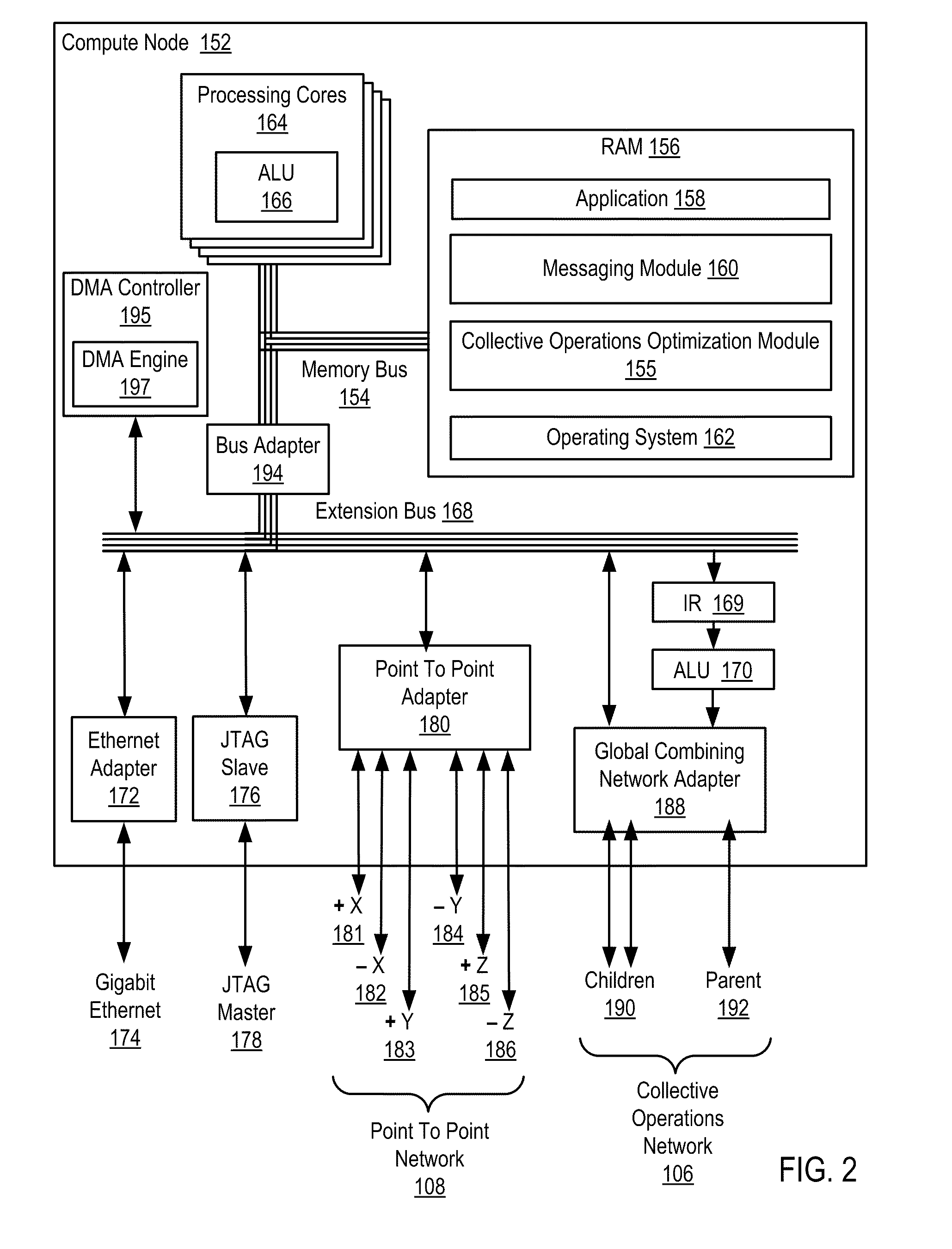

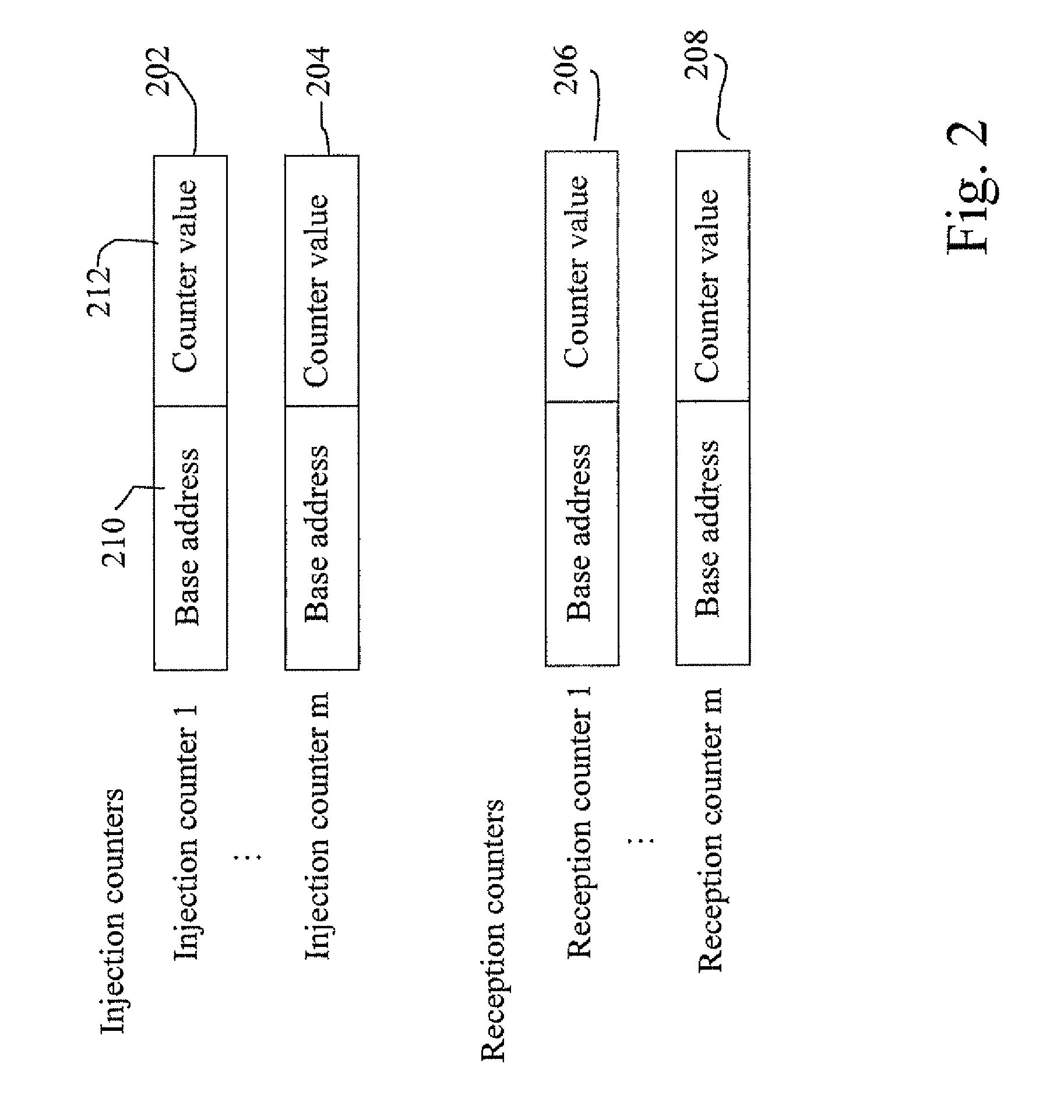

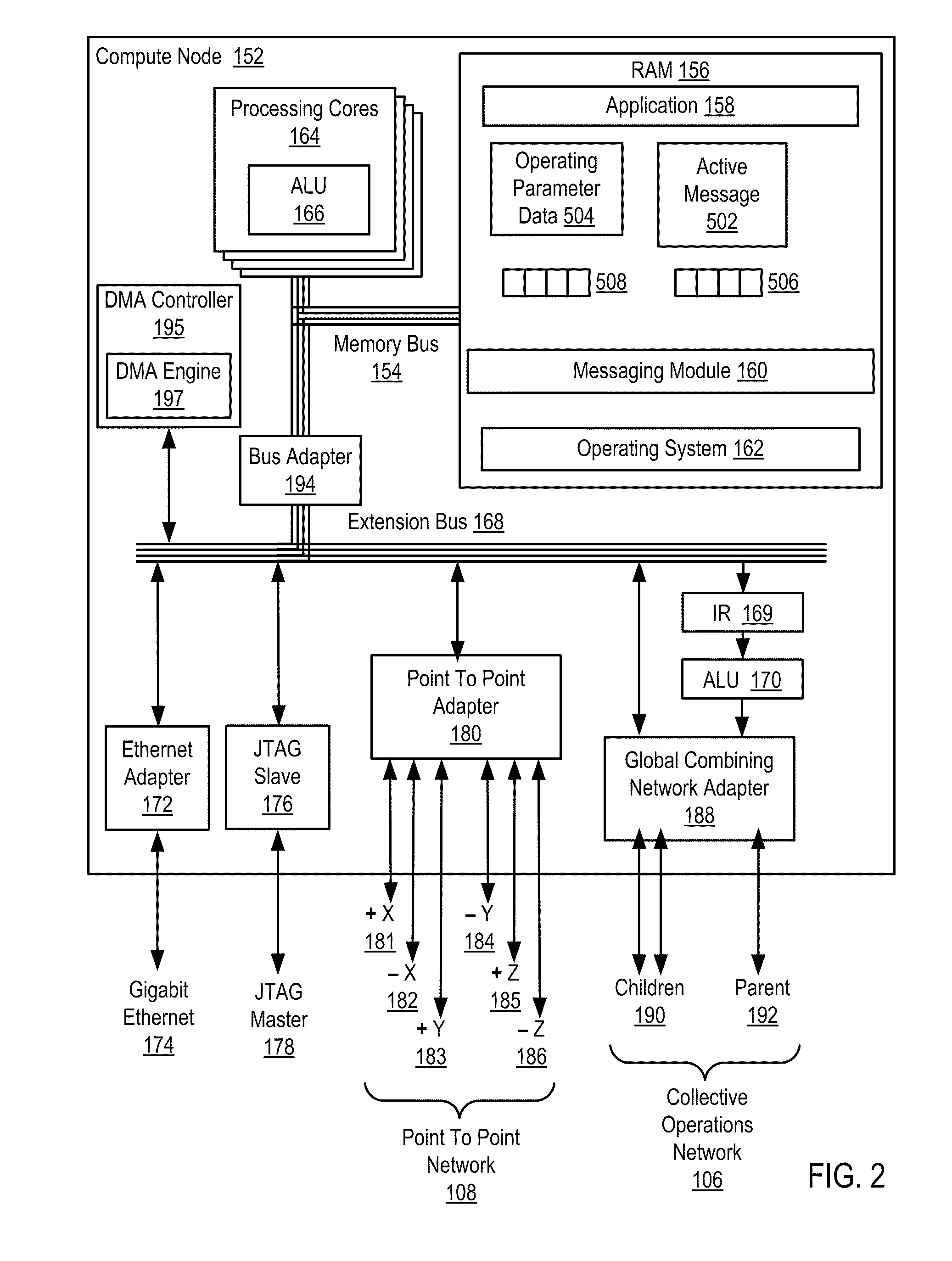

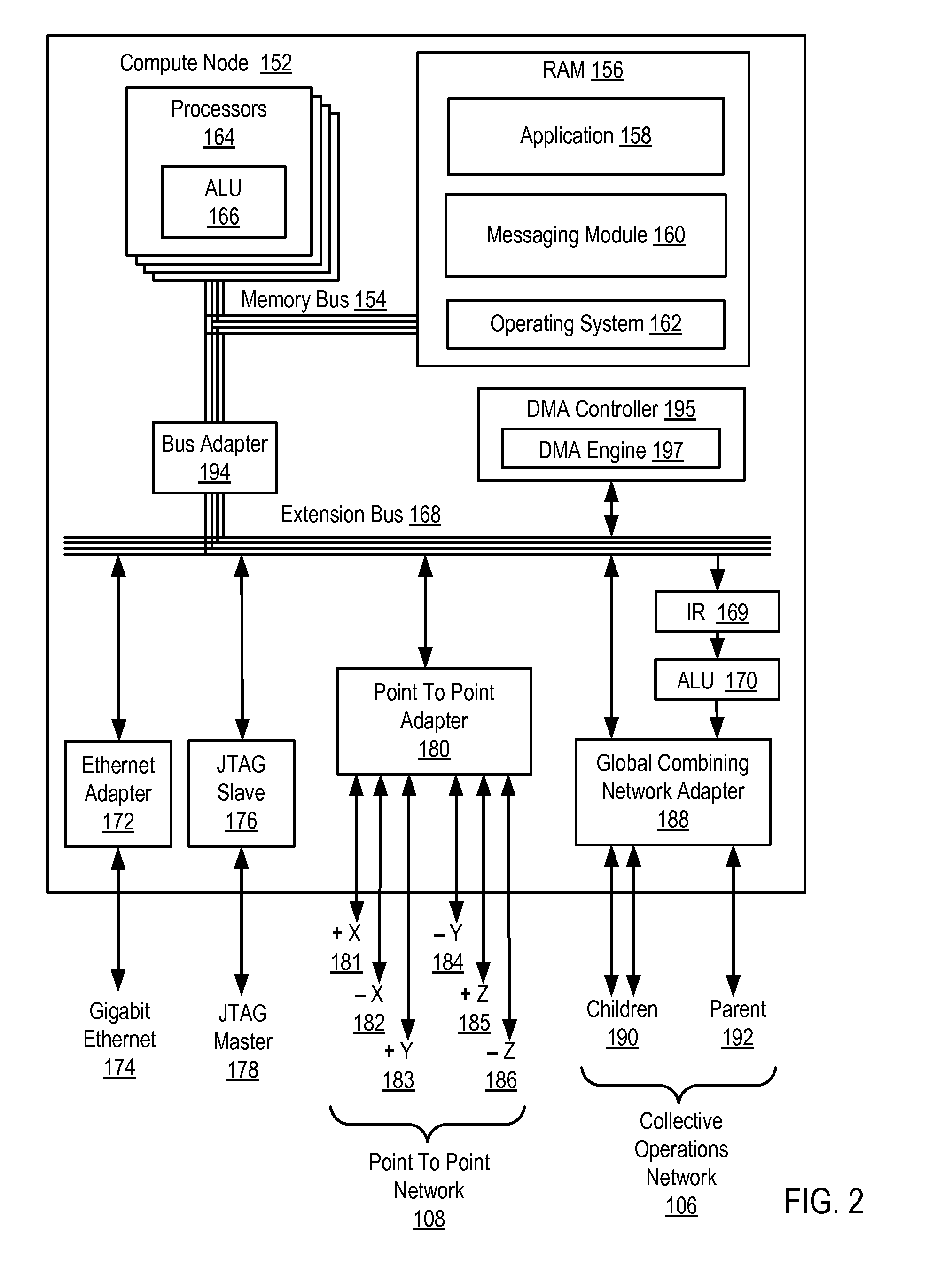

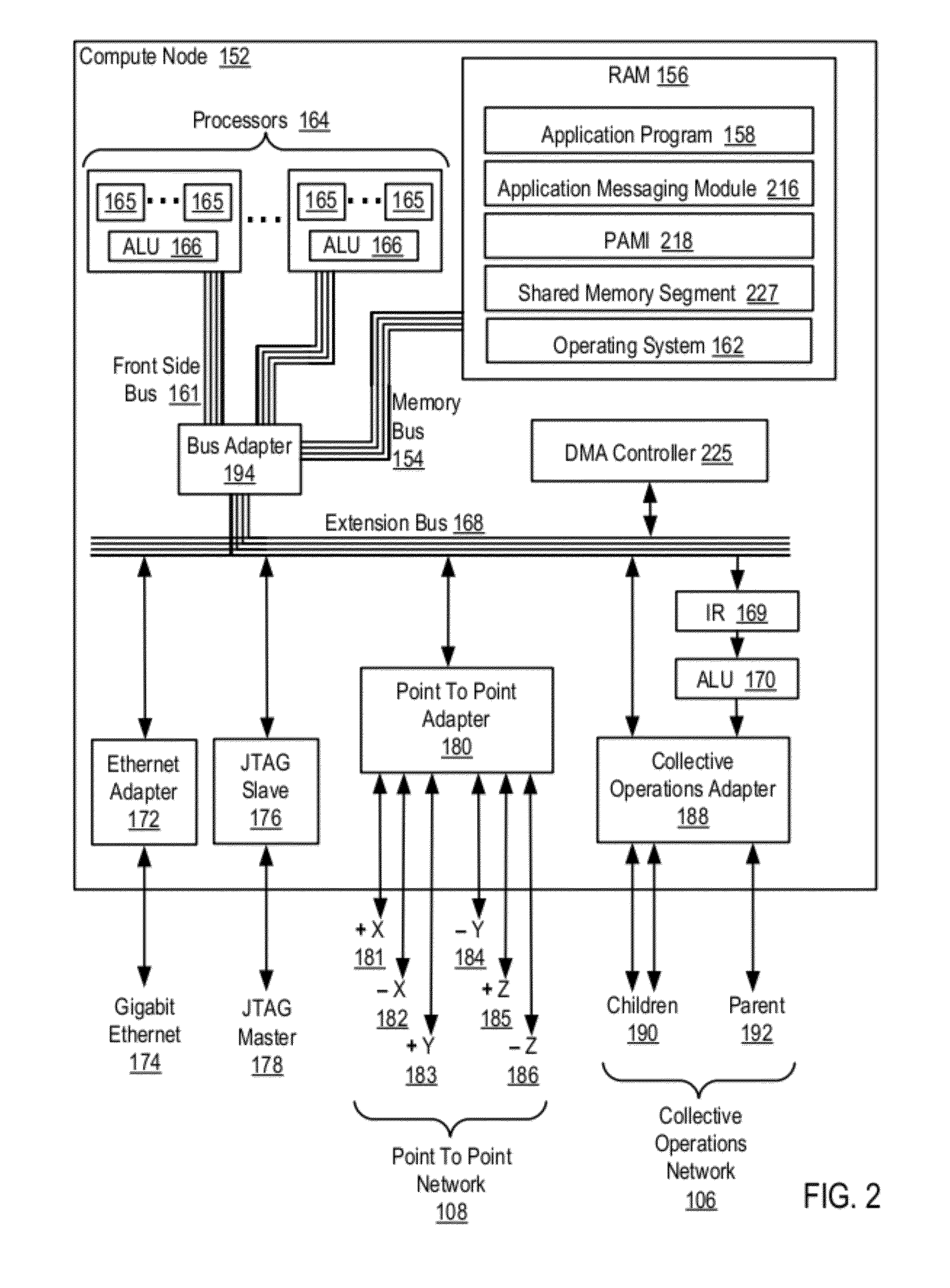

Optimized collectives using a DMA on a parallel computer

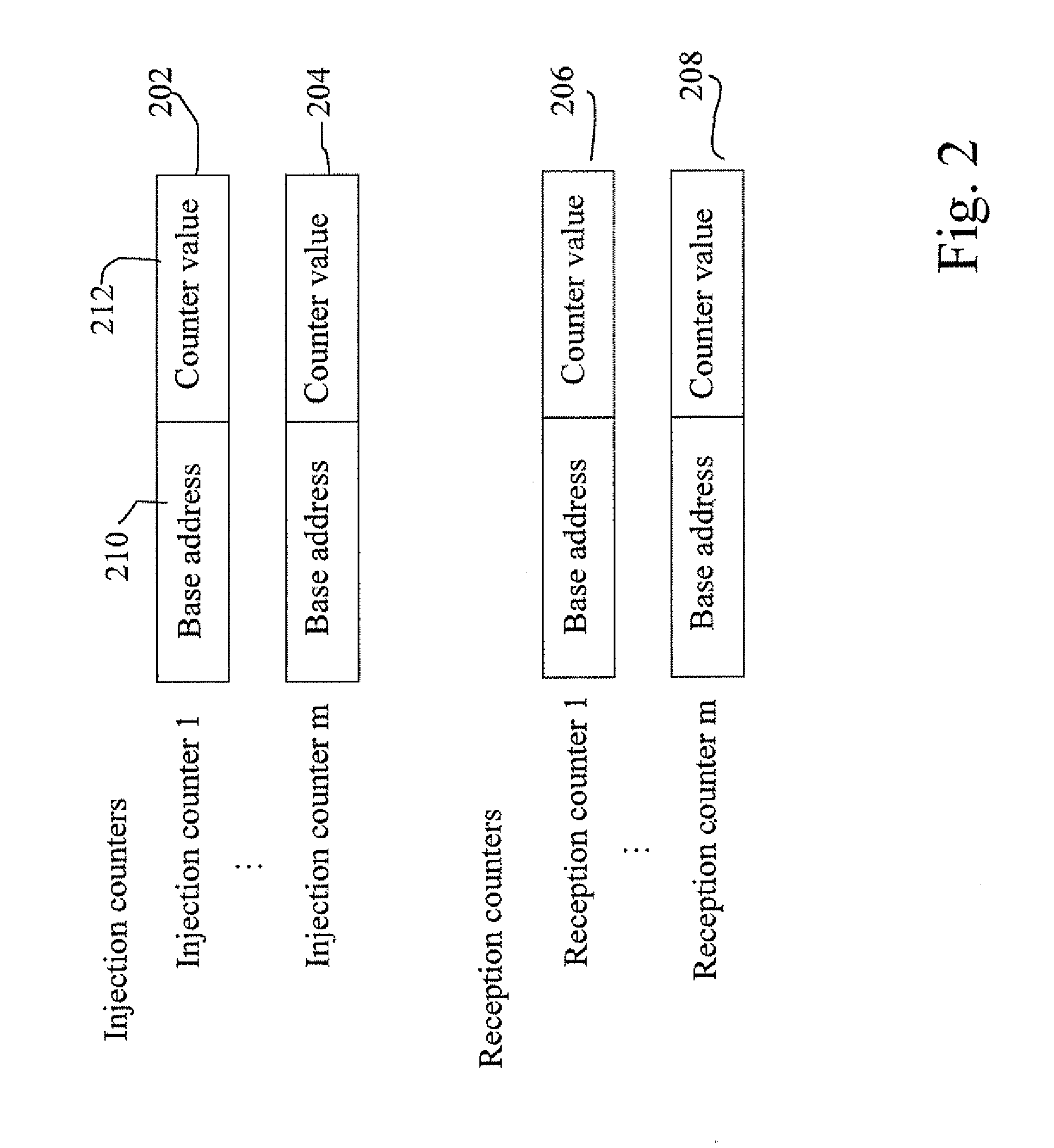

Inactive | US20090006662A1 | Easy to operate | Electric digital data processing | Direct memory access | Concurrent computation

Optimizing collective operations using direct memory access controller on a parallel computer, in one aspect, may comprise establishing a byte counter associated with a direct memory access controller for each submessage in a message. The byte counter includes at least a base address of memory and a byte count associated with a submessage. A byte counter associated with a submessage is monitored to determine whether at least a block of data of the submessage has been received. The block of data has a predetermined size, for example, a number of bytes. The block is processed when the block has been fully received, for example, when the byte count indicates all bytes of the block have been received. The monitoring and processing may continue for all blocks in all submessages in the message.

Owner:IBM CORP
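
The byte-counter monitoring described in the abstract above can be sketched as follows. This is a toy model under stated assumptions: the class and function names are illustrative, arrival of data is simulated by calling `on_receive`, and a short final block is treated as complete once the whole submessage has arrived.

```python
class ByteCounter:
    """Toy model of one DMA byte counter (names are illustrative)."""

    def __init__(self, base_address: int, byte_count: int):
        self.base_address = base_address   # where the submessage lands in memory
        self.total = byte_count            # size of the submessage in bytes
        self.remaining = byte_count        # bytes not yet received

    def on_receive(self, nbytes: int) -> None:
        """Simulate the DMA engine decrementing the counter as data arrives."""
        if nbytes < 0 or nbytes > self.remaining:
            raise ValueError("invalid byte count")
        self.remaining -= nbytes

    @property
    def received(self) -> int:
        return self.total - self.remaining


def ready_blocks(counter: ByteCounter, block_size: int, processed: int) -> list:
    """Return indices of fully received blocks that have not been processed yet."""
    complete = counter.received // block_size
    # A short final block counts as complete once the whole submessage is in.
    if counter.remaining == 0 and counter.total % block_size:
        complete += 1
    return list(range(processed, complete))
```

Polling `ready_blocks` lets processing of early blocks overlap with the arrival of later ones, instead of waiting for the full message.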

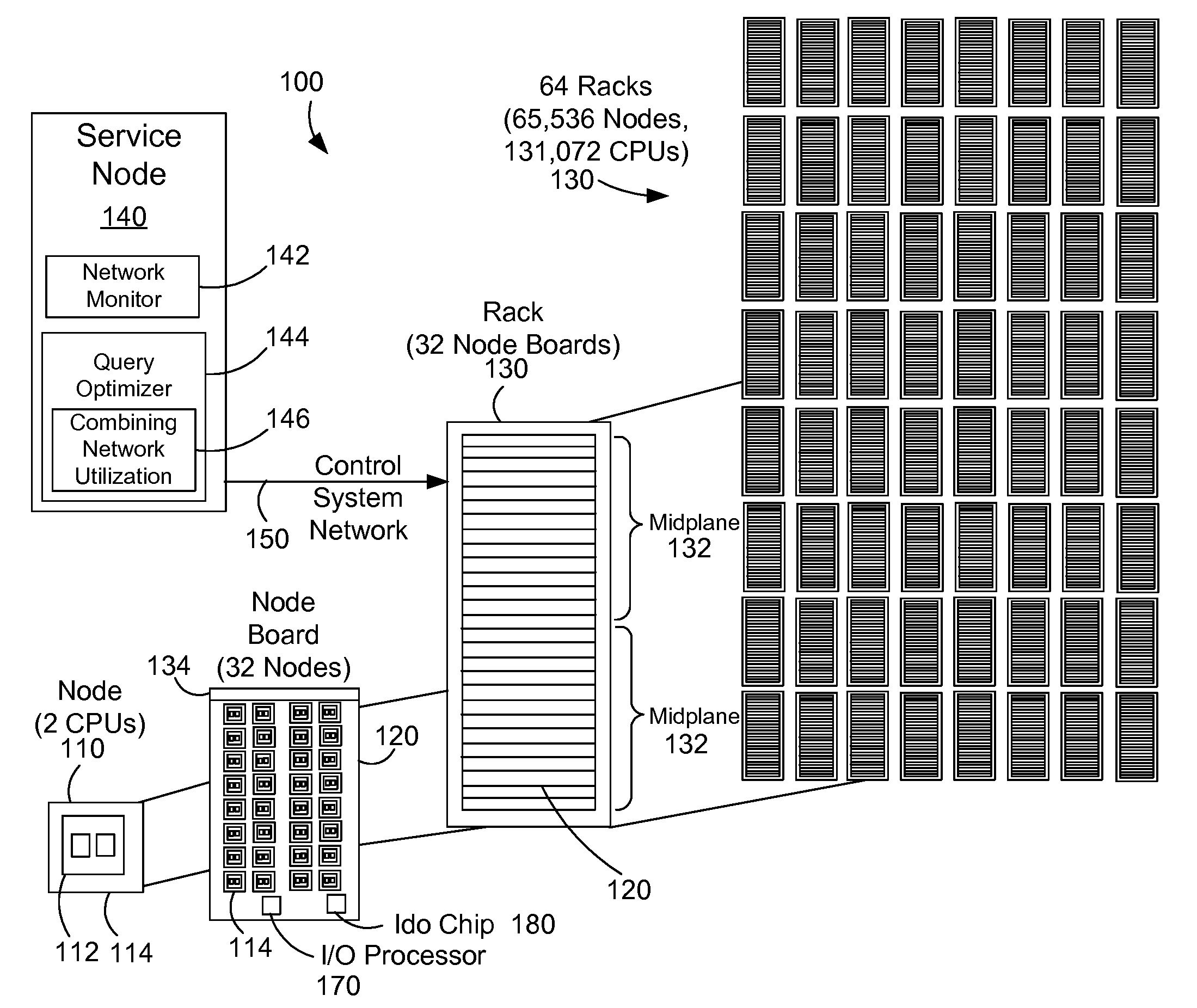

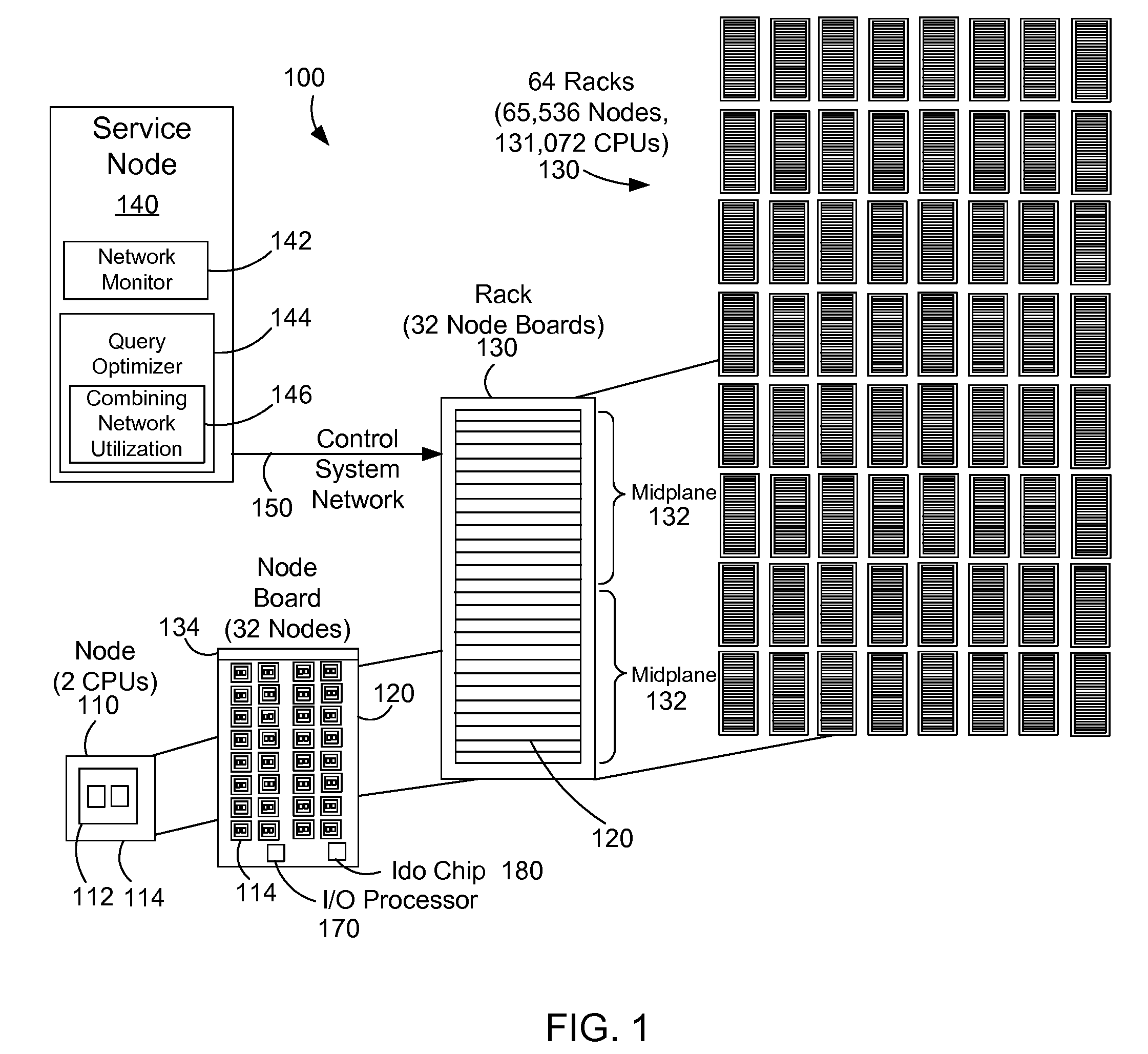

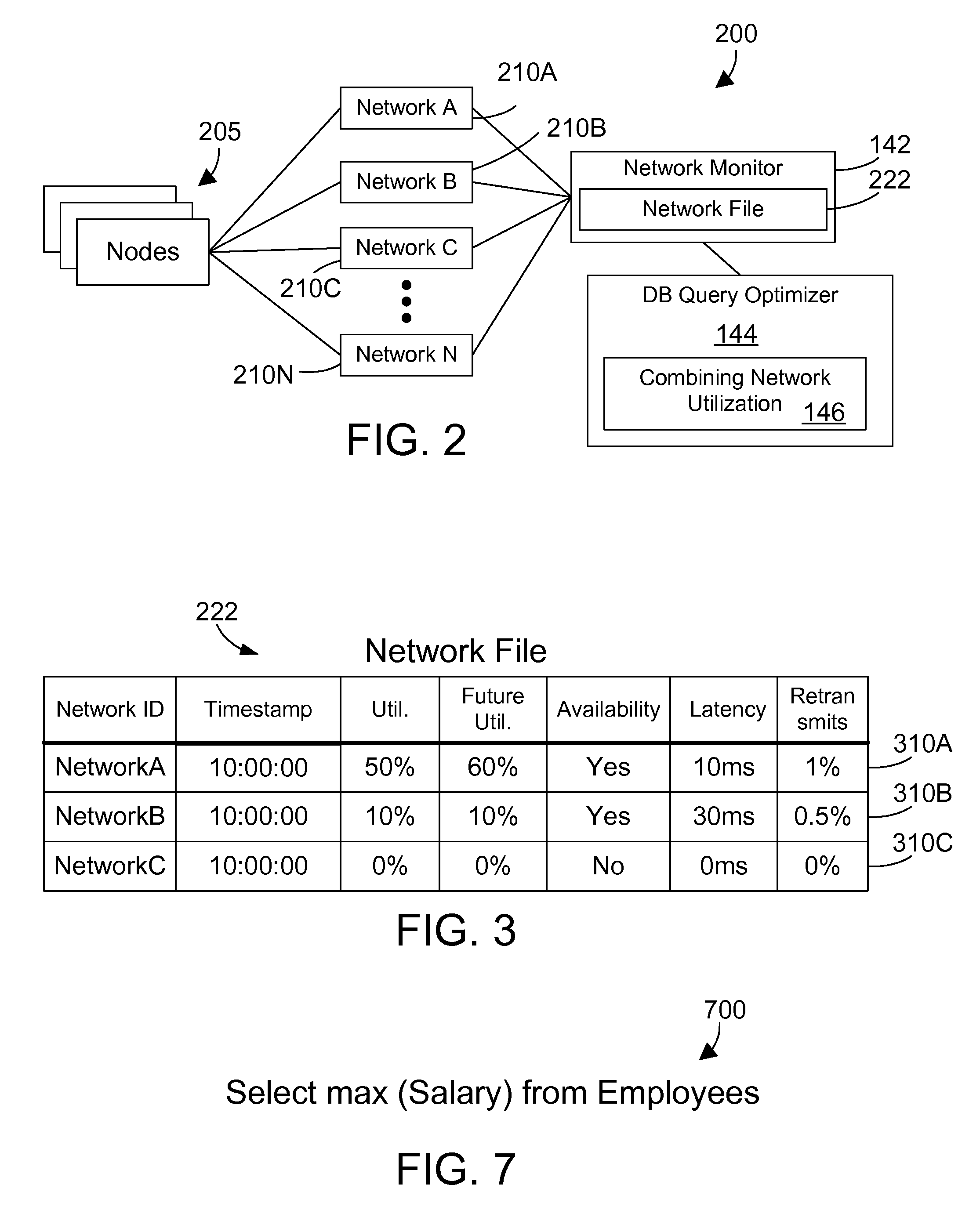

Query Execution and Optimization Utilizing a Combining Network in a Parallel Computer System

Inactive | US20090043910A1 | Improve parallel efficiency | Improve efficiency | Data processing applications | Digital data information retrieval | Database query | Computerized system

An apparatus and method for a database query optimizer utilizes a combining network to optimize a portion of a query in a parallel computer system with multiple nodes. The efficiency of the parallel computer system is increased by offloading collective operations on node data to the global combining network. The global combining network performs collective operations such as minimum, maximum, sum, and logical functions such as OR and XOR.

Owner:IBM CORP
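
The reductions listed in the abstract above (minimum, maximum, sum, OR, XOR) can be illustrated with a pairwise tree reduction. This is a software sketch only: it mimics how values might be combined level by level on the way to a root, and the `REDUCTIONS` table and `tree_combine` name are assumptions, not part of the patent.

```python
import operator
from typing import Callable, Dict, Sequence

# Assumed mapping from operation names to binary combining functions.
REDUCTIONS: Dict[str, Callable[[int, int], int]] = {
    "min": min,
    "max": max,
    "sum": operator.add,
    "or": operator.or_,
    "xor": operator.xor,
}


def tree_combine(op: str, node_values: Sequence[int]) -> int:
    """Combine one value per node pairwise, level by level, up to a root."""
    combine = REDUCTIONS[op]
    level = list(node_values)
    if not level:
        raise ValueError("at least one node value is required")
    while len(level) > 1:
        nxt = [combine(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:          # an unpaired node passes its value upward
            nxt.append(level[-1])
        level = nxt
    return level[0]
```

All five operations are associative and commutative, so the pairing order does not affect the result.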

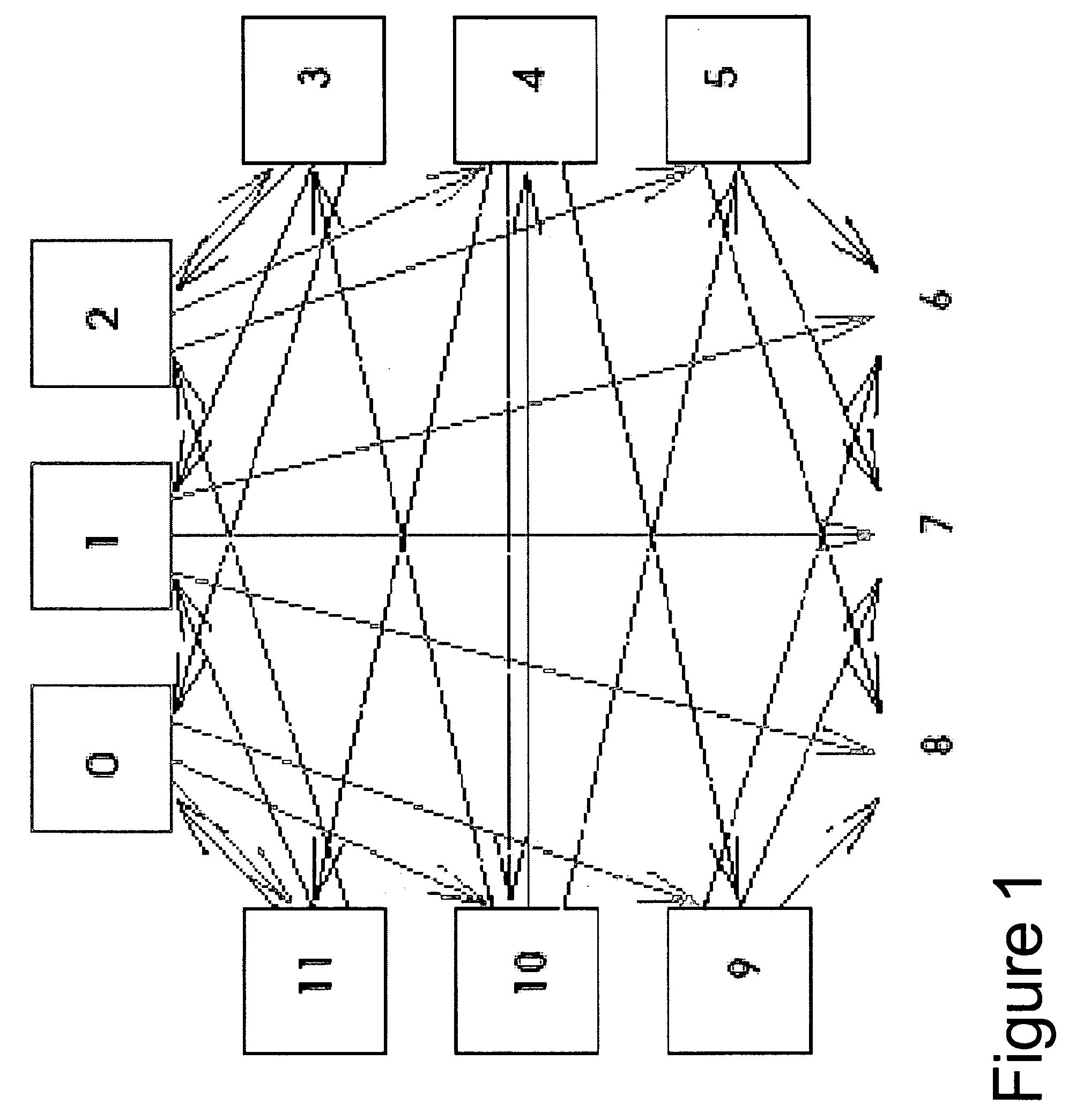

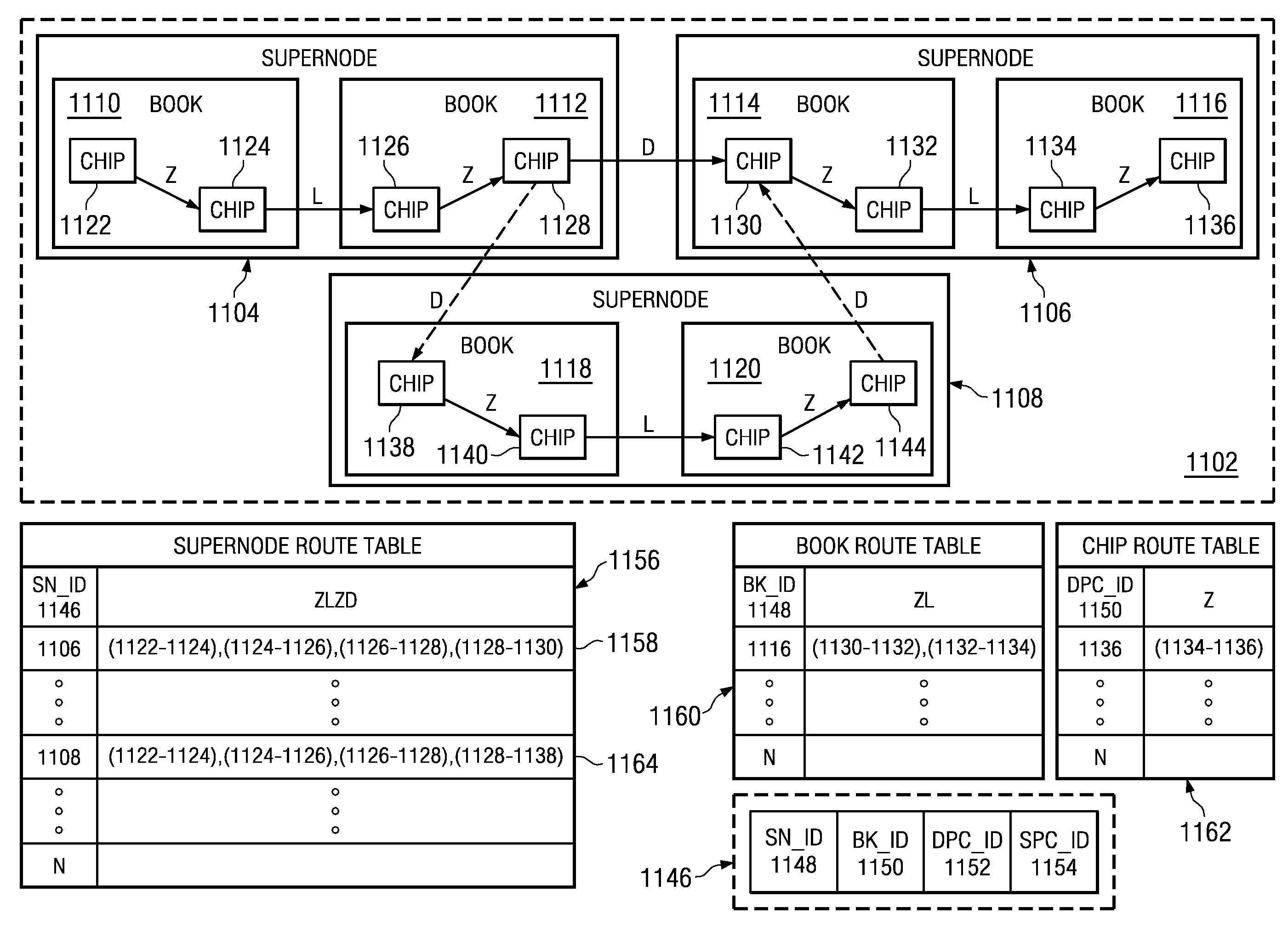

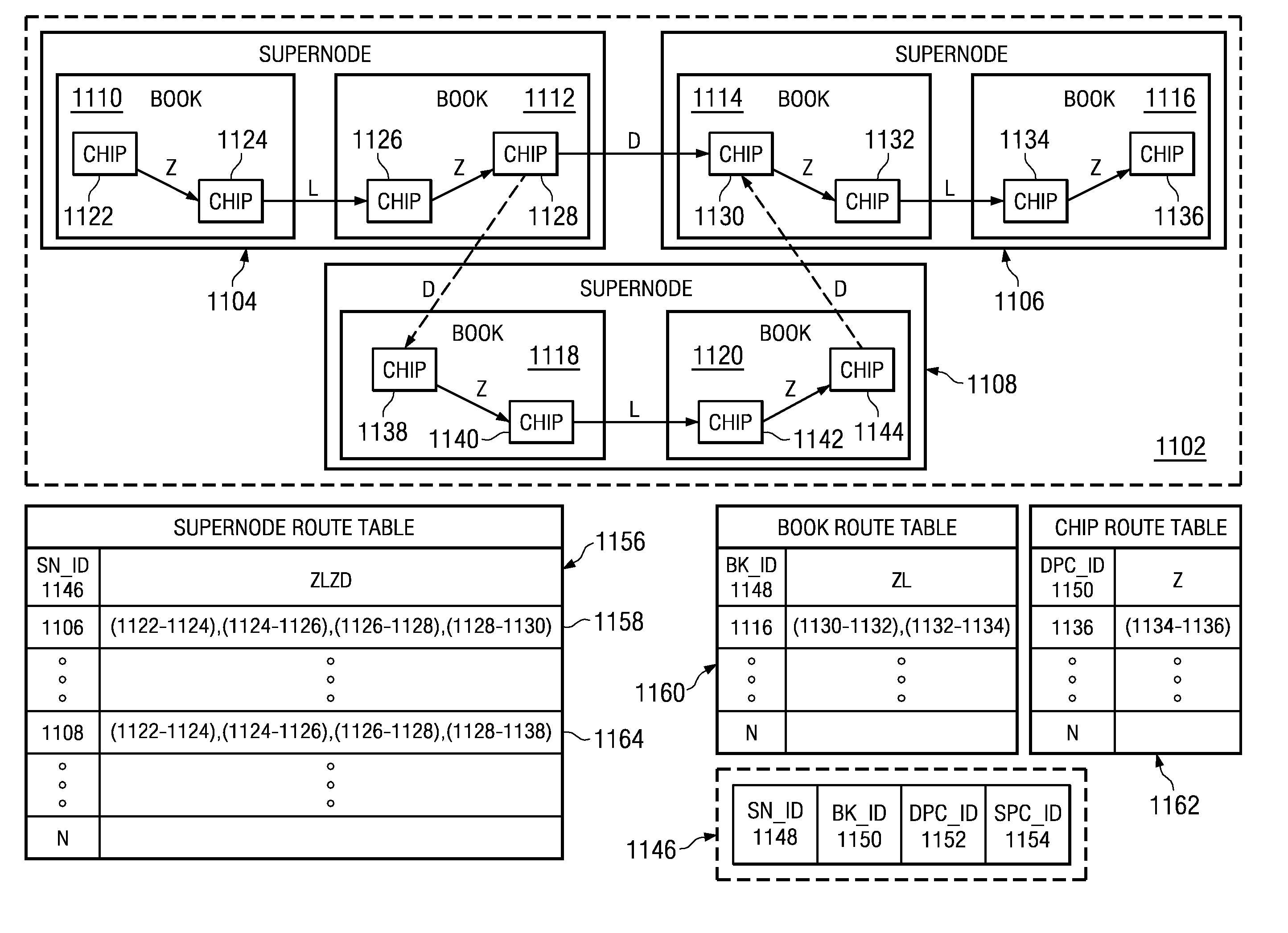

System and Method for Providing Full Hardware Support of Collective Operations in a Multi-Tiered Full-Graph Interconnect Architecture

Inactive | US20090063815A1 | Improve communication performance | Improve productivity | Program control using wired connections | General purpose stored program computer | Data processing system | Collective operation

A method, computer program product, and system are provided for performing collective operations. In hardware of a parent processor in a first processor book, a number of other processors are determined in a same or different processor book of the data processing system that is needed to execute the collective operation, thereby establishing a plurality of processors comprising the parent processor and the other processors. In hardware of the parent processor, the plurality of processors are logically arranged as a plurality of nodes in a hierarchical structure. The collective operation is transmitted to the plurality of processors based on the hierarchical structure. In hardware of the parent processor, results are received from the execution of the collective operation from the other processors, a final result is generated of the collective operation based on the received results, and the final result is output.

Owner:IBM CORP

System and Method for Performing Collective Operations Using Software Setup and Partial Software Execution at Leaf Nodes in a Multi-Tiered Full-Graph Interconnect Architecture

Inactive | US20090063816A1 | Improve communication performance | Improve productivity | Program control using wired connections | General purpose stored program computer | Data processing system | Collective operation

A method, computer program product, and system are provided for performing collective operations. In software executing on a parent processor in a first processor book, a number of other processors are determined in a same or different processor book of the data processing system that is needed to execute the collective operation, thereby establishing a plurality of processors comprising the parent processor and the other processors. In software executing on the parent processor, the plurality of processors are logically arranged as a plurality of nodes in a hierarchical structure. The collective operation is transmitted to the plurality of processors based on the hierarchical structure. In hardware of the parent processor, results are received from the execution of the collective operation from the other processors, a final result is generated of the collective operation based on the received results, and the final result is output.

Owner:IBM CORP

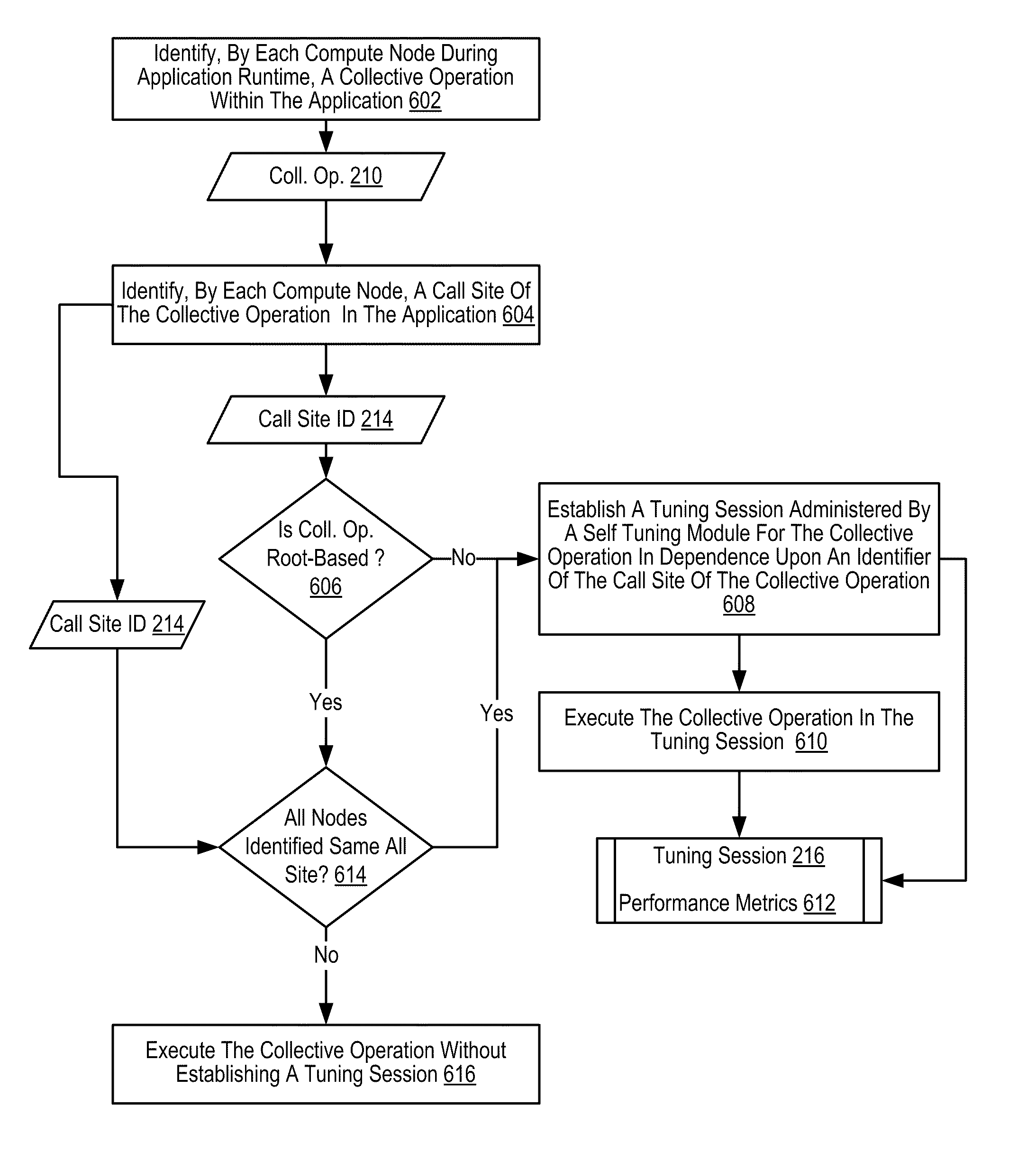

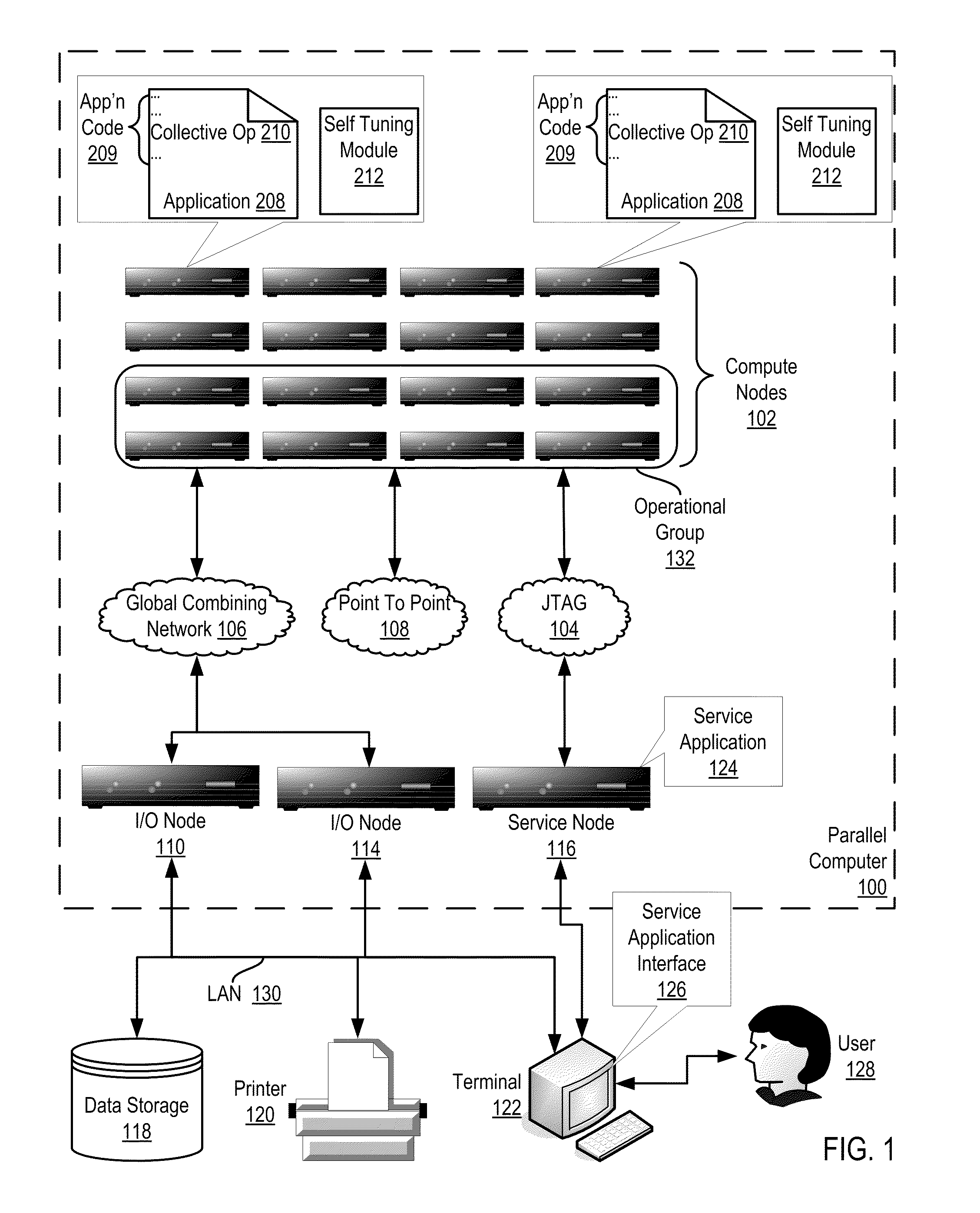

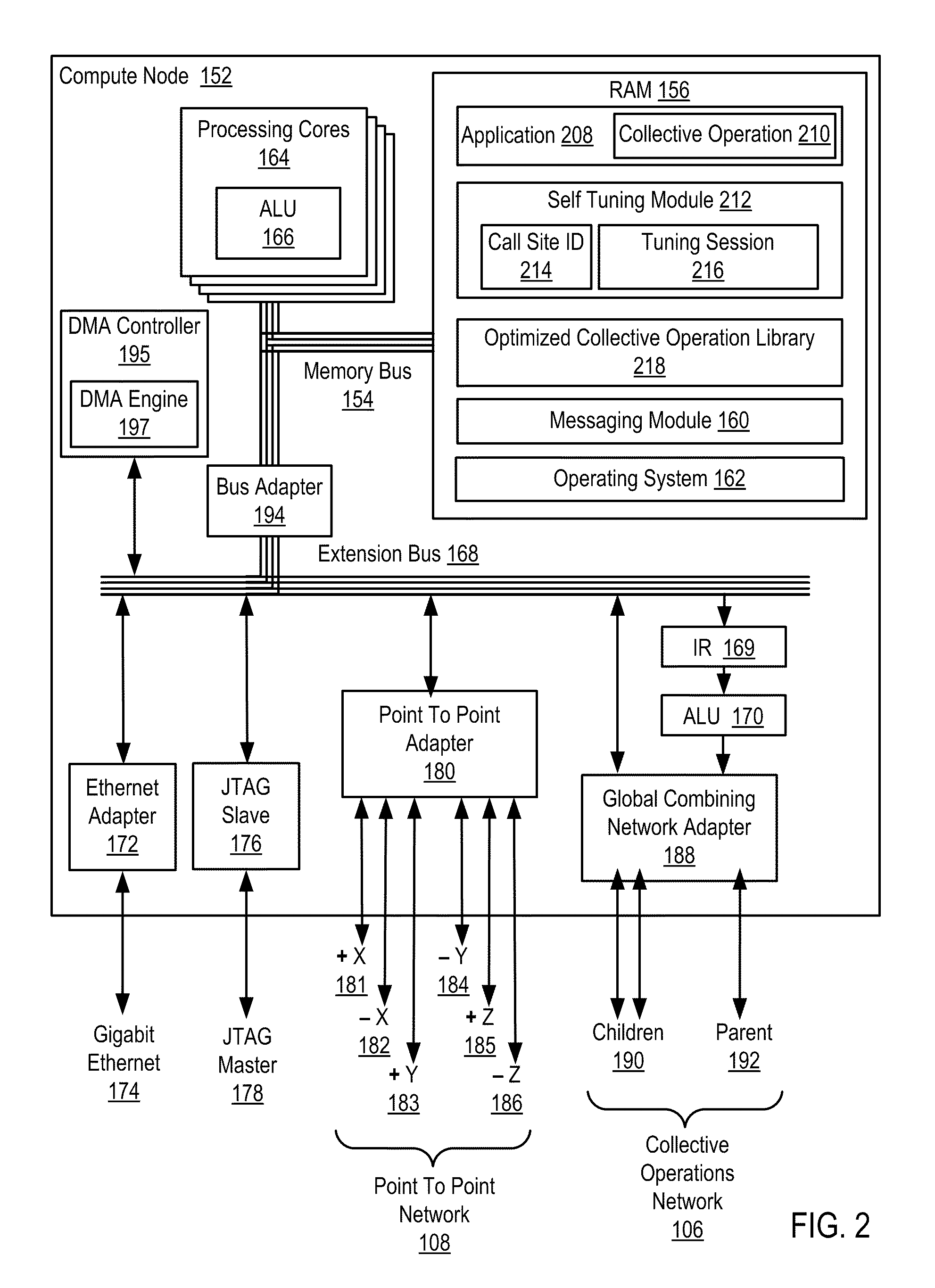

Runtime Optimization Of An Application Executing On A Parallel Computer

Inactive | US20110258627A1 | Program synchronisation | Error detection/correction | Application software | Computer science

Identifying a collective operation within an application executing on a parallel computer; identifying a call site of the collective operation; determining whether the collective operation is root-based; if the collective operation is not root-based: establishing a tuning session and executing the collective operation in the tuning session; if the collective operation is root-based, determining whether all compute nodes executing the application identified the collective operation at the same call site; if all compute nodes identified the collective operation at the same call site, establishing a tuning session and executing the collective operation in the tuning session; and if all compute nodes executing the application did not identify the collective operation at the same call site, executing the collective operation without establishing a tuning session.

Owner:IBM CORP
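
The decision logic in the runtime optimization abstract above reduces to a small predicate. The sketch below assumes call sites are reported as comparable strings, one per compute node; the function name `should_tune` and the string format are hypothetical.

```python
from typing import Sequence


def should_tune(is_root_based: bool, call_sites: Sequence[str]) -> bool:
    """Decide whether to establish a tuning session for a collective operation.

    call_sites holds the call site each compute node reported for the operation.
    """
    if not is_root_based:
        # Non-root-based collectives are always tuned.
        return True
    # Root-based collectives are tuned only if every node saw the same call site.
    return len(set(call_sites)) == 1
```

When the predicate is false, the collective would simply run without a tuning session.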

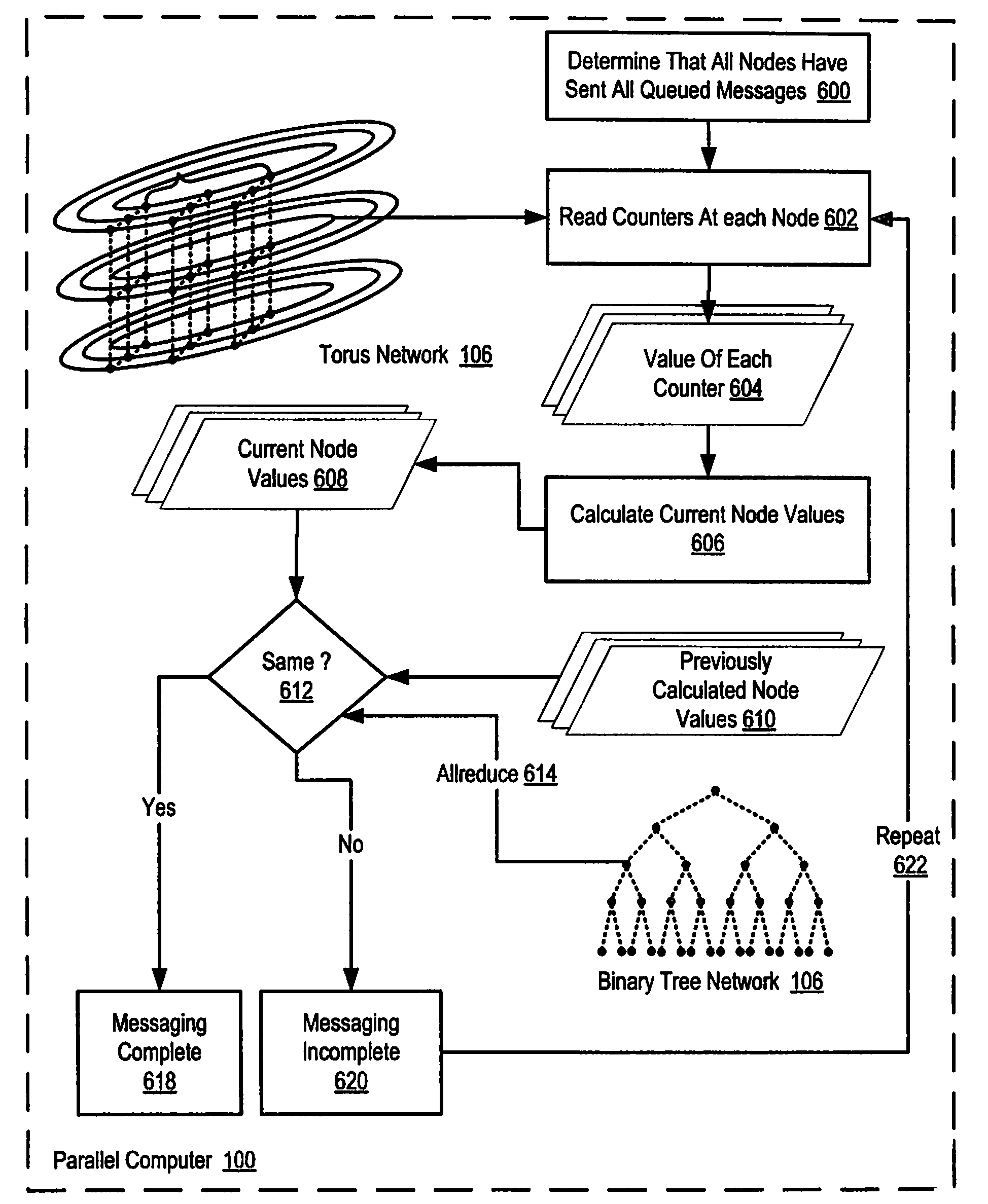

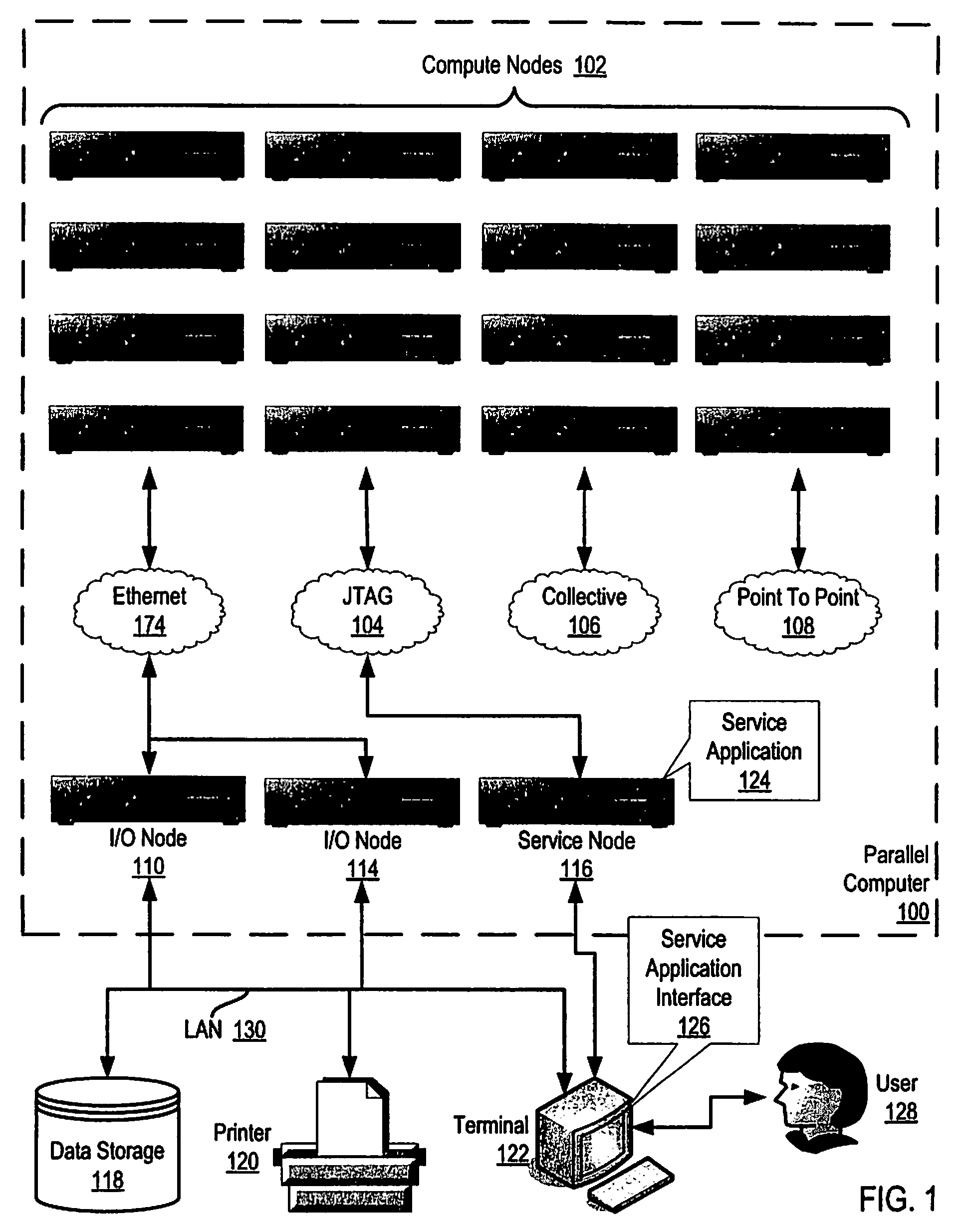

Identifying messaging completion in a parallel computer by checking for change in message received and transmitted count at each node

Inactive | US7552312B2 | General purpose stored program computer | Multiple digital computer combinations | Binary tree | Message passing

Methods, parallel computers, and products are provided for identifying messaging completion on a parallel computer. The parallel computer includes a plurality of compute nodes, the compute nodes coupled for data communications by at least two independent data communications networks including a binary tree data communications network optimal for collective operations that organizes the nodes as a tree and a torus data communications network optimal for point to point operations that organizes the nodes as a torus. Embodiments include reading all counters at each node of the torus data communications network; calculating at each node a current node value in dependence upon the values read from the counters at each node; and determining for all nodes whether the current node value for each node is the same as a previously calculated node value for each node. If the current node value is the same as the previously calculated node value for all nodes of the torus data communications network, embodiments include determining that messaging is complete and if the current node value is not the same as the previously calculated node value for all nodes of the torus data communications network, embodiments include determining that messaging is currently incomplete.

Owner:INT BUSINESS MASCH CORP
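
The completion check in the abstract above compares each node's current value with its previously calculated value. A minimal sketch follows, assuming each node exposes a pair of monotonically increasing counters (messages received, messages transmitted) and that the node value is their sum; the abstract does not specify the formula, so that choice is an assumption.

```python
from typing import List, Tuple

Counters = Tuple[int, int]   # (messages received, messages transmitted)


def node_value(counters: Counters) -> int:
    """Assumed node value: the sum of the two counters."""
    received, transmitted = counters
    return received + transmitted


def messaging_complete(previous: List[int],
                       counters: List[Counters]) -> Tuple[bool, List[int]]:
    """Return (complete?, current values) given the values from the last check."""
    current = [node_value(c) for c in counters]
    # Messaging is considered complete only if no node's value has changed.
    return current == previous, current
```

Because the counters only grow, an unchanged sum on every node means no message was sent or received since the previous check.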

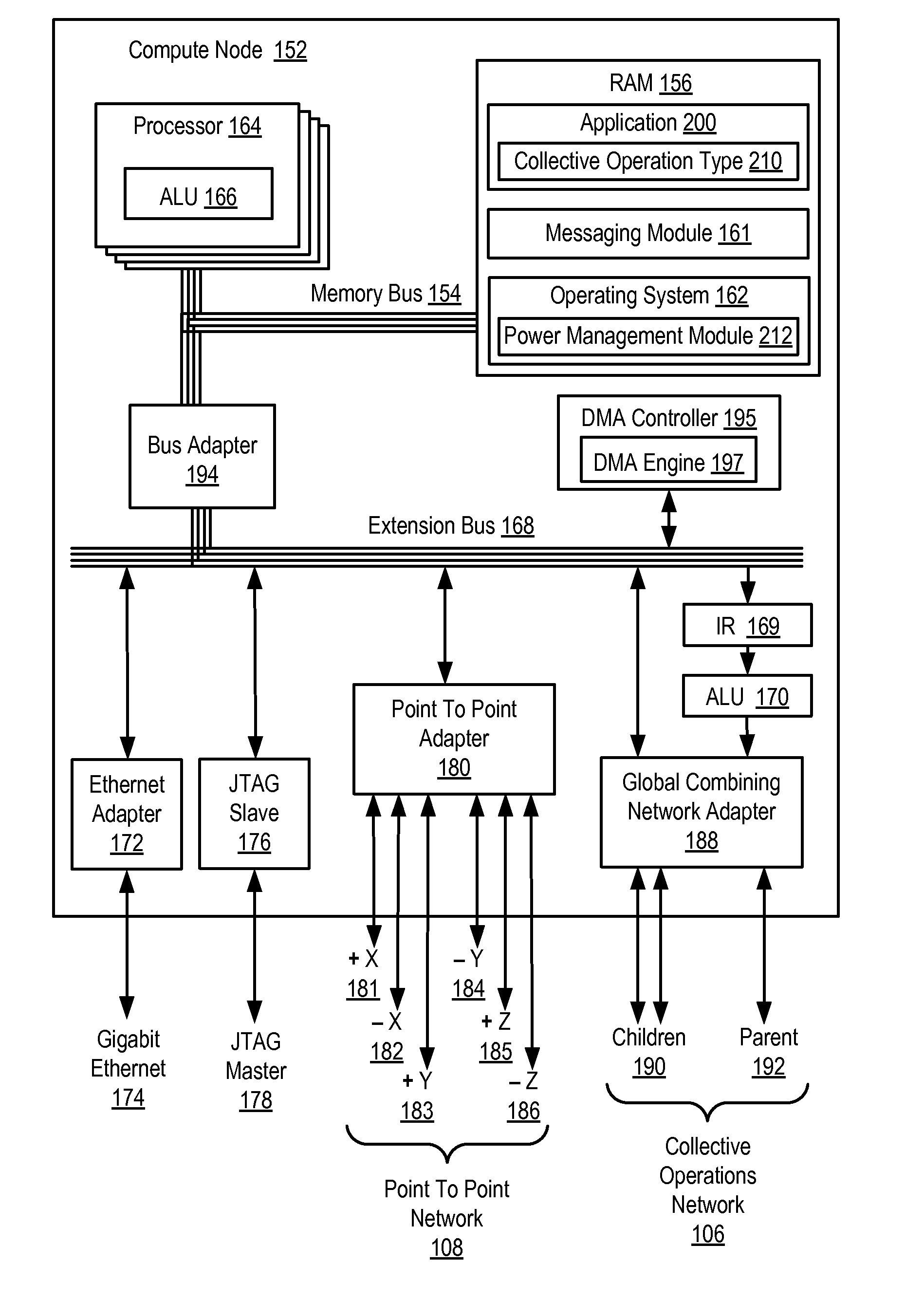

Optimizing Collective Operations

Inactive | US20110270986A1 | Multiple digital computer combinations | Program control | Data mining | Type selection

Optimizing collective operations including receiving an instruction to perform a collective operation type; selecting an optimized collective operation for the collective operation type; performing the selected optimized collective operation; determining whether a resource needed by the one or more nodes to perform the collective operation is not available; if a resource needed by the one or more nodes to perform the collective operation is not available: notifying the other nodes that the resource is not available; selecting a next optimized collective operation; and performing the next optimized collective operation.

Owner:IBM CORP
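
The select, attempt, and fall back sequence in the abstract above can be sketched as an ordered list of candidate implementations. The exception type, the candidate list format, and the `skipped` list (standing in for notifying the other nodes) are all assumptions made for illustration.

```python
from typing import Any, Callable, List, Sequence, Tuple


class ResourceUnavailable(Exception):
    """Raised by an implementation when a resource it needs is missing."""


def run_with_fallback(
    candidates: Sequence[Tuple[str, Callable[[], Any]]],
) -> Tuple[str, Any, List[str]]:
    """Try optimized implementations in order, falling back on failure.

    Returns the name that ran, its result, and the names that were skipped.
    """
    skipped: List[str] = []
    for name, implementation in candidates:
        try:
            return name, implementation(), skipped
        except ResourceUnavailable:
            skipped.append(name)   # stand-in for notifying the other nodes
    raise RuntimeError("no collective implementation could run")
```

Ordering the candidates from most to least optimized means the fastest available implementation is the one that ends up running.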

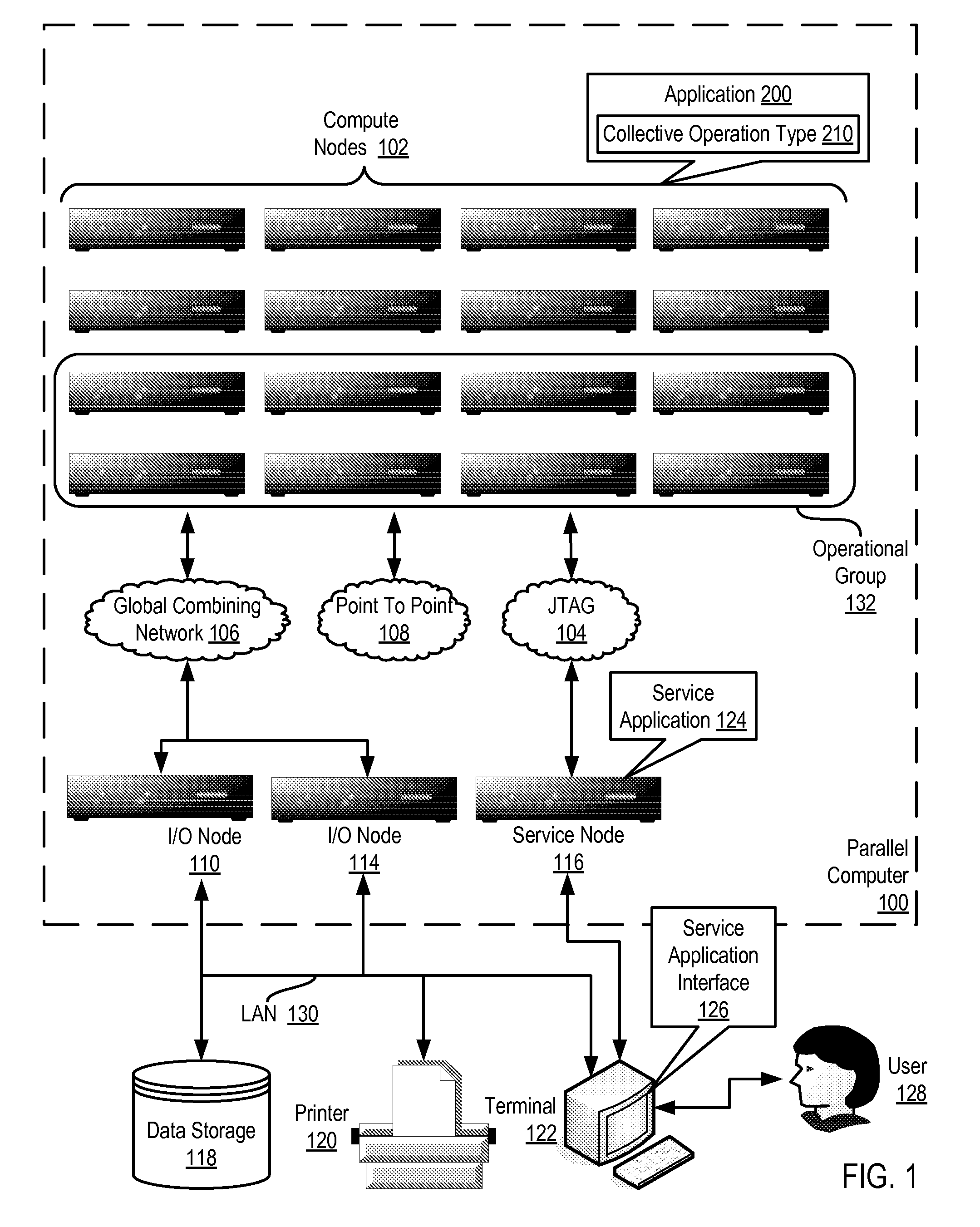

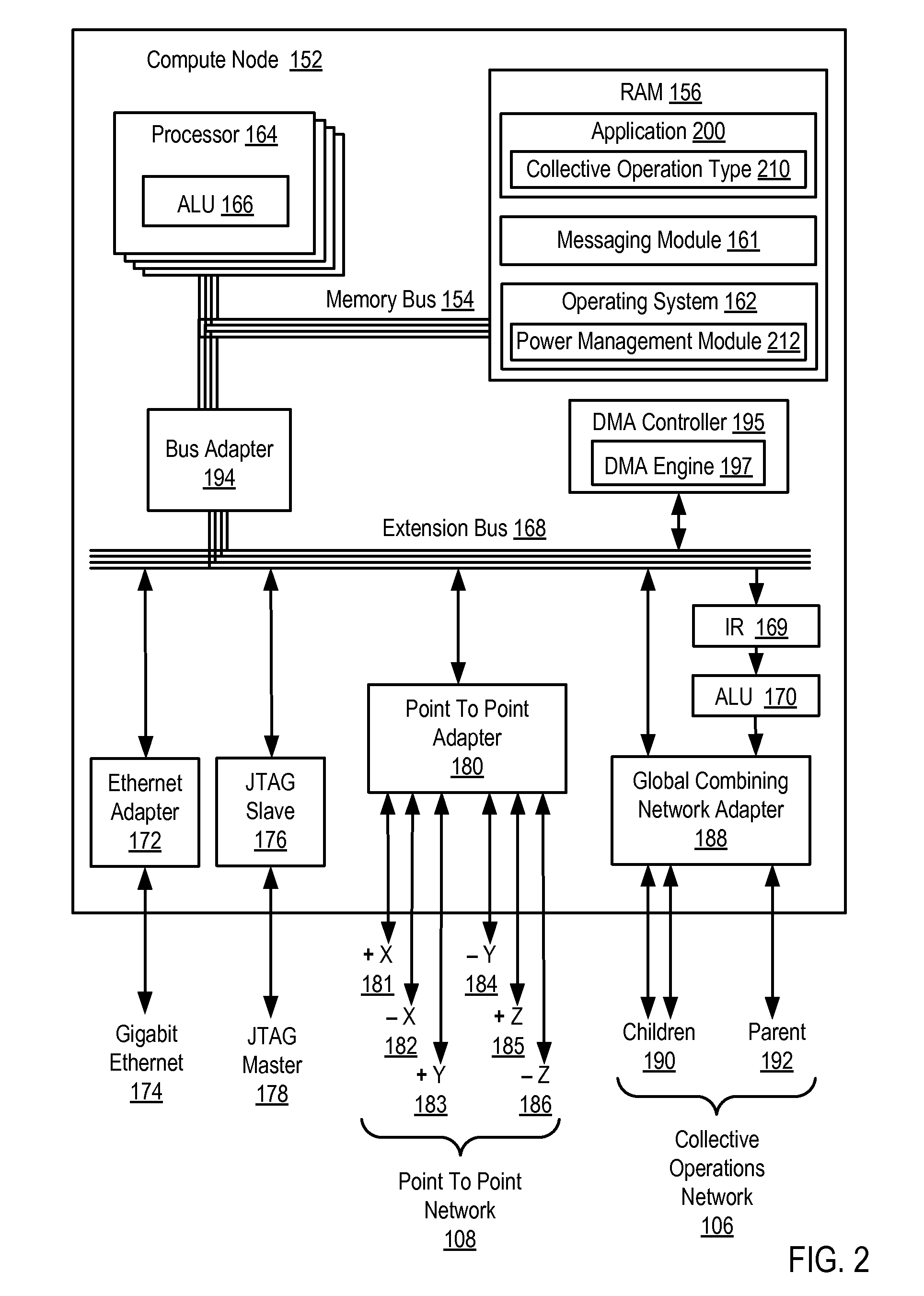

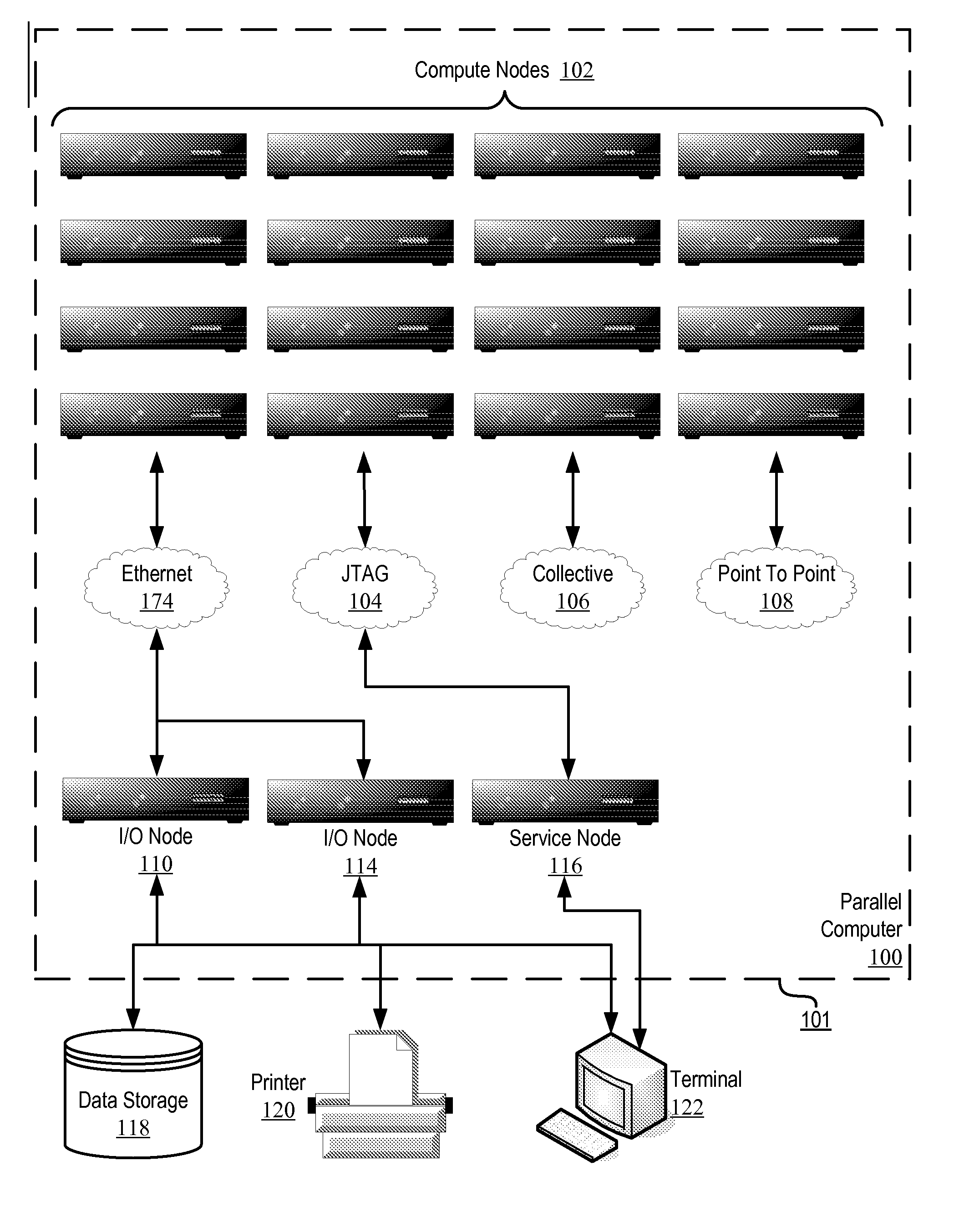

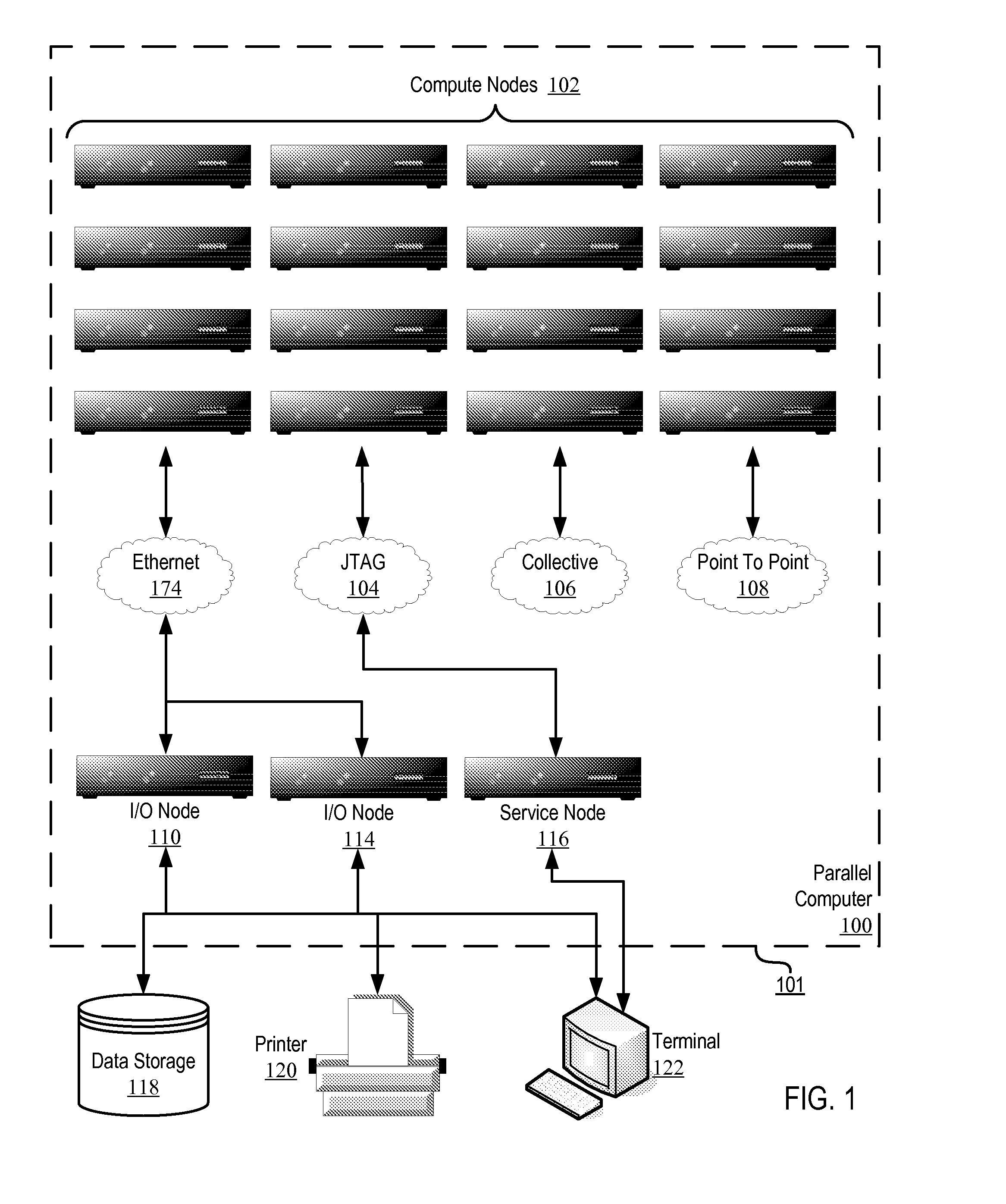

Reducing power consumption while performing collective operations on a plurality of compute nodes

Active | US8041969B2 | Energy efficient ICT | Volume/mass flow measurement | Collective operation | Power consumption

Owner:INT BUSINESS MASCH CORP

Computer Hardware Fault Diagnosis

Methods, apparatus, and computer program products are disclosed for computer hardware fault diagnosis carried out in a parallel computer, where the parallel computer includes a plurality of compute nodes. The compute nodes are coupled for data communications by at least two independent data communications networks, where each data communications network includes data communications links among the compute nodes. Typical embodiments carry out hardware fault diagnosis by executing a collective operation through a first data communications network upon a plurality of the compute nodes of the computer, executing the same collective operation through a second data communications network upon the same plurality of the compute nodes of the computer, and comparing results of the collective operations.

Owner:IBM CORP
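
The comparison at the heart of the fault diagnosis abstract above can be sketched by running the same collective through two network models and checking that the results agree. The networks here are plain Python callables, which is an assumption; a real system would execute the collective over two physical networks.

```python
from typing import Callable, Sequence, Tuple

Collective = Callable[[Sequence[int]], int]


def diagnose(values: Sequence[int],
             first_network: Collective,
             second_network: Collective) -> Tuple[bool, int, int]:
    """Run one collective over both networks; a mismatch suggests a fault."""
    first_result = first_network(values)
    second_result = second_network(values)
    return first_result == second_result, first_result, second_result
```

A mismatch only indicates that one of the two networks misbehaved; locating the faulty link would need further tests on smaller subsets of nodes.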

Optimized collectives using a DMA on a parallel computer

Optimizing collective operations using direct memory access controller on a parallel computer, in one aspect, may comprise establishing a byte counter associated with a direct memory access controller for each submessage in a message. The byte counter includes at least a base address of memory and a byte count associated with a submessage. A byte counter associated with a submessage is monitored to determine whether at least a block of data of the submessage has been received. The block of data has a predetermined size, for example, a number of bytes. The block is processed when the block has been fully received, for example, when the byte count indicates all bytes of the block have been received. The monitoring and processing may continue for all blocks in all submessages in the message.

Owner:INT BUSINESS MASCH CORP

Smart digital modules and smart digital wall surfaces combining the same, and context aware interactive multimedia system using the same and operation method thereof

Active | US7636365B2 | Minimally fixed or centralized infrastructure | Digital computer details | Data switching by path configuration | Context awareness | Embedded system

Disclosed are smart digital modules, a smart digital wall combining the smart digital modules, a context-aware interactive multimedia system, and an operating method thereof. The smart digital wall includes local coordinators for controlling the smart digital modules, and a coordination process for controlling the smart digital modules. The smart digital modules sense ambient states and changes of states and independently display corresponding actuations. The coordination process is connected to the smart digital modules via radio communication, and combines smart digital modules to control collective operations of the smart digital modules.

Owner:INFORMATION & COMM UNIV RES & INDAL COOPERATION GROUP +1

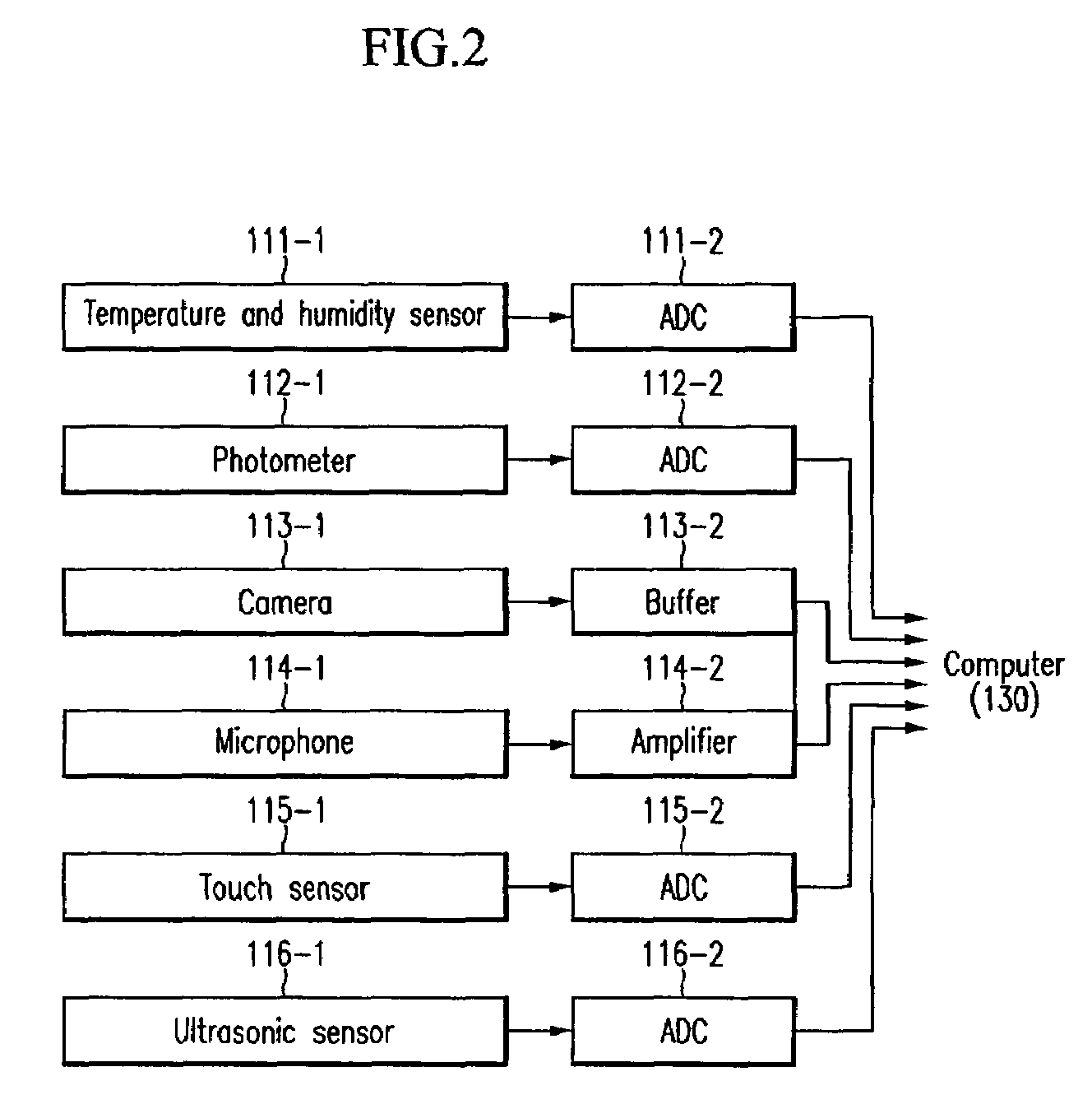

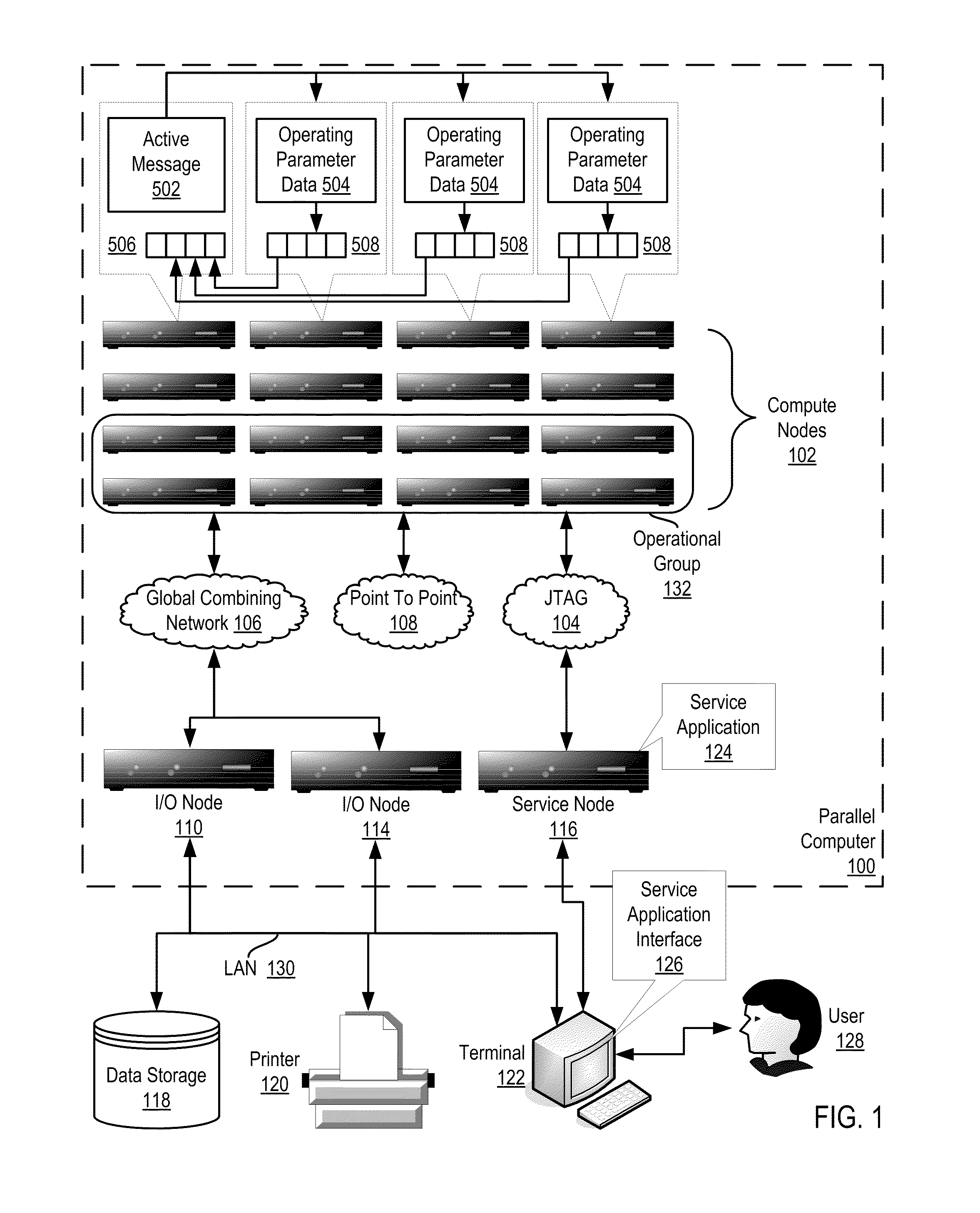

Monitoring Operating Parameters In A Distributed Computing System With Active Messages

Inactive | US20110267197A1 | Energy efficient ICT | Digital data processing details | Active message | Collective operation

In a distributed computing system including nodes organized for collective operations: initiating, by a root node through an active message to all other nodes, a collective operation, the active message including an instruction to each node to store operating parameter data in each node's send buffer; and, responsive to the active message: storing, by each node, the node's operating parameter data in the node's send buffer and returning, by the node, the operating parameter data as a result of the collective operation.

Owner:IBM CORP

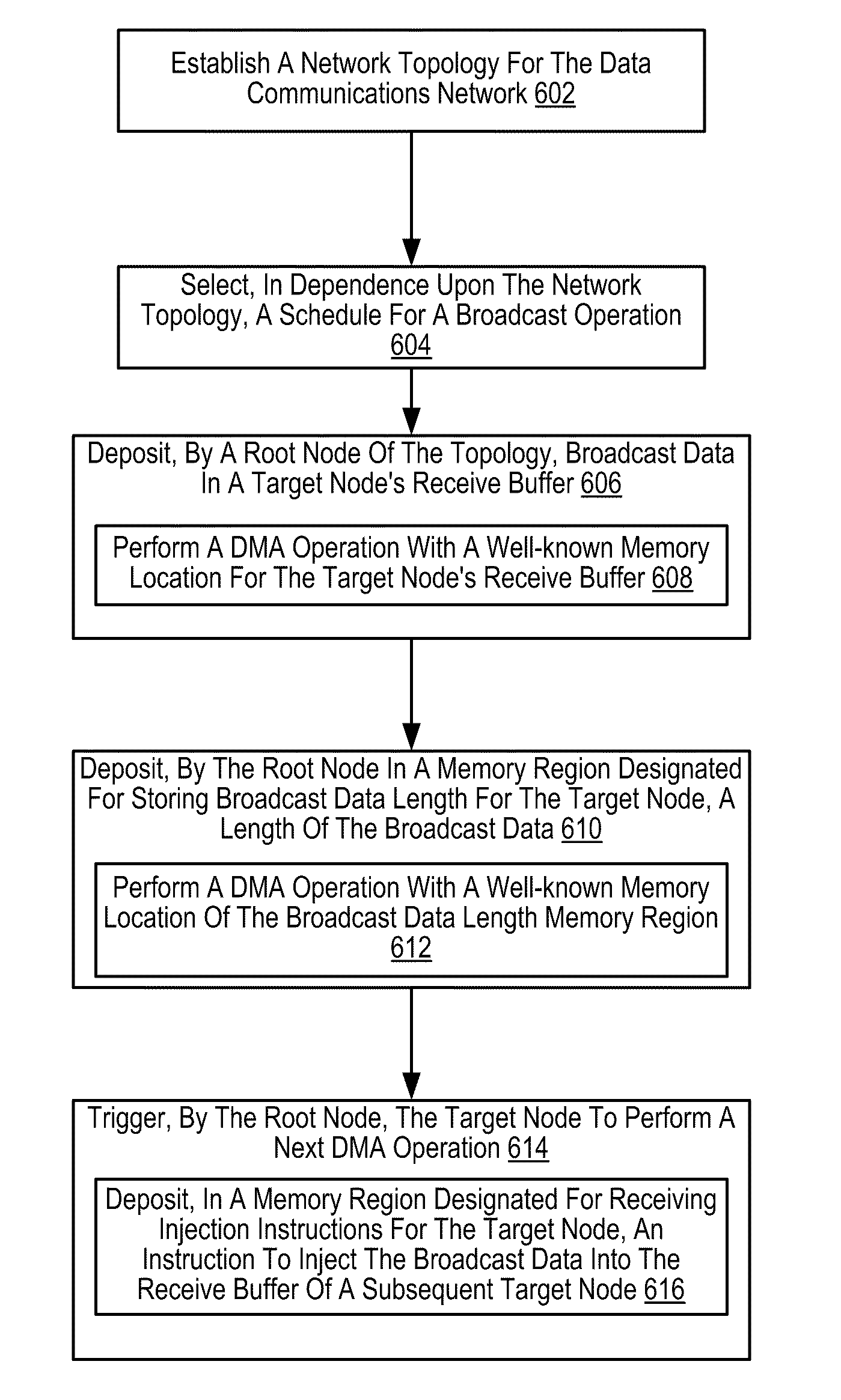

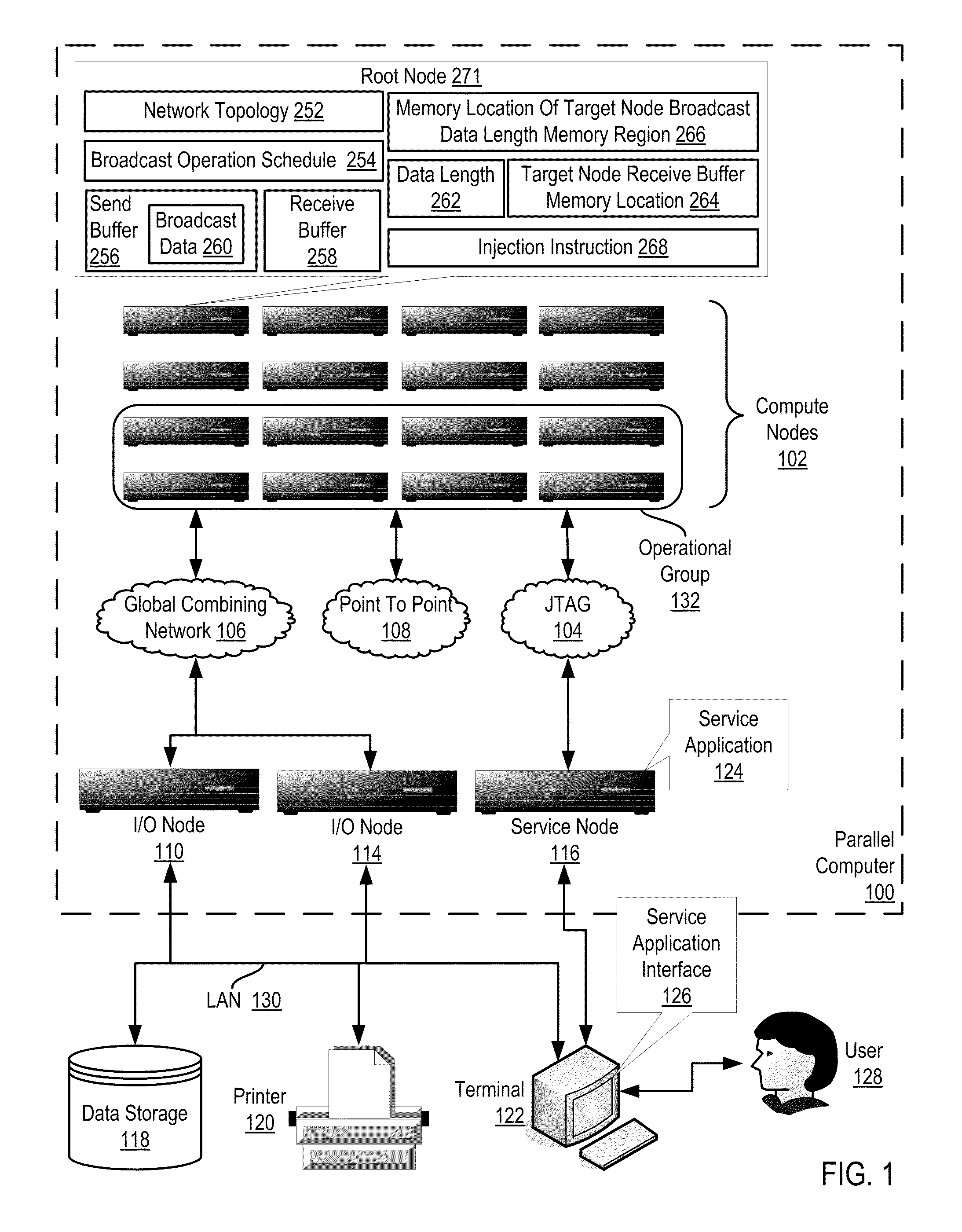

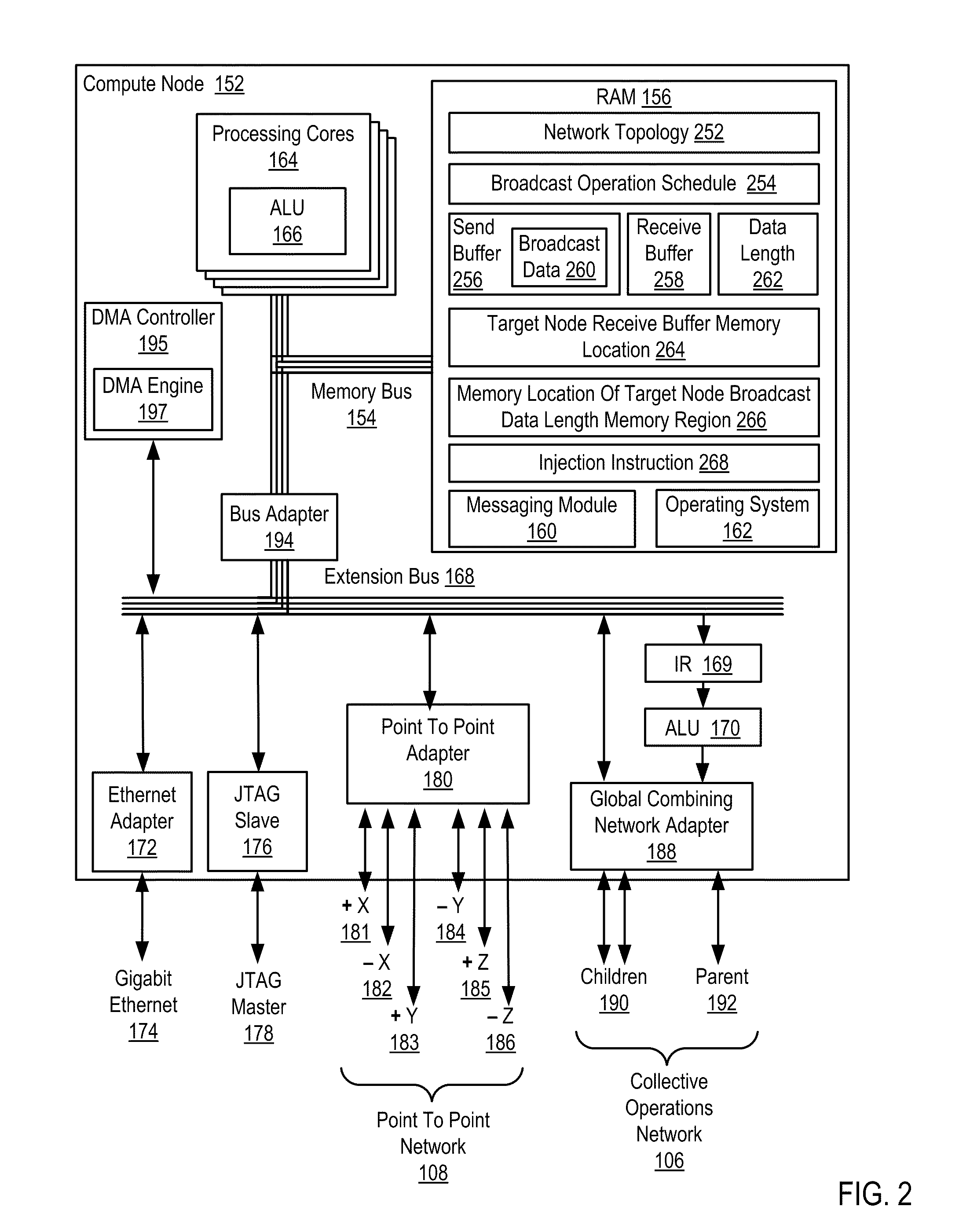

Effecting Hardware Acceleration Of Broadcast Operations In A Parallel Computer

Inactive | US20110289177A1 | Digital data processing details | Multiple digital computer combinations | Broadcast domain | Broadcast data

Compute nodes of a parallel computer organized for collective operations via a network, each compute node having a receive buffer and establishing a topology for the network; selecting a schedule for a broadcast operation; depositing, by a root node of the topology, broadcast data in a target node's receive buffer, including performing a DMA operation with a well-known memory location for the target node's receive buffer; depositing, by the root node in a memory region designated for storing broadcast data length, a length of the broadcast data, including performing a DMA operation with a well-known memory location of the broadcast data length memory region; and triggering, by the root node, the target node to perform a next DMA operation, including depositing, in a memory region designated for receiving injection instructions for the target node, an instruction to inject the broadcast data into the receive buffer of a subsequent target node.

Owner:IBM CORP

Broadcasting Collective Operation Contributions Throughout A Parallel Computer

Inactive | US20090240915A1 | Operational speed enhancement | General purpose stored program computer | Network link | Collective operation

Owner:IBM CORP

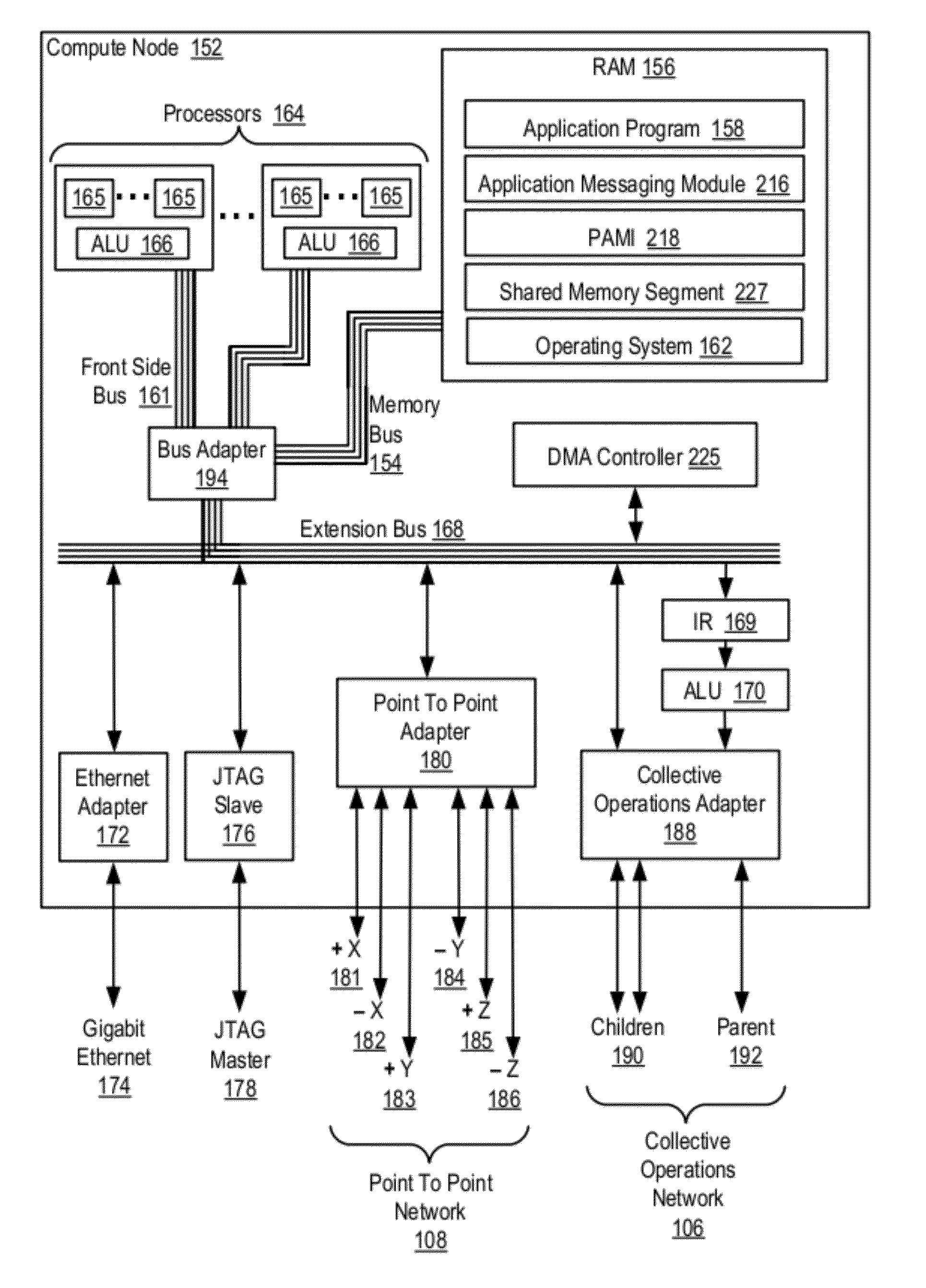

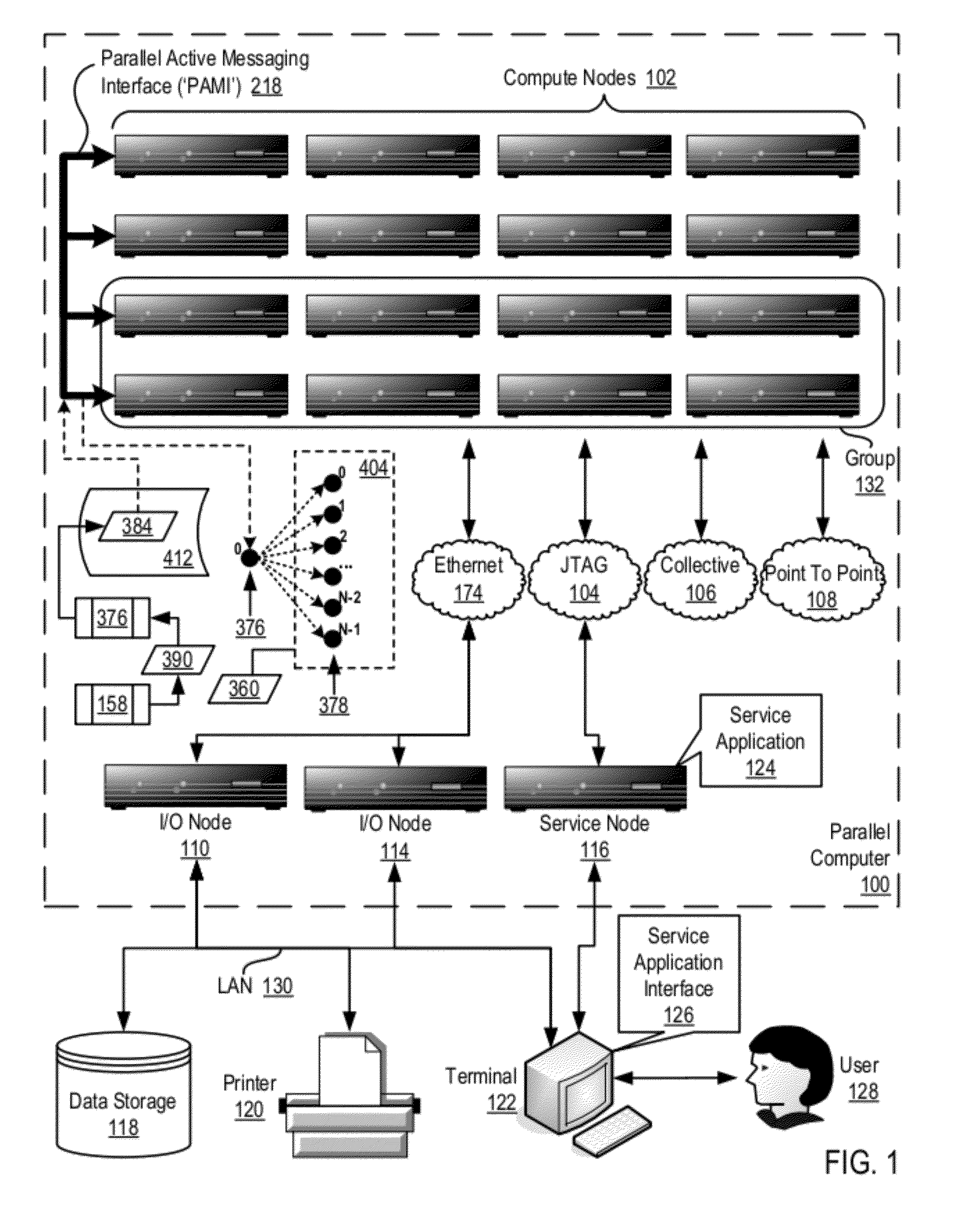

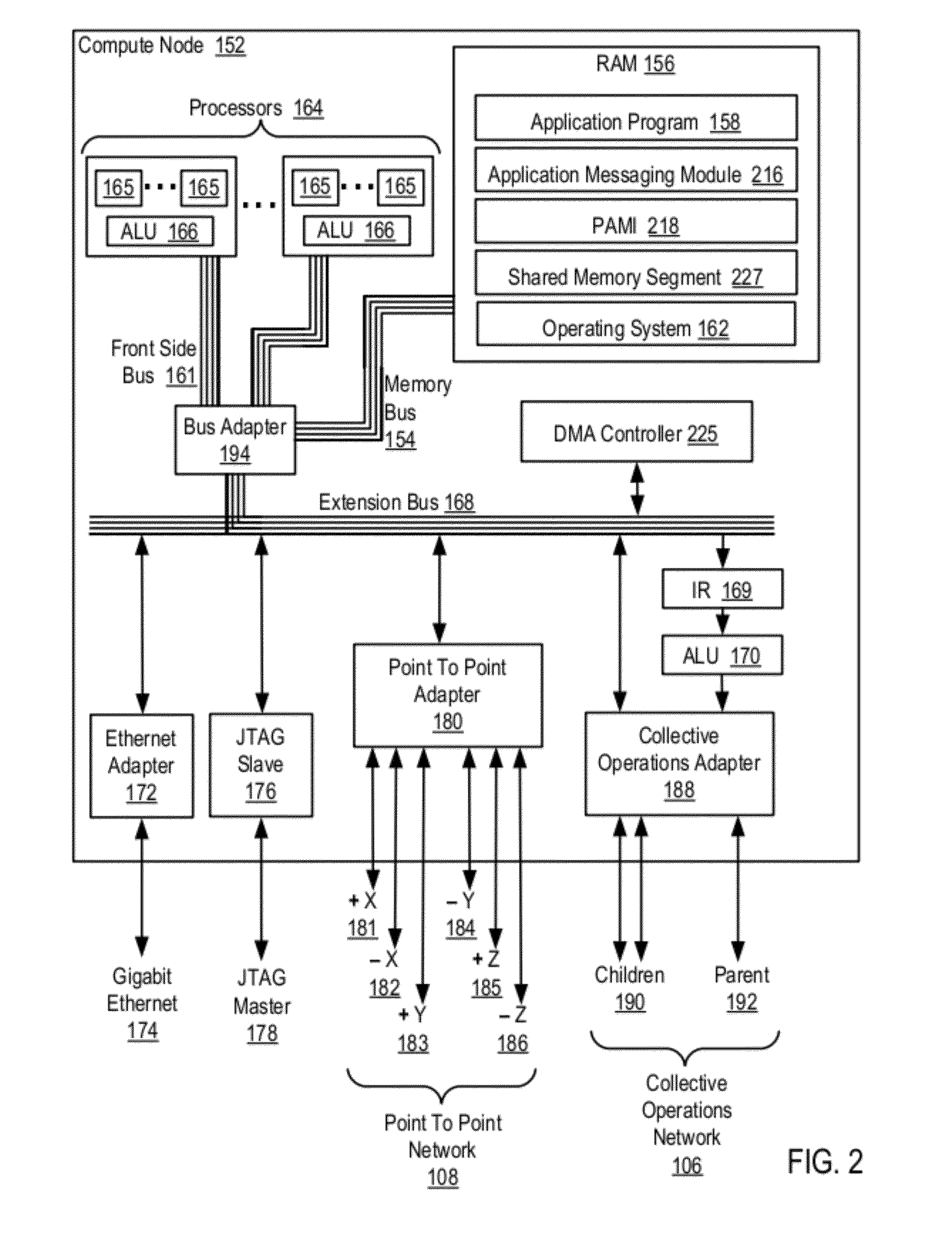

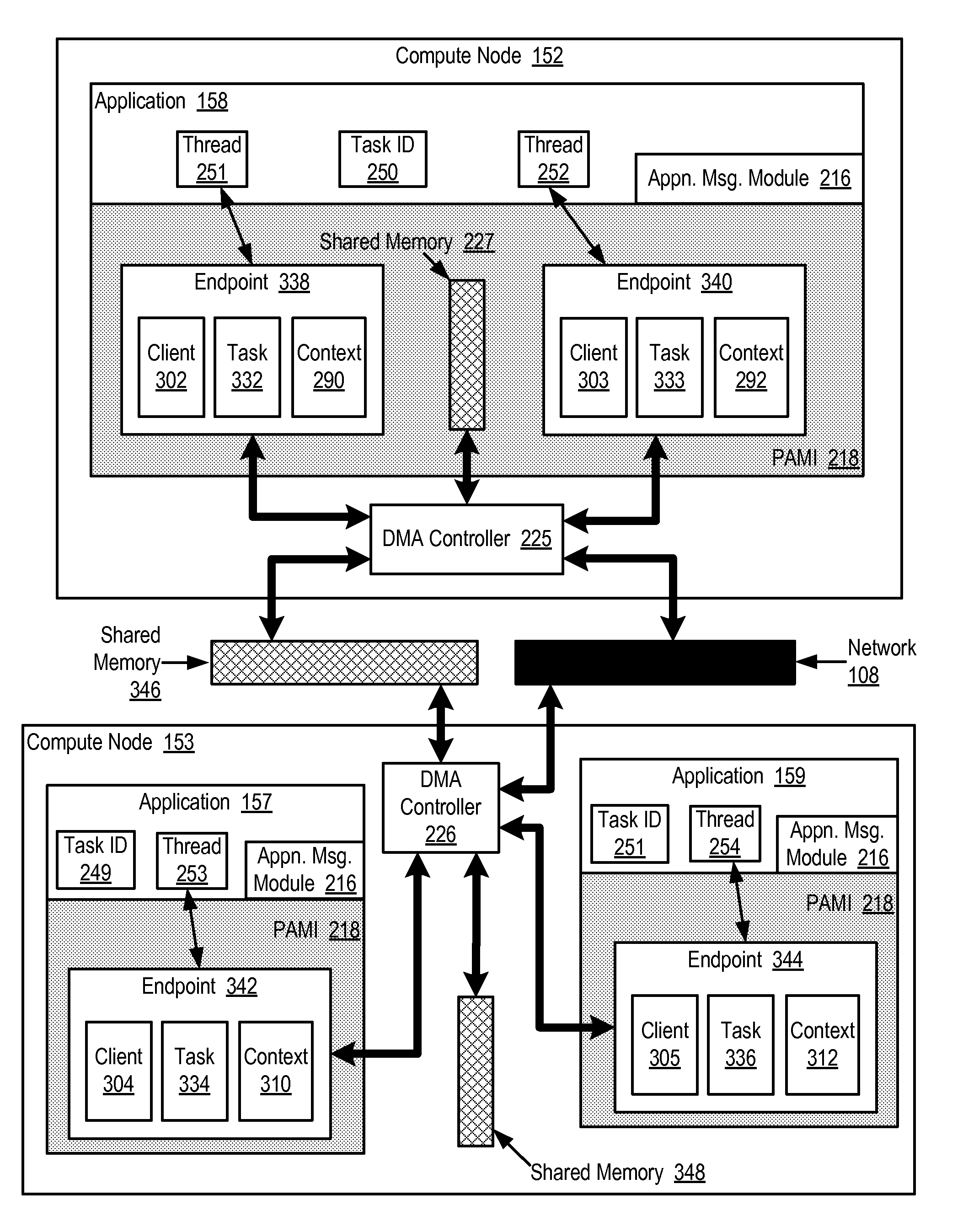

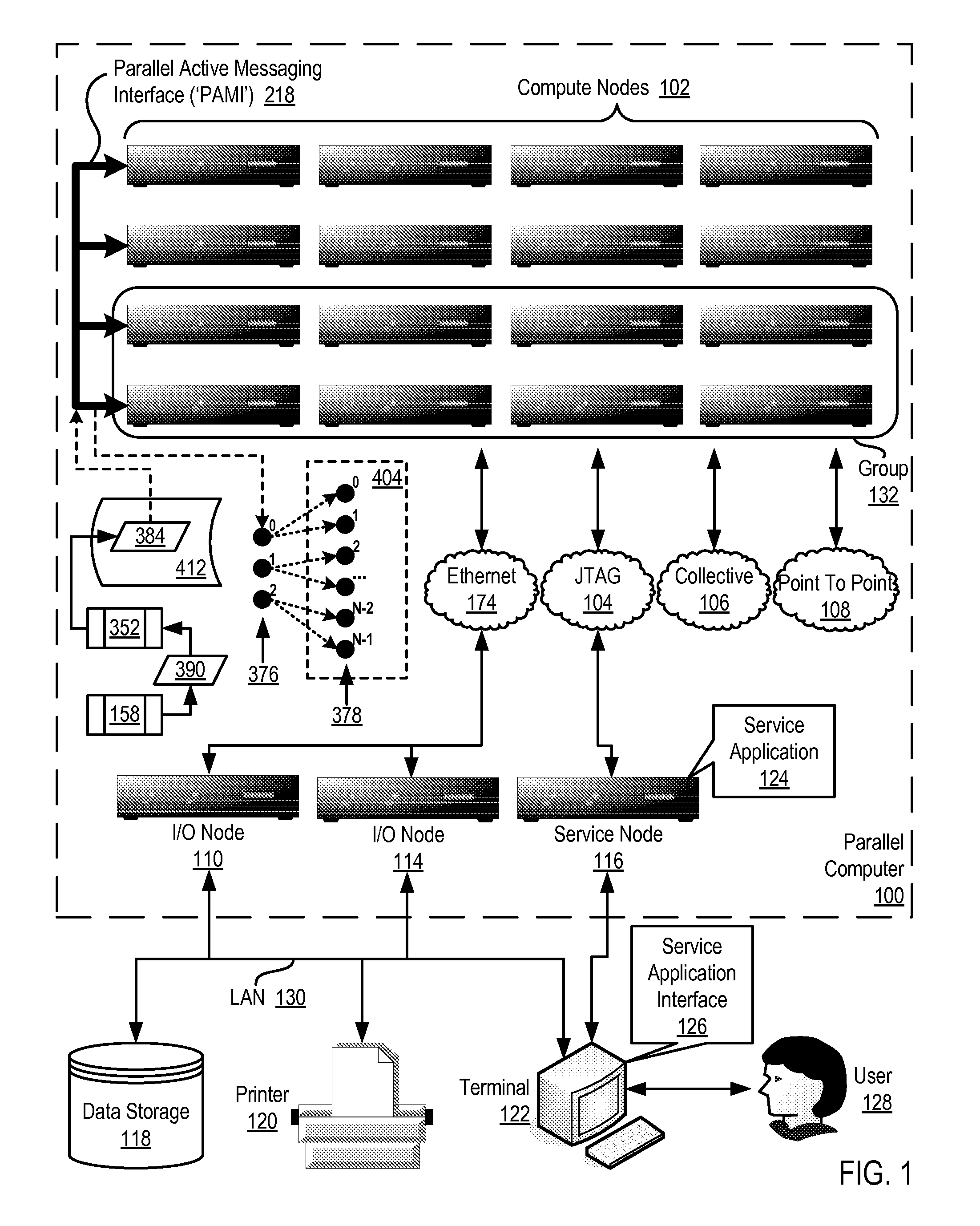

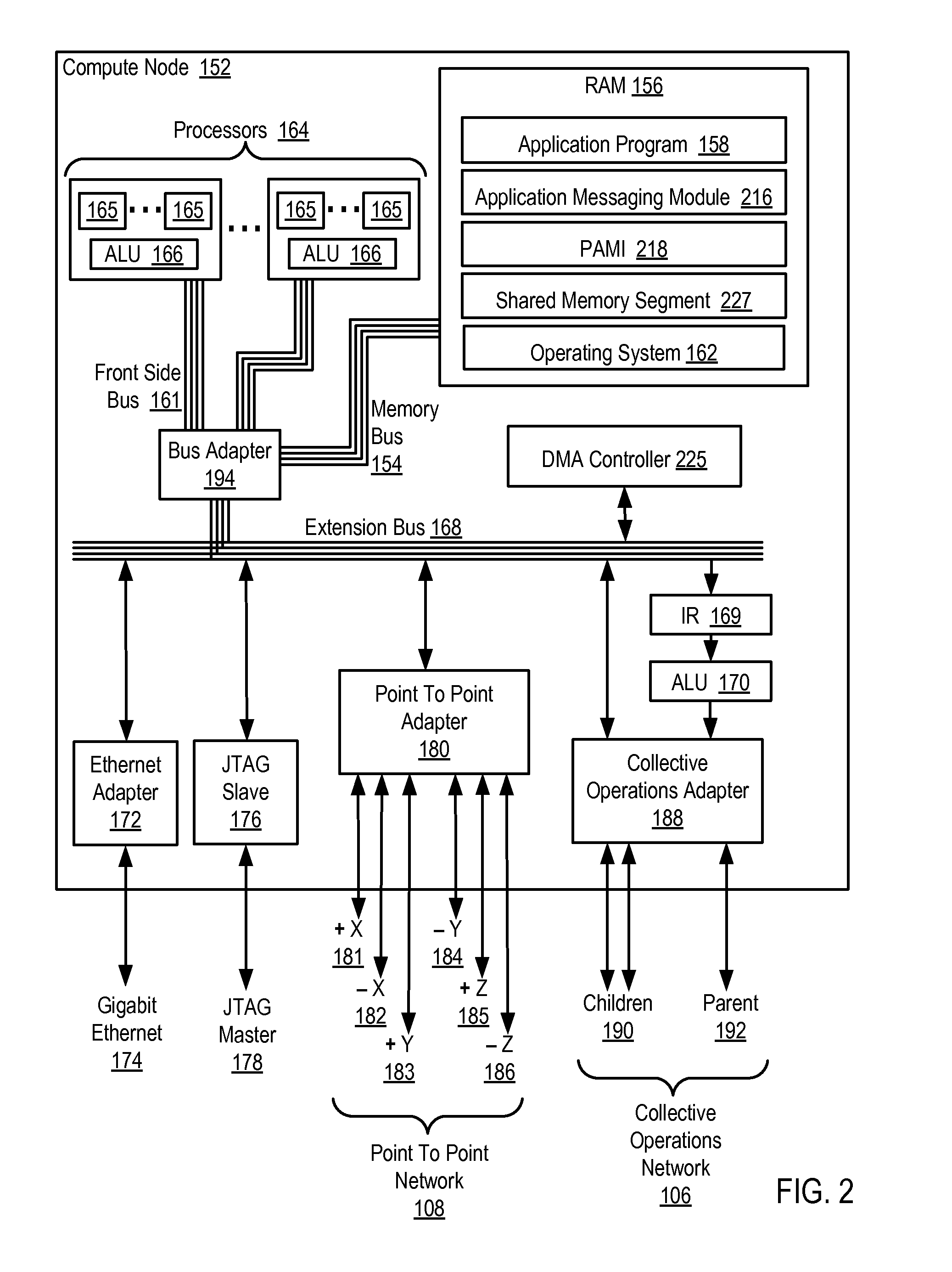

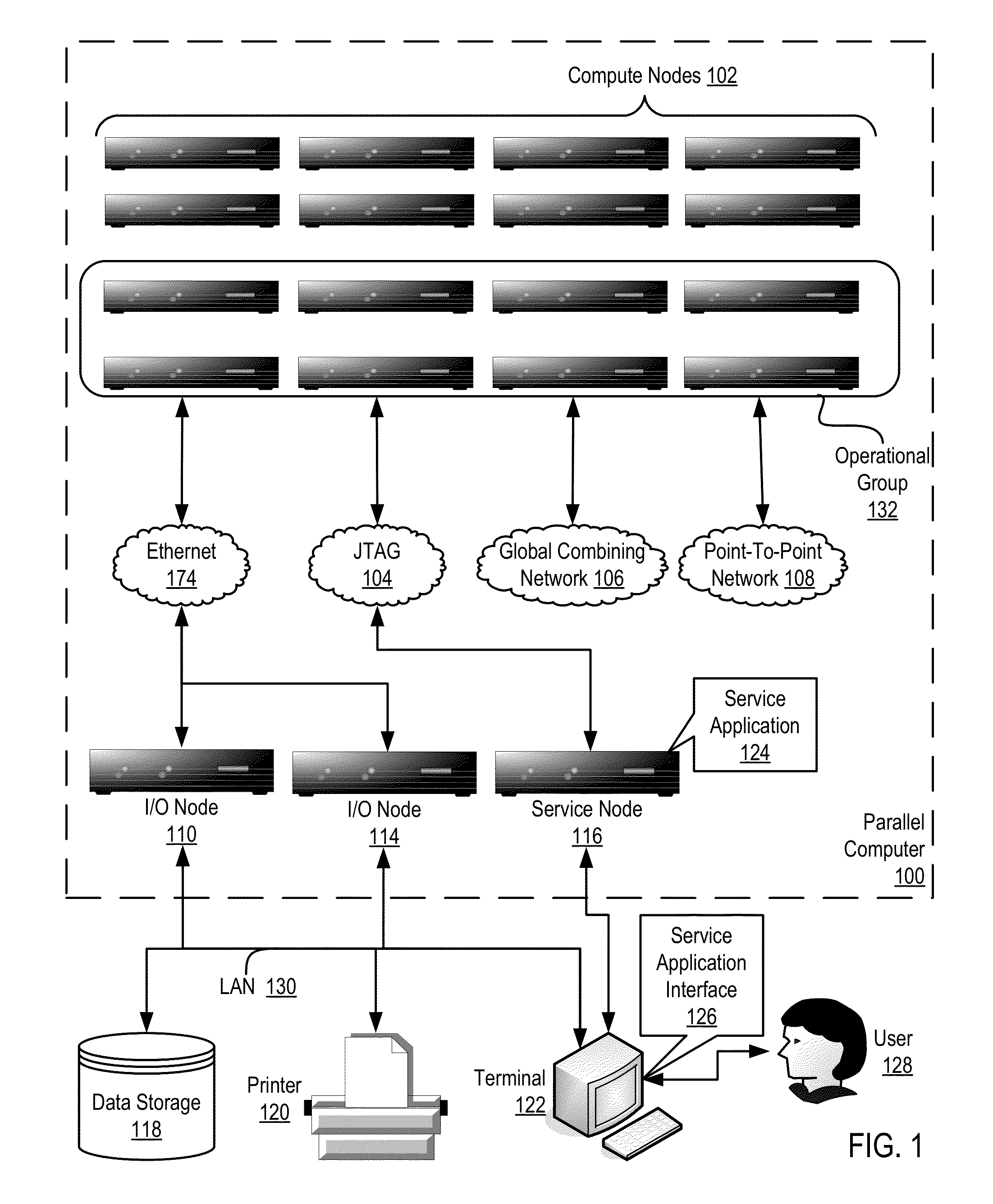

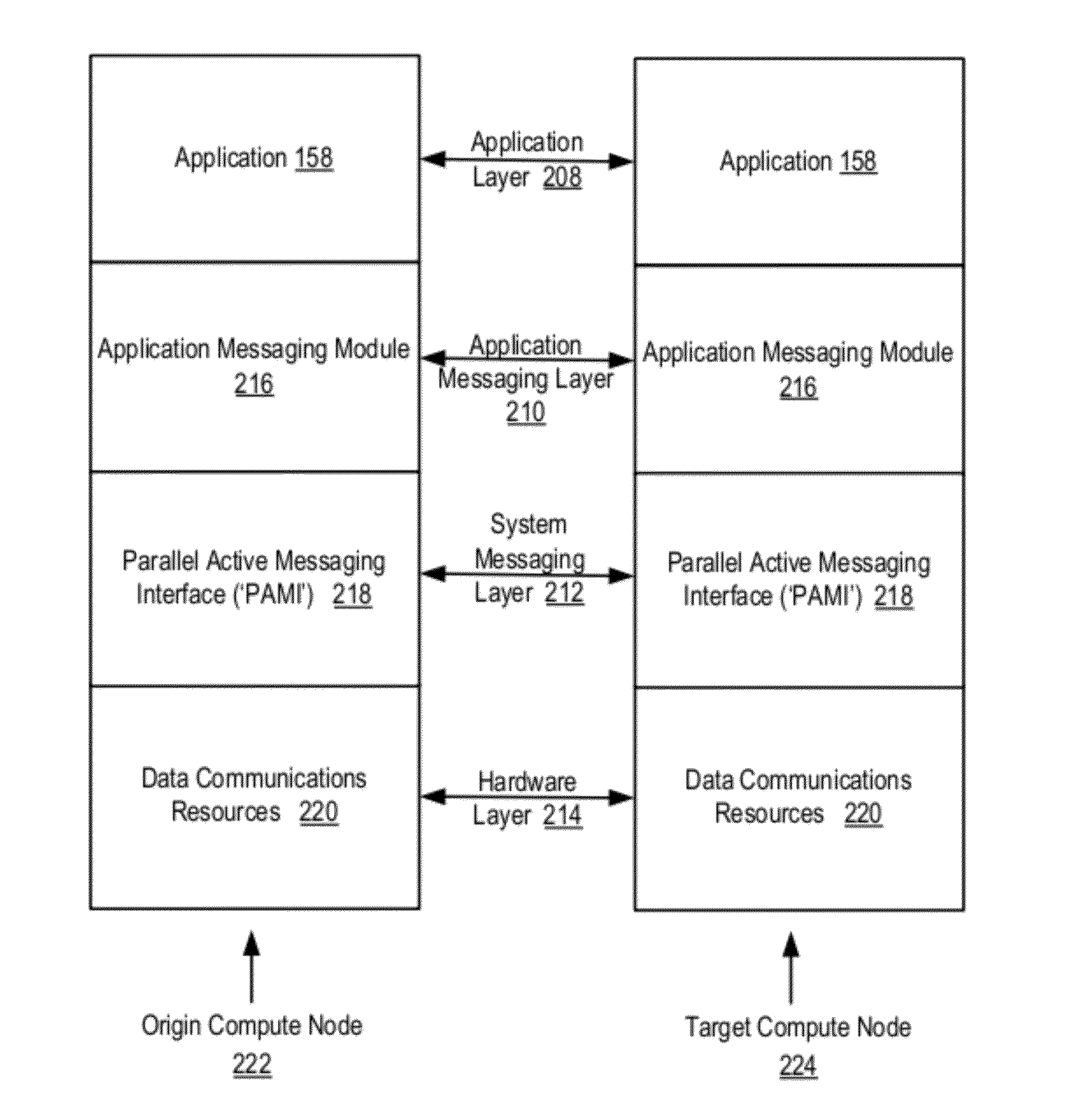

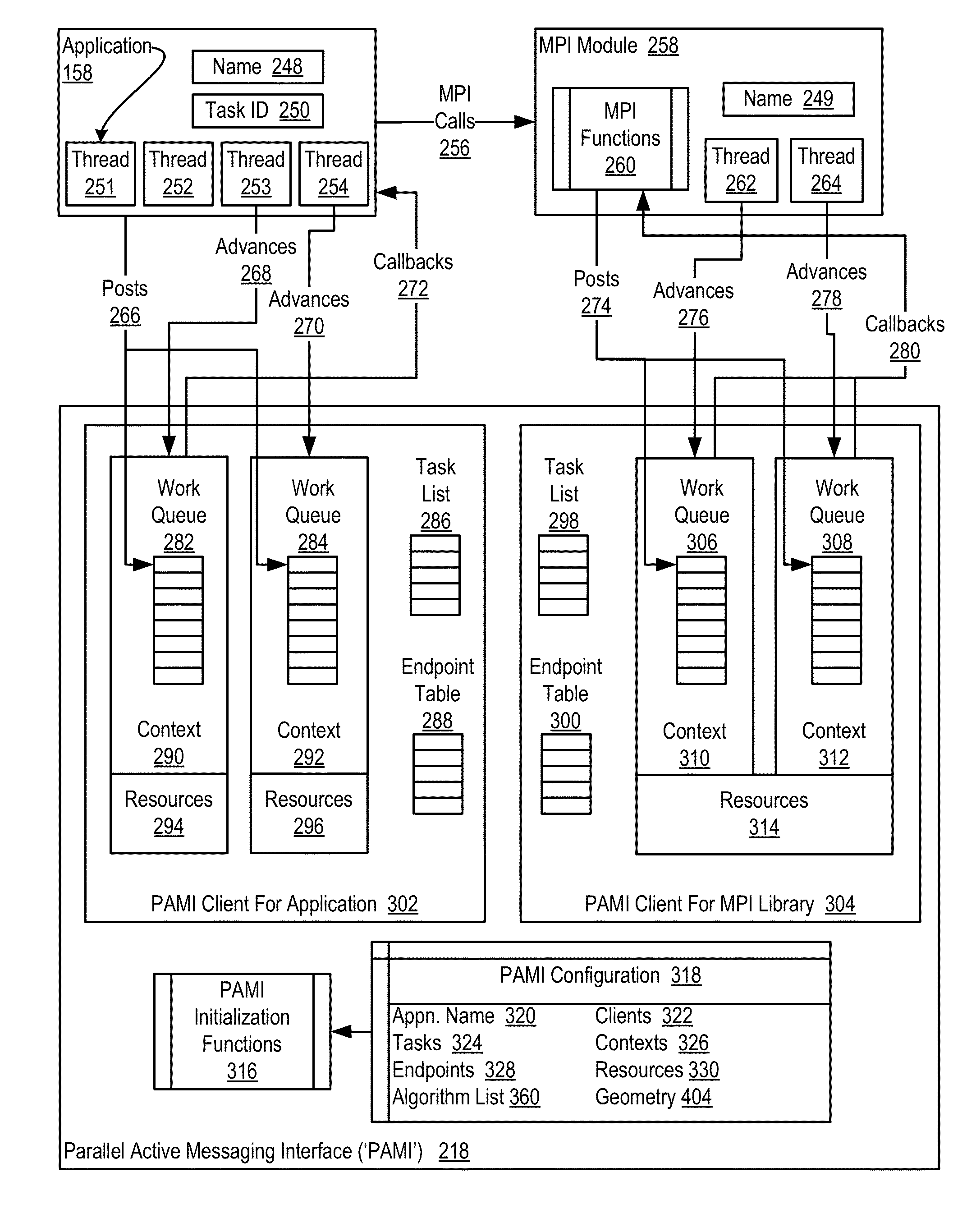

Endpoint-Based Parallel Data Processing With Non-Blocking Collective Instructions In A Parallel Active Messaging Interface Of A Parallel Computer

Inactive | US20120185679A1 | Interprogram communication | Digital computer details | Communication endpoint | Concurrent computation

Endpoint-based parallel data processing with non-blocking collective instructions in a parallel active messaging interface (‘PAMI’) of a parallel computer, the PAMI composed of data communications endpoints, each endpoint including a specification of data communications parameters for a thread of execution on a compute node, including specifications of a client, a context, and a task, the compute nodes coupled for data communications through the PAMI, including establishing by the parallel application a data communications geometry, the geometry specifying a set of endpoints that are used in collective operations of the PAMI, including associating with the geometry a list of collective algorithms valid for use with the endpoints of the geometry; registering in each endpoint in the geometry a dispatch callback function for a collective operation; and executing without blocking, through a single one of the endpoints in the geometry, an instruction for the collective operation.

Owner:IBM CORP

Endpoint-Based Parallel Data Processing In A Parallel Active Messaging Interface Of A Parallel Computer

Inactive | US20120254344A1 | Multiple digital computer combinations | Program control | Communication endpoint | Application software

Endpoint-based parallel data processing in a parallel active messaging interface (‘PAMI’) of a parallel computer, the PAMI composed of data communications endpoints, each endpoint including a specification of data communications parameters for a thread of execution on a compute node, including specifications of a client, a context, and a task, the compute nodes coupled for data communications through the PAMI, including establishing a data communications geometry, the geometry specifying, for tasks representing processes of execution of the parallel application, a set of endpoints that are used in collective operations of the PAMI including a plurality of endpoints for one of the tasks; receiving in endpoints of the geometry an instruction for a collective operation; and executing the instruction for a collective operation through the endpoints in dependence upon the geometry, including dividing data communications operations among the plurality of endpoints for one of the tasks.

Owner:IBM CORP

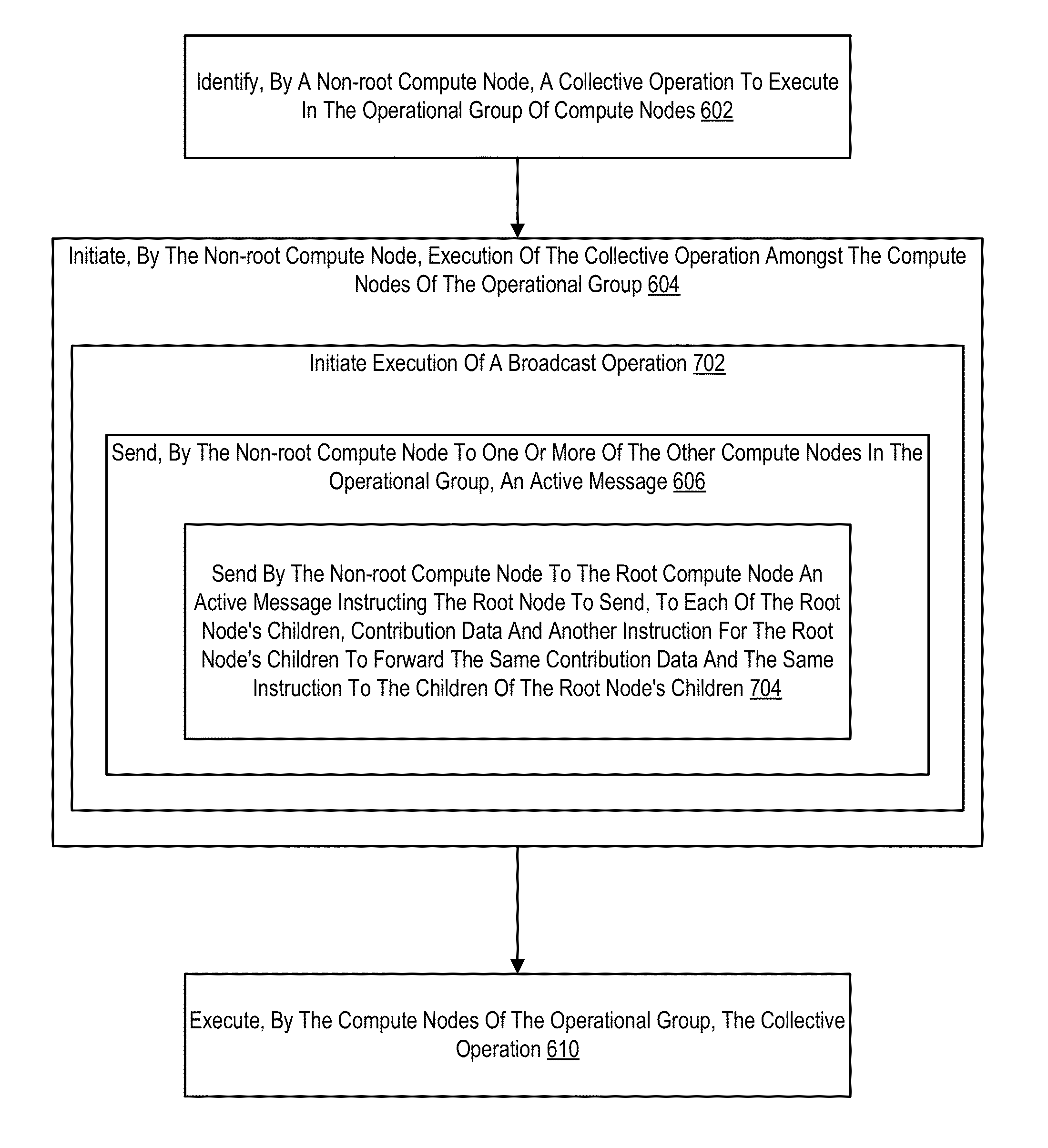

Initiating A Collective Operation In A Parallel Computer

Inactive | US20130212145A1 | Multiple digital computer combinations | Program control | Active message | Collective operation

Initiating a collective operation in a parallel computer that includes compute nodes coupled for data communications and organized in an operational group for collective operations with one compute node assigned as a root node, including: identifying, by a non-root compute node, a collective operation to execute in the operational group of compute nodes; initiating, by the non-root compute node, execution of the collective operation amongst the compute nodes of the operational group including: sending, by the non-root compute node to one or more of the other compute nodes in the operational group, an active message, the active message including information configured to initiate execution of the collective operation amongst the compute nodes of the operational group; and executing, by the compute nodes of the operational group, the collective operation.

Owner:IBM CORP

Data Communications For A Collective Operation In A Parallel Active Messaging Interface Of A Parallel Computer

Inactive | US20120144401A1 | Interprogram communication | Digital computer details | Communication endpoint | Client-side

Algorithm selection for data communications in a parallel active messaging interface (‘PAMI’) of a parallel computer, the PAMI composed of data communications endpoints, each endpoint including specifications of a client, a context, and a task, endpoints coupled for data communications through the PAMI, including associating in the PAMI data communications algorithms and bit masks; receiving in an origin endpoint of the PAMI a collective instruction, the instruction specifying transmission of a data communications message from the origin endpoint to a target endpoint; constructing a bit mask for the received collective instruction; selecting, from among the associated algorithms and bit masks, a data communications algorithm in dependence upon the constructed bit mask; and executing the collective instruction, transmitting, according to the selected data communications algorithm from the origin endpoint to the target endpoint, the data communications message.

Owner:IBM CORP
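
The bit-mask-driven algorithm selection in the abstract above can be sketched with a lookup table keyed by mask. Everything specific here is hypothetical: the two flags, the size threshold, and the algorithm names are invented for illustration and are not taken from PAMI.

```python
LARGE = 0b01        # hypothetical flag: message at or above the size threshold
CONTIGUOUS = 0b10   # hypothetical flag: data is contiguous in memory

# Assumed association of bit masks with data communications algorithms.
ALGORITHMS = {
    0b00: "eager_packed",
    LARGE: "rendezvous_packed",
    CONTIGUOUS: "eager_direct",
    LARGE | CONTIGUOUS: "rendezvous_direct",
}


def build_mask(message_bytes: int, contiguous: bool, threshold: int = 1024) -> int:
    """Construct a bit mask describing the received collective instruction."""
    mask = 0
    if message_bytes >= threshold:
        mask |= LARGE
    if contiguous:
        mask |= CONTIGUOUS
    return mask


def select_algorithm(mask: int) -> str:
    """Pick the algorithm associated with the constructed bit mask."""
    return ALGORITHMS[mask]
```

Encoding the instruction's properties as bits turns algorithm selection into a single table lookup rather than a chain of conditionals.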

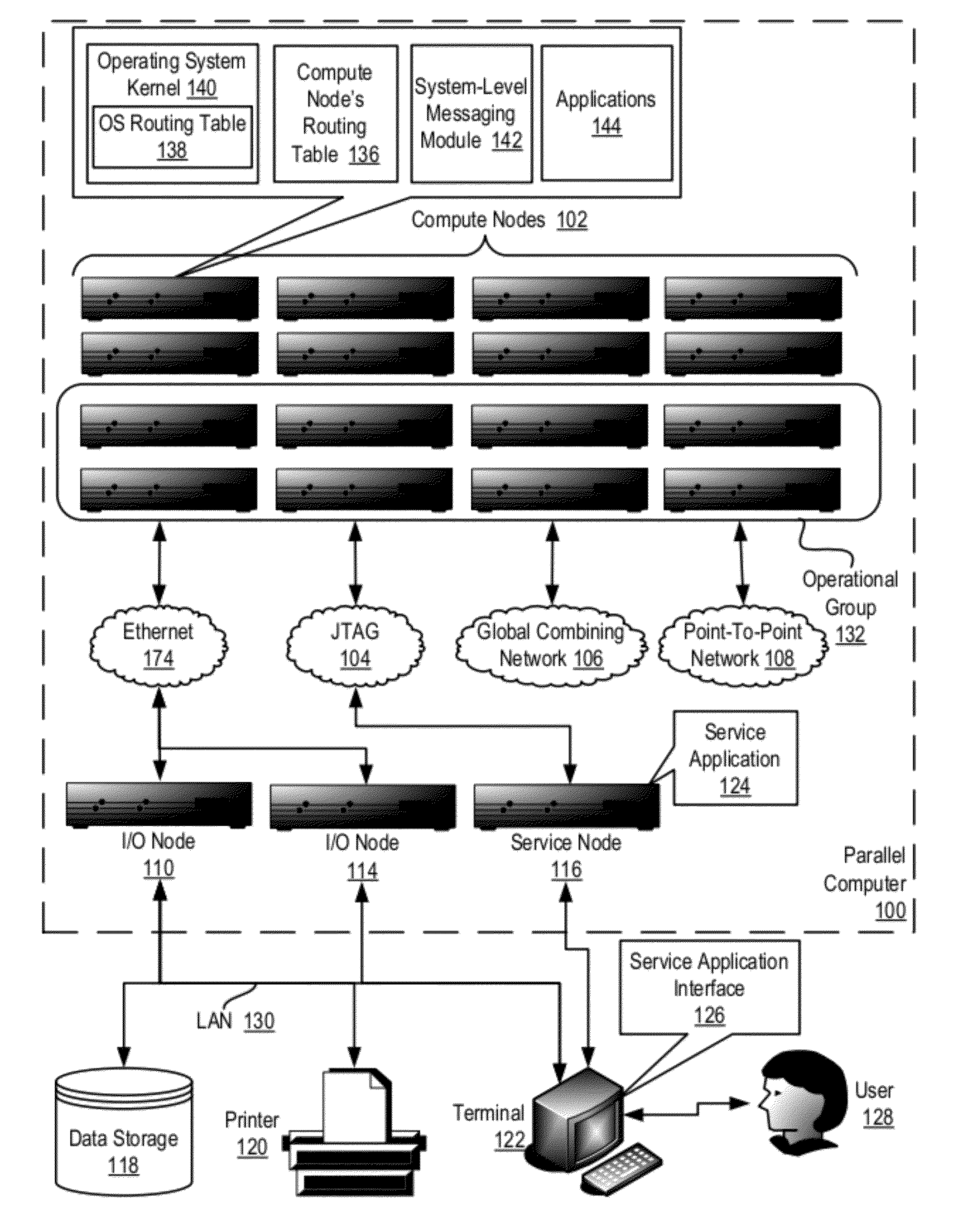

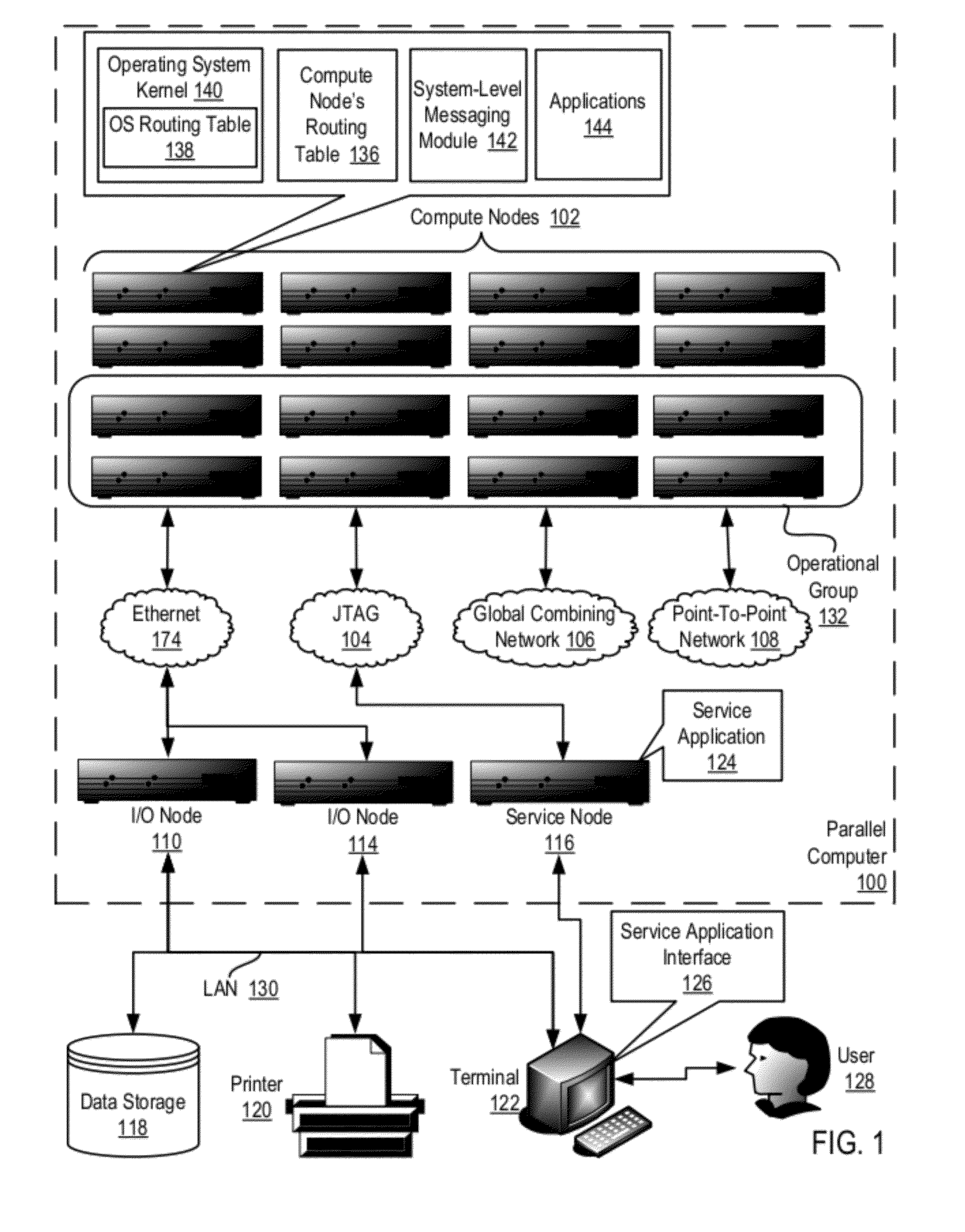

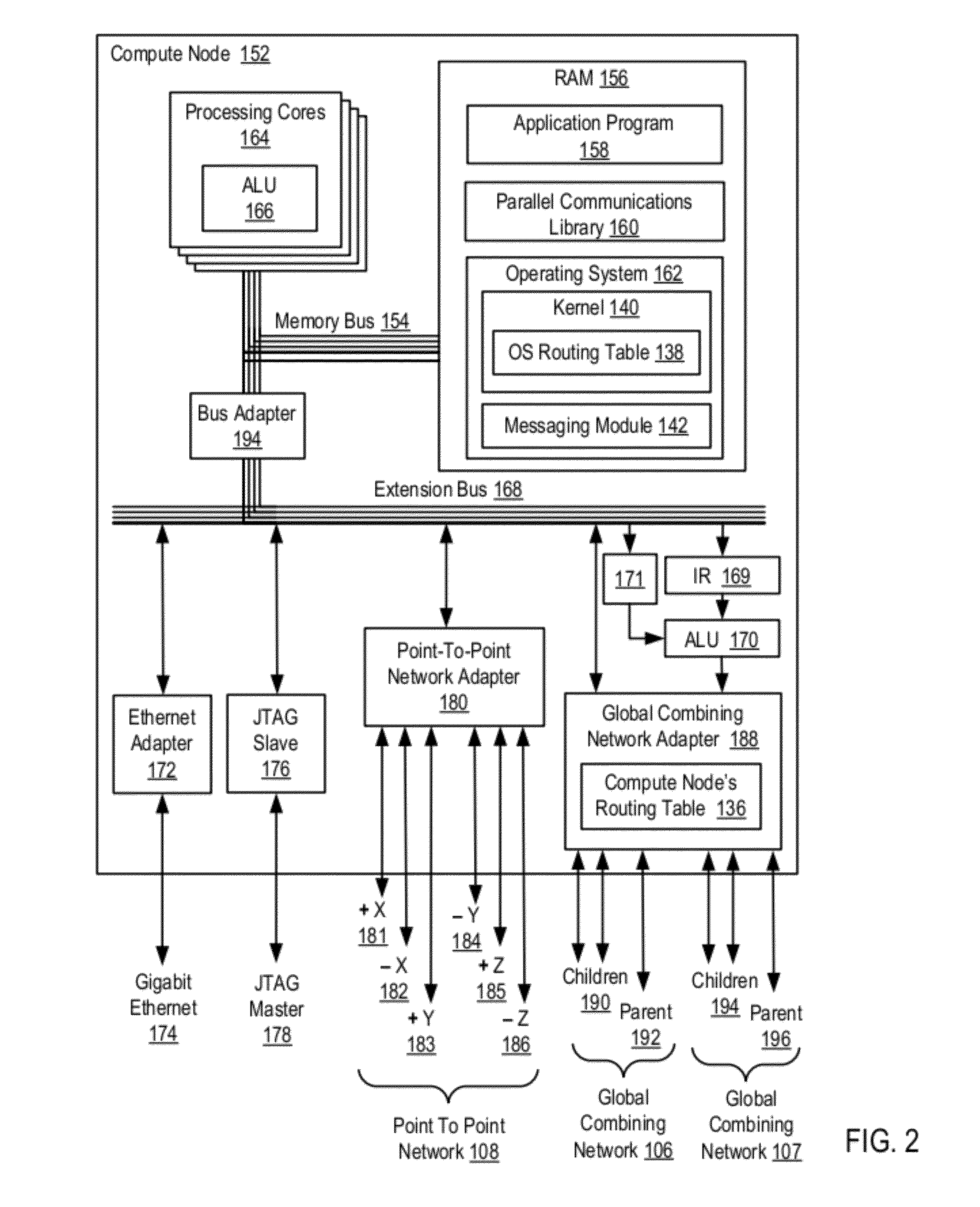

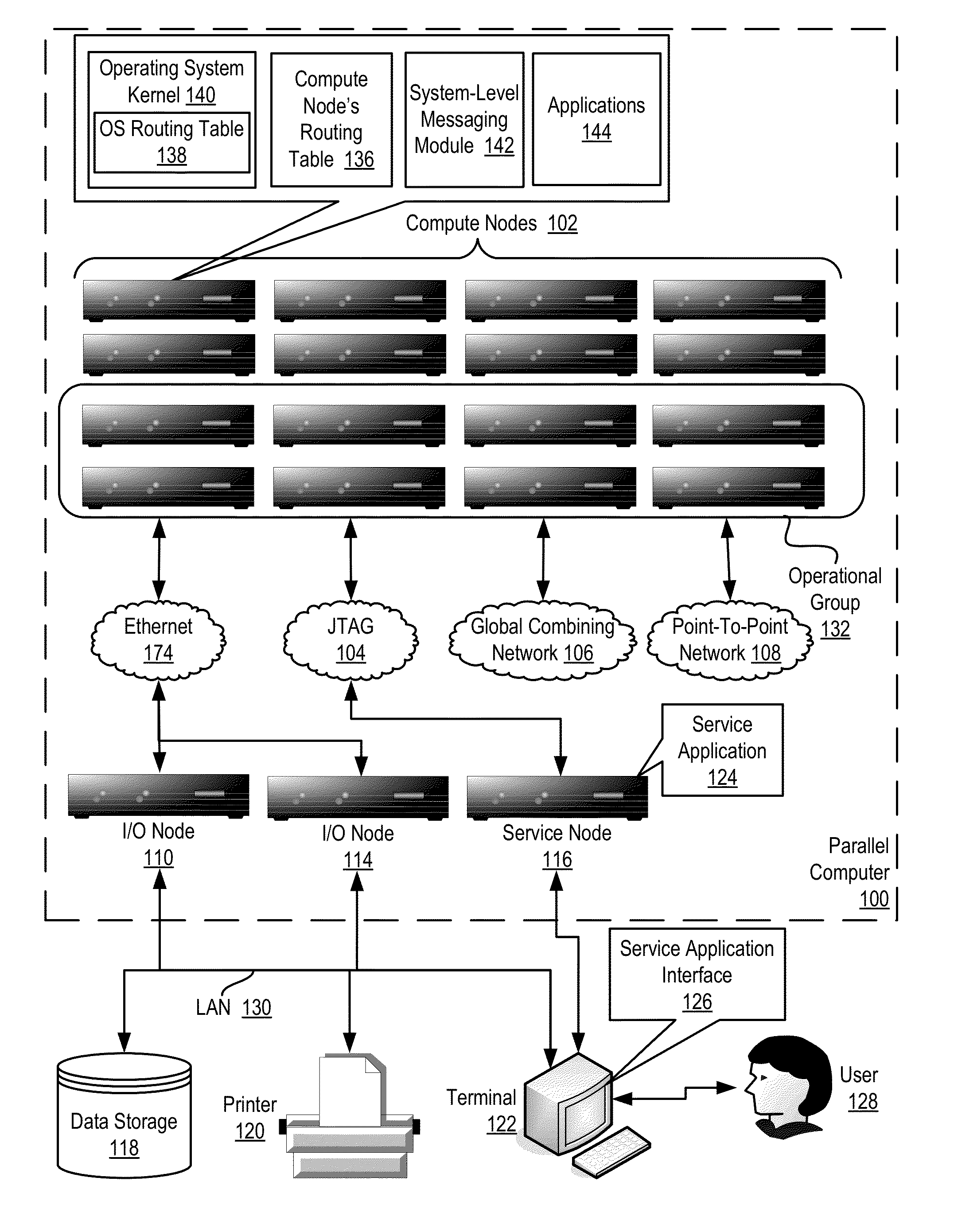

Routing Data Communications Packets In A Parallel Computer

Inactive | US20120079133A1 | Multiple digital computer combinations | Program control | Operational system | Routing table

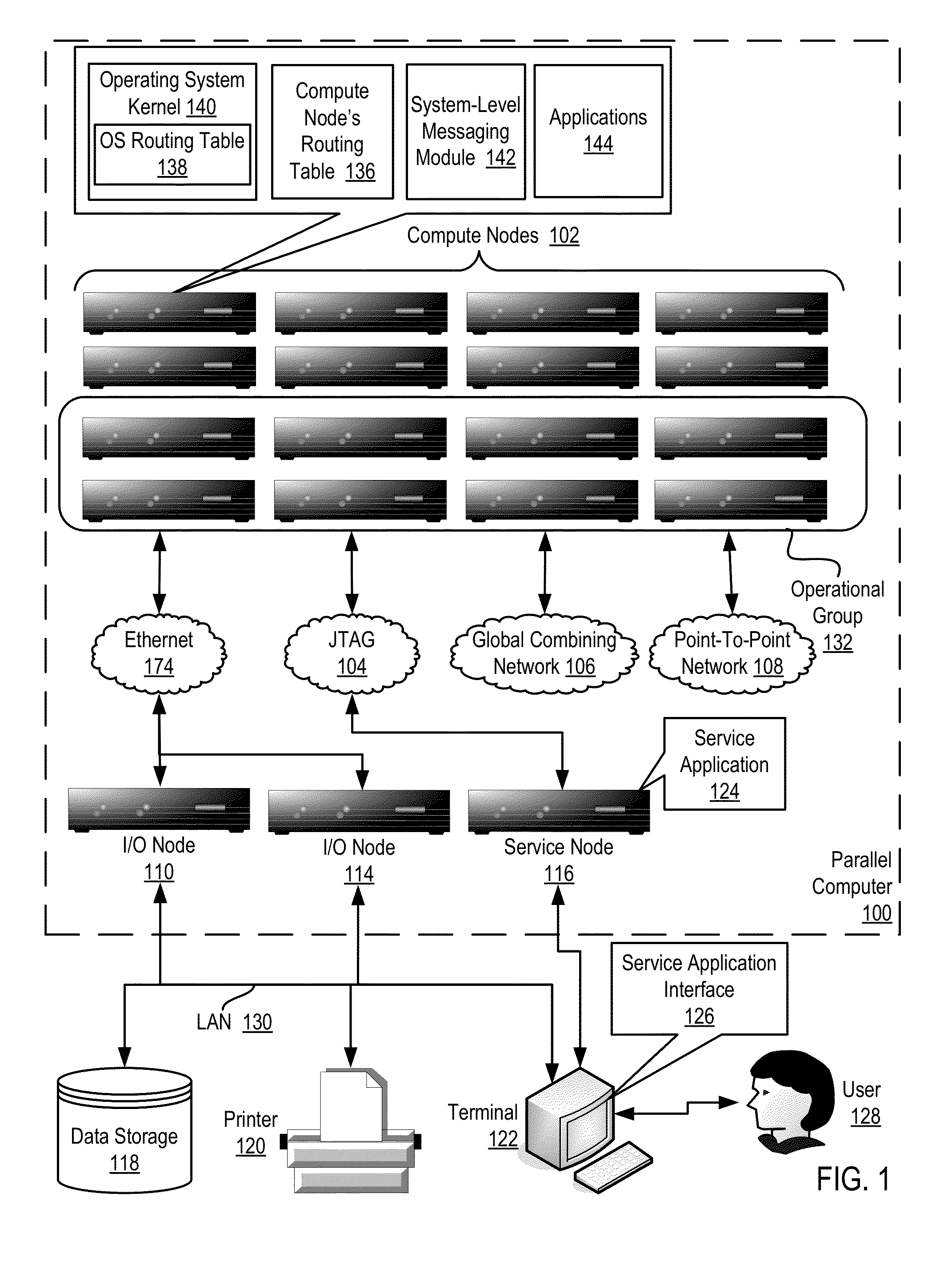

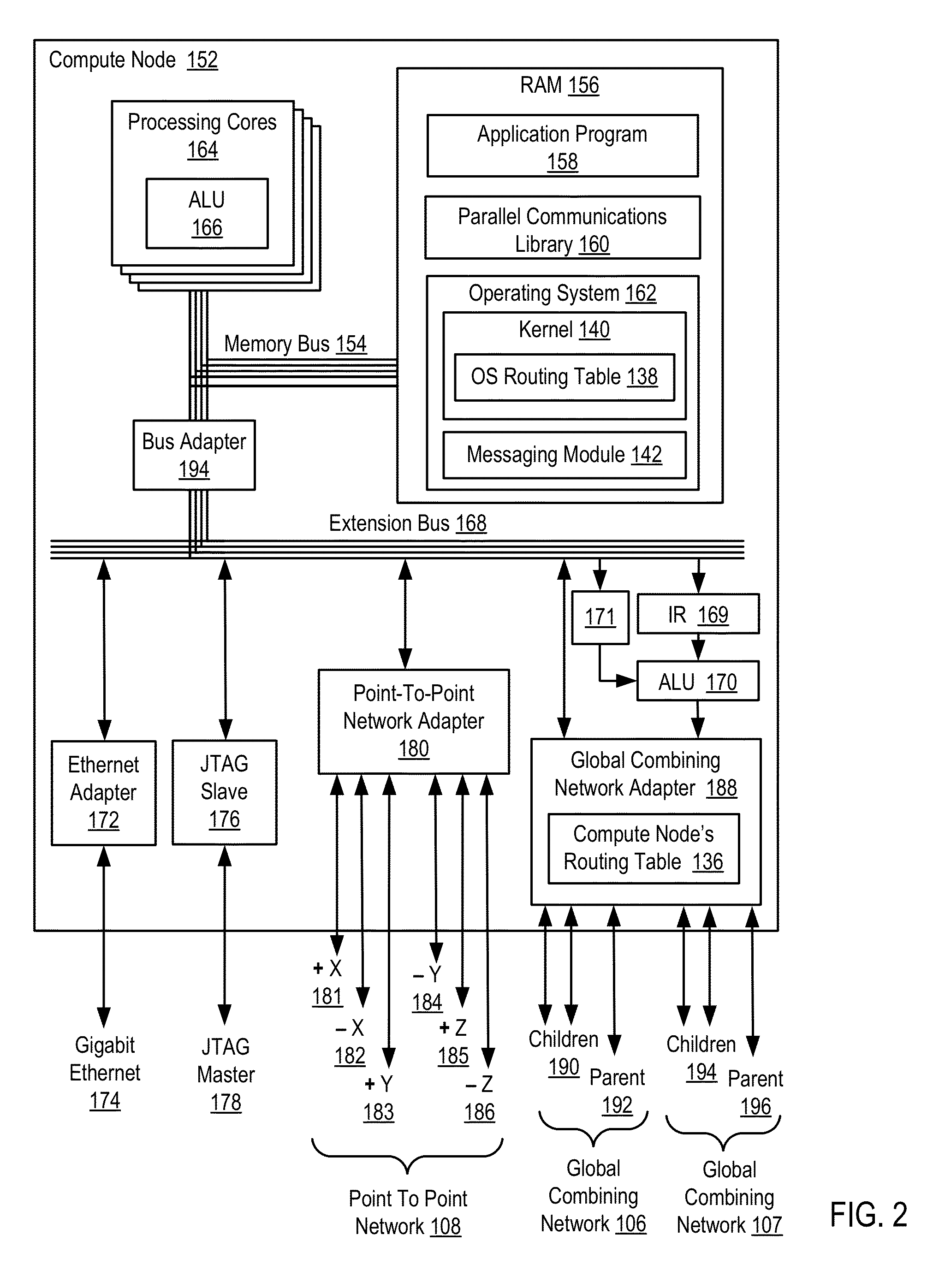

Routing data communications packets in a parallel computer that includes compute nodes organized for collective operations, each compute node including an operating system kernel and a system-level messaging module that is a module of automated computing machinery that exposes a messaging interface to applications, each compute node including a routing table that specifies, for each of a multiplicity of route identifiers, a data communications path through the compute node, including: receiving in a compute node a data communications packet that includes a route identifier value; retrieving from the routing table a specification of a data communications path through the compute node; and routing, by the compute node, the data communications packet according to the data communications path identified by the compute node's routing table entry for the data communications packet's route identifier value.

Owner:IBM CORP

Routing data communications packets in a parallel computer

Routing data communications packets in a parallel computer that includes compute nodes organized for collective operations, each compute node including an operating system kernel and a system-level messaging module that is a module of automated computing machinery that exposes a messaging interface to applications, each compute node including a routing table that specifies, for each of a multiplicity of route identifiers, a data communications path through the compute node, including: receiving in a compute node a data communications packet that includes a route identifier value; retrieving from the routing table a specification of a data communications path through the compute node; and routing, by the compute node, the data communications packet according to the data communications path identified by the compute node's routing table entry for the data communications packet's route identifier value.

Owner:INT BUSINESS MASCH CORP

Performing collective operations using software setup and partial software execution at leaf nodes in a multi-tiered full-graph interconnect architecture

Inactive | US7958183B2 | Improve communication performance | Highly-configurable | General purpose stored program computer | Multiple digital computer combinations | Data processing system | Collective operation

A mechanism for performing collective operations. In software executing on a parent processor in a first processor book, a number of other processors are determined in a same or different processor book of the data processing system that is needed to execute the collective operation, thereby establishing a plurality of processors comprising the parent processor and the other processors. In software executing on the parent processor, the plurality of processors are logically arranged as a plurality of nodes in a hierarchical structure. The collective operation is transmitted to the plurality of processors based on the hierarchical structure. In hardware of the parent processor, results are received from the execution of the collective operation from the other processors, a final result is generated of the collective operation based on the received results, and the final result is output.

Owner:IBM CORP

Endpoint-based parallel data processing with non-blocking collective instructions in a parallel active messaging interface of a parallel computer

Endpoint-based parallel data processing with non-blocking collective instructions in a parallel active messaging interface (‘PAMI’) of a parallel computer, the PAMI composed of data communications endpoints, each endpoint including a specification of data communications parameters for a thread of execution on a compute node, including specifications of a client, a context, and a task, the compute nodes coupled for data communications through the PAMI, including establishing by the parallel application a data communications geometry, the geometry specifying a set of endpoints that are used in collective operations of the PAMI, including associating with the geometry a list of collective algorithms valid for use with the endpoints of the geometry; registering in each endpoint in the geometry a dispatch callback function for a collective operation; and executing without blocking, through a single one of the endpoints in the geometry, an instruction for the collective operation.

Owner:INT BUSINESS MASCH CORP
