A Shared Data Dynamic Update Method for Data Conflict-Free Programs

A technique for dynamically updating shared data, applicable to inter-program communication, multiprogramming arrangements, and program control design. It addresses problems such as the lack of natural support for snooping protocols, the difficulty of verifying the correctness of directory protocols, and reduced protocol performance. Its effects are efficient, automatic dynamic update and invalidation of shared data, elimination of invalidation messages, and reduced network and area overhead.

Embodiment Construction

[0032] The present invention will be further described below in conjunction with the accompanying drawings and specific preferred embodiments, but the protection scope of the present invention is not limited thereby.

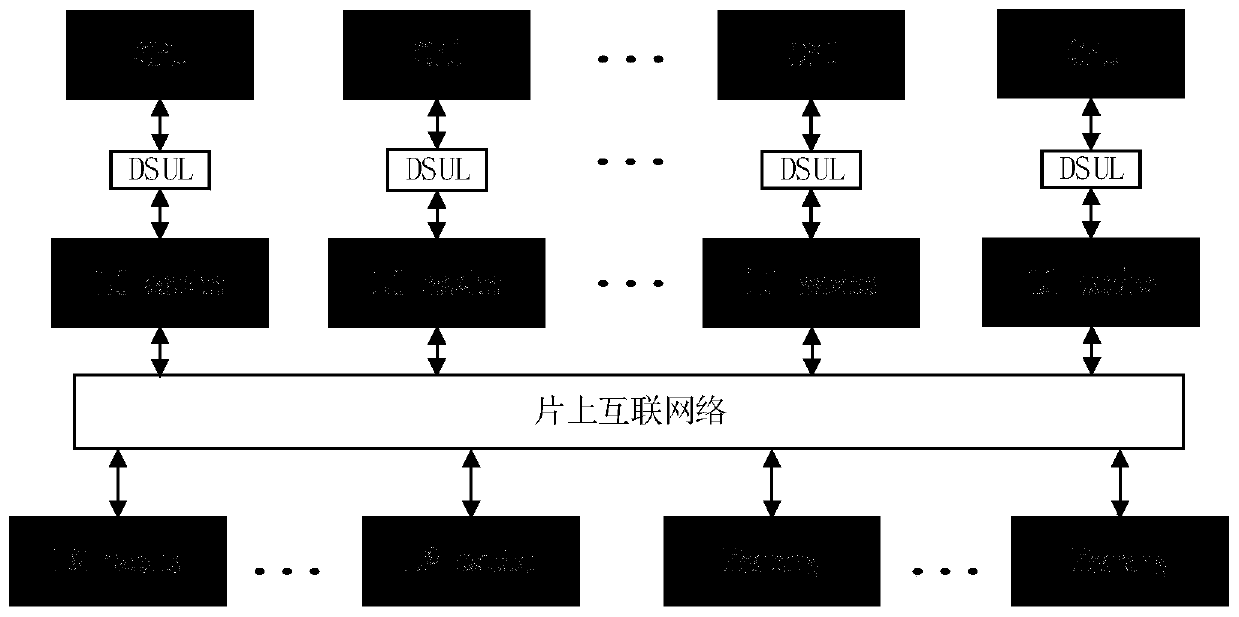

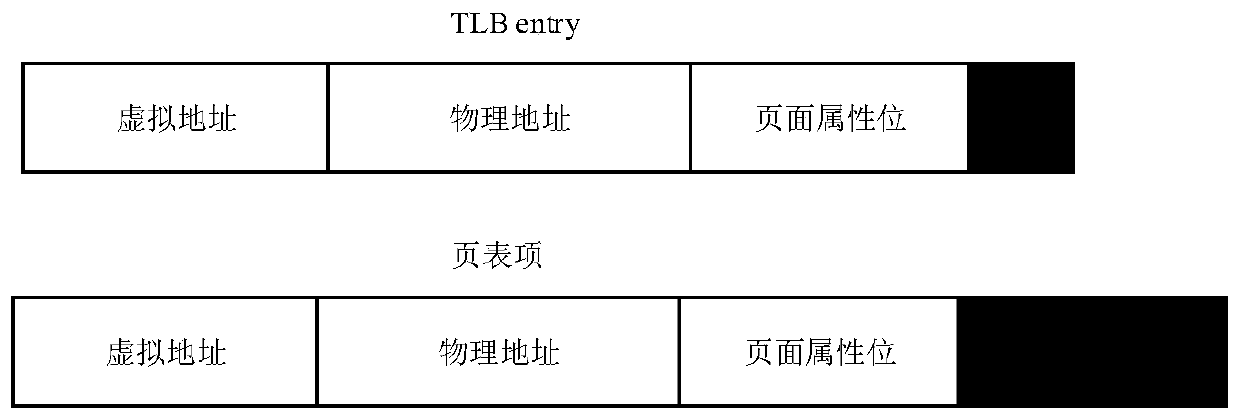

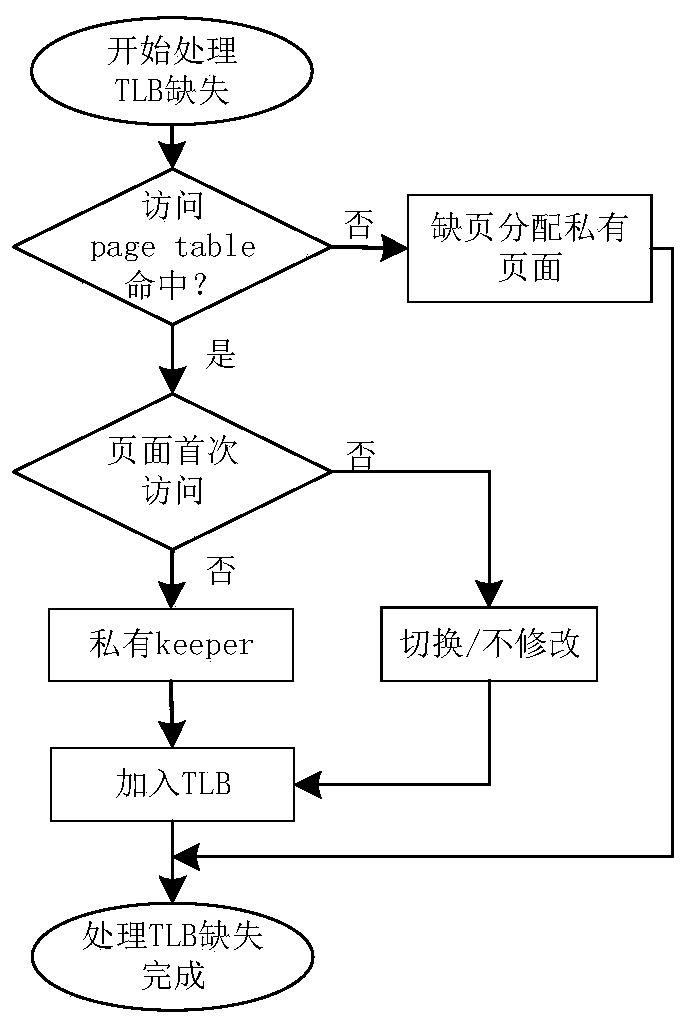

[0033] As shown in Figures 1 to 4, the shared-data dynamic update method for data-conflict-free programs in this embodiment works as follows. During execution of a data-conflict-free parallel program, when the CPU executes memory access instructions, requests for shared data are identified and the access history of the shared data is collected. At a synchronization point, the cache controller performs a dynamic update or an invalidation operation on the expired shared data in the local cache according to the collected access history: the dynamic update operation is performed on shared data judged to be of the first type, and an invalidation operation is performed on the s...
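The flow in [0033] can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented hardware design: the names `CacheLine`, `LocalCache`, `load`, and `sync_point` are hypothetical; every valid shared line is treated as potentially expired at a synchronization point; the access history is reduced to a simple access counter; and the rule for judging a line to be of the first type is left as a caller-supplied predicate, because the excerpt does not state it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class CacheLine:
    value: int
    shared: bool = False   # set when the request was identified as shared data
    valid: bool = True
    accesses: int = 0      # assumed form of the "access history": a counter


@dataclass
class LocalCache:
    memory: Dict[int, int]                       # stands in for the shared level
    is_first_type: Callable[[CacheLine], bool]   # assumed classification rule
    lines: Dict[int, CacheLine] = field(default_factory=dict)

    def load(self, addr: int, shared: bool = False) -> int:
        """Serve a memory access and record history for shared data."""
        line = self.lines.get(addr)
        if line is None or not line.valid:
            # Miss: fetch the current value from the shared level.
            line = CacheLine(value=self.memory[addr], shared=shared)
            self.lines[addr] = line
        if line.shared:
            line.accesses += 1
        return line.value

    def sync_point(self) -> Tuple[List[int], List[int]]:
        """At a synchronization point, update or invalidate shared lines."""
        updated, invalidated = [], []
        for addr, line in self.lines.items():
            if not (line.shared and line.valid):
                continue  # private or already-invalid lines are left alone
            if self.is_first_type(line):
                line.value = self.memory[addr]   # dynamic update in place
                updated.append(addr)
            else:
                line.valid = False               # invalidate; refetch on next use
                invalidated.append(addr)
            line.accesses = 0                    # assumption: history restarts
        return updated, invalidated


if __name__ == "__main__":
    memory = {0x10: 1, 0x20: 2, 0x30: 3}
    # Hypothetical rule: a line accessed at least twice is "first type".
    cache = LocalCache(memory, is_first_type=lambda l: l.accesses >= 2)
    cache.load(0x10, shared=True)
    cache.load(0x10, shared=True)
    cache.load(0x20, shared=True)
    cache.load(0x30)                    # private data, never touched at sync
    memory[0x10], memory[0x20] = 11, 22  # writes by another core
    print(cache.sync_point())
    print(cache.load(0x10, shared=True), cache.load(0x20, shared=True))
```

In this model the local controller acts on its own lines at the synchronization point, so no invalidation message from another core is needed; a frequently used line is refreshed ahead of its next use, while a rarely used one is simply dropped and refetched on demand.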
