Deep-learning-based method for acquiring the position and pose of images at city scale

A deep-learning-based technique for acquiring the position and attitude (pose) of images within a city. It addresses the time-consuming RANSAC computation, and has the effect of enriching the location information of pictures and reducing costs.

Embodiment Construction

[0043] The present invention is further described below with reference to the embodiments and the accompanying drawings.

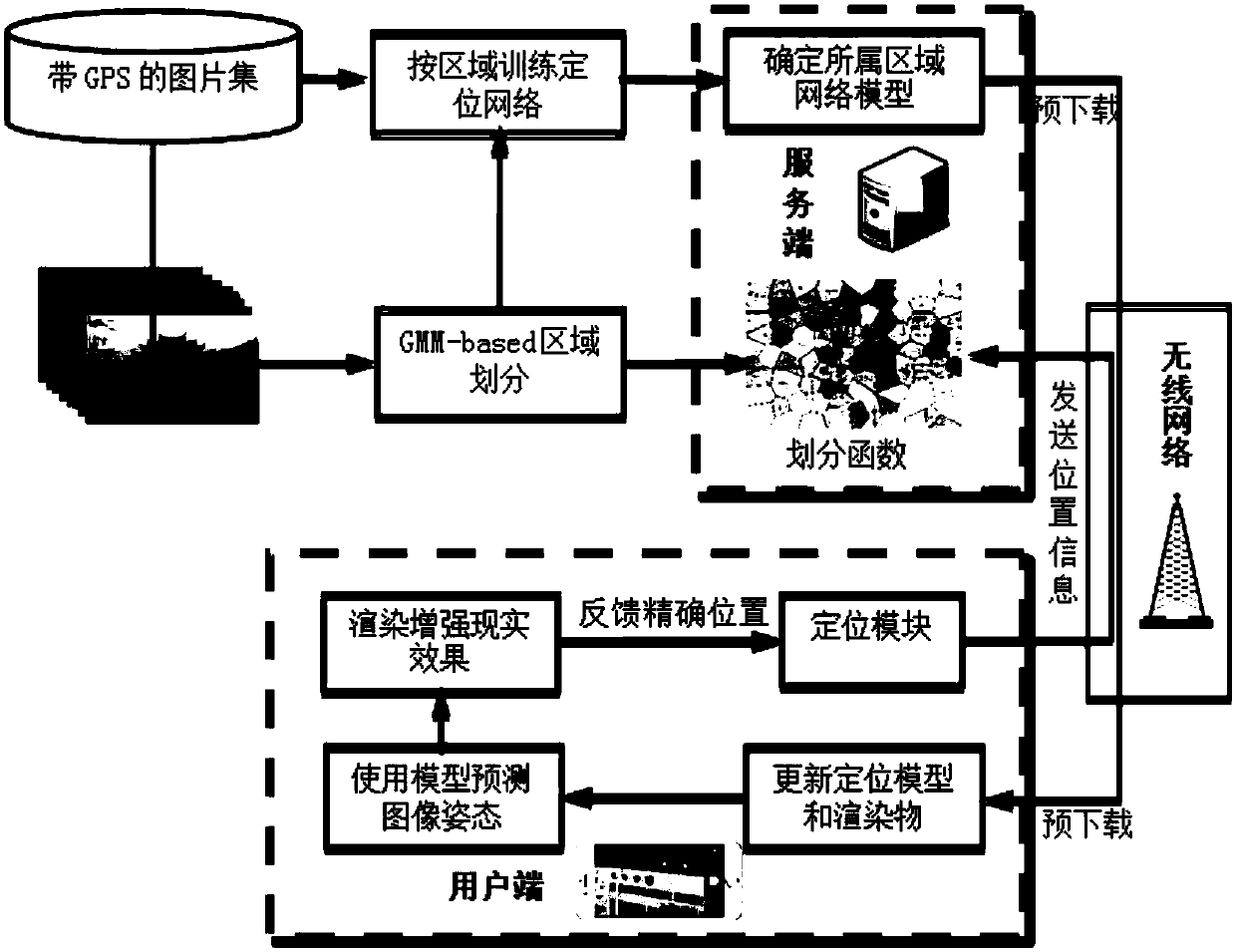

[0044] 1. Overall process design of the invention

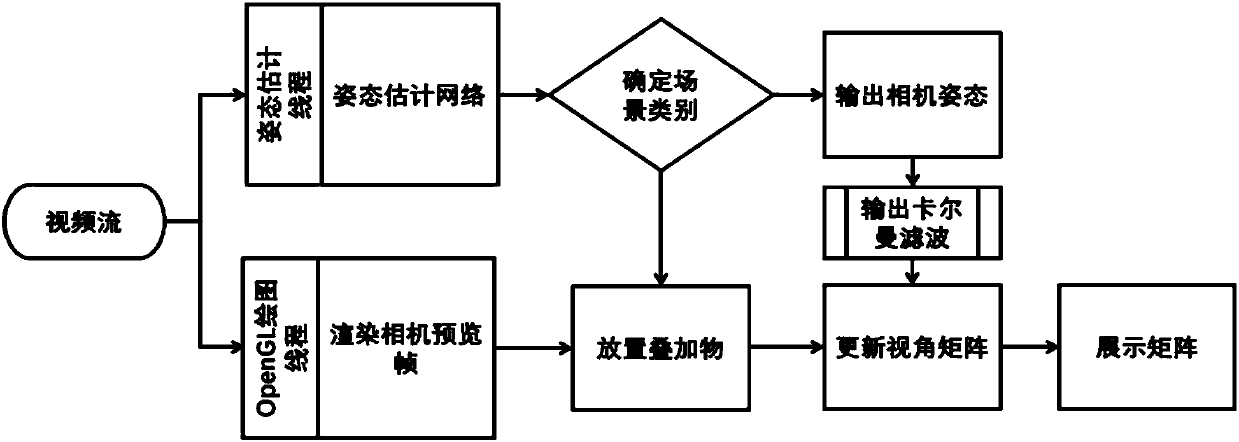

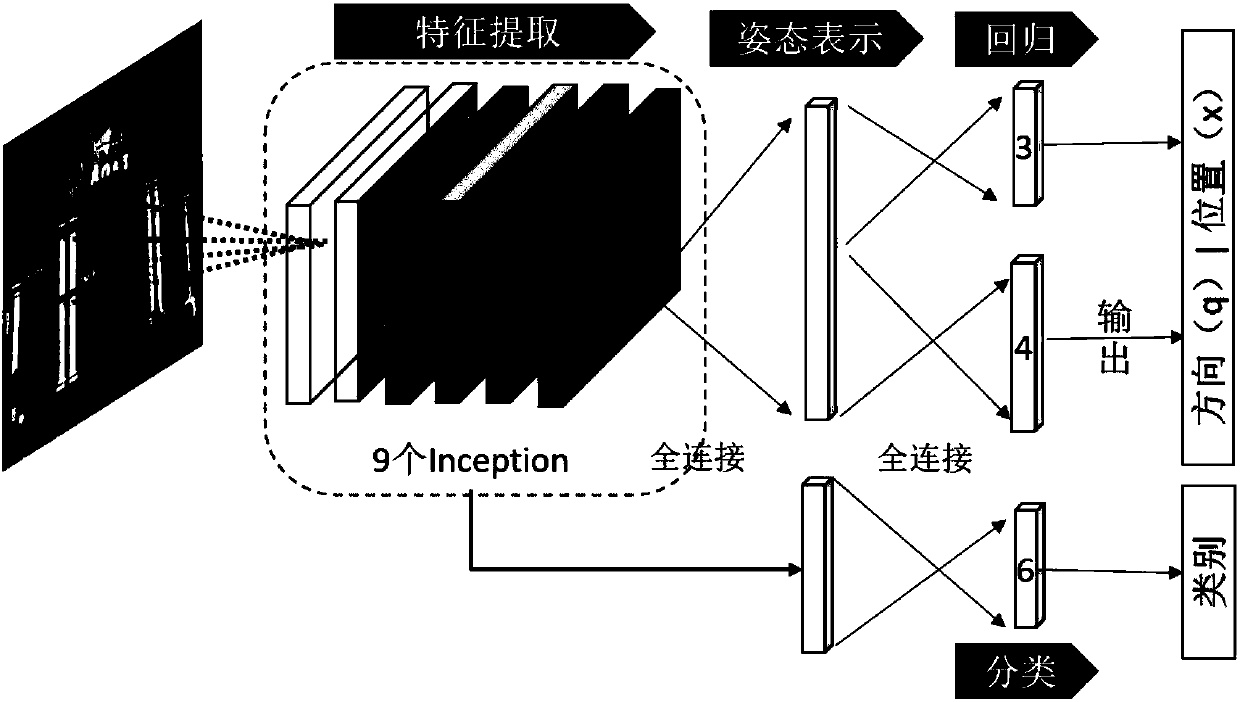

[0045] The present invention designs a deep-learning-based system, implemented on the PC side, for obtaining the position and attitude of images within a city. The framework is shown in Figure 1. The whole system is divided into an offline part and an online part. The offline part runs mainly on the server side: the trained area division learner divides the whole city into sub-areas, and transfer learning is then used to train the pose regression and scene classification networks proposed in Chapter 4 for each sub-area. The online part runs mainly on the mobile client: after the user arrives in a certain area, the client sends its GPS position or the geographical location of the mobile phone base station to the server. The server determines the area (scenario) to which the user belongs acc...
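The server-side lookup described above, which maps a reported coarse location to a sub-area and then selects the networks trained for it, might be sketched as follows. This is only an illustration under stated assumptions: the text does not say how the area division learner partitions the city, so the rectangular latitude/longitude boxes, the `SubArea` class, the `find_sub_area` helper, the model paths, and the coordinates are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class SubArea:
    """Hypothetical rectangular sub-area of the city with its own trained networks."""
    area_id: str
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float
    model_path: str  # hypothetical location of the per-area pose/scene networks

    def contains(self, lat: float, lon: float) -> bool:
        # Half-open bounds so that adjacent boxes never both claim a border point.
        return (self.lat_min <= lat < self.lat_max
                and self.lon_min <= lon < self.lon_max)


def find_sub_area(areas: Sequence[SubArea], lat: float, lon: float) -> Optional[SubArea]:
    """Return the first sub-area containing the coarse location, or None."""
    for area in areas:
        if area.contains(lat, lon):
            return area
    return None


# Made-up sub-areas standing in for the output of the offline division step.
AREAS = [
    SubArea("area_a", 30.50, 30.55, 114.30, 114.35, "models/area_a.pt"),
    SubArea("area_b", 30.50, 30.55, 114.35, 114.40, "models/area_b.pt"),
]

# Coarse position reported by the client (GPS or base-station location).
selected = find_sub_area(AREAS, 30.52, 114.36)
if selected is not None:
    print(f"use networks from {selected.model_path} for {selected.area_id}")
```

A linear scan is enough for a handful of sub-areas. With many sub-areas, or with irregular boundaries produced by a learned division, a spatial index or a classifier would be a more natural choice.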
