Human-computer interaction method and system based on multi-modal emotion and face attribute identification

A technique for face attribute recognition applied to human-computer interaction, intended to improve recognition accuracy and the user's interaction experience.

Embodiment Construction

[0037] Embodiments of the technical solution of the present invention are described in detail below with reference to the accompanying drawings. The following embodiments serve only to illustrate the technical solution more clearly; they are examples and should not be used to limit the scope of protection of the present invention.

[0038] Unless otherwise specified, the technical and scientific terms used in this application have the ordinary meanings understood by those skilled in the art to which the present invention belongs.

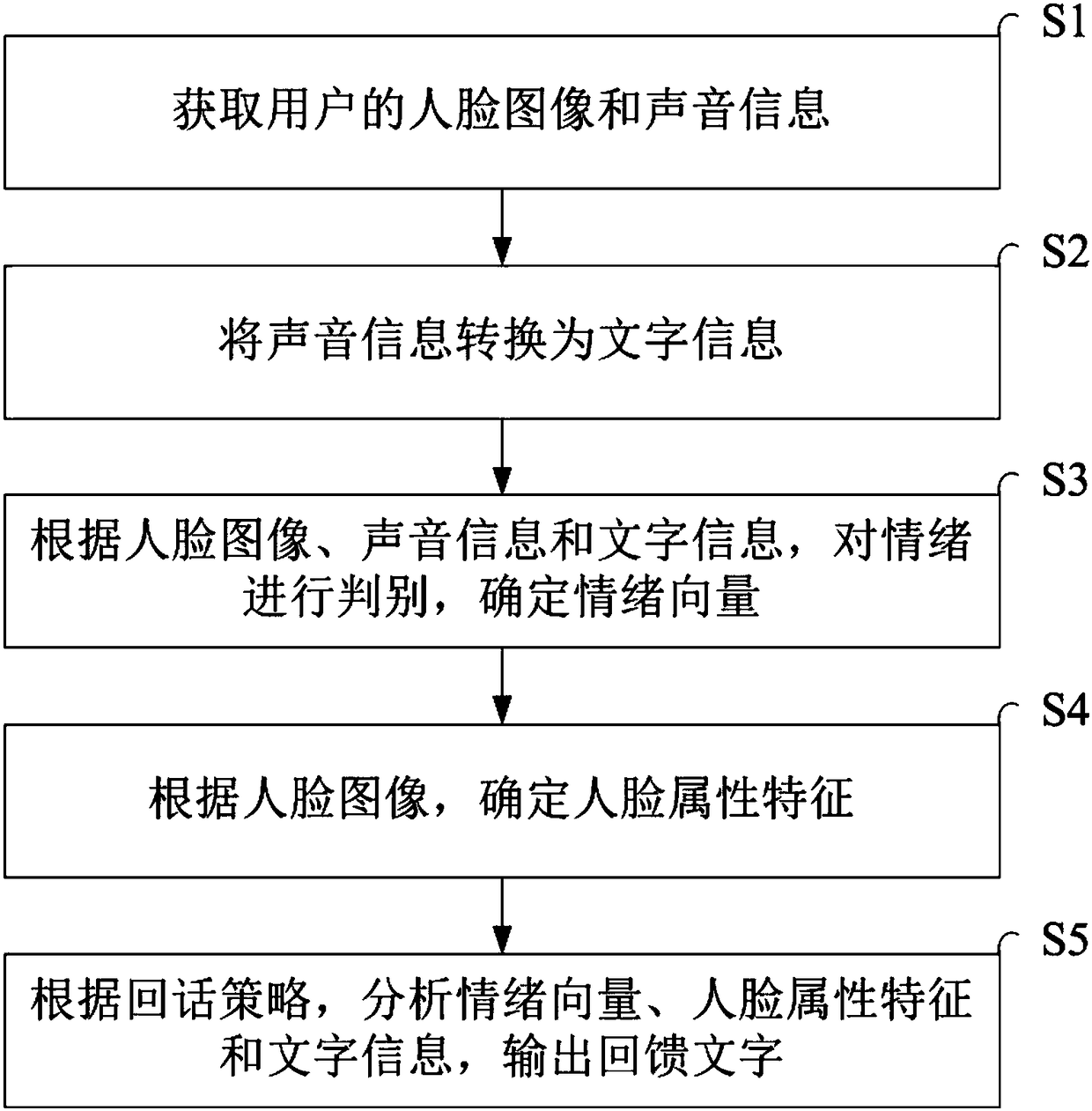

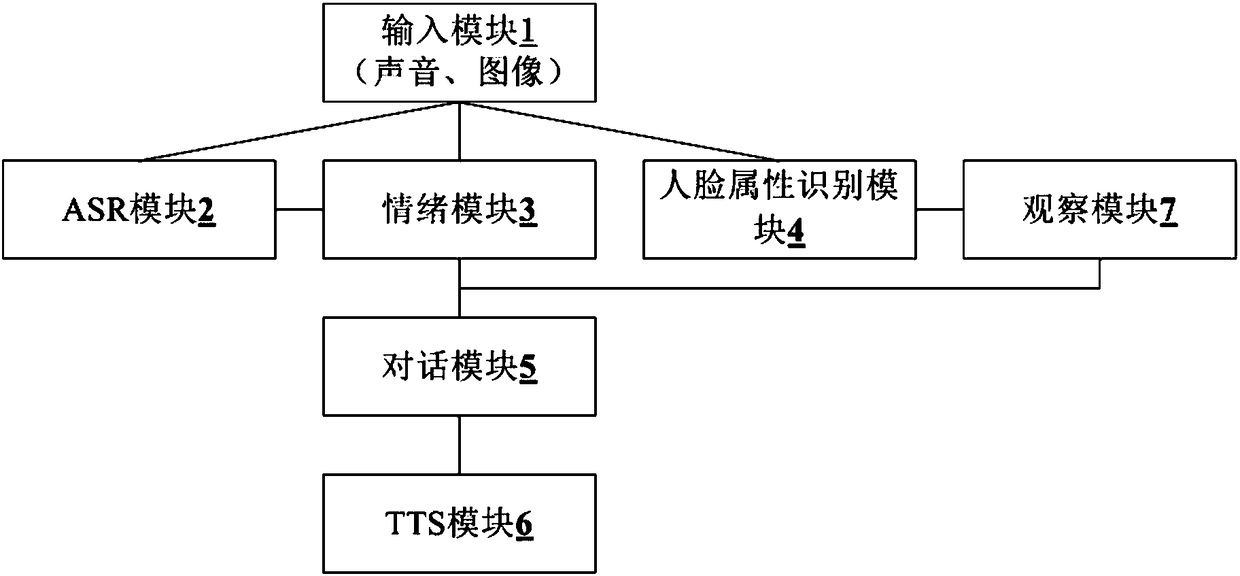

[0039] The human-computer interaction method and system based on multi-modal emotion and face attribute recognition provided by the embodiments of the present invention integrate natural language understanding and speech recognition systems by designing interactive platforms, such as web pages and apps, to target human faces. Multi-modal emotion, face attribute rec...
