A human posture recognition method based on deep learning

A deep-learning-based recognition method, applied in the field of human action and posture recognition. It addresses the problem that RGB images carry too much information, which hinders the extraction of action and posture features and lowers recognition accuracy. Its effects are reduced network complexity, fewer network parameters, and high accuracy.

Embodiment Construction

[0025] The present invention is described below with reference to the accompanying drawing.

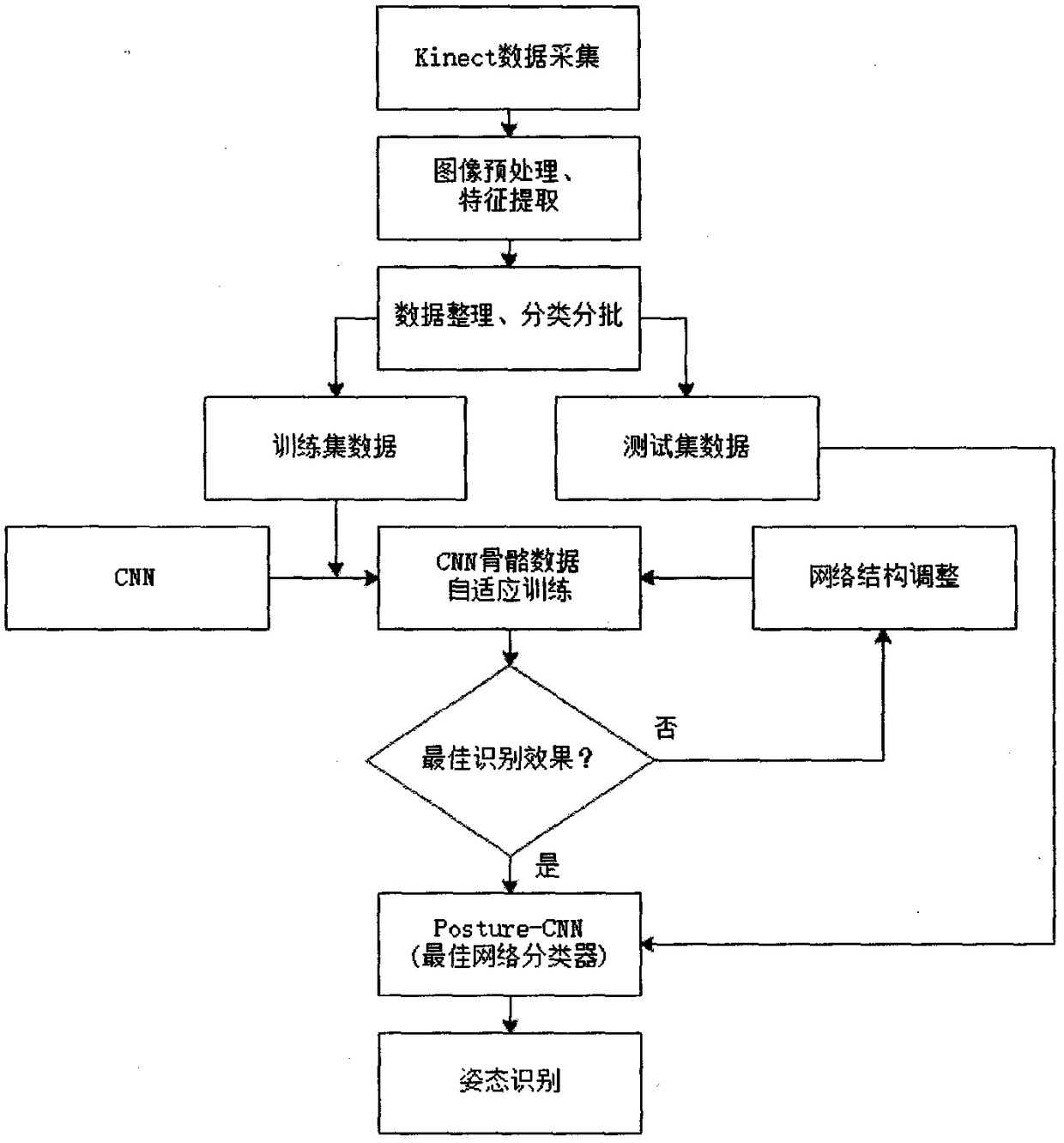

[0026] As shown in the flowchart of Figure 1, the deep-learning-based human action and posture recognition method mainly includes the following steps.

[0027] Step 1: Install the Kinect for Windows SDK on the PC, and fix the Kinect V2.0 depth sensor in a level position at a certain height above the horizontal ground. The effective acquisition area of the Kinect V2.0 is a trapezoidal region within the 70° horizontal field of view in front of the sensor, 0.5 m to 4.5 m away from it. Confirm on the PC display that the Kinect captures most of the human target.
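The acquisition area described in Step 1 can be illustrated with a short geometric check. This is only a sketch under stated assumptions, not part of the patented method: it assumes the 0.5 m to 4.5 m range is measured along the sensor's optical axis (which is what makes the region a trapezoid when viewed from above), and the function names `half_width_at` and `in_acquisition_area` are hypothetical helpers that do not come from the Kinect SDK.

```python
import math

# Values taken from Step 1 of the description.
H_FOV_DEG = 70.0    # horizontal field of view of the sensor
MIN_RANGE_M = 0.5   # nearest usable distance
MAX_RANGE_M = 4.5   # farthest usable distance


def half_width_at(z_m: float) -> float:
    """Half-width of the field of view at depth z_m (metres).

    Assumption: z_m is measured along the optical axis, so the
    field of view widens linearly with depth.
    """
    return z_m * math.tan(math.radians(H_FOV_DEG / 2.0))


def in_acquisition_area(x_m: float, z_m: float) -> bool:
    """Return True if a floor position lies inside the trapezoid.

    x_m: lateral offset from the optical axis (metres, sign ignored).
    z_m: distance in front of the sensor along the optical axis.
    """
    if not (MIN_RANGE_M <= z_m <= MAX_RANGE_M):
        return False
    return abs(x_m) <= half_width_at(z_m)


if __name__ == "__main__":
    # A person standing 2 m in front of the sensor, 1 m to the side.
    print(in_acquisition_area(1.0, 2.0))
    # Approximate usable width at the near and far edges of the area.
    print(round(2 * half_width_at(MIN_RANGE_M), 2))
    print(round(2 * half_width_at(MAX_RANGE_M), 2))
```

A check of this kind could help mark out the floor before recording, so that subjects stay inside the region in which the sensor can capture them.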

[0028] Step 2: Each subject enters the field of view of the Kinect V2.0 and performs the actions and postures. The PC records each action of each person 8-12 times. The subjects should cover as many body shapes as possible. In this invention, 15 subjects are recorded, with a height range o...
