Method for self-increasing and self-decreasing neural network nodes, computer device, and storage medium

This technology relates to computer equipment and neural networks and is applied in the field of neural networks. It addresses the problems of growing network-structure complexity, slow neural network learning, and networks that lack learning and information-processing ability, with the effect of reducing useless computation and improving learning ability.

Examples

Embodiment 1

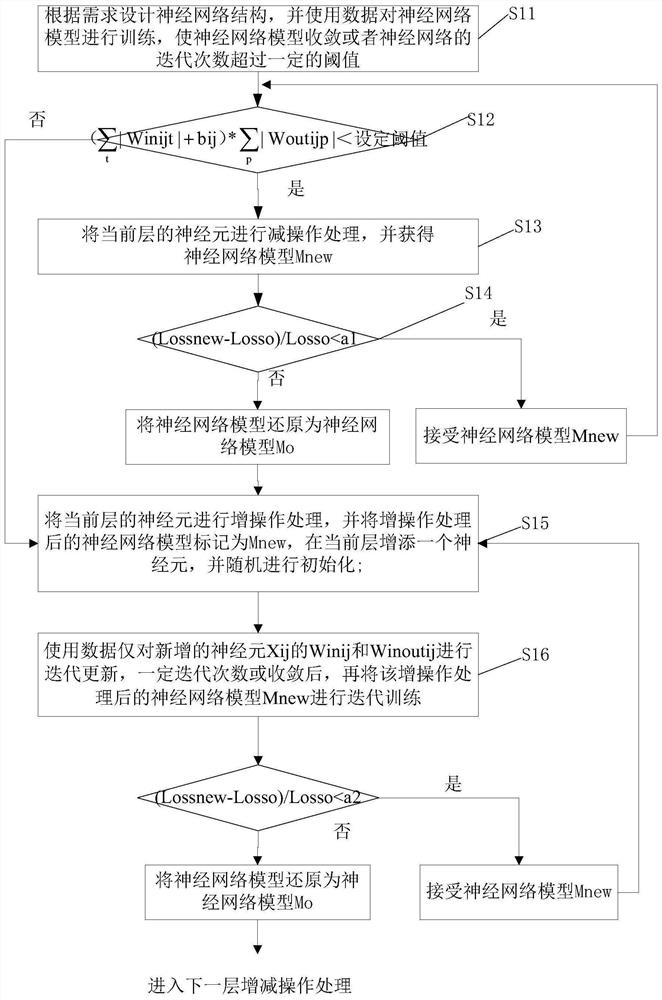

[0039] An embodiment of the present invention provides a method for self-increasing and self-decreasing neural network nodes, applied to neural network training. As shown in Figure 1, the method includes the following steps:

[0040] Step S11: design the neural network structure according to requirements and train the neural network model on the data until the model converges or the number of training iterations exceeds a set threshold;
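The stopping rule in Step S11 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the names `train_until_done`, `make_toy_step`, `max_iters`, and `tol` are hypothetical, and "convergence" is assumed here to mean that the loss changes by less than `tol` between consecutive iterations, which the source does not specify. The toy model fits a single weight by gradient descent only so that the loop has something to train.

```python
def train_until_done(step, max_iters=1000, tol=1e-6):
    """Call step() (one training iteration that returns the loss) until the
    loss changes by less than tol (assumed convergence test) or the
    iteration limit max_iters is reached."""
    prev_loss = float("inf")
    loss = prev_loss
    for iteration in range(1, max_iters + 1):
        loss = step()
        if abs(prev_loss - loss) < tol:
            return iteration, loss, "converged"
        prev_loss = loss
    return max_iters, loss, "iteration_limit"


def make_toy_step(lr=0.1):
    """Hypothetical stand-in for a real model: fit w in y = w * x."""
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
    state = {"w": 0.0}

    def step():
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (state["w"] * x - y) * x for x, y in data) / len(data)
        state["w"] -= lr * grad
        # Loss after the update.
        return sum((state["w"] * x - y) ** 2 for x, y in data) / len(data)

    return step, state


step, state = make_toy_step()
iterations, final_loss, reason = train_until_done(step)
print(iterations, final_loss, reason, state["w"])
```

Returning the reason for stopping lets the caller distinguish a converged model from one that merely ran out of iterations before the node adjustment of Step S12 begins.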

[0041] Step S12: add and remove neurons layer by layer;

[0042] Denote the current neural network model as Mo and determine whether the current layer contains neurons that can be removed. If it does, proceed to step S13; otherwise, proceed to step S15;

[0043] Here, the current layer is the i-th layer and the current node is the j-th node in the i-th layer, denoted neuron Xij. Judging ...
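To make "adding and removing neurons" concrete, the sketch below shows only the mechanical part of the operation on a fully connected network. It does not reproduce the patent's criterion for deciding which neurons can be removed, which is not given in the text above. The representation is an assumption: `weights[i]` holds one row of incoming weights per neuron in layer i, `biases[i]` holds one bias per neuron, and `i` and `j` are zero-based. The function names `remove_neuron` and `add_neuron` are hypothetical.

```python
def _copy(weights, biases):
    """Deep-copy nested lists so the original model (Mo) stays untouched."""
    return [[row[:] for row in layer] for layer in weights], [b[:] for b in biases]


def remove_neuron(weights, biases, i, j):
    """Return a copy of the model with neuron j of layer i removed."""
    new_w, new_b = _copy(weights, biases)
    del new_w[i][j]              # incoming weights of the neuron
    del new_b[i][j]              # its bias
    if i + 1 < len(new_w):       # its outgoing weights into layer i + 1
        for row in new_w[i + 1]:
            del row[j]
    return new_w, new_b


def add_neuron(weights, biases, i, init=0.0):
    """Return a copy of the model with one neuron appended to layer i.

    The initial value init is an assumption; the source does not say how
    new neurons are initialized."""
    new_w, new_b = _copy(weights, biases)
    n_inputs = len(new_w[i][0])
    new_w[i].append([init] * n_inputs)
    new_b[i].append(0.0)
    if i + 1 < len(new_w):
        for row in new_w[i + 1]:
            row.append(0.0)      # new neuron initially has no effect downstream
    return new_w, new_b


# A 2-3-1 network: layer 0 has 3 neurons with 2 inputs each, layer 1 has 1 neuron.
weights = [[[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]], [[0.7, 0.8, 0.9]]]
biases = [[0.0, 0.0, 0.0], [0.0]]

smaller_w, smaller_b = remove_neuron(weights, biases, 0, 1)
larger_w, larger_b = add_neuron(weights, biases, 0)
print(len(smaller_w[0]), len(larger_w[0]))
```

Both functions return modified copies rather than editing in place, so the model recorded as Mo can still be compared against the adjusted one.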

Embodiment 2

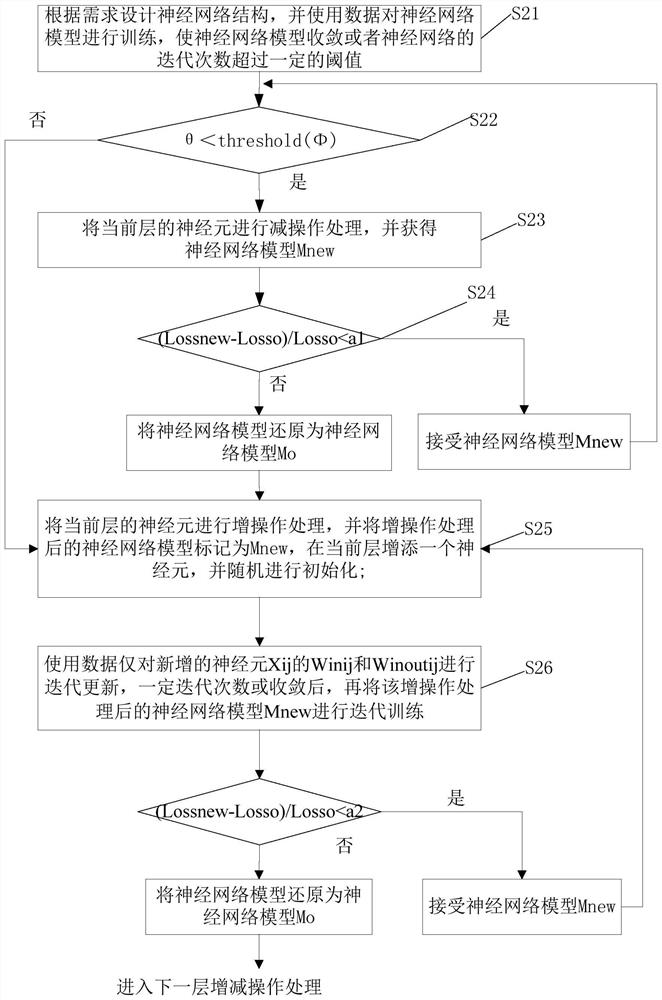

[0053] An embodiment of the present invention provides a method for self-increasing and self-decreasing neural network nodes, applied to neural network training. As shown in Figure 2, the method includes the following steps:

[0054] Step S21: design the neural network structure according to requirements and train the neural network model on the data until the model converges or the number of training iterations exceeds a set threshold;

[0055] Step S22: add and remove neurons layer by layer;

[0056] Denote the current neural network model as Mo and determine whether the current layer contains neurons that can be removed. If it does, proceed to step S23; otherwise, proceed to step S25;

[0057] The current layer is the i-th layer, and the next layer is the (i+1)-th layer, where j≠j';

[0058] Obtain the j-th neuron Xij of the i-th layer an...

Embodiment 3

[0069] An embodiment of the present invention also provides a computer device comprising at least one processor and a memory communicatively connected to the at least one processor. The memory stores instructions executable by the at least one processor; when executed, the instructions cause the at least one processor to perform the method for self-increasing and self-decreasing neural network nodes. That method is the same as in Embodiment 1 or 2 and is not repeated here.
