A method for selecting a freehand gesture motion expression based on a three-dimensional object contour

This technology combines gesture actions with three-dimensional objects and is applied in the field of human-computer interaction. It addresses several problems: existing methods struggle to select three-dimensional objects naturally and efficiently, grasp-based interaction techniques carry a heavy computational load, and the supporting software algorithms are difficult to research and develop. It achieves natural and efficient human-computer interaction with 3D objects, a low computational load, and an efficient interaction process.

Embodiment Construction

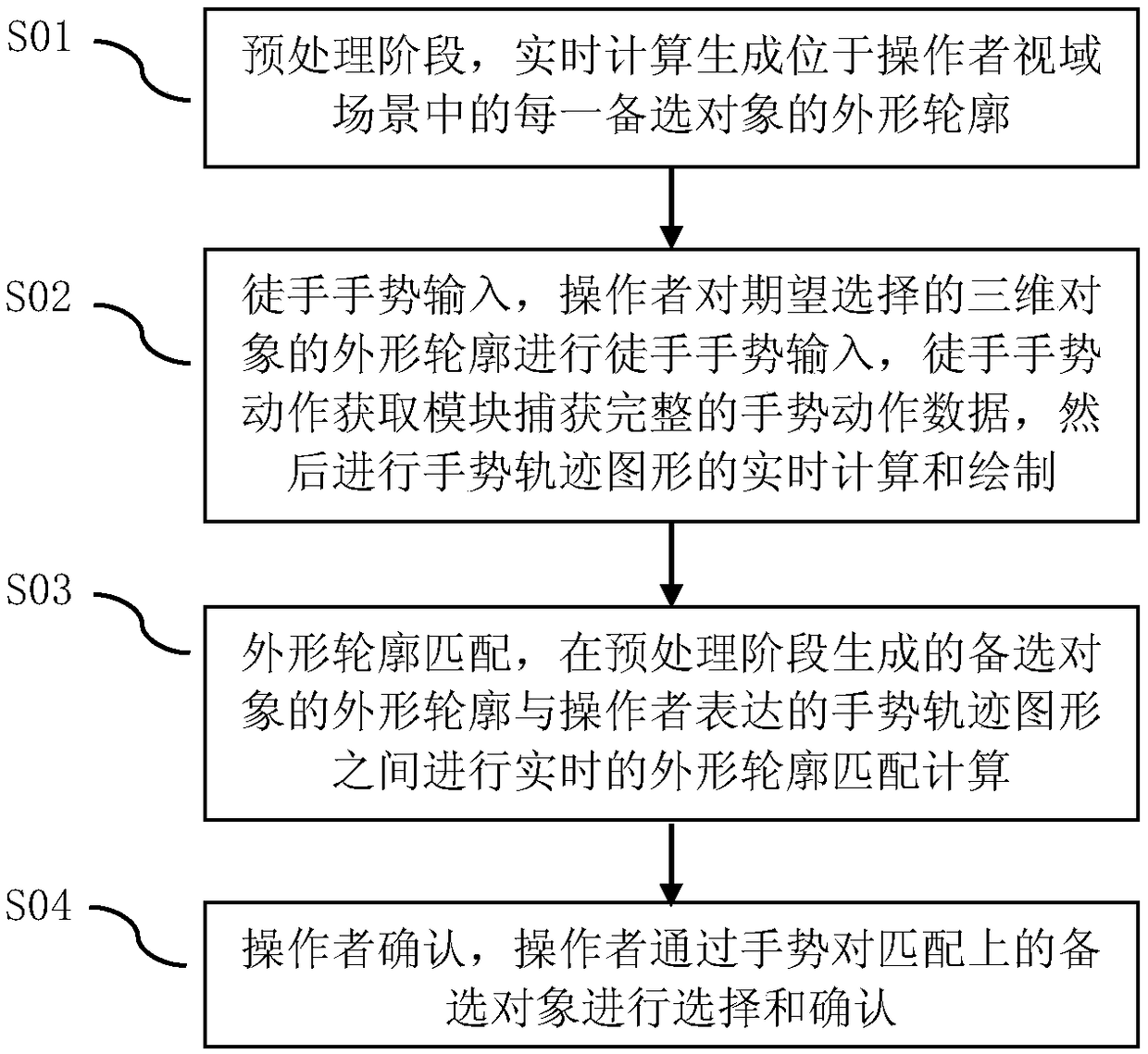

[0045] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

[0046] In this embodiment, a sharp movement gesture capture sensor captures the gesture action signals and records the subjects' gesture action data during the experiment. To provide the gesture operation space the experiment requires, the sensor is placed about 35 cm below the starting position of the subject's gesture, so that the subject's effective gesture operation space lies about 2.5 cm to 60 cm above the sensor. Within this effective space, the sensor's capture accuracy is 1.2 mm. An EPSON CB-X04 projector projects the picture onto the screen; the full picture has a resolution of 1024 px × 768 px and measures 124 cm × 87 cm on the projection screen. The vertical distance between the center of the projection scree...
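The setup parameters above can be checked with a small calculation. The sketch below is not part of the patent. The function and constant names are hypothetical. It assumes a uniform projection with no keystone distortion and pixel coordinates measured from the top-left corner of the picture. It encodes the stated effective range (2.5 cm to 60 cm above the sensor), confirms that the 35 cm starting height falls inside it, and converts projected pixel coordinates to physical positions on the screen.

```python
from typing import Tuple

# Values stated in the embodiment.
SENSOR_MIN_HEIGHT_CM = 2.5    # lower bound of the effective gesture space above the sensor
SENSOR_MAX_HEIGHT_CM = 60.0   # upper bound of the effective gesture space above the sensor
START_HEIGHT_CM = 35.0        # sensor sits about 35 cm below the gesture start position
PICTURE_PX = (1024, 768)      # projected picture resolution (width, height) in pixels
PICTURE_CM = (124.0, 87.0)    # projected picture size (width, height) in centimetres


def in_effective_space(height_cm: float) -> bool:
    """Return True if a hand height (cm above the sensor) lies in the effective space."""
    return SENSOR_MIN_HEIGHT_CM <= height_cm <= SENSOR_MAX_HEIGHT_CM


def cm_per_pixel() -> Tuple[float, float]:
    """Physical size of one projected pixel, assuming a uniform, undistorted projection."""
    return PICTURE_CM[0] / PICTURE_PX[0], PICTURE_CM[1] / PICTURE_PX[1]


def pixel_to_screen_cm(px: float, py: float) -> Tuple[float, float]:
    """Map a pixel coordinate (assumed top-left origin) to centimetres on the screen."""
    sx, sy = cm_per_pixel()
    return px * sx, py * sy


if __name__ == "__main__":
    sx, sy = cm_per_pixel()
    print(f"{sx:.4f} cm/px horizontally, {sy:.4f} cm/px vertically")
    print(in_effective_space(START_HEIGHT_CM))   # starting height inside 2.5-60 cm
    print(pixel_to_screen_cm(512, 384))          # centre of the picture
```

Because 124:87 is not 4:3, each projected pixel is about 0.121 cm wide but only about 0.113 cm tall. For that reason the sketch keeps separate horizontal and vertical scale factors rather than a single pixel pitch.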
