Data batch processing method and device based on cluster computing, electronic device and medium

A batch data processing technique, applied in the field of computing virtualization services, that addresses response delay, low processing efficiency, and poor processing convenience, thereby avoiding response delays and improving usage efficiency and user experience.

Examples

Embodiment 1

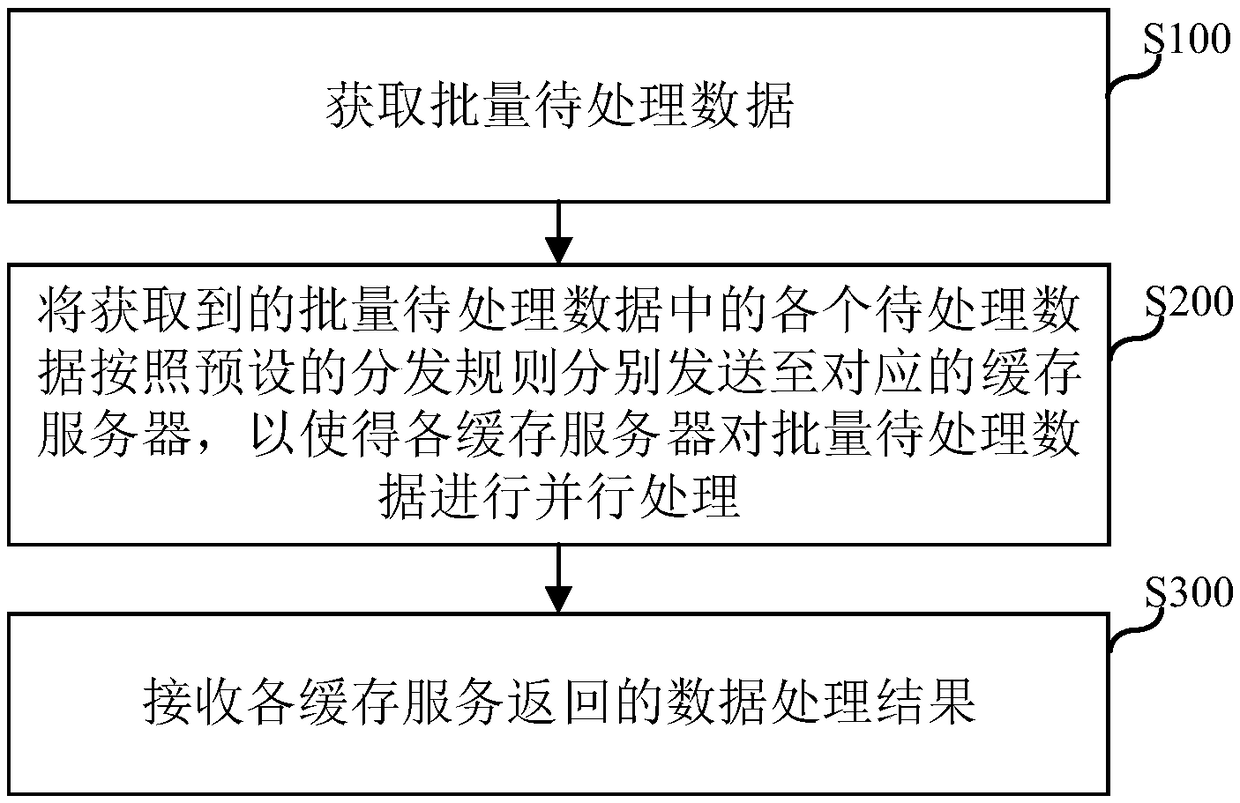

[0030] This embodiment of the application provides a data batch processing method based on cluster computing which, as shown in Figure 1, includes:

[0031] Step S100, acquiring batches of data to be processed.

[0032] Specifically, the central server obtains the batches of data to be processed sent by the client. The central server sits at the business layer and exchanges information directly with the client. The client is a program that provides local services to the user, for example an application on a terminal device such as a laptop or a touchscreen phone.

[0033] Step S200, sending the obtained batches of data to be processed to the corresponding cache servers according to a preset distribution rule, so that the cache servers process the data in parallel.

[0034] Specifically, the central server at the business layer is connected to several cache servers to form a star topology server cluster, in which users can directly establish a conne...
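The flow of steps S100 and S200 can be sketched as follows. This is a minimal illustration, not the patented implementation: the names `distribute` and `process_in_parallel` are hypothetical, the preset distribution rule is modeled as an arbitrary function from a data item to a cache server identifier, and the cache servers are simulated by worker threads rather than real network nodes.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Hashable, List


def distribute(batch: List[Any],
               rule: Callable[[Any], Hashable]) -> Dict[Hashable, List[Any]]:
    """Group the items of a batch by the cache server chosen by `rule`.

    `rule` stands in for the preset distribution rule (an assumption here).
    """
    groups: Dict[Hashable, List[Any]] = defaultdict(list)
    for item in batch:
        groups[rule(item)].append(item)
    return dict(groups)


def process_in_parallel(groups: Dict[Hashable, List[Any]],
                        handler: Callable[[Hashable, List[Any]], Any]) -> Dict[Hashable, Any]:
    """Run `handler` once per cache server group, all groups concurrently.

    Each thread simulates one cache server handling its share of the batch.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(groups))) as pool:
        futures = {sid: pool.submit(handler, sid, items)
                   for sid, items in groups.items()}
        return {sid: fut.result() for sid, fut in futures.items()}
```

A caller would first obtain the batch (step S100), call `distribute` with its chosen rule, and then pass the resulting groups to `process_in_parallel` (step S200).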

Embodiment 2

[0041] This embodiment of the present application provides another possible implementation. Building on Embodiment 1, it further includes the following:

[0042] Step S100 includes step S101 and step S102 (neither marked in the figure), wherein:

[0043] Step S101: Receive data processing information sent by the client.

[0044] Step S102: Obtain batches of data to be processed according to the data processing information.

[0045] The data processing information includes any of the following: the batches of data to be processed themselves; a data processing request carrying the batches of data to be processed; or a data processing request carrying at least one storage address of the batches of data to be processed.
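Steps S101 and S102 can be illustrated with a small sketch that resolves each of the three forms of data processing information into a batch. The `DataProcessingInfo` class, its field names, and the `load` callback used to read a storage address are all hypothetical; the source does not specify how the information is encoded or how a storage address is dereferenced.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class DataProcessingInfo:
    """Hypothetical container for the three forms described in [0045]."""
    batch: Optional[List[dict]] = None          # form 1: the batch itself
    request_batch: Optional[List[dict]] = None  # form 2: request carrying the batch
    storage_addresses: Sequence[str] = ()       # form 3: request carrying addresses


def obtain_batch(info: DataProcessingInfo,
                 load: Callable[[str], List[dict]]) -> List[dict]:
    """Step S102: obtain the batch according to the received information.

    `load` is an assumed callback that fetches the data stored at an address.
    """
    if info.batch is not None:
        return list(info.batch)
    if info.request_batch is not None:
        return list(info.request_batch)
    if info.storage_addresses:
        items: List[dict] = []
        for address in info.storage_addresses:
            items.extend(load(address))
        return items
    raise ValueError("data processing information carries no batch or address")
```

Passing the loader as a parameter keeps the sketch independent of any particular storage backend.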

[0046] Specifically, the central server at the business layer directly exchanges information with the client, such as receiving a request from the client terminal, then processing the received request, and t...

Embodiment 3

[0052] This embodiment of the present application provides another possible implementation. Building on Embodiment 2, it further includes the following:

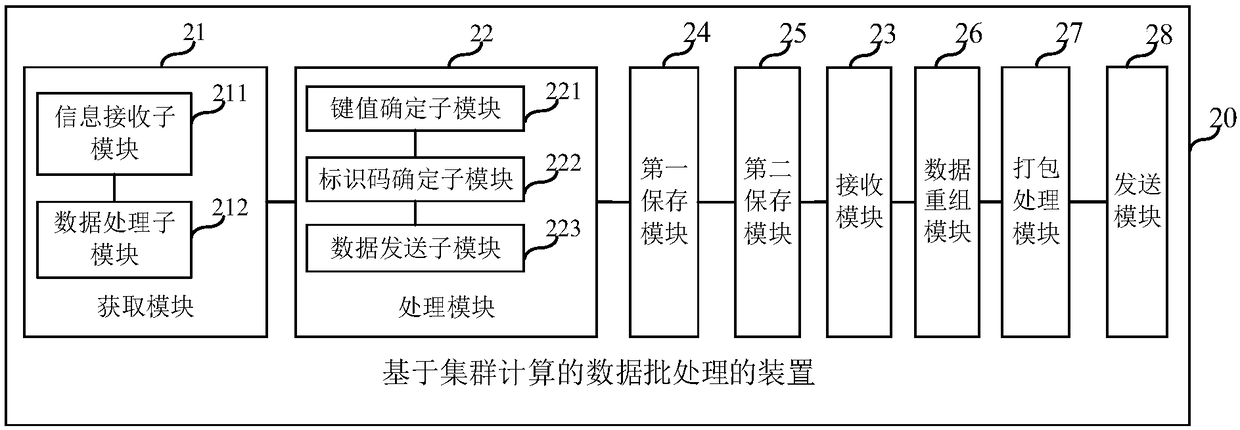

[0053] Step S200 includes step S202 (not marked in the figure): when the data processing information includes the batches of data to be processed, or is a data processing request carrying the batches of data to be processed, determine the key value of each data item in the batch; determine, according to the CRC16 algorithm, the cache server identification code corresponding to each key value; then determine the corresponding cache server from each identification code and send each data item to that cache server.
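The key-to-server mapping of step S202 might look like the sketch below. Several details are assumptions, since the source names only "the CRC16 algorithm": the CRC-16/XMODEM variant (polynomial 0x1021, initial value 0) is used, the identification code is taken as the checksum modulo the number of cache servers, and each data item is assumed to be a dict with a `"key"` field. The function names are hypothetical.

```python
from typing import Dict, List


def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC-16/XMODEM: polynomial 0x1021, initial value 0x0000."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def server_for_key(key: str, servers: List[str]) -> str:
    """Map a key value to a cache server identifier.

    Using `checksum % len(servers)` as the identification code is an assumption.
    """
    return servers[crc16_xmodem(key.encode("utf-8")) % len(servers)]


def route_batch(batch: List[dict], servers: List[str]) -> Dict[str, List[dict]]:
    """Group the items of a batch by the cache server chosen for each key."""
    routed: Dict[str, List[dict]] = {}
    for item in batch:
        routed.setdefault(server_for_key(item["key"], servers), []).append(item)
    return routed
```

Because the mapping depends only on the key and the server list, the same key is always routed to the same cache server as long as the list is unchanged.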

[0054] Step S201 (not marked in the figure) is also included before step S202: storing the execution sequence of each data to be processed in the batch of data to be processed in terms of lo...

