Action Classification Method Based on Joint Spatiotemporal Simple Recurrent Network and Attention Mechanism

A simple loop, classification method technology, applied in the field of pattern recognition, can solve the problems of limited sequence processing speed, long training time, more time consumption, etc., to overcome the slow calculation speed and improve the accuracy.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

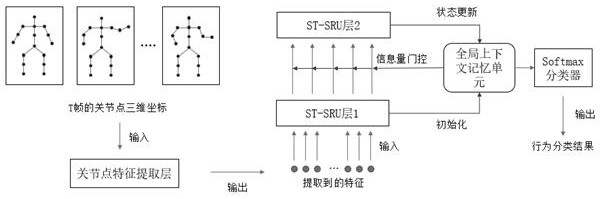

[0018] The following describes in detail the action classification method based on joint spatio-temporal simple loop network and attention mechanism of the present invention in conjunction with the accompanying drawings. figure 1 for the implementation flow chart.

[0019] Such as figure 1 , the implementation of the method of the present invention mainly comprises three steps: (1) extract feature from the articulation point data that represents action with deep learning method; (2) input the feature that extracts in step (1) to two-layer ST-SRU model (3) Use the output of the ST-SRU in step (2) to update the state of the global context memory unit, and play a gating role in the inflow of information into the ST-SRU of the second layer in step (2). , when the iterative update process of the attention model ends, the final classification result is obtained.

[0020] Each step will be described in detail below one by one.

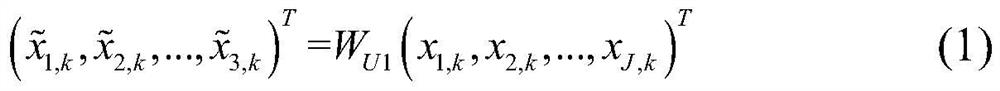

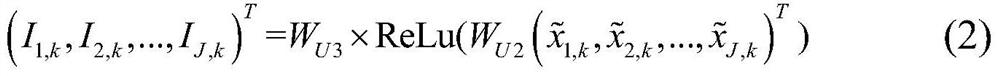

[0021] Step 1: Extract features from joint point dat...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com