Lane line fusion system and lane line fusion method

A lane line fusion method and system, applicable to control and adjustment systems, vehicle position, route, and height control, road network navigation, and similar fields. It addresses the lack of a mature method for lane line fusion, a gap that affects functions such as lane change warning, and it offers high precision and accuracy with strong application value.


Embodiment Construction

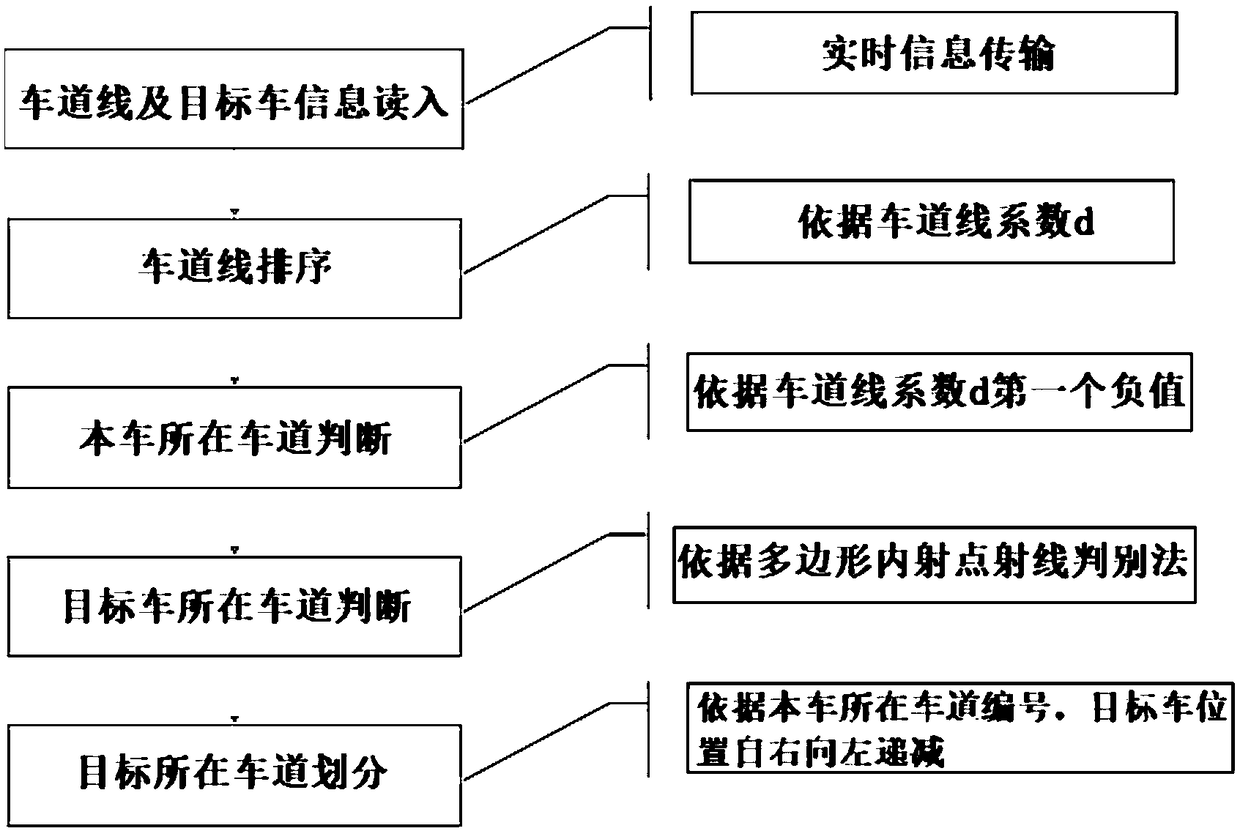

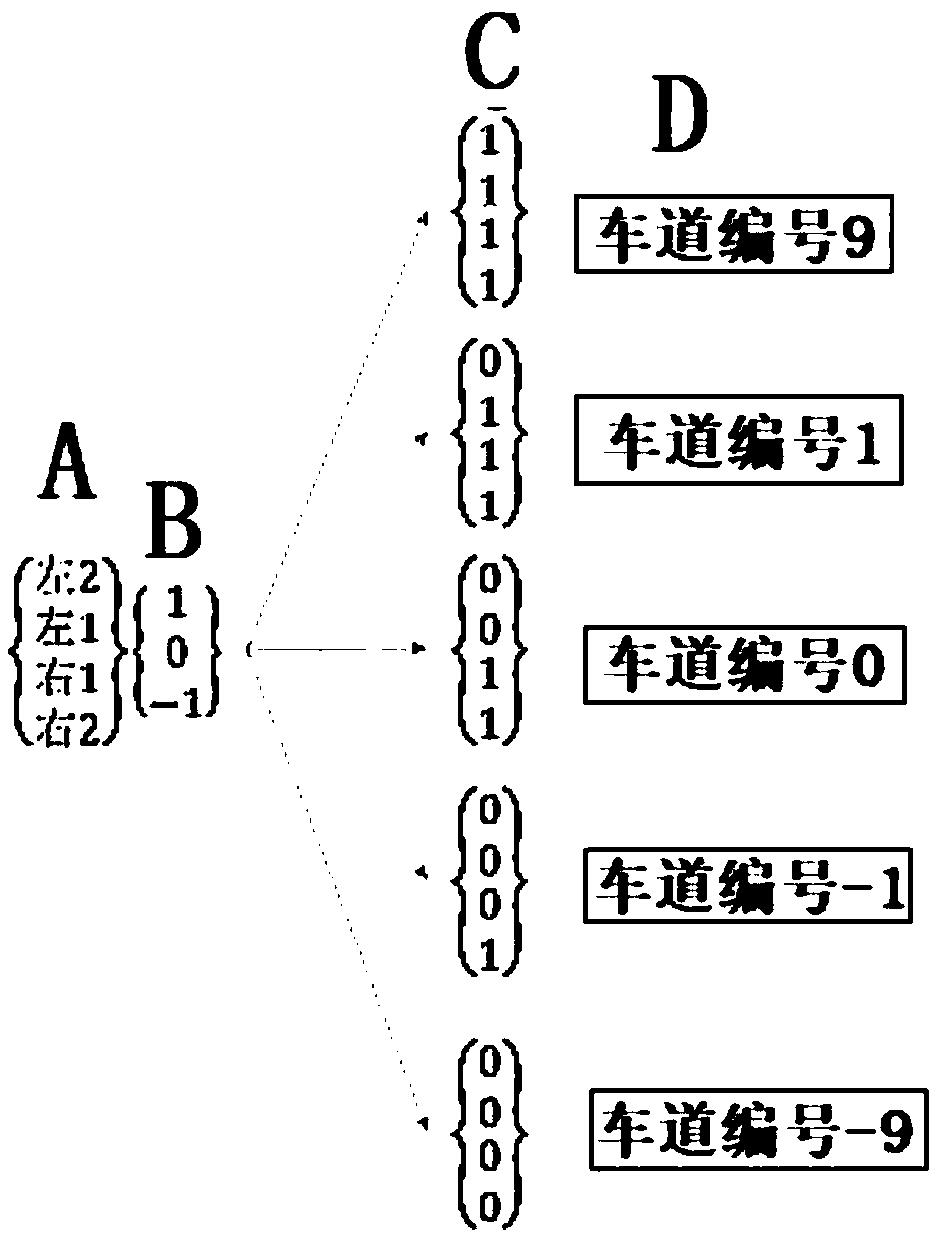

[0056] In one embodiment, the lane line fusion system provided by the present invention operates on multi-sensor target-level data and includes a data receiving module, a data conversion module, a lane judging module, and a target lane line division module.

[0057] The data receiving module receives at least the position and speed of the target vehicles monitored by the radar and the lane line equation coefficients output by the lane line detection system.
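The inputs named in this paragraph can be pictured as simple data containers. The following is a minimal sketch only: the class names, the field layout, and the cubic polynomial form y = c0 + c1·x + c2·x² + c3·x³ for the lane line equation are assumptions made for illustration and are not stated in the source.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RadarTarget:
    """One radar-tracked vehicle (hypothetical field layout)."""
    x: float   # longitudinal position, m
    y: float   # lateral position, m
    vx: float  # longitudinal speed, m/s
    vy: float  # lateral speed, m/s


@dataclass(frozen=True)
class LaneLine:
    """Lane line as coefficients (c0, c1, c2, c3) of an assumed cubic."""
    coeffs: Tuple[float, float, float, float]

    def lateral_at(self, x: float) -> float:
        # Horner evaluation of c0 + c1*x + c2*x^2 + c3*x^3
        c0, c1, c2, c3 = self.coeffs
        return c0 + x * (c1 + x * (c2 + x * c3))


@dataclass
class ReceivedFrame:
    """Everything the data receiving module hands on for one cycle."""
    targets: List[RadarTarget]
    lane_lines: List[LaneLine]


frame = ReceivedFrame(
    targets=[RadarTarget(x=25.0, y=-1.2, vx=3.0, vy=0.0)],
    lane_lines=[LaneLine((1.5, 0.1, 0.0, 0.0))],
)
```

Keeping targets and lane lines in one per-cycle frame is a design choice of this sketch, so that later stages see a consistent snapshot of both sensors.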

[0058] The data conversion module converts the target vehicle data and lane line data into a preset coordinate system, forms the lane line equations in that coordinate system, and sorts the resulting lane lines.
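The conversion and sorting steps can be sketched as follows. This is an illustration under stated assumptions, not the patented procedure: it assumes each sensor frame differs from the preset frame by a pure translation (`dx`, `dy`, both hypothetical mounting offsets with no rotation), that lane lines are polynomials y = p(x) with coefficients listed from lowest order, that y is lateral and positive to the left, and that lane lines are sorted left to right by their lateral offset at x = 0. The source does not state the sorting criterion.

```python
from math import comb
from typing import List, Sequence, Tuple


def target_to_preset_frame(x: float, y: float, dx: float, dy: float) -> Tuple[float, float]:
    """Translate a sensor-frame point into the preset frame (translation only)."""
    return x + dx, y + dy


def lane_to_preset_frame(coeffs: Sequence[float], dx: float, dy: float) -> List[float]:
    """Re-express y = p(x) in the preset frame: y' = p(x' - dx) + dy.

    Expands each (x' - dx)^k with the binomial theorem and collects
    the coefficient of every power of x'.
    """
    n = len(coeffs)
    shifted = [
        sum(coeffs[k] * comb(k, j) * (-dx) ** (k - j) for k in range(j, n))
        for j in range(n)
    ]
    shifted[0] += dy
    return shifted


def sort_lane_lines(lines: Sequence[Sequence[float]]) -> List[Sequence[float]]:
    """Order lane lines left to right by lateral offset at x = 0 (assumed rule)."""
    return sorted(lines, key=lambda c: c[0], reverse=True)


# Example: three straight lane lines reported in arbitrary order.
raw = [[-1.8, 0.0, 0.0, 0.0], [5.4, 0.0, 0.0, 0.0], [1.8, 0.0, 0.0, 0.0]]
converted = [lane_to_preset_frame(c, dx=1.0, dy=0.0) for c in raw]
ordered = sort_lane_lines(converted)
```

A real installation would normally also need a rotation term for sensor yaw; it is omitted here to keep the sketch short.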

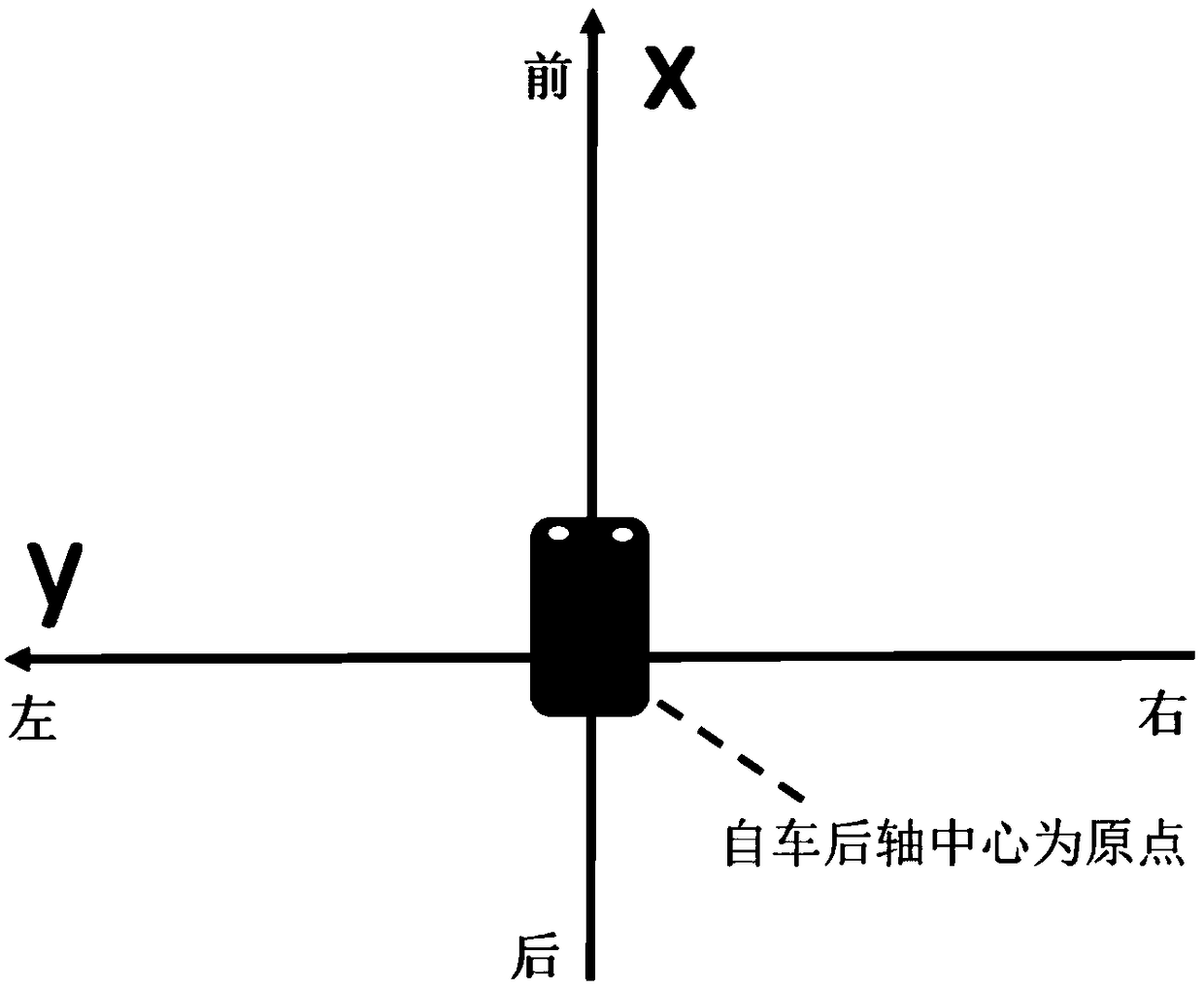

[0059] As shown in Figure 1, the preset coordinate system has its origin at the center of the vehicle's rear axle and its x-axis along the vehicle's longitudinal axis, positive toward the front of the vehicle, the direction of the rear axis of the v...
