Node classification method, model training method and device

A node classification and node technology, applied in the Internet field, can solve the problems of high computing cost and large computing resource consumption, and achieve the effect of saving computing resources and reducing computing overhead

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

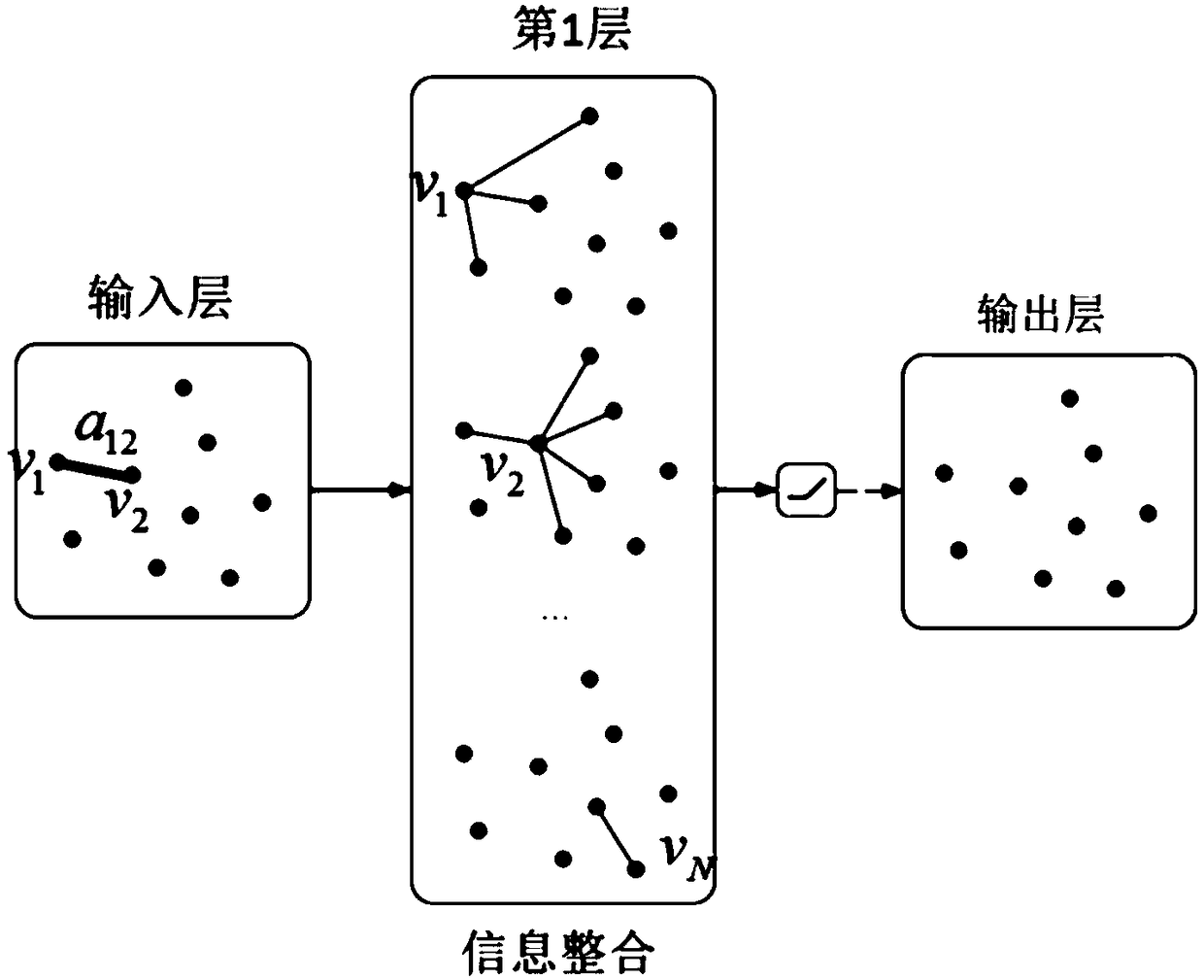

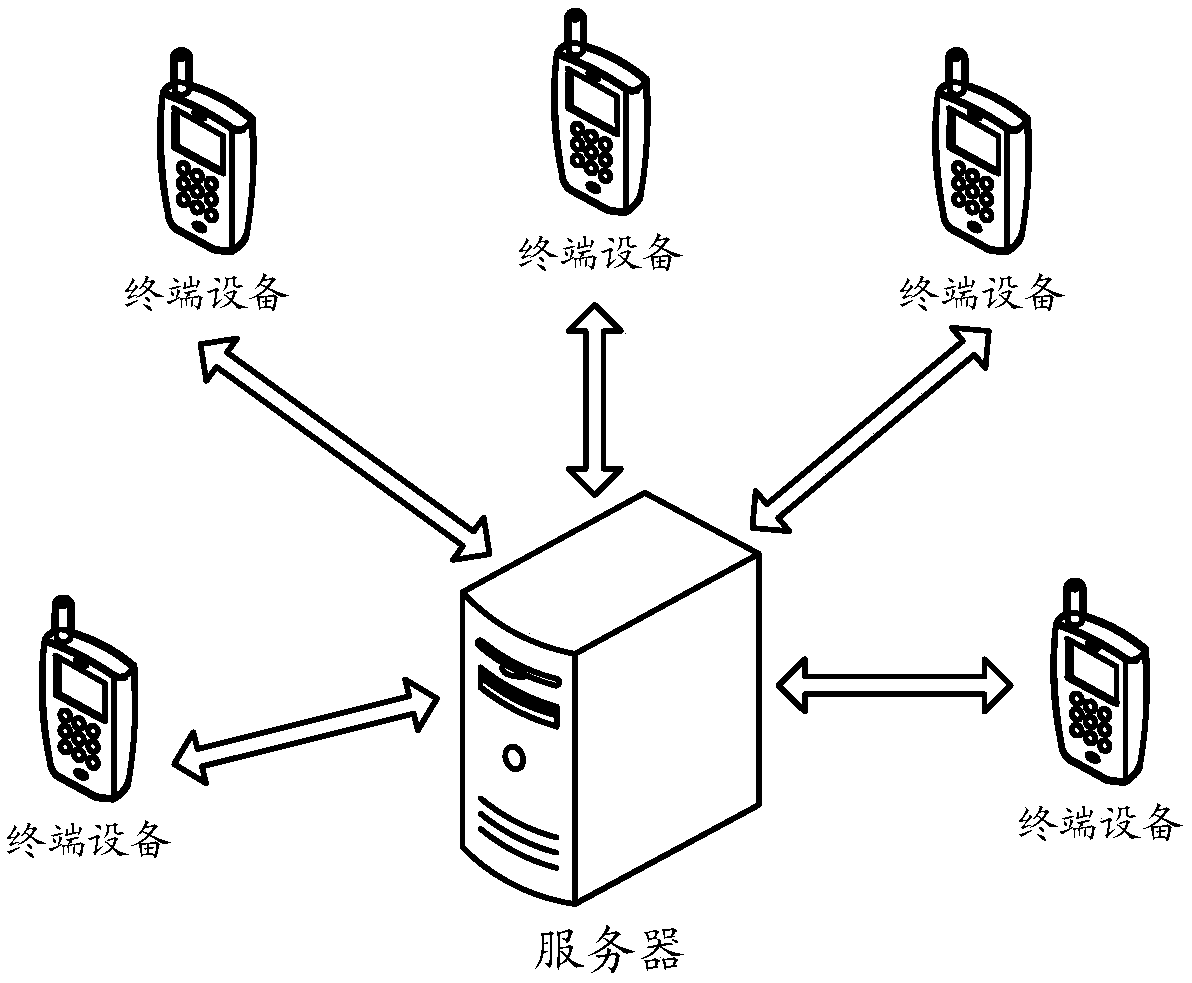

[0058] Embodiments of the present invention provide a node classification method, a model training method, and a device. For a large-scale graph, training can be performed based on only a part of the nodes, and each iteration calculates a part of the nodes in the graph without traversing the nodes in the graph. Each node greatly reduces computing overhead and saves computing resources.

[0059] The terms "first", "second", "third", "fourth", etc. (if any) in the description and claims of the present invention and the above drawings are used to distinguish similar objects, and not necessarily Used to describe a specific sequence or sequence. It is to be understood that the data so used are interchangeable under appropriate circumstances such that the embodiments of the invention described herein are, for example, capable of practice in sequences other than those illustrated or described herein. Furthermore, the terms "comprising" and "having", as well as any variations thereof...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - Generate Ideas

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com