Visual-marker-based map repair method for an automatic mapping robot

A visual marker and robot technology applied in the field of navigation. It addresses problems such as map offset, low positioning accuracy, and map distortion. It reduces mapping and positioning errors, allows a flexible and convenient marker layout, and is easy to implement.



Embodiment Construction

[0018] The present invention will be further described below in conjunction with the embodiments shown in the accompanying drawings.

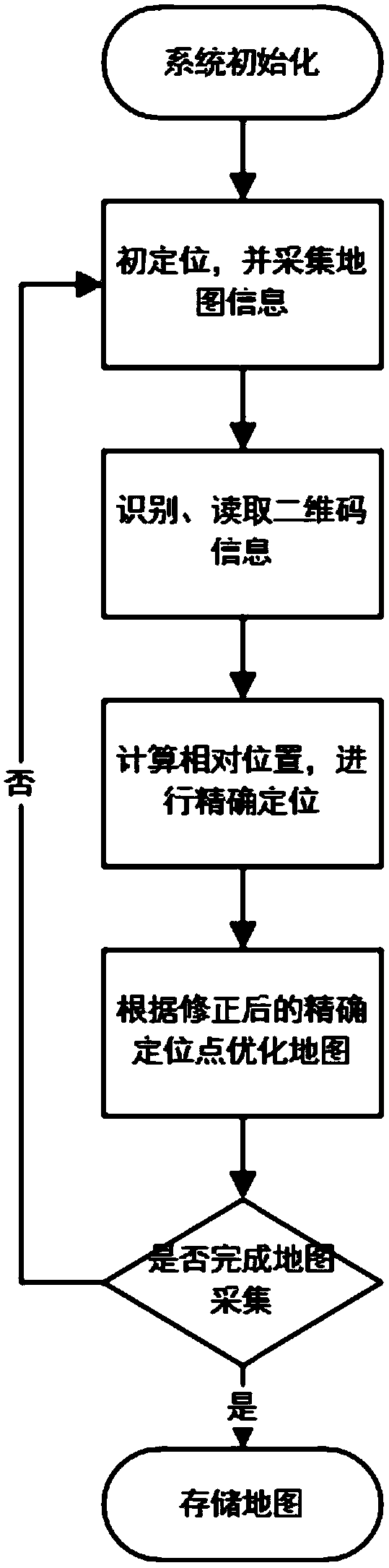

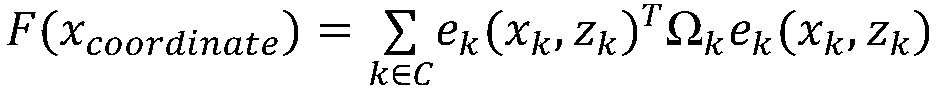

[0019] The present invention proposes a visual-marker-based map repair method for an automatic mapping robot. During mapping, the robot repeatedly obtains precise positioning information from artificial visual markers placed at set intervals: it reads the location information encoded in each artificial visual marker, calculates the relative position between the marker and the robot, and from these derives an error correction for the robot's GPS positioning and dead reckoning. The method generally consists of three parts:
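The correction step described in [0019] can be illustrated with a minimal 2-D sketch. It assumes a flat local map frame in metres, that the location decoded from the marker is already expressed in that frame, that the visual sensor reports range and bearing to the marker, and that the robot heading is known independently (for example from an IMU). None of these details are specified in the text, and the names `Pose2D`, `robot_position_from_marker`, and `correction` are hypothetical. The correction is simply the difference between the marker-derived position and the GPS/dead-reckoning estimate.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Hypothetical robot pose estimate from GPS / dead reckoning."""
    x: float        # metres, assumed local map frame
    y: float        # metres, assumed local map frame
    heading: float  # radians, counter-clockwise from +x (assumed known)


def robot_position_from_marker(marker_xy, rel_range, rel_bearing, heading):
    """Recover the robot's map-frame position from one marker observation.

    marker_xy   -- absolute (x, y) decoded from the marker (assumed map frame)
    rel_range   -- measured distance from robot to marker, metres
    rel_bearing -- marker bearing relative to the robot heading, radians
    heading     -- robot heading in the map frame, radians
    """
    world_angle = heading + rel_bearing
    mx, my = marker_xy
    # The marker sits at robot + range * (cos, sin); invert that to find the robot.
    return (mx - rel_range * math.cos(world_angle),
            my - rel_range * math.sin(world_angle))


def correction(estimate: Pose2D, marker_xy, rel_range, rel_bearing):
    """Return (dx, dy): the offset to add to the GPS/dead-reckoning estimate."""
    rx, ry = robot_position_from_marker(
        marker_xy, rel_range, rel_bearing, estimate.heading)
    return rx - estimate.x, ry - estimate.y
```

For example, a robot whose estimate is (10.3, 4.8) with heading along +x, seeing a marker decoded as (12.0, 5.0) straight ahead at 2.0 m, would receive a correction of roughly (-0.3, +0.2) m. How the patent then applies such a correction to the already-built map is not stated in this excerpt.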

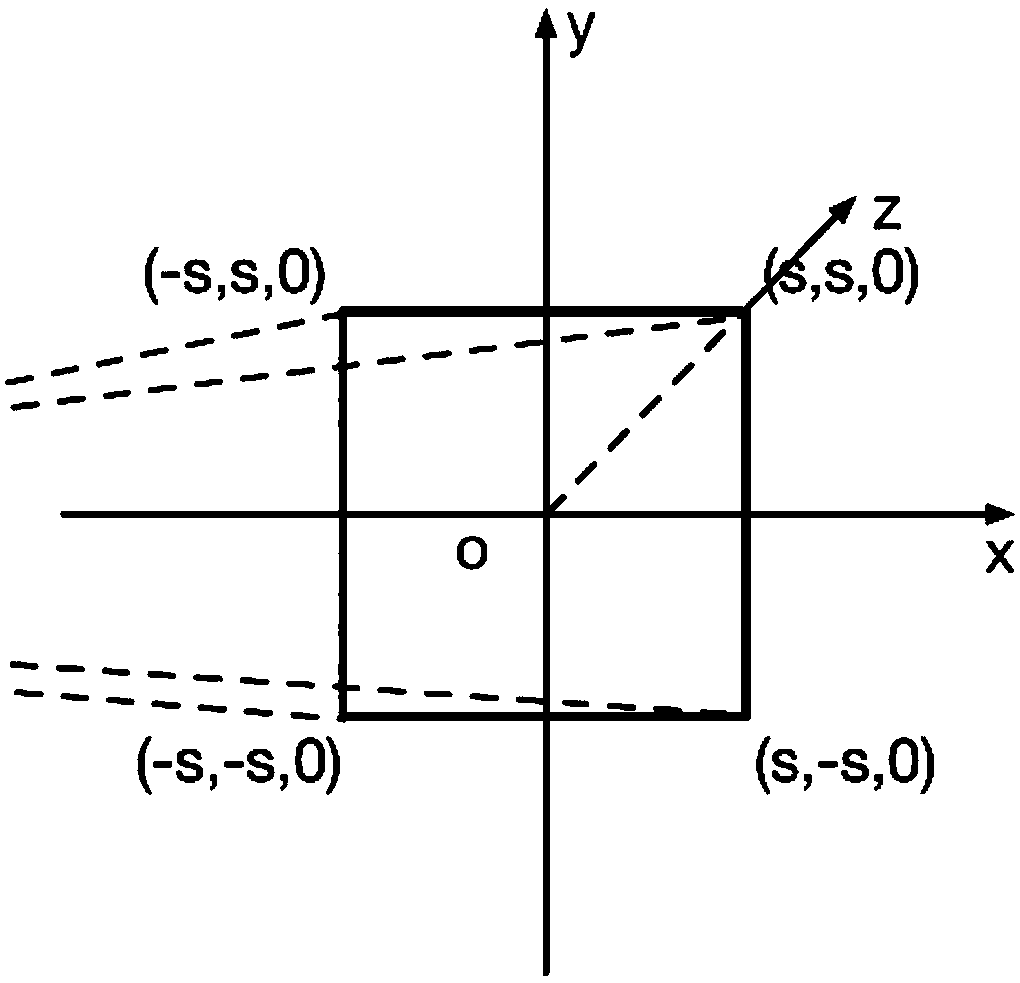

[0020] Reading artificial visual marks: a forward-looking visual sensor captures and identifies the artificial visual marks (such as two-dimensional codes) arranged at the positioning points (visual mark points), and...
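Once a two-dimensional code has been decoded to text (for instance with OpenCV's `cv2.QRCodeDetector().detectAndDecode`, one possible choice not named in the source), the encoded location still has to be extracted. The excerpt does not give the payload format, so the sketch below assumes a hypothetical `ID=<int>;X=<float>;Y=<float>` layout; `MarkerInfo` and `parse_marker_payload` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MarkerInfo:
    """Hypothetical contents of one artificial visual marker."""
    marker_id: int
    x: float
    y: float


def parse_marker_payload(payload: str) -> MarkerInfo:
    """Parse an assumed 'ID=<int>;X=<float>;Y=<float>' marker payload."""
    fields = {}
    for part in payload.strip().split(";"):
        if not part.strip():
            continue  # tolerate empty segments, e.g. a trailing ';'
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed field: {part!r}")
        fields[key.strip().upper()] = value.strip()
    try:
        return MarkerInfo(int(fields["ID"]), float(fields["X"]), float(fields["Y"]))
    except KeyError as missing:
        raise ValueError(f"payload lacks field {missing}") from None
```

Rejecting incomplete payloads with a `ValueError`, rather than guessing a default, keeps a partially read marker from silently feeding a wrong reference position into the correction step.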
