Overlapped particulate matter layered counting method based on a color image and a depth image

Depth-image and color-image technology, applied in image analysis, image data processing, image enhancement, and related fields, addresses the problems of low counting accuracy and multi-layer overlapping occlusion when counting particles.


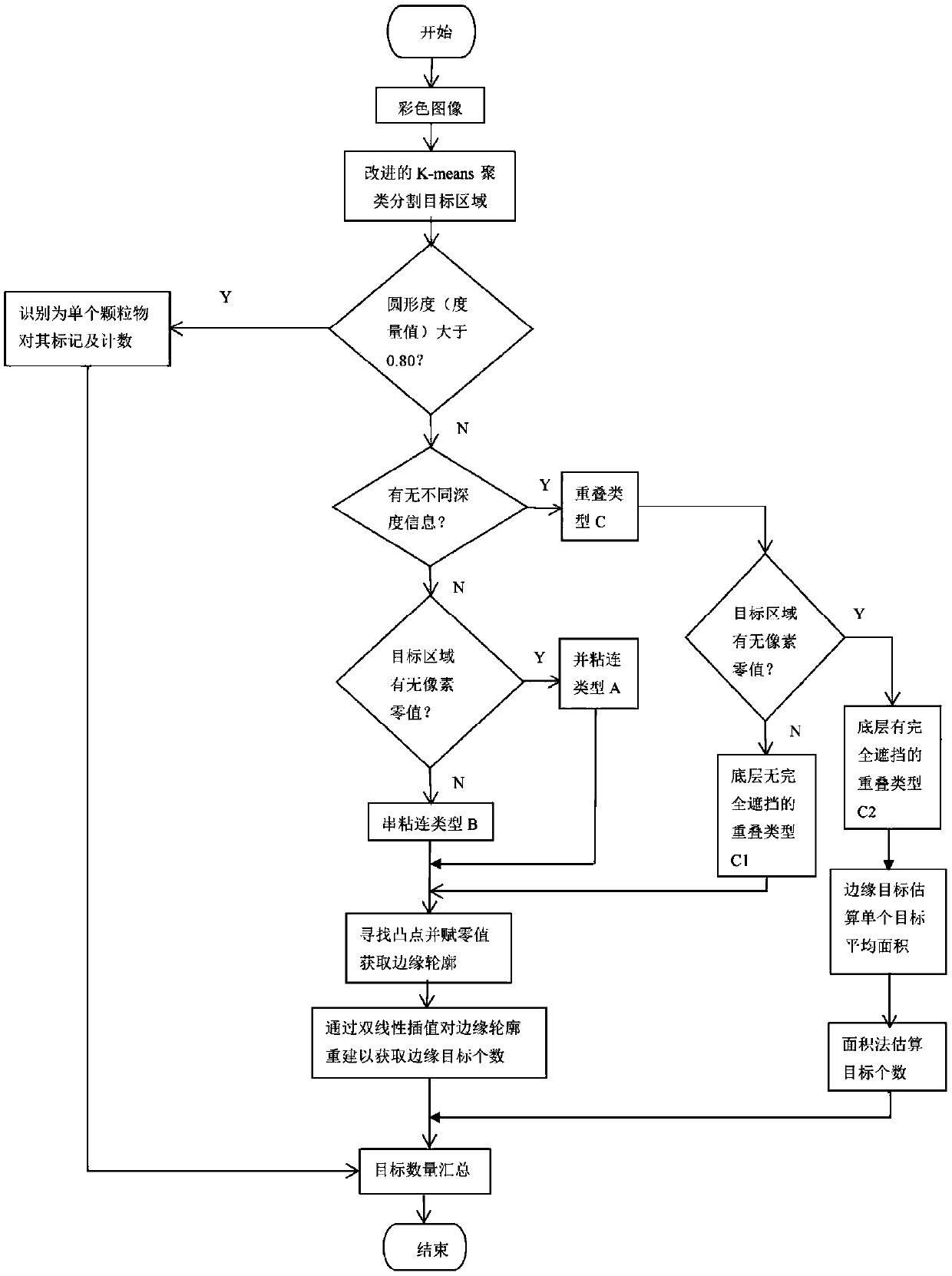

Embodiment Construction

[0049] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments, but the protection scope of the present invention is not limited thereto.

[0050] (1) Collect the target image.

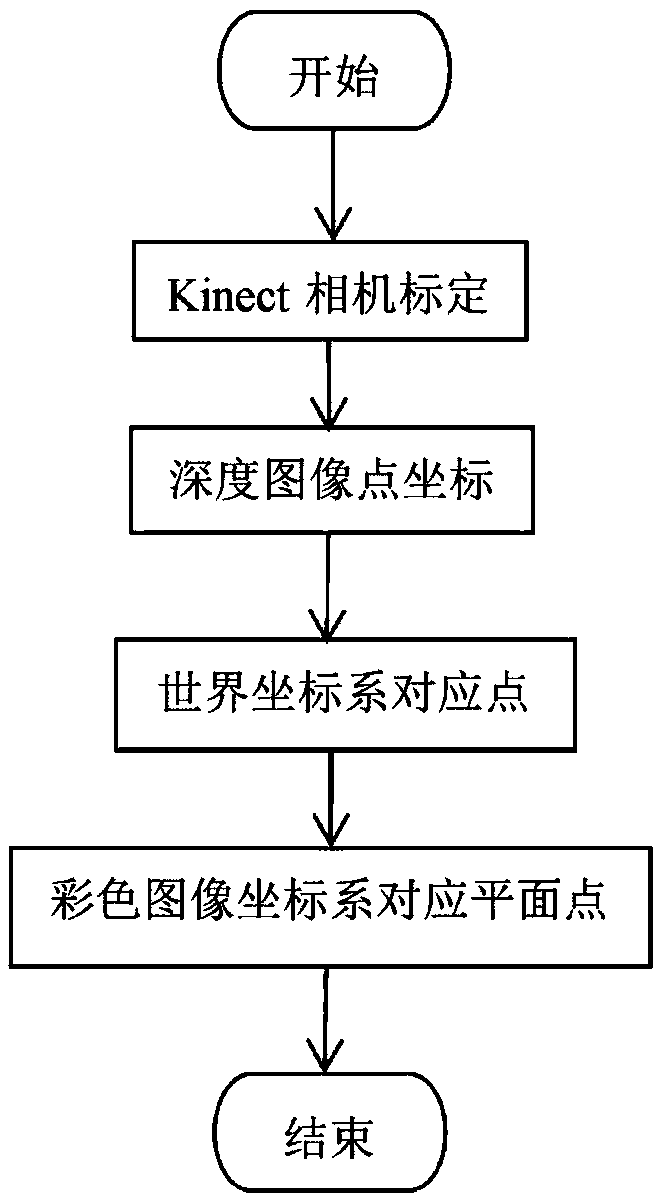

[0051] The specific method is as follows: a Microsoft Kinect camera captures the color image and the depth image of the same scene simultaneously. The pixel intensity of the depth image corresponds to the distance from the camera. Adjusting the shooting distance appropriately yields the best-quality target image.
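The relation between depth-image intensity and camera distance can be illustrated with a minimal sketch. It is not taken from the patent: the function name `depth_to_gray`, the working range `near_mm`/`far_mm`, the use of millimetres, the treatment of 0 as "no reading", and the choice to render nearer pixels brighter are all assumptions made for illustration. Plain Python lists are used to keep the example dependency-free.

```python
def depth_to_gray(depth_mm, near_mm=500, far_mm=1500):
    """Map raw depth values to 8-bit intensities; nearer pixels are brighter.

    depth_mm: 2-D list of ints; 0 is treated as "no reading" (assumption).
    near_mm, far_mm: hypothetical working range set by the shooting distance.
    """
    span = far_mm - near_mm
    gray = []
    for row in depth_mm:
        out_row = []
        for d in row:
            if d <= 0:
                out_row.append(0)  # invalid pixel stays black
                continue
            d = min(max(d, near_mm), far_mm)  # clamp into the working range
            # Round half up to the nearest integer intensity.
            out_row.append(int(255 * (far_mm - d) / span + 0.5))
        gray.append(out_row)
    return gray


depth = [[0, 500, 1000], [1500, 750, 2000]]
gray = depth_to_gray(depth)
print(gray)
```

In this sketch an invalid pixel and a pixel at or beyond `far_mm` both map to 0, so a separate validity mask would be needed wherever the two must be told apart.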

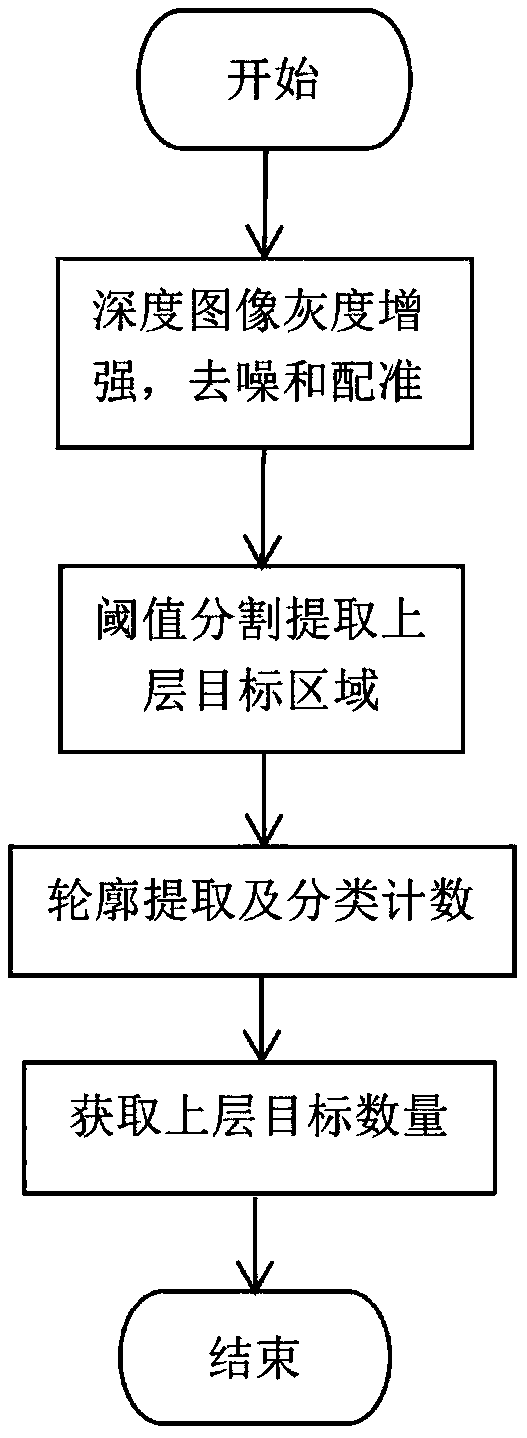

[0052] (2) Image preprocessing operation.

[0053] The specific method is as follows: the original depth image is enhanced by a grayscale transformation, and an improved multi-frame median filter method is proposed to denoise the depth image. The algorithm steps: 1. While shooting, continuously save multiple frames of ima...
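The text above is truncated, so the details of the improved filter are not available here. As a rough illustration of the general idea only, the following sketch applies a plain per-pixel temporal median across several saved frames, followed by a linear grayscale stretch. It is not the patent's improved method; the function names `temporal_median` and `stretch`, the convention that 0 marks an invalid reading, and the choice of a linear stretch as the grayscale transformation are assumptions.

```python
from statistics import median


def temporal_median(frames, invalid=0):
    """Per-pixel median across frames, ignoring invalid readings."""
    height, width = len(frames[0]), len(frames[0][0])
    result = [[invalid] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            samples = [f[y][x] for f in frames if f[y][x] != invalid]
            if samples:  # keep `invalid` when no frame has a reading
                result[y][x] = median(samples)
    return result


def stretch(image, out_max=255):
    """Linearly stretch the image's value range to [0, out_max].

    Any remaining invalid pixels are included in the range as-is.
    """
    values = [v for row in image for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [[0 for _ in row] for row in image]
    scale = out_max / (hi - lo)
    return [[int((v - lo) * scale + 0.5) for v in row] for row in image]


# Three hypothetical 2x2 depth frames of the same static scene.
frames = [
    [[100, 0], [200, 300]],
    [[102, 150], [900, 300]],  # 900 is an outlier spike
    [[101, 152], [202, 0]],
]
denoised = temporal_median(frames)
enhanced = stretch(denoised)
print(denoised)
print(enhanced)
```

The median across frames suppresses the single-frame spike (900) and fills pixels that are missing in only some frames, which is the usual motivation for filtering over time rather than over a spatial neighbourhood.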

