Method for fusing a color depth image and a gray scale depth image

A depth image and color image fusion technology, applied in the fields of image processing, computer vision and human-computer interaction. It addresses the problems that the depth information of the physical space is difficult to reflect fully, that a color image contains no depth information of the physical space, and that the scene information conveyed is limited. The resulting depth map is rich in information, its scene details stand out, and it is easy for the human eye to observe.


Embodiment Construction

[0036] The technical solution of the present disclosure will be described in detail below with reference to the drawings and embodiments.

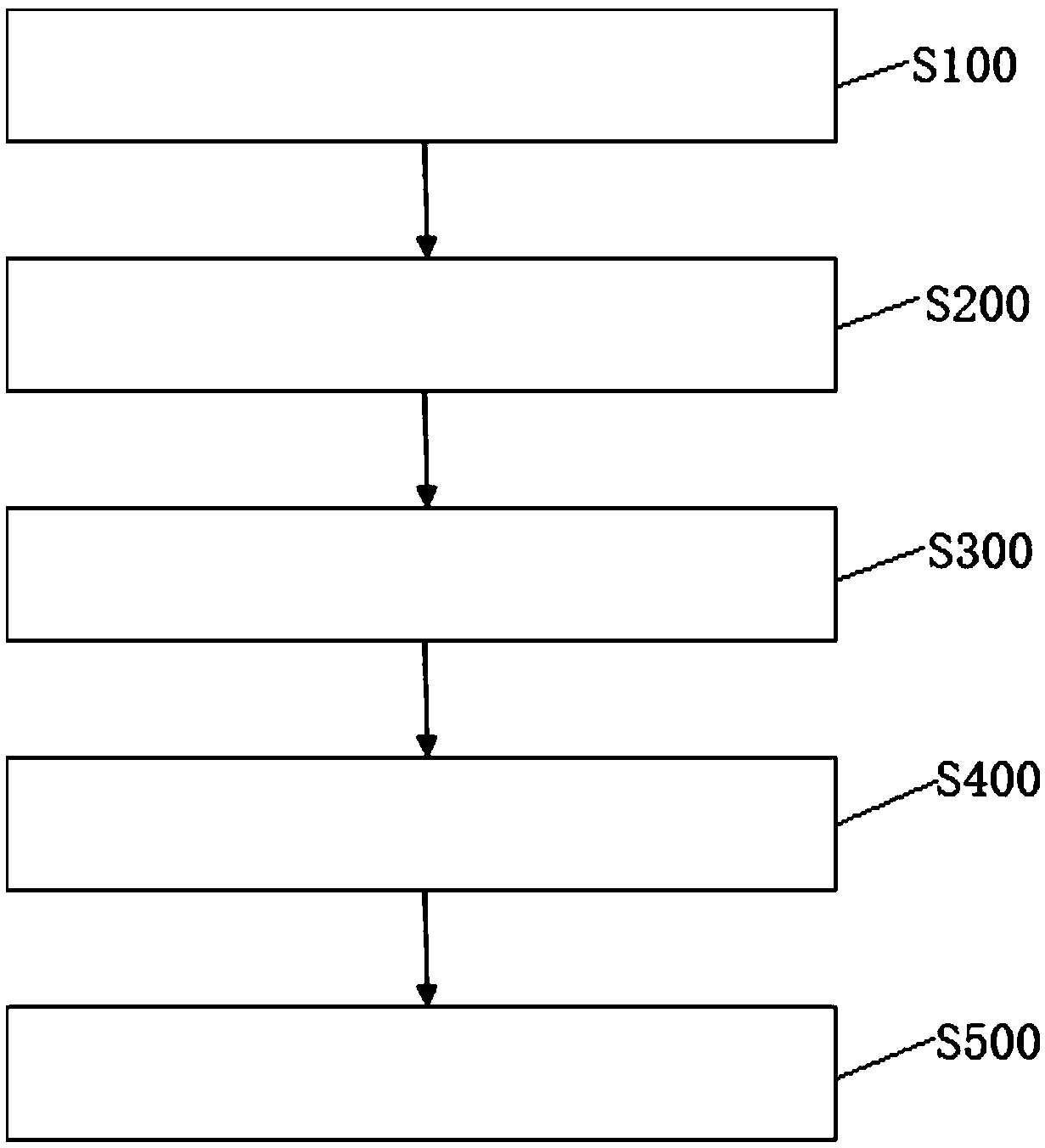

[0037] Referring to Figure 1, a method for fusing color and grayscale depth images comprises the following steps:

[0038] S100: Calibrate the intrinsic and extrinsic parameters of the depth sensor and the RGB sensor of the 3D depth perception device;
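As an illustration of what calibrated parameters make possible, the following minimal sketch maps one depth pixel into RGB image coordinates under a pinhole camera model. The disclosure does not state in this excerpt how the calibration results are used, so the function name `depth_pixel_to_rgb_pixel`, the `(fx, fy, cx, cy)` intrinsics layout, and the rotation/translation convention are all assumptions made for the example.

```python
# Illustrative sketch only: names, parameter layout and the pinhole model
# are assumptions, not taken from the disclosure.

def depth_pixel_to_rgb_pixel(u, v, z, k_depth, k_rgb, rotation, translation):
    """Map depth pixel (u, v) with depth z to RGB image coordinates.

    k_depth, k_rgb: intrinsics as (fx, fy, cx, cy).
    rotation: 3x3 list of rows; translation: 3-vector.
    Both describe the (assumed) transform from the depth frame to the RGB frame.
    """
    fx_d, fy_d, cx_d, cy_d = k_depth
    fx_c, fy_c, cx_c, cy_c = k_rgb

    # Back-project the depth pixel to a 3D point in the depth sensor frame.
    point = ((u - cx_d) * z / fx_d, (v - cy_d) * z / fy_d, z)

    # Apply the extrinsic transform into the RGB sensor frame.
    x, y, zc = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )

    # Project the 3D point onto the RGB image plane.
    return fx_c * x / zc + cx_c, fy_c * y / zc + cy_c


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    k = (500.0, 500.0, 320.0, 240.0)  # hypothetical intrinsics
    print(depth_pixel_to_rgb_pixel(100, 50, 1000.0, k, k, identity, (50.0, 0.0, 0.0)))
```

With identical intrinsics and an identity extrinsic transform the pixel maps to itself; a non-zero translation shifts it by an amount that depends on depth.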

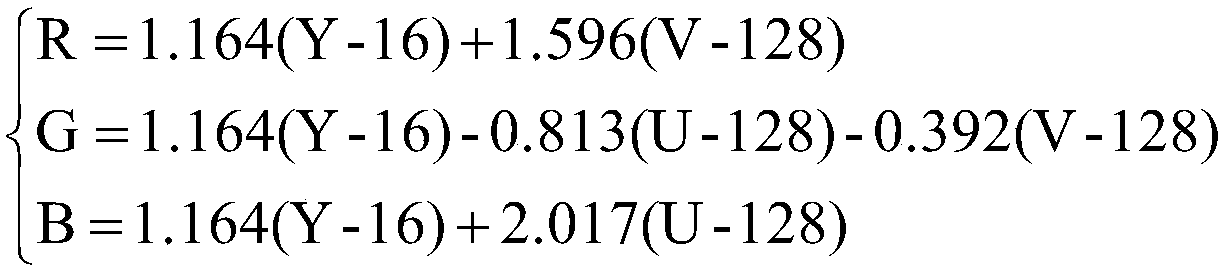

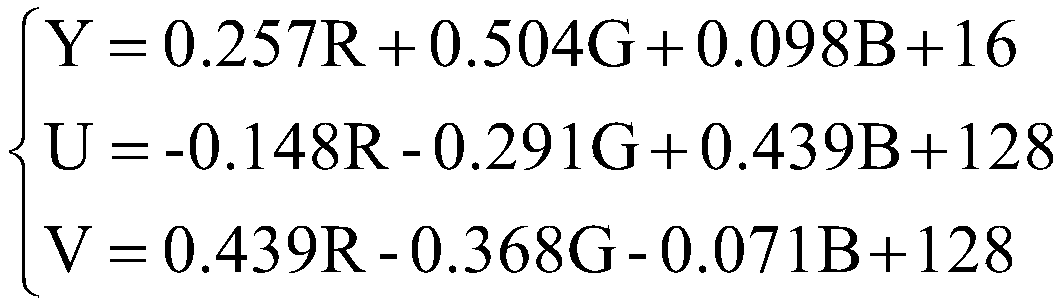

[0039] S200: Obtain a gray-scale depth image through the depth sensor, and map the depth value of each pixel in the gray-scale depth image to the YU or YV channels of the YUV image format to generate a corresponding YUV depth image; at the same time, acquire an RGB color image through the RGB sensor;
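The mapping in step S200 can be sketched as follows. This excerpt does not specify how a depth value is divided between the channels, so the sketch assumes a 16-bit depth value whose high byte goes to Y and whose low byte goes to U, with V held at the neutral value 128. The function names and the bit layout are hypothetical.

```python
# Illustrative sketch only: the 16-bit depth range, the high-byte/low-byte
# split and the neutral V value are assumptions, not taken from the disclosure.

def depth_to_yuv(depth):
    """Pack one 16-bit depth value into a (Y, U, V) triple."""
    if not 0 <= depth <= 0xFFFF:
        raise ValueError("depth must fit in 16 bits")
    y = depth >> 8      # high byte carries the coarse depth
    u = depth & 0xFF    # low byte carries the fine depth
    v = 128             # unused chroma channel kept neutral
    return y, u, v


def yuv_to_depth(y, u, v=128):
    """Recover the 16-bit depth value from the Y and U channels."""
    return (y << 8) | u


def depth_image_to_yuv(depth_image):
    """Apply the per-pixel mapping to a depth image given as a list of rows."""
    return [[depth_to_yuv(d) for d in row] for row in depth_image]


if __name__ == "__main__":
    print(depth_to_yuv(1234))
    print(depth_image_to_yuv([[0, 255], [256, 65535]]))
```

Using the YV pair instead would follow the same pattern with the low byte written to V and U held neutral.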

[0040] S300: Convert the YUV depth image from step S200 into an RGB depth image, compress and encode it together with the RGB color image, and output the result;
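The YUV-to-RGB conversion in step S300 can be sketched per pixel as below. The excerpt does not name a conversion matrix, so this example assumes the full-range BT.601 coefficients used by JFIF, with 8-bit channels and U and V centered on 128. The helper names are hypothetical, and the compression and encoding stage is not shown.

```python
# Illustrative sketch only: the full-range BT.601 (JFIF) coefficients and
# 8-bit channel depth are assumptions, not taken from the disclosure.

def _clamp8(value):
    """Round to the nearest integer and clamp to the 8-bit range."""
    return max(0, min(255, int(round(value))))


def yuv_to_rgb(y, u, v):
    """Convert one full-range YUV pixel to an RGB pixel."""
    r = y + 1.402 * (v - 128)
    g = y - 0.344136 * (u - 128) - 0.714136 * (v - 128)
    b = y + 1.772 * (u - 128)
    return _clamp8(r), _clamp8(g), _clamp8(b)


def yuv_image_to_rgb(yuv_image):
    """Convert a YUV image given as rows of (Y, U, V) triples."""
    return [[yuv_to_rgb(*pixel) for pixel in row] for row in yuv_image]


if __name__ == "__main__":
    print(yuv_to_rgb(100, 128, 200))
```

Under this assumed matrix, some (Y, U, V) triples fall outside the RGB range and are clamped, so any scheme built on it should not rely on every triple surviving the conversion unchanged.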

[0041] S400: Decompress the compressed RGB depth image and RGB color image in step S300, convert the decompressed RGB depth image into a YUV depth imag...
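The RGB-to-YUV conversion named in step S400 can be sketched per pixel as below. As with the forward direction, the conversion matrix is not given in this excerpt, so the example assumes full-range BT.601 (JFIF) coefficients and 8-bit channels. The helper names are hypothetical, and the decompression stage and the remainder of the step are not shown.

```python
# Illustrative sketch only: the full-range BT.601 (JFIF) coefficients and
# 8-bit channel depth are assumptions, not taken from the disclosure.

def _clamp8(value):
    """Round to the nearest integer and clamp to the 8-bit range."""
    return max(0, min(255, int(round(value))))


def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel to a full-range YUV pixel."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    v = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return _clamp8(y), _clamp8(u), _clamp8(v)


def rgb_image_to_yuv(rgb_image):
    """Convert an RGB image given as rows of (R, G, B) triples."""
    return [[rgb_to_yuv(*pixel) for pixel in row] for row in rgb_image]


if __name__ == "__main__":
    print(rgb_to_yuv(255, 0, 0))
```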
