Large-field-of-view scene perception method and system based on omnidirectional vision, medium and device

A scene perception and large-field-of-view technology, applied in the field of large-field-of-view scene perception, that addresses the problems of low algorithm complexity and the inability to effectively identify obstacles, achieving accurate perception of the surrounding environment with excellent scene understanding and robustness.

Examples

Embodiment 1

[0035] The omnidirectional vision-based large-field-of-view scene perception method of this embodiment can run on the ROS (Robot Operating System) platform.

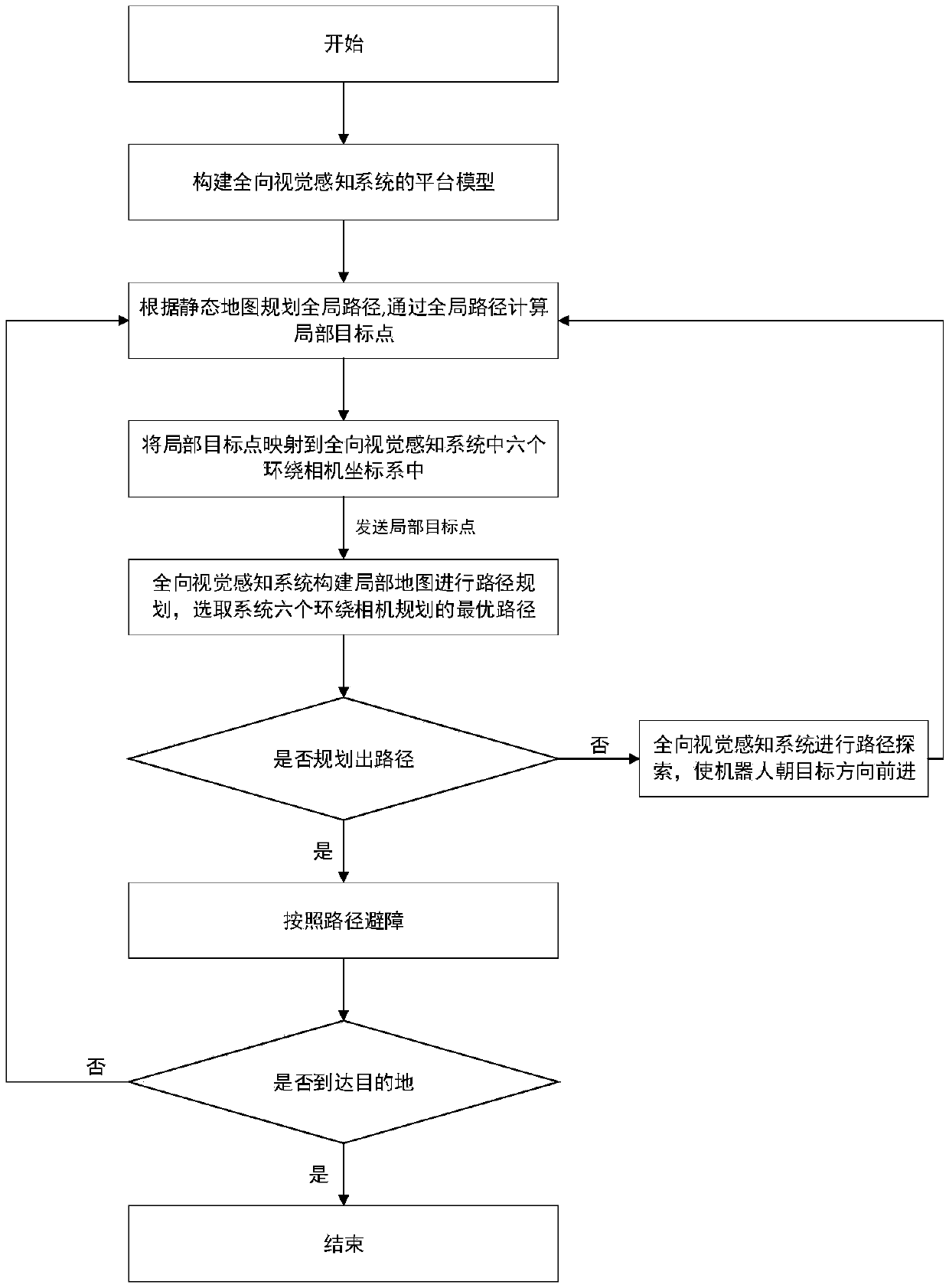

[0036] As shown in Figure 1 and Figure 2, the omnidirectional vision-based large-field-of-view scene perception method of this embodiment at least includes:

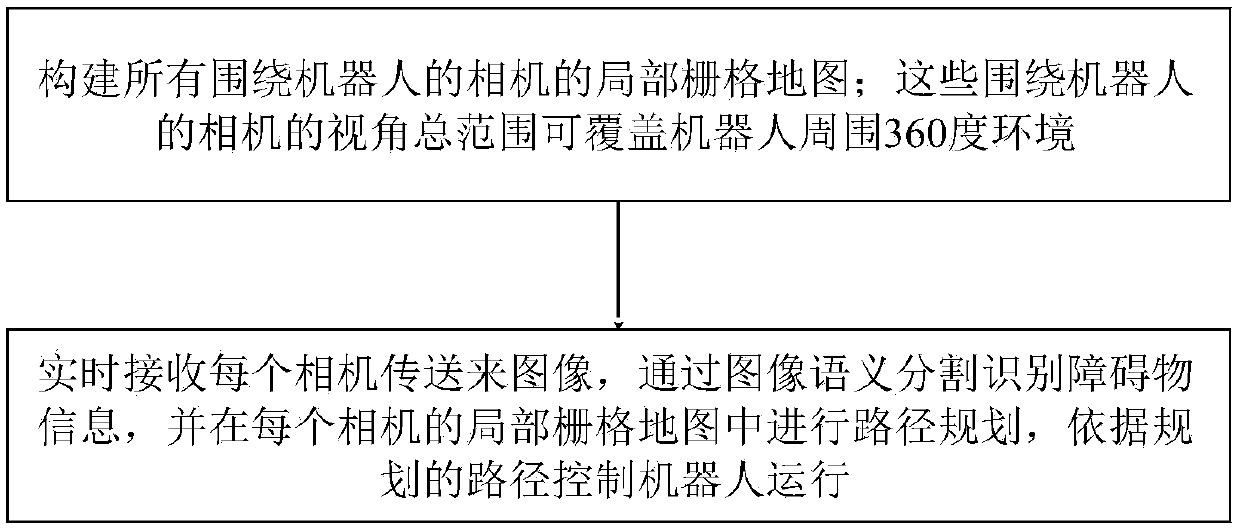

[0037] S101: Construct a local grid map of all cameras surrounding the robot; the total viewing angle range of these cameras surrounding the robot can cover a 360-degree environment around the robot.

[0038] This embodiment takes six cameras as an example: six surround-view cameras are mounted on the robot komodo2, so that environmental information can be collected in all directions across the 360 degrees around the robot.

[0039] It should be noted that other numbers of cameras can also be selected, and the actual number of cameras is determined according to the actual angle of view of the cameras, so as to ensure that the 360° environment is covered.
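The relationship between camera count and angle of view described in [0039] can be illustrated with a small calculation. This is only a sketch under stated assumptions: the cameras are assumed to be identical and evenly spaced around the robot, and the function name `min_camera_count` and the `overlap_deg` parameter are hypothetical and do not come from the patent.

```python
import math


def min_camera_count(horizontal_fov_deg: float, overlap_deg: float = 0.0) -> int:
    """Smallest number of evenly spaced, identical cameras covering 360 degrees.

    horizontal_fov_deg: horizontal angle of view of one camera (assumed value).
    overlap_deg: overlap reserved between neighbouring views (hypothetical
                 parameter; the source does not specify any overlap).
    """
    if not 0.0 < horizontal_fov_deg <= 360.0:
        raise ValueError("horizontal_fov_deg must be in (0, 360]")
    effective_deg = horizontal_fov_deg - overlap_deg
    if effective_deg <= 0.0:
        raise ValueError("overlap_deg must be smaller than horizontal_fov_deg")
    # Each camera contributes its field of view minus the reserved overlap.
    return math.ceil(360.0 / effective_deg)


if __name__ == "__main__":
    # Illustrative angles only; the source does not state the cameras' field of view.
    for fov in (60.0, 90.0, 100.0):
        print(fov, min_camera_count(fov))
```

Under these assumptions, six cameras are sufficient whenever each camera's effective horizontal angle of view is at least 60 degrees.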

[0040] Sp...

Embodiment 2

[0120] The omnidirectional vision-based large field of view scene perception system of this embodiment at least includes:

[0121] (1) Several cameras arranged around the robot, whose combined viewing angle range covers the 360-degree environment around the robot.

[0122] (2) A perception processor, which comprises:

[0123] (2.1) A local grid map construction module, which is used to construct a local grid map of all cameras surrounding the robot; the total viewing angle range of these cameras surrounding the robot can cover a 360-degree environment around the robot.

[0124] Specifically, the local grid map construction module further includes:

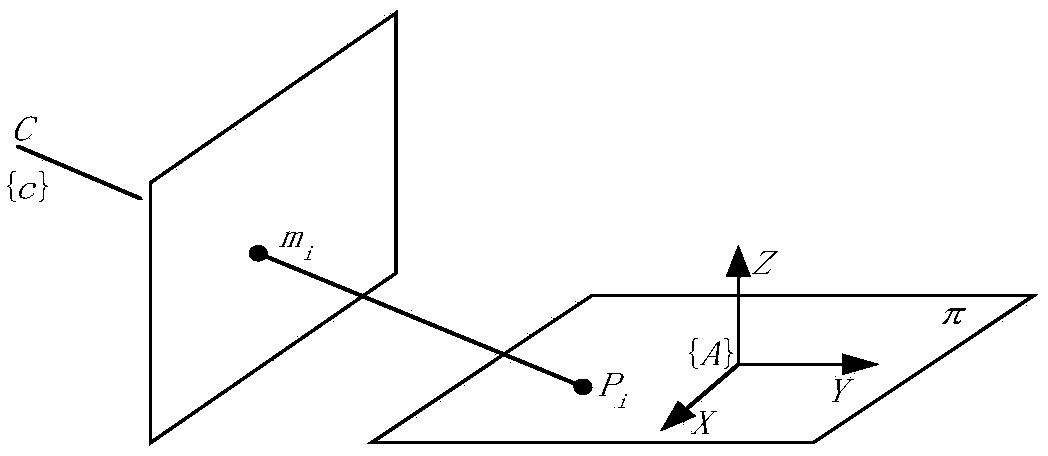

[0125] (2.1.1) a relationship building module, which is used to obtain the relationship between the pixel coordinates and the two-dimensional coordinates of the camera plane according to the corresponding transformation matrix of the camera;

[0126] (2.1.2) a global path pl...
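The mapping described for the relationship building module in (2.1.1), from pixel coordinates to two-dimensional coordinates of the camera plane, might be sketched as follows. The source does not give the form of the transformation matrix; this example assumes it is a 3x3 planar homography, and the function name `pixel_to_plane` and the matrix values are hypothetical placeholders, not calibration data from the patent.

```python
import numpy as np


def pixel_to_plane(H: np.ndarray, pixels: np.ndarray) -> np.ndarray:
    """Map (N, 2) pixel coordinates to (N, 2) plane coordinates.

    H is assumed to be a 3x3 homography (an assumption; the source only
    mentions "the corresponding transformation matrix of the camera").
    """
    pixels = np.asarray(pixels, dtype=float)
    ones = np.ones((pixels.shape[0], 1))
    homogeneous = np.hstack([pixels, ones])   # (N, 3) homogeneous pixel coordinates
    mapped = homogeneous @ H.T                # apply H to every point
    return mapped[:, :2] / mapped[:, 2:3]     # divide by the homogeneous scale


if __name__ == "__main__":
    # Hypothetical matrix: 0.01 plane units per pixel, origin at pixel (320, 240).
    H = np.array([
        [0.01, 0.0, -3.2],
        [0.0, 0.01, -2.4],
        [0.0, 0.0, 1.0],
    ])
    print(pixel_to_plane(H, np.array([[320, 240], [420, 240]])))
```

In practice such a matrix would come from camera calibration; once it is known, each pixel can be assigned to a cell of the local grid map by discretizing the resulting plane coordinates.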

Embodiment 3

[0141] This embodiment provides a computer-readable storage medium on which a computer program is stored. When the program is executed by a processor, it implements the steps of the omnidirectional vision-based large-field-of-view scene perception method shown in Figure 1.
