An image description method based on deep learning

An image description method based on deep learning technology, applied in the field of image description. It addresses the problems of low sentence accuracy, simple structure, and slow model convergence, and achieves reduced training time, high accuracy, and good spatial expression ability.

Examples

Embodiment 1

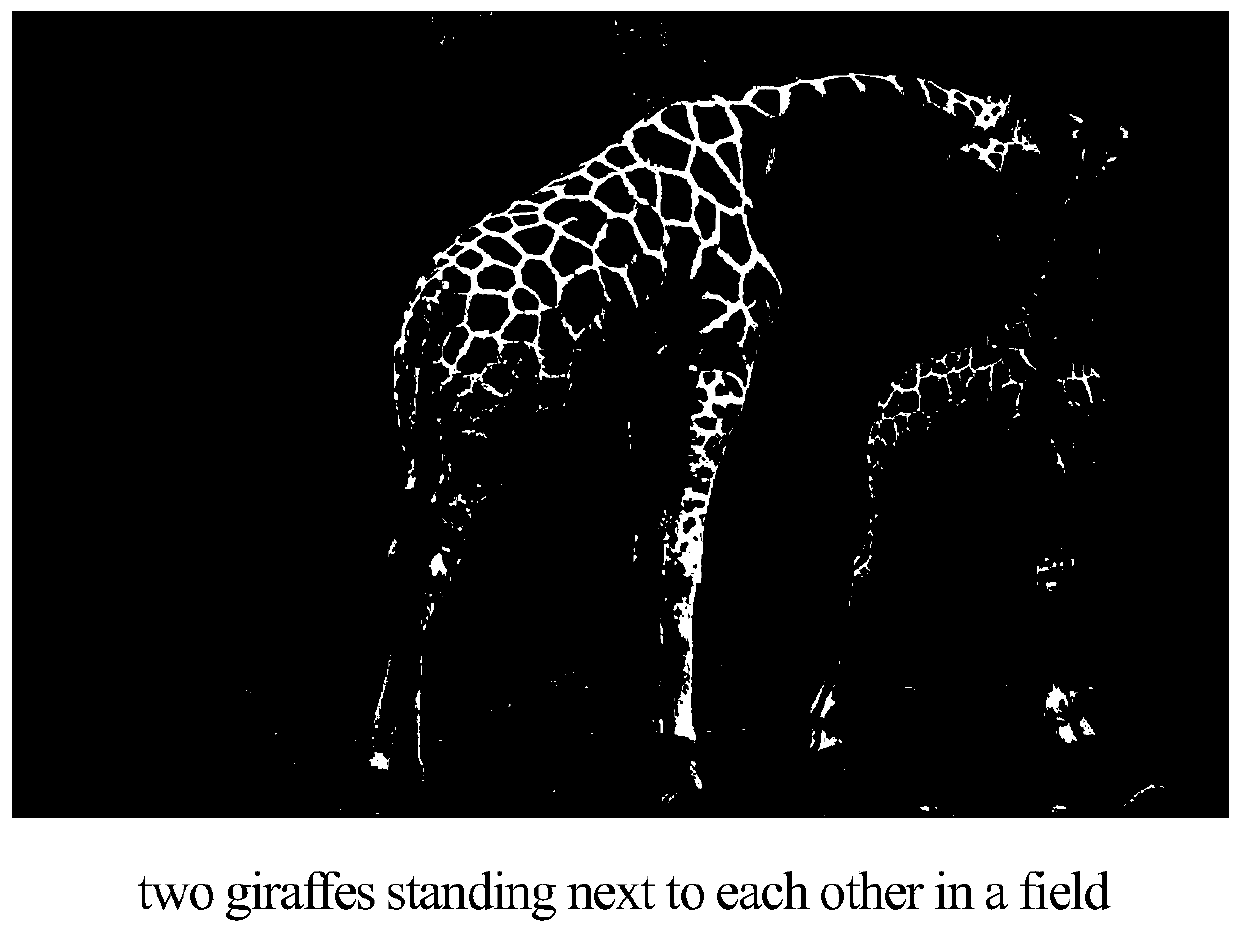

[0031] The image dataset used in this embodiment is the MSCOCO dataset, which consists of images and manually annotated sentences corresponding to the images.

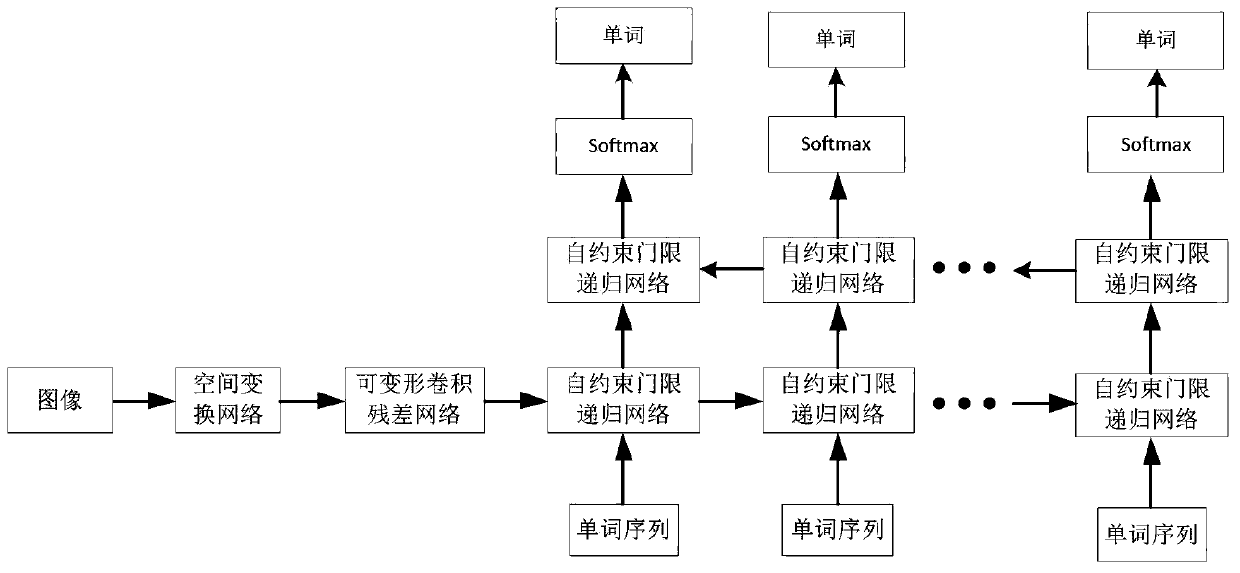

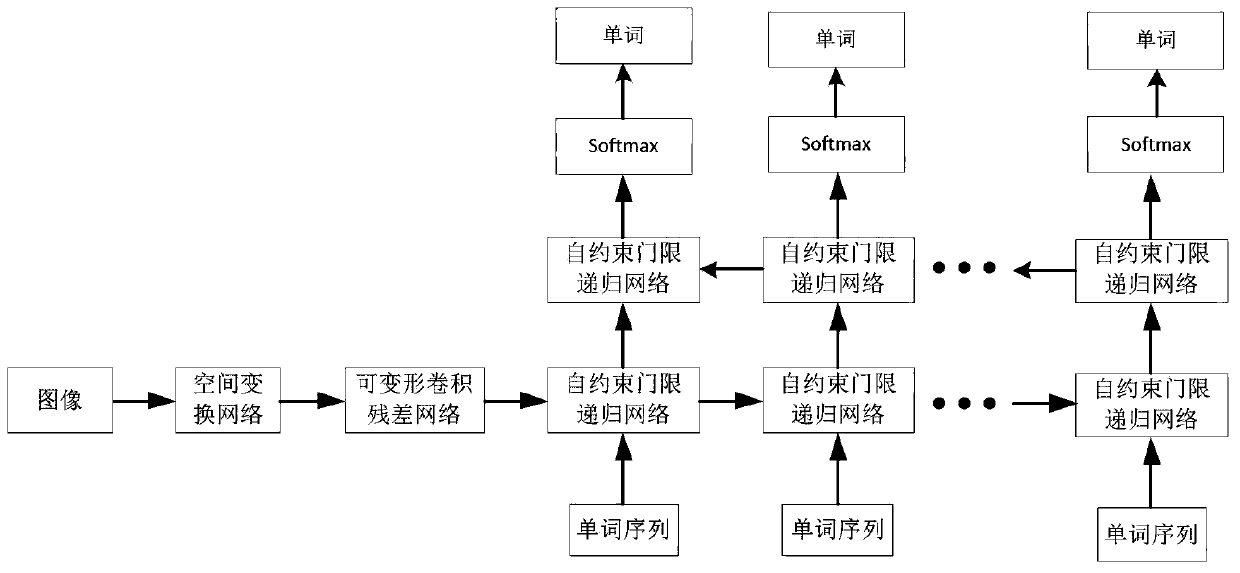

[0032] As shown in Figure 1, the image description method based on deep learning of the present embodiment consists of the following steps:

[0033] (1) Select 82,783 images and manually annotated sentences corresponding to the images from the MSCOCO dataset as the training set, and select 4,000 images as the test set;

[0034] (2) Build an image description model

[0035] The image description model is composed of a spatial transformation network, a deformable convolutional residual network, and a bidirectional self-constrained threshold recurrent network. The spatial transformation network and the deformable convolutional residual network are used to extract the features of the image. The bidirectional self-constrained threshold recurrent network is used to construct a language model that generates sentences corresponding to im...
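Steps (1) and (2) above can be illustrated with a minimal sketch. It is not the patented implementation: the names `split_dataset` and `ImageDescriptionModel` are hypothetical, the three components are stand-in callables rather than real networks, and two points are assumptions the text does not confirm: that the training and test sets are disjoint random draws, and that the spatial transformation network runs before the deformable convolutional residual network. The toy sizes (80 and 10) stand in for the 82,783 training images and 4,000 test images of the embodiment.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# One record = (image identifier, list of manually annotated sentences).
Record = Tuple[str, List[str]]


def split_dataset(records: Sequence[Record], n_train: int, n_test: int,
                  seed: int = 0) -> Tuple[List[Record], List[Record]]:
    """Draw a training set and a test set from the annotated records.

    Assumption: the two sets are disjoint and chosen by a seeded shuffle;
    the source only states the sizes of the two sets.
    """
    if n_train + n_test > len(records):
        raise ValueError("not enough records for the requested split")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:n_train + n_test]


@dataclass
class ImageDescriptionModel:
    """Chains the three components named in step (2).

    Each field is a placeholder callable (hypothetical), not a real network.
    """
    spatial_transformer: Callable   # image -> spatially aligned image
    feature_extractor: Callable     # aligned image -> image features
    language_model: Callable        # image features -> sentence

    def describe(self, image):
        # Assumed order: spatial transformation first, then feature extraction.
        aligned = self.spatial_transformer(image)
        features = self.feature_extractor(aligned)
        return self.language_model(features)


# Toy data standing in for MSCOCO images and their annotated sentences.
records = [(f"img_{i}", [f"caption for image {i}"]) for i in range(100)]
train_set, test_set = split_dataset(records, n_train=80, n_test=10)

# Stand-in components so the data flow can be exercised end to end.
model = ImageDescriptionModel(
    spatial_transformer=lambda image: image,
    feature_extractor=lambda image: [float(len(image))],
    language_model=lambda features: f"a picture with feature {features[0]}",
)
caption = model.describe("img_0")
```

Keeping the three components as separate callables mirrors the division of labor described above: the first two produce image features and the third turns them into a sentence, so each stage could be replaced independently.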
