A method for identifying an electroencephalogram image based on a deep convolutional neural network

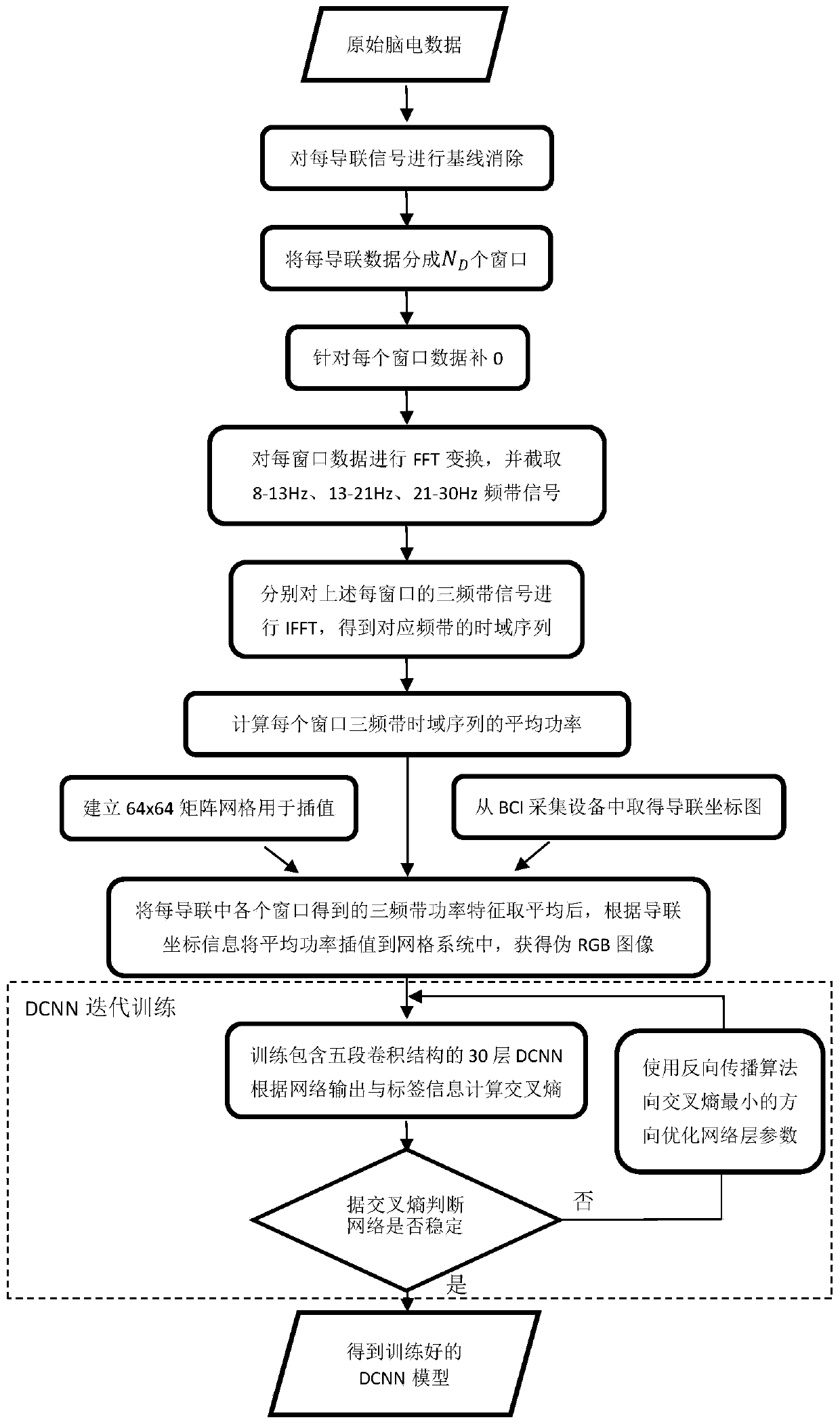

A deep convolutional neural network recognition technique, applied to feature dimensionality reduction and classification. It addresses problems that degrade classification results, such as loss of lead electrode position information and poor network fitting ability, with the effect of improving feature expression and recognition accuracy and enhancing the network's fitting ability.


AI Technical Summary

Problems solved by technology

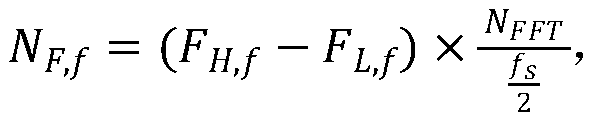


Embodiment Construction

[0076] The experiments of the present invention were carried out with the TensorFlow framework under the Ubuntu (64-bit) operating system, and the convolutional neural network was trained on an NVIDIA GTX 1080 Ti graphics card.

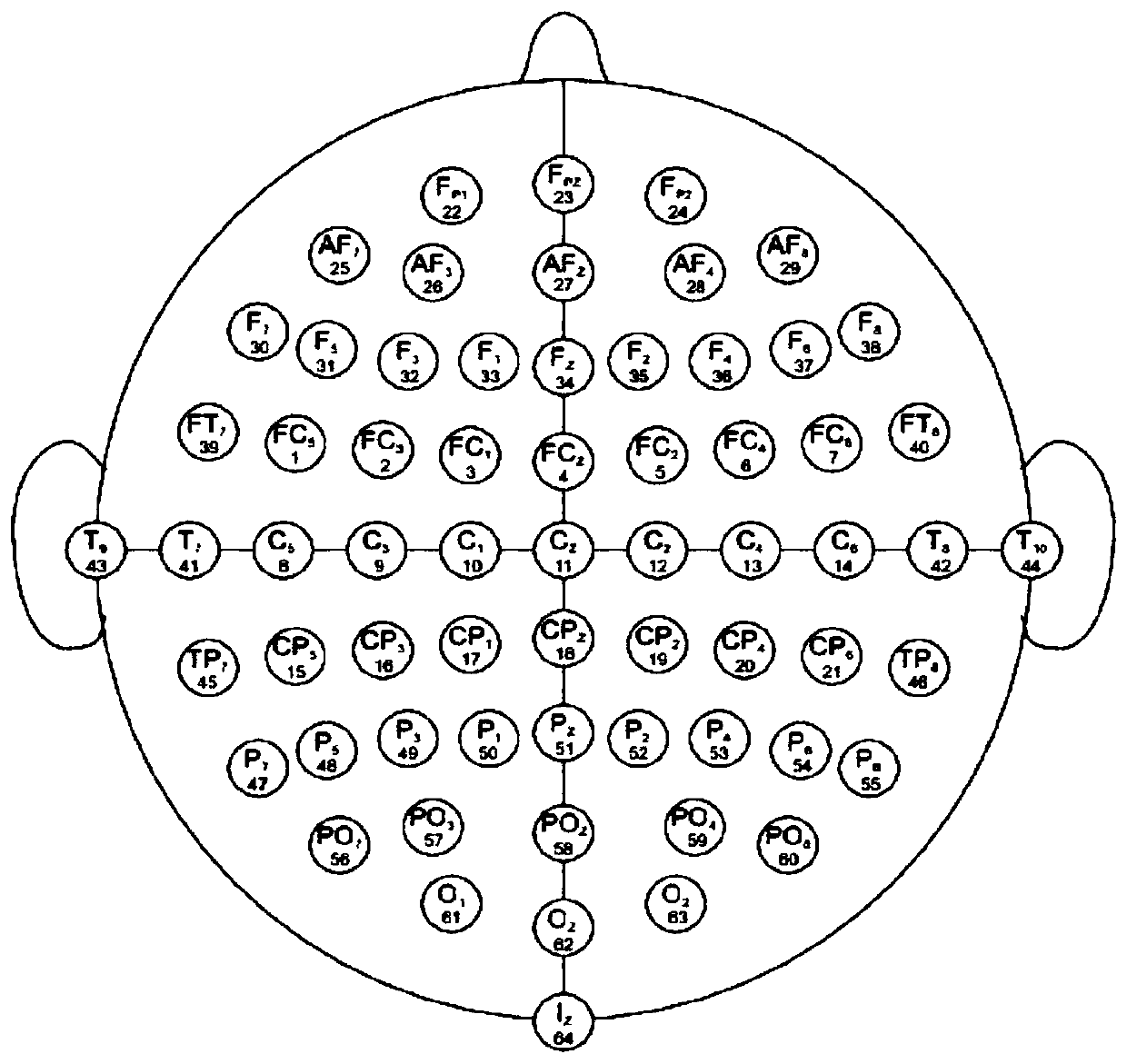

[0077] The MI-EEG data set used in the present invention comes from the public database of the BCI 2000 acquisition system. It was collected by the system's developers using a 64-lead electrode cap arranged according to the international standard 10-10 system, at a sampling frequency of 160 Hz. The distribution of the electrodes on the scalp is shown in Figure 1.

[0078] Each trial lasts 5 s. The interval 0-1 s is the resting period: a cross cursor appears on the screen and, at t = 0 s, a short alert tone sounds. The interval 1-4 s is the motor imagery period: a prompt cursor appears at the top or bottom of the screen. If the cursor is at the top, the subject imagines moving their hands; if the cursor appears at the bottom, the subject imagines mov...
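The timing described above can be turned into sample indices. The following is a minimal sketch, not the patent's own preprocessing: it assumes a trial is stored as a list of 64 channels with 800 samples each (5 s at 160 Hz), and the names `to_samples` and `split_trial` are hypothetical helpers introduced only for illustration. The visible text does not describe the final 4-5 s interval, so the sketch leaves it unlabeled.

```python
FS_HZ = 160           # sampling frequency stated in the text
N_CHANNELS = 64       # number of leads stated in the text
TRIAL_S = 5           # trial duration in seconds stated in the text
REST_S = (0, 1)       # resting period, in seconds
IMAGERY_S = (1, 4)    # motor imagery period, in seconds


def to_samples(t_start, t_end, fs=FS_HZ):
    """Convert a time window in seconds to a half-open sample index range."""
    return int(round(t_start * fs)), int(round(t_end * fs))


def split_trial(trial, fs=FS_HZ):
    """Split one trial into its resting and motor imagery segments.

    `trial` is assumed to be a sequence of channels, each a sequence of
    samples covering the full 5 s trial (an assumption of this sketch).
    """
    expected = TRIAL_S * fs
    for channel in trial:
        if len(channel) != expected:
            raise ValueError(f"expected {expected} samples per channel")
    r0, r1 = to_samples(REST_S[0], REST_S[1], fs)
    m0, m1 = to_samples(IMAGERY_S[0], IMAGERY_S[1], fs)
    rest = [channel[r0:r1] for channel in trial]
    imagery = [channel[m0:m1] for channel in trial]
    return rest, imagery


# Usage with placeholder data (zeros, not real EEG).
trial = [[0.0] * (TRIAL_S * FS_HZ) for _ in range(N_CHANNELS)]
rest, imagery = split_trial(trial)
print(len(rest), len(rest[0]))        # channels, samples in the resting period
print(len(imagery), len(imagery[0]))  # channels, samples in the imagery period
```

Under these assumptions the resting segment spans 160 samples per channel and the motor imagery segment 480 samples per channel.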
