Neural network acceleration method based on cooperative processing of multiple FPGAs

A neural network and collaborative processing technology applied in the field of neural network optimization. It addresses problems such as degraded neural network processing performance and improves the energy efficiency ratio.


Detailed Description of Embodiments

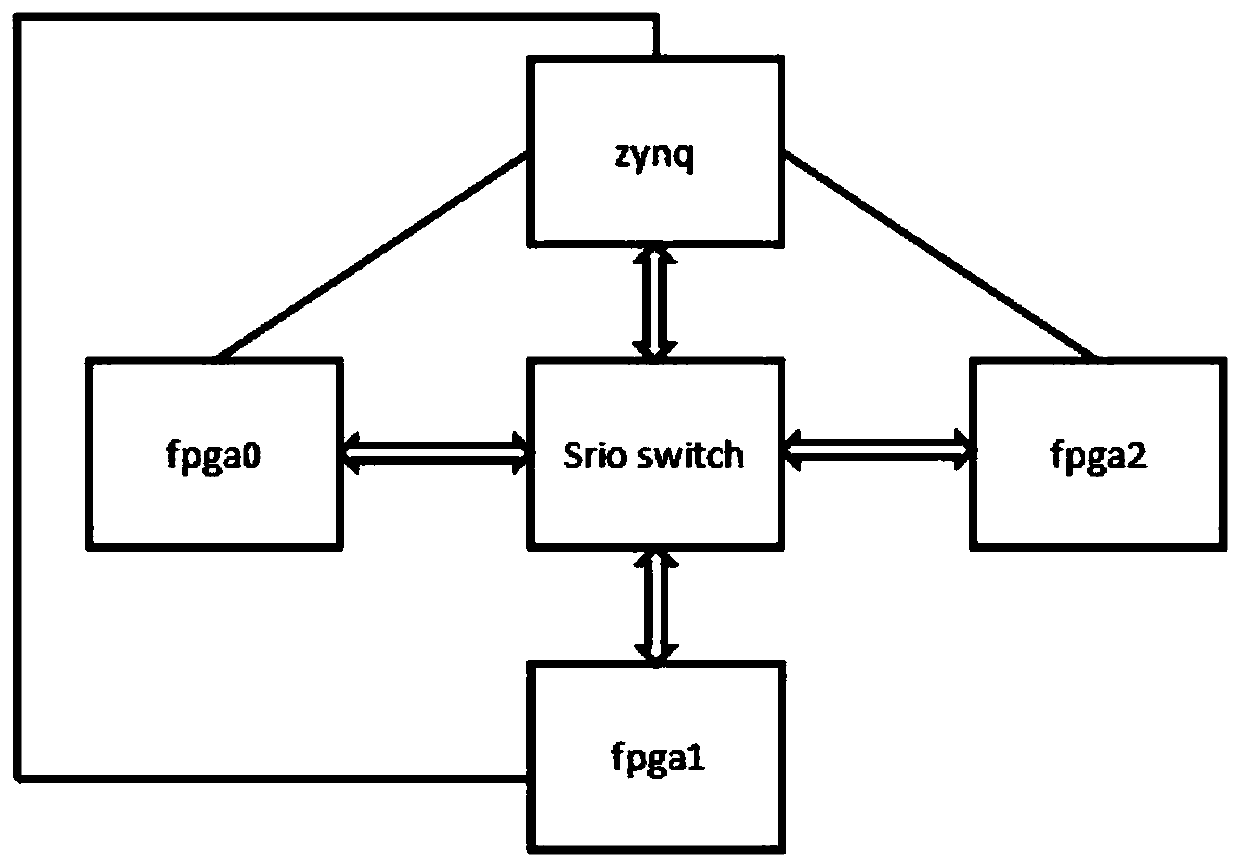

[0024] The present invention provides a neural network acceleration method based on cooperative processing of multiple FPGAs. A neural network acceleration board is built, and an SoC chip and multiple FPGAs are arranged on it. The SoC chip includes a ZYNQ chip, which is interconnected with each FPGA.
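As a rough illustration of the board topology described in [0024], the sketch below models one ZYNQ SoC linked to each FPGA on the board. The class and function names (`Fpga`, `AccelerationBoard`, `build_board`) and the FPGA count are hypothetical. The text does not say whether the FPGAs are also linked to one another, so only SoC-to-FPGA links are modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Fpga:
    """One FPGA on the acceleration board (hypothetical model)."""
    fpga_id: int


@dataclass
class AccelerationBoard:
    """Hypothetical model of the board: one ZYNQ SoC plus several FPGAs."""
    soc_name: str = "ZYNQ"
    fpgas: list[Fpga] = field(default_factory=list)

    def links(self) -> list[tuple[str, int]]:
        # The text states that the ZYNQ chip is interconnected with each FPGA;
        # this is modeled as one SoC-to-FPGA link per device (a star topology).
        return [(self.soc_name, f.fpga_id) for f in self.fpgas]


def build_board(num_fpgas: int) -> AccelerationBoard:
    """Create a board with num_fpgas FPGAs, numbered from 0."""
    if num_fpgas < 1:
        raise ValueError("the board needs at least one FPGA")
    return AccelerationBoard(fpgas=[Fpga(i) for i in range(num_fpgas)])


board = build_board(4)
print(board.links())  # [('ZYNQ', 0), ('ZYNQ', 1), ('ZYNQ', 2), ('ZYNQ', 3)]
```

Keeping the SoC as the single hub reflects the stated role of the ZYNQ chip as the controller that distributes parameters and activates FPGAs.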

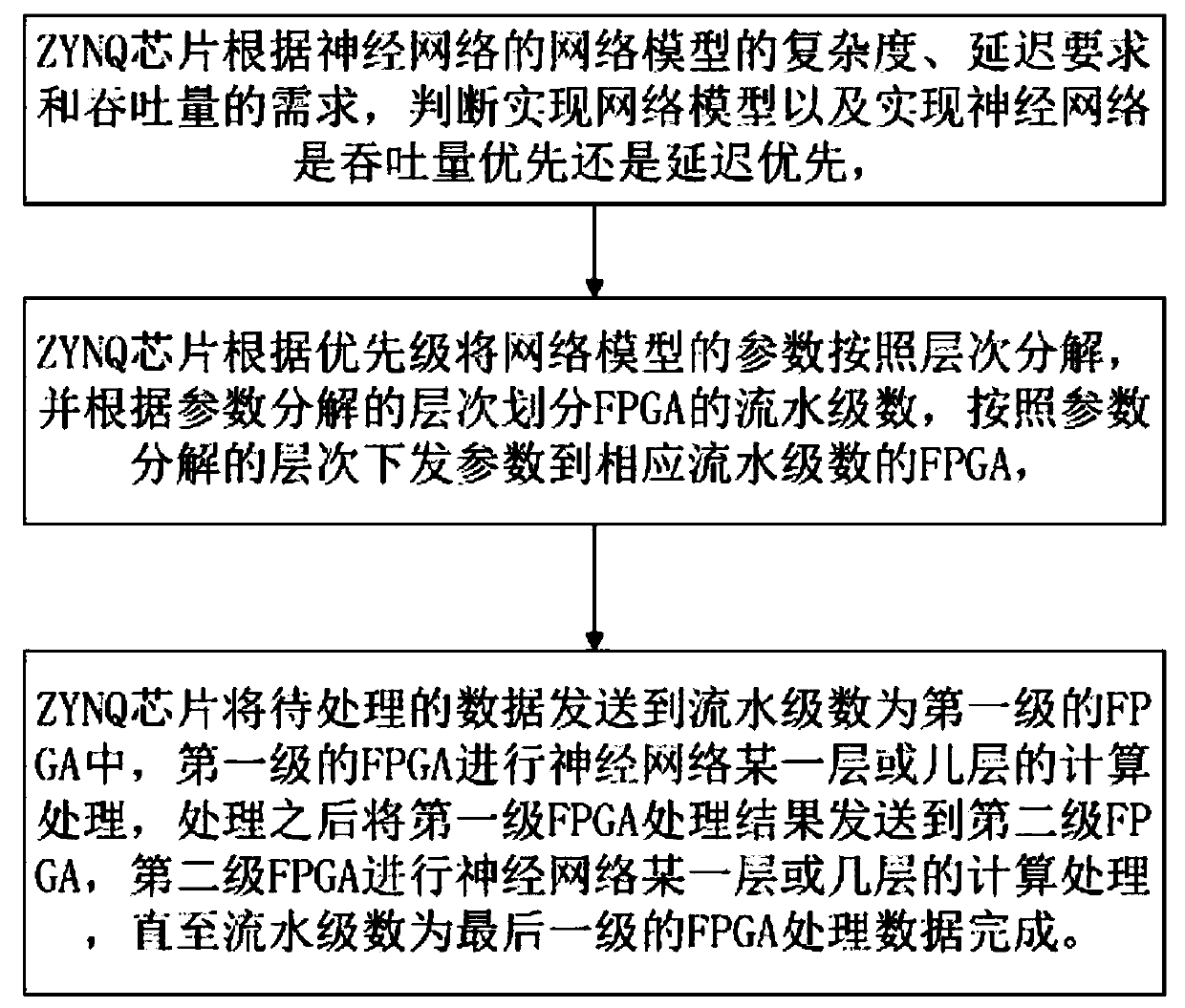

[0025] The ZYNQ chip decomposes the parameters of the neural network model into levels according to the model's complexity, latency requirements, and throughput requirements. It divides the FPGAs into pipeline stages according to the levels of this parameter decomposition and sends the parameters of each level to the FPGAs of the corresponding pipeline stage. It then controls which FPGAs are activated at each pipeline stage according to the neural network model, until the FPGAs of the last pipeline stage complete the data processing.
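The text does not specify how the levels are chosen, so the sketch below substitutes a standard technique: a contiguous linear partition of the layers that minimizes the largest per-stage cost, since the slowest stage bounds pipeline throughput. It assumes hypothetical integer cost estimates per layer, one FPGA per pipeline stage, and zero inter-FPGA transfer time. All names (`partition_layers`, `pipeline_finish_times`, `layer_costs`) are illustrative, not taken from the patent.

```python
def partition_layers(costs: list[int], num_stages: int) -> list[list[int]]:
    """Split layer indices into at most num_stages contiguous groups,
    minimizing the largest group cost (binary search on the stage limit)."""
    if num_stages < 1:
        raise ValueError("need at least one pipeline stage")
    if not costs:
        return []

    def stages_needed(limit: int) -> int:
        # Greedily pack layers into stages whose cost does not exceed limit.
        count, load = 1, 0
        for c in costs:
            if load + c > limit:
                count, load = count + 1, c
            else:
                load += c
        return count

    lo, hi = max(costs), sum(costs)
    while lo < hi:
        mid = (lo + hi) // 2
        if stages_needed(mid) <= num_stages:
            hi = mid
        else:
            lo = mid + 1

    # Rebuild the groups greedily under the smallest feasible limit.
    groups, current, load = [], [], 0
    for i, c in enumerate(costs):
        if current and load + c > lo:
            groups.append(current)
            current, load = [], 0
        current.append(i)
        load += c
    groups.append(current)
    return groups


def pipeline_finish_times(stage_costs: list[int], num_inputs: int) -> list[int]:
    """Time at which each input leaves the last stage. A stage starts an input
    once the input has left the previous stage and the stage is free."""
    prev = [0] * len(stage_costs)  # finish times of the previous input
    done = []
    for _ in range(num_inputs):
        t, cur = 0, []
        for s, cost in enumerate(stage_costs):
            t = max(t, prev[s]) + cost
            cur.append(t)
        prev = cur
        done.append(cur[-1])
    return done


layer_costs = [4, 2, 3, 6, 1, 5]  # hypothetical per-layer cost estimates
stages = partition_layers(layer_costs, num_stages=3)
stage_costs = [sum(layer_costs[i] for i in g) for g in stages]
assignment = {f"FPGA{s}": g for s, g in enumerate(stages)}
print(assignment)  # {'FPGA0': [0, 1, 2], 'FPGA1': [3, 4], 'FPGA2': [5]}
print(pipeline_finish_times(stage_costs, num_inputs=3))  # [21, 30, 39]
```

After the first input fills the pipeline, results leave the last stage every 9 time units, the cost of the slowest stage. Balancing stage costs is therefore one plausible way to trade latency against throughput when choosing the decomposition levels.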

[0026] The invention also provides a neural network accelerator based...
