Relational reasoning method, device and equipment based on deep neural network

A relational reasoning technique based on a deep neural network, applied in the fields of reasoning methods, neural learning methods, biological neural network models, etc. It addresses the problems of unreasonable use and low accuracy in relational reasoning, and achieves the effect of improving accuracy.


Examples

Embodiment 1

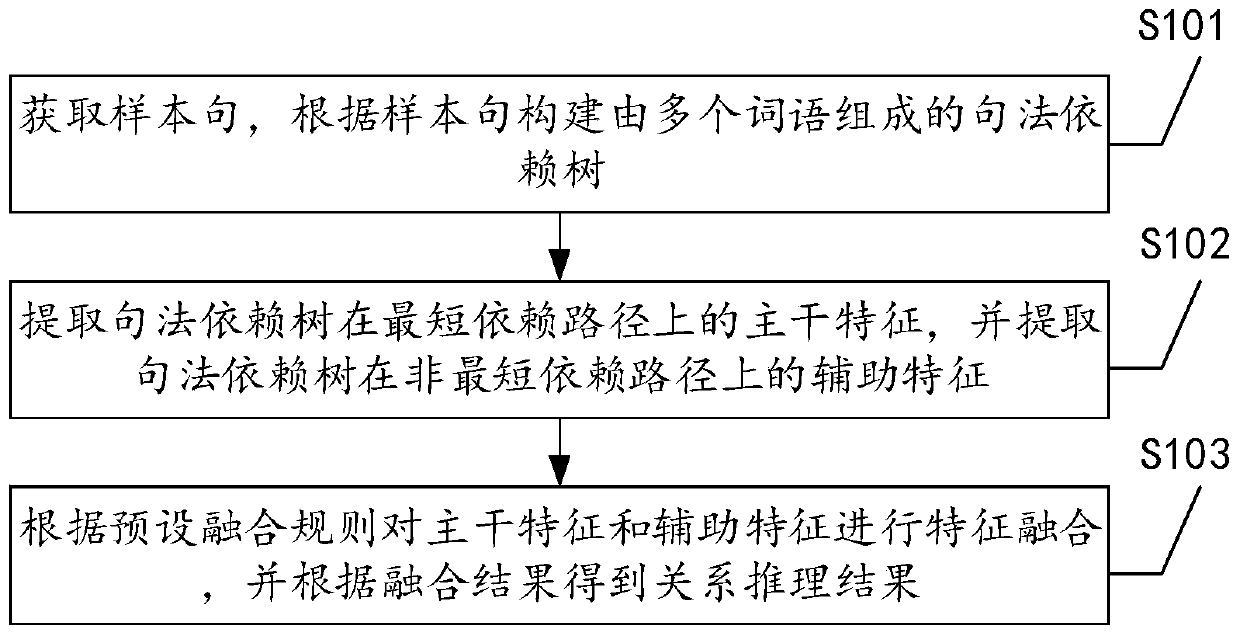

[0047] The following introduces the first embodiment of the relational reasoning method based on a deep neural network provided by this application. Referring to Figure 1, the first embodiment includes:

[0048] Step S101: Obtain sample sentences, and construct a syntax dependency tree composed of multiple words according to the sample sentences.

[0049] The sample sentence may specifically be a sequence of words. In this embodiment, the sample sentence is first segmented into words, part-of-speech analysis is then performed on the segmentation result, and the dependency relationships between the segmented words are analyzed. This finally yields, for the sample sentence, the word segmentation result, the part-of-speech result, the syntax analysis tree, and the dependency tree (that is, the syntax dependency tree mentioned above). Specifically, an open-source parser, such as the Stanford parser, can be used to implement this process. ...
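The output of this step can be pictured as a small tree data structure. The following is a minimal sketch, not the parser's actual output format: the `Token` class, the `build_dependency_tree` helper, and the hand-annotated parse of an example sentence are all assumptions introduced for illustration. The tags and relation labels follow the style of Penn Treebank tags and Stanford typed dependencies.

```python
from dataclasses import dataclass, field


@dataclass
class Token:
    index: int      # 1-based position of the word in the sentence
    word: str       # word segmentation result
    pos: str        # part-of-speech result
    head: int       # index of the head word; 0 marks the root
    relation: str   # dependency relation to the head
    children: list = field(default_factory=list)


def build_dependency_tree(rows):
    """Link tokens into a tree and return (root, tokens_by_index)."""
    tokens = {
        i: Token(i, word, pos, head, rel)
        for i, (word, pos, head, rel) in enumerate(rows, start=1)
    }
    root = None
    for tok in tokens.values():
        if tok.head == 0:
            root = tok
        else:
            tokens[tok.head].children.append(tok.index)
    return root, tokens


# Hand-annotated parse of "Alice founded the company" (illustrative only,
# not produced by running a parser).
ROWS = [
    ("Alice", "NNP", 2, "nsubj"),
    ("founded", "VBD", 0, "root"),
    ("the", "DT", 4, "det"),
    ("company", "NN", 2, "dobj"),
]

root, tokens = build_dependency_tree(ROWS)
print(root.word, [tokens[c].word for c in root.children])
```

In practice the rows would come from a parser rather than being written by hand; only the linking step is shown here.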

Embodiment 2

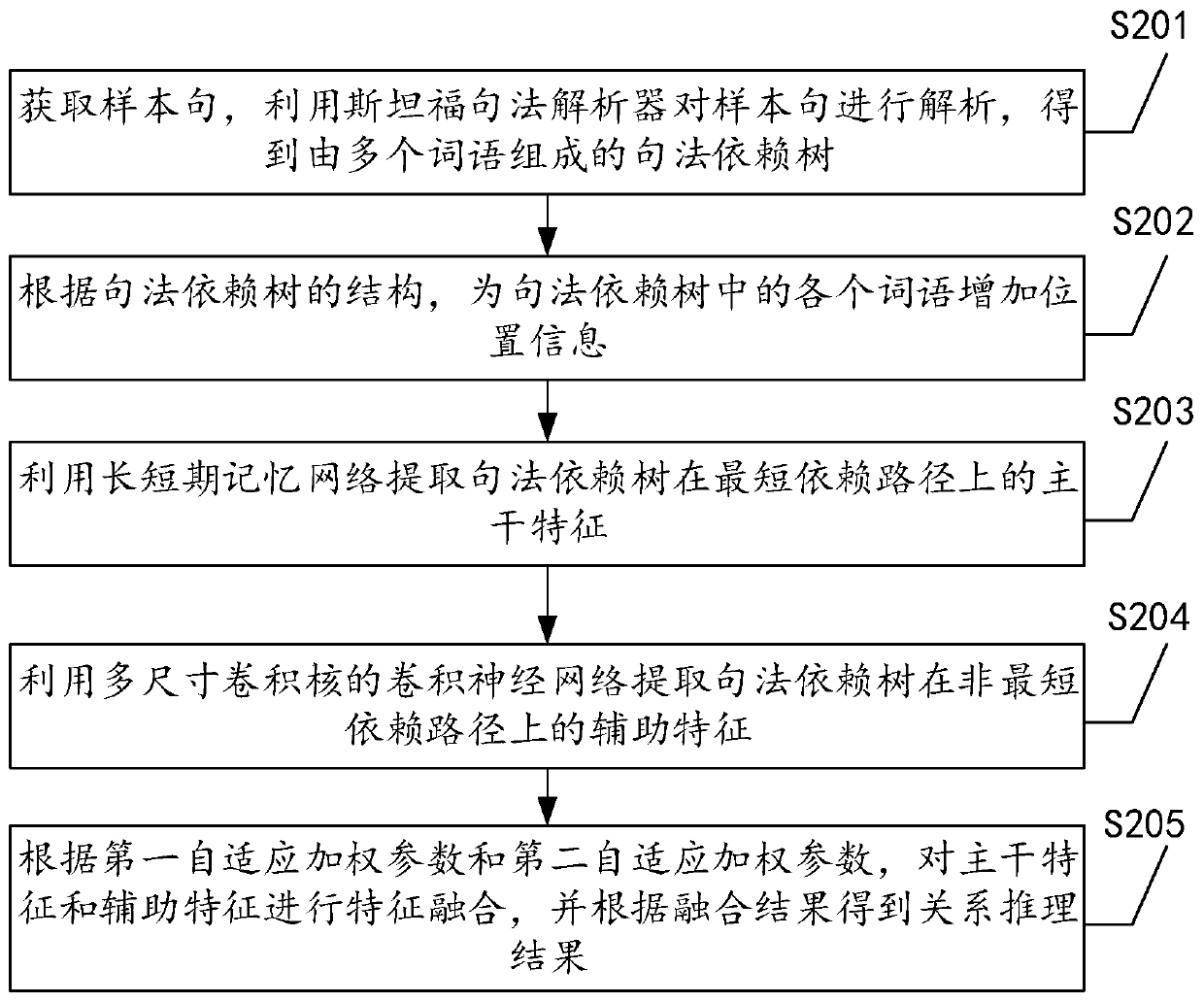

[0055] The second embodiment of the relational reasoning method based on a deep neural network provided by this application is introduced in detail below. The second embodiment is implemented on the basis of the first embodiment and extends it to a certain degree. Specifically, referring to Figure 2, the second embodiment includes:

[0056] Step S201: Obtain a sample sentence, analyze the sample sentence with a Stanford syntax parser, and obtain a syntax dependency tree composed of multiple words.

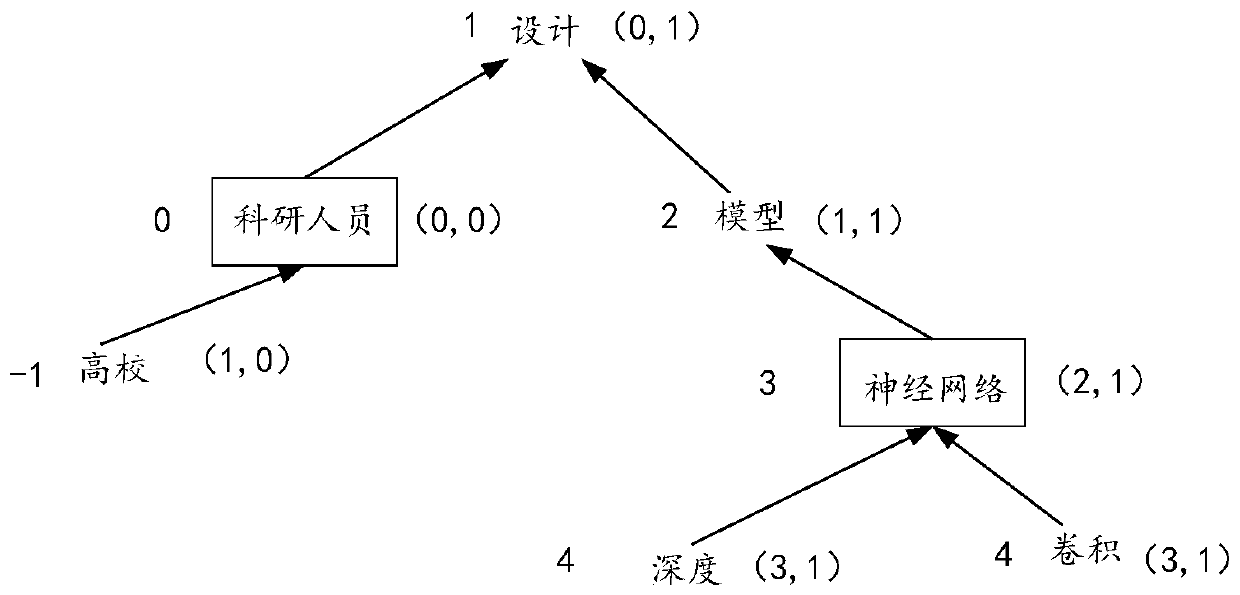

[0057] This embodiment parses the sample sentence to determine whether its structure conforms to the given grammar and, by constructing a syntax dependency tree, analyzes the structure of the sentence and the relationships between the syntactic components at each level; that is, it determines which words in the sentence constitute a phrase, and which words are the subject or object of the verb. Perform word segme...
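To make the two questions above concrete (which words form a phrase, and which words are the subject or object of a verb), here is a minimal sketch over a hand-written dependency parse. The helper names `arguments_of` and `phrase_of`, the example sentence, and the choice of the `nsubj` and `dobj` labels (from Stanford typed dependencies) are assumptions for illustration; the source does not specify the label set.

```python
# Each row is (word, head index, relation); head index 0 marks the root.
# Hand-written example parse of "Alice founded the company".
PARSE = [
    ("Alice", 2, "nsubj"),
    ("founded", 0, "root"),
    ("the", 4, "det"),
    ("company", 2, "dobj"),
]


def arguments_of(parse, verb_index):
    """Return the subject and object words attached to the verb at verb_index."""
    roles = {"nsubj": "subject", "dobj": "object"}
    found = {"subject": [], "object": []}
    for word, head, relation in parse:
        if head == verb_index and relation in roles:
            found[roles[relation]].append(word)
    return found


def phrase_of(parse, head_index):
    """Collect the words of the subtree rooted at head_index, in sentence order."""
    members = {head_index}
    changed = True
    while changed:
        changed = False
        for i, (_, head, _) in enumerate(parse, start=1):
            if head in members and i not in members:
                members.add(i)
                changed = True
    return [parse[i - 1][0] for i in sorted(members)]


print(arguments_of(PARSE, 2))
print(phrase_of(PARSE, 4))
```

Treating a subtree as a phrase is a simplification that works for this example; it is not claimed to be how the embodiment delimits phrases.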

Implementation

[0087] As an optional implementation manner, the device further includes:

[0088] Position information adding module 904: used to add position information to each word in the syntax dependency tree according to the structure of the syntax dependency tree, where the position information includes first position information and second position information. The first position information is a path vector from the current word to the target word. The second position information is a two-element array consisting of the length of the forward path and the length of the reverse path from the current word to the target word. The target word is a pre-designated one of the two words on which relational reasoning is to be performed.
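One possible reading of these two kinds of position information can be sketched as follows. This is an interpretation, not the patent's definition: it assumes the "path vector" is the sequence of word indices visited when walking through the tree from the current word to the target word, that the "forward path" is the part climbing from the current word to the lowest common ancestor, and that the "reverse path" is the part descending from that ancestor to the target word. The `HEADS` mapping and both function names are hypothetical.

```python
def path_to_root(heads, index):
    """Return the word indices from `index` up to the root, inclusive."""
    path = [index]
    while heads[path[-1]] != 0:
        path.append(heads[path[-1]])
    return path


def position_info(heads, current, target):
    """Return (path, (forward_length, reverse_length)) from current to target.

    Assumption: forward = edges climbed to the lowest common ancestor,
    reverse = edges descended from that ancestor to the target word.
    """
    up = path_to_root(heads, current)
    down = path_to_root(heads, target)
    target_line = set(down)
    # First node on the upward path that is the target or one of its ancestors.
    lca = next(node for node in up if node in target_line)
    up_part = up[: up.index(lca) + 1]           # current ... common ancestor
    down_part = down[: down.index(lca)][::-1]   # below the ancestor ... target
    return up_part + down_part, (len(up_part) - 1, len(down_part))


# heads[i] is the head of word i (1-based); 0 marks the root.
# Hand-written tree for "Alice founded the company":
# 1 Alice, 2 founded, 3 the, 4 company.
HEADS = {1: 2, 2: 0, 3: 4, 4: 2}

# Position of "the" (word 3) relative to the target word "Alice" (word 1).
path, lengths = position_info(HEADS, 3, 1)
print(path, lengths)
```

Under these assumptions, the word "the" reaches "Alice" by climbing two edges (to "company", then "founded") and descending one, so each word gets a different pair depending on where it sits relative to the target.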

[0089] As an optional implementation manner, the relationship inference module 903 is specifically configured to:

[0090] The first adaptive weighting parameter and the second adaptive weighting parameter are set in advance for the main feature and the auxiliary feature, ...
