Video target detection method based on convolutional gating recurrent neural unit

A neural unit and target detection technology, applied in the field of image processing. It addresses the problems of low detection accuracy and insufficient feature accuracy, and achieves high detection accuracy and improved feature quality.

Examples

Embodiment 1

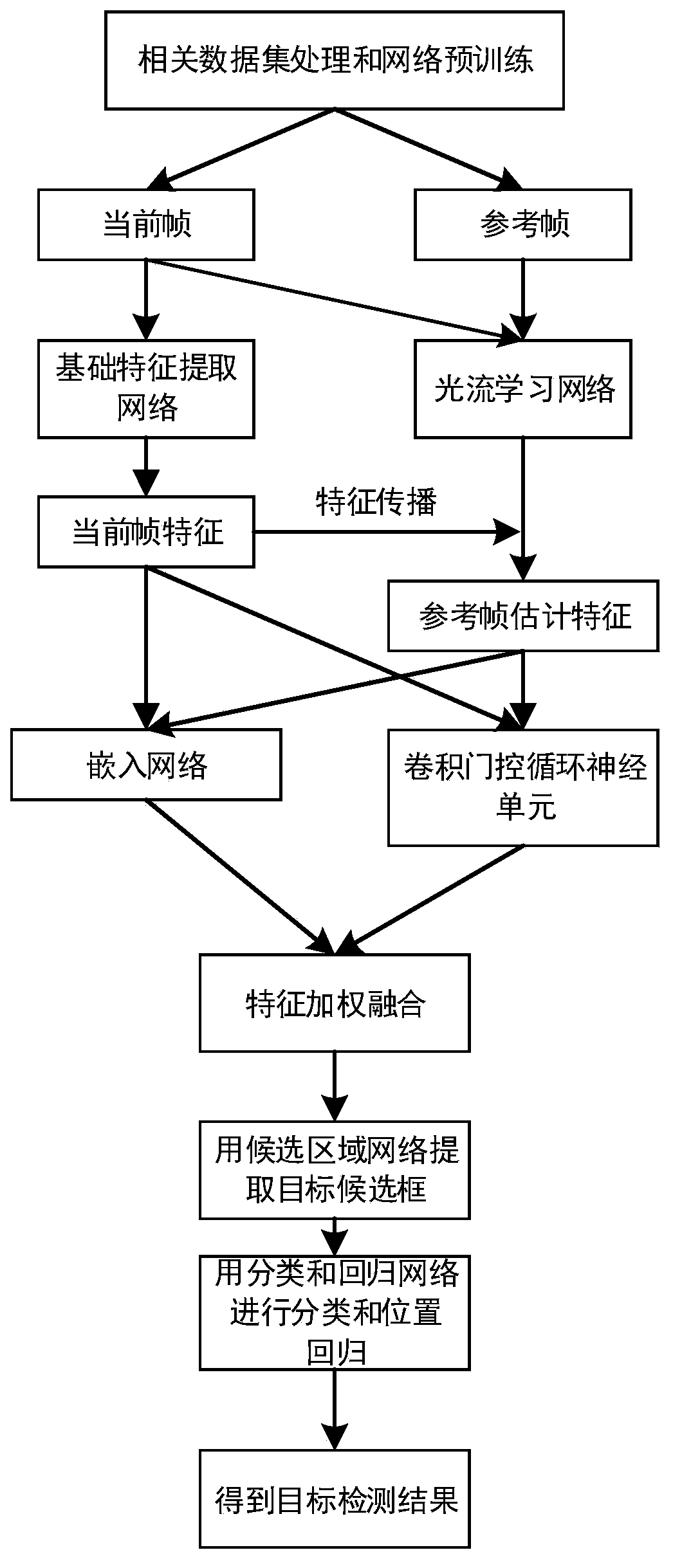

[0029] Video object detection requires correct object recognition and bounding-box position prediction for every frame of a video. Compared with object detection in still images, object detection in video adds a temporal dimension and faces difficulties that are rarely seen in image data. Single-frame detection methods cannot make full use of temporal relationships and adapt poorly to problems specific to video data, such as motion blur, defocus, occlusion, and unusual poses. The T-CNN family of methods imposes temporal consistency constraints, but its pipeline is complicated and cannot be trained end-to-end. The DFF family of methods exploits the redundancy between consecutive frames, but does not use the information in consecutive frames to improve the quality of feature extraction. To address these shortcomings, the present invention introduces a circular gating convolutional ne...

Embodiment 2

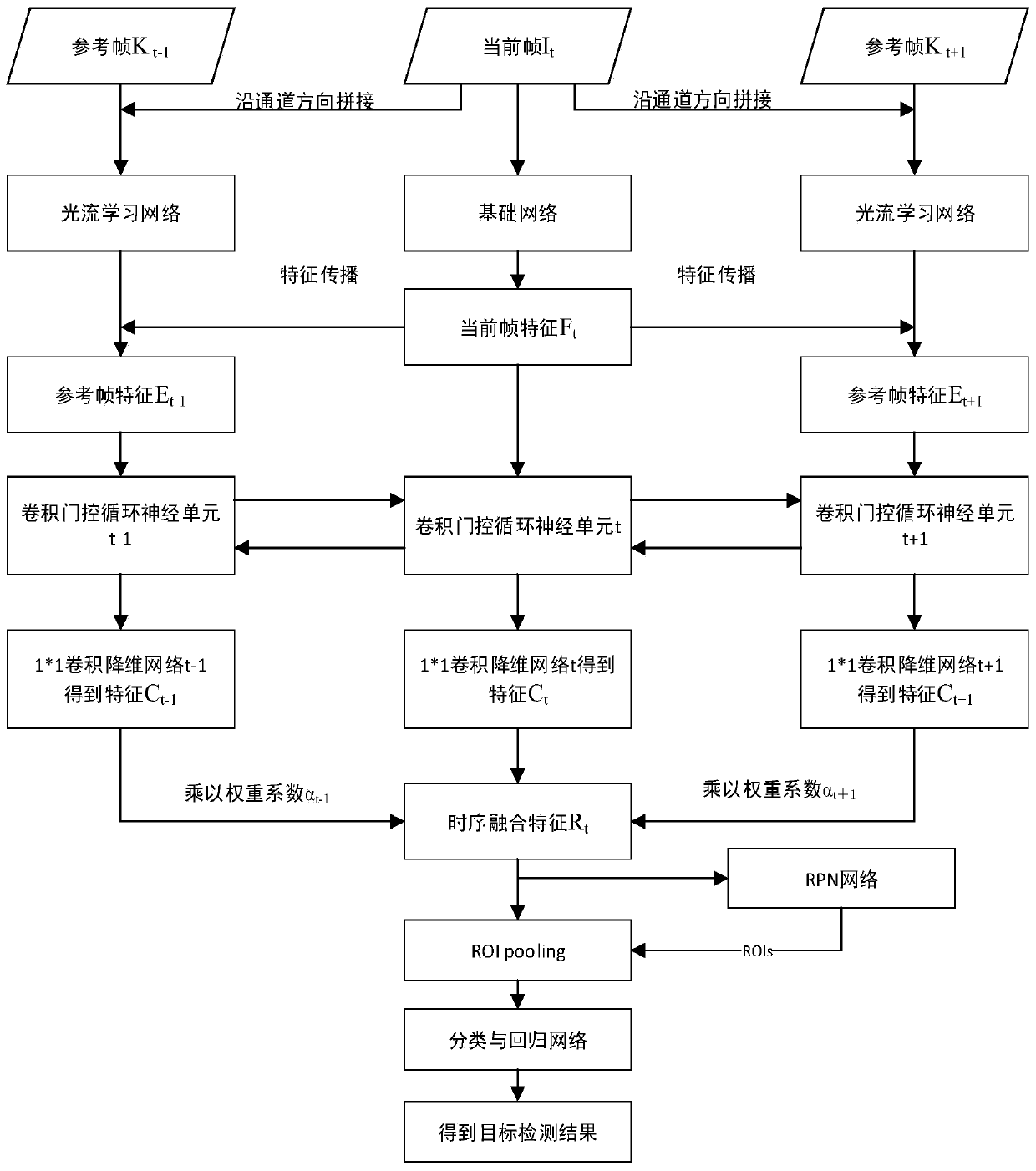

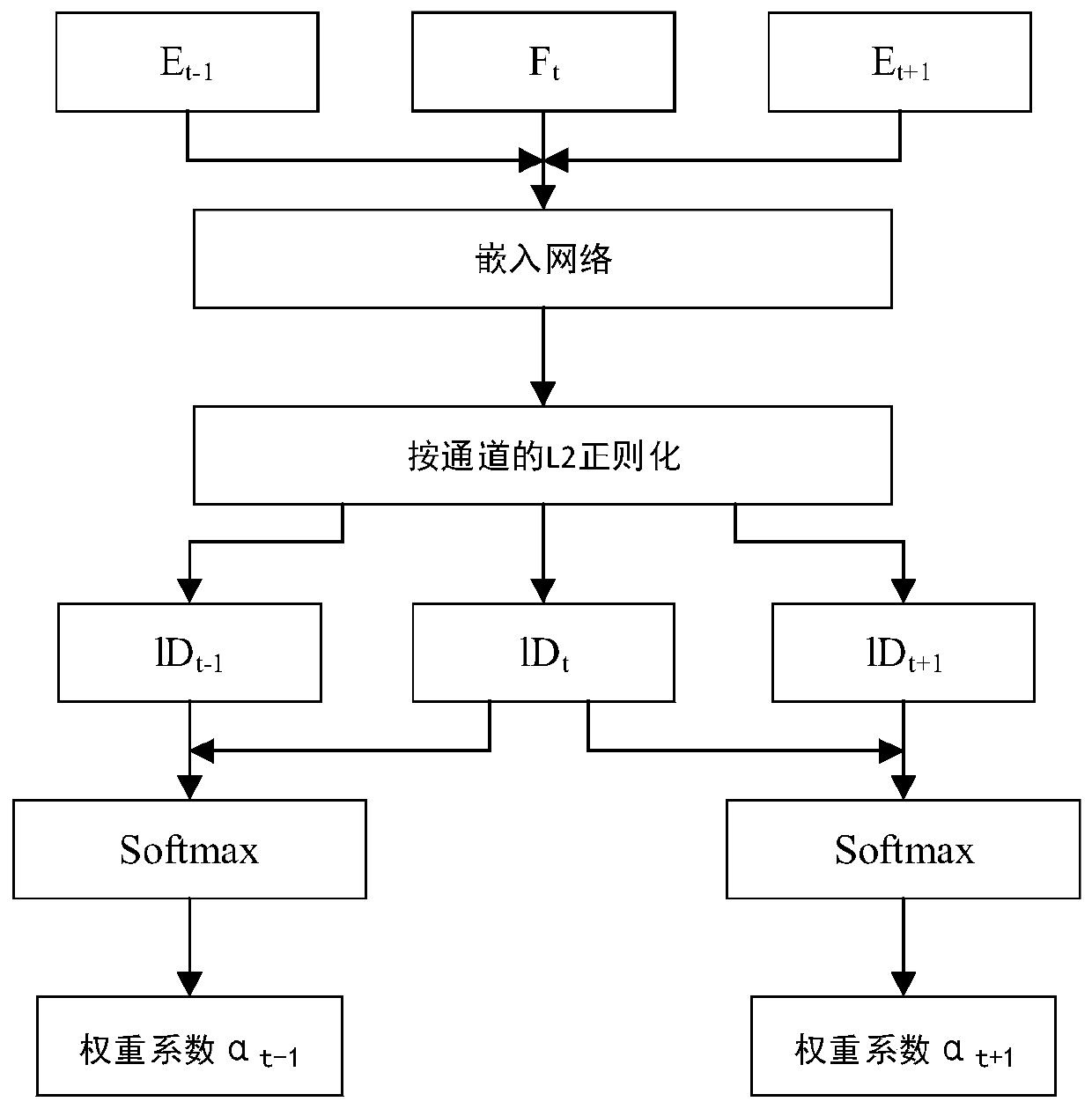

[0050] The video target detection method based on the convolutional gated recurrent neural unit is the same as in Embodiment 1. The estimation of reference-frame features from the current-frame feature, described in step (4), specifically comprises the following steps:

[0051] 4.1) Each reference frame $K_{t-n/2}, \dots, K_{t+n/2}$ is concatenated with the current frame $I_t$ along the channel dimension, and the result is used as the input of the optical flow learning network. The output of the optical flow learning network is expressed as $S_i = M(K_i, I_t)$, where $i$ ranges over $t-n/2, \dots, t+n/2$, $S_i$ is the output of the optical flow learning network at the $i$-th moment, $M$ is the optical flow learning network, $K_i$ is the $i$-th reference frame, and $I_t$ is the current frame.
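The input/output contract of step 4.1 can be illustrated with a short NumPy sketch. The real network $M$ is FlowNet; here `toy_flow_net` is a hypothetical stand-in that only reproduces the output size stated in [0052] (a 2-channel flow field at 1/4 of the input resolution) and returns zeros. The dict-of-frames layout and the helper names are assumptions made for illustration.

```python
import numpy as np


def toy_flow_net(x):
    """Hypothetical stand-in for the optical flow network M (not FlowNet).

    x: channel-wise concatenation [K_i, I_t] of shape (2C, H, W).
    Returns a zero 2-channel flow field (dx, dy) at 1/4 resolution.
    """
    _, h, w = x.shape
    return np.zeros((2, h // 4, w // 4))


def flow_outputs(refs, cur, flow_net=toy_flow_net):
    """Compute S_i = M(K_i, I_t) for every reference frame K_i.

    refs: dict mapping time index i -> reference frame K_i, shape (C, H, W)
    cur:  current frame I_t, shape (C, H, W)
    """
    outputs = {}
    for i, k_i in refs.items():
        # Splice the reference frame and the current frame along channels.
        x = np.concatenate([k_i, cur], axis=0)
        outputs[i] = flow_net(x)
    return outputs


t, n = 10, 4
refs = {i: np.random.rand(3, 64, 64) for i in range(t - n // 2, t + n // 2 + 1)}
S = flow_outputs(refs, np.random.rand(3, 64, 64))
print(sorted(S), S[t].shape)  # [8, 9, 10, 11, 12] (2, 16, 16)
```

Passing the network as a parameter keeps the splicing logic independent of the flow model, so a trained FlowNet could be substituted without changing `flow_outputs`.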

[0052] In this embodiment, FlowNet, fully trained on the Flying Chairs dataset, is used as the optical flow learning network. The output of the network is 1/4 the size of the original image, which n...
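The text above is truncated before it states how a flow field $S_i$ turns the current-frame feature into an estimated reference-frame feature. A common technique, used by the DFF family mentioned in [0029], is bilinear warping of a feature map along the flow. The sketch below assumes that technique with the convention $E_i(p) = F_t(p + S_i(p))$, assumes $S_i$ has already been brought to the feature-map resolution (the elided text is not reconstructed here), and clamps samples at the border. `bilinear_warp` is a hypothetical helper, not the patent's stated procedure.

```python
import numpy as np


def bilinear_warp(feat, flow):
    """Sample feat at p + flow(p) with bilinear interpolation.

    feat: (C, H, W) current-frame feature map F_t
    flow: (2, H, W) per-pixel displacement (dx, dy), in feature-map pixels
    Sampling positions outside the map are clamped to the border (assumption).
    """
    _, h, w = feat.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = np.clip(xs + flow[0], 0, w - 1)
    y = np.clip(ys + flow[1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0  # fractional offsets used as interpolation weights
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy


feat = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)
shift = np.zeros((2, 3, 4))
shift[0] = 1.0  # every pixel samples one column to the right
E = bilinear_warp(feat, shift)
```

With a one-pixel horizontal flow, each output column takes the values of the next input column, and the last column is clamped to itself.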

Embodiment 3

[0060] The video target detection method based on the convolutional gated recurrent neural unit is the same as in Embodiments 1 and 2. Step (5), temporal context feature learning based on the convolutional gated recurrent neural unit, comprises the following detailed steps:

[0061] 5.1) The estimated reference-frame features $E_{t-n/2}, \dots, E_{t+n/2}$ obtained in steps (1) to (4) of claim 1 and the current-frame feature $F_t$ are arranged in temporal order and used as the input of the convolutional gated recurrent neural unit; this input is denoted $H$.
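Step 5.1 amounts to ordering feature maps by time and stacking them into a sequence. The sketch below is a minimal illustration under one stated assumption: $F_t$ occupies time slot $t$, replacing an estimate for $i = t$ if one is present. The helper name `build_input_sequence` is hypothetical.

```python
import numpy as np


def build_input_sequence(estimated, current, t):
    """Arrange E_i and F_t in temporal order and stack them into H.

    estimated: dict mapping time index i -> estimated feature E_i, (C, H, W)
    current:   current-frame feature F_t, (C, H, W)
    t:         time index of the current frame
    Assumption: F_t fills slot t, replacing any E_t that was supplied.
    Returns an array of shape (T, C, H, W) ordered by increasing time.
    """
    slots = dict(estimated)
    slots[t] = current
    return np.stack([slots[i] for i in sorted(slots)], axis=0)


E = {4: np.full((1, 2, 2), 4.0), 6: np.full((1, 2, 2), 6.0)}
H = build_input_sequence(E, np.full((1, 2, 2), 5.0), t=5)
print(H.shape)  # (3, 1, 2, 2)
```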

[0062] 5.2) The forward propagation of the convolutional gated recurrent neural unit is computed as follows:

[0063] $z_t = \sigma(W_z * H_t + U_z * M_{t-1})$,

[0064] $r_t = \sigma(W_r * H_t + U_r * M_{t-1})$,

[0065]

[0066]

[0067] where $H_t$ is the input feature map of the convolutional gated recurrent neural unit at the current moment, and $M_{t-1}$ is the feature map with memory learned by the convolutional gated recurrent neural u...
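The gate equations of [0063] and [0064] can be illustrated with a small NumPy sketch. Here `*` is taken to be a 2-D "same" convolution (implemented as cross-correlation, as in most deep learning libraries), and no bias terms are used because none appear in the gate equations. The equations of [0065] and [0066] are missing from this text, so the candidate state and the state update below follow the standard ConvGRU formulation as an assumption, not as the patent's stated formulas. `ConvGRUCell`, `W_h`, `U_h`, and the random weight initialization are hypothetical.

```python
import numpy as np


def conv2d_same(x, w):
    """'Same' 2-D cross-correlation with zero padding.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c_out, h, wd))
    for dy in range(k):
        for dx in range(k):
            patch = xp[:, dy:dy + h, dx:dx + wd]
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return out


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class ConvGRUCell:
    """Convolutional GRU cell; gates as in [0063]-[0064], rest assumed."""

    def __init__(self, c_in, c_hid, k=3, seed=0):
        rng = np.random.default_rng(seed)
        init = lambda ci: rng.normal(0.0, 0.1, (c_hid, ci, k, k))
        self.W_z, self.U_z = init(c_in), init(c_hid)
        self.W_r, self.U_r = init(c_in), init(c_hid)
        self.W_h, self.U_h = init(c_in), init(c_hid)  # hypothetical candidate weights
        self.c_hid = c_hid

    def step(self, H_t, M_prev):
        z = sigmoid(conv2d_same(H_t, self.W_z) + conv2d_same(M_prev, self.U_z))
        r = sigmoid(conv2d_same(H_t, self.W_r) + conv2d_same(M_prev, self.U_r))
        # Assumed standard ConvGRU candidate and update (not given in the text).
        M_cand = np.tanh(conv2d_same(H_t, self.W_h) + conv2d_same(r * M_prev, self.U_h))
        return (1 - z) * M_prev + z * M_cand

    def run(self, seq):
        """seq: (T, C_in, H, W), ordered in time. Returns the list of M_t."""
        M = np.zeros((self.c_hid,) + seq[0].shape[1:])
        states = []
        for H_t in seq:
            M = self.step(H_t, M)
            states.append(M)
        return states


cell = ConvGRUCell(c_in=4, c_hid=8)
seq = np.random.default_rng(1).normal(size=(5, 4, 6, 6))
states = cell.run(seq)
print(len(states), states[-1].shape)  # 5 (8, 6, 6)
```

Because the memory starts at zero and each update is a convex combination of the previous memory and a `tanh` output, every $M_t$ in this sketch stays within $[-1, 1]$.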

