Three-dimensional reconstruction method based on deep learning

A deep-learning-based 3D reconstruction technique, applied in the field of 3D reconstruction, that achieves high-precision reconstruction, avoids the accumulation of errors across multiple processing stages, and avoids being affected by camera calibration.


Detailed Description of the Embodiments

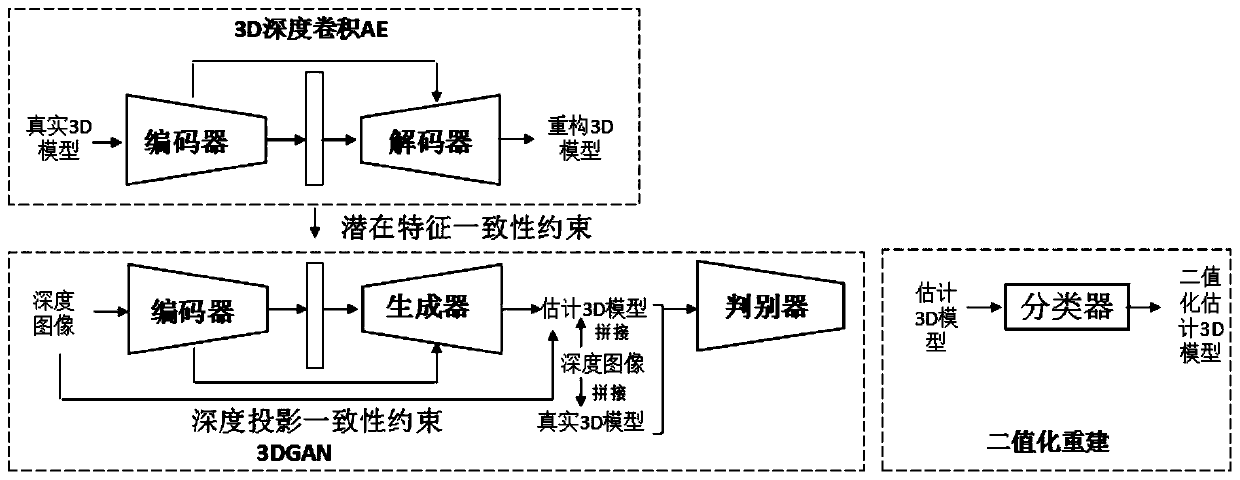

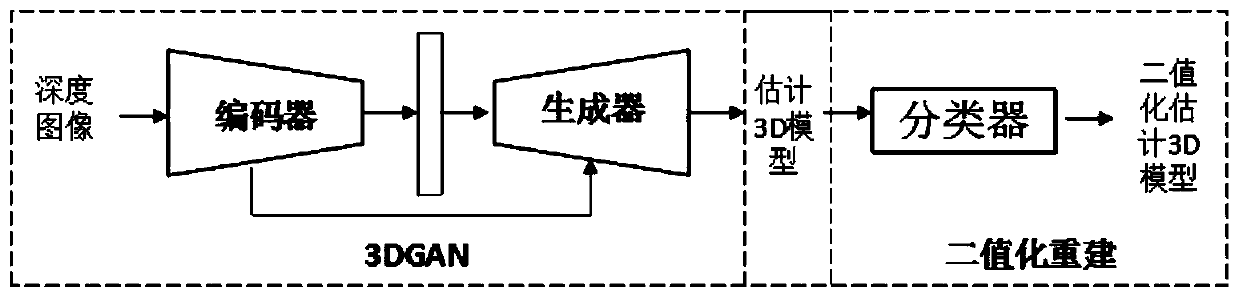

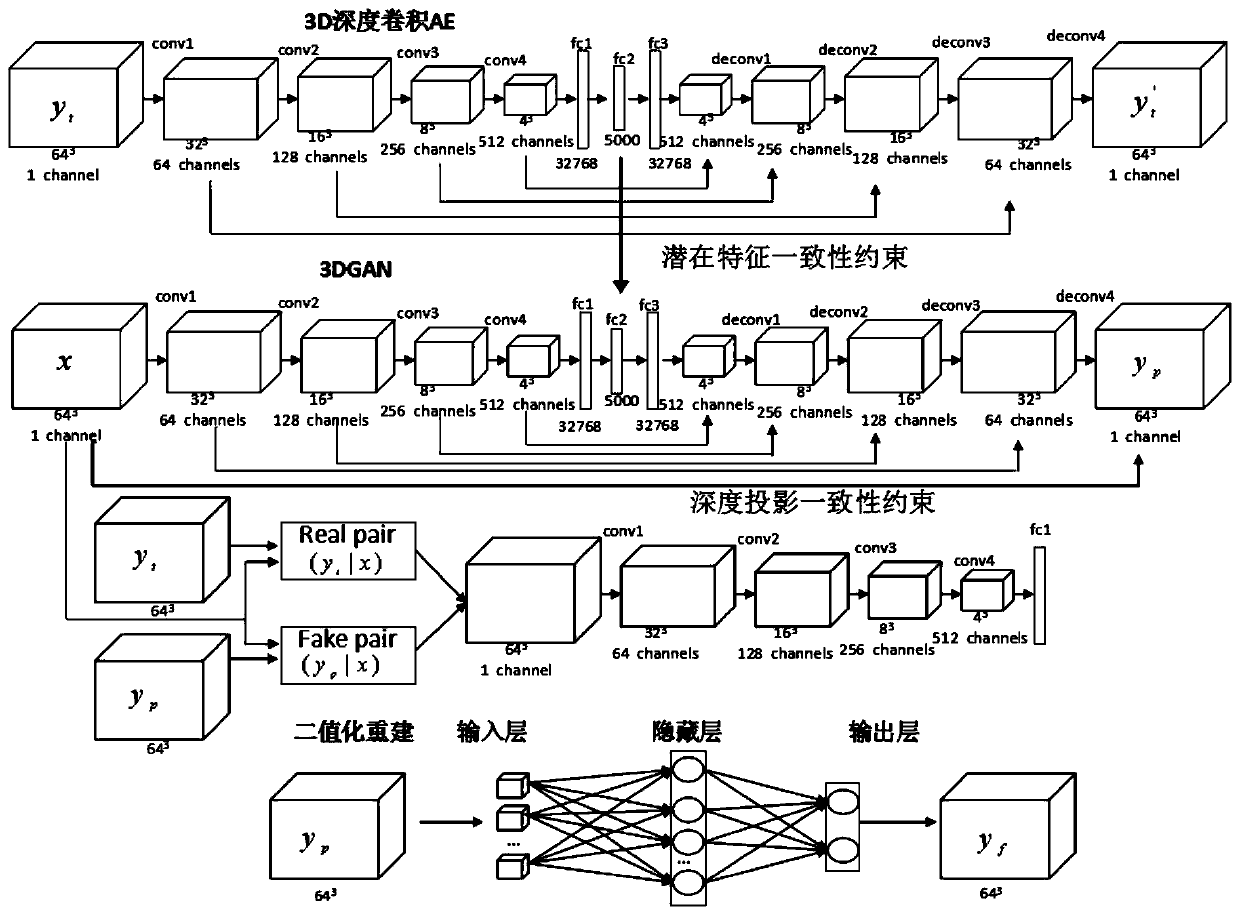

[0013] As shown in Figure 3, this deep-learning-based 3D reconstruction method includes the following steps:

[0014] (1) Reconstruct the complete 3D shape of the target from the constrained latent vector of the input image by learning the mapping between partial and complete 3D shapes, thereby achieving 3D reconstruction from a single depth image;

[0015] (2) Learn an intermediate feature representation between the real 3D object and the reconstructed object to obtain the target latent variable used in step (1);

[0016] (3) Use an extreme learning machine (ELM) to convert the floating-point voxel values predicted in step (1) into binary values, completing the high-precision reconstruction.
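Step (3) can be illustrated with a minimal NumPy sketch of an extreme learning machine, assuming a per-voxel formulation: each voxel's input features are its predicted floating-point value plus the mean of its 3x3x3 neighbourhood, and the training target is the ground-truth occupancy. The feature choice, the ridge-regularised output solve (a common ELM variant in place of the plain Moore-Penrose pseudoinverse), the hidden-layer size, the 0.5 threshold, and the synthetic ball data are all illustrative assumptions, not the patent's configuration. In an ELM the input-to-hidden weights are drawn at random and kept fixed; only the output weights are solved in closed form.

```python
import numpy as np

def neighbourhood_mean(vox):
    """Mean of each voxel's 3x3x3 neighbourhood, using edge padding."""
    p = np.pad(vox, 1, mode="edge")
    X, Y, Z = vox.shape
    acc = np.zeros(vox.shape, dtype=float)
    for dx in range(3):
        for dy in range(3):
            for dz in range(3):
                acc += p[dx:dx + X, dy:dy + Y, dz:dz + Z]
    return acc / 27.0

def voxel_features(vox):
    # Assumed per-voxel ELM input: the predicted value and its local mean.
    return np.stack([vox.ravel(), neighbourhood_mean(vox).ravel()], axis=1)

class ELM:
    """Single-hidden-layer extreme learning machine.

    Hidden weights are random and never trained; only the output weights
    are solved in closed form (here by ridge-regularised least squares).
    """

    def __init__(self, n_hidden=64, reg=1e-2, seed=0):
        self.n_hidden, self.reg = n_hidden, reg
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        return 1.0 / (1.0 + np.exp(-(X @ self.W + self.b)))  # sigmoid units

    def fit(self, X, y):
        self.W = self.rng.normal(size=(X.shape[1], self.n_hidden))
        self.b = self.rng.normal(size=self.n_hidden)
        H = self._hidden(X)
        A = H.T @ H + self.reg * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(A, H.T @ y)
        return self

    def predict(self, X):
        return self._hidden(X) @ self.beta

def binarize(elm, vox, threshold=0.5):
    """Map floating-point voxel predictions to a {0, 1} occupancy grid."""
    scores = elm.predict(voxel_features(vox))
    return (scores >= threshold).astype(np.uint8).reshape(vox.shape)

def synthetic_pair(seed, n=16, r=5.0):
    # Hypothetical data: a ball as ground truth and a noisy "network output".
    rng = np.random.default_rng(seed)
    idx = np.indices((n, n, n))
    gt = (((idx - (n - 1) / 2.0) ** 2).sum(axis=0) <= r ** 2).astype(float)
    pred = np.clip(0.2 + 0.6 * gt + rng.normal(0.0, 0.1, gt.shape), 0.0, 1.0)
    return pred, gt

pred_train, gt_train = synthetic_pair(seed=1)
elm = ELM().fit(voxel_features(pred_train), gt_train.ravel())

pred_test, gt_test = synthetic_pair(seed=2)
occupancy = binarize(elm, pred_test)
accuracy = (occupancy == gt_test).mean()
```

Because only the output weights are fitted, training reduces to a single linear solve, which keeps an ELM cheap enough to run as a post-processing stage after the reconstruction network.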

[0017] The present invention uses a deep neural network to perform high-performance feature extraction, avoiding the error accumulation that arises across the multiple stages of a hand-designed pipeline; the input image is constrained by learning the latent information of the three-dimensional shape, so that the missing par...
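The latent-constraint idea of steps (1) and (2) can be sketched with linear stand-ins, assuming that PCA replaces the deep encoder/decoder and an ordinary least-squares map replaces the learned partial-to-complete mapping. The helper names (`make_sphere`, `partial_view`, `LinearLatent`, `fit_latent_map`, `apply_latent_map`), the half-grid "depth view", and the synthetic ball shapes are hypothetical. The complete-shape latent space plays the role of the target latent variable; partial observations are mapped into it and decoded into floating-point voxels, the kind of output that step (3) then binarizes.

```python
import numpy as np

def make_sphere(n, center, radius):
    """Solid ball on an n^3 grid (1.0 inside, 0.0 outside)."""
    idx = np.indices((n, n, n)).astype(float)
    c = np.asarray(center, dtype=float)[:, None, None, None]
    return (((idx - c) ** 2).sum(axis=0) <= radius ** 2).astype(float)

def partial_view(vox):
    # Hypothetical single-view observation: keep the half of the grid facing
    # the sensor (x < n/2) and drop the occluded back half.
    out = vox.copy()
    out[vox.shape[0] // 2:] = 0.0
    return out

class LinearLatent:
    """PCA latent space: a linear stand-in for a learned encoder/decoder."""

    def __init__(self, k):
        self.k = k

    def fit(self, X):
        self.mean = X.mean(axis=0)
        _, _, vt = np.linalg.svd(X - self.mean, full_matrices=False)
        self.P = vt[: self.k].T  # (D, k) principal directions
        return self

    def encode(self, X):
        return (X - self.mean) @ self.P

    def decode(self, Z):
        return Z @ self.P.T + self.mean

def _with_bias(Z):
    return np.hstack([Z, np.ones((len(Z), 1))])

def fit_latent_map(Zp, Zc):
    """Least-squares map (with bias) from partial latents to complete latents."""
    M, *_ = np.linalg.lstsq(_with_bias(Zp), Zc, rcond=None)
    return M

def apply_latent_map(M, Zp):
    return _with_bias(Zp) @ M

# Usage on synthetic data (all values are illustrative assumptions).
rng = np.random.default_rng(0)
n = 12
full = np.stack([make_sphere(n, rng.uniform(4, 8, 3), rng.uniform(2, 4)).ravel()
                 for _ in range(200)])
part = np.stack([partial_view(v.reshape(n, n, n)).ravel() for v in full])

shape_space = LinearLatent(16).fit(full)  # latent space of complete shapes
view_space = LinearLatent(16).fit(part)   # latent space of partial views
Zc, Zp = shape_space.encode(full), view_space.encode(part)
M = fit_latent_map(Zp, Zc)                # partial latent -> complete latent
recon = shape_space.decode(apply_latent_map(M, Zp)).reshape(-1, n, n, n)
```

Decoding through the complete-shape latent space is what constrains the output: whatever the partial input looks like, the reconstruction is a combination of directions learned from complete shapes, which is the linear analogue of filling in the missing parts from learned shape priors.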
