Visual target tracking method based on self-adaptive subject sensitivity

A target tracking and adaptive technology in the field of computer vision. It addresses the problems that the model has difficulty adapting to different types of targets and that the sensitivity of the target subject is not obtained. It distinguishes foreground from background effectively, alleviates vanishing gradients, and improves the expressive ability of the model.



Detailed Description of Embodiments

[0040] The embodiments of the present invention are described in detail below with reference to the accompanying drawings:

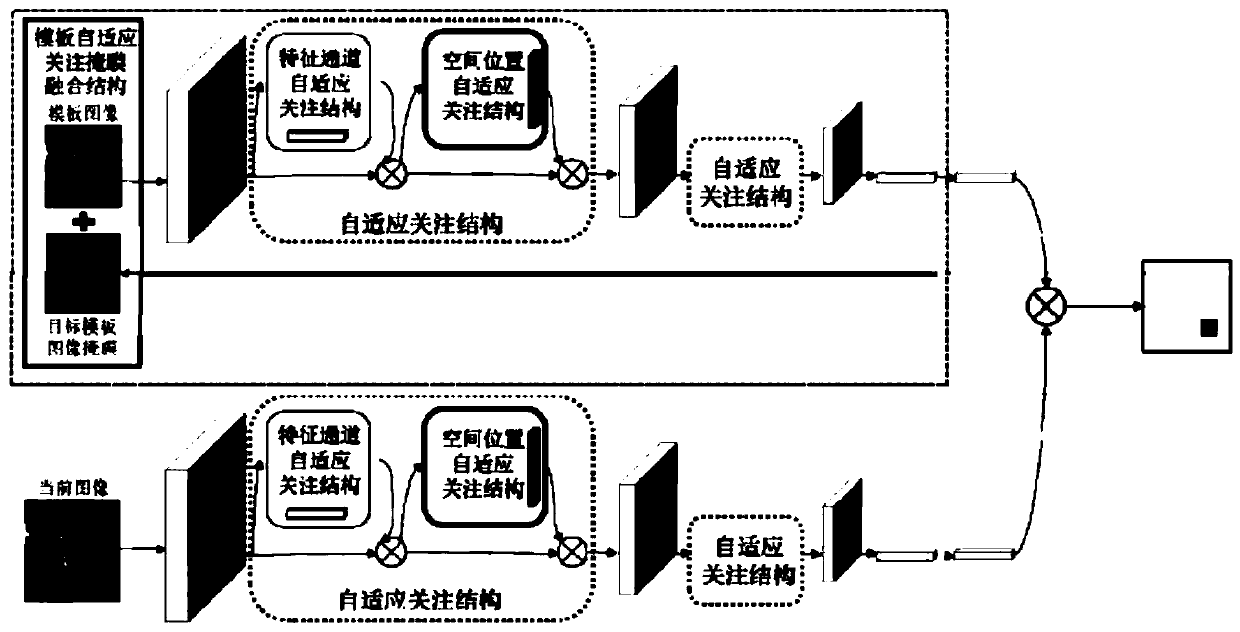

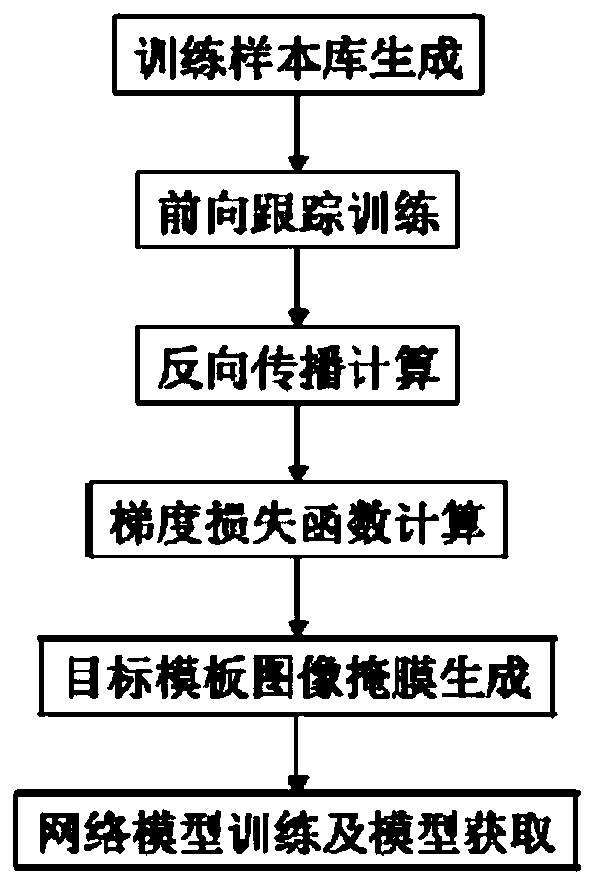

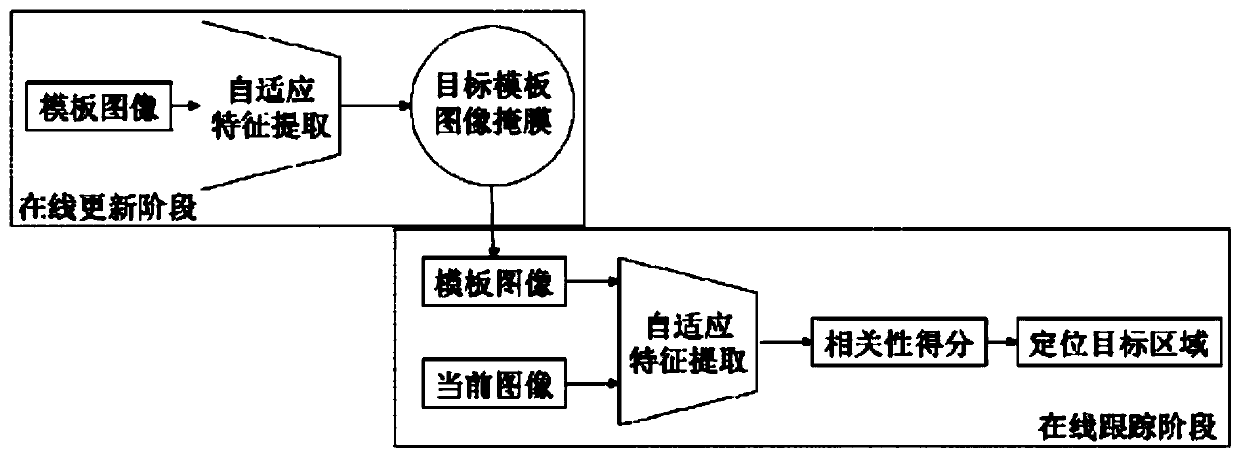

[0041] The overall flow chart of the visual target tracking method based on adaptive subject sensitivity is shown in Figure 1. The algorithm is divided into an offline part and an online part, whose flow charts are shown in Figure 2 and Figure 3, respectively. In the offline part, corresponding image pairs are first generated from the training sample set and used as the input template image and the current image, respectively. The two images are then fed into the constructed adaptive subject-sensitive Siamese network for feature extraction, a tracking regression response is generated, and the tracking regression loss function is calculated. Next, the backpropagation gradient is calculated by the chain rule, and a target template image mask is generated and superimposed on the output of the network model. Finally, the method calculates the tracking reg...
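The description above is truncated and gives no formulas, so the following is only a minimal NumPy sketch of the offline steps under stated assumptions. It assumes a SiamFC-style valid cross-correlation as the tracking regression response, a squared-error loss against a Gaussian label, the chain-rule gradient of that loss with respect to the template features, and a Grad-CAM-style spatial mask built from that gradient. The mask here is applied to template features rather than to the template image. All function names (`xcorr`, `gaussian_label`, `regression_loss_and_grad`, `sensitivity_mask`) and the fusion rule `z * (1 + mask)` are hypothetical and are not taken from the patent.

```python
import numpy as np


def xcorr(z, x):
    """Valid cross-correlation of template features z (C,h,w) over search features x (C,H,W)."""
    _, h, w = z.shape
    _, H, W = x.shape
    out = np.zeros((H - h + 1, W - w + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(z * x[:, i:i + h, j:j + w])
    return out


def gaussian_label(shape, center, sigma=1.0):
    """Hypothetical regression target: a 2-D Gaussian peaked at the target position."""
    rows, cols = np.indices(shape)
    d2 = (rows - center[0]) ** 2 + (cols - center[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


def regression_loss_and_grad(z, x, label):
    """Assumed loss L = 0.5 * sum((r - y)^2) and its gradient dL/dz via the chain rule."""
    r = xcorr(z, x)
    g = r - label  # dL/dr
    loss = 0.5 * float(np.sum(g ** 2))
    _, h, w = z.shape
    grad_z = np.zeros_like(z)
    # Chain rule: dL/dz[c,u,v] = sum_{i,j} dL/dr[i,j] * x[c, i+u, j+v]
    for u in range(h):
        for v in range(w):
            window = x[:, u:u + g.shape[0], v:v + g.shape[1]]
            grad_z[:, u, v] = np.sum(window * g, axis=(1, 2))
    return loss, grad_z


def sensitivity_mask(z, grad_z):
    """Grad-CAM-style spatial mask over the template features, scaled to [0, 1]."""
    # Channels whose increase would lower the loss receive a positive weight.
    alpha = -grad_z.mean(axis=(1, 2))
    cam = np.maximum(np.tensordot(alpha, z, axes=1), 0.0)  # shape (h, w)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z = rng.standard_normal((4, 3, 3))  # stand-in template features
    x = rng.standard_normal((4, 9, 9))  # stand-in current-image features
    label = gaussian_label((7, 7), center=(3, 3))
    loss, grad_z = regression_loss_and_grad(z, x, label)
    mask = sensitivity_mask(z, grad_z)
    z_masked = z * (1.0 + mask)  # hypothetical "superimpose" rule
    print(loss, mask.shape, z_masked.shape)
```

The explicit loops keep the chain-rule step visible; a real Siamese tracker would compute the same gradient with an autodiff framework over learned convolutional features.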
