A joint multi-camera and lidar system and its joint calibration method

A lidar and multi-camera joint calibration technology, applied in the computer field. It addresses two problems: calibration of the cameras' internal (intrinsic) and external (extrinsic) parameters cannot be completed together, and the cameras in a multi-camera system lack a common field of view. Effects: it ensures calibration accuracy, saves calibration time and effort, and simplifies the calibration steps.

Examples

Embodiment 1

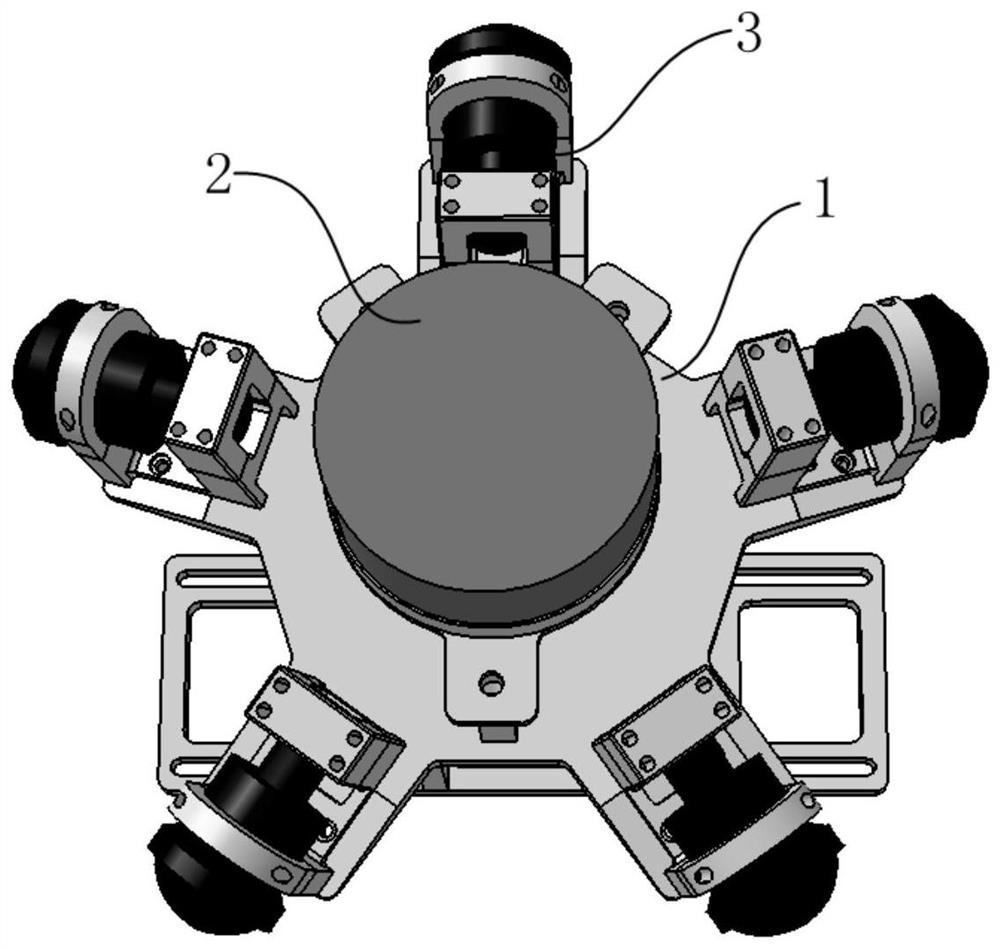

[0033] As shown in Figures 1-3, the present invention discloses a joint multi-camera and lidar system. The joint system comprises five industrial cameras 3 and one lidar 2, all mounted on a fixed bracket 1. The industrial cameras 3 are arranged outside the lidar 2 and distributed evenly around it, with the lidar 2 at the center.
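Reading the even, centered arrangement as five cameras spaced uniformly on a circle around the lidar (an interpretation of the text, not a stated dimension), the nominal layout places the cameras 360°/5 = 72° apart. The minimal sketch below computes such nominal positions, which could serve as an initial guess that calibration later refines. The mounting radius `radius_m` and the assumption that each camera faces radially outward are hypothetical, not taken from the patent.

```python
import math


def nominal_camera_poses(num_cameras=5, radius_m=0.15):
    """Return (x, y, yaw_deg) for cameras spaced evenly on a circle around the lidar.

    The lidar sits at the origin. radius_m is a hypothetical mounting radius,
    and each camera is assumed to face radially outward (yaw = bearing).
    """
    step_deg = 360.0 / num_cameras  # 72 degrees for five cameras
    poses = []
    for i in range(num_cameras):
        yaw_deg = i * step_deg
        yaw = math.radians(yaw_deg)
        poses.append((radius_m * math.cos(yaw), radius_m * math.sin(yaw), yaw_deg))
    return poses


for x, y, yaw in nominal_camera_poses():
    print(f"x={x:+.3f} m  y={y:+.3f} m  yaw={yaw:5.1f} deg")
```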

[0034] The lidar 2 is a 2.5D or 3D lidar with a vertical field of view of 10° to 40°.
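The 10° to 40° vertical field of view limits how much of a flat target the lidar's scan reaches. As a worked example under a simple geometric assumption (a flat board facing the lidar squarely and centered on its horizontal plane), the vertical span covered at distance d with vertical field of view θ is 2·d·tan(θ/2), and inverting it gives the distance at which that span equals a given board height h: d = (h/2)/tan(θ/2). The 1.0 m board height below is a hypothetical value; the text does not give the board dimensions.

```python
import math


def vertical_coverage_m(distance_m, vfov_deg):
    """Height spanned by the lidar's vertical FOV on a plane facing it squarely at distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(vfov_deg) / 2.0)


def distance_for_coverage_m(height_m, vfov_deg):
    """Distance at which the vertical FOV spans exactly height_m (inverse of the above)."""
    return (height_m / 2.0) / math.tan(math.radians(vfov_deg) / 2.0)


board_height_m = 1.0  # hypothetical board height, not from the patent
for vfov in (10.0, 40.0):  # the two ends of the stated 10°-40° range
    print(
        f"vFOV {vfov:4.1f} deg: covers {vertical_coverage_m(2.0, vfov):.3f} m at 2 m; "
        f"spans {board_height_m} m at {distance_for_coverage_m(board_height_m, vfov):.2f} m"
    )
```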

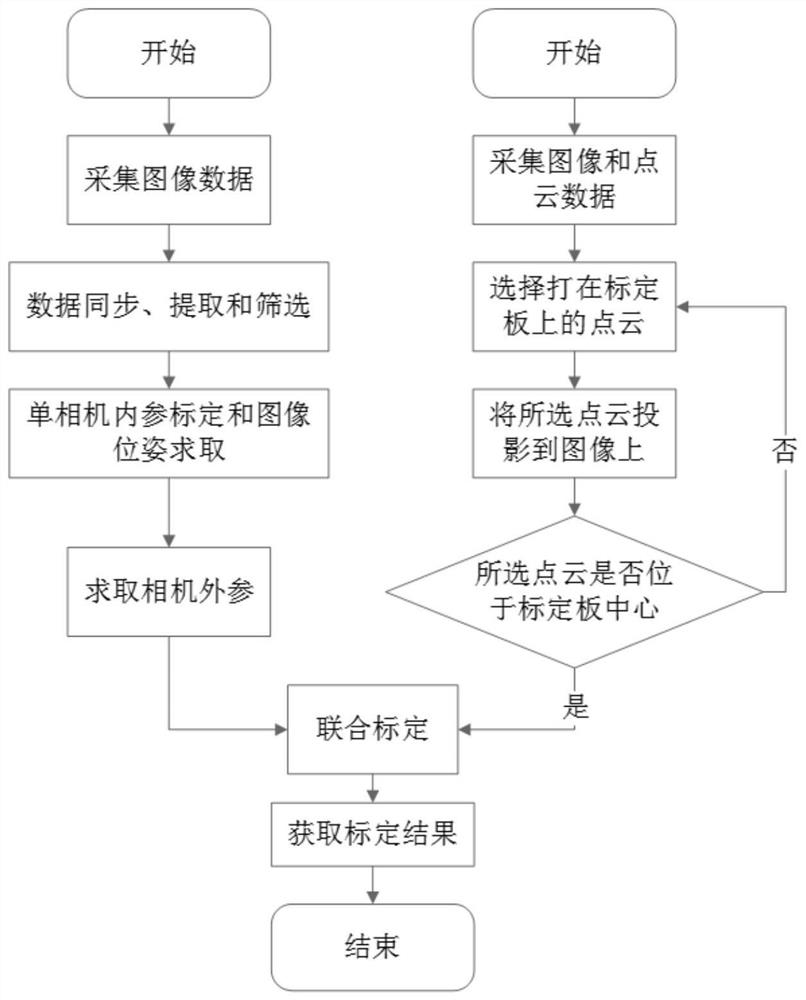

[0035] The present invention also discloses a joint calibration method for the multi-camera and lidar system. The method uses the joint system described above and comprises the following steps:

[0036] S1. Install and fix the joint system, enable synchronous capture on the industrial cameras 3, place the dedicated calibration board outside the joint system, and adjust the distance between the dedicated calibration b...
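The method targets the cameras' intrinsic (internal) and extrinsic (external) parameters. As background only, and not as the patented procedure itself, the standard pinhole model shows how the two sets of parameters link a lidar point to a camera image: a lidar point X_L maps into camera i as x ~ K_i (R_i X_L + t_i), where R_i, t_i are the lidar-to-camera extrinsics and K_i is the intrinsic matrix. The minimal sketch below projects one point with that model and ignores lens distortion. The matrices are hypothetical example values, and the axis convention (lidar x forward, y left, z up; camera x right, y down, z forward) is an assumption.

```python
def project_lidar_point(point_lidar, R, t, K):
    """Project a 3D lidar point to pixel coordinates with a pinhole model.

    R (3x3 nested lists) and t (length 3) are the lidar-to-camera extrinsics,
    K (3x3) is the camera intrinsic matrix. Lens distortion is ignored.
    Returns None if the point lies behind the camera.
    """
    # Transform into the camera frame: X_c = R @ X_l + t
    xc = [sum(R[r][c] * point_lidar[c] for c in range(3)) + t[r] for r in range(3)]
    if xc[2] <= 0:
        return None
    # Apply the intrinsics, then divide by depth (K's last row is [0, 0, 1],
    # so uvw[2] equals the depth xc[2]).
    uvw = [sum(K[r][c] * xc[c] for c in range(3)) for r in range(3)]
    return (uvw[0] / uvw[2], uvw[1] / uvw[2])


# Hypothetical values: rotation from lidar axes to camera axes, small offset, 800 px focal length.
R = [[0.0, -1.0, 0.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
t = [0.0, 0.05, 0.0]
K = [[800.0, 0.0, 640.0], [0.0, 800.0, 360.0], [0.0, 0.0, 1.0]]
print(project_lidar_point([5.0, 0.0, 0.0], R, t, K))
```

Joint calibration would estimate R_i, t_i (and K_i) so that lidar points on the calibration board land on the board's pixels in every camera; the sketch only evaluates the forward model for given parameters.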
