Music generation method based on facial expression recognition and recurrent neural network

A technology based on recurrent neural networks and expression recognition, applied in character and pattern recognition, acquisition/recognition of facial features, computer components, and other fields. It solves the problem that existing approaches cannot meet the individual needs of the audience, and achieves a good performance experience with low hardware dependence.


Detailed Description of the Embodiments

[0050] The present invention will be further described below in conjunction with the accompanying drawings.

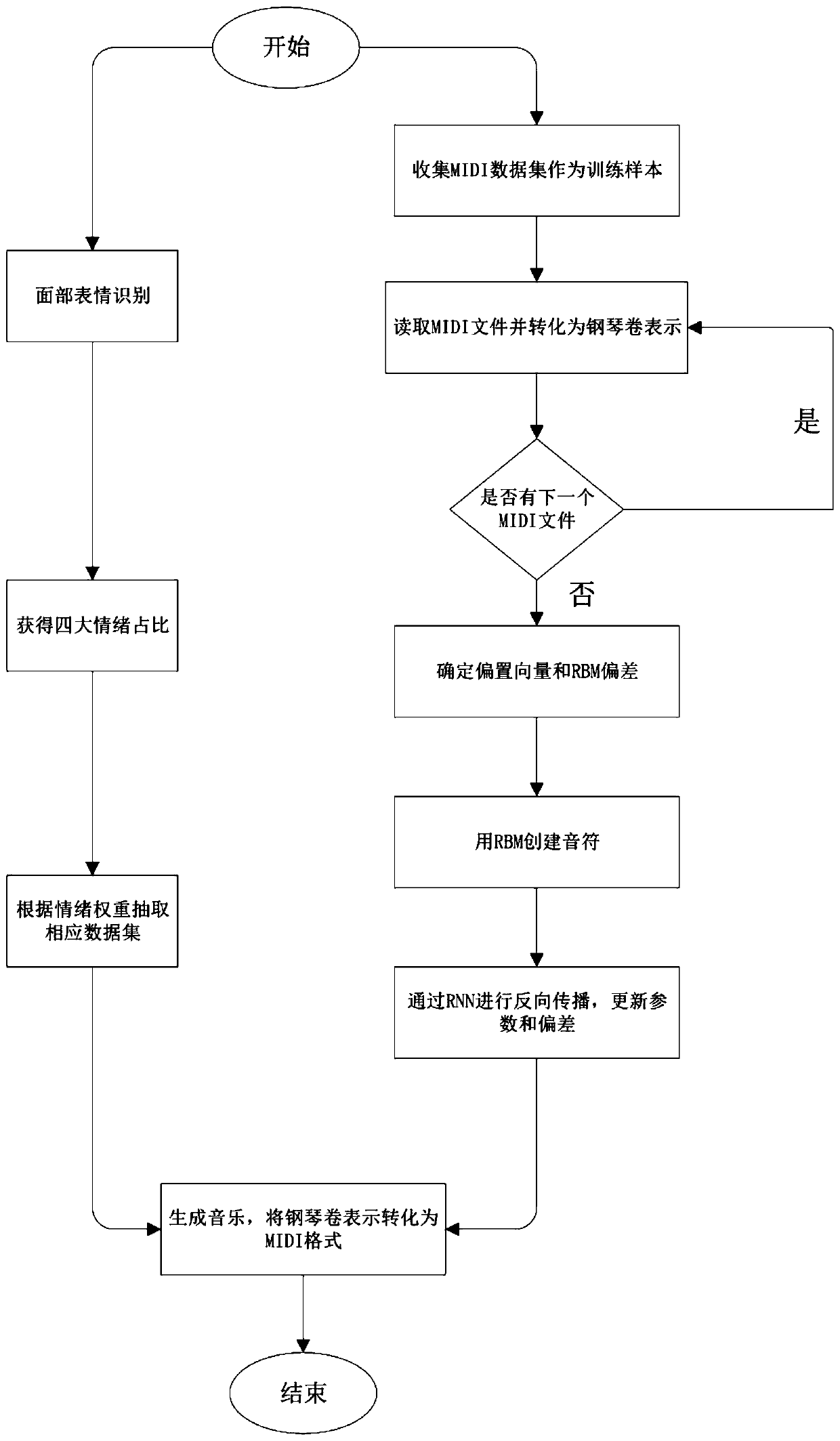

[0051] Referring to Figures 1 to 4, a music generation method based on facial expression recognition and a recurrent neural network comprises the following steps:

[0052] S1: Obtain music audio data and human facial expression data;

[0053] S2: Classify and label the data;

[0054] S3: Process the audio data and image data;

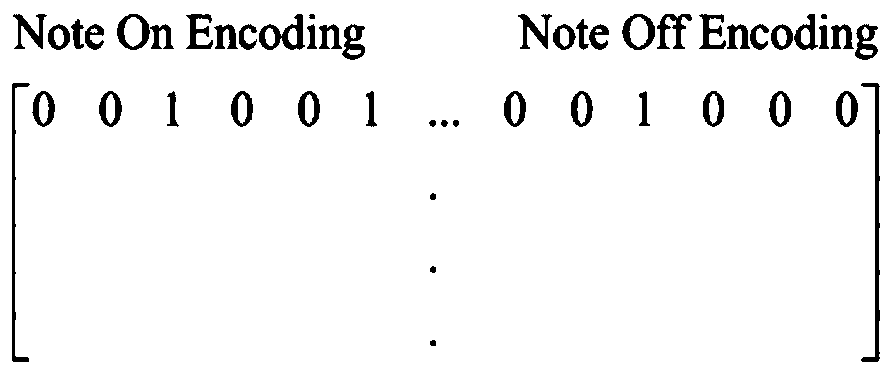

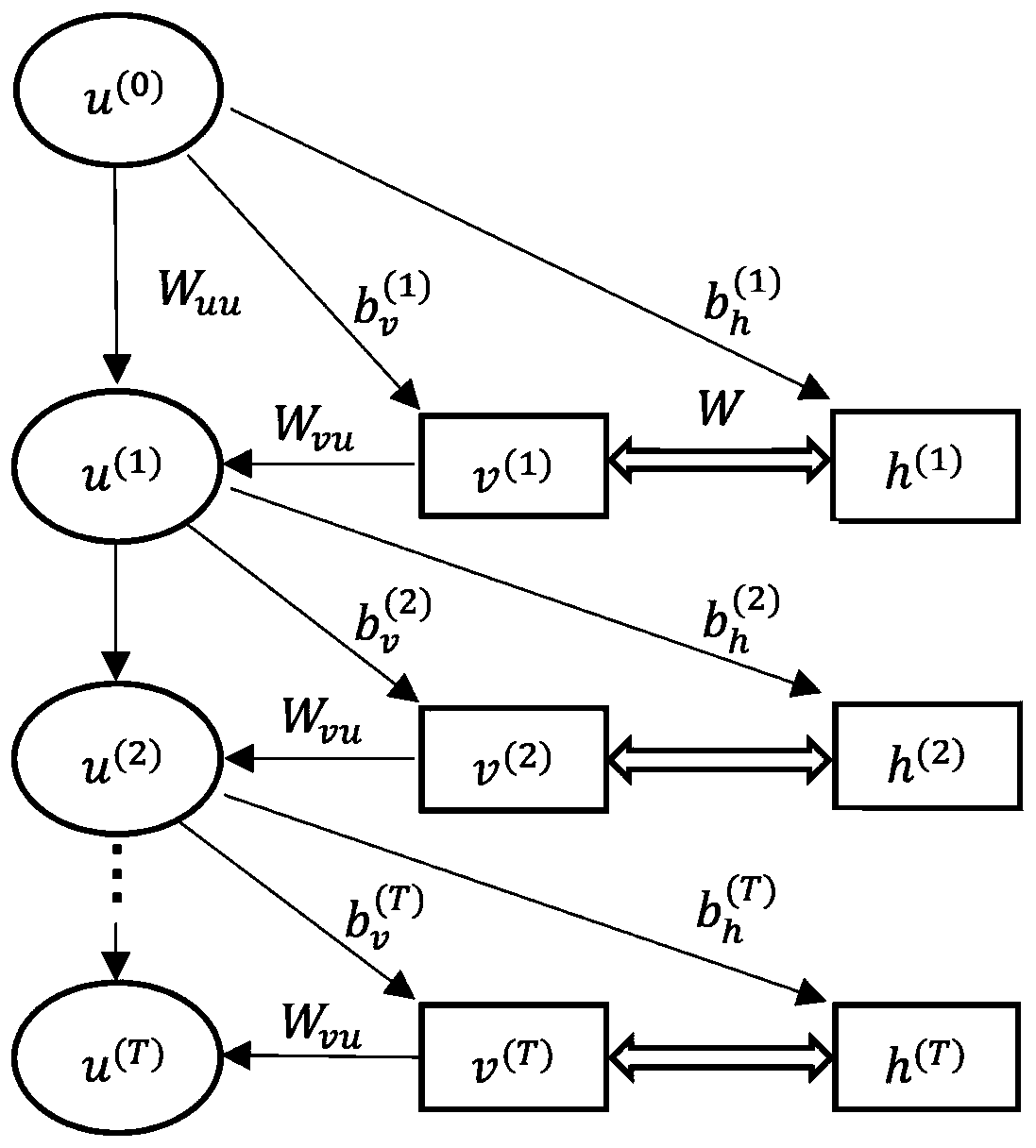

[0055] S4: Initialize the RNN-RBM neural network;

[0056] S5: Train the RNN-RBM neural network;

[0057] S6: Use VGG19 to recognize facial expressions;

[0058] S7: Input the recognized emotion information into the trained RNN-RBM network to generate the final music.
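In its standard form (Boulanger-Lewandowski, Bengio and Vincent, 2012), an RNN-RBM is a sequence of restricted Boltzmann machines whose visible and hidden biases are set at every time step by a recurrent hidden state. The sketch below, in plain Python, shows only the generation pass of steps S4 and S7 with small random weights; training (S5, normally contrastive divergence combined with backpropagation through time) is omitted. The layer sizes, the per-emotion dictionary `MODELS`, and the function `generate_for_emotion` are hypothetical: they illustrate one possible reading of how the emotion recognized in S6 could select the network used in S7, not the method disclosed here.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def matvec(W, x):
    # W is stored as a list of rows (out_dim x in_dim); returns W @ x
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matvec_t(W, x):
    # Returns W^T @ x without building the transpose
    return [sum(W[i][j] * x[i] for i in range(len(W))) for j in range(len(W[0]))]


def vadd(*vectors):
    # Element-wise sum of equally sized vectors
    return [sum(items) for items in zip(*vectors)]


class RnnRbm:
    """Untrained RNN-RBM; only the generation (sampling) pass is implemented."""

    def __init__(self, n_visible, n_hidden, n_recurrent, seed=0):
        self.rng = random.Random(seed)

        def init(rows, cols):
            return [[self.rng.gauss(0.0, 0.01) for _ in range(cols)] for _ in range(rows)]

        self.W = init(n_hidden, n_visible)        # RBM weights, hidden x visible
        self.W_uv = init(n_visible, n_recurrent)  # RNN state -> visible bias
        self.W_uh = init(n_hidden, n_recurrent)   # RNN state -> hidden bias
        self.W_uu = init(n_recurrent, n_recurrent)
        self.W_vu = init(n_recurrent, n_visible)
        self.b_v = [0.0] * n_visible
        self.b_h = [0.0] * n_hidden
        self.b_u = [0.0] * n_recurrent

    def _sample(self, activations):
        # Bernoulli sample of each unit from its pre-sigmoid activation
        return [1.0 if self.rng.random() < sigmoid(a) else 0.0 for a in activations]

    def _gibbs(self, b_v, b_h, k):
        # k steps of block Gibbs sampling in an RBM with time-dependent biases
        v = [0.0] * len(b_v)
        for _ in range(k):
            h = self._sample(vadd(b_h, matvec(self.W, v)))
            v = self._sample(vadd(b_v, matvec_t(self.W, h)))
        return v

    def generate(self, n_steps, k=15):
        u = [0.0] * len(self.b_u)  # initial RNN state u^(0)
        roll = []
        for _ in range(n_steps):
            # b_v^(t) = b_v + W_uv u^(t-1), b_h^(t) = b_h + W_uh u^(t-1)
            b_v = vadd(self.b_v, matvec(self.W_uv, u))
            b_h = vadd(self.b_h, matvec(self.W_uh, u))
            v = self._gibbs(b_v, b_h, k)
            roll.append(v)
            # u^(t) = tanh(b_u + W_uu u^(t-1) + W_vu v^(t))
            u = [math.tanh(a) for a in vadd(self.b_u, matvec(self.W_uu, u), matvec(self.W_vu, v))]
        return roll


# Hypothetical S6 -> S7 hand-off: one model per emotion label (sizes are assumptions).
MODELS = {"happy": RnnRbm(88, 32, 16, seed=1), "sad": RnnRbm(88, 32, 16, seed=2)}


def generate_for_emotion(emotion, n_steps=16):
    return MODELS[emotion].generate(n_steps)
```

Each element of the returned list is one 88-key frame of a piano roll. Because the weights here are random, the output is noise; after training in S5, the same sampling loop would produce note patterns learned from the training data.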

[0059] In this embodiment, music is generated from self-collected images and audio data, and the method includes the following steps:

[0060] S1: Obtain audio data and image data:

[0061] Some of the audio data comes from the Classical Piano Midi dataset. The m...
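RNN-RBM models of polyphonic music are commonly trained on a binary piano roll, with one 88-dimensional vector per time frame. The text does not state which representation is used in S3; assuming a piano roll, the sketch below quantizes note events into such frames. The input format (hypothetical `(pitch, start_seconds, end_seconds)` tuples already parsed from a MIDI file), the frame rate `fs`, and the 88-key range (MIDI pitches 21 to 108) are all assumptions.

```python
import math
from typing import List, Tuple

LOWEST_PITCH = 21  # MIDI pitch of A0, the lowest key on an 88-key piano
N_KEYS = 88


def to_piano_roll(notes: List[Tuple[int, float, float]], fs: float) -> List[List[int]]:
    """Quantize (pitch, start_s, end_s) note events into binary frames, fs frames per second."""
    if not notes:
        return []
    n_frames = math.ceil(max(end for _, _, end in notes) * fs)
    roll = [[0] * N_KEYS for _ in range(n_frames)]
    for pitch, start, end in notes:
        key = pitch - LOWEST_PITCH
        if not 0 <= key < N_KEYS:
            continue  # drop notes outside the piano range
        first = int(start * fs)                      # frame containing the onset
        last = max(first + 1, math.ceil(end * fs))   # every note lasts at least one frame
        for t in range(first, min(last, n_frames)):
            roll[t][key] = 1
    return roll
```

For example, a C4 (pitch 60) from 0 to 0.5 s and an E4 (pitch 64) from 0.25 to 1.0 s at `fs=4` give four frames, with key index 39 active in frames 0 and 1 and key index 43 active in frames 1 to 3. MIDI libraries such as pretty_midi provide a `get_piano_roll` method that performs a similar conversion, returning velocities over all 128 MIDI pitches rather than binary values over 88 keys.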
