45 results about How to "Improve performance experience" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

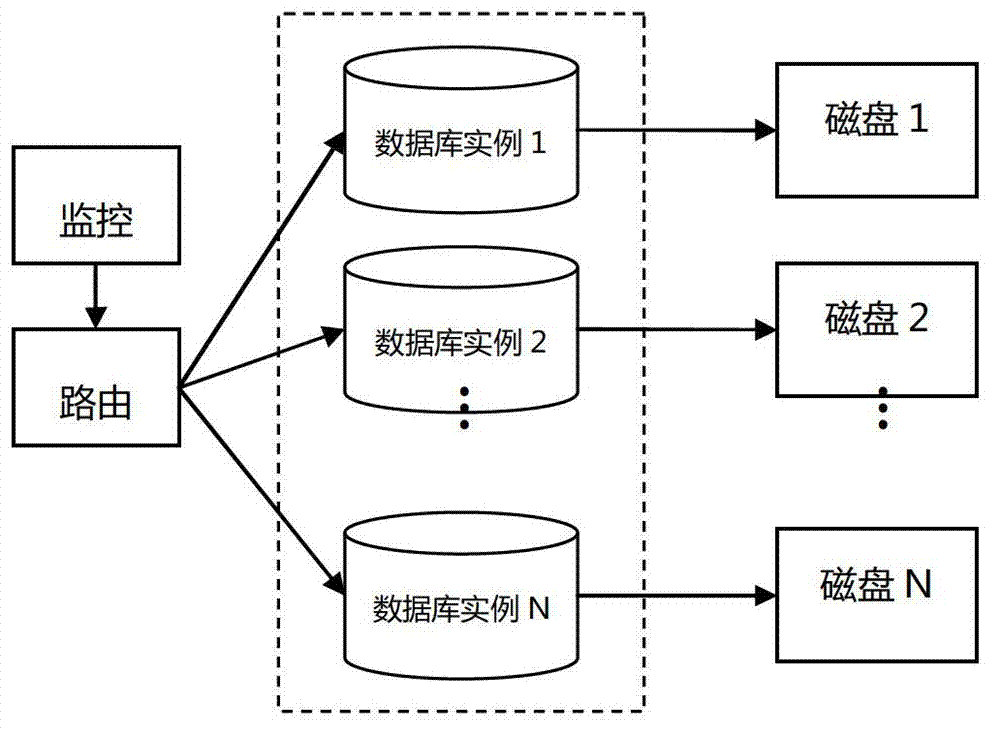

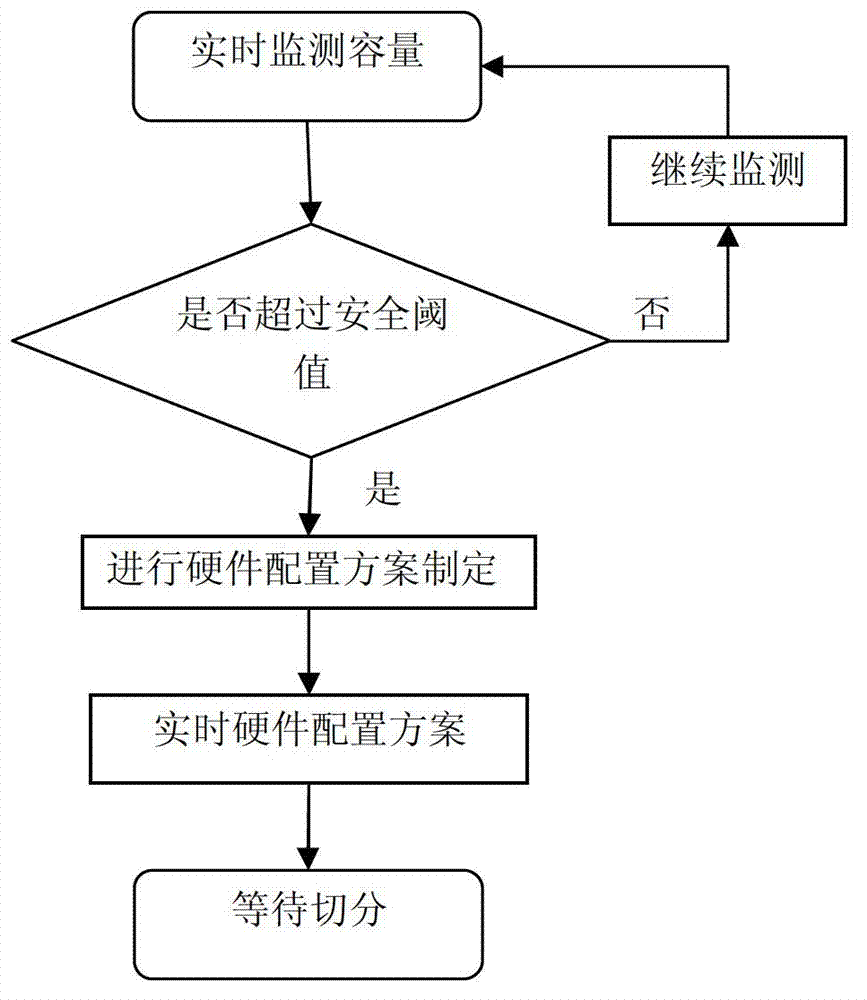

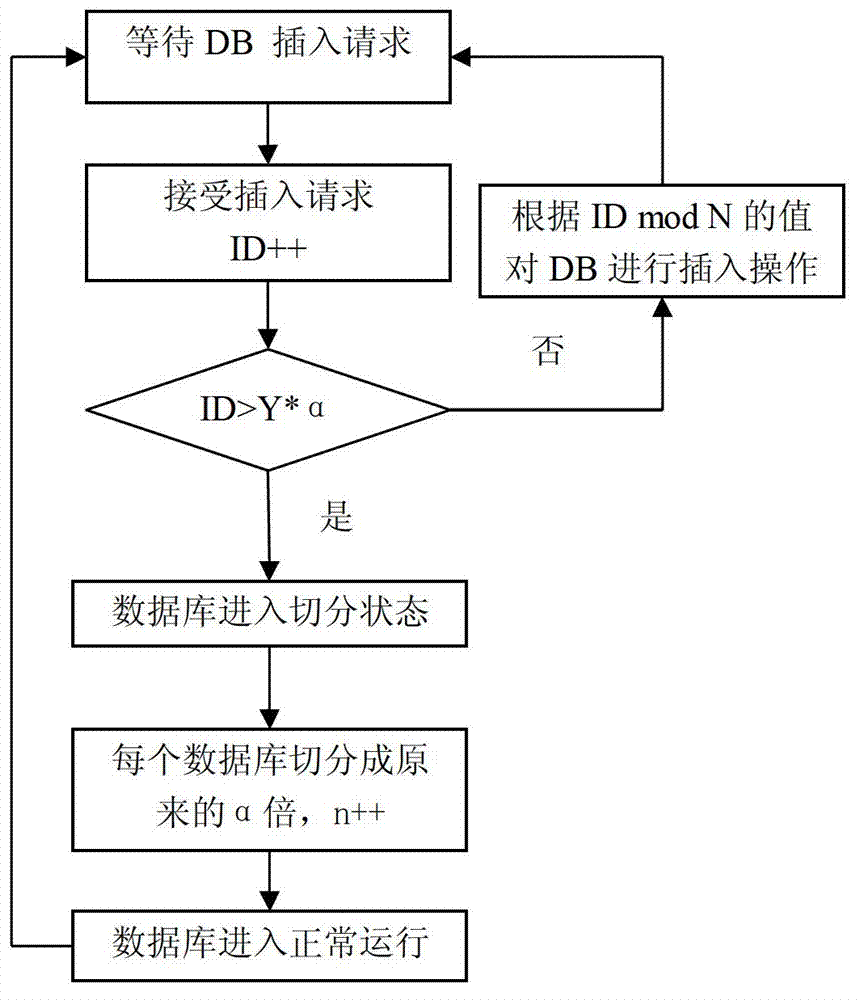

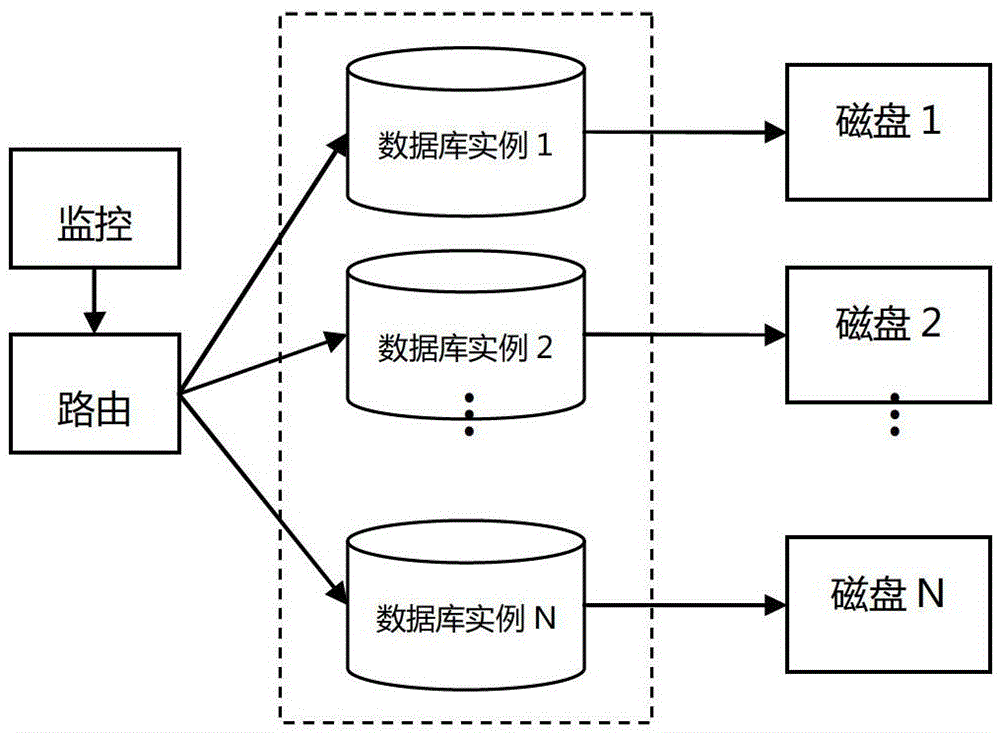

Rapid horizontal extending method for databases

Active | CN102930062A | Improve the speed of expansion | Easy to monitor | Special data processing applications | Data segment | Disk size

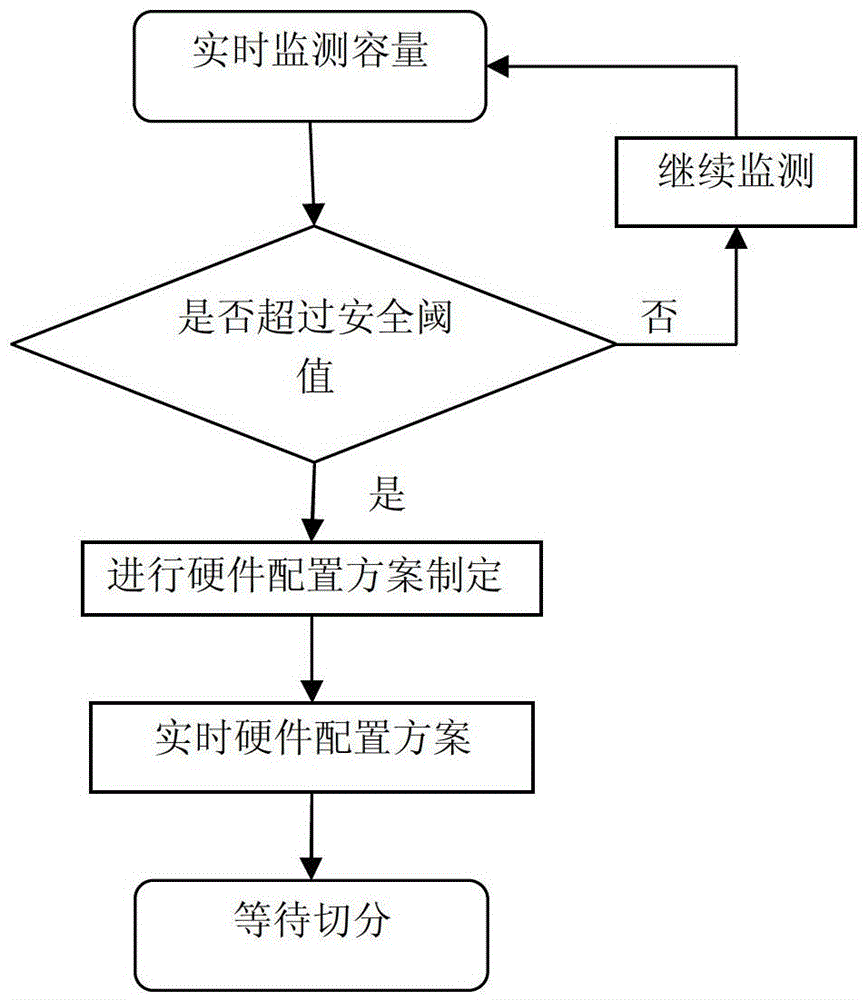

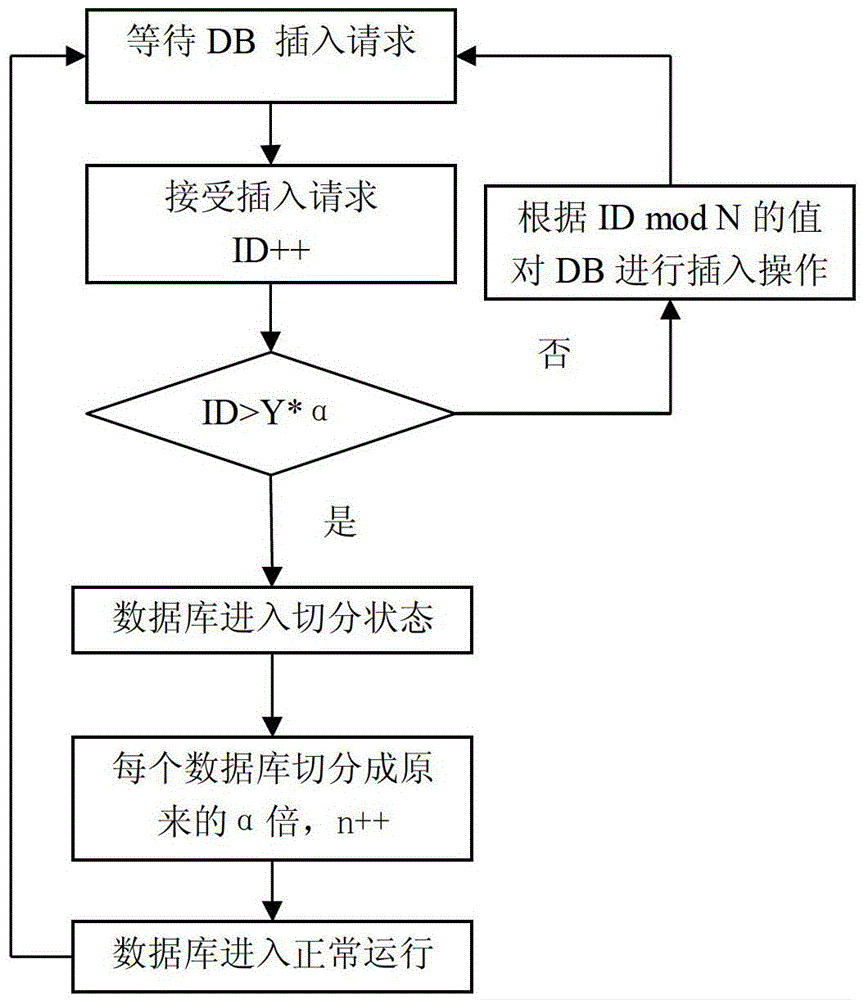

The invention discloses a rapid horizontal extending (scale-out) method for databases, in the field of data migration and storage. The method comprises the following steps: a monitoring and control system monitors the disk storage space of the existing database, and when disk usage reaches a preset storage capacity threshold, it triggers a hardware storage expansion to prepare the hardware environment for data migration; a routing protocol takes the primary key ID of the row currently being inserted modulo the number N of current database instances and routes the row to the resulting database, and when the number of inserted rows exceeds a preset row-count threshold, the routing protocol triggers a database segmentation action that migrates the specified data into a new database, thereby completing the rapid horizontal extension of the database. The method can be applied to disk databases or in-memory databases, is simple and easy to use, and avoids the uneven hotspot distribution caused by traditional horizontal database extension; in addition, because each segmentation migrates only a small amount of data, extension is faster. Overall, the architecture meets requirements for high availability, high reliability, high speed and high efficiency.

Owner:NANJING FUJITSU NANDA SOFTWARE TECH
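The routing step in the abstract above (primary key ID modulo the instance count N, plus a row-count threshold that triggers segmentation) can be sketched as below. This is a minimal illustration, not the patented implementation: the class name `ModuloRouter`, the in-memory counters, and the choice to only flag instances for splitting (rather than perform the migration) are all assumptions.

```python
from collections import defaultdict


class ModuloRouter:
    """Hypothetical router: picks a database instance by primary key modulo N
    and flags instances whose row count exceeds a preset threshold."""

    def __init__(self, n_instances: int, row_threshold: int) -> None:
        if n_instances <= 0:
            raise ValueError("n_instances must be positive")
        self.n_instances = n_instances
        self.row_threshold = row_threshold
        self.row_counts = defaultdict(int)  # instance index -> rows routed there

    def route(self, primary_key: int) -> int:
        # Modular computation on the instance count N selects the target database.
        return primary_key % self.n_instances

    def insert(self, primary_key: int) -> int:
        instance = self.route(primary_key)
        self.row_counts[instance] += 1
        return instance

    def instances_to_split(self) -> list[int]:
        # Instances past the threshold would be handed to a segmentation/migration step.
        return sorted(i for i, count in self.row_counts.items() if count > self.row_threshold)


router = ModuloRouter(n_instances=3, row_threshold=3)
for key in range(10):
    router.insert(key)
print(router.instances_to_split())  # [0]
```

With N = 3 and a threshold of 3 rows, keys 0 through 9 put four rows on instance 0 and three on each of the others, so only instance 0 is flagged.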

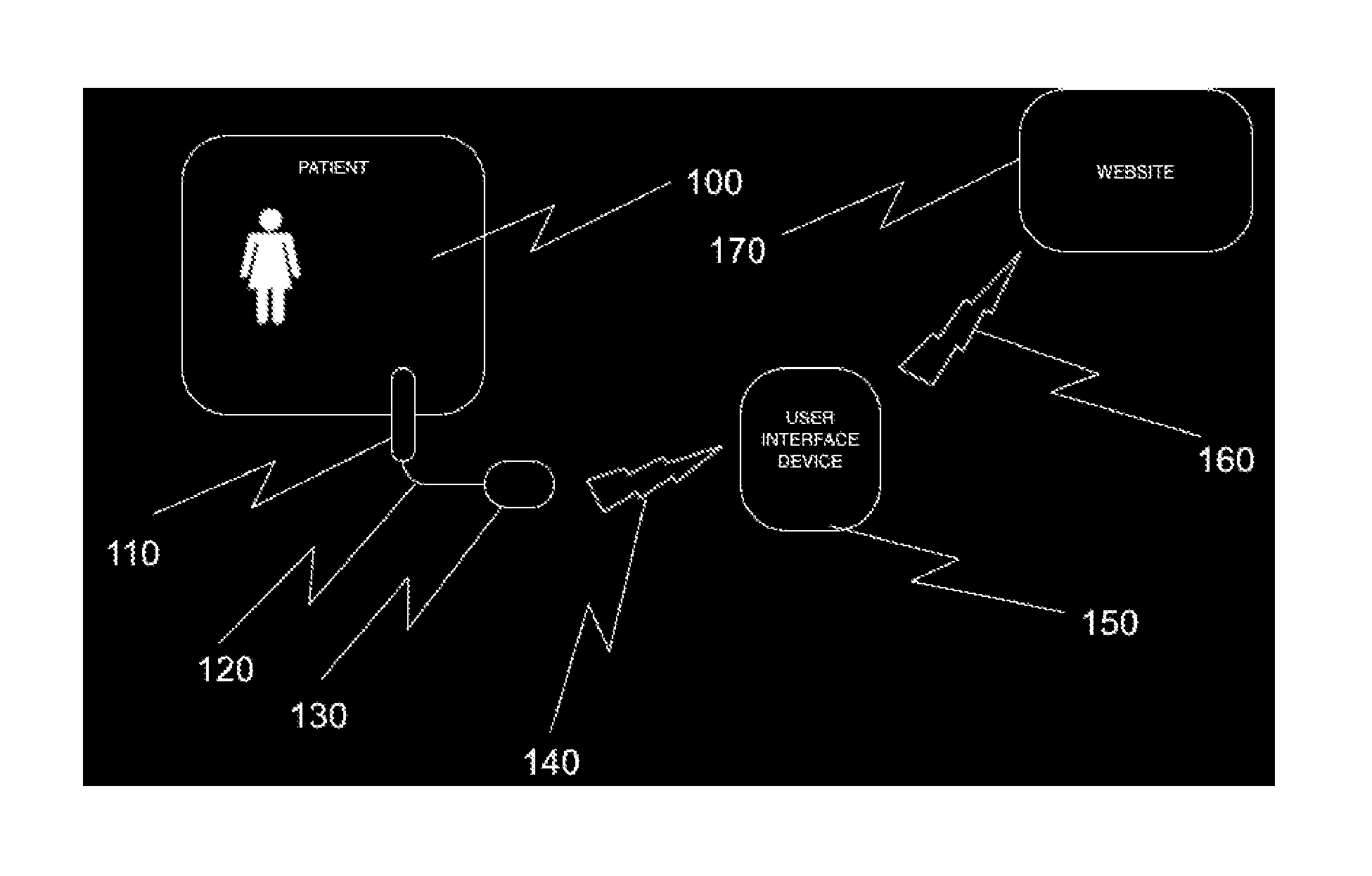

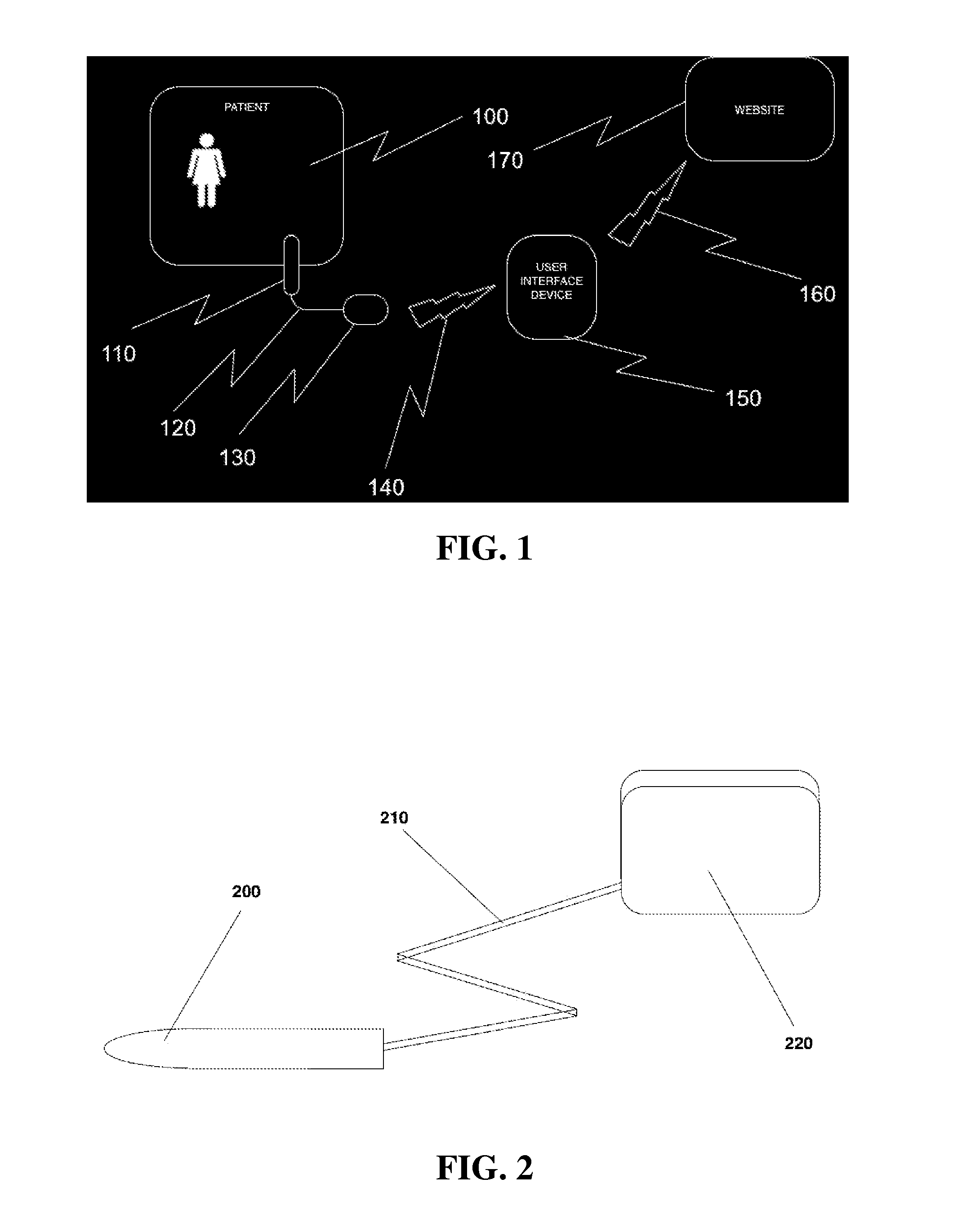

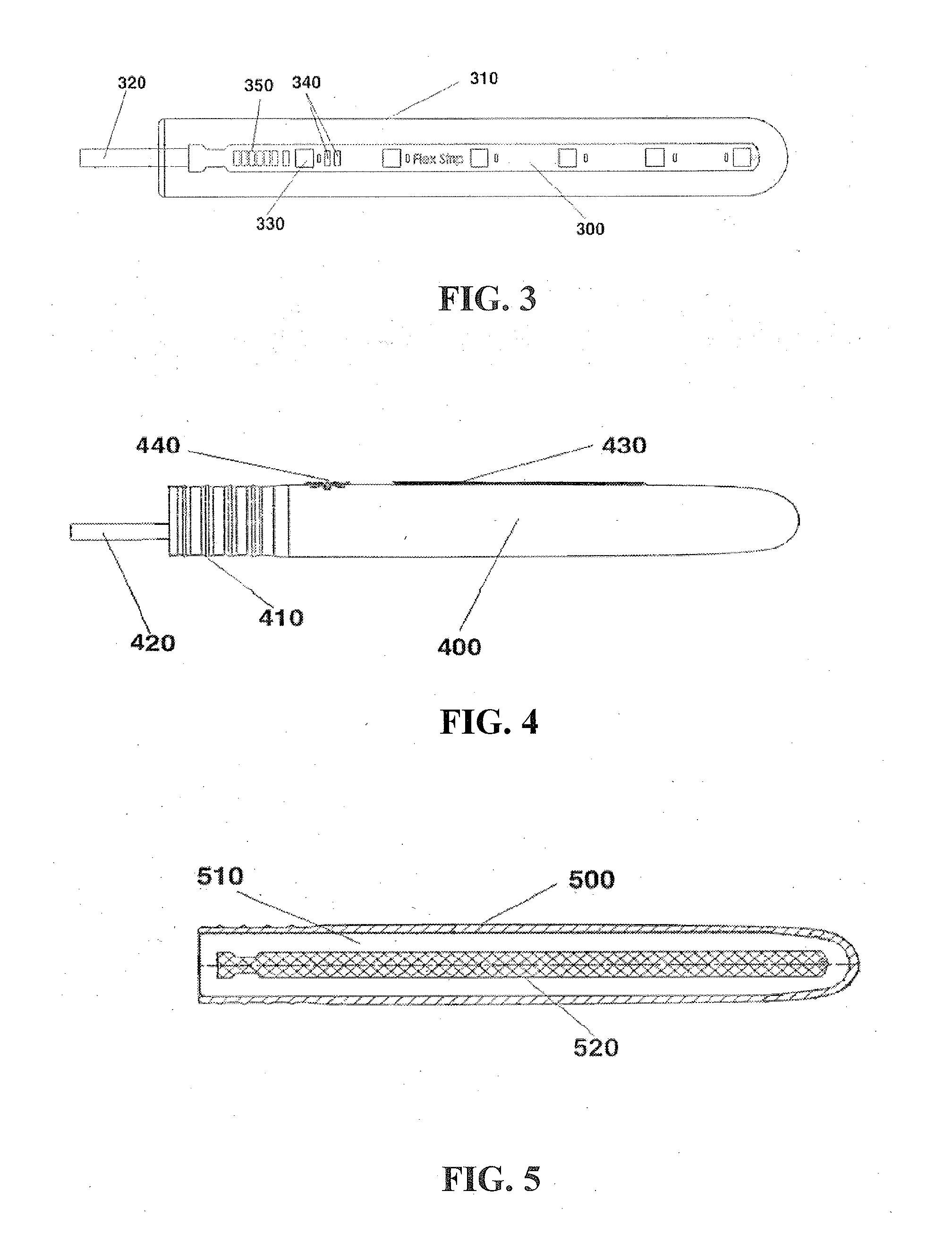

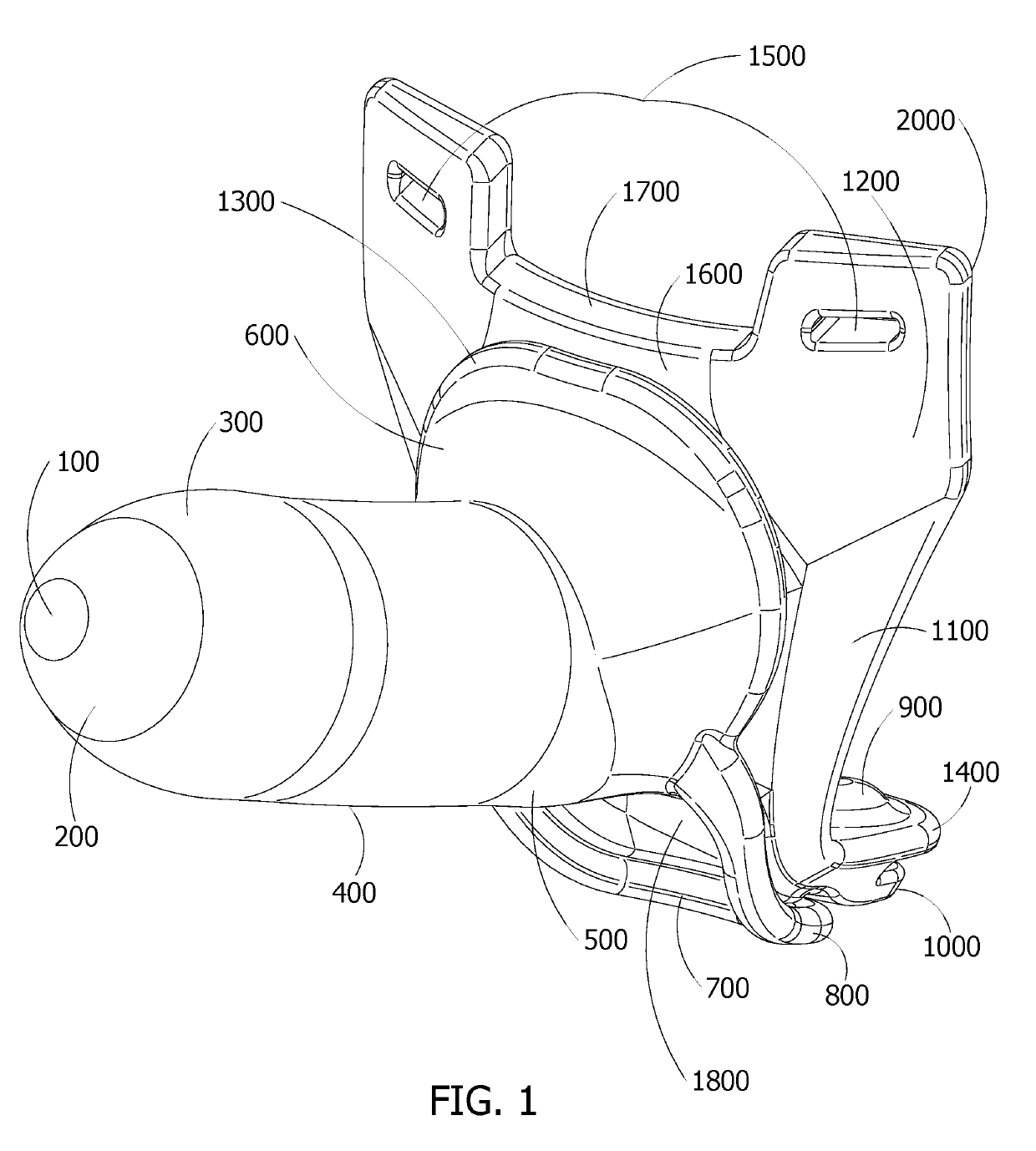

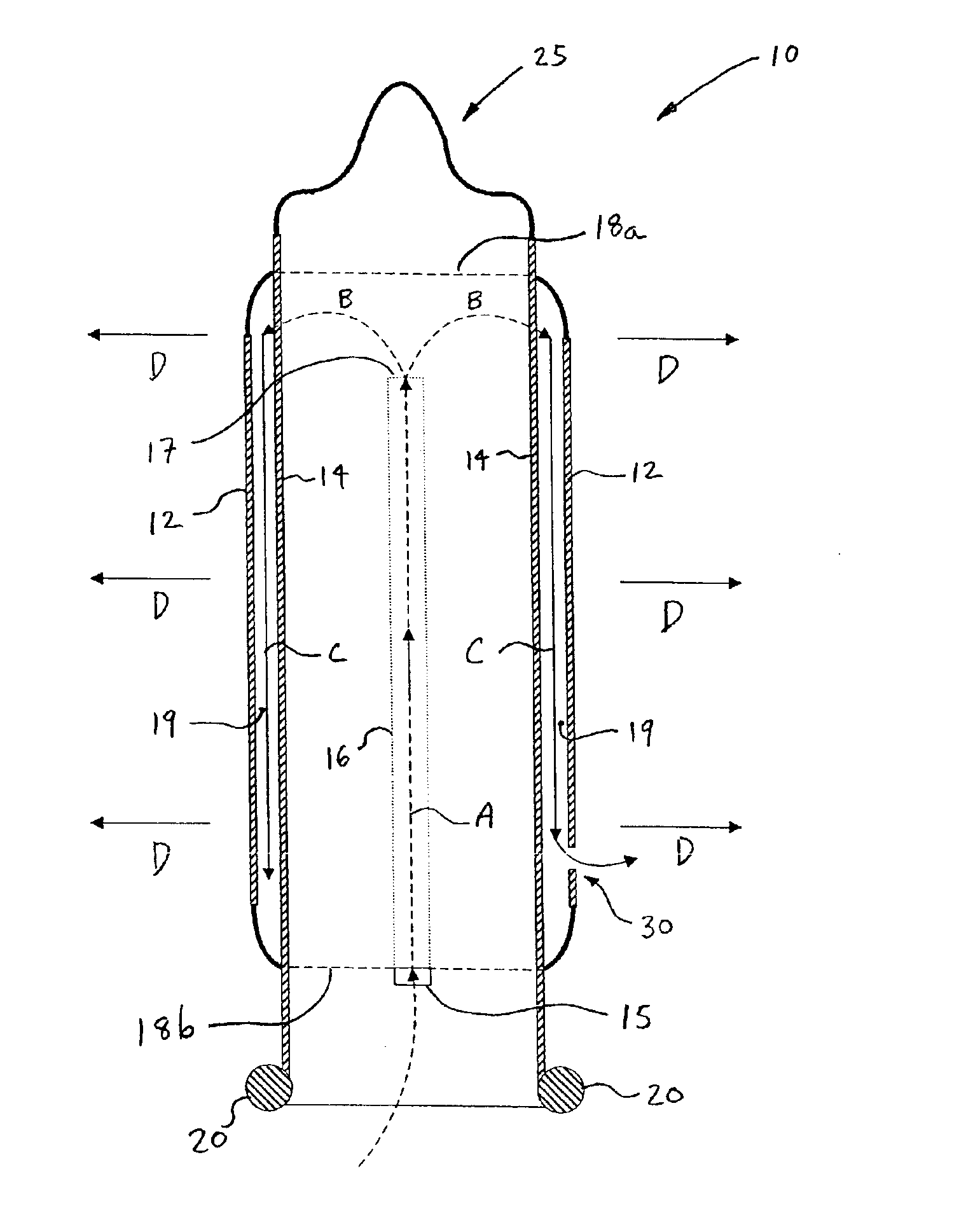

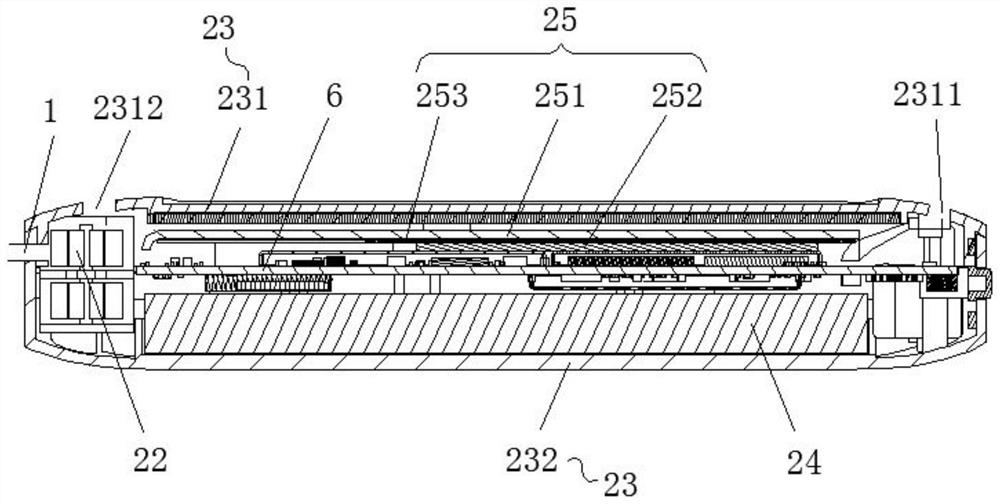

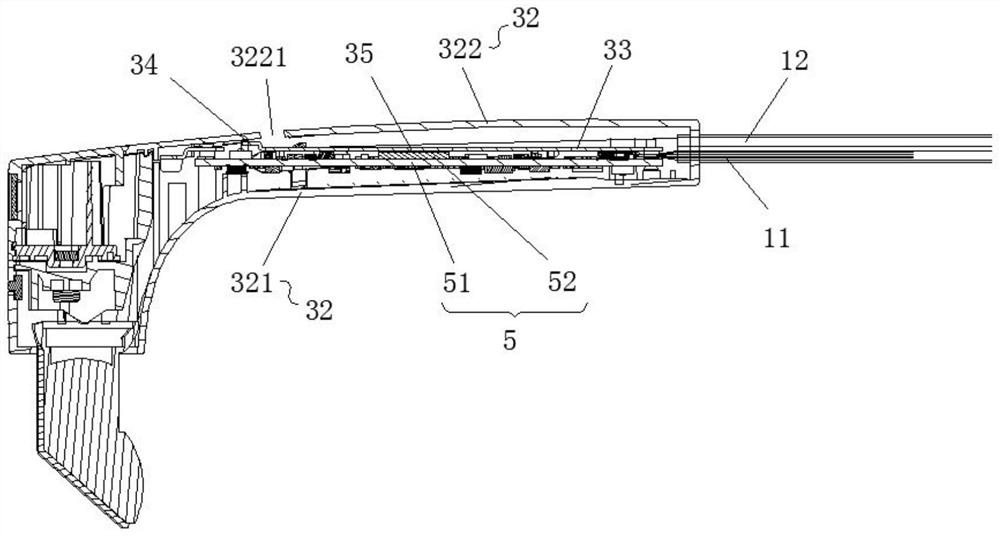

System and method for kegel training

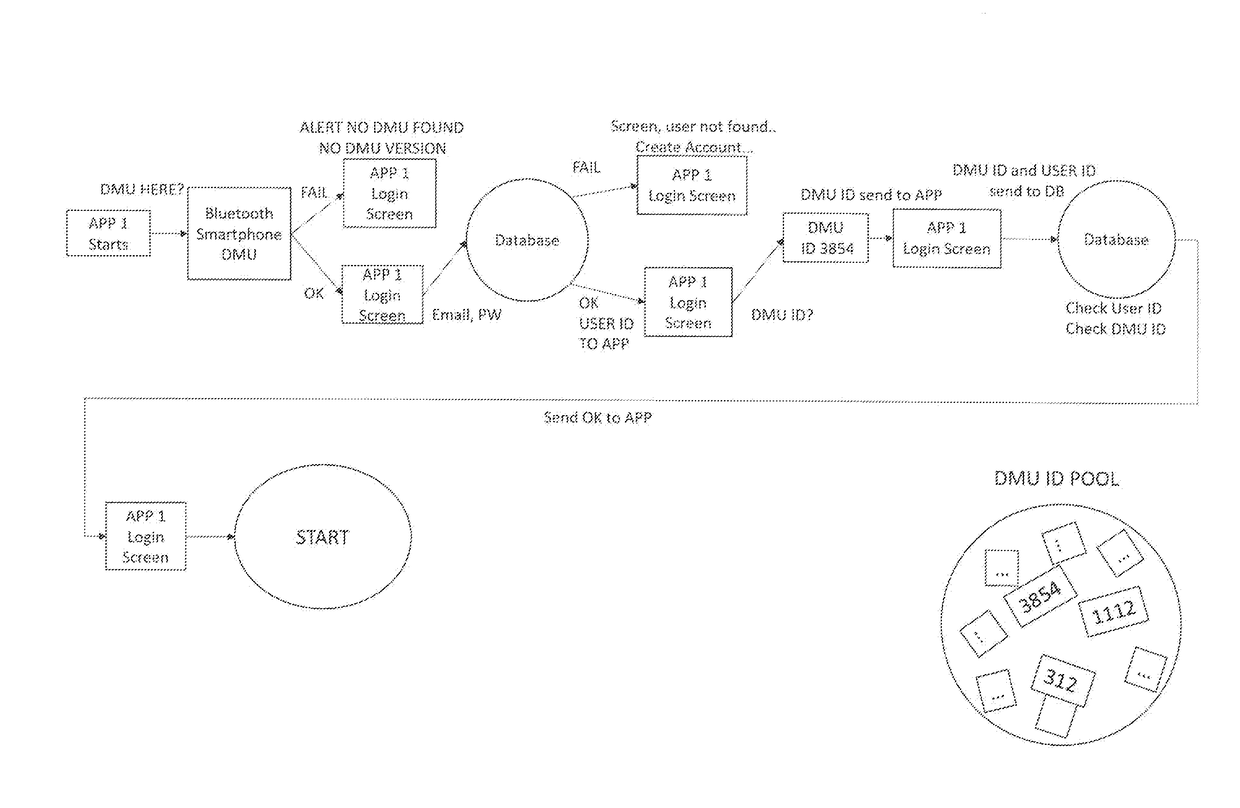

Active | US20160346610A1 | Prevention and mitigation of dysfunction | Improve performance experience | Gymnastic exercising | Inertial sensors | Accelerometer | Bluetooth

A system and method for optimizing a patient's Kegel exercises is provided. The system includes a user interface device and a vaginal device. The vaginal device includes an intra-vaginal probe having an accelerometer that is configured to generate a signal in response to movement of the probe. The user interface device is connected to the vaginal device and analyzes signals from the accelerometer to provide physiological feedback information to the patient. The vaginal device may be connected to the user interface device via wireless communications such as Bluetooth or by wire. The user interface device may be a smart device or a computer that transmits information to a central web-based data server accessible by the patient, authorized healthcare providers or third-party payers.

Owner:AXENA HEALTH INC

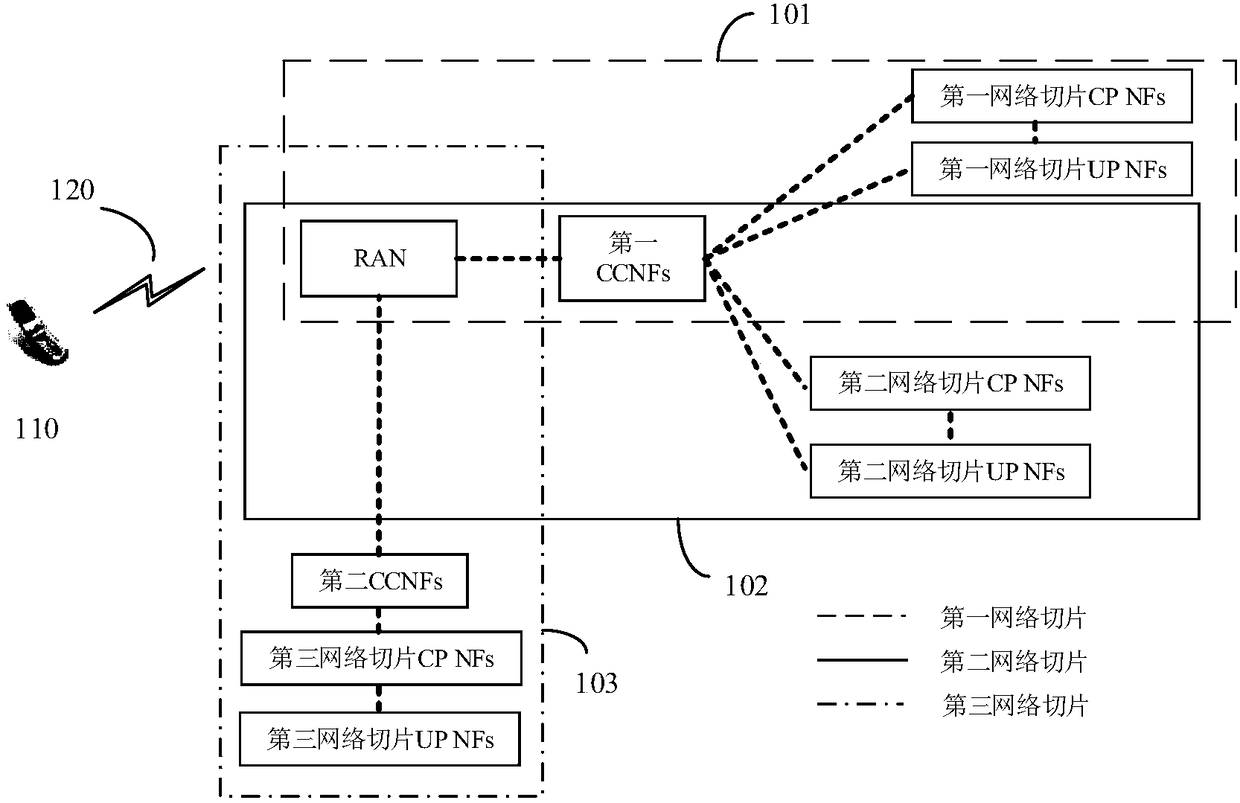

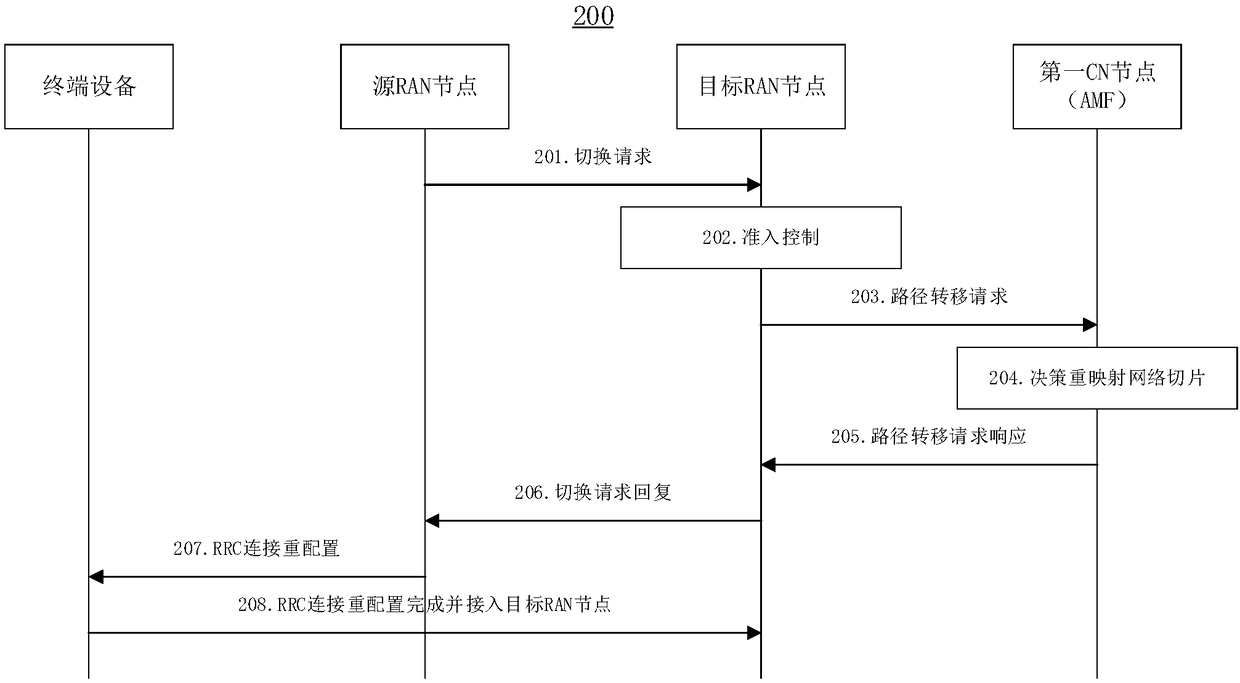

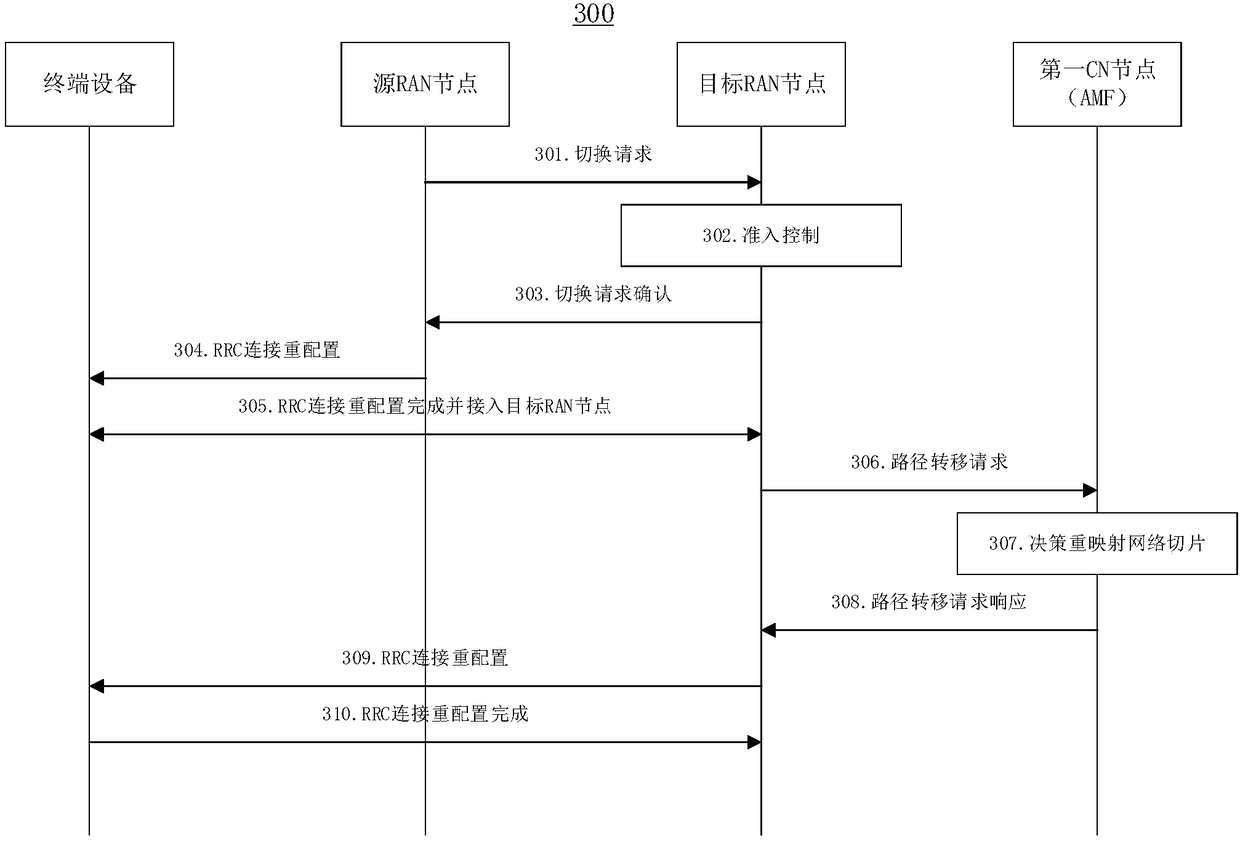

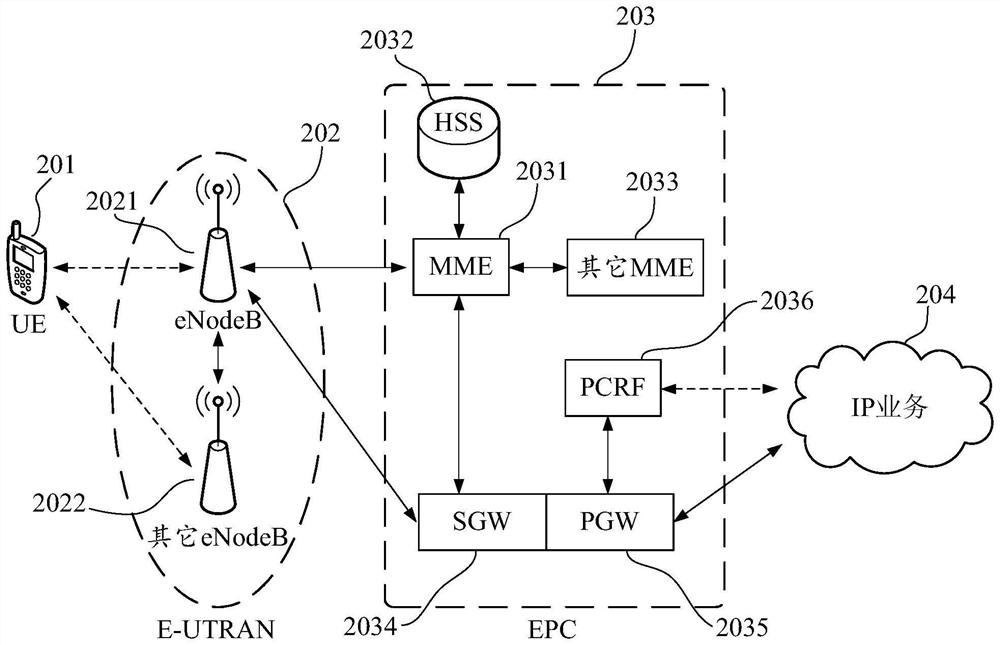

Switching method in mobile network and communication apparatus

Active | CN108632927A | Guaranteed continuity | Improve performance experience | Network traffic/resource management | Access restriction | Access network | Mobility management

The application provides a switching method in a mobile network and a communication apparatus. The method includes the following steps: a target access network node sends a path transfer request to a first core network node, wherein the first core network node is an access and mobility management function supported by both the source access network node and the target access network node, and the path transfer request includes at least one item of semi-receiving flow/session/radio bearer indication information; and the target access network node receives a path transfer request reply from the first core network node, wherein the reply includes at least one of the following parameters: a flow/session/radio bearer whose path transfer succeeded, a flow/session/radio bearer whose path transfer failed, new network slice indication information, and a context of the modified flow/session/radio bearer.

Owner:HUAWEI TECH CO LTD

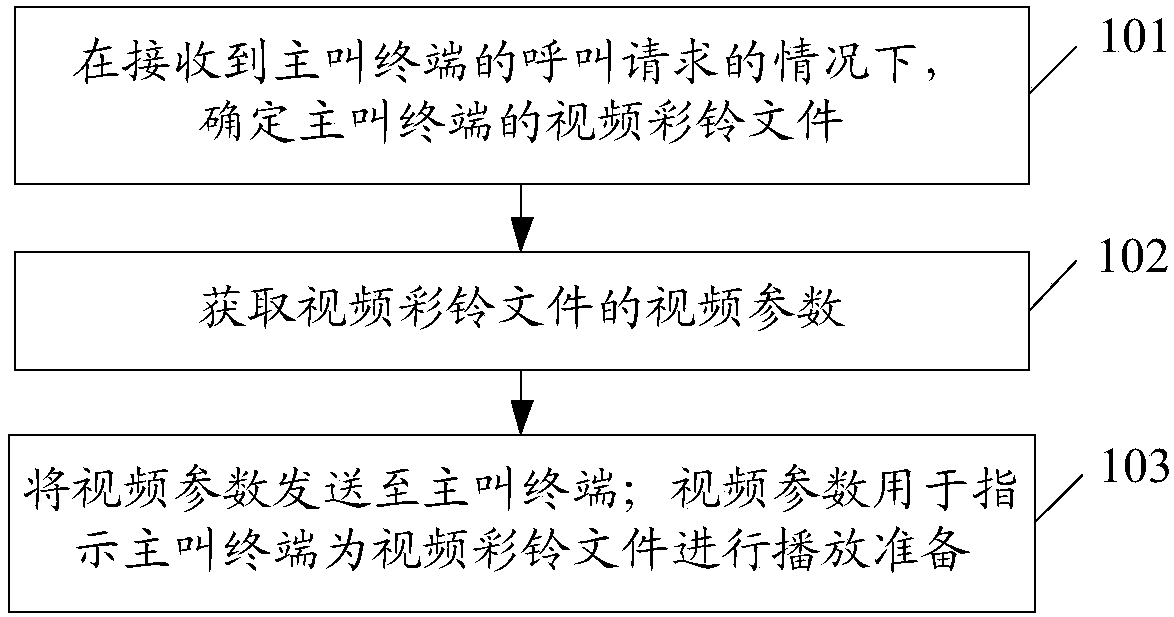

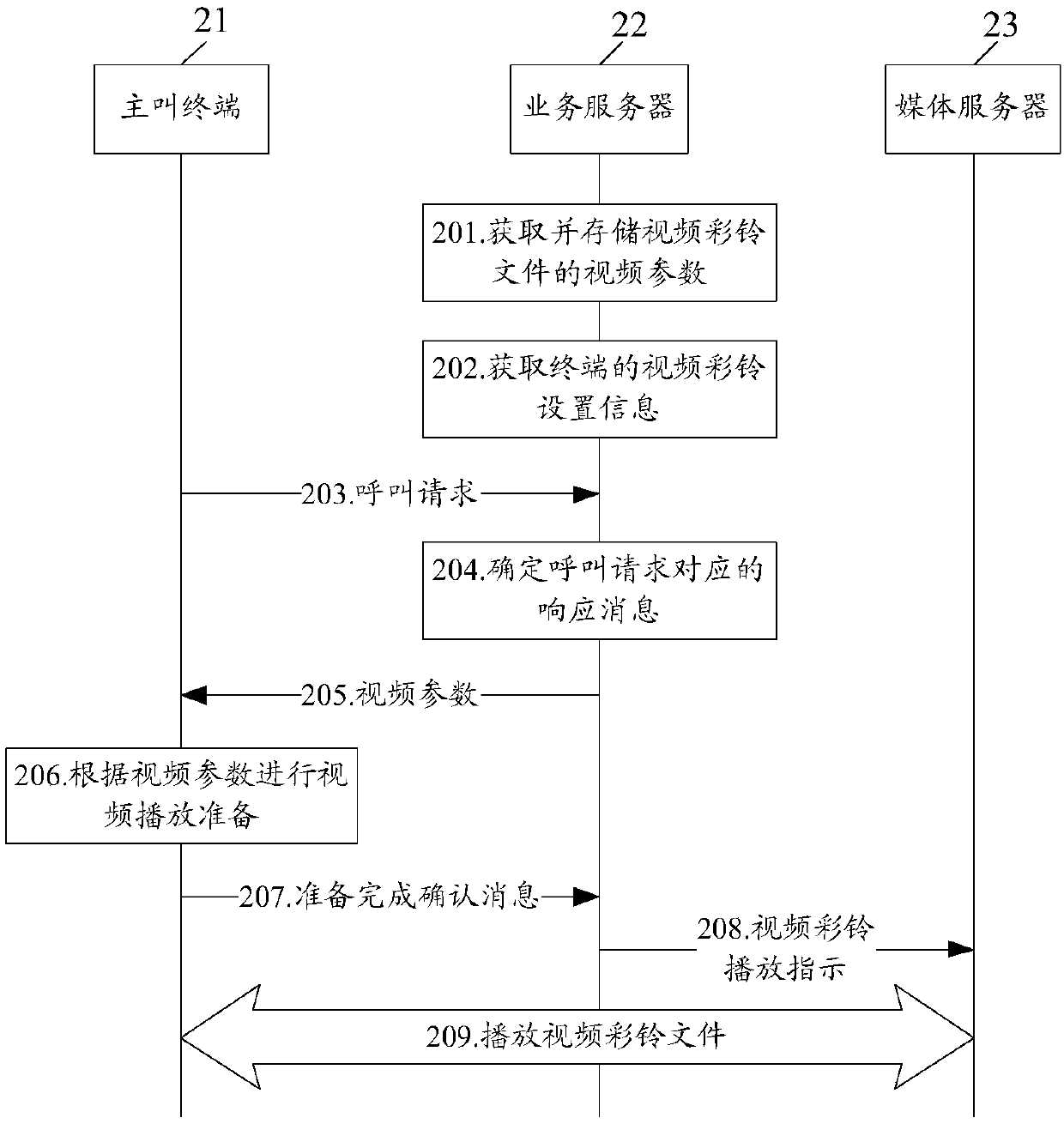

Video polyphonic ringtone playing method, device and system

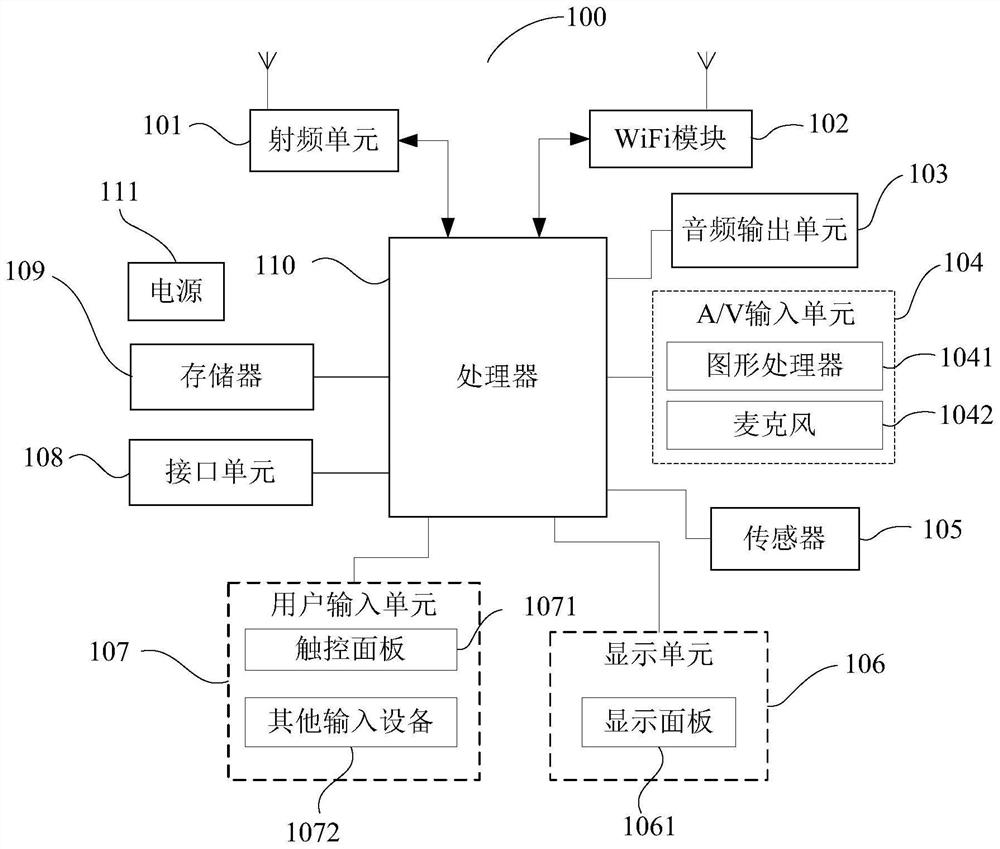

Inactive | CN109803056A | Guaranteed playback quality | Improve performance experience | Special service for subscribers | Transmission | Computer terminal | Computer science

The embodiment of the invention discloses a video polyphonic ringtone playing method, which comprises the following steps: when a call request from a calling terminal is received, determining the video polyphonic ringtone file for the calling terminal; obtaining video parameters of the video polyphonic ringtone file; and sending the video parameters to the calling terminal, wherein the video parameters instruct the calling terminal to prepare to play the video polyphonic ringtone file. The terminal can therefore prepare for playback using the video parameters received in advance, which ensures the playback success rate and playback quality of the video polyphonic ringtone file. The embodiment of the invention also discloses a video polyphonic ringtone playing device and a video polyphonic ringtone playing system.

Owner:ZTE CORP

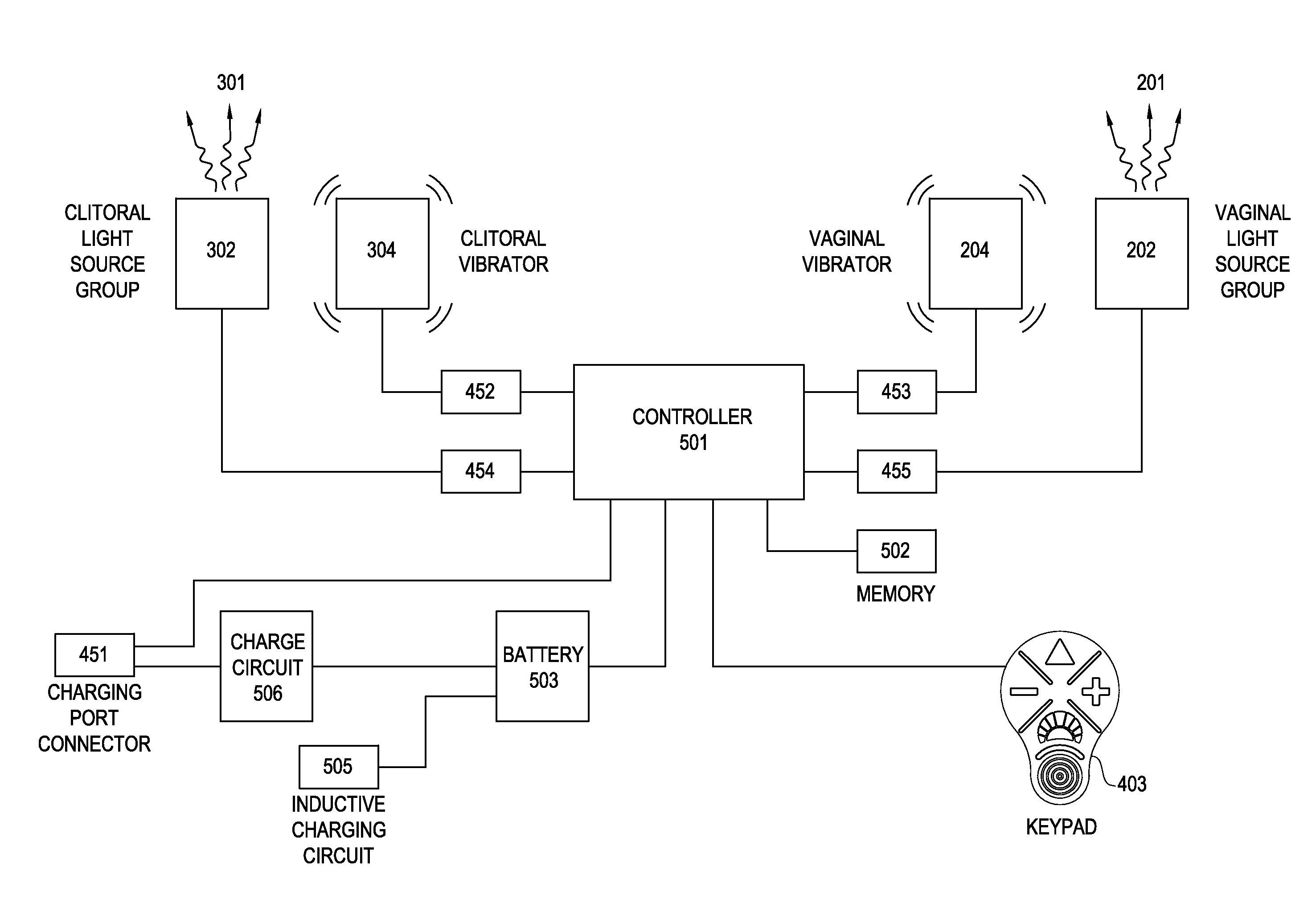

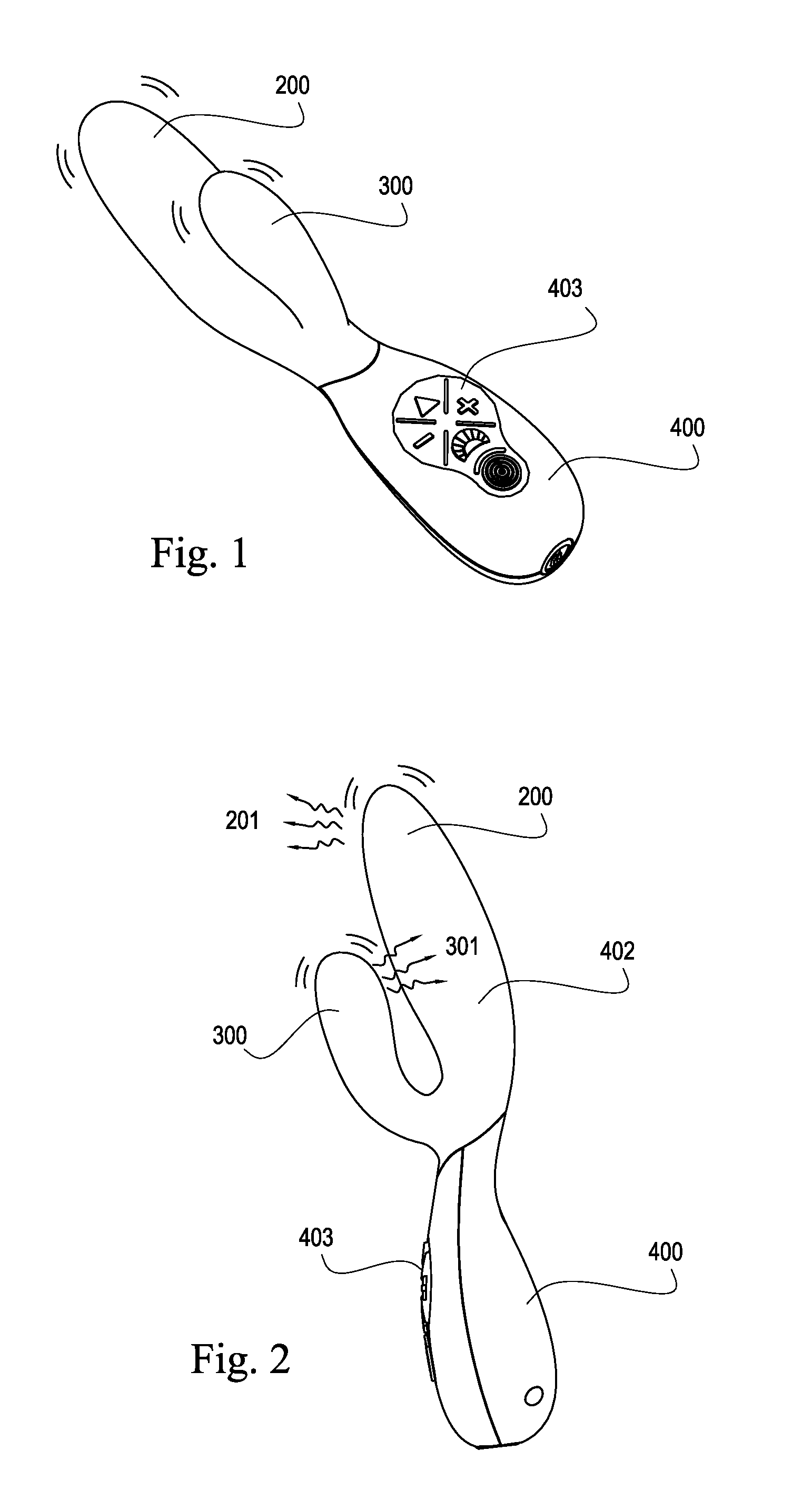

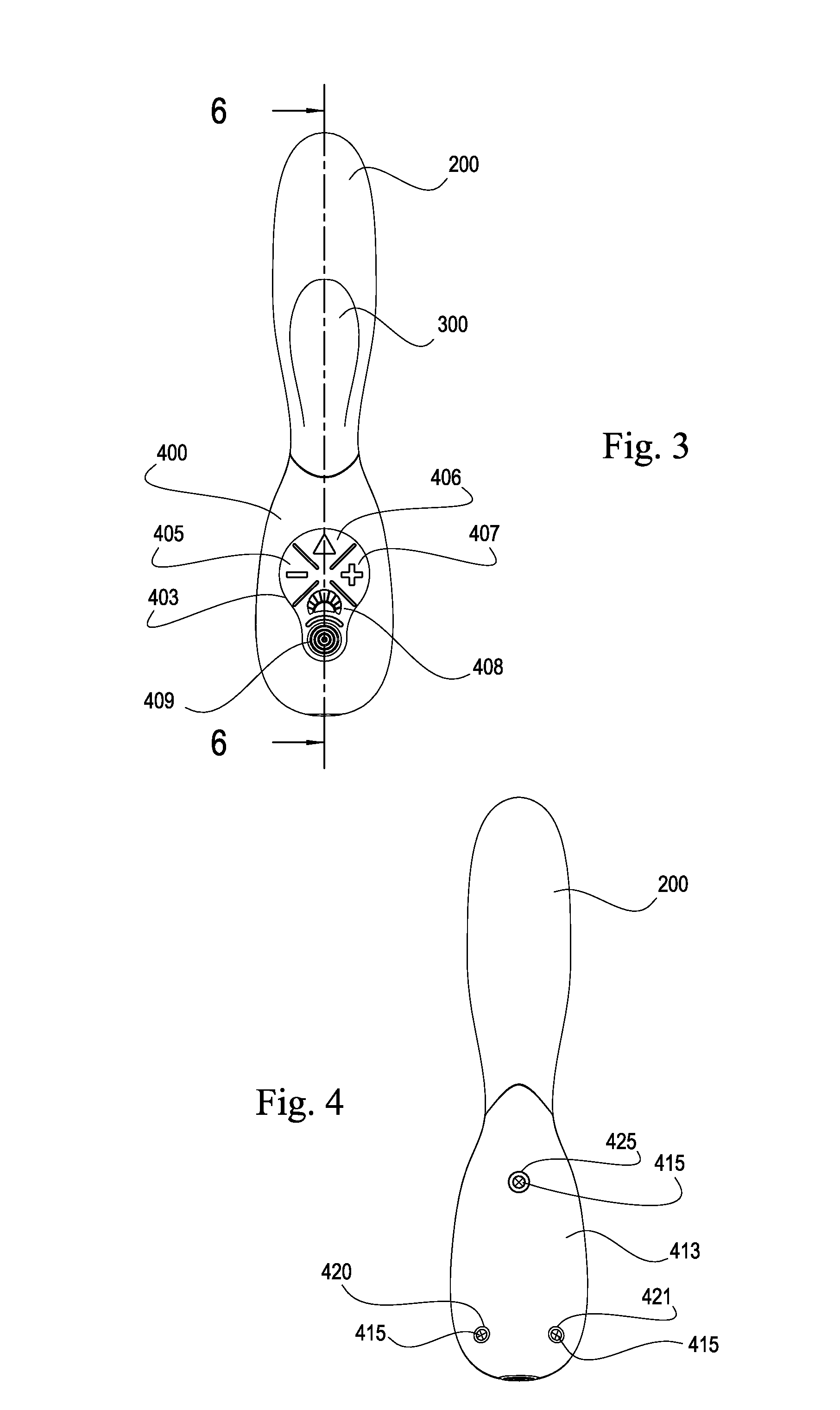

Sexual stimulation device using light therapy

Active | US8801600B2 | Improve performance experience | Good effect | Chiropractic devices | Vibration massage | Mechanical irritation | Light therapy

A sexual stimulation apparatus which may comprise: a plurality of light sources for photostimulation and microbe reduction of the vagina, clitoris, or both; a plurality of vibrators for mechanical stimulation of the vagina, clitoris, or both; a plurality of modes of operation for achieving improved sexual stimulation in a user; a controller and programmable memory for storing the modes of operation and driving the light sources and vibrators; a vaginal finger; a clitoral finger; a handle for ease of operation; a keypad for user entry of commands; a charging and programming port; and a power source, which may be a rechargeable battery charged by direct, inductive or other means. The invention also comprises a flexible covering that provides smooth sliding engagement with the vagina and clitoris of a user.

Owner:ZIPPER RALPH

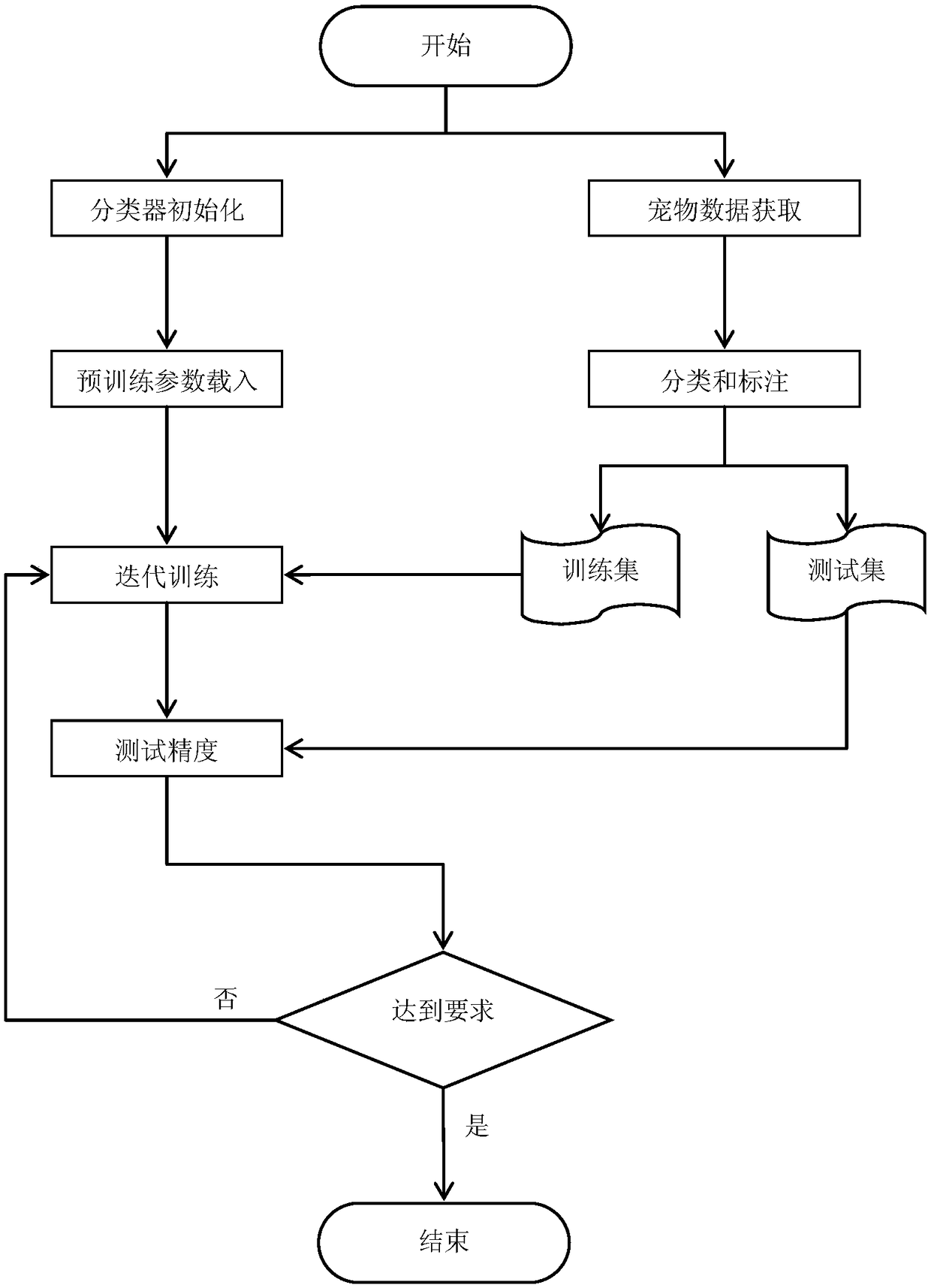

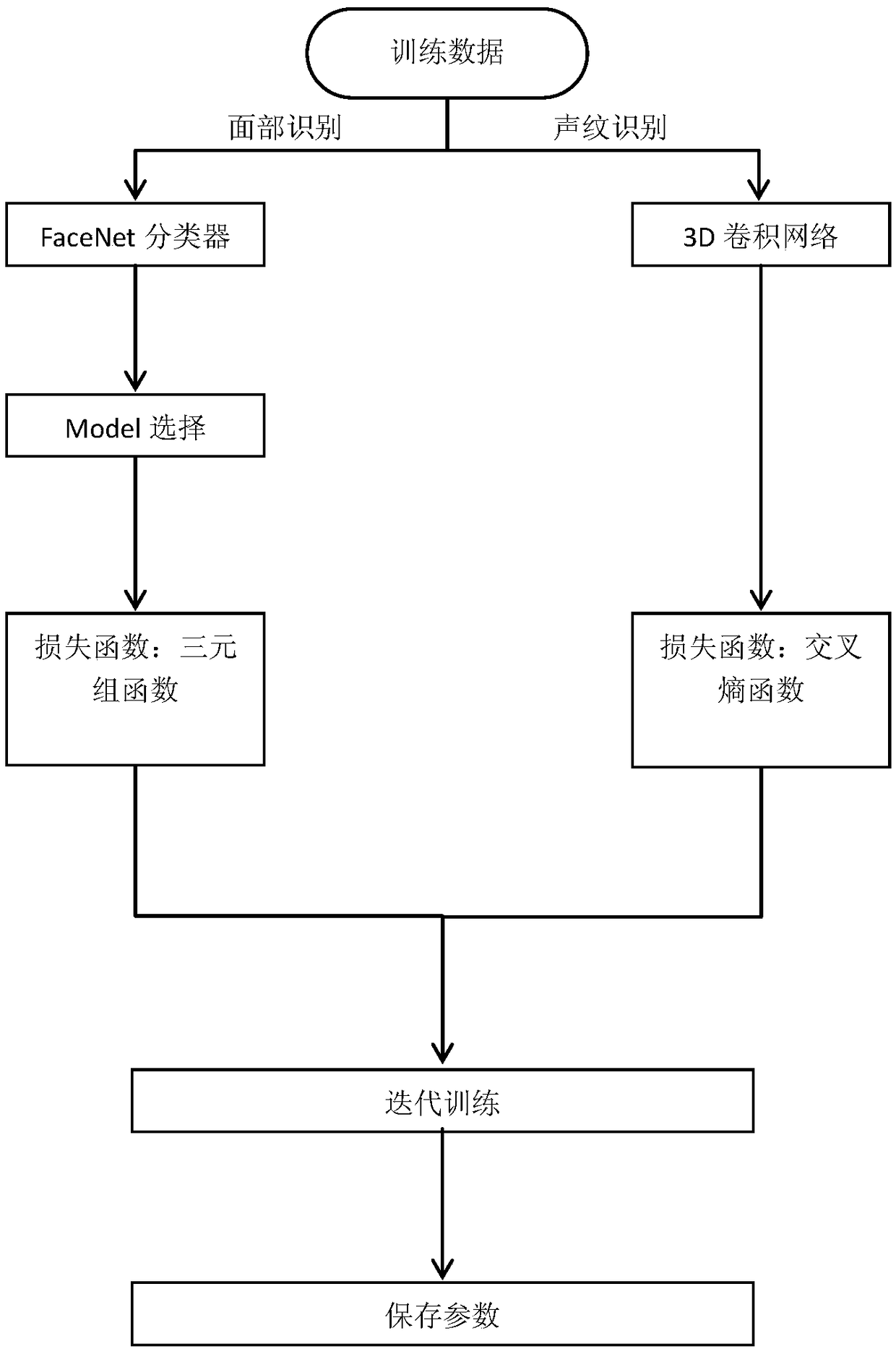

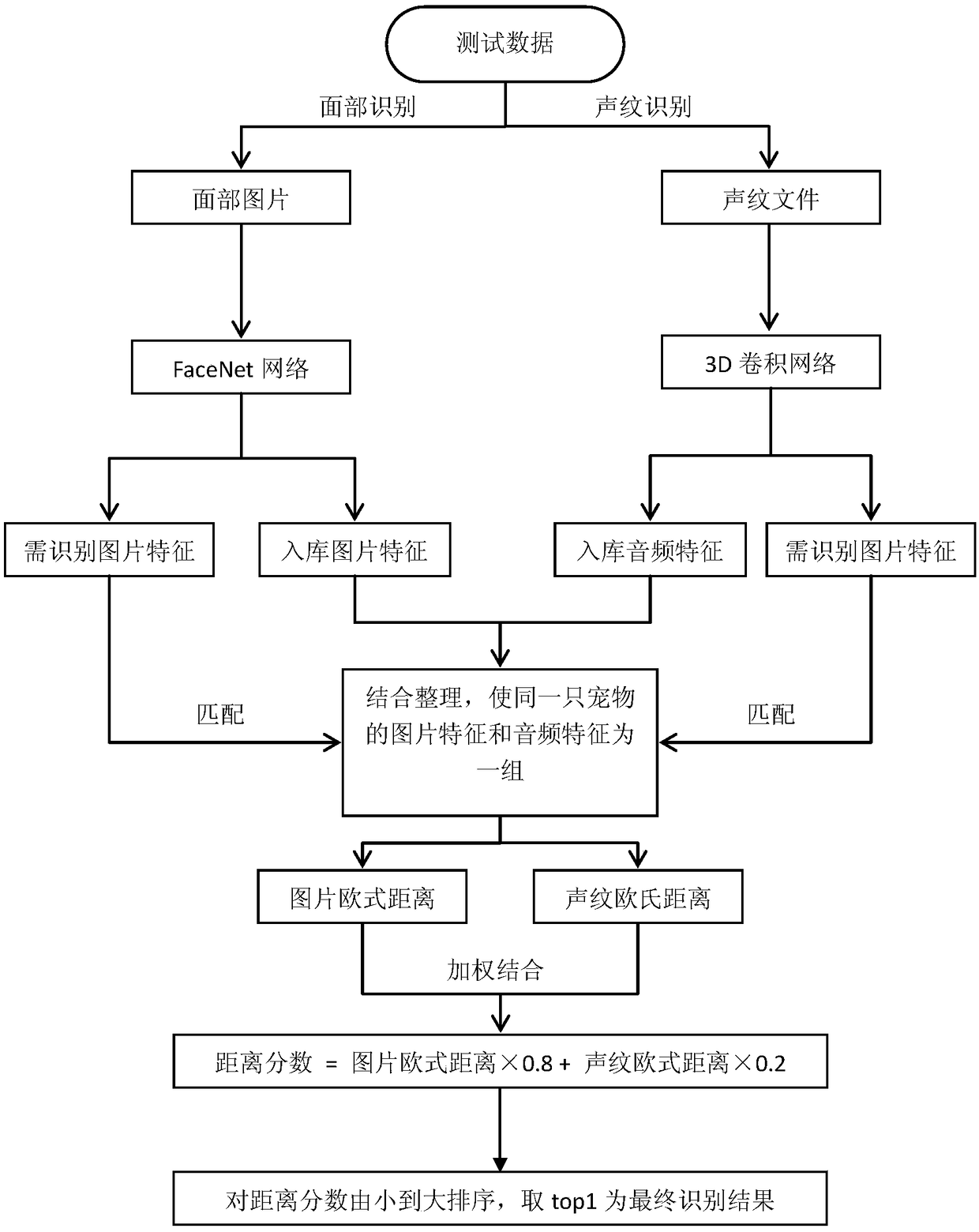

Pet recognition method combining face and voiceprint

Inactive | CN108734114A | Improve recognition accuracy | Improve performance experience | Speech analysis | Biometric pattern recognition | Pattern recognition | Imaging data

A pet recognition method combining a face and a voiceprint is provided. The method comprises the following steps: S1: initializing a pet recognition classifier, including classifier structure initialization and classifier weight initialization; S2: acquiring image data, and acquiring voiceprint data; S3: sorting and marking the data; S4: performing voiceprint data processing; S5: iteratively updating the classifier; and S6: determining whether the classifier meets the accuracy requirement, if so, saving the current parameter and ending the program, and if not, continuing the training. The method provided by the present invention combines two recognition methods of face recognition and voiceprint recognition, and has high recognition precision.

Owner:ZHEJIANG UNIV OF TECH
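The abstract does not say how the face and voiceprint results are combined. One common option, shown here purely as an assumed illustration rather than the patented method, is weighted late fusion of the two classifiers' per-pet scores; `fuse_scores` and the `face_weight` parameter are hypothetical.

```python
def fuse_scores(face_scores: dict[str, float],
                voice_scores: dict[str, float],
                face_weight: float = 0.5) -> tuple[str, dict[str, float]]:
    """Hypothetical weighted late fusion of two classifiers' per-pet scores."""
    if face_scores.keys() != voice_scores.keys():
        raise ValueError("both classifiers must score the same set of pets")
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be in [0, 1]")
    fused = {
        pet: face_weight * face_scores[pet] + (1.0 - face_weight) * voice_scores[pet]
        for pet in face_scores
    }
    # The identity with the highest combined score wins.
    best = max(fused, key=fused.get)
    return best, fused


face = {"pet_a": 0.6, "pet_b": 0.4}
voice = {"pet_a": 0.2, "pet_b": 0.8}
print(fuse_scores(face, voice)[0])  # pet_b
```

Raising `face_weight` shifts trust toward the image classifier; with `face_weight=0.8` the same inputs favour `pet_a` instead.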

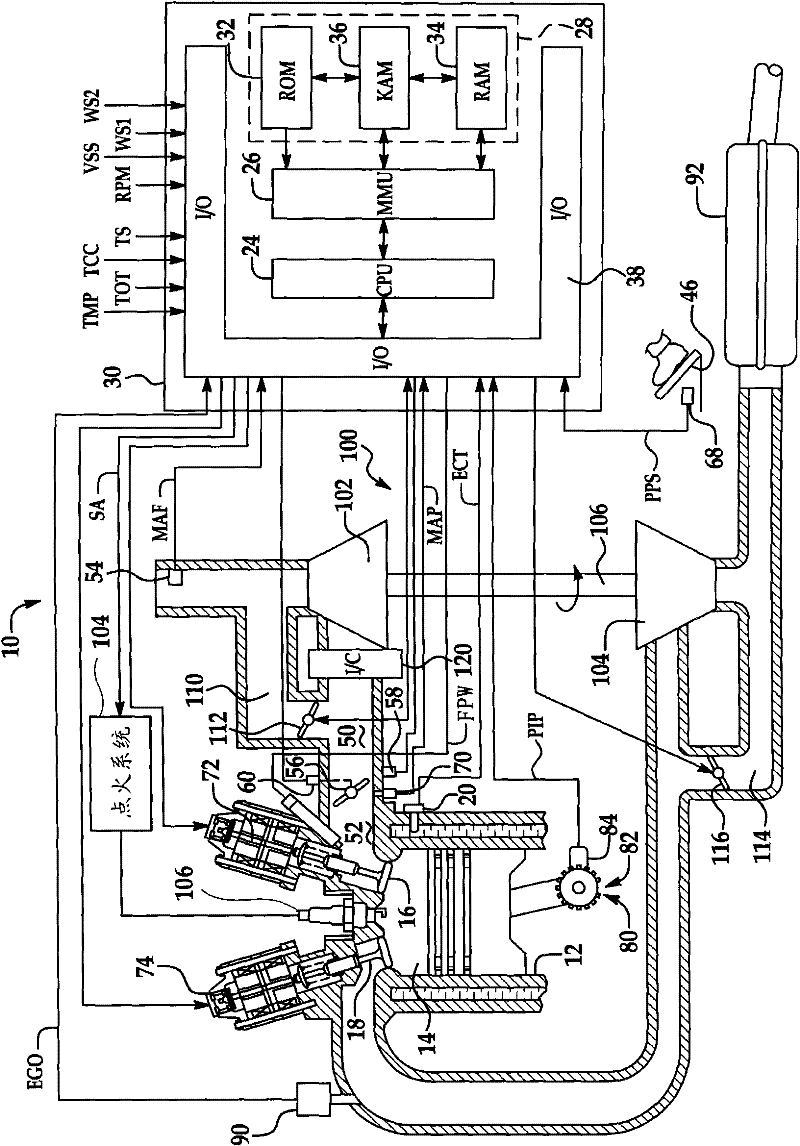

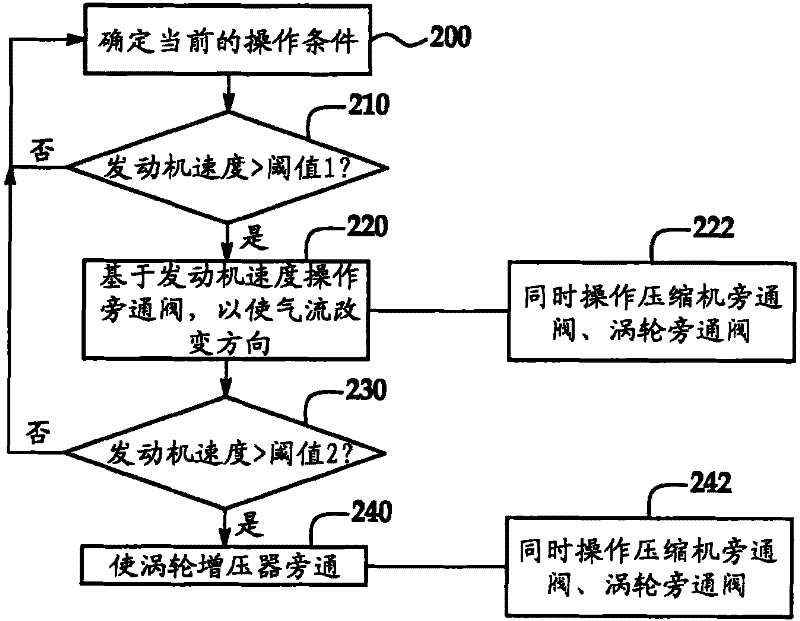

Turbocharged engine and method for controlling same

Inactive | CN102200061A | Low cost | Improve economy | Electrical control | Internal combustion piston engines | Naturally aspirated engine | Internal combustion engine

The invention provides a turbocharged engine and a method for controlling the same. A system and method for controlling a multiple cylinder internal combustion engine having an exhaust gas turbocharger with a compressor coupled to a turbine include redirecting substantially all intake airflow around the compressor and substantially all exhaust flow around the turbine when an engine operating parameter exceeds an associated threshold. In one embodiment, turbocharger boost is provided below an associated engine speed threshold with the turbocharger compressor and turbine bypassed at higher engine speeds such that the engine operates as a naturally aspirated engine.

Owner:FORD GLOBAL TECH LLC
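The bypass logic described above reduces to a threshold check on an engine operating parameter. The sketch below uses engine speed as that parameter, as in the quoted embodiment; the function and field names are hypothetical, and a real controller would add hysteresis and actuator dynamics that are omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BypassCommand:
    compressor_bypass_open: bool  # route intake air around the compressor
    turbine_bypass_open: bool     # route exhaust gas around the turbine


def turbo_bypass_command(engine_speed_rpm: float,
                         speed_threshold_rpm: float) -> BypassCommand:
    """Hypothetical controller: bypass both turbocharger sides above the threshold,
    so the engine runs as if naturally aspirated; keep boost below it."""
    bypass = engine_speed_rpm > speed_threshold_rpm
    return BypassCommand(compressor_bypass_open=bypass, turbine_bypass_open=bypass)


print(turbo_bypass_command(5500, 4000))
```

Both bypasses switch together because the abstract describes redirecting substantially all intake air and substantially all exhaust flow at the same time.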

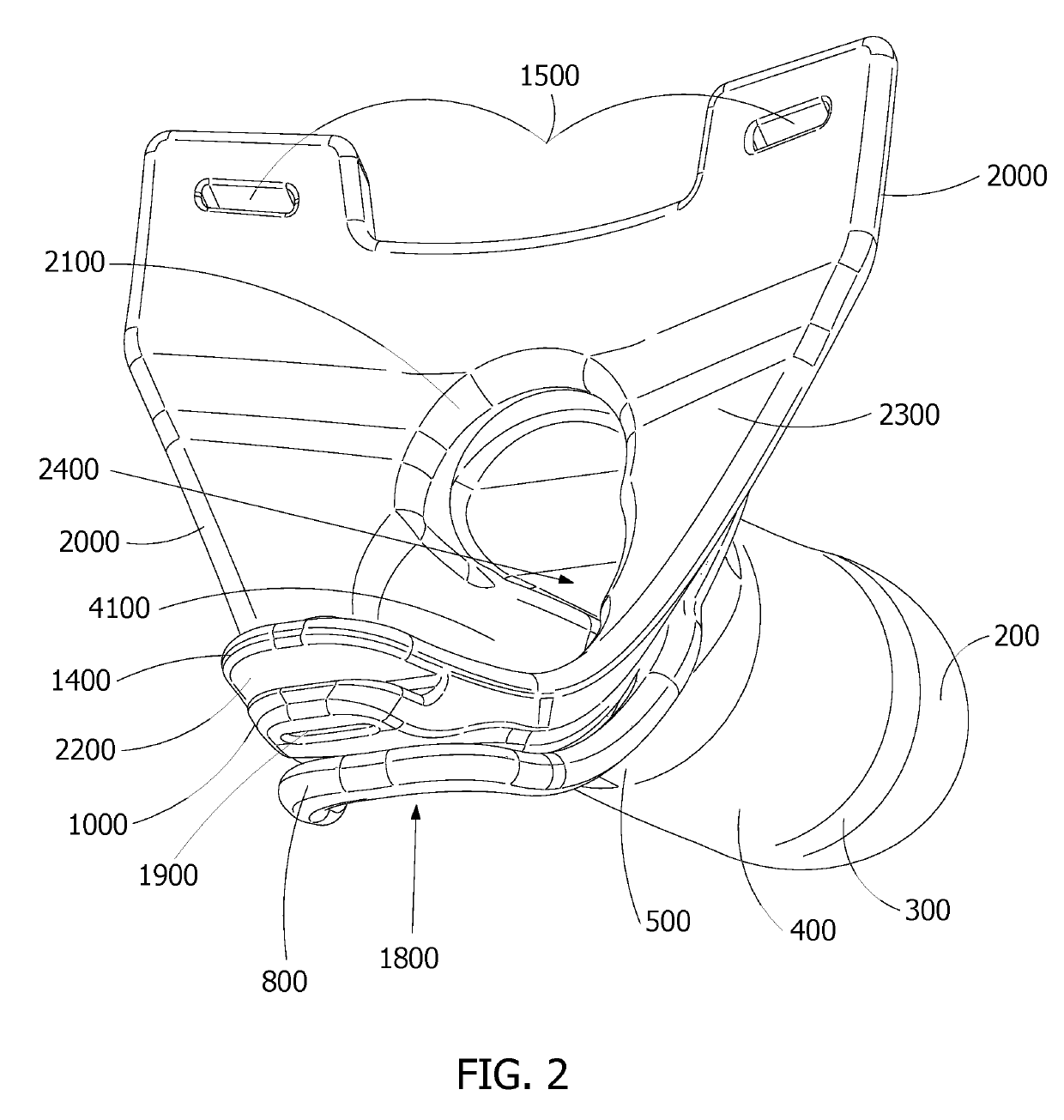

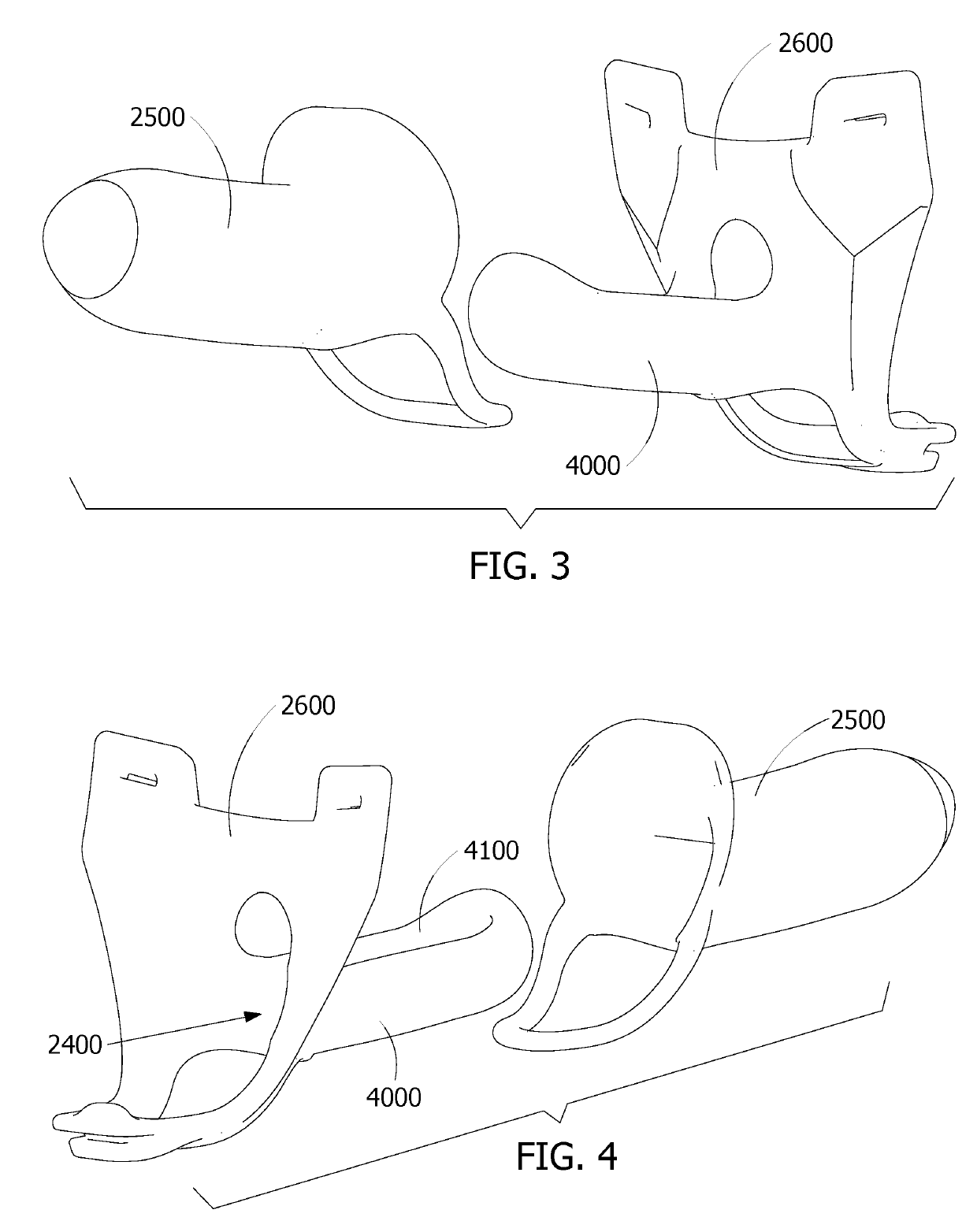

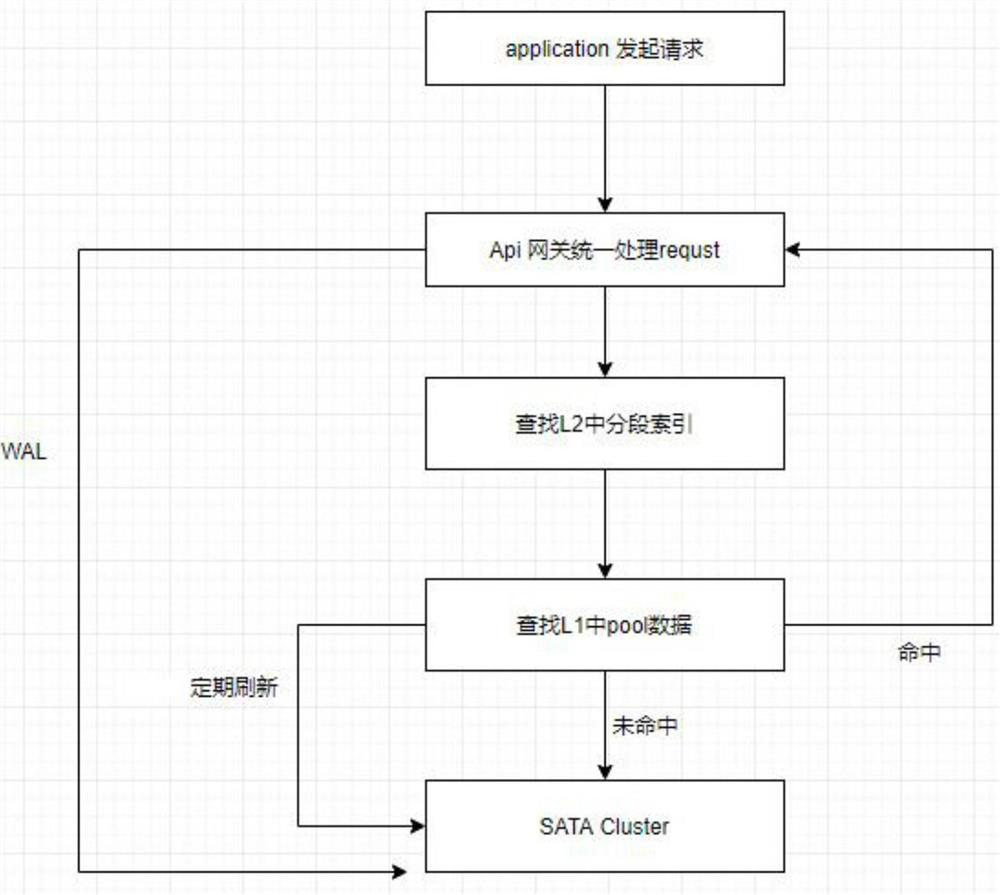

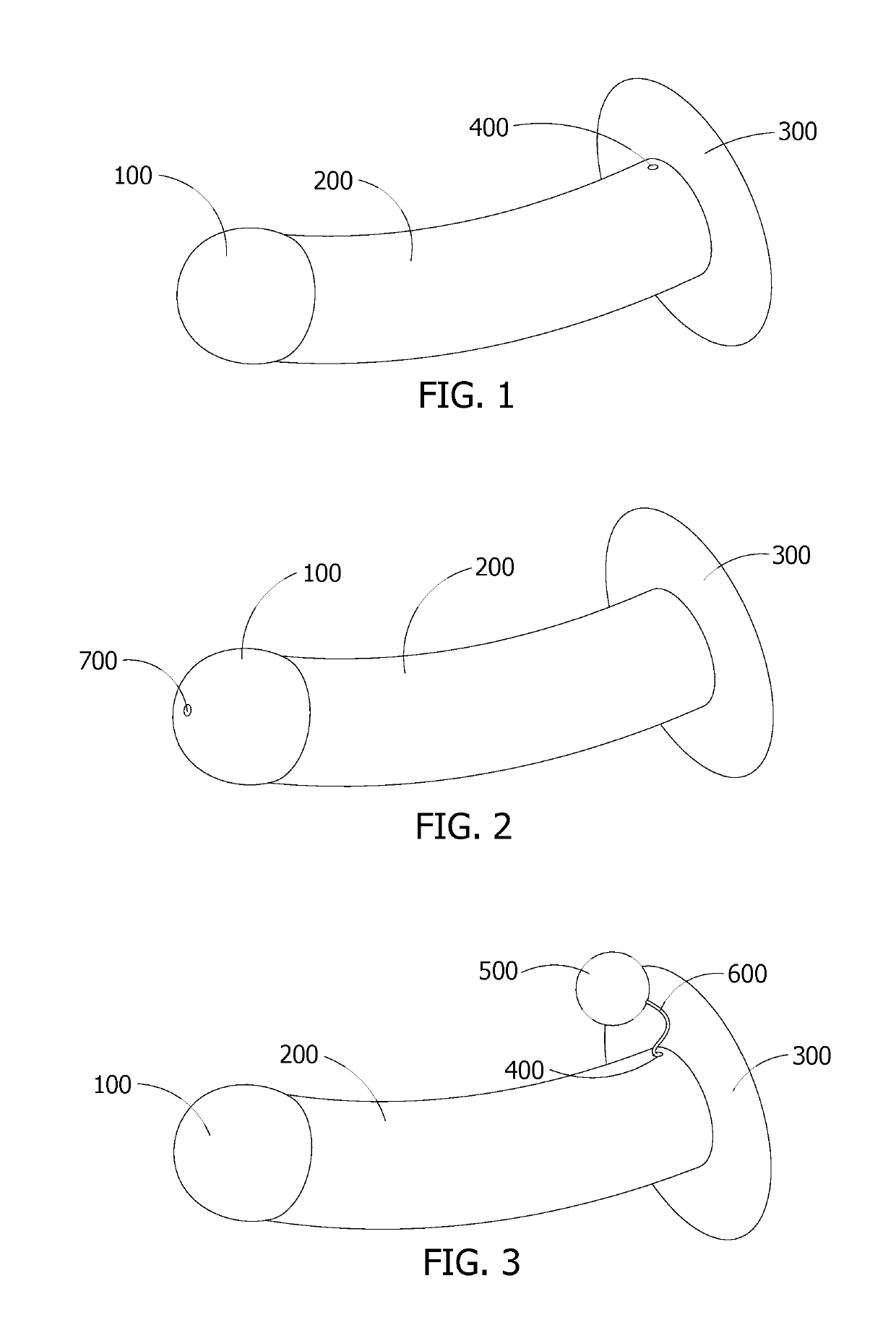

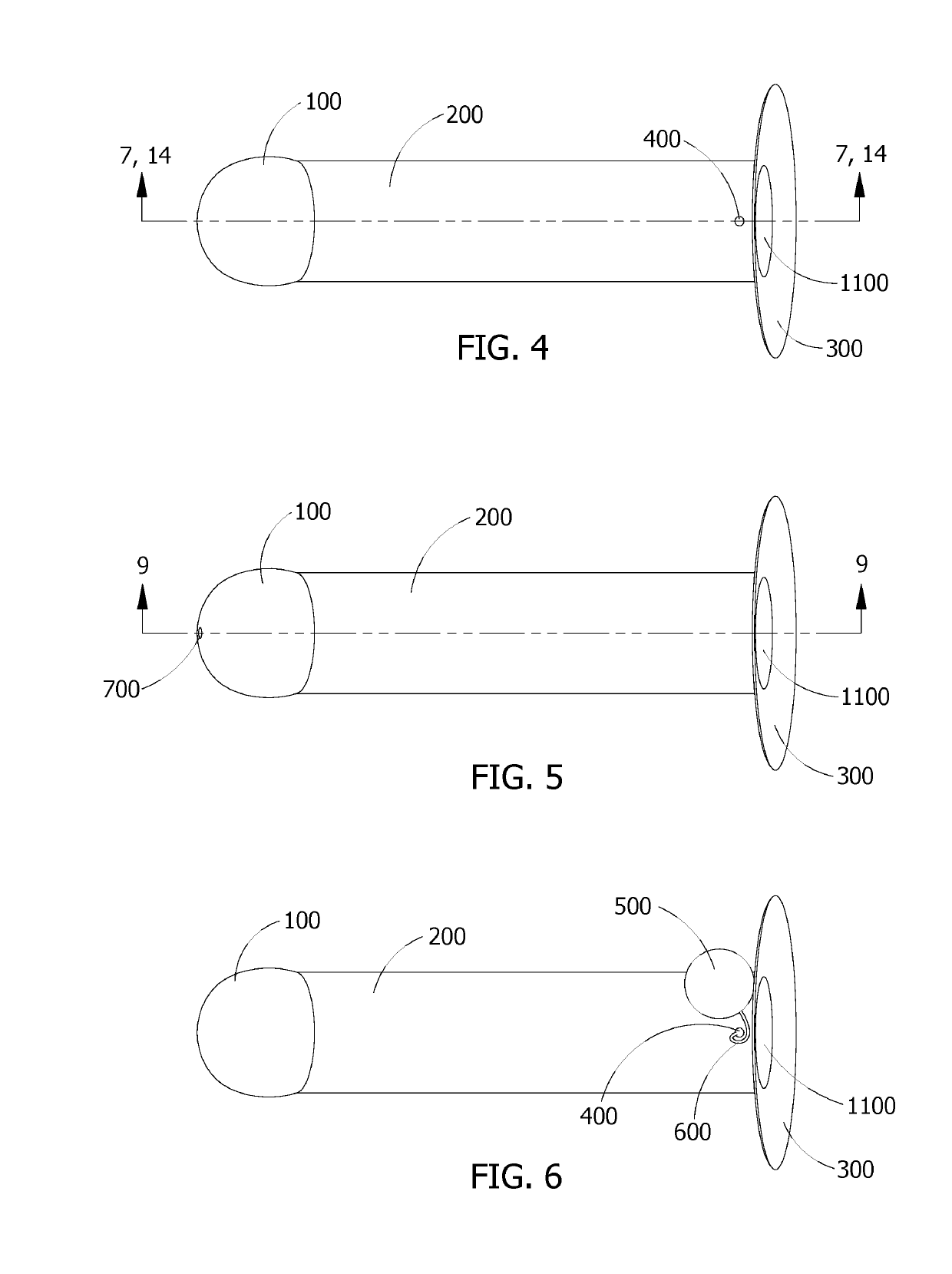

Sexual Aid Device, System and Encore Method of Intercourse

Active | US20190274868A1 | Improve performance experience | Easy to don | Penis support devices | Non-surgical orthopedic devices | Penile Tumescence | Massage

A sexual aid device, system and method comprising: a chassis with a distal end; a proximal end with at least one penile port; at least one penile support between the distal end and proximal end; at least one penile channel between the distal end and proximal end; wherein the chassis is configured to have an opening along the top toward the proximal end. When used with a sleeve and chassis cover, the system provides additional support so that the combined device substantially surrounds the penis and enables new methods of intimate sexual intercourse without an erection and without substantially obstructing blood flow to and from the penis. In an optional saddled embodiment, the inner and outer scrotal supports of the chassis and sheath help hold the device in place while providing a comfortable scrotal opening and enabling gentle massage of the scrotum and perineum through a perineum bump.

Owner:PERFECT FIT BRAND

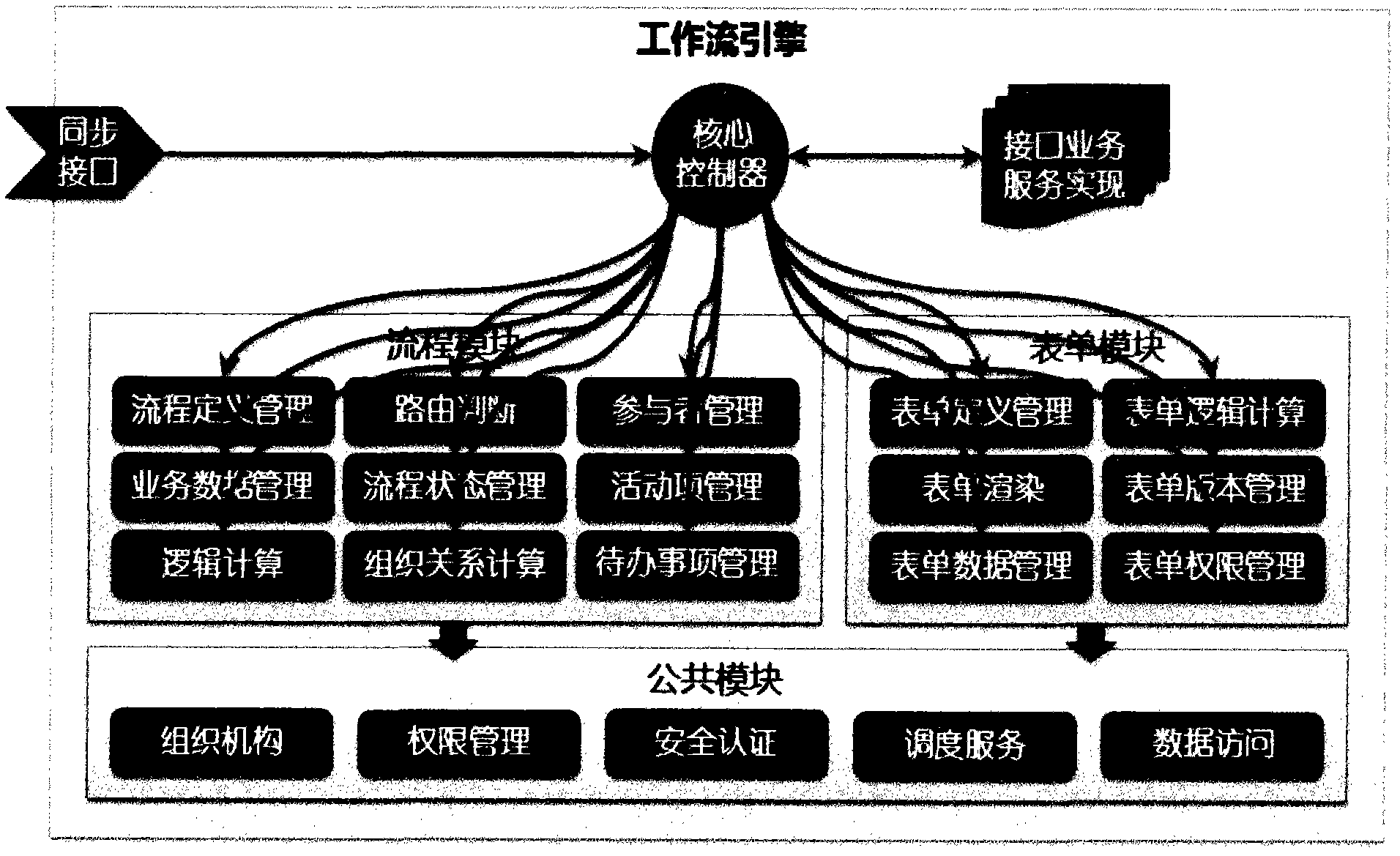

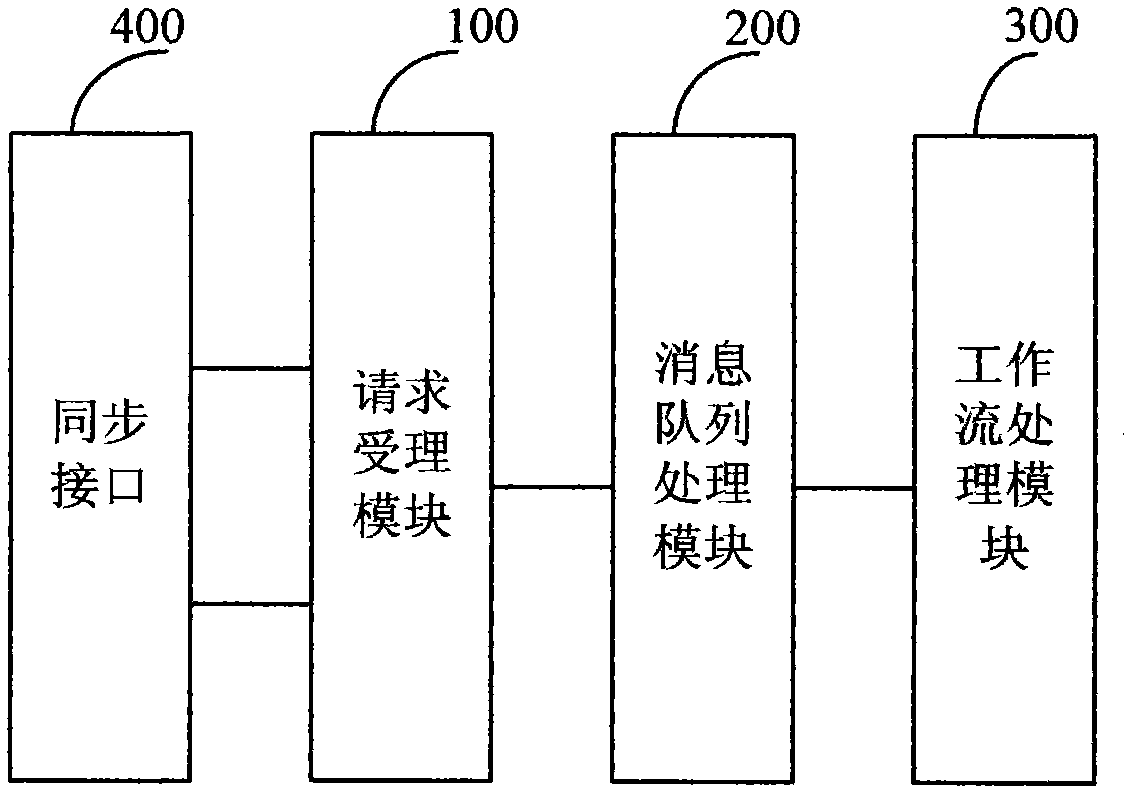

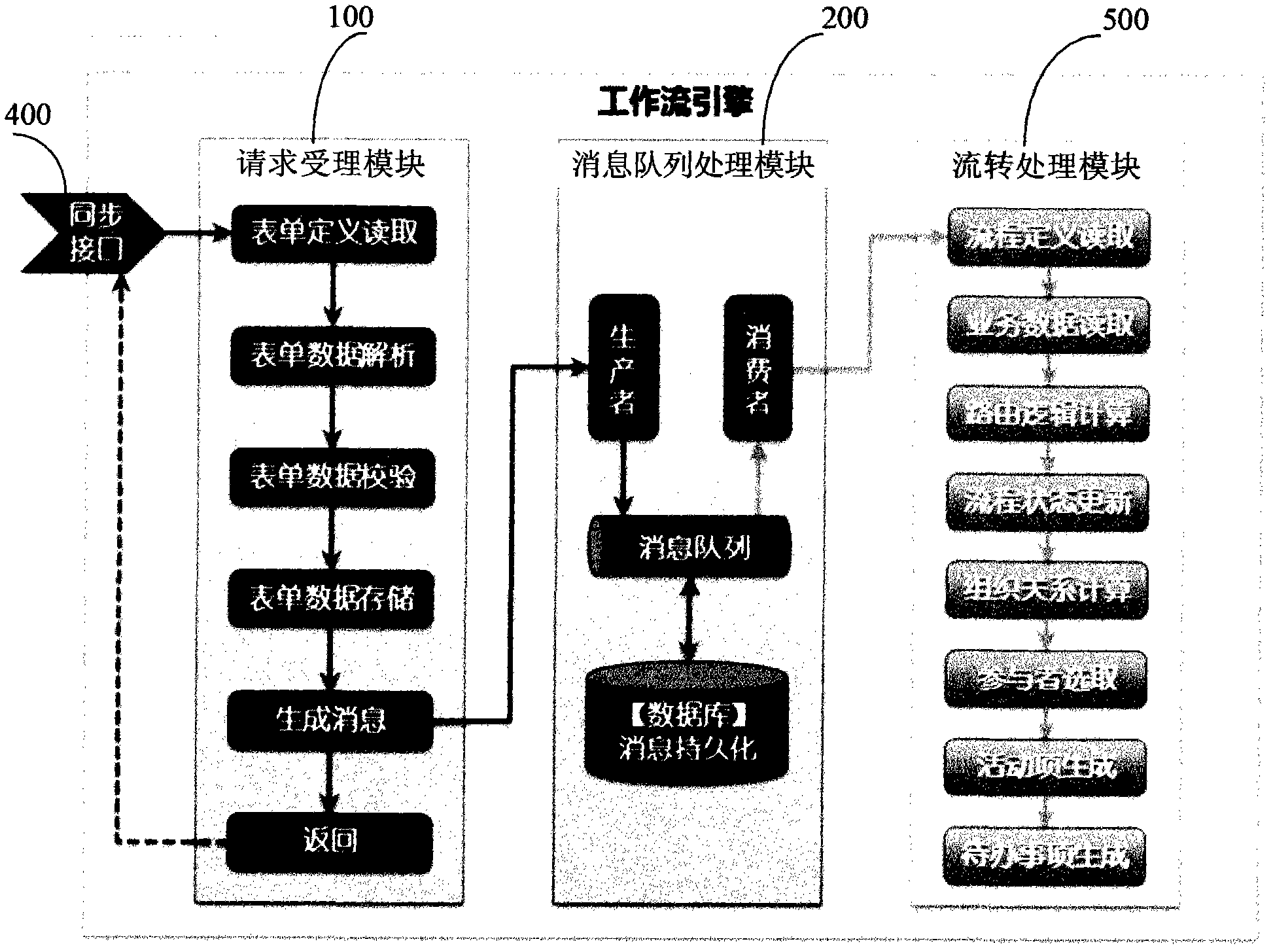

Workflow engine system and workflow processing method

Inactive | CN102419833A | Alleviate scheduling congestion | Improve performance experience | Resources | Software engineering | Workflow engine

The invention discloses a workflow engine system comprising a system interface, a request accepting module and a workflow processing module. The request accepting module is used for receiving a workflow request from the system interface, generating a corresponding workflow processing message and a request processing completion message according to the workflow request and returning the request processing completion message to a requesting user; and the workflow processing module is used for operating corresponding workflow processing procedures according to the workflow processing message generated by the request accepting module. The invention also provides a workflow processing method. According to the workflow engine system and the workflow processing method disclosed by the invention, the request processing completion message is returned to the requesting user in time, so that the scheduling congestion of the engine system can be relieved efficiently; and the user finally can obtain feedback from the system in time, so that performance experience of the system in use can be improved.

Owner:FORESEE TECH
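The key idea above is to acknowledge a workflow request as soon as it is queued and let a separate module do the actual processing. A minimal sketch using Python's standard `queue` and `threading` modules follows; `WorkflowEngine`, `accept`, and the single background worker are assumptions for illustration, not the patent's design.

```python
import queue
import threading
import uuid


class WorkflowEngine:
    """Hypothetical engine: a request-accepting step acknowledges at once,
    and a background worker runs the workflow processing later."""

    def __init__(self, handler):
        self._handler = handler          # callable that performs the actual workflow
        self._messages = queue.Queue()   # workflow processing messages
        self.results = {}                # request_id -> handler result or exception
        threading.Thread(target=self._run, daemon=True).start()

    def accept(self, request):
        # Enqueue a processing message and return the completion message immediately.
        request_id = str(uuid.uuid4())
        self._messages.put((request_id, request))
        return {"request_id": request_id, "status": "accepted"}

    def _run(self):
        while True:
            request_id, request = self._messages.get()
            try:
                self.results[request_id] = self._handler(request)
            except Exception as exc:  # record failures instead of killing the worker
                self.results[request_id] = exc
            finally:
                self._messages.task_done()

    def wait_until_idle(self):
        # Block until every queued message has been processed (useful in tests).
        self._messages.join()


engine = WorkflowEngine(handler=lambda req: f"approved {req}")
ack = engine.accept("purchase-order-17")
engine.wait_until_idle()
print(engine.results[ack["request_id"]])  # approved purchase-order-17
```

`accept` returns before the handler runs, which is what keeps callers from blocking on a congested engine; the result appears in `results` once the worker has processed the message.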

Herbal compositions including cannabidiol to enhance the sexual experience

Inactive | US20170246120A9 | Improve performance experience | Hydroxy compound active ingredients | Emulsion delivery | Flavor | Human body

The present invention provides herbal compositions comprising cannabidiol (CBD) for enhancing the sexual experience. In accordance with the invention, a physiologically acceptable composition including an emulsion of cannabidiol and a carrier substance is delivered to the skin, one or more membranes or other surfaces of the human body where absorption of the herbal composition can occur. In some embodiments of the invention the delivery mechanism includes an apparatus, including but not limited to dissolving strips, vaporizers, pump sprays, single-use foil tubes, and condoms. The delivery occurs prior to or during sexual activity. Some embodiments of the invention provide carrier substances that improve the effectiveness of the CBD in enhancing the sexual experience, and that may improve the experience in other ways, such as by adding flavors or fragrances.

Owner:STEPOVICH MATTHEW J

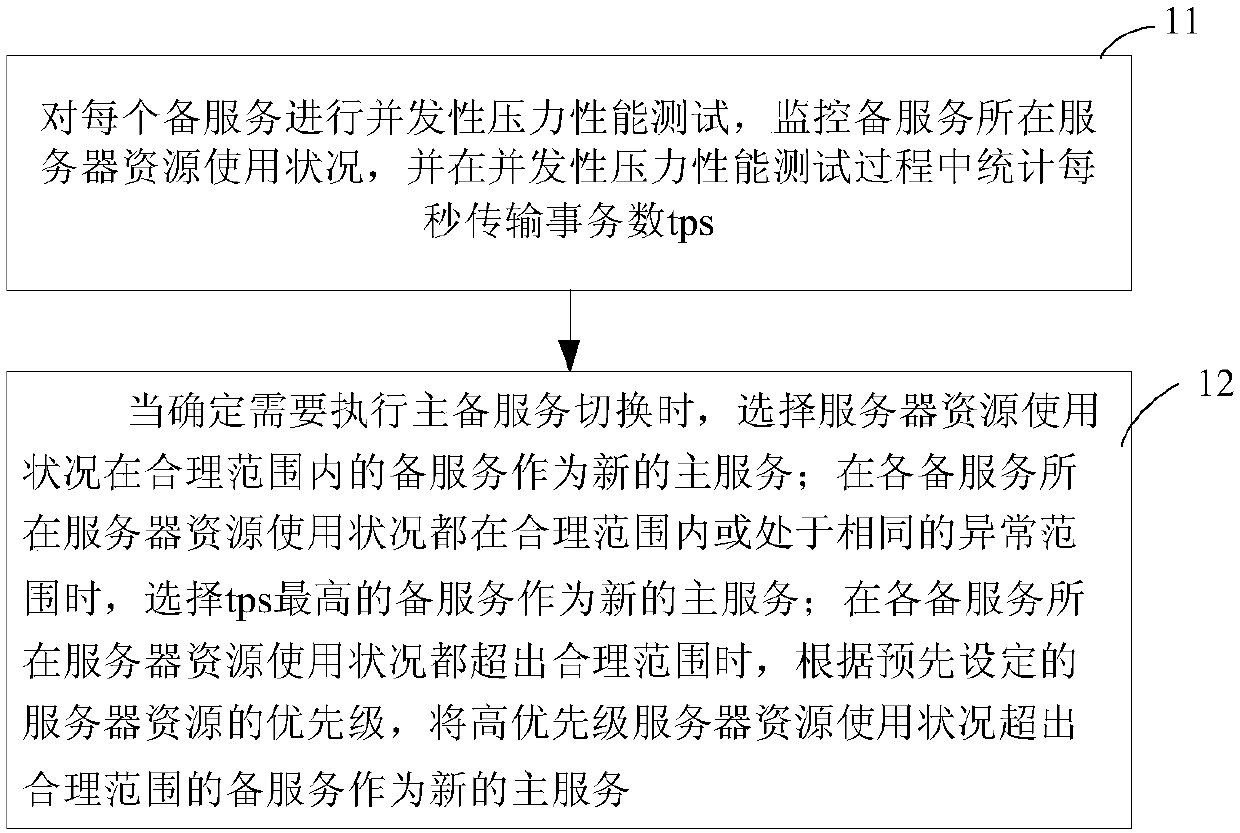

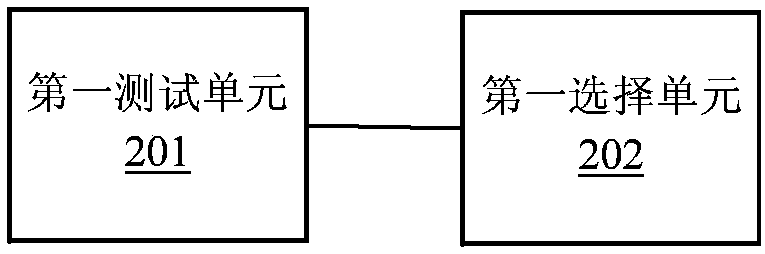

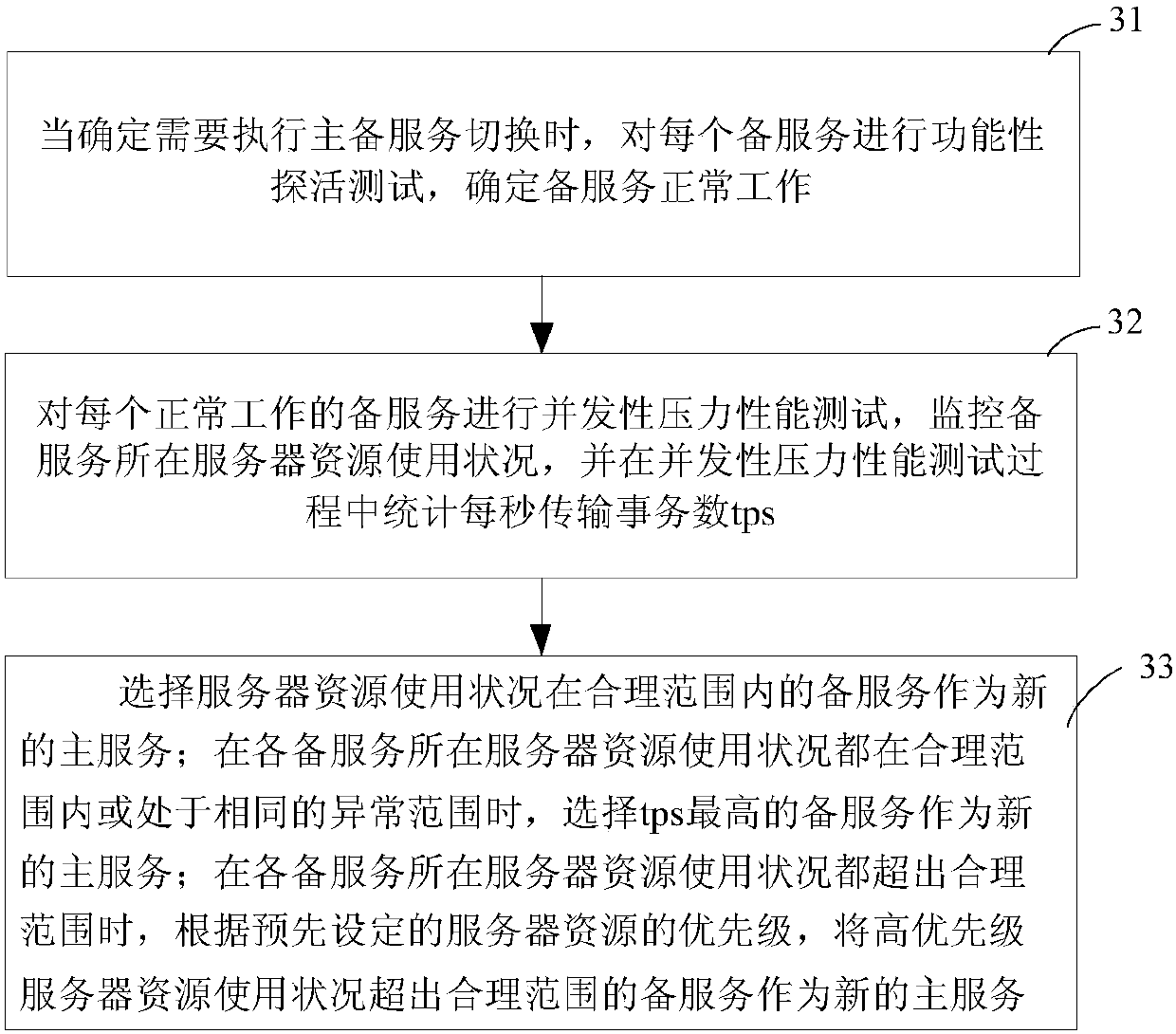

Primary and standby services switching method and device

Active | CN107846294A | Improve performance experience | Data switching networks | Service condition | Computer science

The invention discloses a primary and standby services switching method and device. The method comprises the following steps: performing a concurrent load (pressure) performance test on each standby service, monitoring the usage of the server resources on which each standby service runs, and counting its transactions per second (TPS) during the test; when it is determined that a primary/standby switchover is needed, selecting a standby service whose server resource usage is within a reasonable range as the new primary service; when several standby services are all within the reasonable range, or all within the same abnormal range, selecting the one with the highest TPS as the new primary service; and when the server resource usage of the standby services exceeds the reasonable range, selecting a high-priority standby service as the new primary service according to the preset priority of the server resources. The method can automatically select a better-performing standby service as the primary service.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
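The selection rules above can be approximated as follows. This is a simplified, hypothetical reading: resource usage is modeled as a single utilization fraction, a lower `priority` number means higher priority, the 0.7 cut-off is arbitrary, and the abstract's separate handling of standbys that share the same abnormal range is folded into the priority fallback.

```python
from dataclasses import dataclass


@dataclass
class Standby:
    name: str
    resource_usage: float  # assumed: peak utilization fraction observed during the load test
    tps: float             # transactions per second measured in the concurrent load test
    priority: int          # assumed: preset priority, lower number = higher priority


def select_new_primary(standbys: list[Standby], reasonable_max: float = 0.7) -> Standby:
    """Simplified, hypothetical reading of the selection rules."""
    if not standbys:
        raise ValueError("no standby services available")
    healthy = [s for s in standbys if s.resource_usage <= reasonable_max]
    if healthy:
        # Among standbys whose resources are within range, prefer the highest TPS.
        return max(healthy, key=lambda s: s.tps)
    # Every standby exceeds the range: fall back to the preset priority.
    return min(standbys, key=lambda s: s.priority)


pool = [Standby("a", 0.5, 100, 2), Standby("b", 0.6, 200, 1), Standby("c", 0.9, 500, 0)]
print(select_new_primary(pool).name)  # b
```

Standby `c` has the highest TPS but is excluded because its resources are already over the assumed limit, so `b` wins among the healthy candidates.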

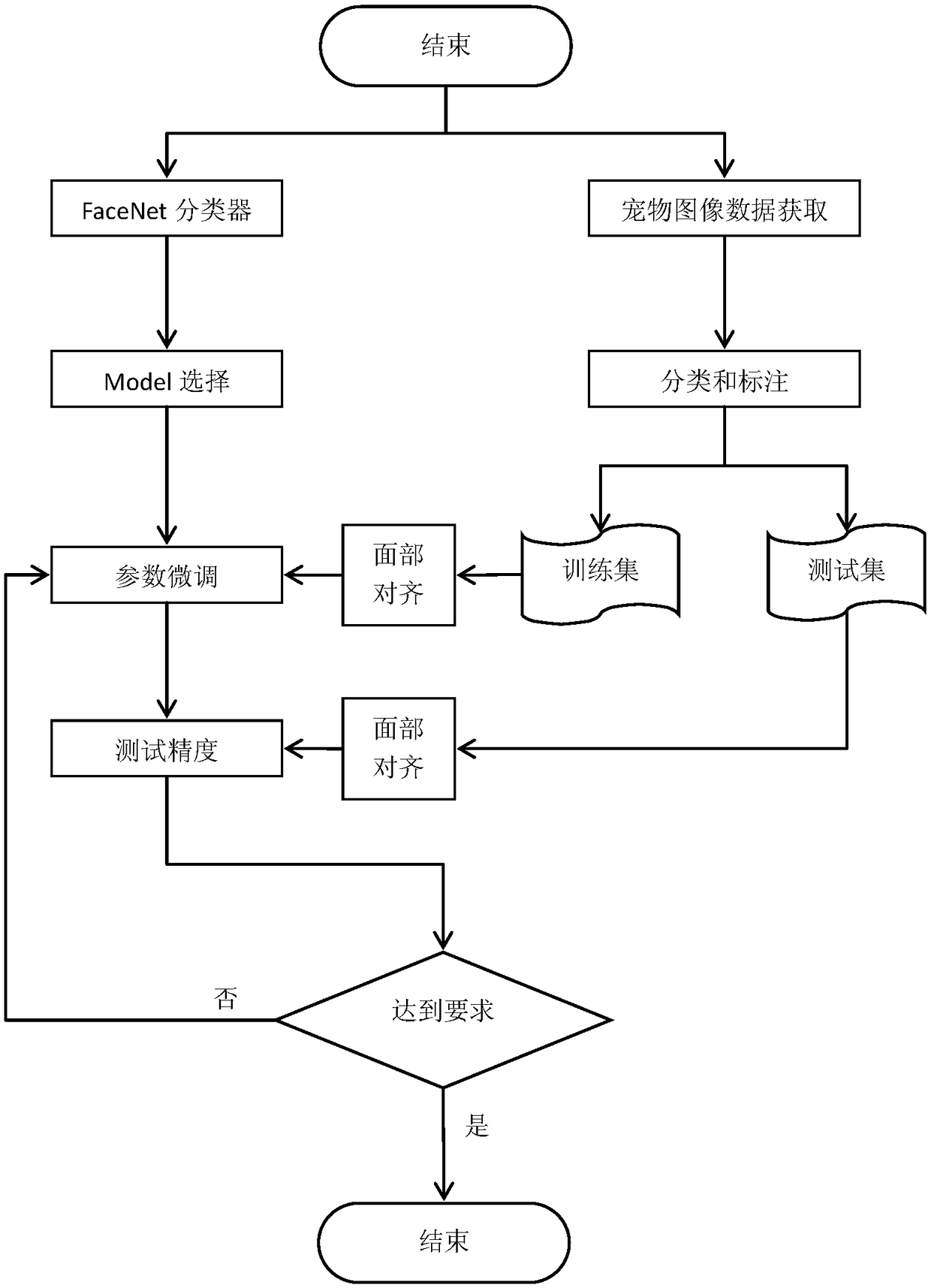

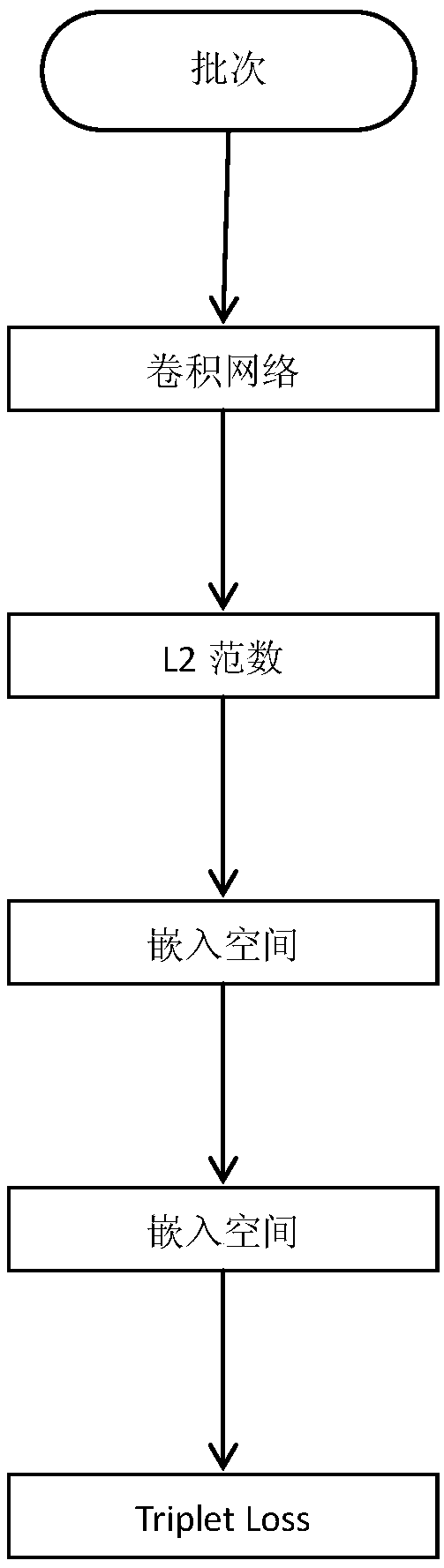

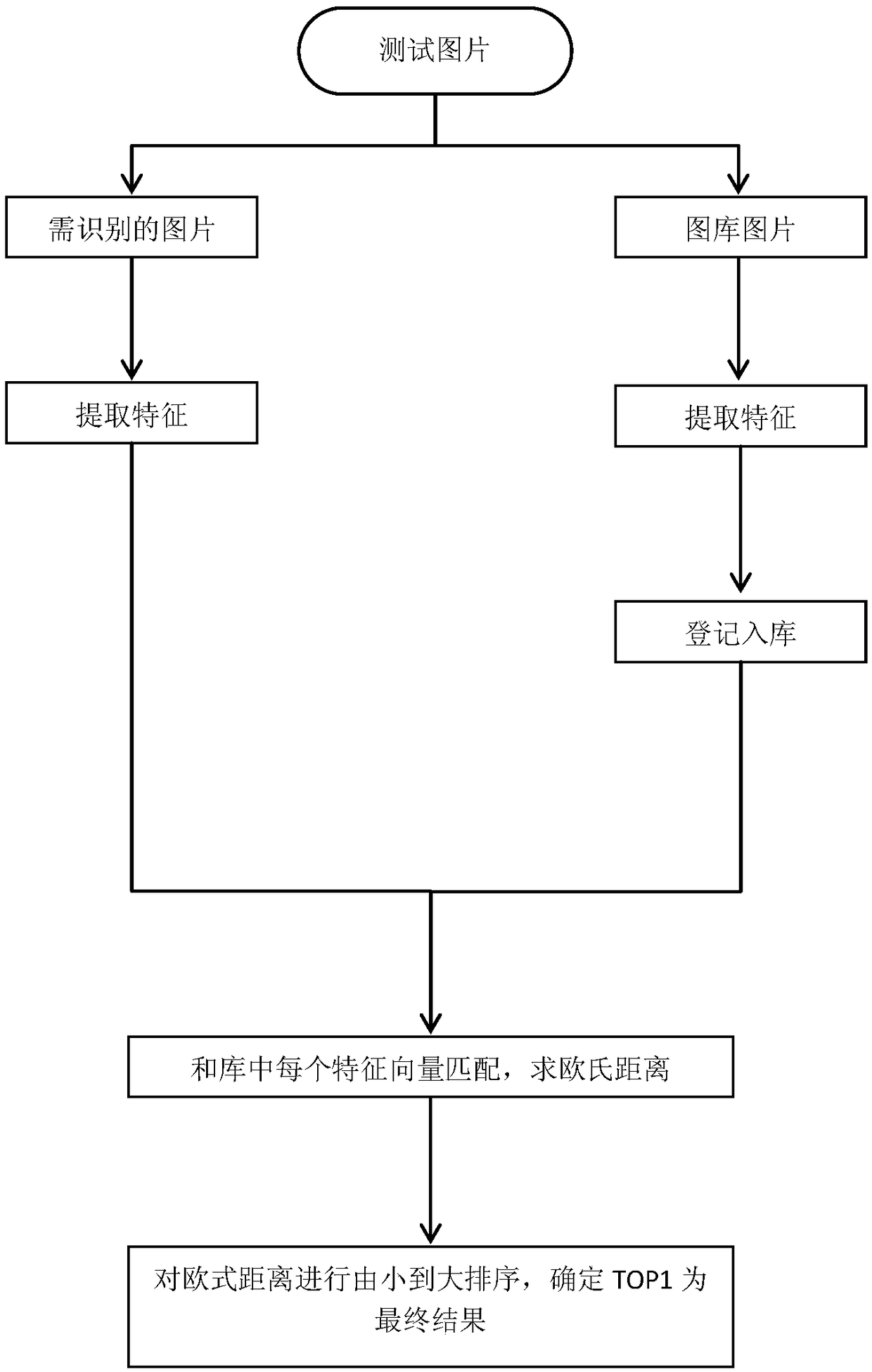

Pet face recognition method

Inactive | CN108875564A | Improve recognition accuracy | Improve performance experience | Biometric pattern recognition | Other apparatus | Pattern recognition | Imaging data

The invention discloses a pet face recognition method. The method comprises the following steps: S1, initializing a pet face classifier, including classifier structure initialization and classifier weight initialization; S2, acquiring image data, collected through a web crawler and a real-time camera; S3, classifying and labeling the data; S4, performing face alignment on the image data; S5, iteratively updating the classifier; and S6, judging whether the classifier meets a precision requirement: if it does, saving the current parameters and ending the program; if it does not, continuing the training. The method is suitable for identifying individual animals within a batch of pets and has high precision.

Owner:ZHEJIANG UNIV OF TECH
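Steps S5 and S6 (update the classifier, check whether it meets the precision requirement, otherwise keep training) form a simple loop. The sketch keeps the model abstract: `update` and `evaluate` are hypothetical callables standing in for one training pass and a validation-accuracy measurement, and `max_epochs` is an added safeguard that the abstract does not mention.

```python
def train_until_accurate(update, evaluate, target_accuracy: float,
                         max_epochs: int = 100) -> tuple[int, float]:
    """Repeat S5 (update) and S6 (check precision) until the target is met.

    `update` and `evaluate` are hypothetical callables standing in for one
    training pass and a validation-accuracy measurement."""
    for epoch in range(1, max_epochs + 1):
        update()                    # S5: iteratively update the classifier
        accuracy = evaluate()       # S6: measure precision on held-out data
        if accuracy >= target_accuracy:
            return epoch, accuracy  # requirement met; the caller can now save the parameters
    raise RuntimeError(f"accuracy target {target_accuracy} not reached in {max_epochs} epochs")


# Toy stand-in whose accuracy rises by 0.25 per pass.
state = {"accuracy": 0.0}
epoch, acc = train_until_accurate(
    update=lambda: state.update(accuracy=state["accuracy"] + 0.25),
    evaluate=lambda: state["accuracy"],
    target_accuracy=0.9,
)
print(epoch, acc)  # 4 1.0
```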

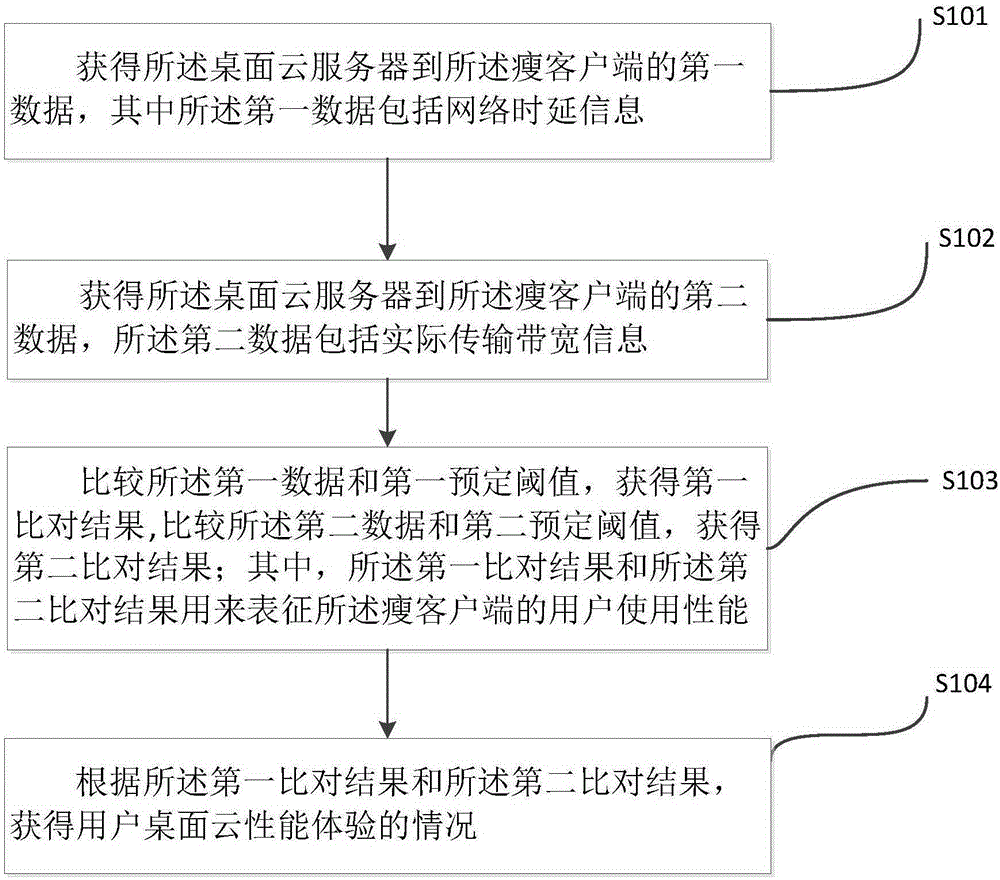

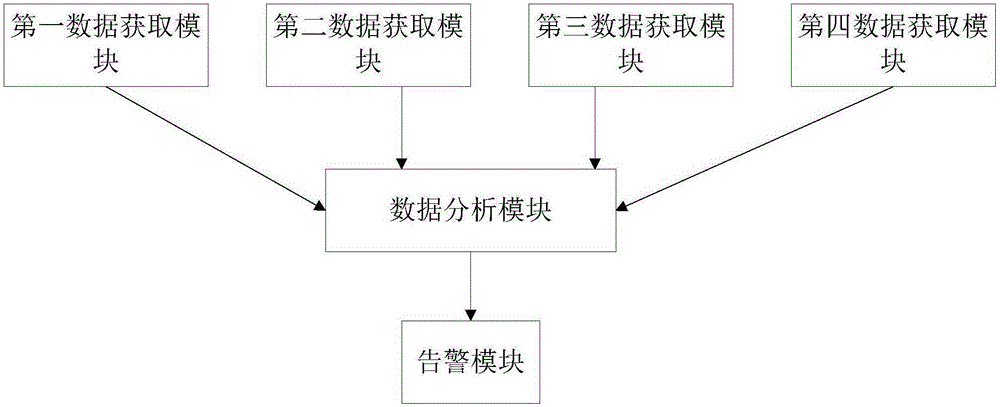

Method and system for monitoring desktop cloud performance experience

Active | CN105245591A | Improve performance experience | Easy access | Data switching networks | Data acquisition | Computer terminal

The invention discloses a method and a system for monitoring desktop cloud performance experience, belonging to the field of desktop cloud technology. The method comprises: acquiring first data from a desktop cloud server to a thin terminal, and comparing the first data with a first predefined threshold to obtain a first comparison result; acquiring second data from the desktop cloud server to the thin terminal, and comparing the second data with a second predefined threshold to obtain a second comparison result; and acquiring the condition of client desktop cloud performance experience according to the first comparison result and the second comparison result. The system comprises a first data acquisition module, a second data acquisition module and a data analysis module. By adopting the method and the system for monitoring the desktop cloud performance experience, the actual desktop cloud performance experience condition of a user can be quickly and accurately acquired, and corresponding alarm information is obtained when the user desktop cloud performance experience becomes worse.

Owner: Wuhan Iron and Steel Group Co., Ltd. (武钢集团有限公司)
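The two threshold comparisons above can be combined into an experience rating as sketched below. The abstract does not name the two measurements; this sketch assumes both are "lower is better" metrics such as latency in milliseconds or packet loss in percent, and the three-level rating and alarm rule are hypothetical.

```python
def assess_experience(first_value: float, first_threshold: float,
                      second_value: float, second_threshold: float) -> tuple[str, bool]:
    """Combine two threshold comparisons into a rating plus an alarm flag.

    Assumes both measurements are 'lower is better' (for example latency in ms
    or packet loss in %); the three-level rating is a hypothetical choice."""
    first_ok = first_value <= first_threshold     # first comparison result
    second_ok = second_value <= second_threshold  # second comparison result
    if first_ok and second_ok:
        rating = "good"
    elif first_ok or second_ok:
        rating = "degraded"
    else:
        rating = "poor"
    # Raise an alarm whenever the experience is worse than "good".
    return rating, rating != "good"


print(assess_experience(35.0, 50.0, 0.4, 1.0))  # ('good', False)
```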

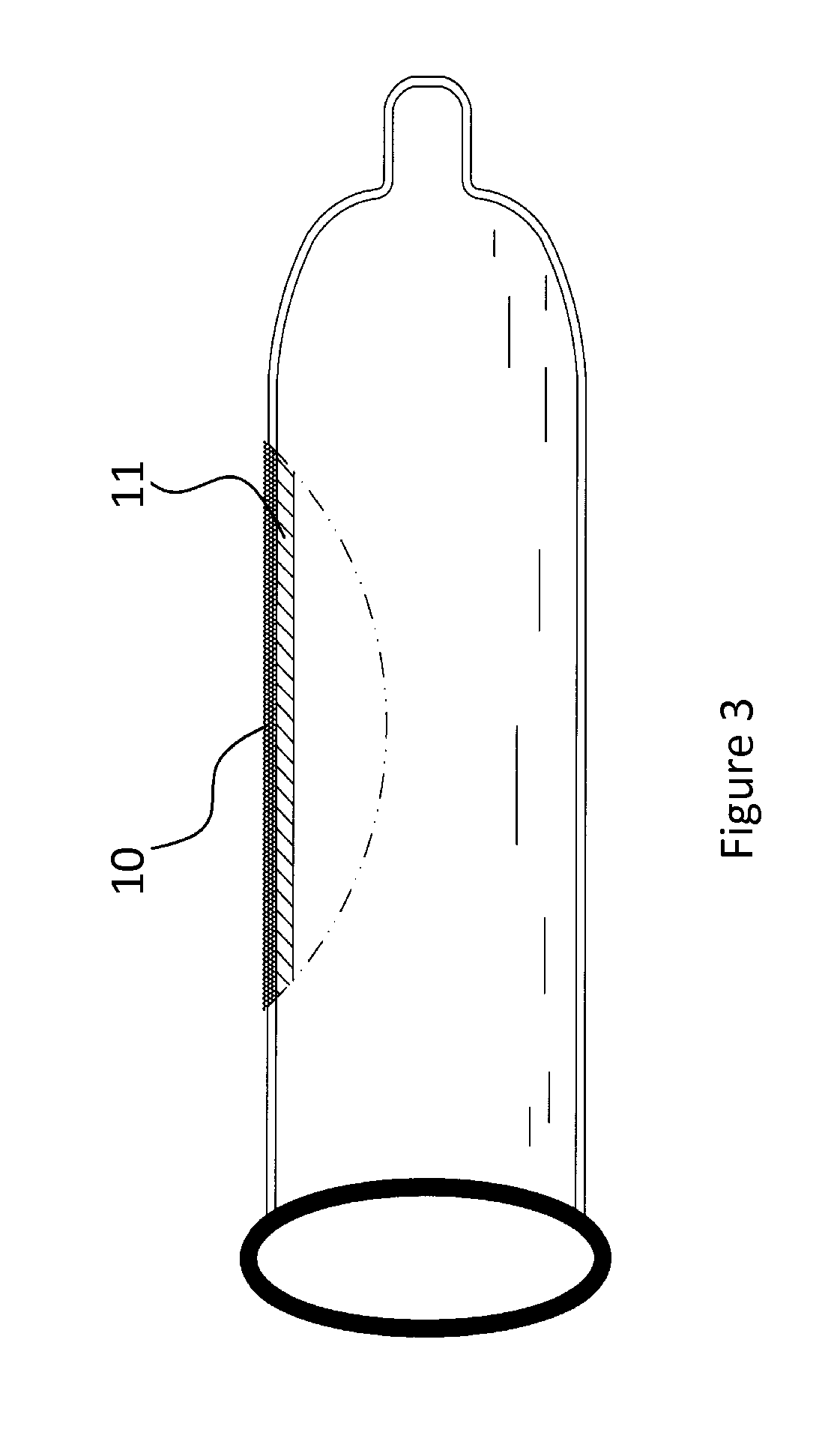

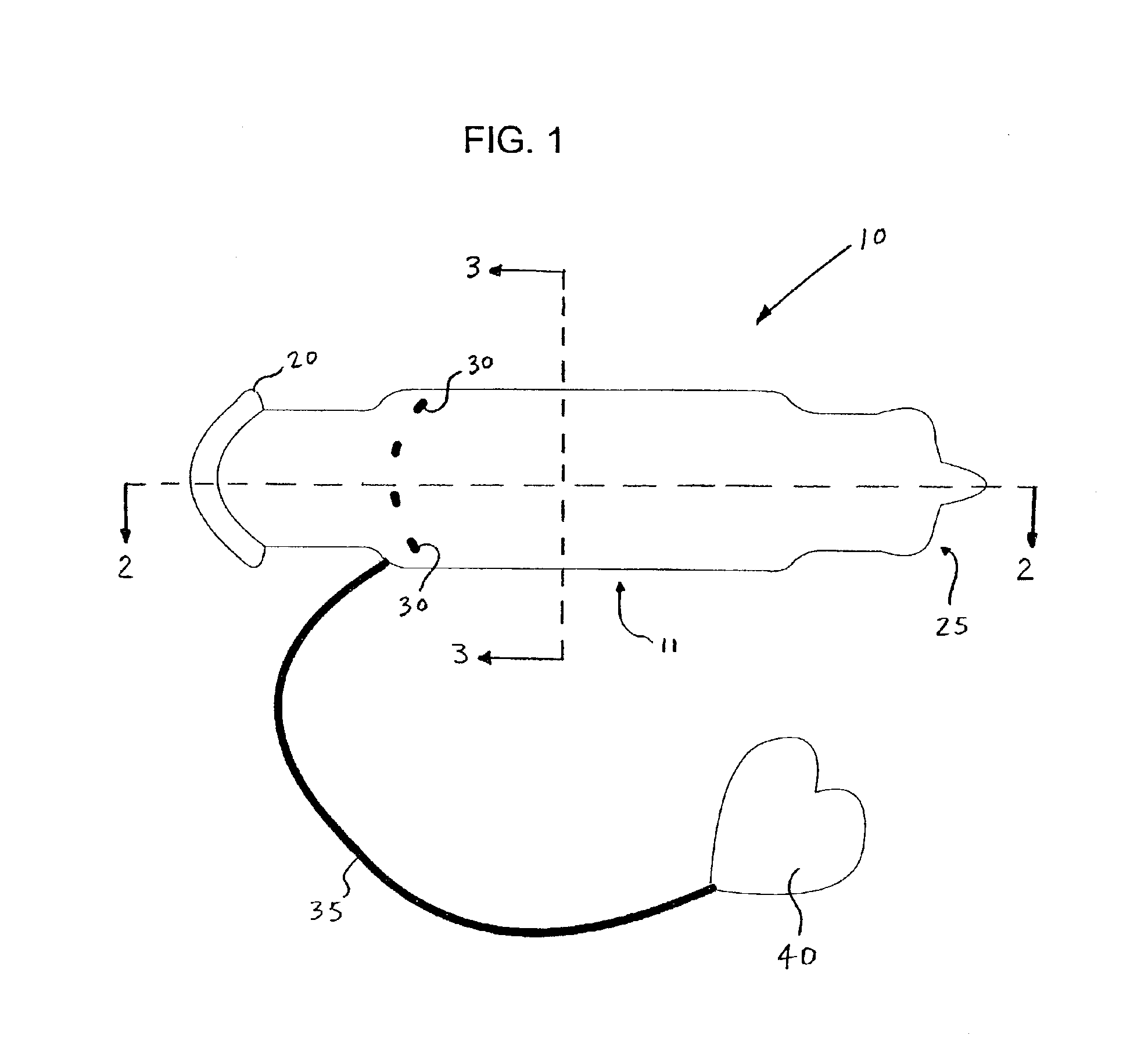

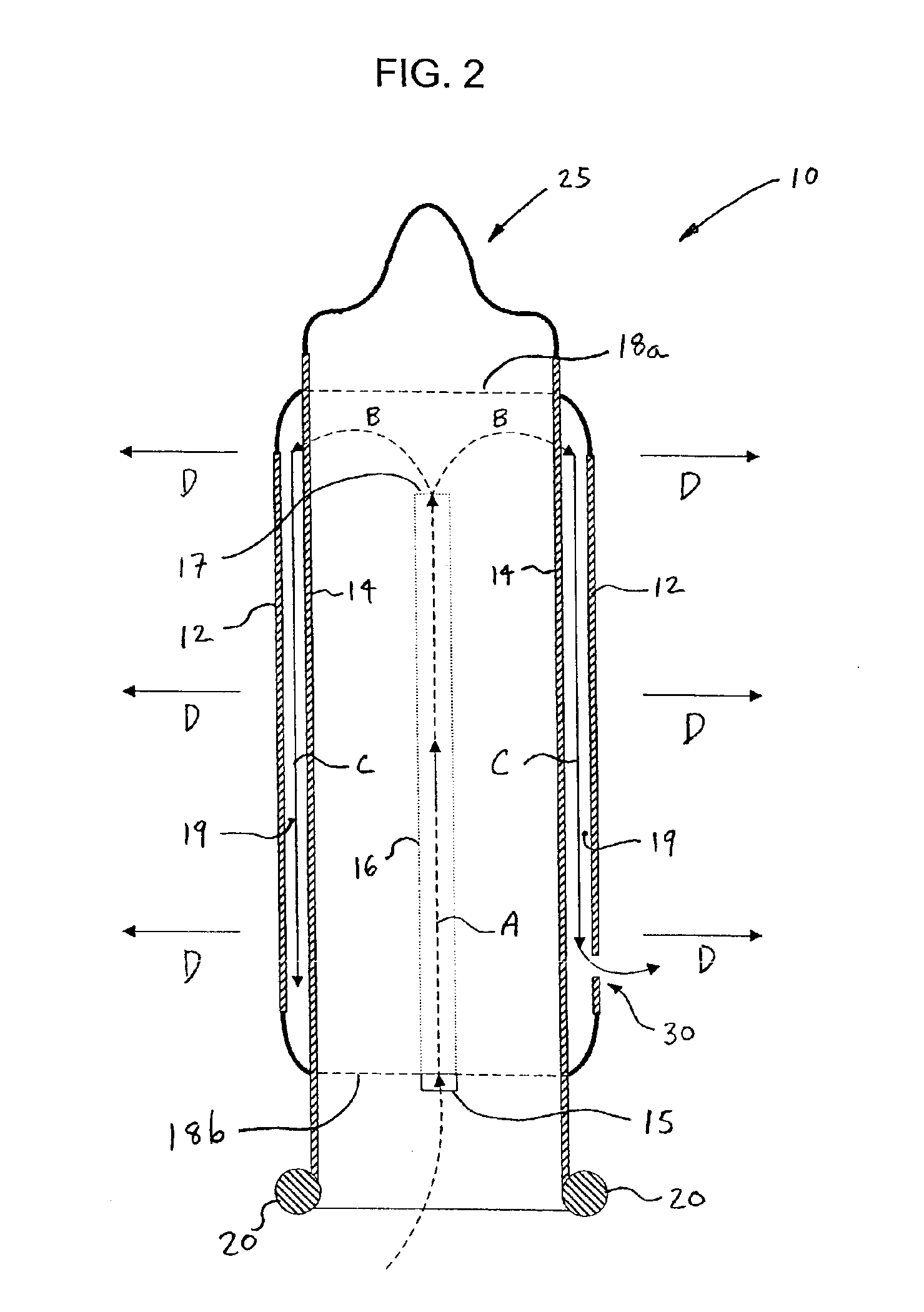

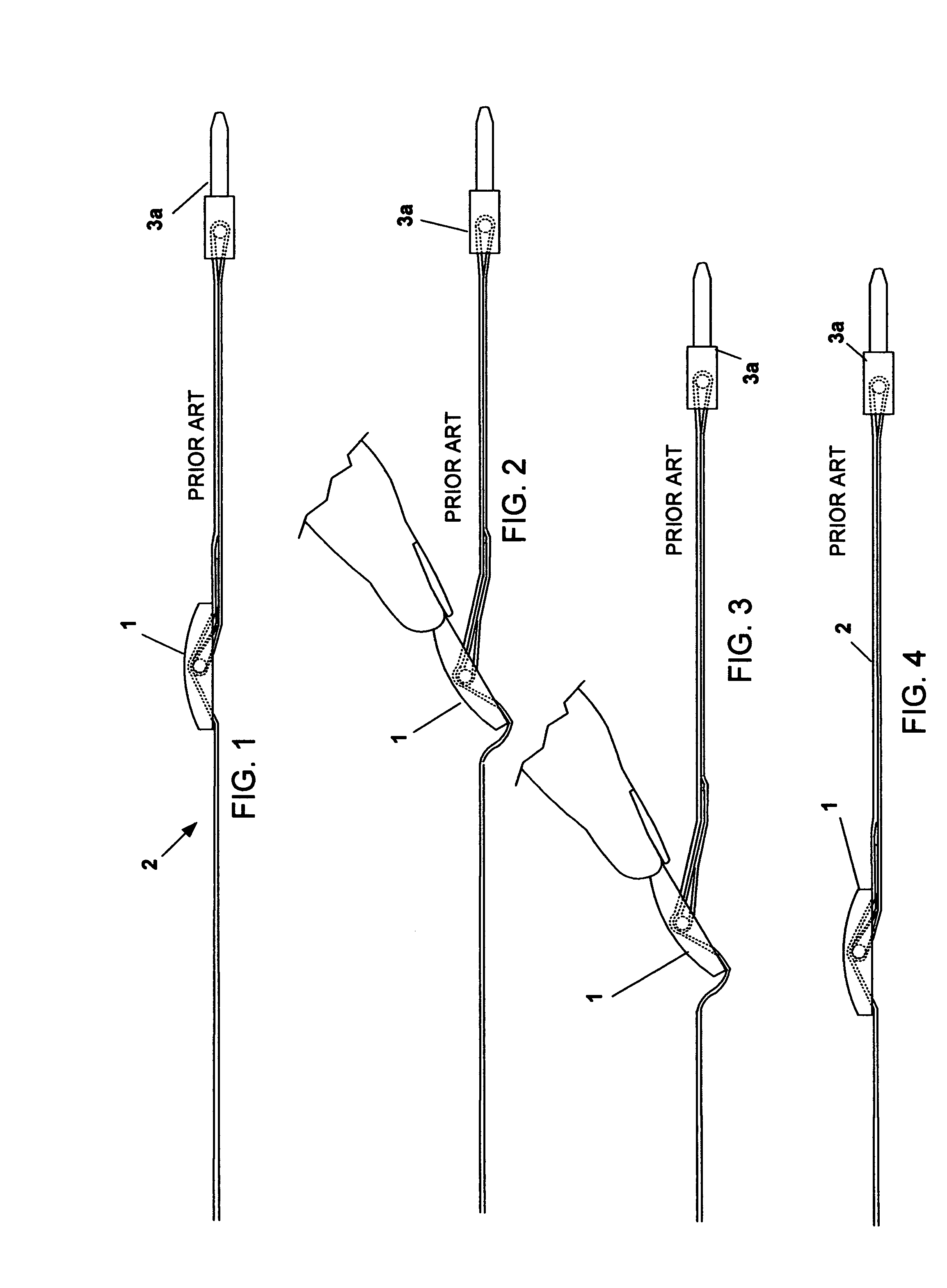

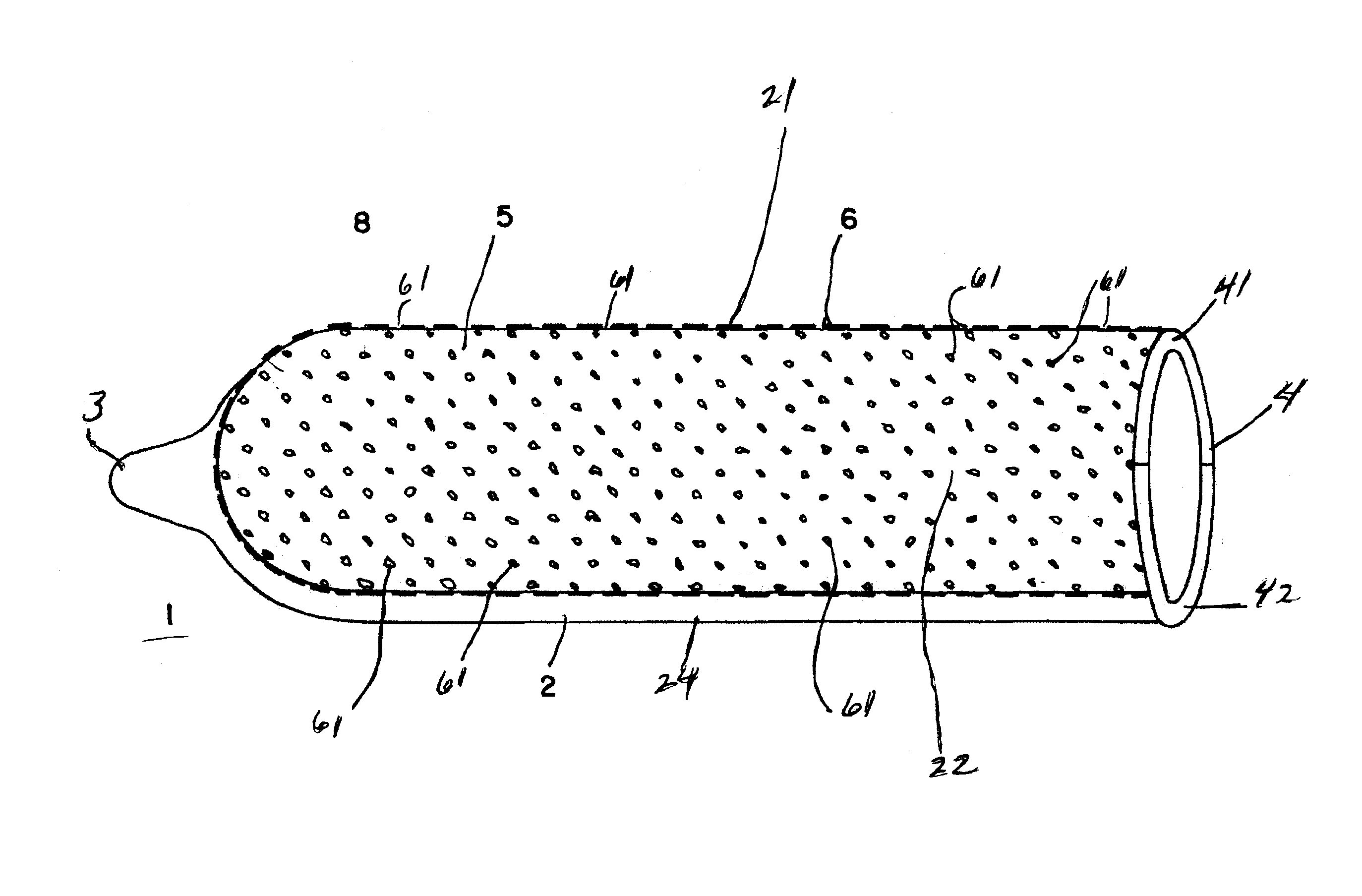

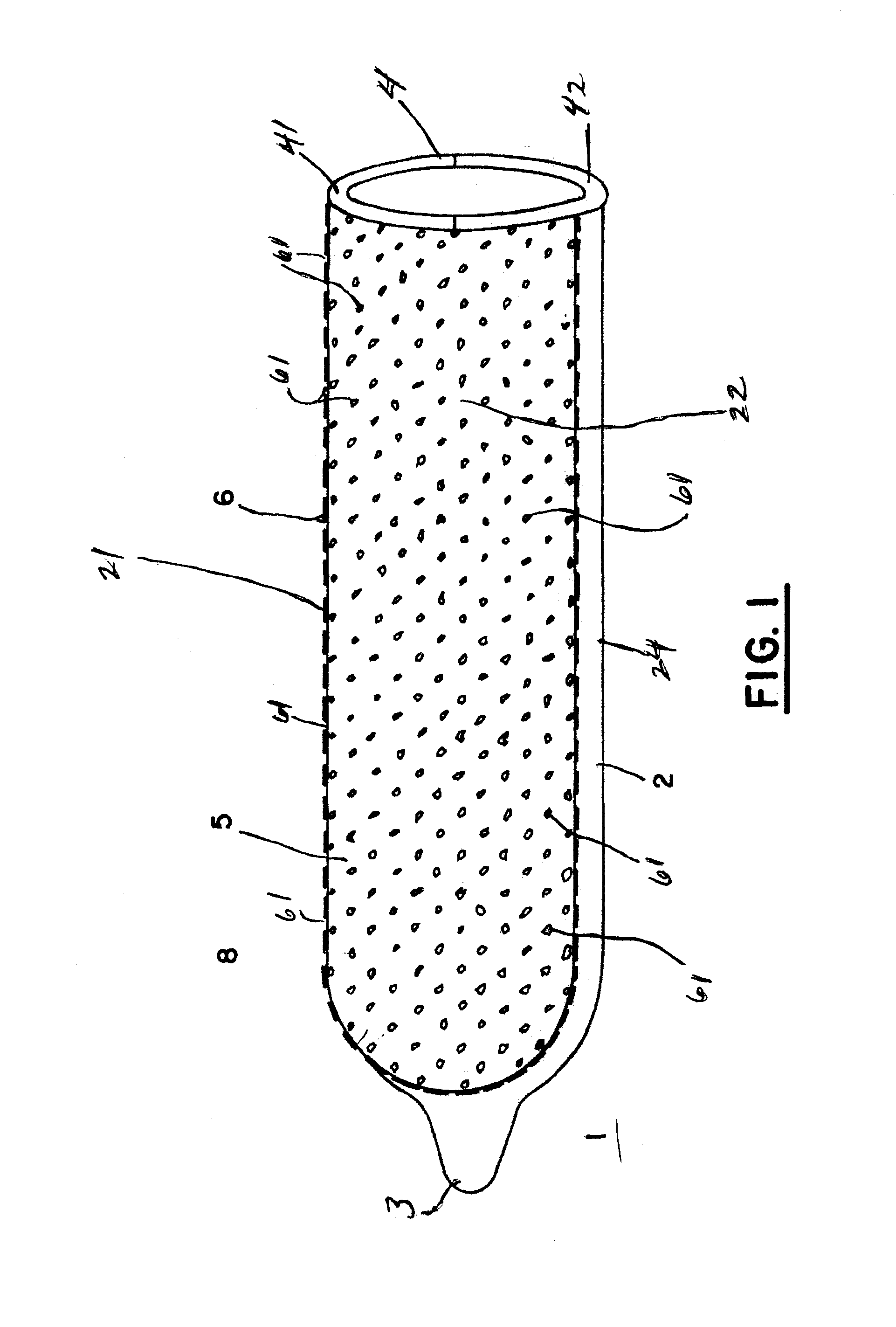

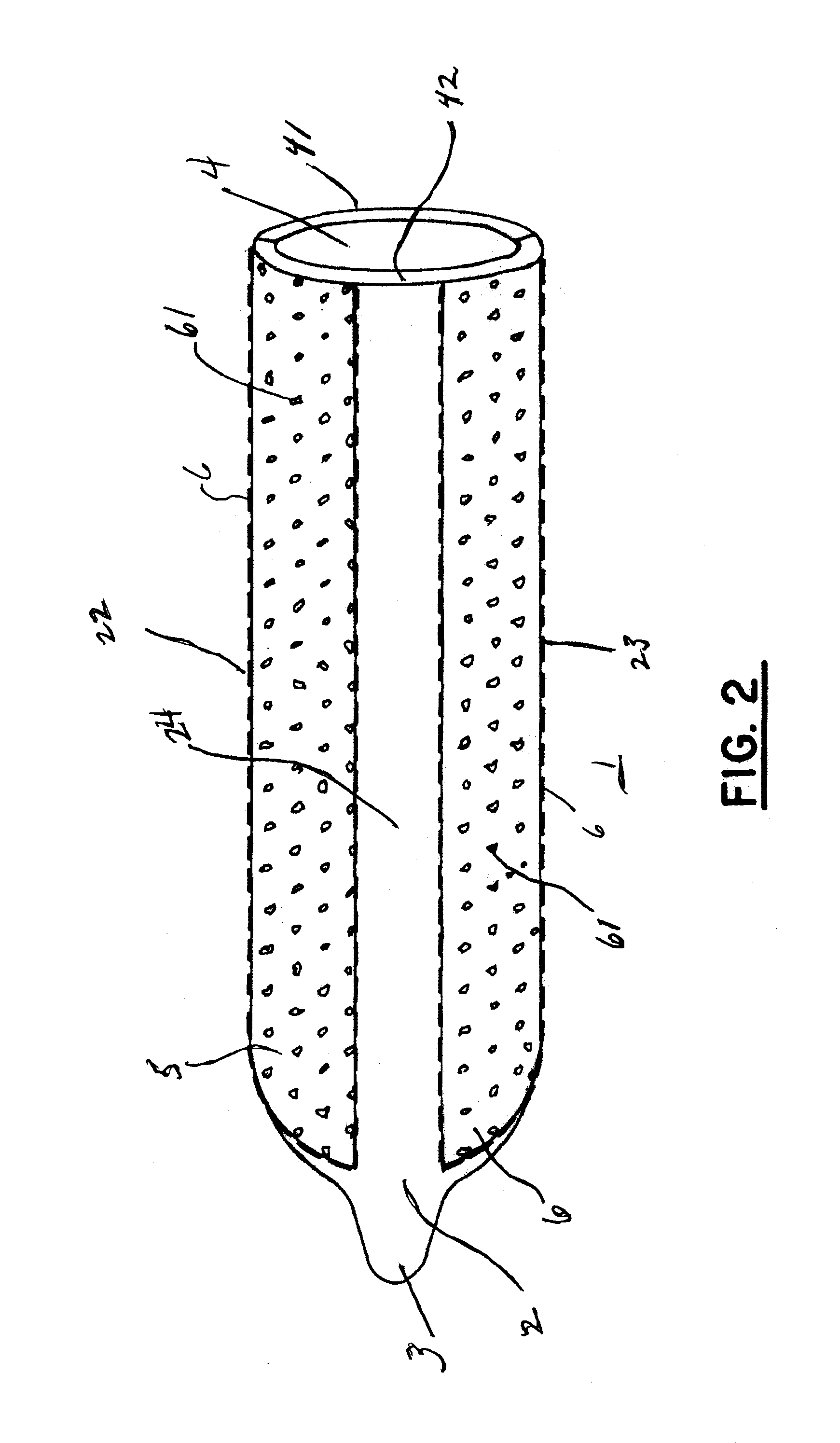

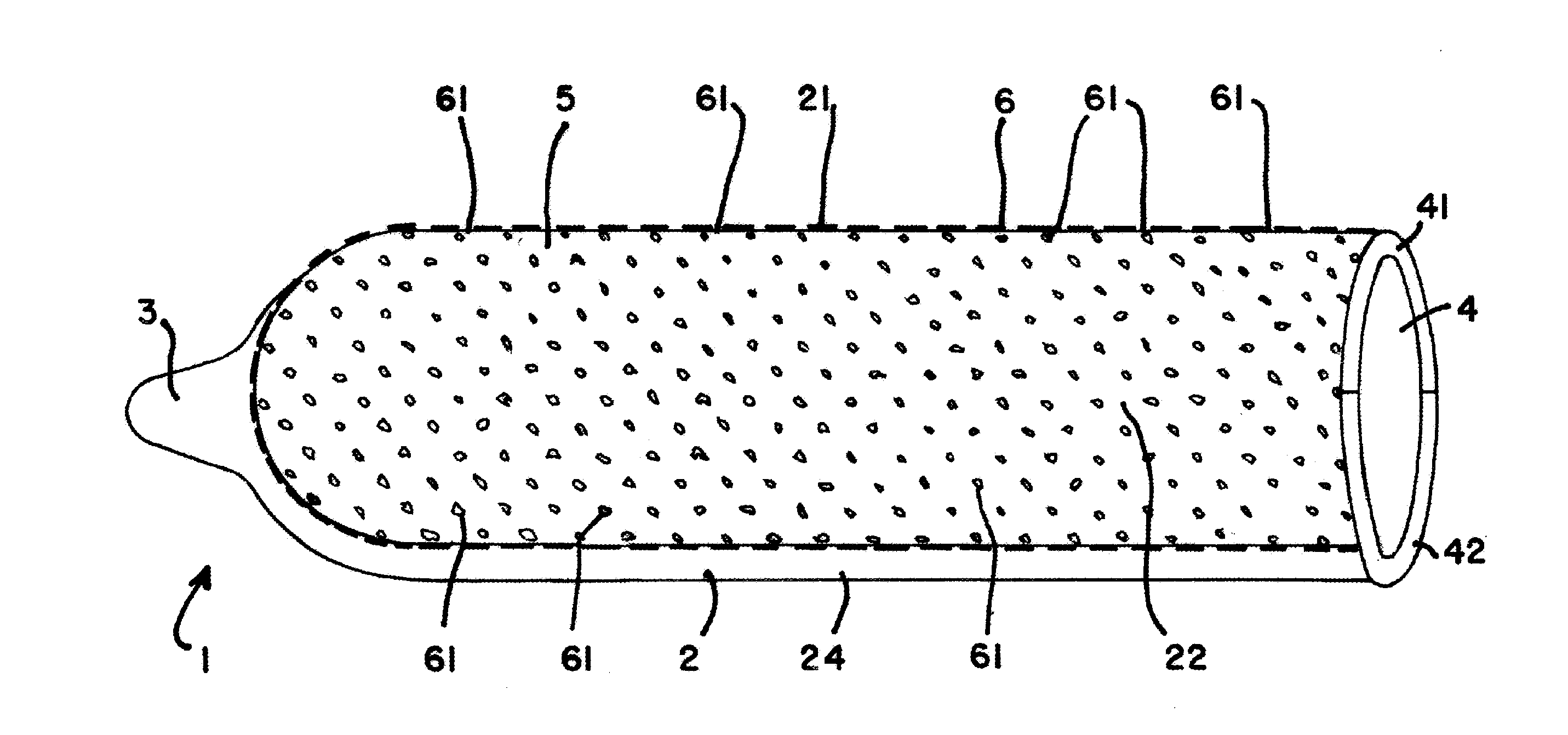

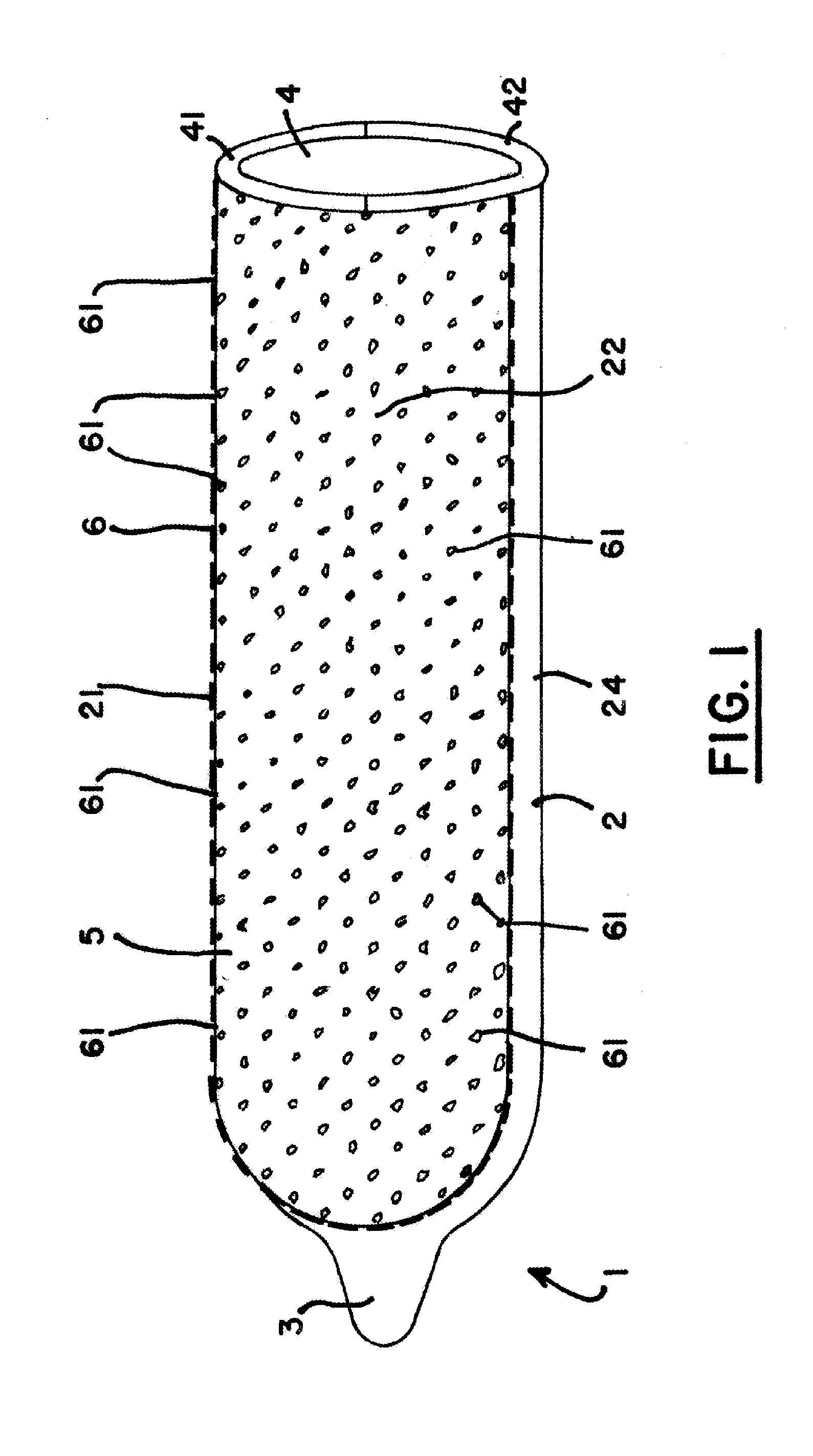

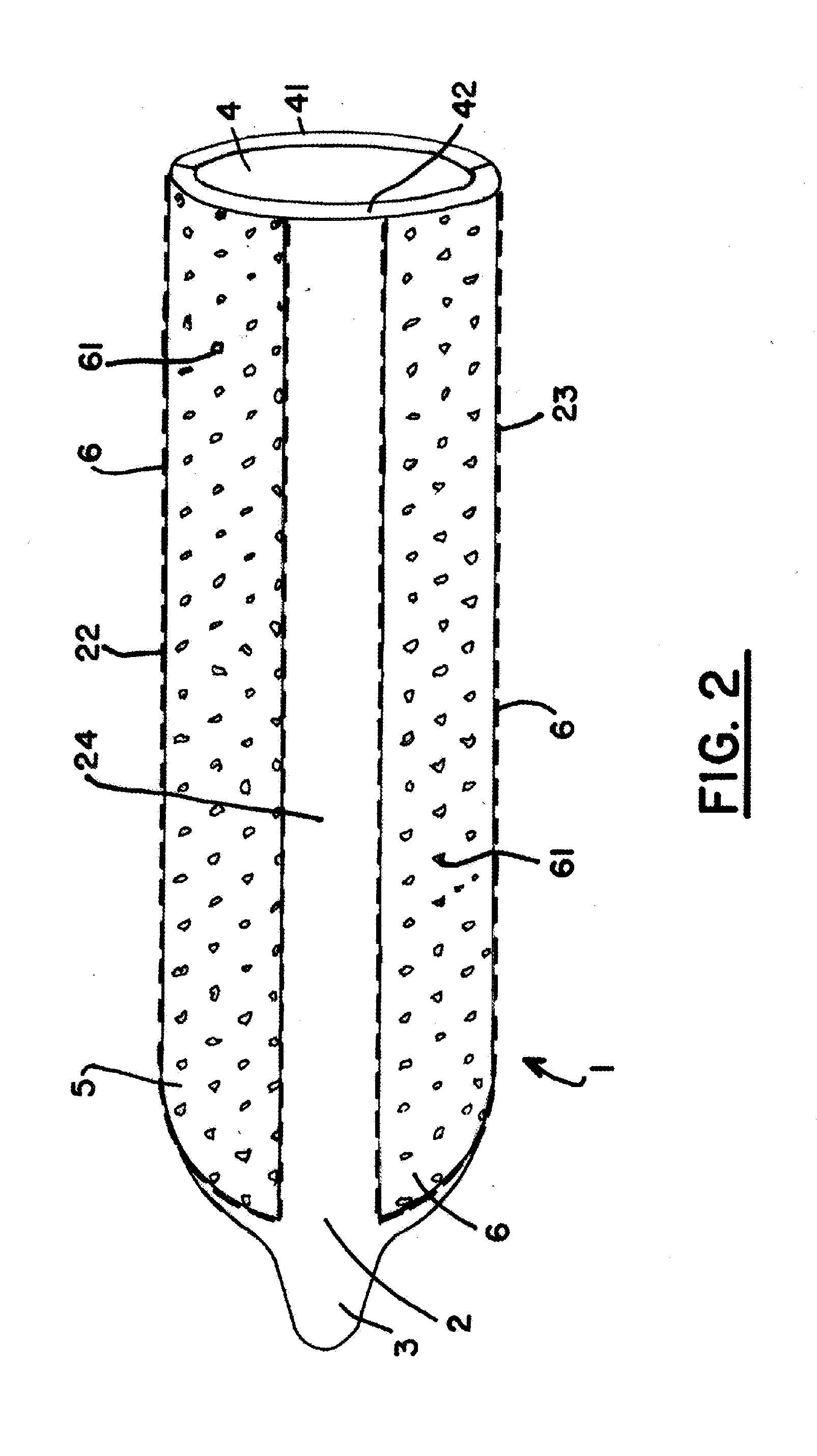

Condom with inflatable portion

Inactive | US6895967B2 | Improve experience | Quick deflation | Male contraceptives | Surgery | Engineering | Mechanical engineering

A condom having an inflatable portion is provided. The condom comprises an inner wall and an outer wall defining an annular chamber extending along a portion of the length of the condom. An air source, such as a handheld pump, is removably connected to a port which leads to an airway in the chamber for delivering air to a forward portion of the chamber. A plurality of vents are provided at a rearward end of the chamber for venting the air.

Owner:PRAML SCOTT

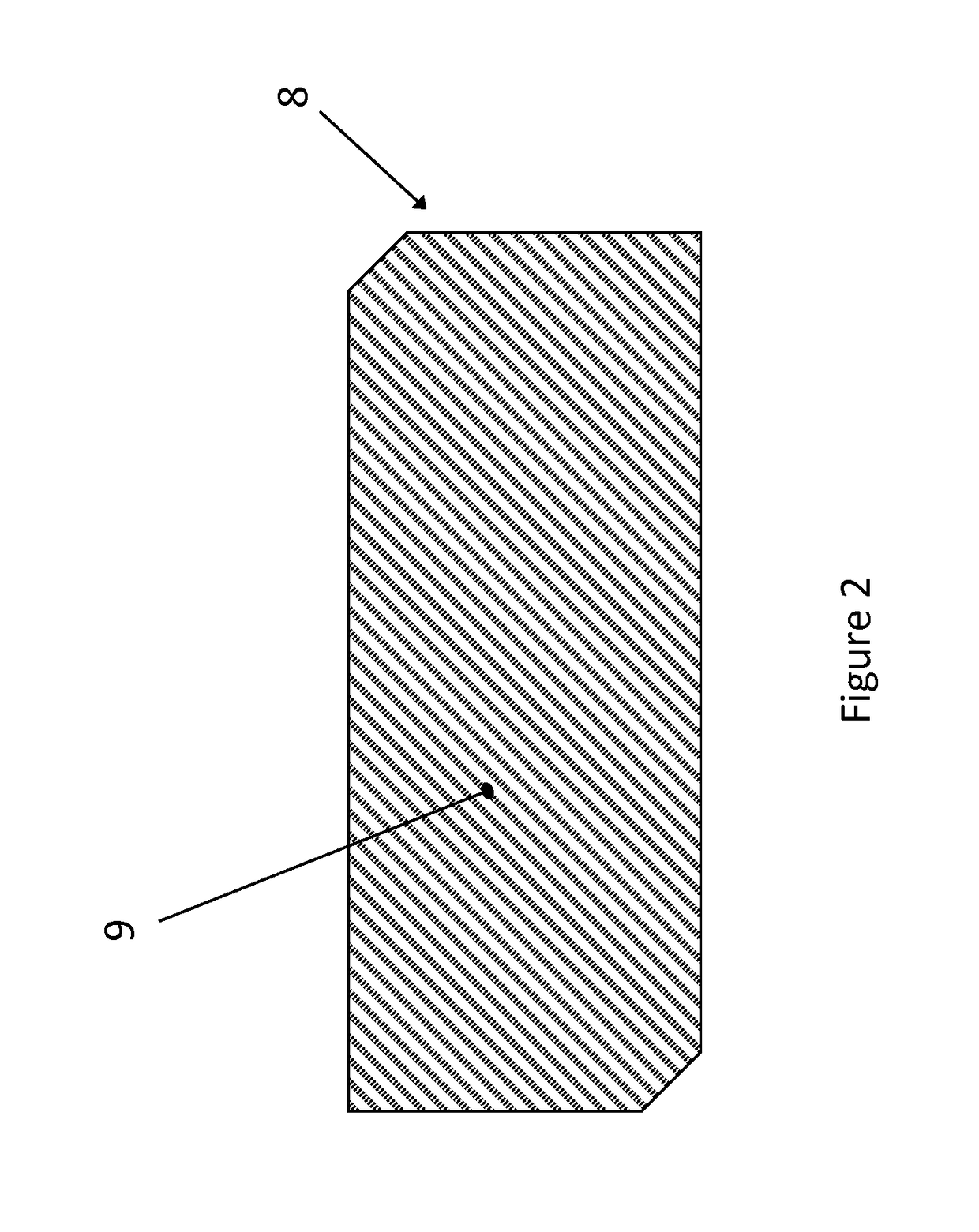

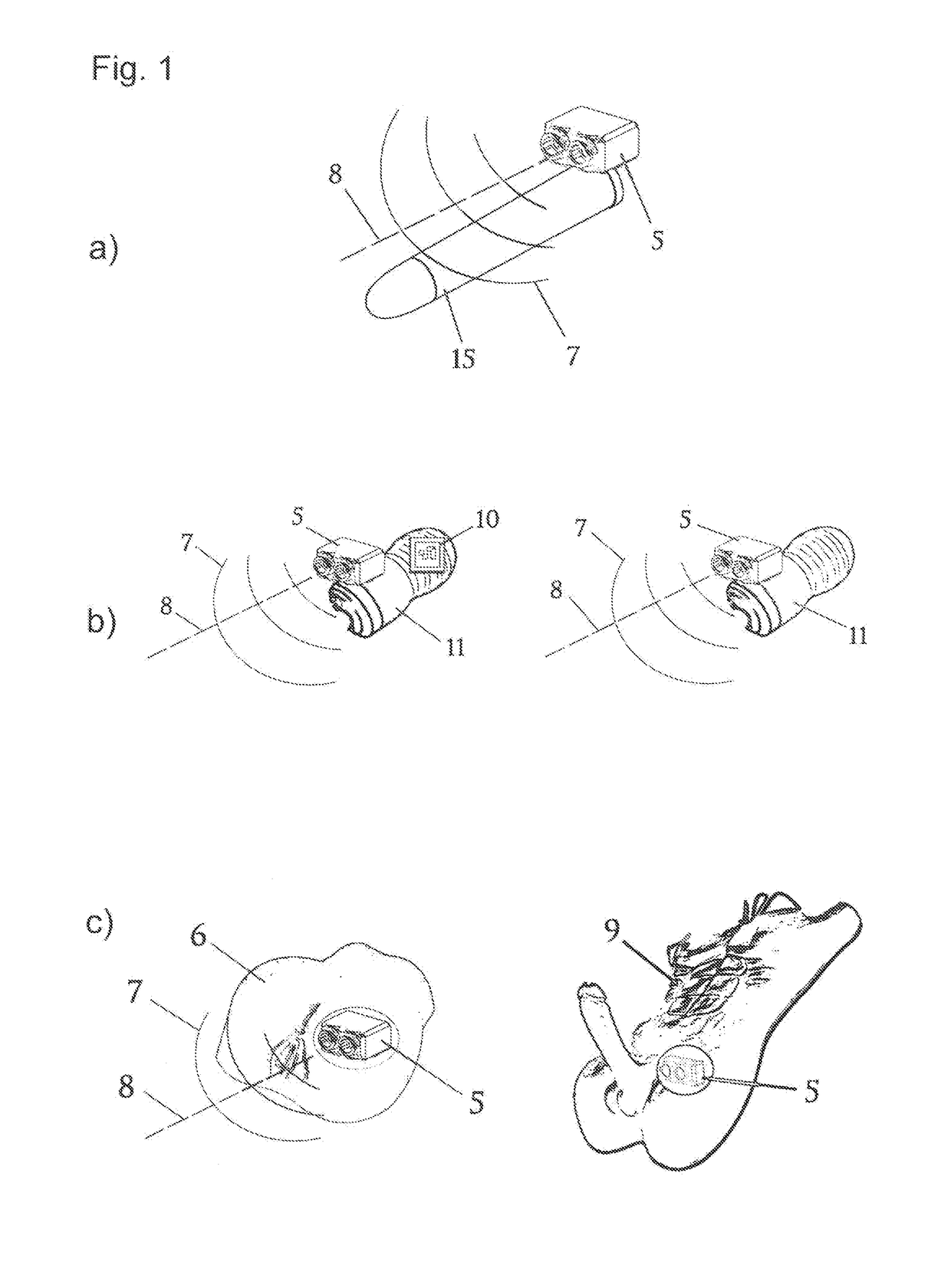

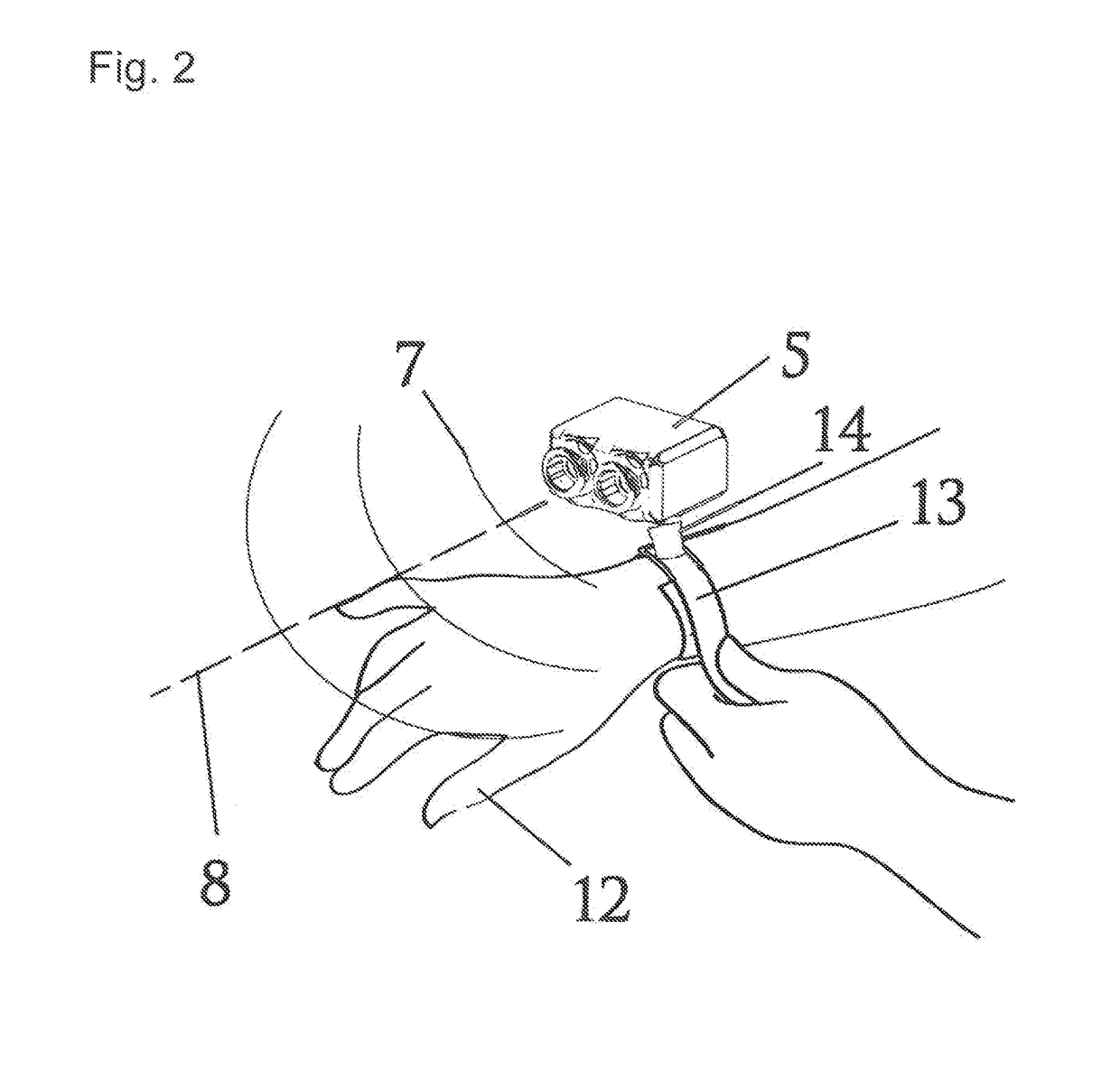

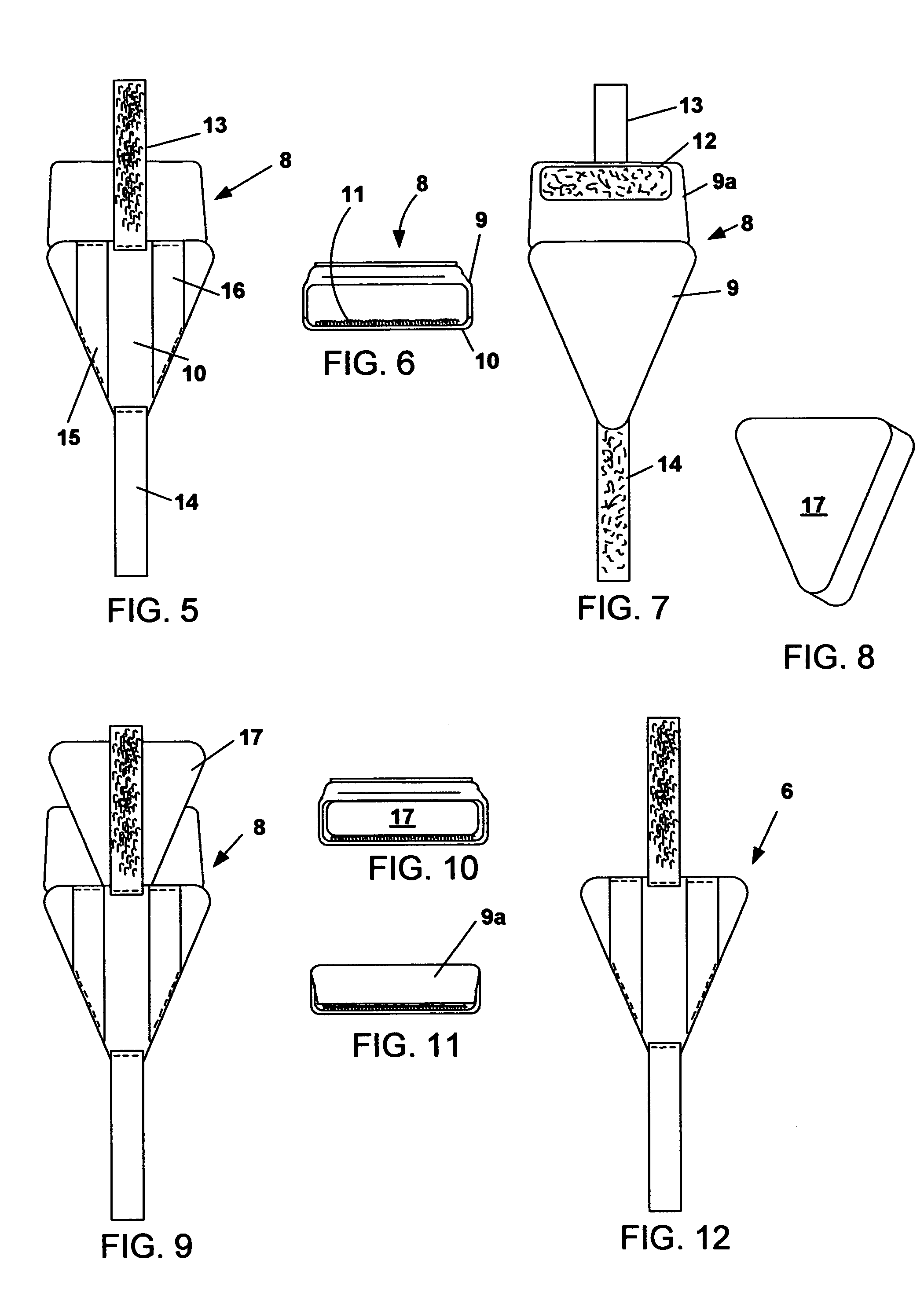

Sexual interaction device and method for providing an enhanced computer mediated sexual experience to a user

Inactive | US20180147110A1 | Speed of movement | Improve performance experience | Input/output for user-computer interaction | Physical therapies and activities | Ultrasonic sensor | Interaction device

A sexual interaction device for use as an input device for a virtual reality erotic application comprising a body part engaging portion (6, 9, 11, 13, 15) and a distance measurement unit (5). In order to allow for a reliable synchronisation between the user's motion and a virtual environment, it is provided according to the invention that the distance measurement unit (5) comprises an ultrasonic sensor (1) assembly operatively coupled to the body part engaging portion (6, 9, 11, 13, 15).

Owner:CAKMAK TUNCAY

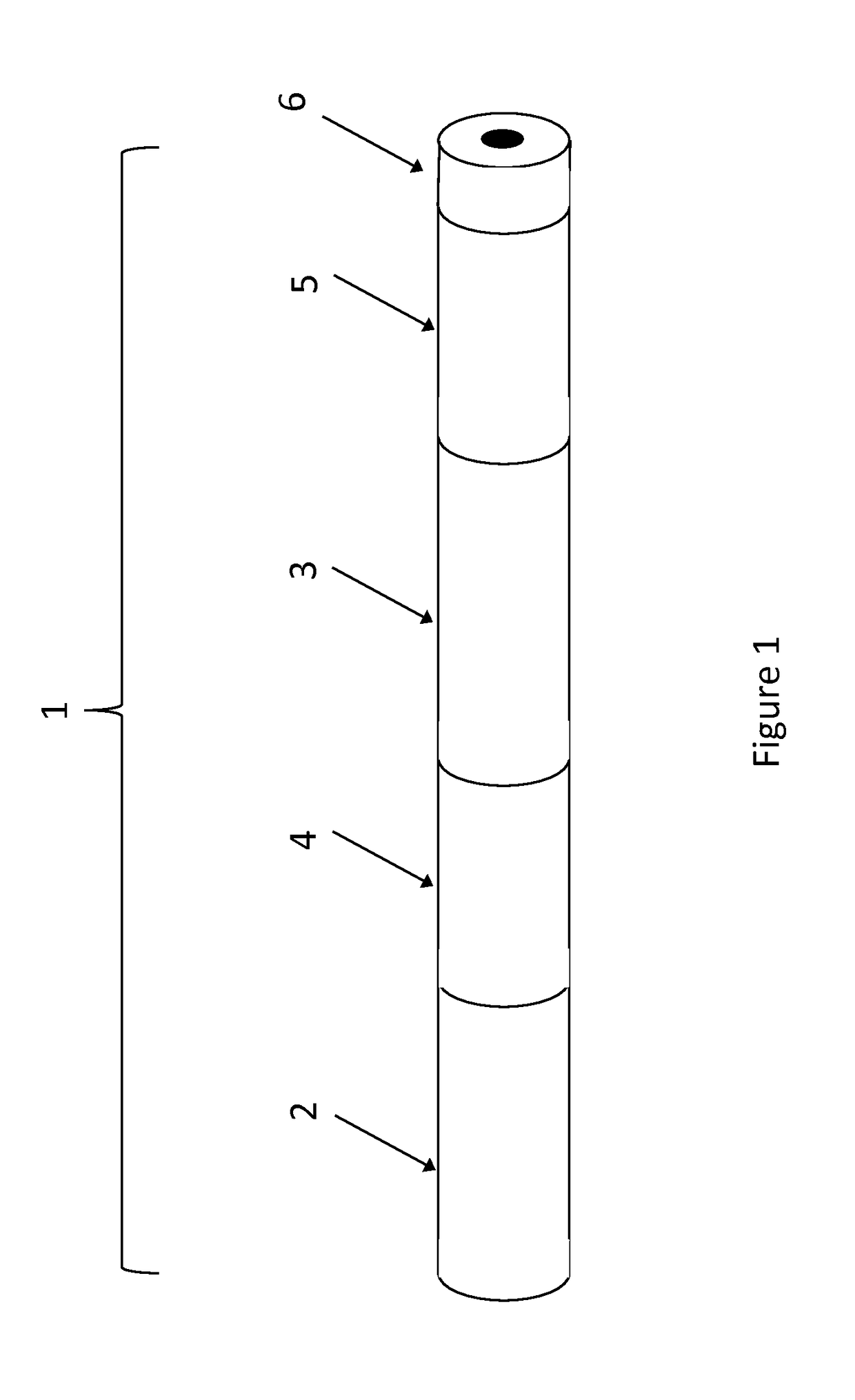

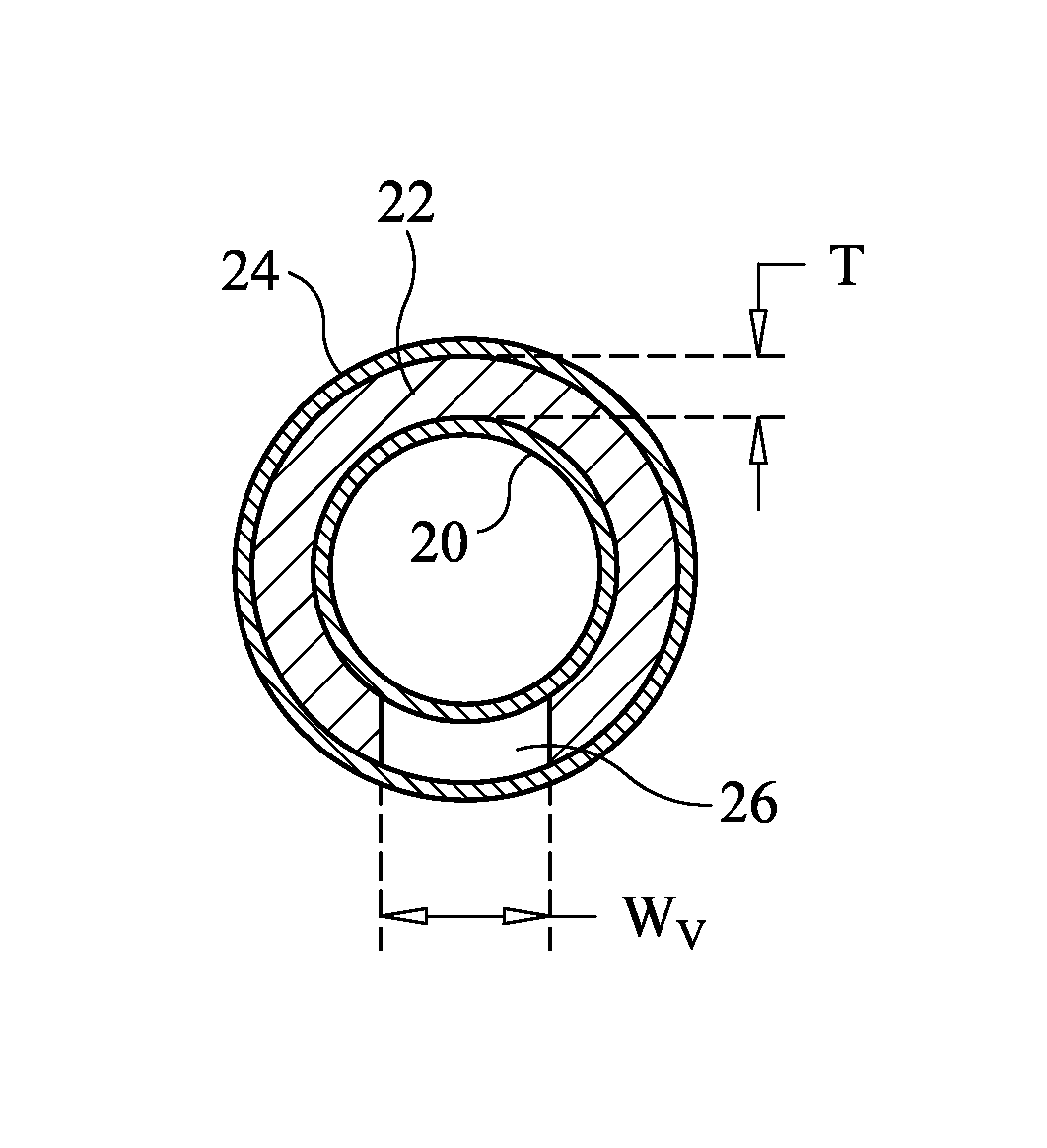

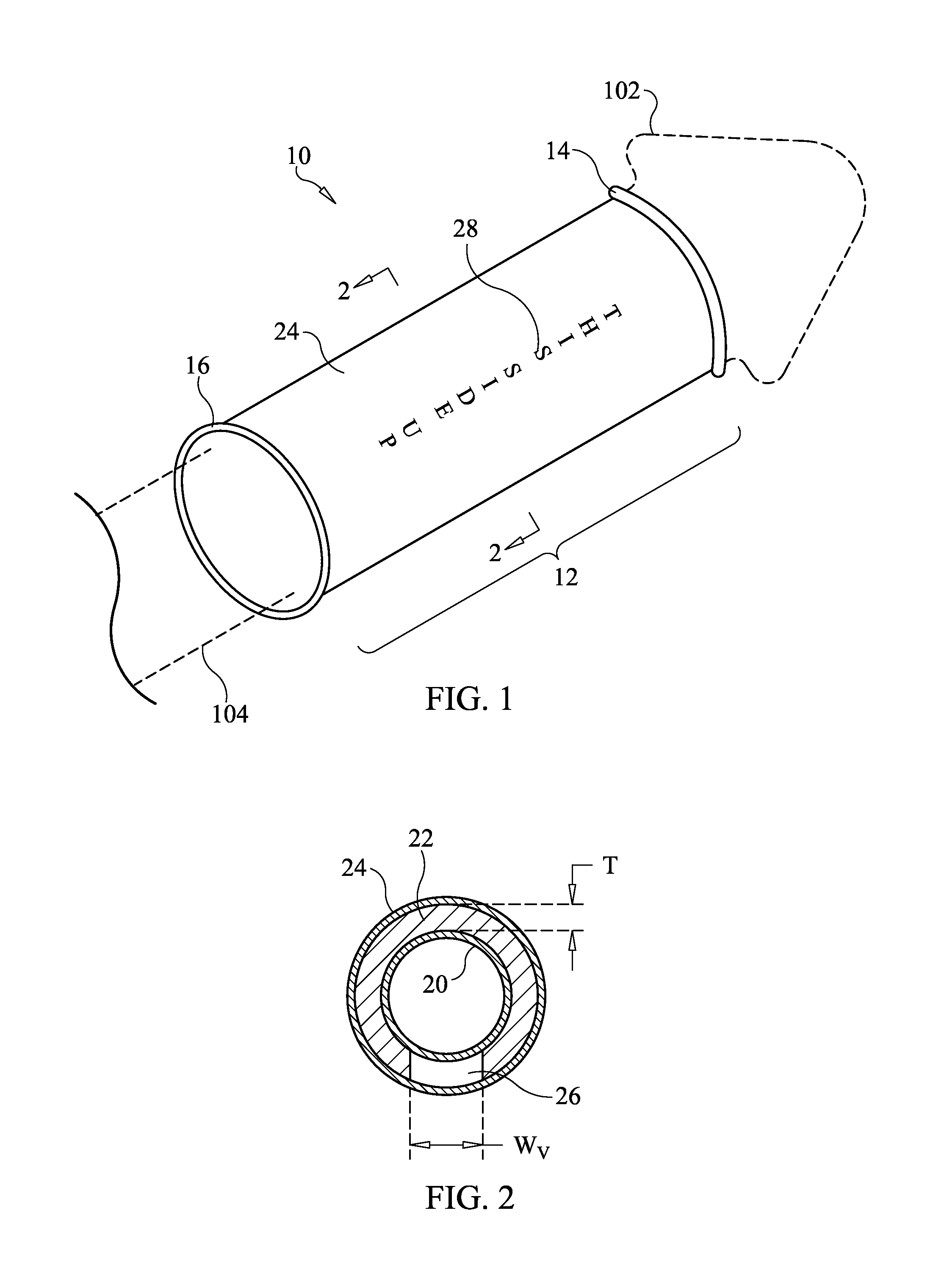

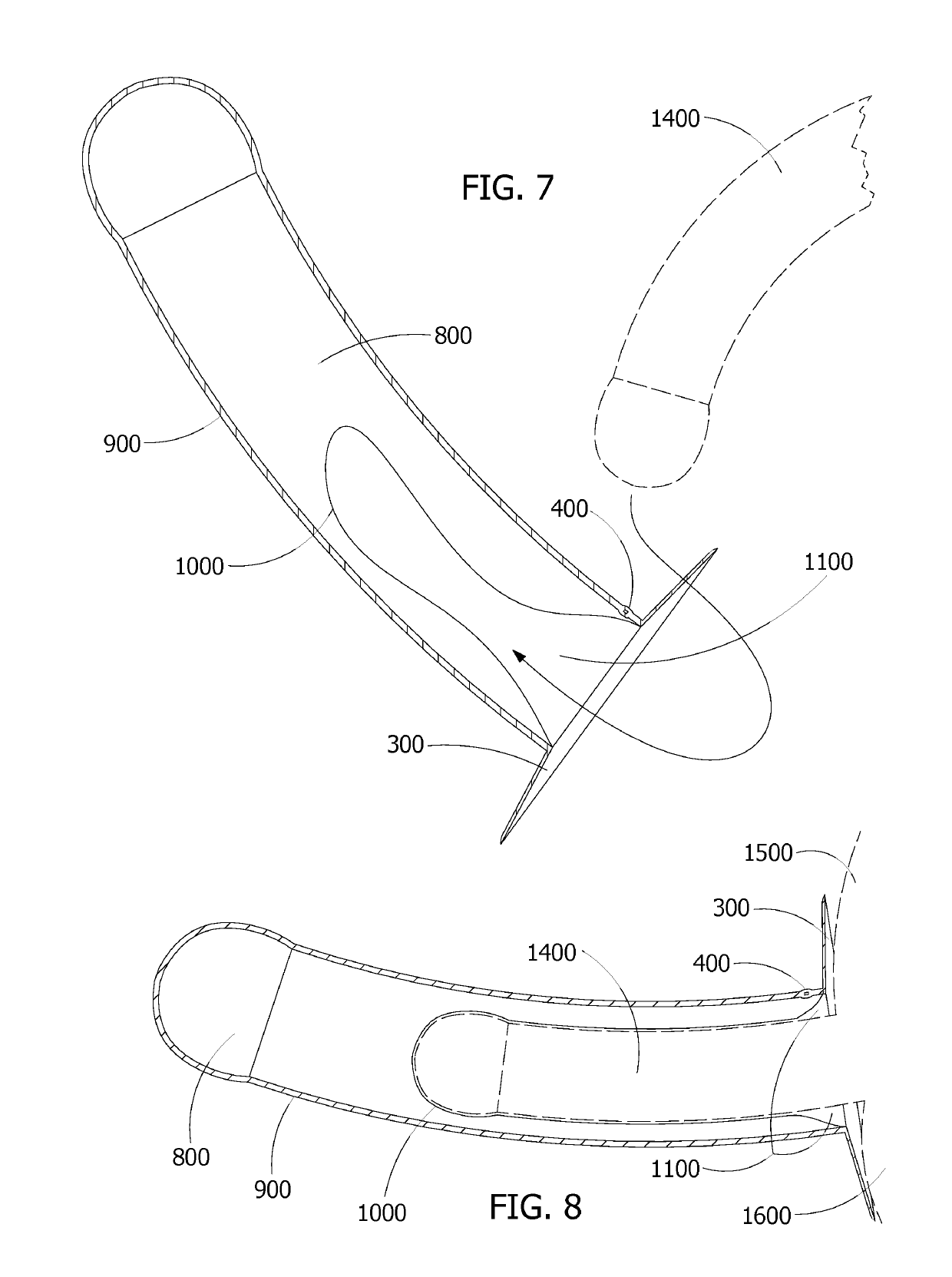

Cuff and cuff/condom combination for erection assistance

Inactive | US9295579B1 | Enhanced sexual experience | Improve performance experience | Male contraceptives | Penis support devices | Engineering | Penis

A device providing erection assistance includes a first sleeve of flaccid material that can fit over a male penis and extend from the base to the corona of the penis. Also included is a second sleeve of flaccid material of similar length that can fit over the penis. One or more wall regions are disposed between the first and second sleeves. The wall region(s) extend(s) continuously along the lengths of the first and second sleeves. The wall region(s) is (are) coupled to the first and second sleeves, and define a void region along the length of the sleeves that is readily aligned with the urethral tube of a male user.

Owner:ENCORE PROD

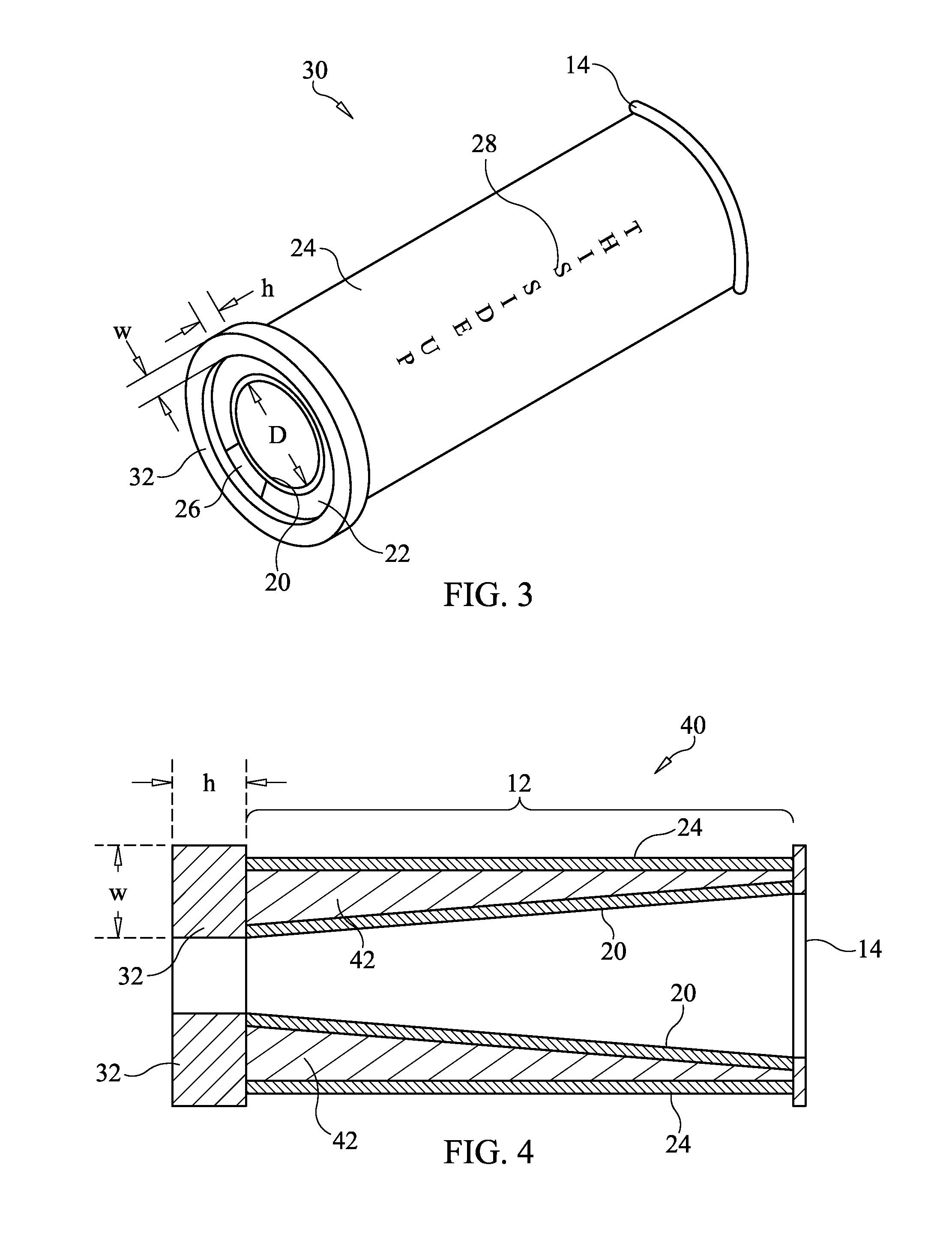

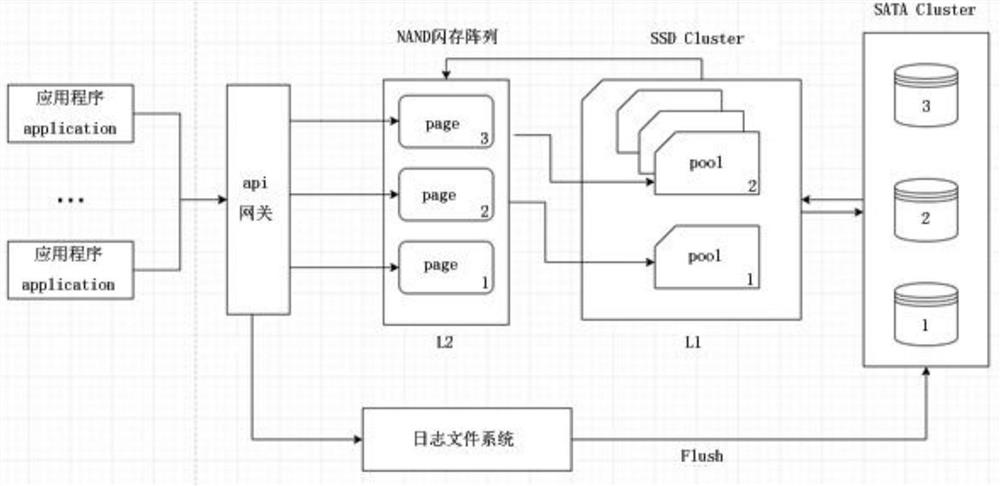

Method for hierarchically caching read-write data in storage cluster

The invention discloses a method for hierarchically caching read-write data in a storage cluster. It relates to the technical field of cloud computing and is implemented on the basis of a back-end storage cluster, a first-stage cache, a second-stage cache, an API gateway, a log file system and an application program. The back-end storage cluster manages the original data, while the first-stage cache stores hotspot data. The hotspot data is divided into different pools; the second-stage cache extracts pool data into segments according to indexes and stores the segments; and the API gateway handles requests uniformly. When the application program initiates a read request, the API gateway processes the request and publishes it to the second-stage cache, which searches for the related segments and locates them in the pool; if the related segments are not found, it issues a segment-missing request to the first-stage cache, and if the information still cannot be found, the second-stage cache continues the search in the back-end storage cluster. When the application program initiates a write request, the API gateway processes the request and writes it into the log file system, and the data is flushed to the back-end storage cluster after the transaction completes. According to the invention, latency can be greatly reduced.

Owner:SHANDONG LANGCHAO YUNTOU INFORMATION TECH CO LTD
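The read path (second-stage cache, then first-stage cache, then back-end cluster) and the log-then-flush write path can be sketched with plain dictionaries. Pools, segment indexes, and the API gateway are left out; `TieredCache` is a hypothetical name, and populating both cache tiers on a miss and invalidating cached copies on commit are assumptions made for the illustration.

```python
class TieredCache:
    """Hypothetical two-tier cache in front of a back-end store (all plain dicts)."""

    def __init__(self, backend: dict) -> None:
        self.backend = backend     # stand-in for the back-end storage cluster
        self.first_stage = {}      # stand-in for the hotspot (first-stage) cache
        self.second_stage = {}     # stand-in for the segment (second-stage) cache
        self.write_log = []        # stand-in for the log file system

    def read(self, key):
        """Return (value, tier that served it); search second stage, first stage, back end."""
        if key in self.second_stage:
            return self.second_stage[key], "second_stage"
        if key in self.first_stage:
            value = self.first_stage[key]
            self.second_stage[key] = value  # assumed: promote on a second-stage miss
            return value, "first_stage"
        value = self.backend[key]           # raises KeyError if the key exists nowhere
        self.first_stage[key] = value       # assumed: populate both tiers on a full miss
        self.second_stage[key] = value
        return value, "backend"

    def write(self, key, value) -> None:
        # Writes go to the log first; nothing reaches the back end until commit().
        self.write_log.append((key, value))

    def commit(self) -> None:
        # Flush logged writes to the back end and drop stale cached copies.
        for key, value in self.write_log:
            self.backend[key] = value
            self.first_stage.pop(key, None)
            self.second_stage.pop(key, None)
        self.write_log.clear()


cache = TieredCache({"user:1": "alice"})
print(cache.read("user:1"))  # ('alice', 'backend')
print(cache.read("user:1"))  # ('alice', 'second_stage')
```

Until `commit()` runs, reads keep returning the cached value, which mirrors the abstract's point that writes reach the back-end cluster only after the transaction completes.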

Cuff and cuff/condom combination for erection assistance

Inactive | US9622902B1 | Improve performance experience | The process is simple and effective | Male contraceptives | Penis support devices | Penis | Cuff

A device providing erection assistance includes an open-ended and radially elastic tubular cuff adapted to fit over a male penis. The cuff's length is such that it can extend from the base of the penis to as far as the corona thereof. The cuff includes at least one wall region defining a void region along the wall region's length.

Owner:ENCORE PROD

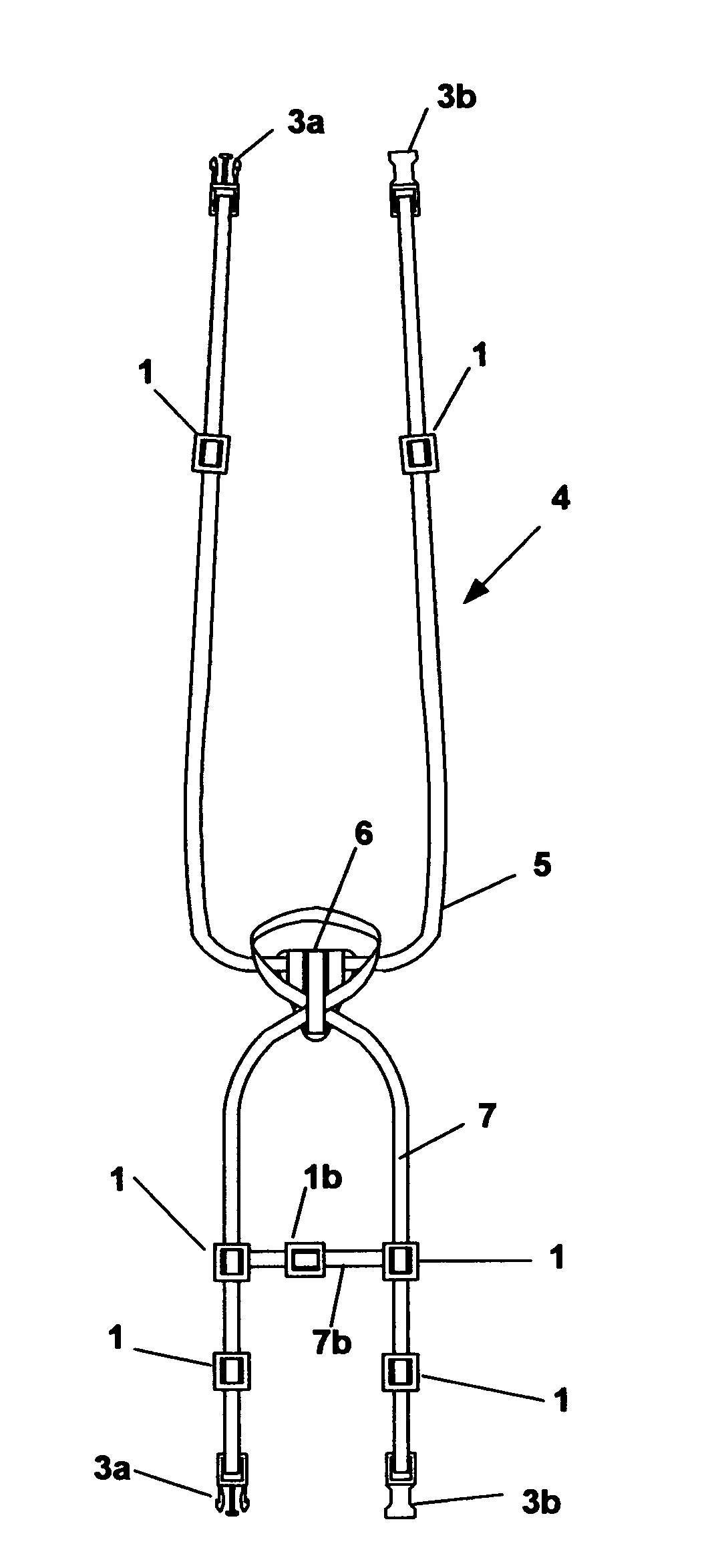

Sexual enhancement device

Inactive | US7645228B2 | Improve performance experience | Control flow | Penis support devices | Non-surgical orthopedic devices | Penile Tumescence | Prostate gland

A sexual enhancement device for improving a user's sexual experience. A prostate pad is positioned adjacent to a male user's perineum below his prostate gland. A first prostate strap is connected to the prostate pad and is looped behind the user's neck. When the prostate strap is tightened, the prostate pad applies pressure to the perineum. This pressure is felt at the user's prostate and provides pleasurable stimulation to the prostate. A second prostate strap is also connected to the prostate pad and is looped around the user's waist. Preferably, the second prostate strap is also looped over the top of the user's erect penis. When looped over the top of the penis, tightening of the second prostate strap assists in restricting the flow of blood out of the erect penis, preferably allowing for an erection that lasts longer than it would otherwise. In a preferred embodiment, the pressure applied by the prostate pad can be dynamically increased or decreased by the user leaning his neck backwards or forwards, and also by the user arching his back and thrusting his hips. Also, in a preferred embodiment the tightness of the second prostate strap can be adjusted without having to interrupt sexual activity.

Owner:FLORES SAMUEL O

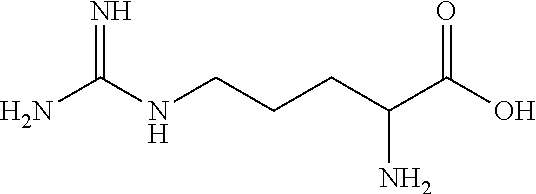

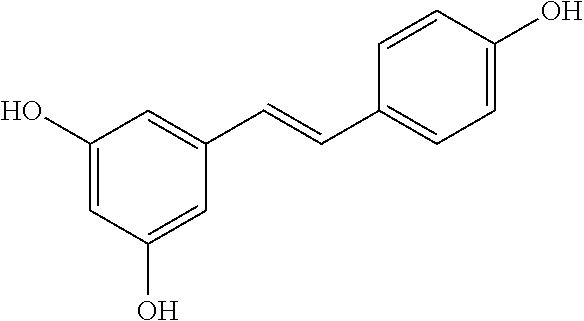

Topical Treatment Formulation of Natural Ingredients for Enhancing Sexual Response

Inactive | US20110245345A1 | High sensitivity | Improve response | Biocide | Peptide/protein ingredients | Chemical composition | Additive ingredient

The present invention relates to a topical composition to enhance sexual response. The composition includes L-arginine, trans-resveratrol, and a topical carrier, wherein L-arginine is present in an amount not greater than about 10% and trans-resveratrol is present in an amount not greater than about 10%, based on the weight of the total composition. The invention also relates to a method of enhancing sexual response by topically applying the composition to the genitalia of a human, and to an integrated product including the topical composition, a container for instantaneous delivery, and instructions for use.

Owner:AMATO TERI

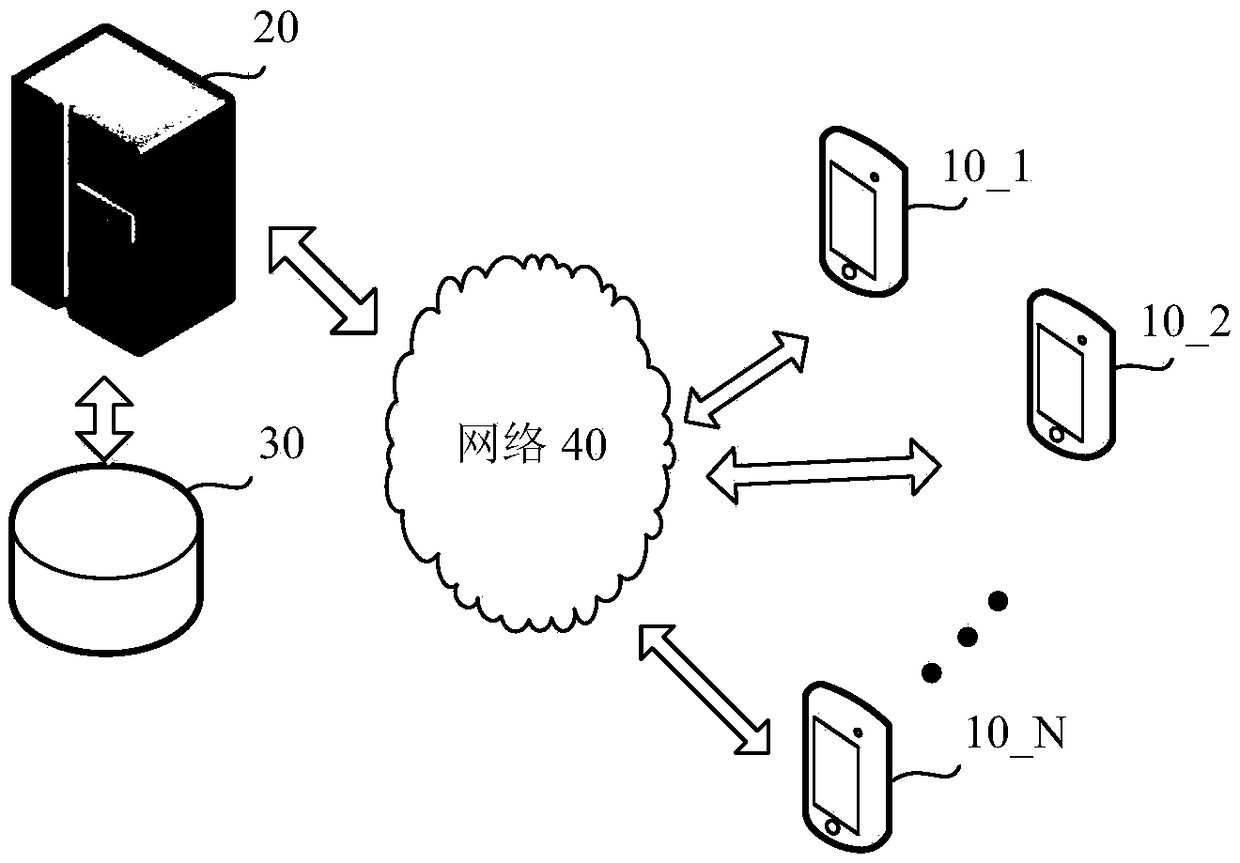

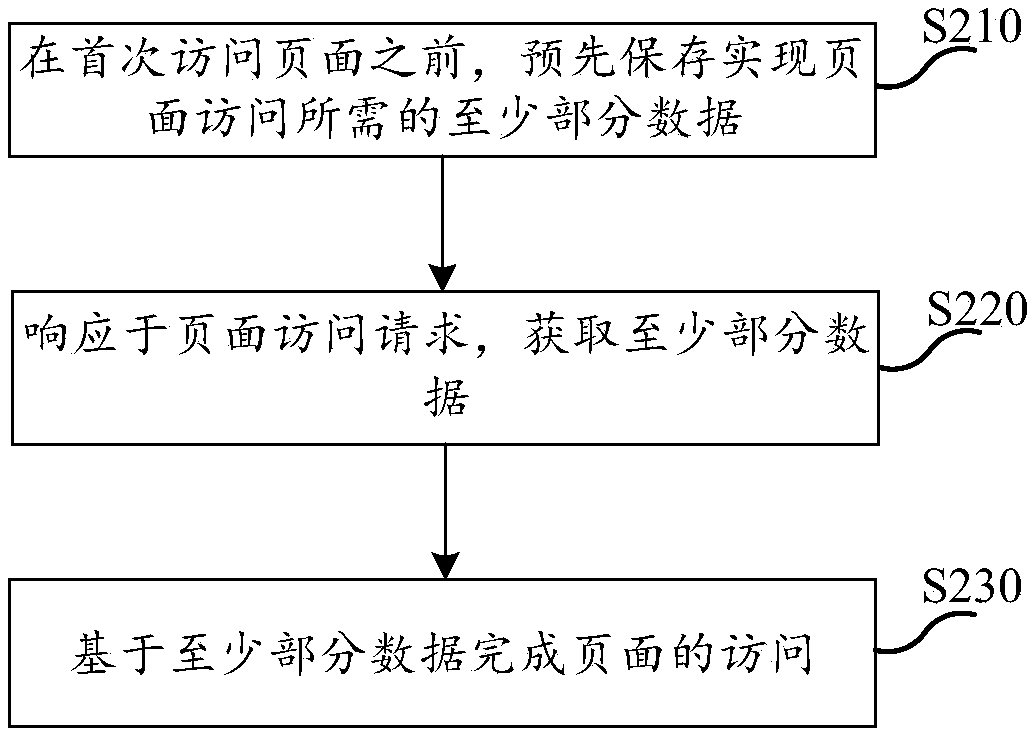

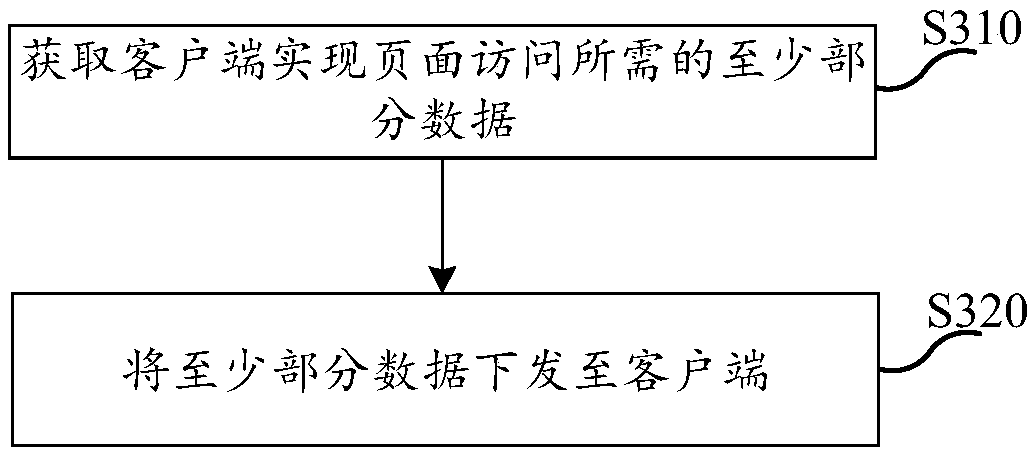

Page access method, device, apparatus, and storage medium

Active | CN109325194A | Improve performance experience | Improve first visit speed | Web data browsing optimisation | Access method | Computer science

The present disclosure provides a page access method, device, apparatus, and storage medium. Before a page is first accessed, at least a portion of the data required for page access is stored in advance, including the parsing result of a script file required for page access; in response to a page access request, that stored data is retrieved; and access to the page is completed based on it. Because the parsing result of the script file has already been saved when the user first accesses the page, the script parsing step can be skipped and the saved result used directly. This speeds up first access to the page and improves the user's performance experience on that first visit.

Owner:ALIBABA (CHINA) CO LTD
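The core idea above, parsing a script once ahead of time and reusing the stored result on first access, can be illustrated with Python's `ast` module standing in for a browser's script parser. `ParseCache`, `prewarm`, and keying entries by a SHA-256 hash of the source are hypothetical choices, not details from the disclosure.

```python
import ast
import hashlib


class ParseCache:
    """Hypothetical store of pre-parsed scripts, keyed by a hash of the source text."""

    def __init__(self) -> None:
        self._parsed = {}      # source hash -> parse result
        self.parse_count = 0   # how many real parses have run

    @staticmethod
    def _key(source: str) -> str:
        return hashlib.sha256(source.encode("utf-8")).hexdigest()

    def _parse(self, source: str) -> ast.Module:
        self.parse_count += 1
        return ast.parse(source)

    def prewarm(self, source: str) -> None:
        # Run before the page is first visited so the parse cost is paid early.
        key = self._key(source)
        if key not in self._parsed:
            self._parsed[key] = self._parse(source)

    def get(self, source: str) -> ast.Module:
        # On page access, reuse the stored parse result; parse only on a cache miss.
        key = self._key(source)
        if key not in self._parsed:
            self._parsed[key] = self._parse(source)
        return self._parsed[key]


cache = ParseCache()
script = "total = sum(range(10))"
cache.prewarm(script)      # ahead of the first visit
tree = cache.get(script)   # first visit: no parsing needed
print(cache.parse_count)   # 1
```

Keying by a content hash means an edited script misses the cache and is parsed again, so a stale parse result is never reused.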

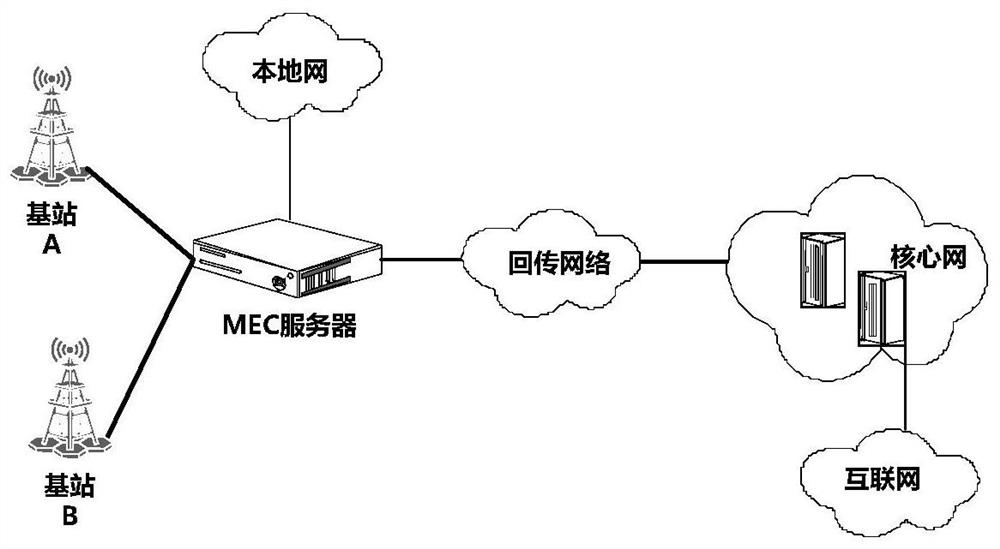

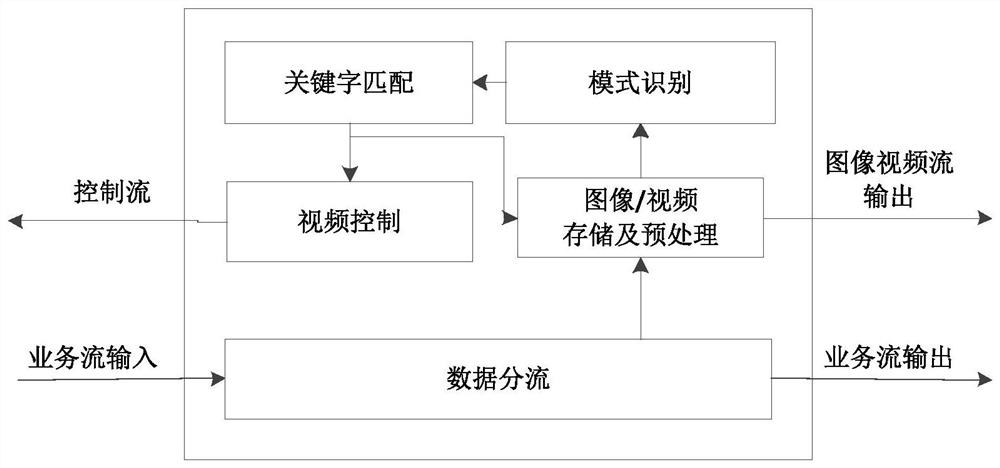

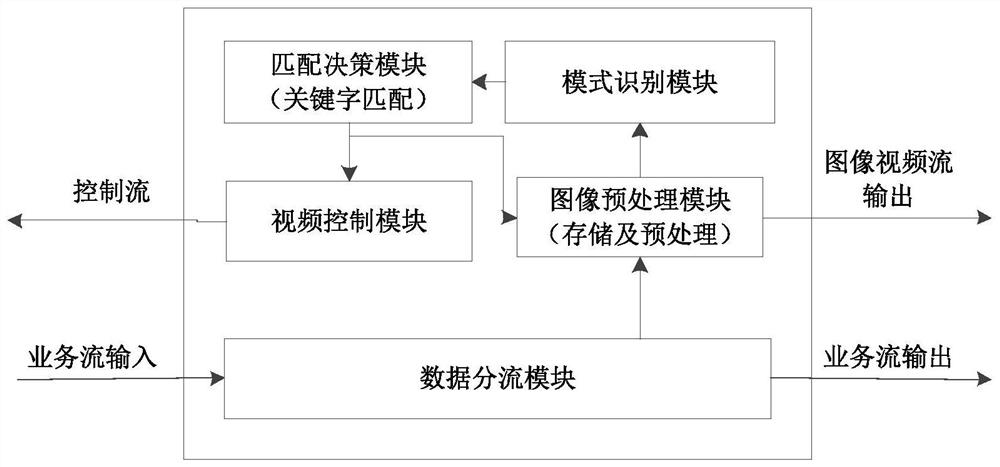

Mobile edge computing system and device for video monitoring service

Pending | CN112016428A | Quick calculation | Improve operational efficiency | Image enhancement | Image analysis | Video monitoring | Mobile edge computing

The invention provides a mobile edge computing system and device for a video monitoring service. The system comprises a video monitoring device, a wireless terminal and a mobile edge computing server. The video monitoring device is connected to a mobile communication network through the wireless terminal, and the mobile edge computing server is deployed behind a base station in the mobile communication network to provide mobile edge computing services; the wireless terminal accesses a mobile communication network in which the mobile edge computing server is deployed. Once the wireless terminal has accessed the network, the mobile edge computing server offloads (shunts) local monitoring video content according to a shunting rule and stores it in a local content server, and while shunting it processes the local video using a pre-configured video recognition and processing strategy. Deploying computation locally at the edge allows fast computation and, in turn, fast decision-making and control, giving strong real-time performance.

Owner: Shenzhen Weipin Zhiyuan Information Technology Co., Ltd. (深圳微品致远信息科技有限公司)

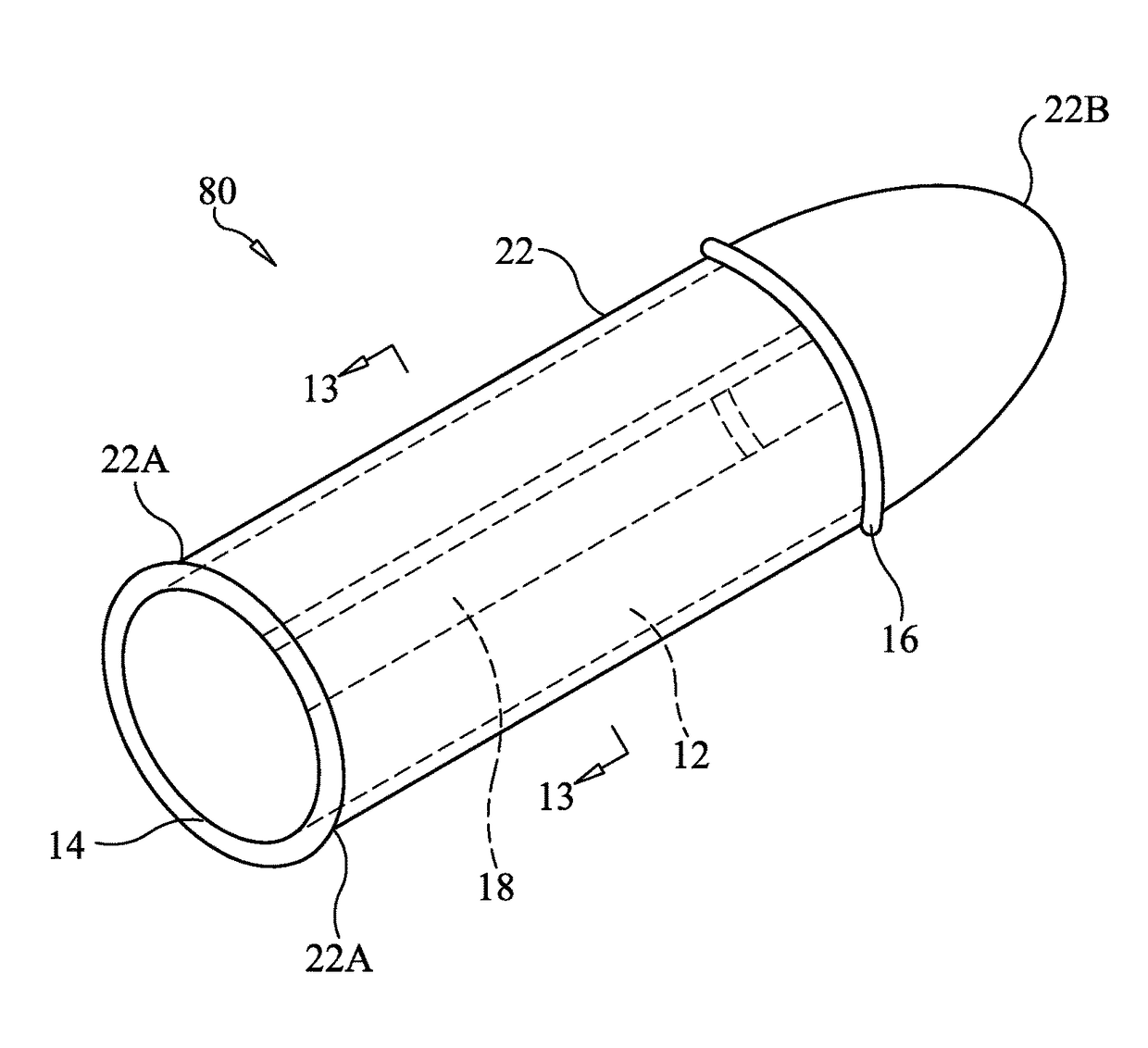

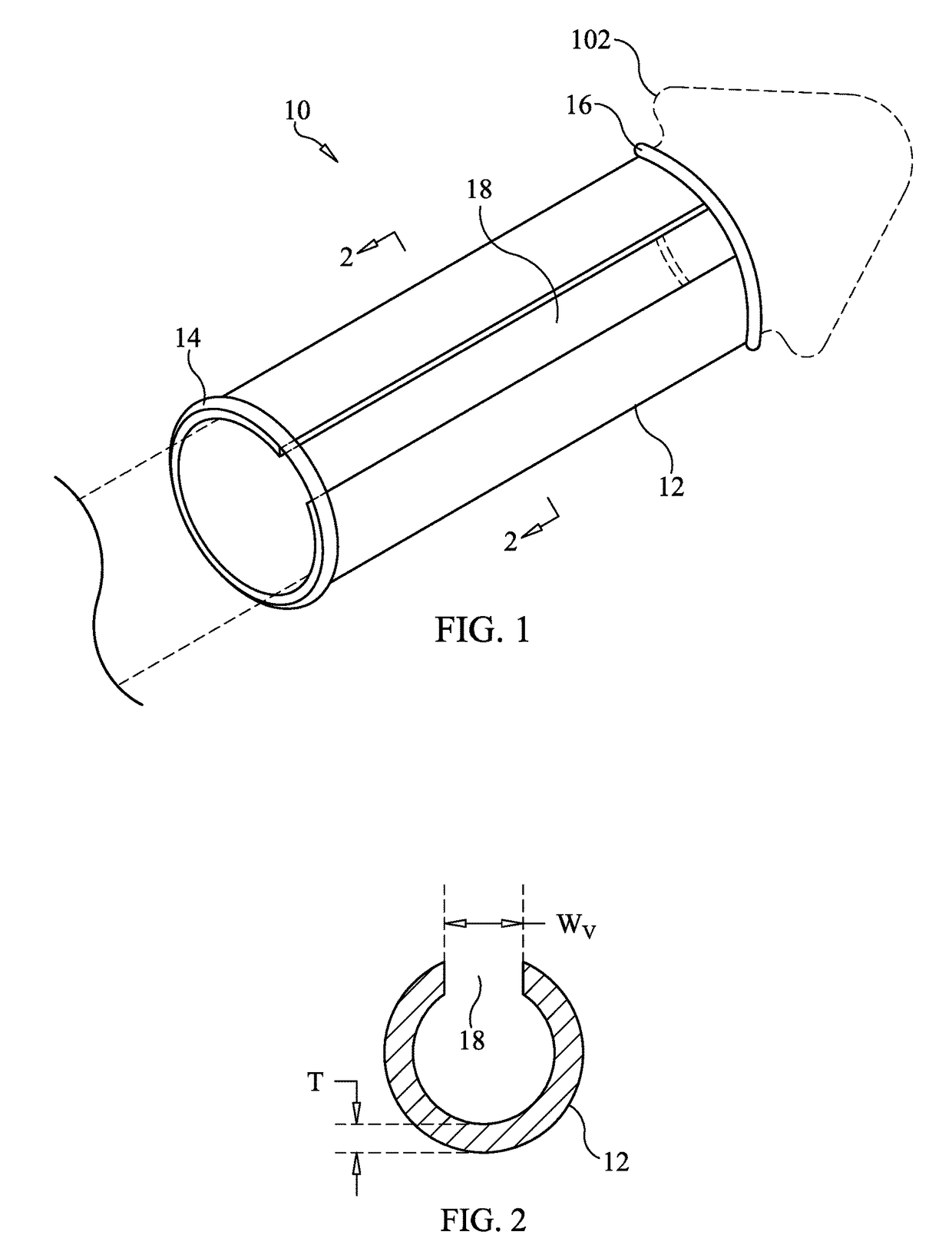

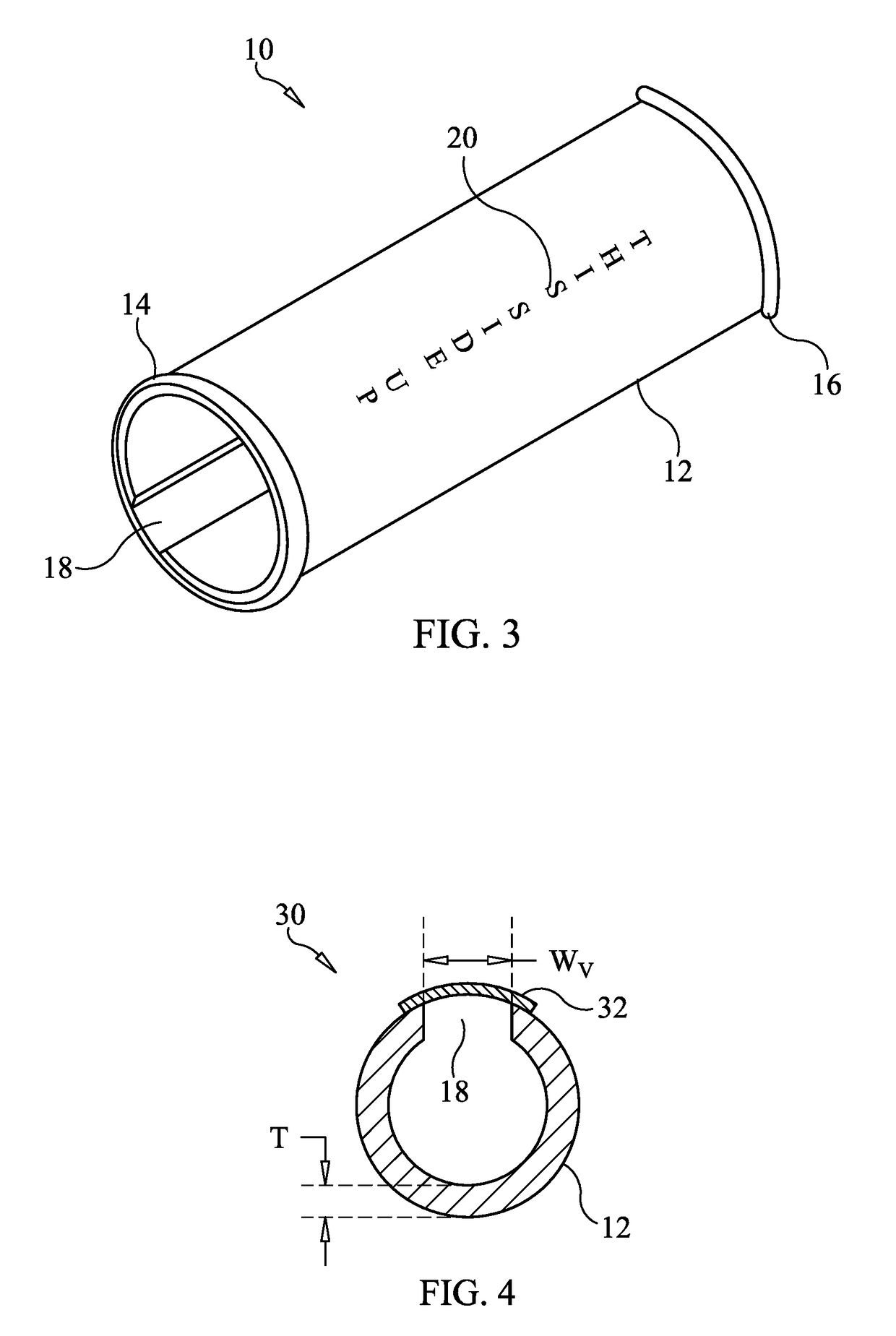

Penis enhancing condom

Active | US20130269707A1 | Improve performance experience | Superior male prophylactic | Male contraceptives | Penis support devices | Penis | Medicine

Owner:IVORY BRIAN

Penis enhancing condom

Active | US20130269708A1 | Improve performance experience | Superior male prophylactic | Male contraceptives | Penis support devices | Penis | Medicine

Owner:IVORY BRIAN

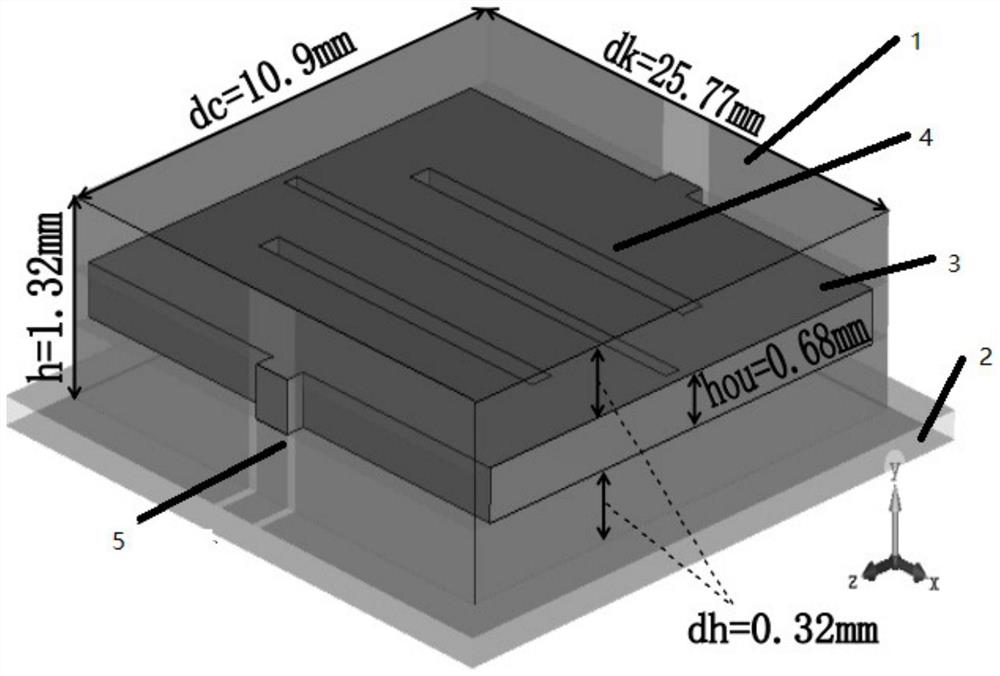

Double-frequency filter and mobile terminal

Inactive | CN112186315A | Improve isolation | Small footprint | Waveguide type devices | Transmission zeros | Frequency filtering

The invention discloses a dual-frequency filter and a mobile terminal. The dual-frequency filter comprises a metal cavity formed by a cavity whose five outer walls are printed with metal together with a coplanar waveguide, and a metal plate parallel to the coplanar waveguide is arranged inside the metal cavity. The metal plate has three slot lines parallel to its two long end faces, and the central positions of the two long end faces are each connected to the central conductor strip of the coplanar waveguide through a metal patch. Compared with the prior art, the high-pass and low-pass bandwidths are controllable, and the filter generates two transmission zeros between the high-pass band and the low-pass band. The filter is also small and offers high return loss and good inter-band isolation, which saves hardware space in the whole device and provides better performance.

Owner:NUBIA TECHNOLOGY CO LTD

A Method of Rapid Horizontal Expansion of Database

Active | CN102930062B | Improve the speed of expansion | Easy to monitor | Special data processing applications | In-memory database | Data segment

Owner:NANJING FUJITSU NANDA SOFTWARE TECH

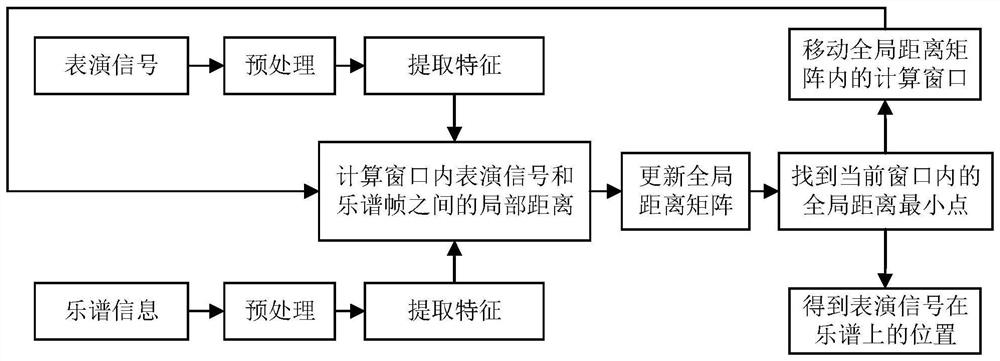

Accompaniment method for actively following music signals and related equipment

Active | CN112669798A | Improve performance experience | Add artistry | Electrophonic musical instruments | Speech analysis | Engineering | Predicting performance

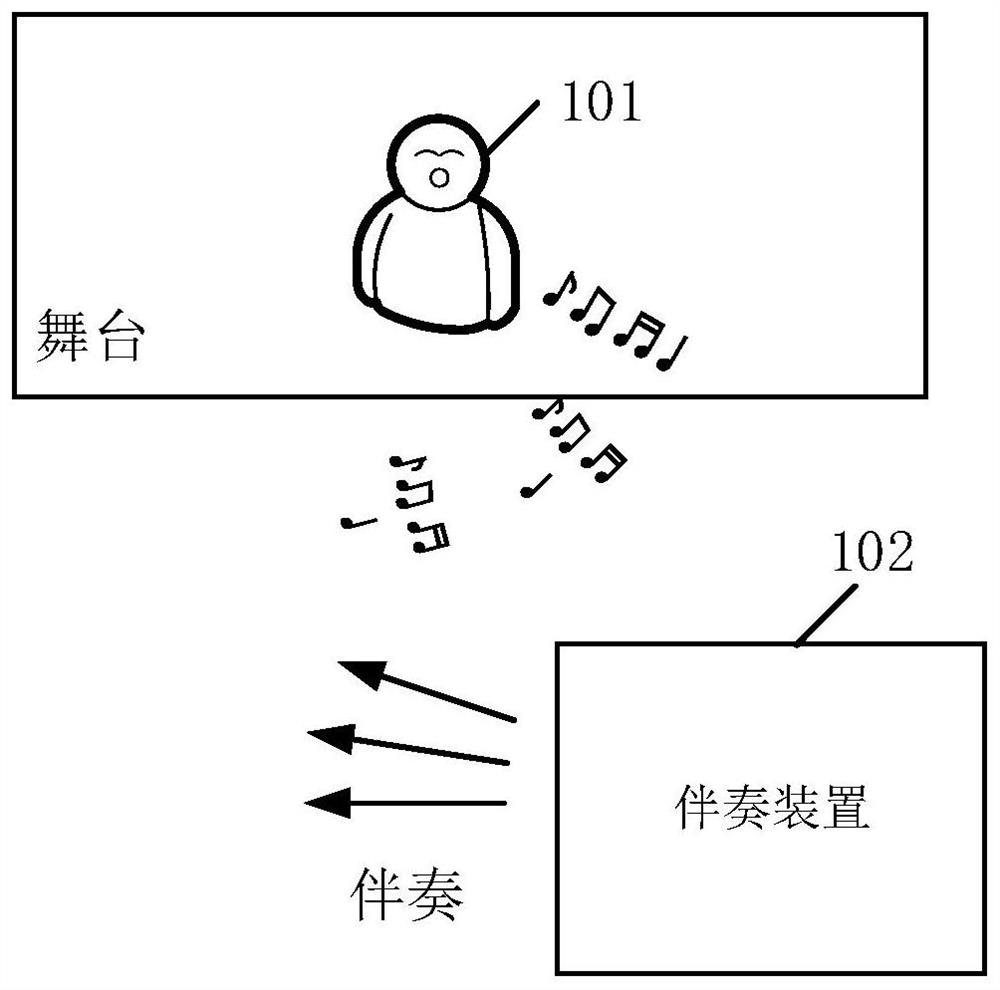

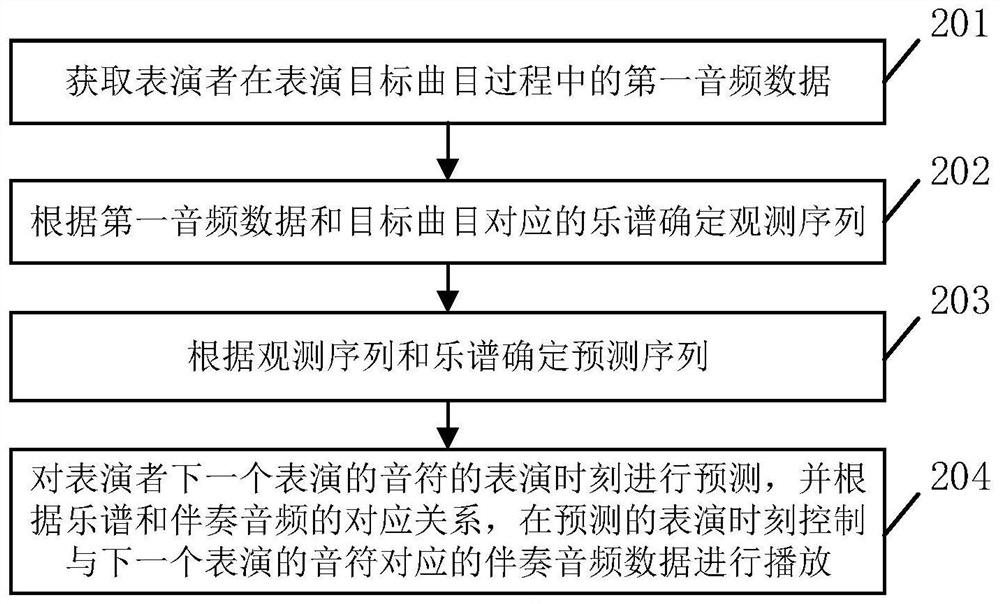

The embodiment of the invention discloses an accompaniment method for actively following music signals and related equipment. The accompaniment method comprises the following steps that: first audio data of a performer in the performance process of a target track are acquired; an observation sequence is determined according to the first audio data and music scores corresponding to the target track, wherein the observation sequence is a corresponding sequence of each note in the first audio data and a performance moment of each note; a prediction sequence is determined according to the observation sequence and the music scores, wherein the prediction sequence is a corresponding sequence of each note of the first audio data and the predicted performance moments; and according to the observation sequence and the prediction sequence, the performance moment of the musical note of the next performance of the performer is predicted, and according to the corresponding relationship between the music scores and accompaniment audios, accompaniment audio data corresponding to the musical note of the next performance is controlled to be played at the predicted performance moment. According to the method, the occurrence time of the next note to be performed of the performer can be accurately predicted, the accompaniment is automatically matched with the performance so as to be played, and the interaction effect between the accompaniment and the performance is improved.

Owner: Shenzhen Mango Future Technology Co., Ltd. (深圳市芒果未来科技有限公司)
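The abstract does not specify how the next performance moment is predicted from the observation sequence. As one assumed approach, the sketch fits a least-squares line of performed time against score position over the last few notes (a local tempo estimate) and extrapolates to the next note; the function name, the `window` parameter, and the use of beat positions are hypothetical.

```python
def predict_next_onset(score_beats: list[float], performed_times: list[float],
                       window: int = 4) -> float:
    """Predict when the performer will play the next note.

    score_beats[i] is note i's position in the score (in beats); performed_times
    holds observed onset times in seconds for the notes played so far. A
    least-squares line over the last `window` notes estimates the local tempo."""
    n = len(performed_times)
    if n < 2:
        raise ValueError("need at least two observed notes")
    if n >= len(score_beats):
        raise ValueError("no remaining note in the score")
    start = max(0, n - window)
    xs, ys = score_beats[start:n], performed_times[start:n]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("observed notes must have distinct score positions")
    seconds_per_beat = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    intercept = mean_y - seconds_per_beat * mean_x
    # Extrapolate the fitted line to the next note's score position.
    return intercept + seconds_per_beat * score_beats[n]


print(predict_next_onset([0, 1, 2, 3, 4], [0.0, 0.5, 1.0, 1.5]))  # 2.0
```

Because only the last `window` notes enter the fit, the prediction tracks gradual tempo changes; the accompaniment note mapped to the next score position would then be scheduled at the returned time.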

Vacuum Prosthetic Penis, System and New Method of Intercourse

Inactive | US20190274869A1 | Improve performance experience | Easy to don | Penis support devices | Non-surgical orthopedic devices | Massage | Vacuum pump

A sexual aid device, system and method comprising: an exterior wall; an interior air bladder; a flange; an outlet; a penile sheath; and a penile port; wherein air is removed from the interior air bladder, causing the penile sheath to expand toward the exterior wall and adhere to and engorge an inserted penis. In an optional saddled embodiment, the inner and outer scrotal supports of the chassis and sheath help hold the device in place while providing a comfortable scrotal opening and enabling gentle massage of the scrotum and perineum through a perineum bump. To remove air from the interior air bladder, a bulb, piston, motorized and/or other vacuum pump may be used.

Owner:PERFECT FIT BRAND

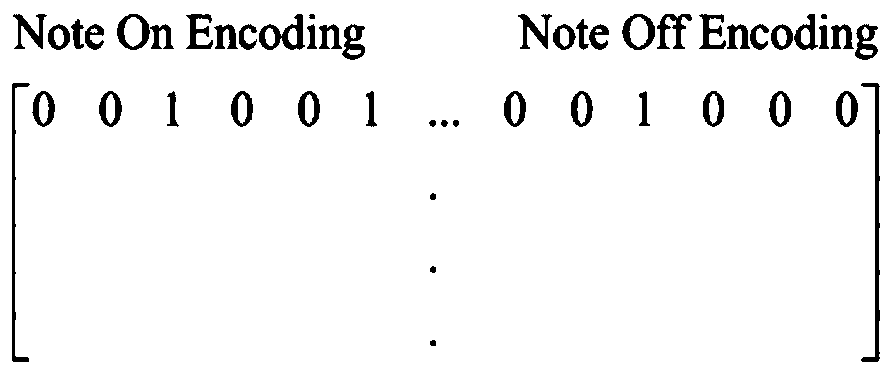

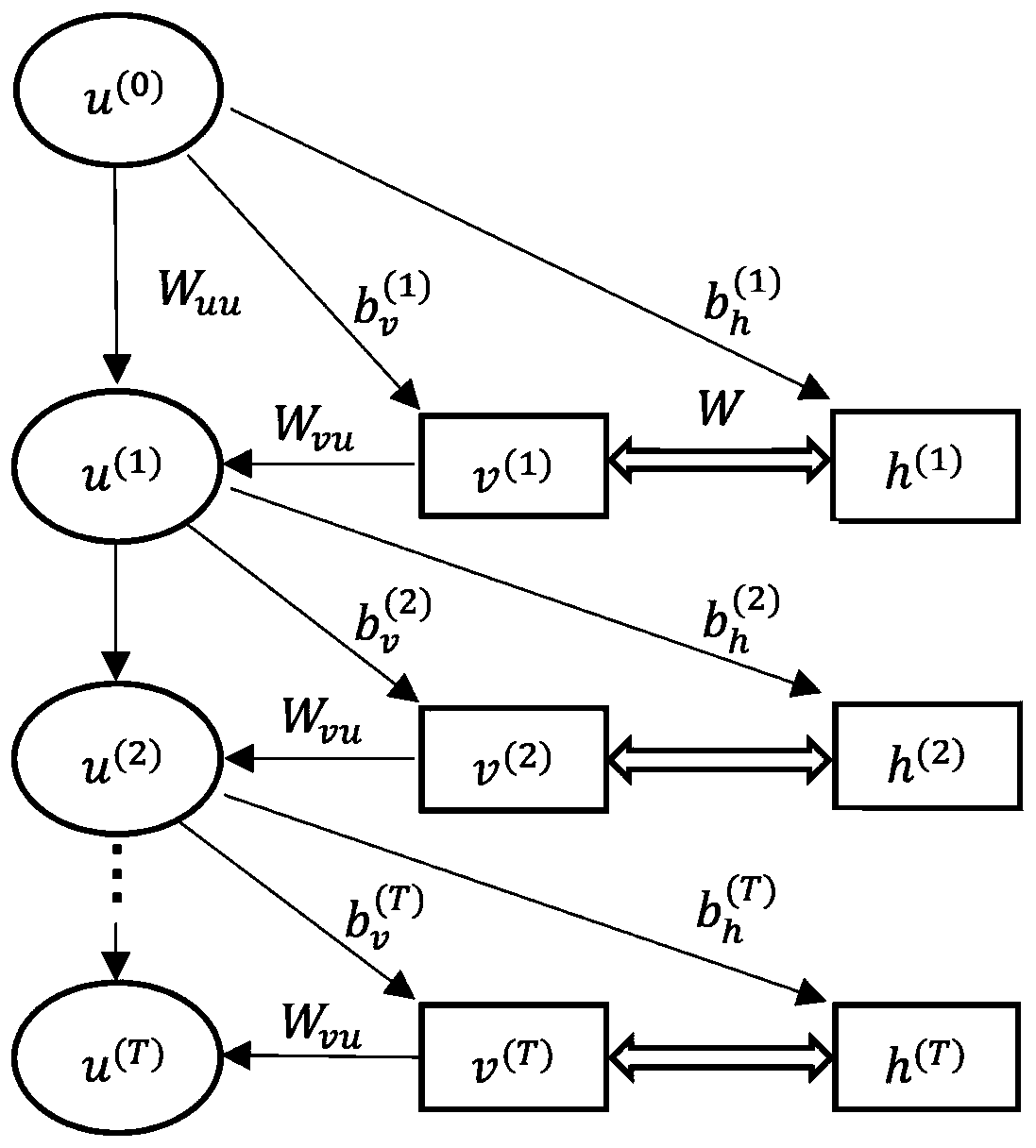

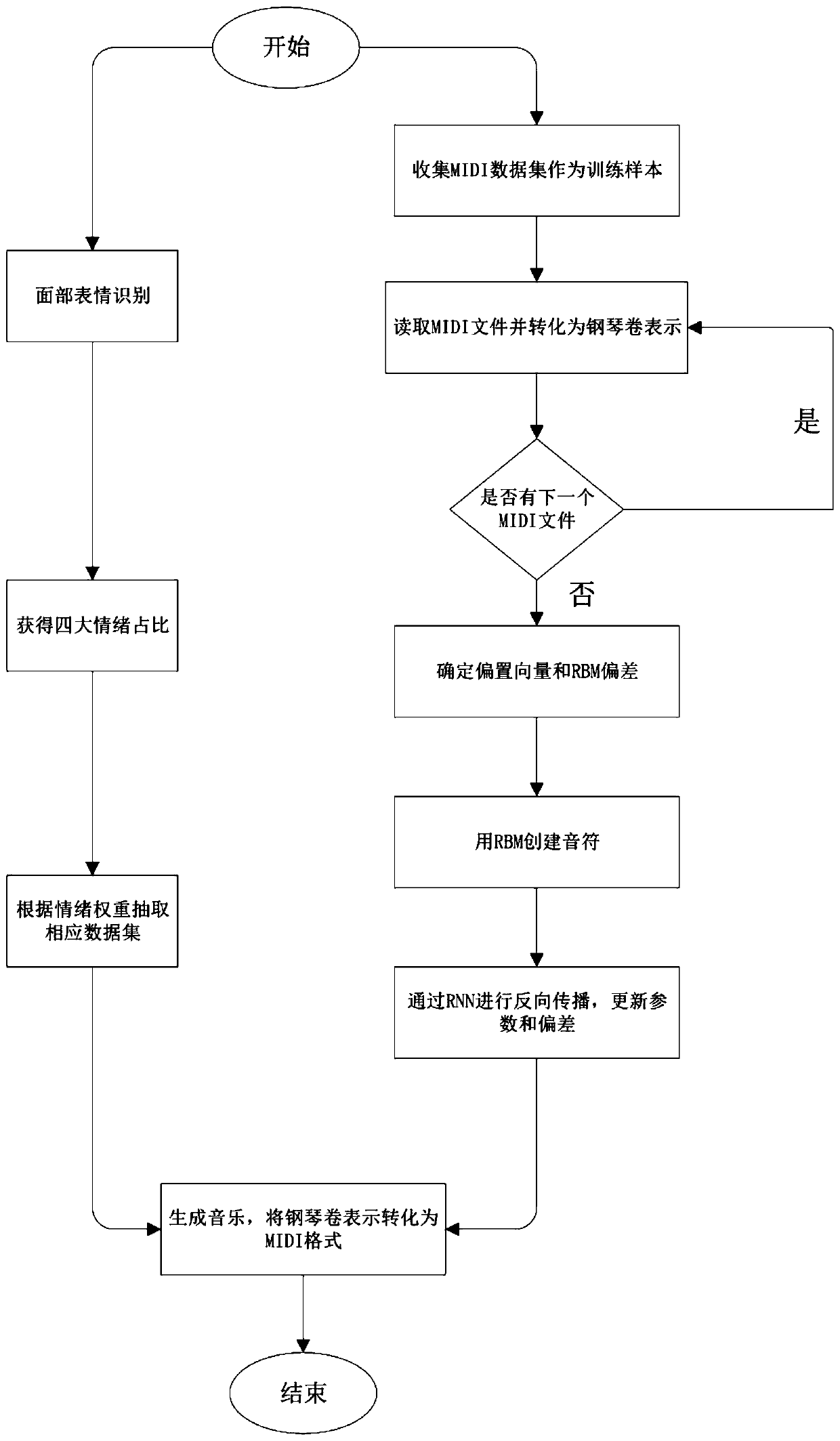

Music generation method based on facial expression recognition and recurrent neural network

Inactive | CN110309349A | Low hardware dependency | Improve performance experience | Acquiring/recognising facial features | Special data processing applications | Pattern recognition | Regulation of emotion

The invention discloses a music generation method based on facial expression recognition and a recurrent neural network. The method comprises the following steps: 1) obtaining music audio data and facial expression data; 2) classifying and labeling the data; 3) processing the audio data and image data; 4) initializing an RNN-RBM neural network; 5) training the RNN-RBM neural network; 6) recognizing the facial expression using VGG19 + dropout + 10crop + softmax; and 7) feeding the recognized emotion information into the trained RNN-RBM network to generate the final music. By combining facial emotion recognition with AI music generation, the method can generate music according to a person's emotion, serving the purpose of emotion regulation, and has high practical application value.

Owner:ZHEJIANG UNIV OF TECH

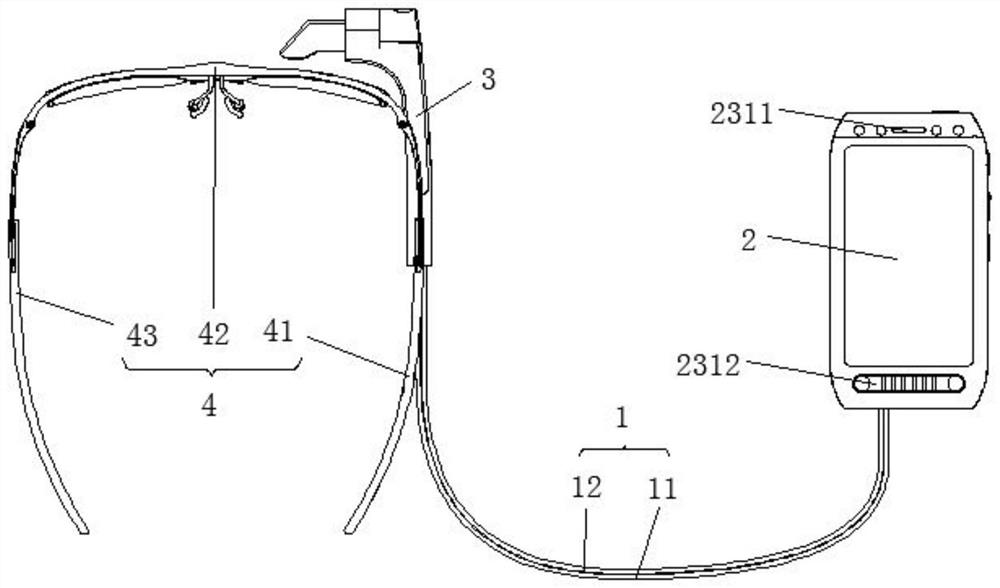

Heat dissipation device and intelligent glasses equipment

Active | CN112739157A | Improve stability | Improve reliability | Modifications by conduction heat transfer | Energy efficient computing | Thermodynamics | Smartglasses

The invention discloses a heat dissipation device comprising a connecting wire, a control radiator and a wearable radiator. The connecting wire is provided with an electric signal wire and an airflow channel line; the control radiator is connected to the connecting wire and is provided with a control accommodating cavity and an air supply device; the wearable radiator is connected to the connecting wire and is provided with a wearable accommodating cavity, which communicates with the control accommodating cavity through the airflow channel line. The invention further discloses smart glasses equipment comprising the heat dissipation device. The surface shell temperatures of both the wearable end and the control end are kept within an acceptable range, so the user experiences only a tolerable temperature rise; local overheating is also prevented, giving the smart glasses good performance. Separating the functions of the control end and the wearable end keeps the heat generated at the wearable end under control: the airflow is routed to the control end through the airflow channel line, which avoids the noise a fan at the wearable end would cause. The heat dissipation device is simple in structure, clearly effective, and suitable for wide adoption.

Owner:BEIJING XLOONG TECH CO LTD

© 2025 PatSnap. All rights reserved.