A Weakly Supervised Temporal Action Localization Method Based on Action Coherence

A localization method based on action coherence, applied in the field of computer vision, that addresses problems such as ignored differences, ignored RGB and optical flow features, and inaccurate temporal labeling, thereby avoiding the associated limitations.


Embodiment

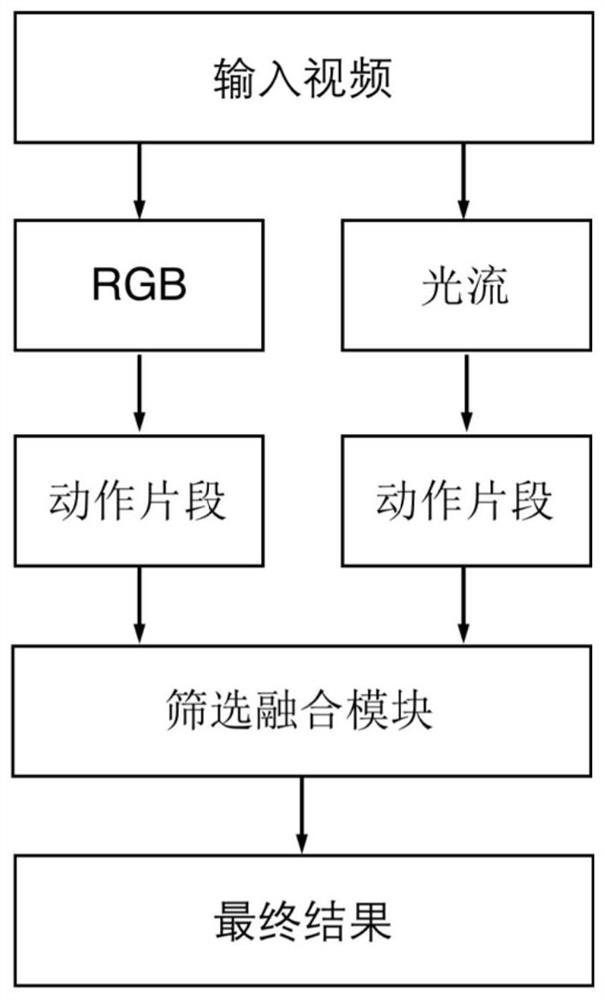

[0069] Referring to Figure 1, a weakly supervised temporal action localization method based on action coherence according to an embodiment of the present invention specifically comprises the following steps:

[0070] Step 1: Process the RGB and optical flow streams separately as follows. Divide the video into non-overlapping segments of 15 frames each. For each segment, randomly select 3 frames as its representative frames, extract features from these 3 frames with a Temporal Segment Network, and take their average as the segment's feature.
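The segmentation and averaging in Step 1 can be sketched in plain Python as below. This is a minimal illustration, not the patent's implementation: the `extract` callable is a hypothetical placeholder for a Temporal Segment Network forward pass on one frame, and dropping trailing frames that do not fill a complete 15-frame segment is an assumption, since the source does not say how a short final segment is handled.

```python
import random
from typing import Callable, List, Optional, Sequence

SEGMENT_LEN = 15         # frames per non-overlapping segment (Step 1)
FRAMES_PER_SEGMENT = 3   # representative frames sampled per segment (Step 1)


def segment_features(
    frames: Sequence,
    extract: Callable[[object], List[float]],
    rng: Optional[random.Random] = None,
) -> List[List[float]]:
    """Return one averaged feature vector per complete 15-frame segment.

    `extract` is a hypothetical stand-in for a per-frame TSN feature extractor.
    Trailing frames that do not fill a whole segment are dropped (assumption).
    """
    rng = rng or random.Random()
    features = []
    for start in range(0, len(frames) - SEGMENT_LEN + 1, SEGMENT_LEN):
        segment = list(frames[start:start + SEGMENT_LEN])
        # Randomly pick 3 distinct frames to represent this segment.
        picks = rng.sample(segment, FRAMES_PER_SEGMENT)
        vectors = [extract(frame) for frame in picks]
        dim = len(vectors[0])
        # Element-wise mean of the 3 frame features is the segment feature.
        features.append([sum(v[d] for v in vectors) / len(vectors) for d in range(dim)])
    return features
```

Because RGB and optical flow are processed separately, the same function would be called once per stream, each time with that stream's frames and its own (hypothetical) extractor.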

[0071] Step 2: Taking RGB as an example (optical flow is processed in the same way), the RGB feature R_s obtained in Step 1 is input to multiple regression networks. Each regression network consists of a 3-layer 1D convolutional neural network and is assigned a segment length P. To avoid overfitting, the first two layers of the regression network consist of dilated convolutional networks with 256 kernels of si...
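The structure of the Step 2 regression networks can be sketched in dependency-free Python as below, with feature maps stored as `[channels][time]` lists. The source text is truncated before the kernel size, so `kernel_size=3`, the dilation rates `(1, 2)`, the ReLU activations, the 1×1 third layer with a single output channel, and the random initialization are all hypothetical choices; only the three 1D convolutional layers, the two dilated layers with 256 kernels, and the one-network-per-segment-length-P arrangement come from the text. The segment lengths used in the test are illustrative only.

```python
import random
from typing import Dict, List, Sequence

# Feature maps are [channels][time] nested lists.
FeatureMap = List[List[float]]


def dilated_conv1d(x: FeatureMap, weights, bias: Sequence[float], dilation: int) -> FeatureMap:
    """1D convolution with zero padding that keeps the time length unchanged.

    x: [C_in][T], weights: [C_out][C_in][K] (K assumed odd), bias: [C_out].
    Output position i reads inputs at i - pad + j * dilation for j in 0..K-1.
    """
    c_in, t = len(x), len(x[0])
    k = len(weights[0][0])
    pad = dilation * (k - 1) // 2
    out = []
    for o, w_o in enumerate(weights):
        row = []
        for i in range(t):
            acc = bias[o]
            for c in range(c_in):
                for j in range(k):
                    pos = i - pad + j * dilation
                    if 0 <= pos < t:  # positions outside the sequence act as zeros
                        acc += w_o[c][j] * x[c][pos]
            row.append(acc)
        out.append(row)
    return out


class RegressionNetwork:
    """Three 1D conv layers; the first two are dilated with `hidden` kernels each."""

    def __init__(self, in_dim, segment_length, hidden=256, kernel_size=3,
                 dilations=(1, 2), out_dim=1, seed=0):
        rng = random.Random(seed)

        def init(c_out, c_in, k):
            return [[[rng.gauss(0.0, 0.02) for _ in range(k)] for _ in range(c_in)]
                    for _ in range(c_out)]

        self.segment_length = segment_length  # the P assigned to this network
        self.layers = [
            (init(hidden, in_dim, kernel_size), [0.0] * hidden, dilations[0]),
            (init(hidden, hidden, kernel_size), [0.0] * hidden, dilations[1]),
            (init(out_dim, hidden, 1), [0.0] * out_dim, 1),  # hypothetical 1x1 output layer
        ]

    def __call__(self, x: FeatureMap) -> FeatureMap:
        for idx, (w, b, d) in enumerate(self.layers):
            x = dilated_conv1d(x, w, b, d)
            if idx < len(self.layers) - 1:  # ReLU between layers (assumption)
                x = [[max(0.0, v) for v in row] for row in x]
        return x


def build_regressors(in_dim, segment_lengths, hidden=256) -> Dict[int, RegressionNetwork]:
    """One regression network per assigned segment length P."""
    return {p: RegressionNetwork(in_dim, p, hidden=hidden, seed=p) for p in segment_lengths}
```

Dilation widens each layer's temporal receptive field without adding parameters, which fits the stated aim of limiting overfitting. The nested loops are only for readability; a practical version would use a deep learning framework's 1D convolution with a `dilation` argument.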
