People flow counting method and system

A people flow counting technology, applied in the field of object counting equipment and computer-readable storage media, that can solve problems such as false detection and repeated counting, posture changes, environmental occlusion or scene asymmetry, and a large amount of calculation.

Examples

Embodiment 1

[0083] An embodiment of the present invention provides an object tracking method, and the method includes:

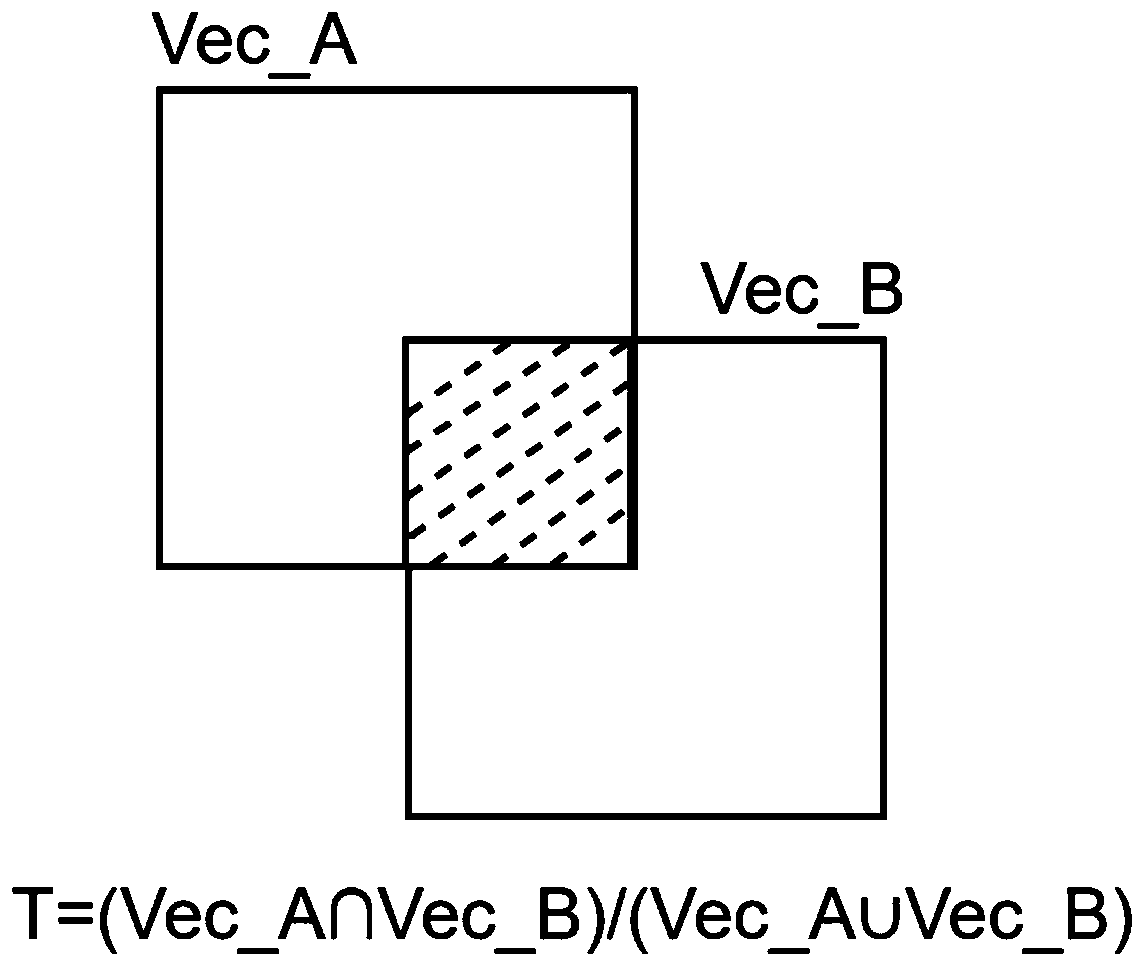

[0084] S1) Perform object detection on an image set having a frame sequence, proceeding in frame order. When a first image containing a first object set is detected in the image set, obtain the first local vector corresponding to the position of the first object set relative to the first image; then, for a second image in the image set containing a second object set, obtain the second local vector corresponding to the position of the second object set relative to the second image, wherein the frame sequence number of the first image is smaller than that of the second image, and the first object set contains at least one object;

[0085] S2) Obtain a set of tracking values according to the first local vector and the second local vector in combination with a preset tracking mapping relationship, and then obtain the set of tracking ...
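Step S1 can be illustrated with a minimal sketch. The source does not define how a local vector is computed, so this example assumes it is the centre of a detection box scaled by the image size; the `Box` type and the function names are hypothetical and are not taken from the patent. The preset tracking mapping relationship of step S2 is not reproduced here because the source text is truncated.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Box:
    """Axis-aligned detection box in pixel coordinates (hypothetical type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def local_vector(box: Box, image_width: int, image_height: int) -> Tuple[float, float]:
    """Assumed definition: box centre expressed relative to the image size."""
    cx = (box.x_min + box.x_max) / 2.0
    cy = (box.y_min + box.y_max) / 2.0
    return (cx / image_width, cy / image_height)


def local_vectors(boxes: List[Box], image_width: int, image_height: int) -> List[Tuple[float, float]]:
    """One local vector per detected object in a single image."""
    return [local_vector(b, image_width, image_height) for b in boxes]


# First image (earlier frame) and second image (later frame), both 640x480.
first = local_vectors([Box(100, 200, 140, 280)], 640, 480)
second = local_vectors([Box(110, 210, 150, 290)], 640, 480)
```

Expressing positions relative to the image rather than in raw pixels is one possible design choice; it keeps the vectors comparable across cameras with different resolutions.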

Embodiment 2

[0137] Based on the object tracking method in Embodiment 1, the present invention also provides a method for counting objects by using the object tracking method, the method comprising:

[0138] S1) Acquire a tracking state set, wherein the tracking state set contains the tracking state of at least one object, a frame-sequence image set corresponding to the tracking state, and, for each frame image in the frame-sequence image set, the local vector corresponding to each object;

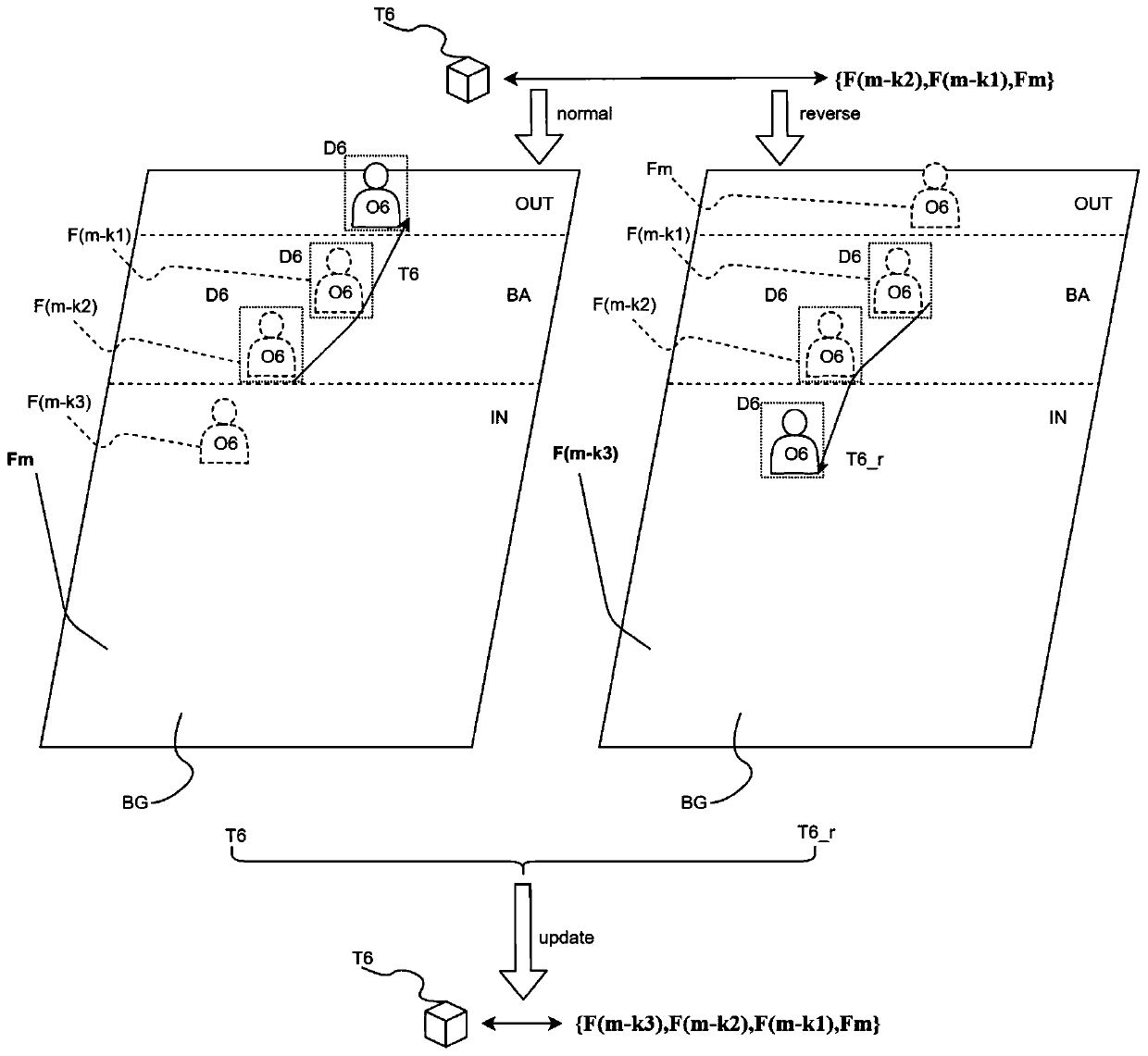

[0139] S2) Select a partial image area of each frame image in the frame-sequence image set as a state identification area and, following the frame order of the frame-sequence image set, compare the position of every local vector of each object in the tracking state set against the state identification area, obtaining an ordered object position information set;

[0140] S3) according to ...
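Step S2 of this embodiment can be sketched as follows. This is only an illustration under stated assumptions: the state identification area is assumed to be an axis-aligned rectangle in the same relative coordinates as the local vectors, and the position information is reduced to an inside/outside flag per frame. The names `in_region` and `ordered_positions` are hypothetical. Step S3 is not sketched because the source text is truncated.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, float]
# Assumed region layout: (x_min, y_min, x_max, y_max) in relative coordinates.
Region = Tuple[float, float, float, float]


def in_region(v: Vector, region: Region) -> bool:
    """True if the local vector lies inside the state identification area."""
    x_min, y_min, x_max, y_max = region
    return x_min <= v[0] <= x_max and y_min <= v[1] <= y_max


def ordered_positions(track: Sequence[Tuple[int, Vector]], region: Region) -> List[Tuple[int, bool]]:
    """Return (frame_number, inside_region) pairs sorted by frame number."""
    by_frame = sorted(track, key=lambda item: item[0])
    return [(frame, in_region(v, region)) for frame, v in by_frame]


# A horizontal band across the middle of the image as the identification area.
region = (0.0, 0.4, 1.0, 0.6)
# One object's local vectors, deliberately given out of frame order.
track = [(3, (0.5, 0.7)), (1, (0.5, 0.3)), (2, (0.5, 0.5))]
positions = ordered_positions(track, region)
```

Here the object is above the band in frame 1, inside it in frame 2, and below it in frame 3, so the ordered result records one passage through the area.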

Embodiment 3

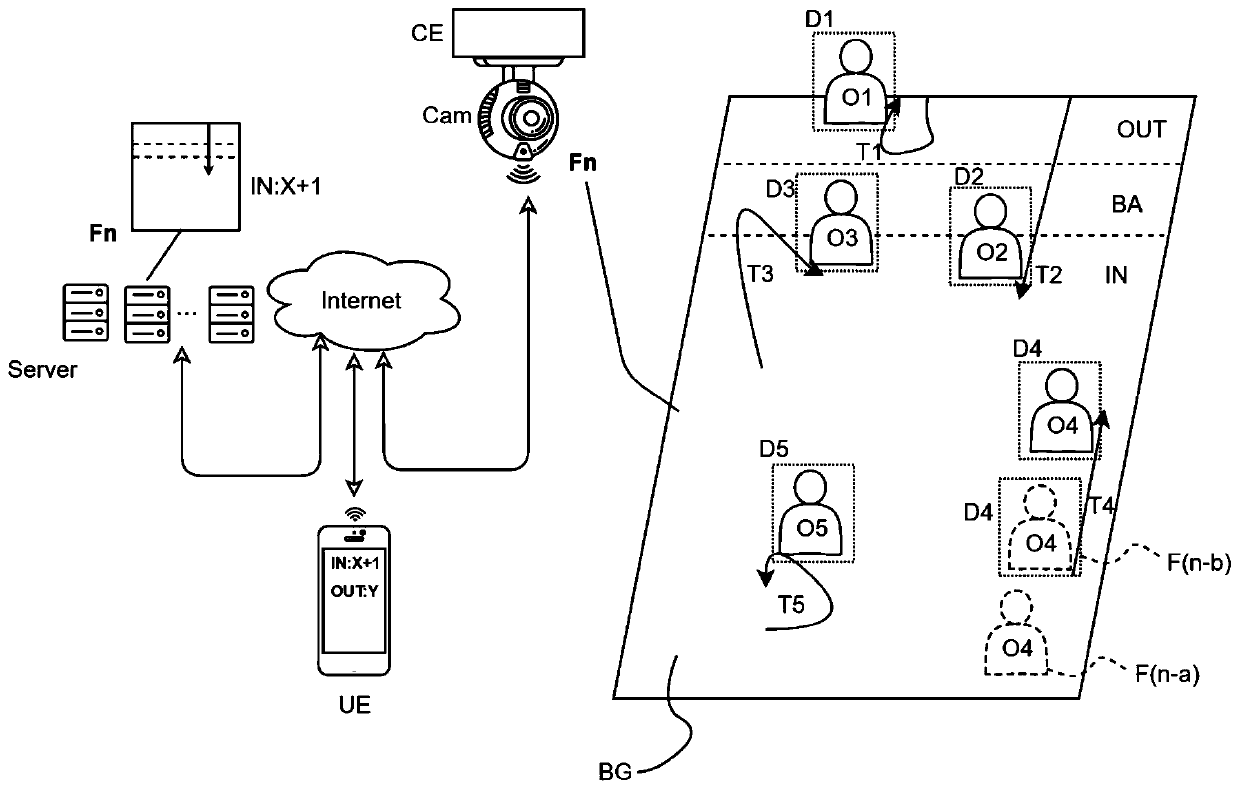

[0148] Based on Embodiments 1 and 2, and as shown in Figure 1 and Figure 6, an embodiment of the present invention provides a system for counting objects by using the object tracking method. The system includes one or more servers and one or more detection ends; the server and the detection end exchange encrypted data over the Internet;

[0149] Figure 6 is a real image of an in-store shopping area, produced by the people flow counting system built according to the present invention. The detection end is used to form the tracking state set data. The detection end has a charge-coupled device for image capture and a processor. The processor and the charge-coupled device can be in the same device, such as an intelligent detection camera (with a certain amount of computing power); alternatively, the processor can be located in an independent detection end server while the charge-coupled device is located in the camera, and the camera transmits image data to the detection end se...
