Image fusion method based on brightness adaptation and saliency detection

An image fusion method based on brightness adaptation and saliency detection, applied in the field of image fusion. It addresses problems such as poor fusion quality and unsatisfactory image detail, and improves the fusion result.

Embodiment 1

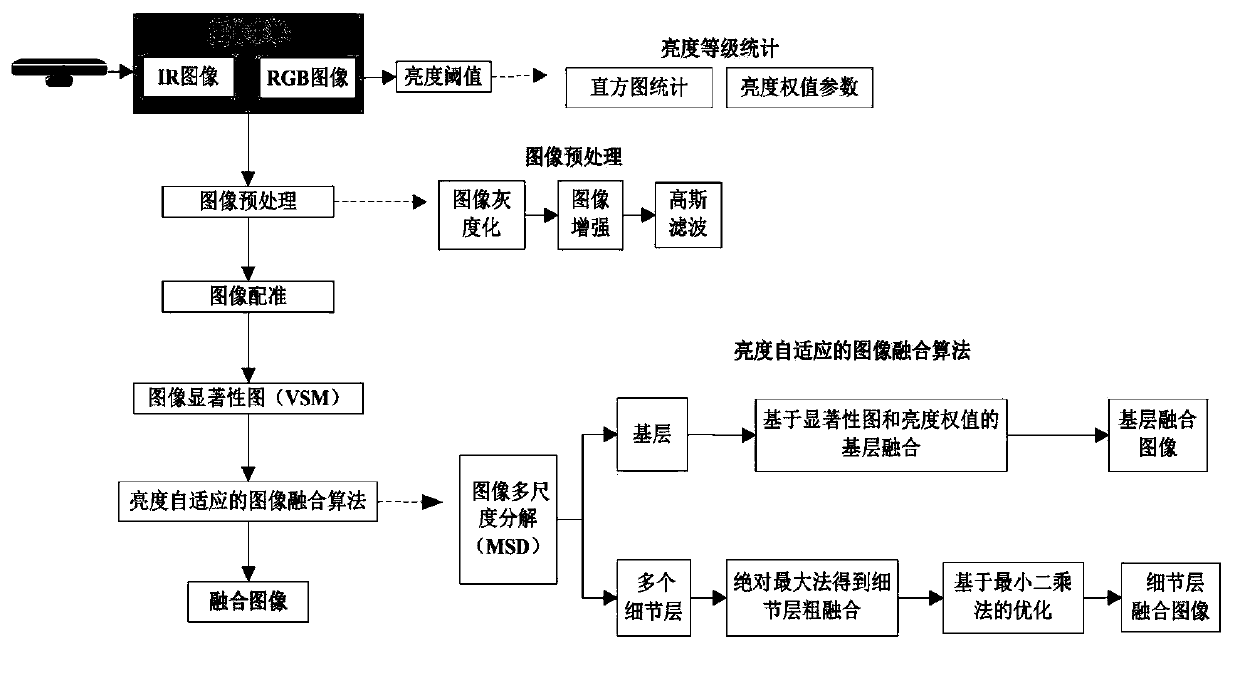

[0052] Because brightness information influences scene recognition, the present invention defines image brightness levels and classifies images accordingly, which saves computing time when image fusion is not required. Based on the relationship between image characteristics and ambient brightness, a brightness weight function is designed to optimize the fusion of the base layer. At the same time, the saliency feature map of the image is used to preserve the overall contrast information of the base layer, and the fusion of the detail layer is optimized by the least squares method.
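The brightness classification and the brightness-weighted base-layer fusion described above can be illustrated with a minimal Python sketch. The excerpt gives neither the brightness thresholds nor the form of the weight function, so everything named here is an assumption made for illustration: the thresholds `DARK_MAX` and `BRIGHT_MIN`, the linear ramp in `visible_weight`, and the choice to skip fusion for bright scenes. Images are plain nested lists to keep the sketch dependency-free.

```python
from statistics import fmean

# Hypothetical thresholds on the mean gray level (0-255).
# The source does not state actual values.
DARK_MAX = 60.0
BRIGHT_MIN = 170.0


def mean_brightness(gray):
    """Mean gray level of a 2-D image stored as a list of rows."""
    return fmean(p for row in gray for p in row)


def brightness_level(gray):
    """Classify an image as 'dark', 'medium' or 'bright' (assumed levels)."""
    m = mean_brightness(gray)
    if m < DARK_MAX:
        return "dark"
    if m >= BRIGHT_MIN:
        return "bright"
    return "medium"


def visible_weight(gray):
    """Assumed weight function: linear ramp from 0 at DARK_MAX to 1 at
    BRIGHT_MIN, clamped to [0, 1]. The patent's function may differ."""
    w = (mean_brightness(gray) - DARK_MAX) / (BRIGHT_MIN - DARK_MAX)
    return min(1.0, max(0.0, w))


def fuse_base(visible_base, infrared_base, visible_gray):
    """Blend two base layers using the brightness weight.

    Assumption: a bright scene needs no fusion, so the visible base layer
    is returned unchanged and the per-pixel blend is skipped.
    """
    if brightness_level(visible_gray) == "bright":
        return visible_base
    w = visible_weight(visible_gray)
    return [
        [w * v + (1.0 - w) * i for v, i in zip(v_row, i_row)]
        for v_row, i_row in zip(visible_base, infrared_base)
    ]
```

Under these assumptions, a darker visible image shifts the base layer toward the infrared image, and the early return is where the computing time would be saved.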

[0053] As shown in Figure 1, an image fusion method based on brightness adaptation and saliency detection includes the following steps:

[0054] S1: collect infrared images and visible light images and preprocess each separately, where the preprocessing includes grayscale conversion, image enhancement, and filtering and denoising;

[0055]...
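The preprocessing in step S1 can be sketched as below. The source names the three stages (grayscale conversion, enhancement, filtering and denoising) but not the specific operators, so the choices here are assumptions: BT.601 luma weights for grayscale conversion, a min-max contrast stretch for enhancement, and a 3x3 median filter for denoising. The function names are hypothetical, and images are nested lists to keep the sketch dependency-free.

```python
from statistics import median


def to_gray(rgb):
    """Convert rows of (r, g, b) tuples to gray using ITU-R BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb]


def stretch_contrast(gray):
    """Assumed enhancement step: linearly stretch gray levels to 0-255."""
    lo = min(min(row) for row in gray)
    hi = max(max(row) for row in gray)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in gray]
    scale = 255.0 / (hi - lo)
    return [[(p - lo) * scale for p in row] for row in gray]


def median_filter3(gray):
    """Assumed denoising step: 3x3 median filter with replicated borders."""
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                gray[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            row.append(median(window))
        out.append(row)
    return out


def preprocess(image, is_rgb):
    """Run grayscale conversion, enhancement, then denoising on one image."""
    if is_rgb:
        gray = to_gray(image)
    else:
        gray = [[float(p) for p in row] for row in image]
    return median_filter3(stretch_contrast(gray))
```

In this sketch the same `preprocess` function would be applied to the visible image (with `is_rgb=True`) and to the single-channel infrared image (with `is_rgb=False`), so that both inputs reach the later steps in the same gray-level range.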
