Training method for bimodal emotion recognition model and bimodal emotion recognition method

A technology in the fields of emotion recognition and speech emotion recognition, applied to the training of dual-modal emotion recognition models and to dual-modal emotion recognition. It addresses the low accuracy of emotion recognition, increases training speed, and achieves high recognition accuracy.



Examples

Embodiment 1

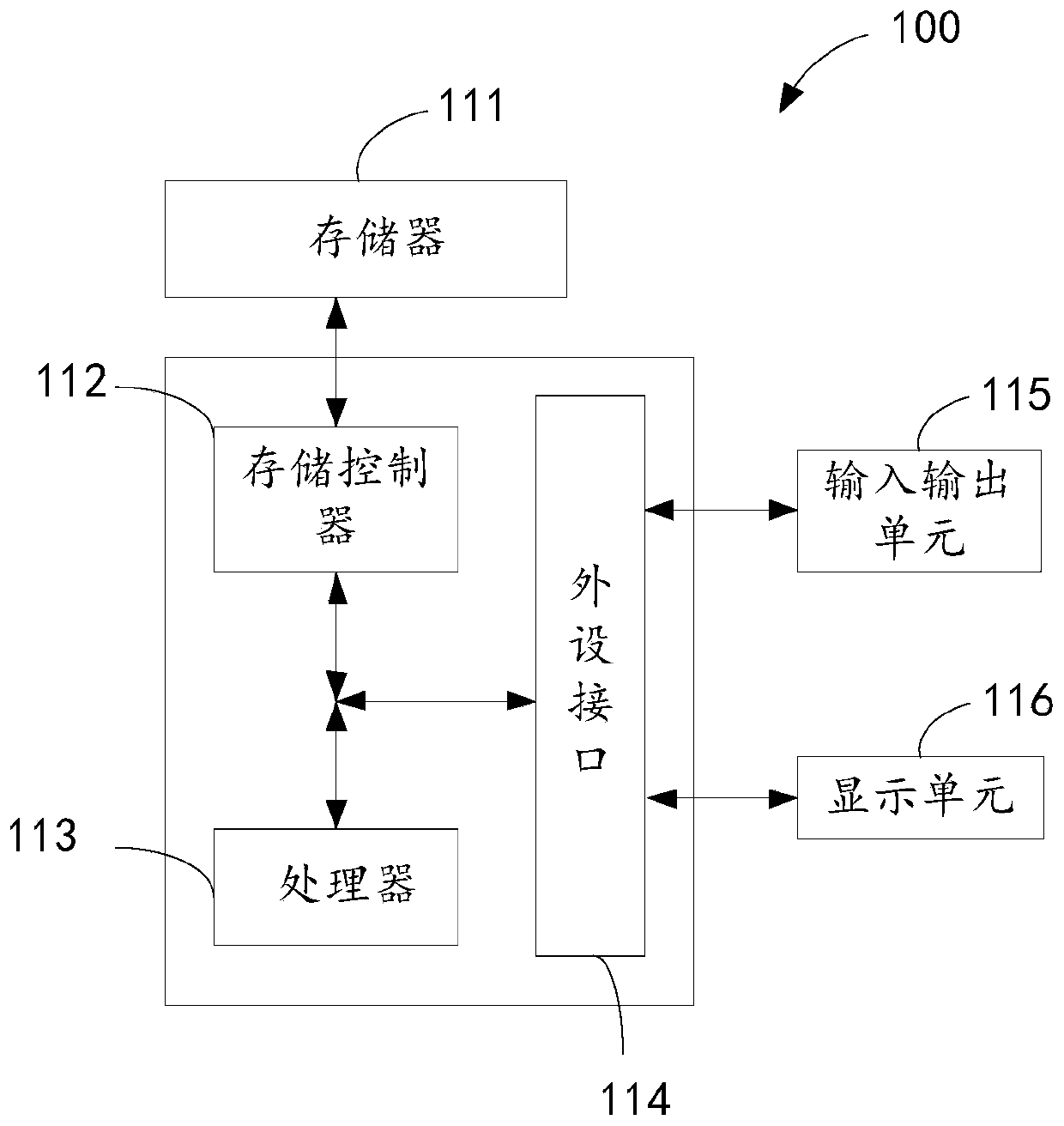

[0076] To facilitate understanding of this embodiment, an electronic device that executes the dual-modal emotion recognition model training method or the dual-modal emotion recognition method disclosed in the embodiments of the present application is first described in detail.

[0077] Figure 1 shows a block diagram of the electronic device. The electronic device 100 may include a memory 111, a storage controller 112, a processor 113, a peripheral interface 114, an input/output unit 115, and a display unit 116. Those of ordinary skill in the art will understand that the structure shown in Figure 1 is merely illustrative and does not limit the structure of the electronic device 100. For example, the electronic device 100 may include more or fewer components than shown in Figure 1, or may have a configuration different from that shown in Figure 1.

[0078] The memory 111, storage controller 112, processor 113, peripheral interface 114...

Embodiment 2

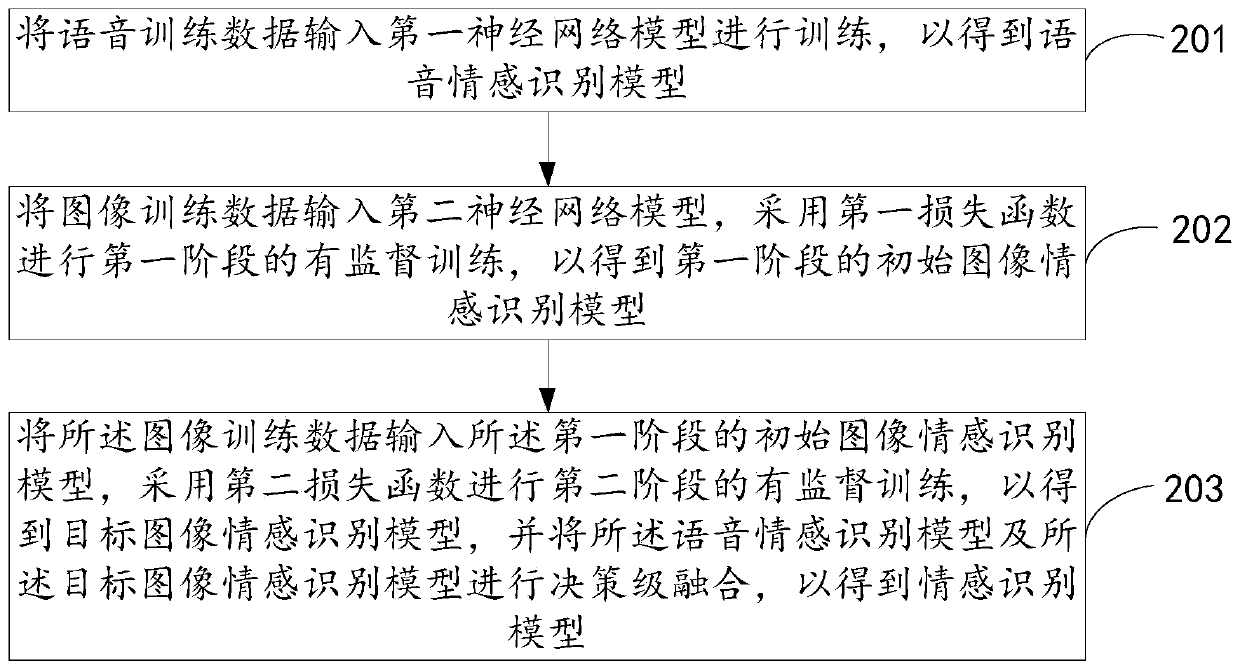

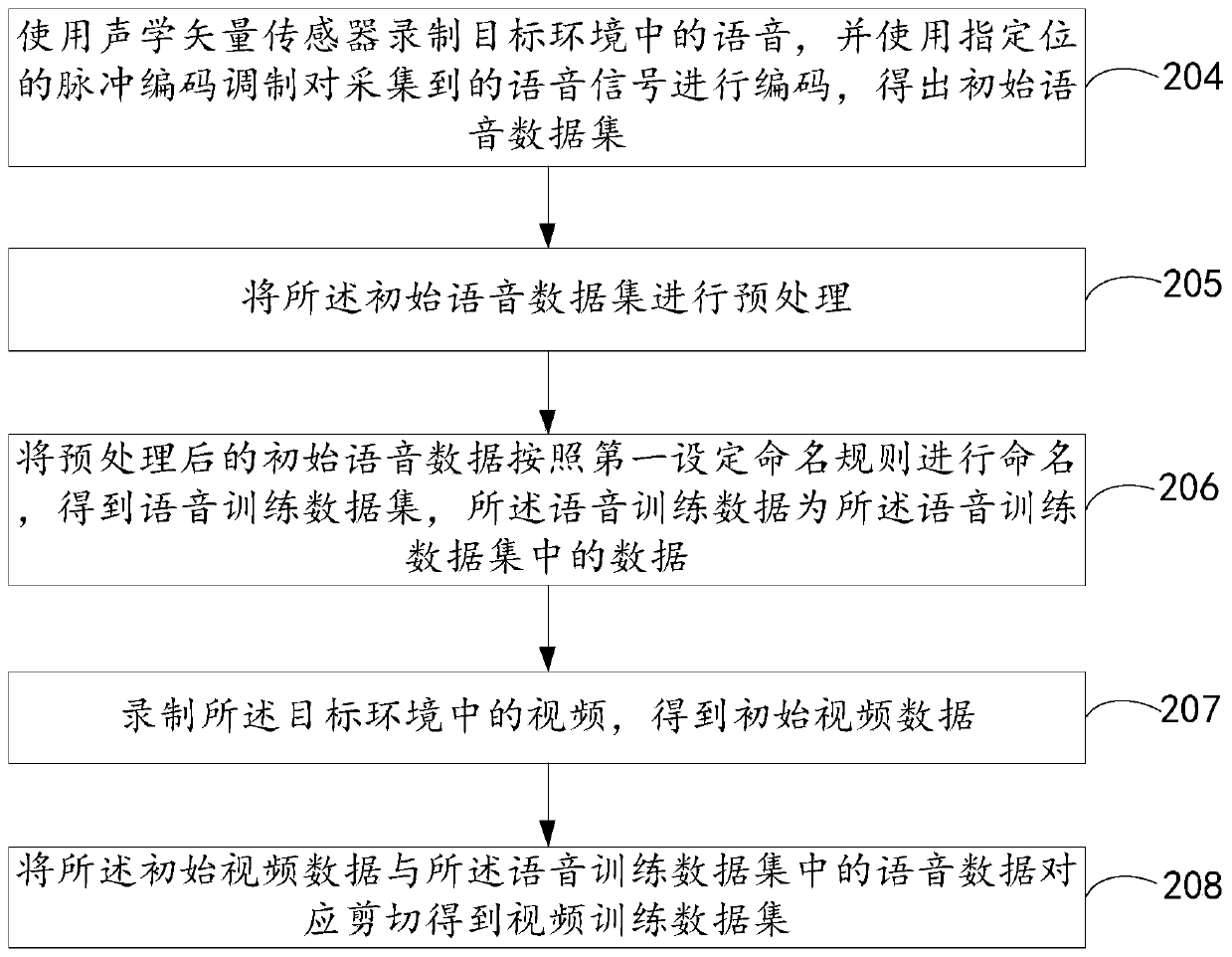

[0086] Refer to Figure 2, which is a flowchart of a method for training a dual-modal emotion recognition model provided in an embodiment of the present application. The specific process shown in Figure 2 is described in detail below.

[0087] Step 201: input speech training data into the first neural network model for training to obtain a speech emotion recognition model.

[0088] Optionally, step 201 may include: inputting the speech training data into the first neural network model and performing supervised training with a joint loss function composed of an affinity loss function (Affinity loss) and a focal loss function (Focal loss), to obtain the speech emotion recognition model.

[0089] To address confusion between emotion categories and class imbalance in emotion data, the speech emotion recognition model adopts a metric-learning approach and uses the combination of Affinity loss and Focal loss as its loss function. Compared with the existing method that only ...
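The joint objective described in [0088] and [0089] can be sketched as follows. This is a minimal NumPy sketch under stated assumptions, not the patent's implementation: the excerpt gives no formulas, hyperparameters, or weighting, so the sketch uses the focal loss as defined by Lin et al. (2017) and the affinity loss as defined by Hayat et al. (2019), i.e. a max-margin hinge on Gaussian affinities between embeddings and learnable class centers, plus a diversity regularizer on the centers. The function names, the hyperparameters `gamma`, `alpha`, `sigma`, `margin`, the weight `beta`, and the assumption that the network exposes both class logits and an embedding vector are all hypothetical.

```python
import numpy as np


def softmax(logits):
    # Numerically stable row-wise softmax over class logits, shape (N, C).
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def focal_loss(logits, labels, gamma=2.0, alpha=None):
    # Focal loss (Lin et al., 2017): -alpha_t * (1 - p_t)^gamma * log(p_t).
    # (1 - p_t)^gamma down-weights easy, confidently correct samples, which
    # counteracts class imbalance. alpha: optional per-class weights (assumed).
    p = softmax(logits)
    idx = np.arange(len(labels))
    p_t = np.clip(p[idx, labels], 1e-12, 1.0)
    w = 1.0 if alpha is None else np.asarray(alpha, dtype=float)[labels]
    return float(np.mean(-w * (1.0 - p_t) ** gamma * np.log(p_t)))


def affinity_loss(features, labels, centers, sigma=1.0, margin=0.5):
    # Affinity loss as in Hayat et al. (2019), sketched with assumed defaults.
    # features: (N, D) embeddings; centers: (C, D) learnable class centers.
    n = len(labels)
    # Squared Euclidean distance of every embedding to every center: (N, C).
    d2 = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    aff = np.exp(-d2 / sigma)  # Gaussian affinity in (0, 1]
    true_aff = aff[np.arange(n), labels][:, None]
    # Hinge: affinity to each wrong center must trail the true one by `margin`.
    hinge = np.maximum(0.0, margin + aff - true_aff)
    hinge[np.arange(n), labels] = 0.0  # exclude the j == y_i term
    l_mm = hinge.sum(axis=1).mean()
    # Diversity regularizer: keep pairwise center distances near their mean.
    cd2 = ((centers[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    pair = cd2[np.triu_indices(len(centers), k=1)]
    reg = np.mean((pair - pair.mean()) ** 2) if pair.size else 0.0
    return float(l_mm + reg)


def joint_loss(logits, features, labels, centers, beta=1.0):
    # Hypothetical weighted sum; the excerpt does not state the weighting.
    return affinity_loss(features, labels, centers) + beta * focal_loss(logits, labels)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, c, d = 8, 4, 16  # hypothetical batch size, emotion classes, embedding size
    logits = rng.normal(size=(n, c))
    features = rng.normal(size=(n, d))
    centers = rng.normal(size=(c, d))
    labels = rng.integers(0, c, size=n)
    print(joint_loss(logits, features, labels, centers))
```

In an actual training loop these terms would be written in an automatic-differentiation framework so that gradients reach both the network weights and the class centers; the NumPy version above only evaluates the loss value. Setting `gamma=0` with no `alpha` reduces the focal term to ordinary cross-entropy, which is a convenient sanity check.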

Embodiment 3

[0136] Based on the same inventive concept, the embodiments of the present application also provide an emotion recognition model training device corresponding to the emotion recognition model training method. Since the device solves the problem on a principle similar to that of the above emotion recognition model training method, the implementation of the device may refer to the implementation of the method, and repeated descriptions are omitted.

[0137] Refer to Figure 4, which is a schematic diagram of the functional modules of the emotion recognition model training device provided in an embodiment of the present application. Each module of the device is used to execute the corresponding step in the above method embodiment. The emotion recognition model training device includes: a first training module 301, a second training modul...

