System and method for tandem feature extraction for general speech tasks in speech signals

A tandem (series) feature extraction technology for speech signals, applied in voice analysis, voice recognition, instruments, etc. It addresses the problems that emotion recognition task models are not universal, that no universal solution exists, and that integration accuracy is low. Its effects are to meet the need for rich and diverse emotions, to provide high-quality recorded speech, and to give a strong voice effect.



Embodiment Construction

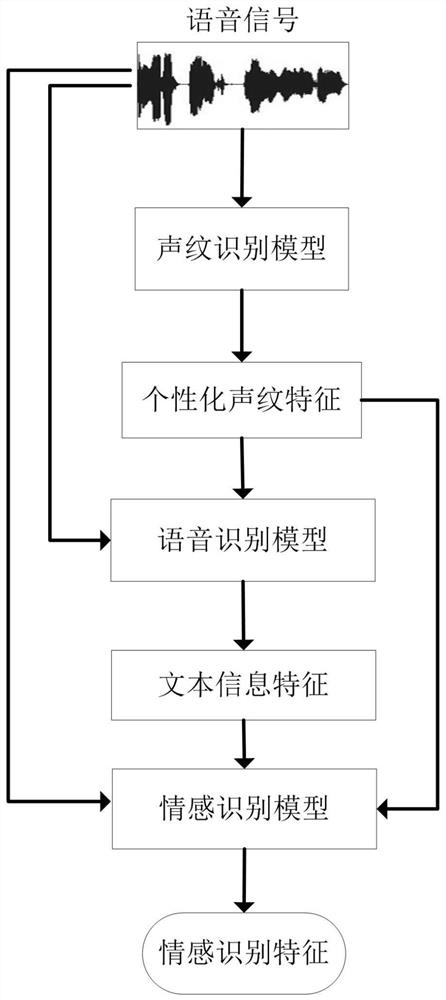

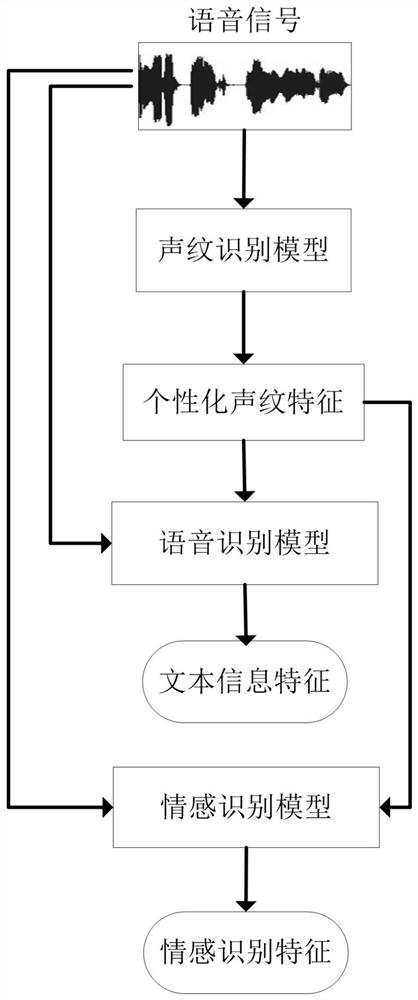

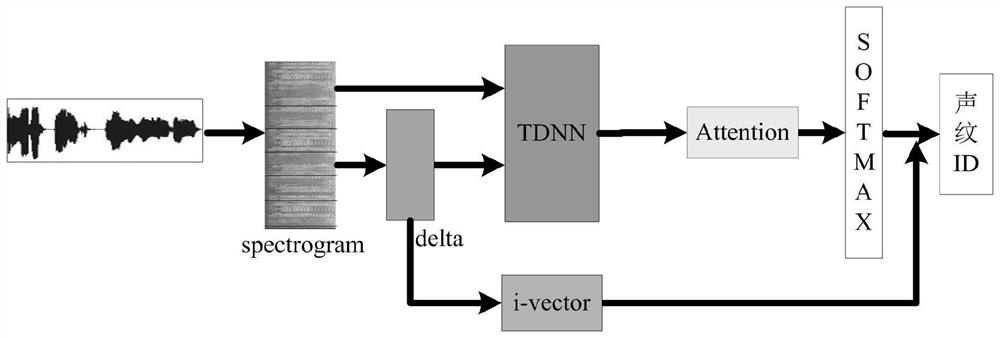

[0052] The present invention is further described below with reference to the accompanying drawings:

[0053] The system of the present invention mainly comprises an emotional corpus, a speech preprocessing model, and a speech feature extraction model. The emotional corpus is a collection of real emotions constructed in natural-language form; when the database is built, a statistical method is introduced on top of the traditional greedy algorithm. After the emotional corpus is established, speech preprocessing is performed.
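The three components named in [0053] can be pictured as a simple data flow: corpus entries pass through preprocessing and then through feature extraction. The following Python sketch is illustrative only. The text above does not specify the preprocessing steps or the features, so pre-emphasis, framing, and Hamming windowing are assumed here as common preprocessing steps, and short-time energy and zero-crossing rate are assumed as stand-in features. The names `Utterance`, `preprocess`, `extract_features`, and `run_pipeline` and all parameter values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Utterance:
    """One corpus entry: raw samples plus an emotion label (hypothetical layout)."""
    samples: List[float]
    emotion: str


def preprocess(samples: List[float], frame_len: int = 400, hop: int = 160,
               alpha: float = 0.97) -> List[List[float]]:
    """Assumed preprocessing: pre-emphasis, framing, Hamming windowing."""
    if not samples:
        return []
    # Pre-emphasis: y[n] = x[n] - alpha * x[n-1]
    emphasized = [samples[0]] + [
        samples[n] - alpha * samples[n - 1] for n in range(1, len(samples))
    ]
    # Hamming window of length frame_len
    window = [0.54 - 0.46 * math.cos(2 * math.pi * i / (frame_len - 1))
              for i in range(frame_len)]
    frames = []
    # Only full frames are kept; a trailing partial frame is dropped
    for start in range(0, len(emphasized) - frame_len + 1, hop):
        frame = emphasized[start:start + frame_len]
        frames.append([s * w for s, w in zip(frame, window)])
    return frames


def extract_features(frames: List[List[float]]) -> List[List[float]]:
    """Assumed per-frame features: [short-time energy, zero-crossing rate]."""
    features = []
    for frame in frames:
        energy = sum(s * s for s in frame) / len(frame)
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
        zcr = crossings / (len(frame) - 1)
        features.append([energy, zcr])
    return features


def run_pipeline(corpus: List[Utterance]) -> List[Tuple[str, List[List[float]]]]:
    """Corpus -> preprocessing -> feature extraction, one result per utterance."""
    return [(u.emotion, extract_features(preprocess(u.samples))) for u in corpus]
```

With the assumed defaults (400-sample frames and a 160-sample hop, i.e. 25 ms and 10 ms at a 16 kHz sampling rate), each utterance yields one two-element feature vector per full frame. Any real feature set would replace `extract_features` without changing the overall flow.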

[0054] The emotional corpus is characterized by a large data scale, accurate emotional expression, and high-quality recorded speech. It covers multiple age groups and high-level speech emotion, and the speech it contains is dense and highly recognizable, which to a certain extent meets the need for rich and diverse emotions. Depending on the collection method, emotional speech can be divided into natural speech, induced speech, and performance speech. This databa...
