Robot vision calibration method based on a perspective transformation model

A robot vision calibration technology based on perspective transformation, applied in the field of robotics. It addresses calibration processes that are cumbersome and complex and that cannot meet the precision requirements of industrial robots, and it achieves a simple calibration process, high calibration accuracy, and reduced cost.


Embodiment

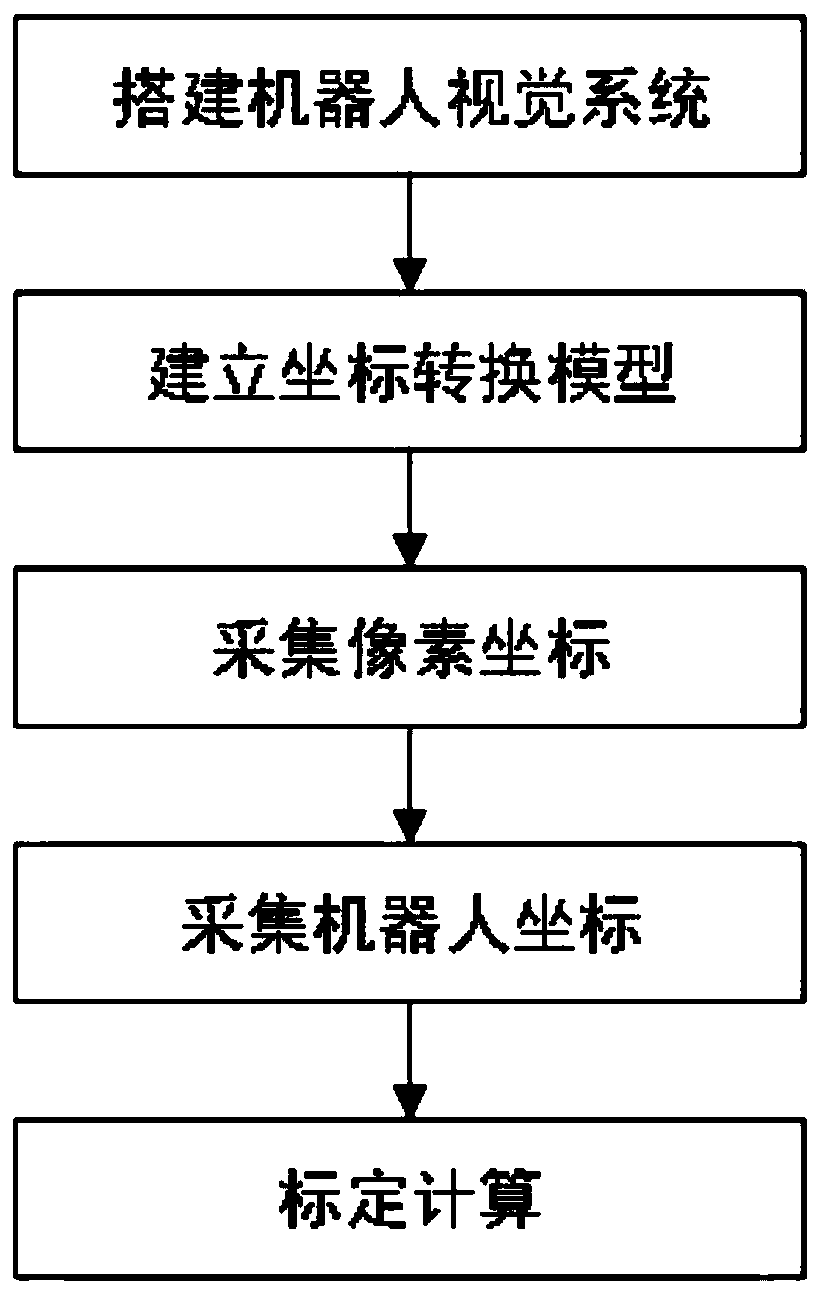

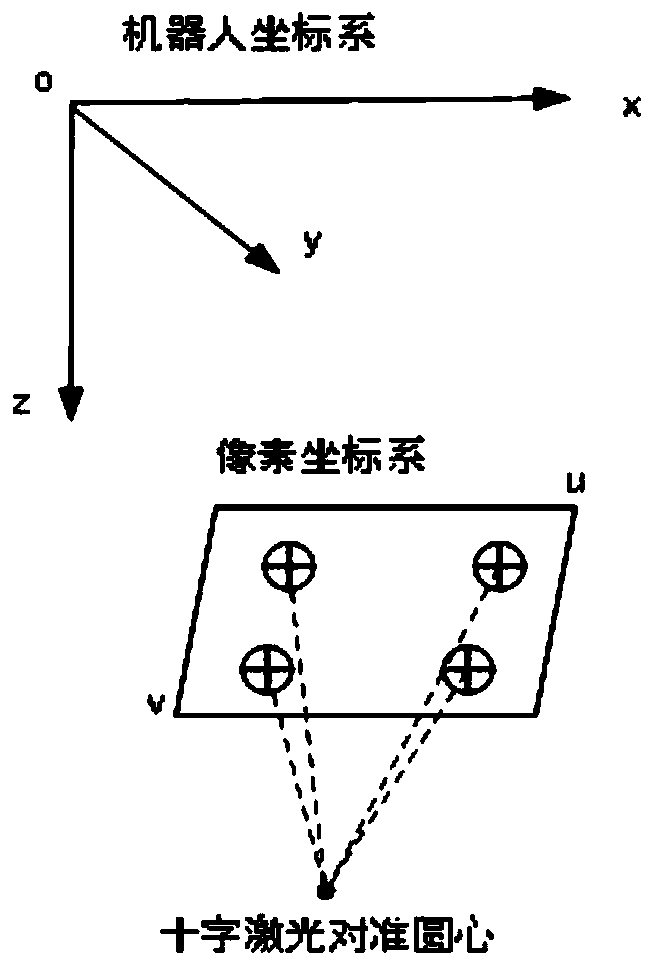

[0040] A robot vision calibration method based on a perspective transformation model. Following the principle of perspective transformation between plane coordinate systems, the relationship between the pixel coordinate system and the robot coordinate system is established. Four non-collinear mark points are used to collect four pairs of pixel coordinates and robot coordinates, from which the parameters of the coordinate transformation model are calibrated and calculated for vision-guided robot positioning. The process is shown in Figure 1 and specifically includes the following steps:
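The excerpt does not give the model's equations, so the following is a minimal sketch that assumes the standard plane-to-plane perspective transformation (a homography with eight unknowns, normalized so that h33 = 1): X = (h11·u + h12·v + h13) / (h31·u + h32·v + 1) and Y = (h21·u + h22·v + h23) / (h31·u + h32·v + 1), where (u, v) is a pixel coordinate and (X, Y) the corresponding robot-plane coordinate. Each of the four mark points contributes two linear equations, which gives an 8×8 system. The function names `calibrate_homography` and `pixel_to_robot` are hypothetical, and the dependency-free solver is only illustrative; OpenCV's `cv2.getPerspectiveTransform` solves the same four-point problem.

```python
from itertools import combinations


def _no_three_collinear(pts, eps=1e-9):
    """True if no three of the points lie on one line.

    A unique four-point homography requires that no three points be collinear.
    """
    for (x1, y1), (x2, y2), (x3, y3) in combinations(pts, 3):
        cross = (x2 - x1) * (y3 - y1) - (y2 - y1) * (x3 - x1)
        if abs(cross) < eps:
            return False
    return True


def _solve_linear(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def calibrate_homography(pixel_pts, robot_pts):
    """Hypothetical helper: 3x3 matrix H (h33 = 1) mapping pixel (u, v) to robot (X, Y)."""
    if len(pixel_pts) != 4 or len(robot_pts) != 4:
        raise ValueError("exactly four point pairs are required")
    if not _no_three_collinear(pixel_pts) or not _no_three_collinear(robot_pts):
        raise ValueError("no three mark points may be collinear")
    a, b = [], []
    # Unknowns ordered as [h11, h12, h13, h21, h22, h23, h31, h32].
    for (u, v), (x, y) in zip(pixel_pts, robot_pts):
        a.append([u, v, 1, 0, 0, 0, -u * x, -v * x])
        b.append(x)
        a.append([0, 0, 0, u, v, 1, -u * y, -v * y])
        b.append(y)
    h = _solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def pixel_to_robot(H, u, v):
    """Apply the perspective transformation to one pixel coordinate."""
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    x = (H[0][0] * u + H[0][1] * v + H[0][2]) / w
    y = (H[1][0] * u + H[1][1] * v + H[1][2]) / w
    return x, y
```

For example, calibrating with pixel corners (0, 0), (10, 0), (10, 10), (0, 10) against hypothetical robot points (3, -1), (23, -1), (23, 4), (3, 4) and then calling `pixel_to_robot(H, 5, 5)` returns approximately (13.0, 1.5). With exactly four point pairs the system has no redundancy, so any error in a mark point's measured coordinates passes directly into H.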

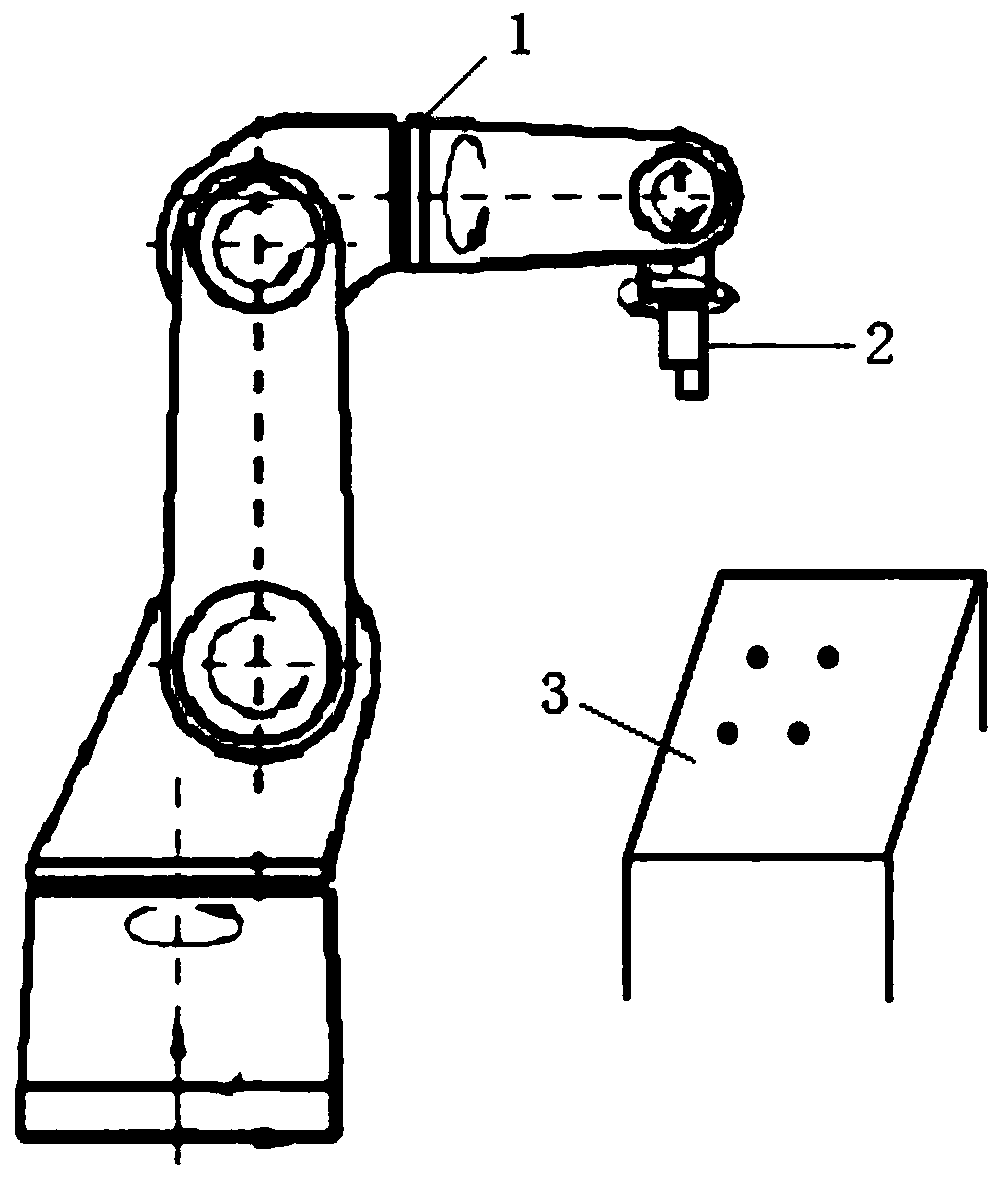

[0041] S1: Build a robot vision system. Taking an end-mounted camera as an example, the robot vision system is shown in Figure 2: a camera 2 is installed at the end of an industrial six-axis robot 1 and moves with the end of the robot 1. The camera shoots downward and is kept at a constant height when taking pictures; the shooting height can be determined by the ...

