338 results about "Visually guided" patented technology

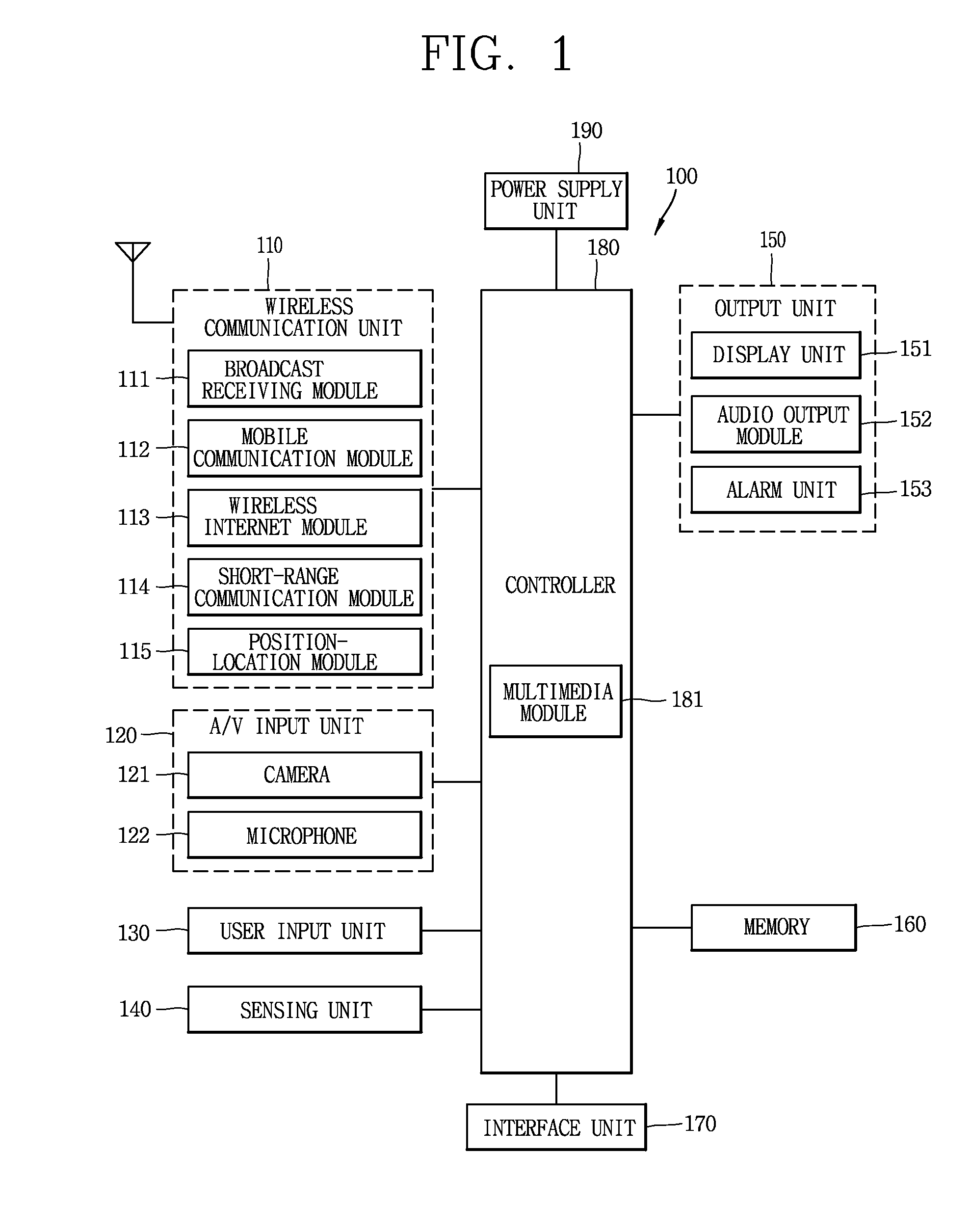

Scalable system and method for an integrated digital media catalog, management and reproduction system

Inactive · US20090177301A1 · Reduce steps · Efficient management · Record information storage · Special data processing applications · Scalable system · Visually guided

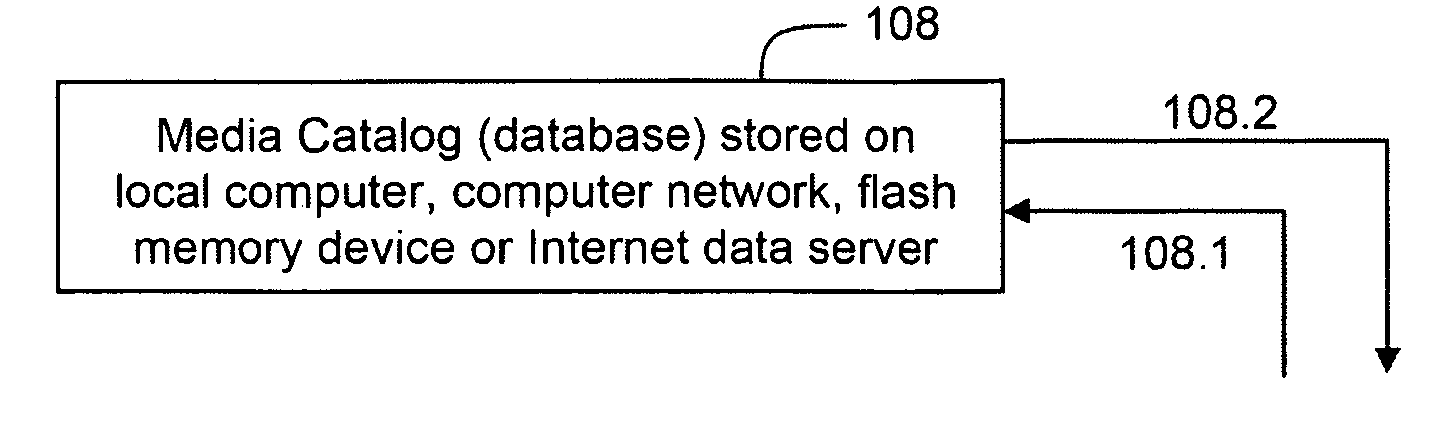

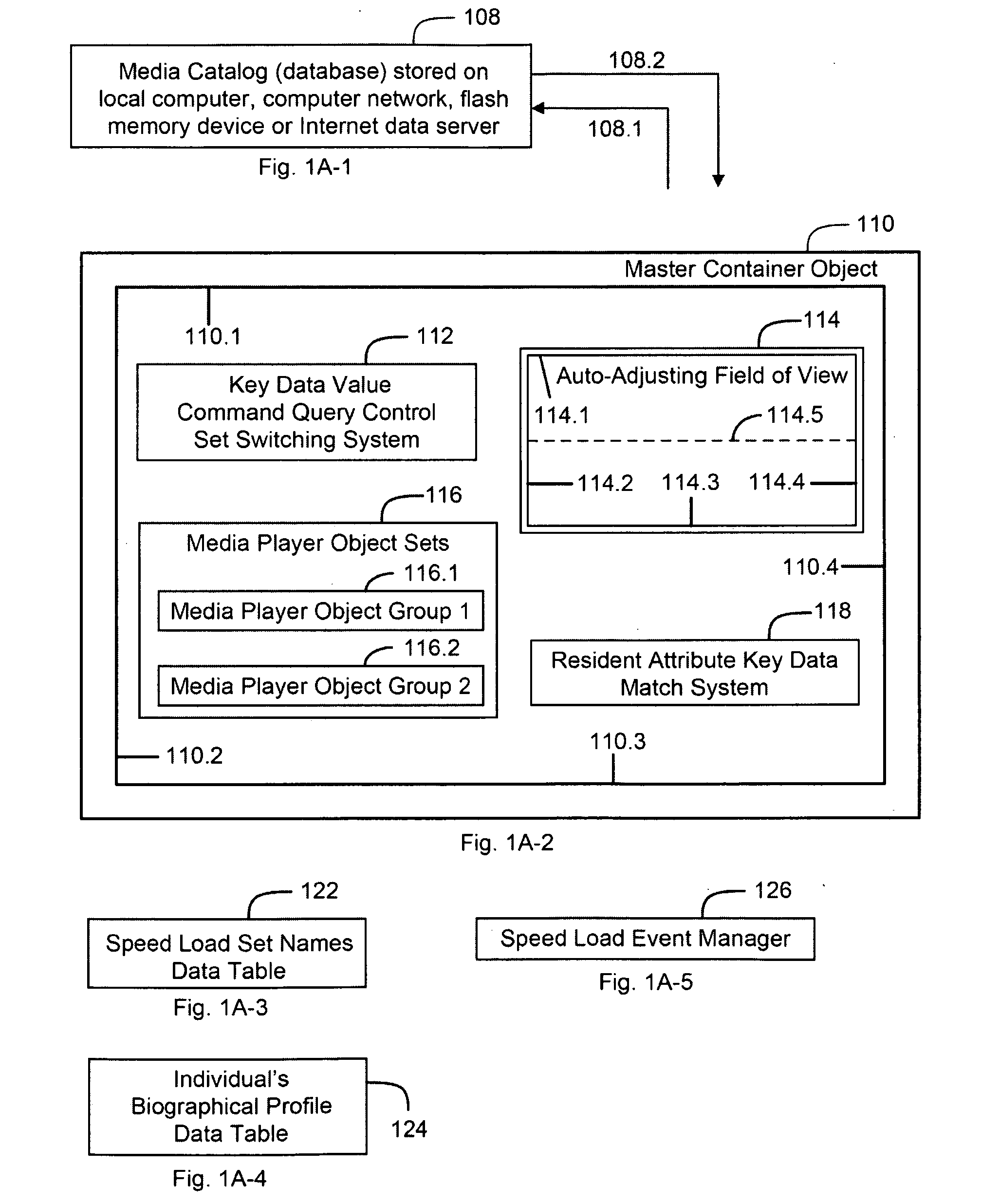

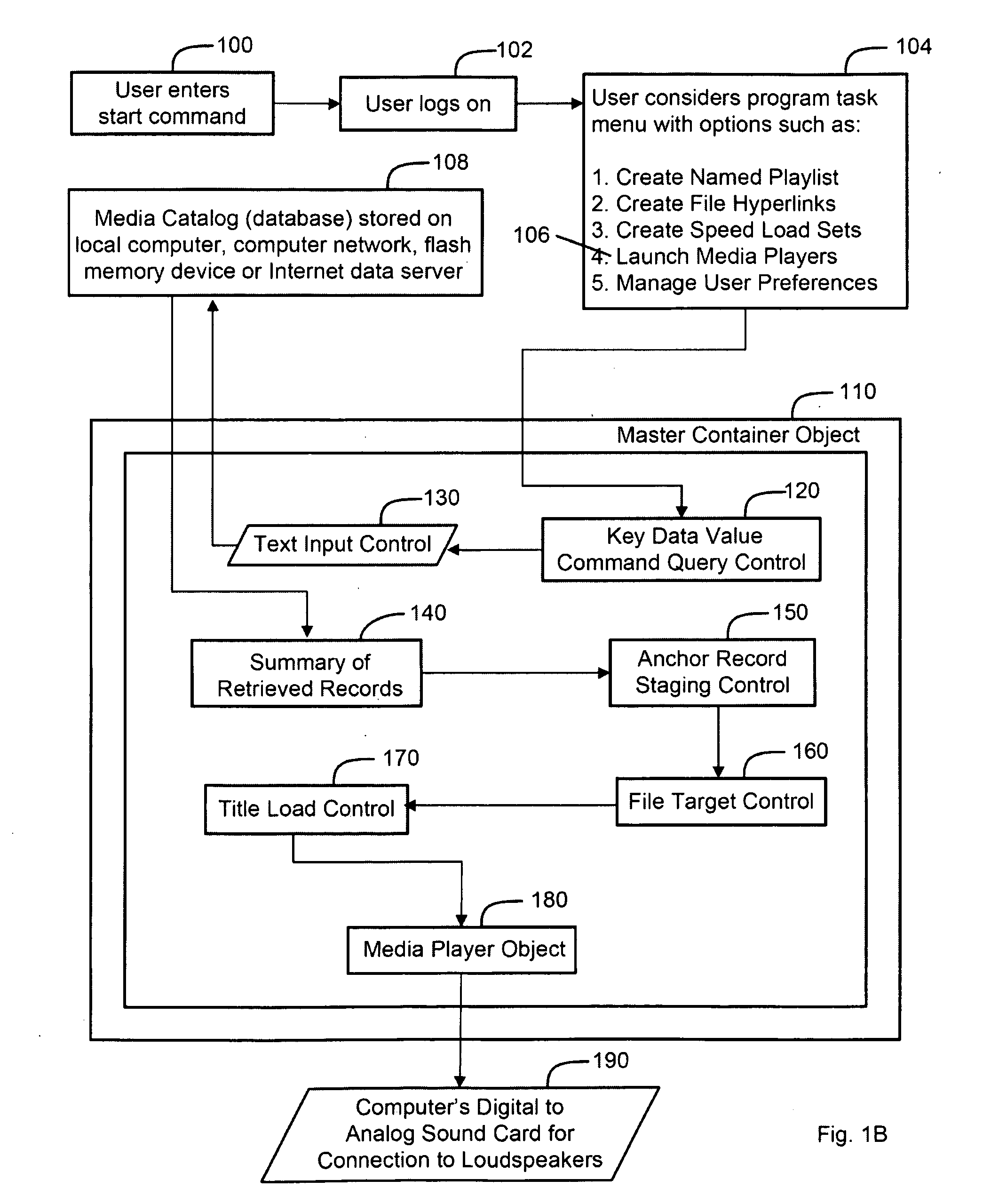

A system and method for creating an integrated digital media management and reproduction device comprised of a database with key data values for a plurality of records, and a module with search controls that include sets of compound parallel attribute queries that can execute instructions for a data category, and concurrently retrieve and display records across a plurality of related categories—thereby revealing the associations between discrete records. The invention also has a plurality of software instantiated media players which, through instructions managed by a “master container” design, function as if they were dynamically aware of user actions and the quantifiable states of each other player object—and respond according to logic rules, visually guiding event workflow. The system has a memory module for storing query information, a processor configured to retrieve data, a display unit for retrieved data and a digital-to-analog converter for providing capability for connection to loudspeakers.

Owner:CODENTITY

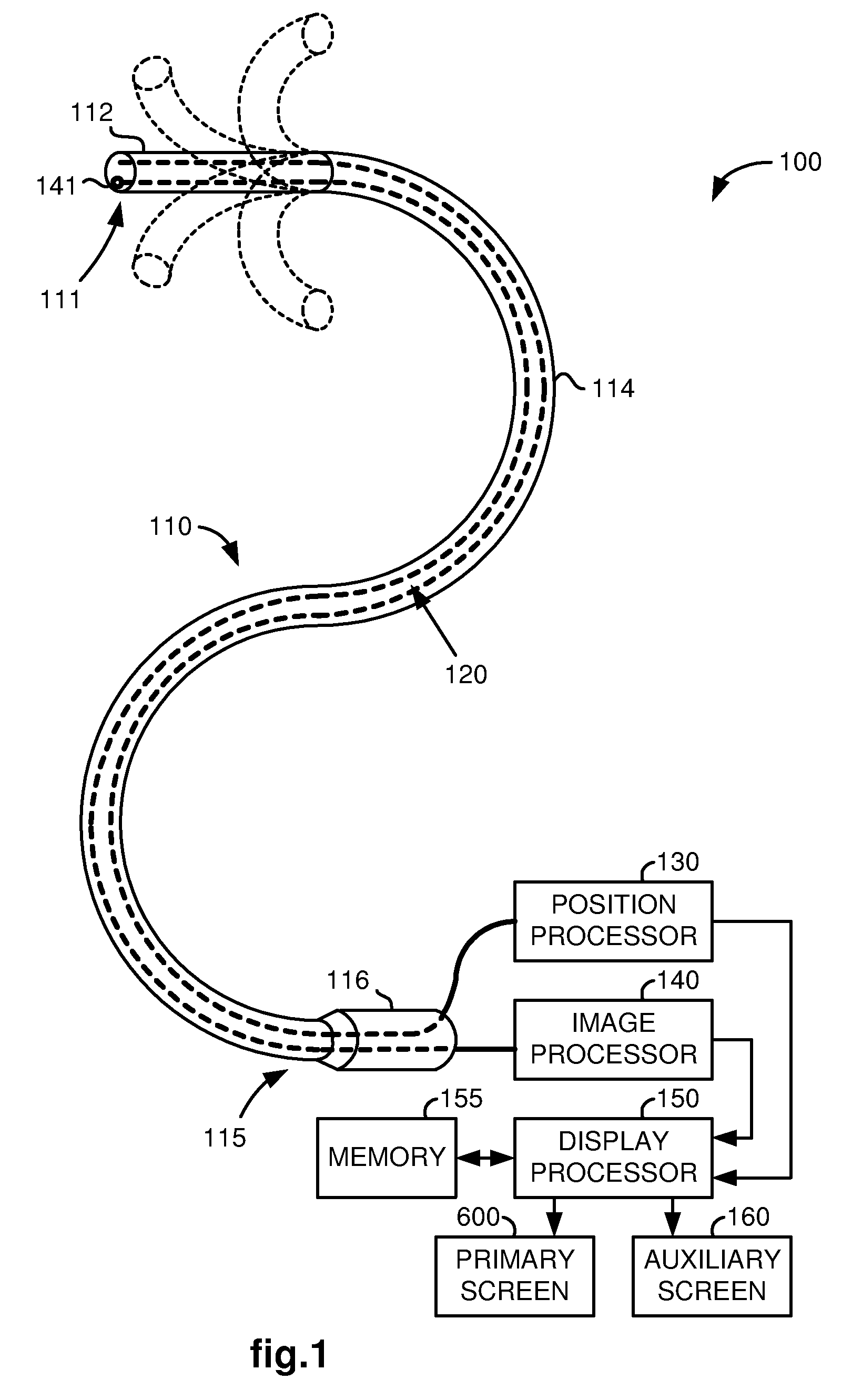

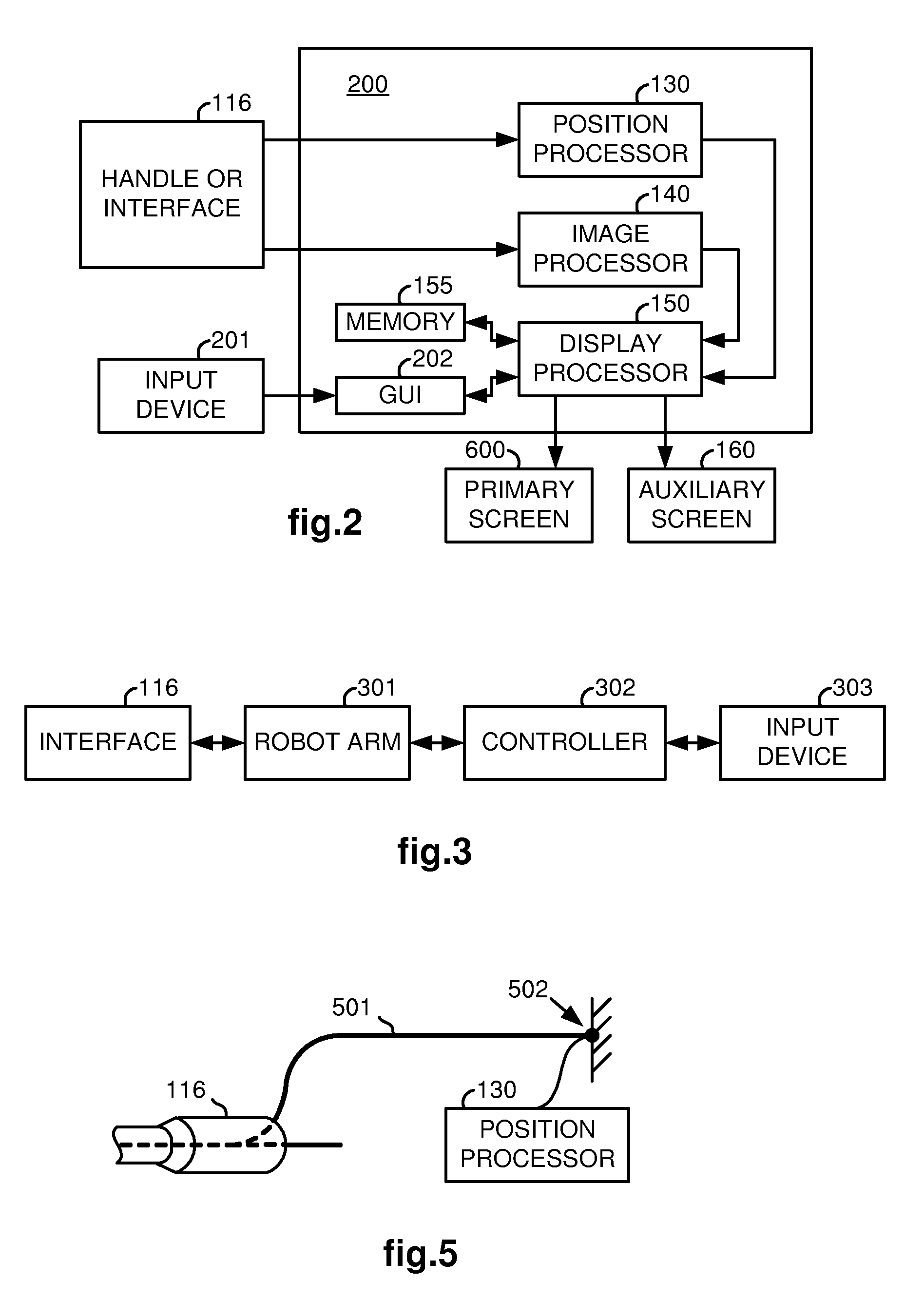

Method and system for providing visual guidance to an operator for steering a tip of an endoscopic device toward one or more landmarks in a patient

Landmark directional guidance is provided to an operator of an endoscopic device by displaying graphical representations of vectors adjacent a current image captured by an image capturing device disposed at a tip of the endoscopic device and being displayed at the time on a display screen, wherein the graphical representations of the vectors point in directions that the endoscope tip is to be steered in order to move towards associated landmarks such as anatomic structures in a patient.

Owner:INTUITIVE SURGICAL OPERATIONS INC

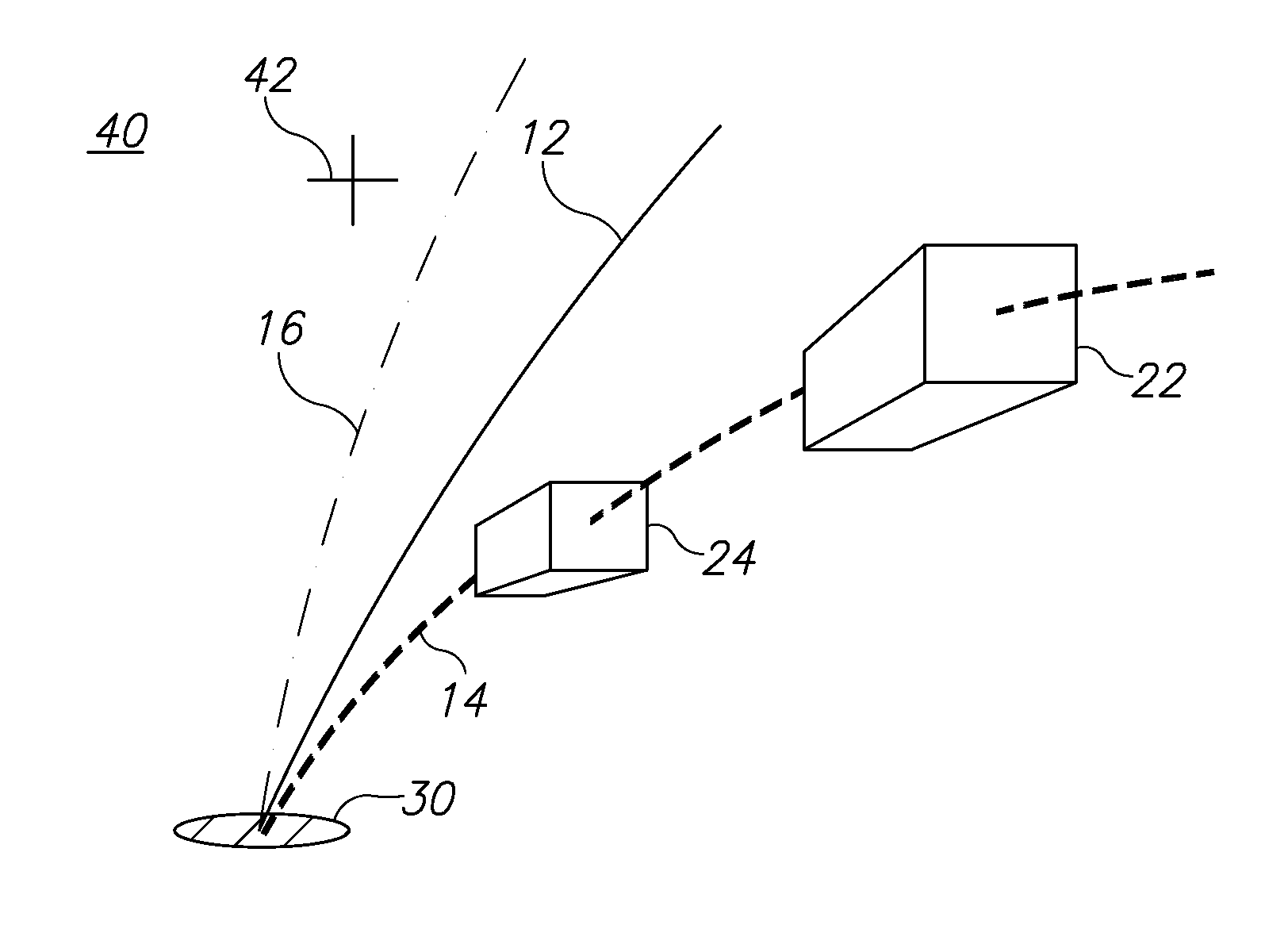

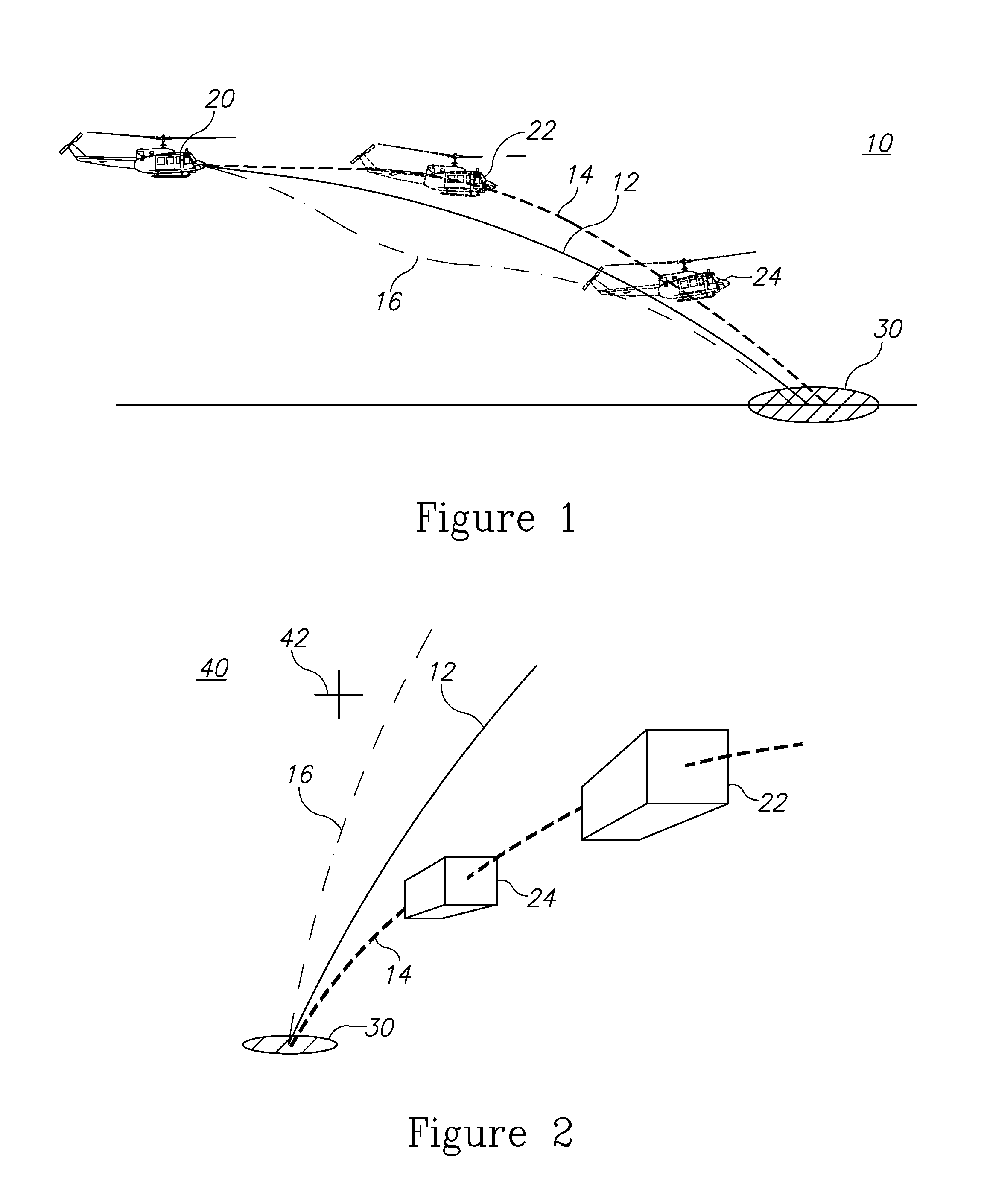

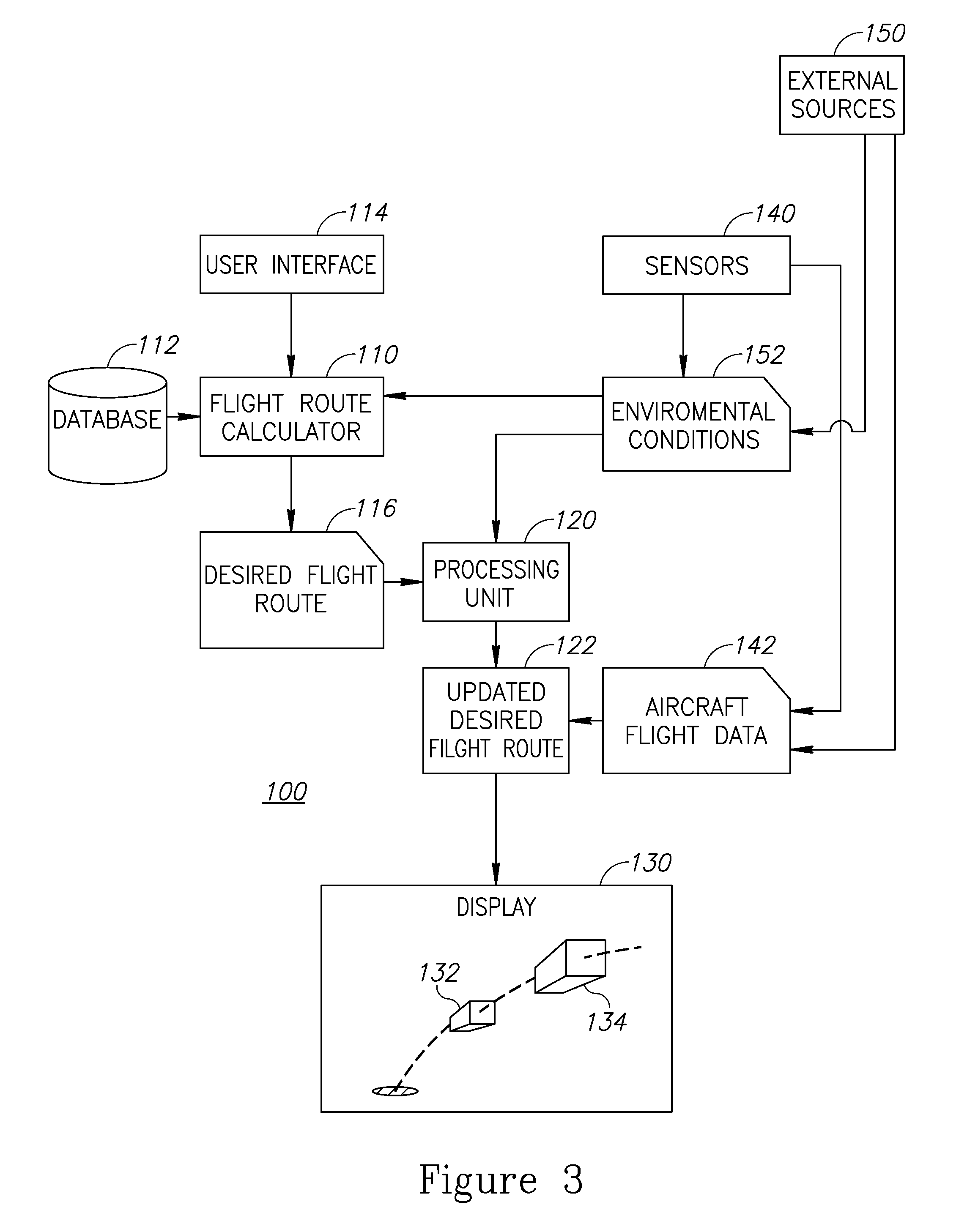

System for guiding an aircraft to a reference point in low visibility conditions

Active · US20130138275A1 · Analogue computers for vehicles · Analogue computers for traffic · Visibility · Display device

A method of visually guiding a pilot flying an aircraft using one or more conformal symbols whose position is dynamically updated throughout the guidance is provided herein. The method includes the following stages: determining a desired flight route of an aircraft, based on a user-selected maneuver; presenting to a pilot, on a display, at least one 3D visual symbol that is: (i) earth-space stabilized, and (ii) positioned along a future location along the desired route; computing an updated desired route based on repeatedly updated aircraft flight data that include at least one of: location, speed, and spatial angle, of the aircraft; and repeating the presenting of the at least one 3D visual symbol with its updated location along the updated desired route.

Owner:ELBIT SYST LTD
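
The abstract above places a 3D symbol at a future location along the desired route and re-places it as the route is updated. As a rough sketch only, the following assumes the route is a polyline of waypoints and that "future location" means a fixed look-ahead distance; `point_along_route`, `symbol_position`, and the `lookahead` parameter are hypothetical names, not taken from the patent.

```python
import math

def point_along_route(route, distance):
    """Return the point `distance` units along a polyline of waypoints."""
    if distance <= 0:
        return tuple(route[0])
    remaining = distance
    for start, end in zip(route, route[1:]):
        seg_len = math.dist(start, end)
        if seg_len > 0 and remaining <= seg_len:
            t = remaining / seg_len  # fraction of this segment to travel
            return tuple(s + t * (e - s) for s, e in zip(start, end))
        remaining -= seg_len
    return tuple(route[-1])  # past the end: clamp to the final waypoint

def symbol_position(route, travelled, lookahead):
    """Place the guidance symbol `lookahead` units ahead of the aircraft (assumed rule)."""
    return point_along_route(route, travelled + lookahead)
```

Calling `symbol_position` again after each route or flight-data update mirrors the "repeating the presenting" stage; how the real system chooses the future location is not specified in the abstract.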

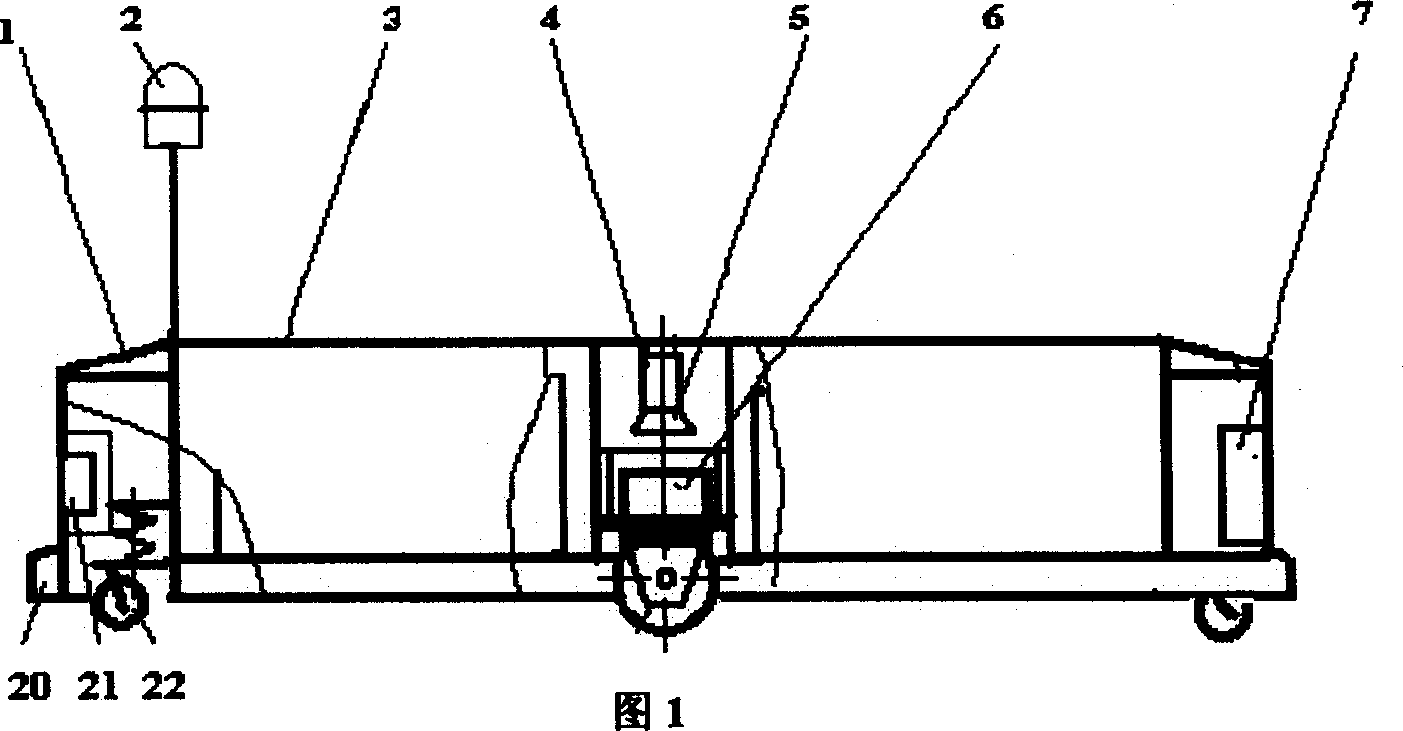

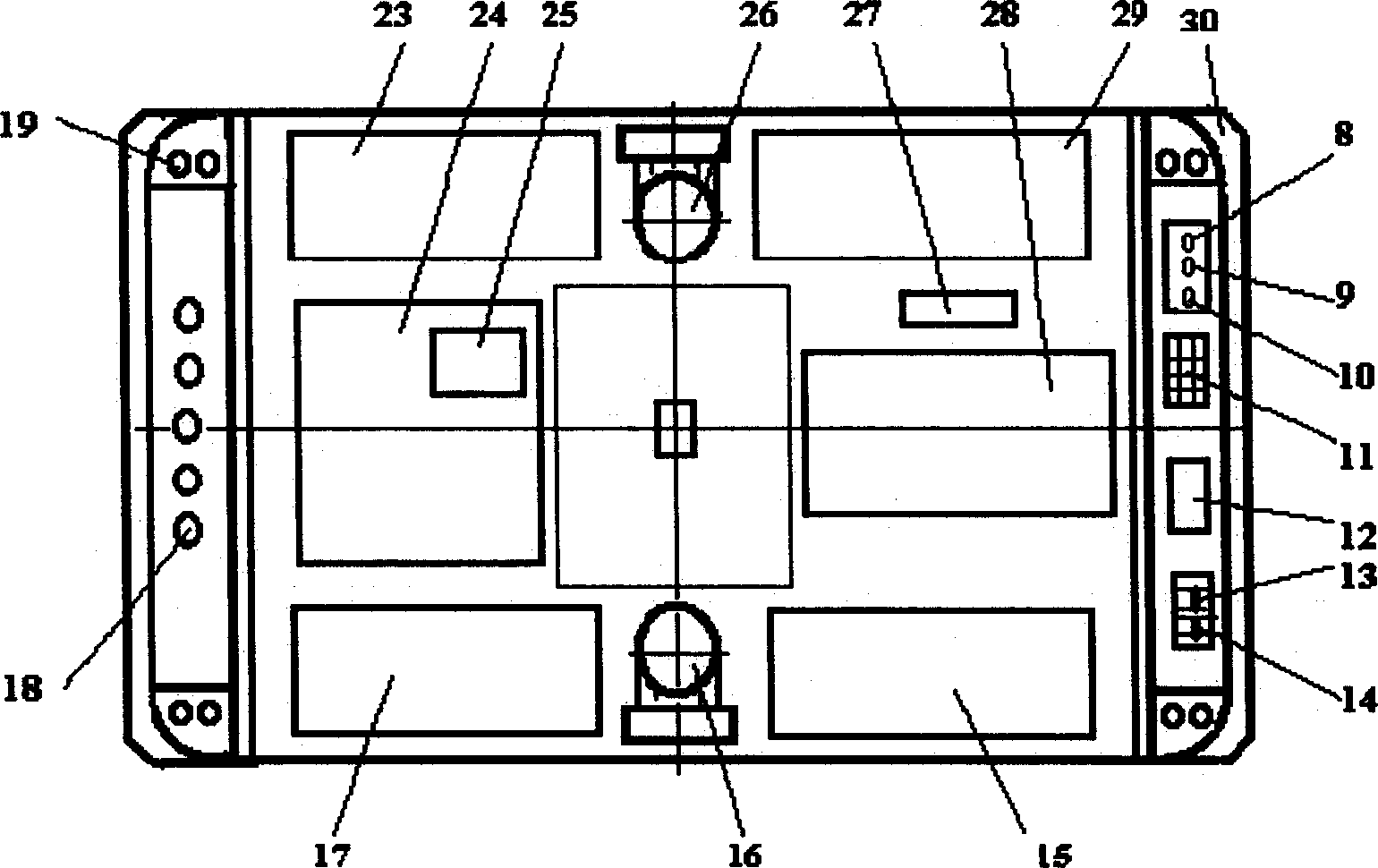

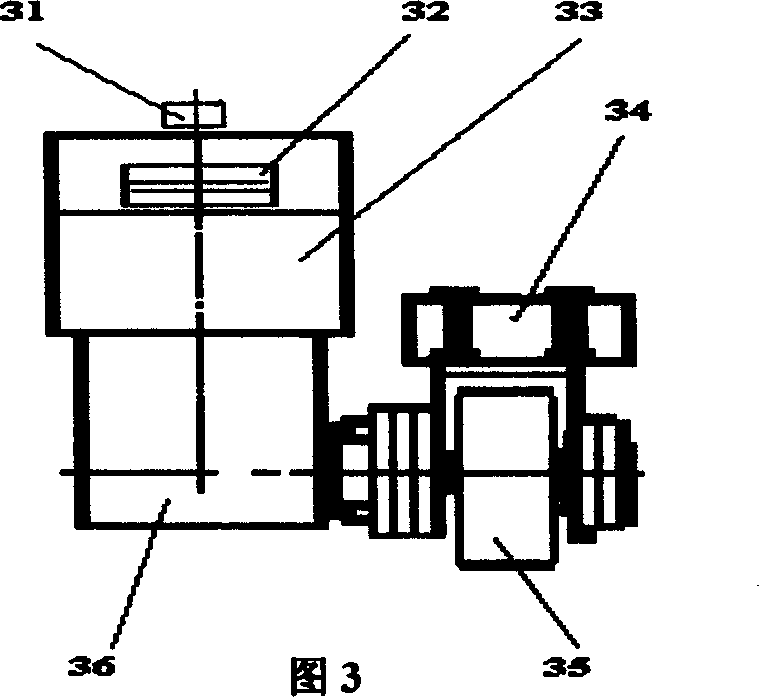

Vision guiding method of automatic guiding vehicle and automatic guiding electric vehicle

Inactive · CN1438138A · Easy to set up · Increase flexibility · Speed controller · Electric energy management · Control signal · Electric vehicle

The present invention relates to an automatic guide method of intelligent industrial transportation vehicle and electric vehicle adopting said automatic guide method. The visual guide method of automatic guide vehicle controlled by computer is characterized by that the camera mounted on the automatic guide vehicle can be used for shooting the running path mark line on the pavement, station address code identifier and running state control identifier, and the computer connected with the camera can utilize image intellectualized identification to obtain the position deviation and direction deviation parameters of the vehicle body and running path mark line and station address and running state control information to give out correspondent control signal to wheel drive mechanism controller to implement automatic guide control of the automatic guide vehicle.

Owner:JILIN UNIV +1
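
The abstract above turns a position deviation and a direction deviation from the path mark line into a control signal for the wheel drive. A minimal sketch of that idea, assuming a differential-drive vehicle and a plain proportional law (the patent does not state the control law); `LineObservation`, `wheel_speeds`, and all gains are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class LineObservation:
    lateral_offset_m: float   # positive: vehicle sits to the right of the mark line
    heading_error_rad: float  # positive: vehicle is rotated clockwise relative to the line

def wheel_speeds(obs, base_speed=0.5, k_offset=1.0, k_heading=0.8, max_speed=1.0):
    """Return (left, right) wheel speeds that steer back toward the line."""
    # Positive correction means "turn left": slow the left wheel, speed up the right.
    correction = k_offset * obs.lateral_offset_m + k_heading * obs.heading_error_rad
    left = base_speed - correction
    right = base_speed + correction
    # Clamp to the drive's speed limits.
    left = max(-max_speed, min(max_speed, left))
    right = max(-max_speed, min(max_speed, right))
    return left, right
```

With zero deviation both wheels run at `base_speed`; a vehicle that has drifted right gets a faster right wheel, which turns it back to the left.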

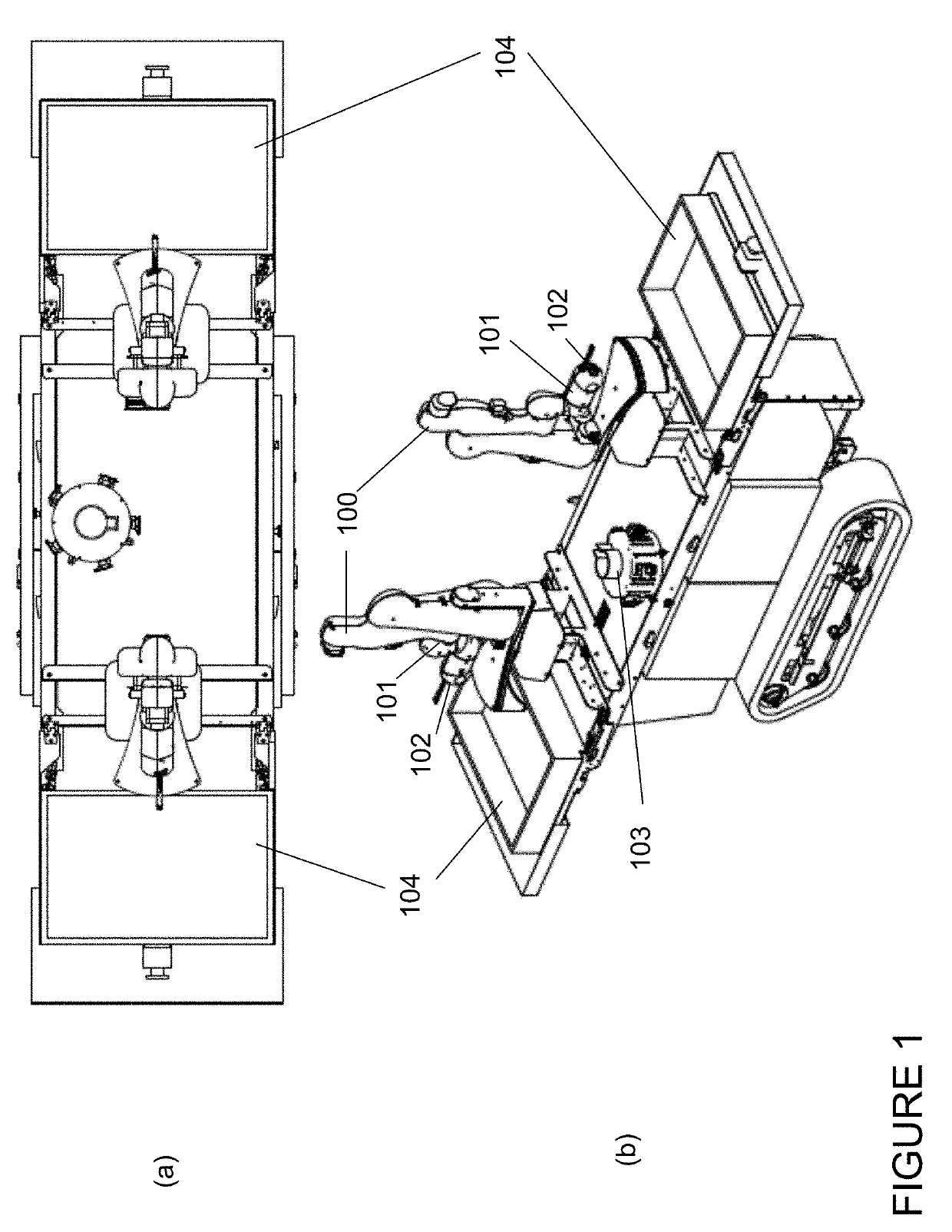

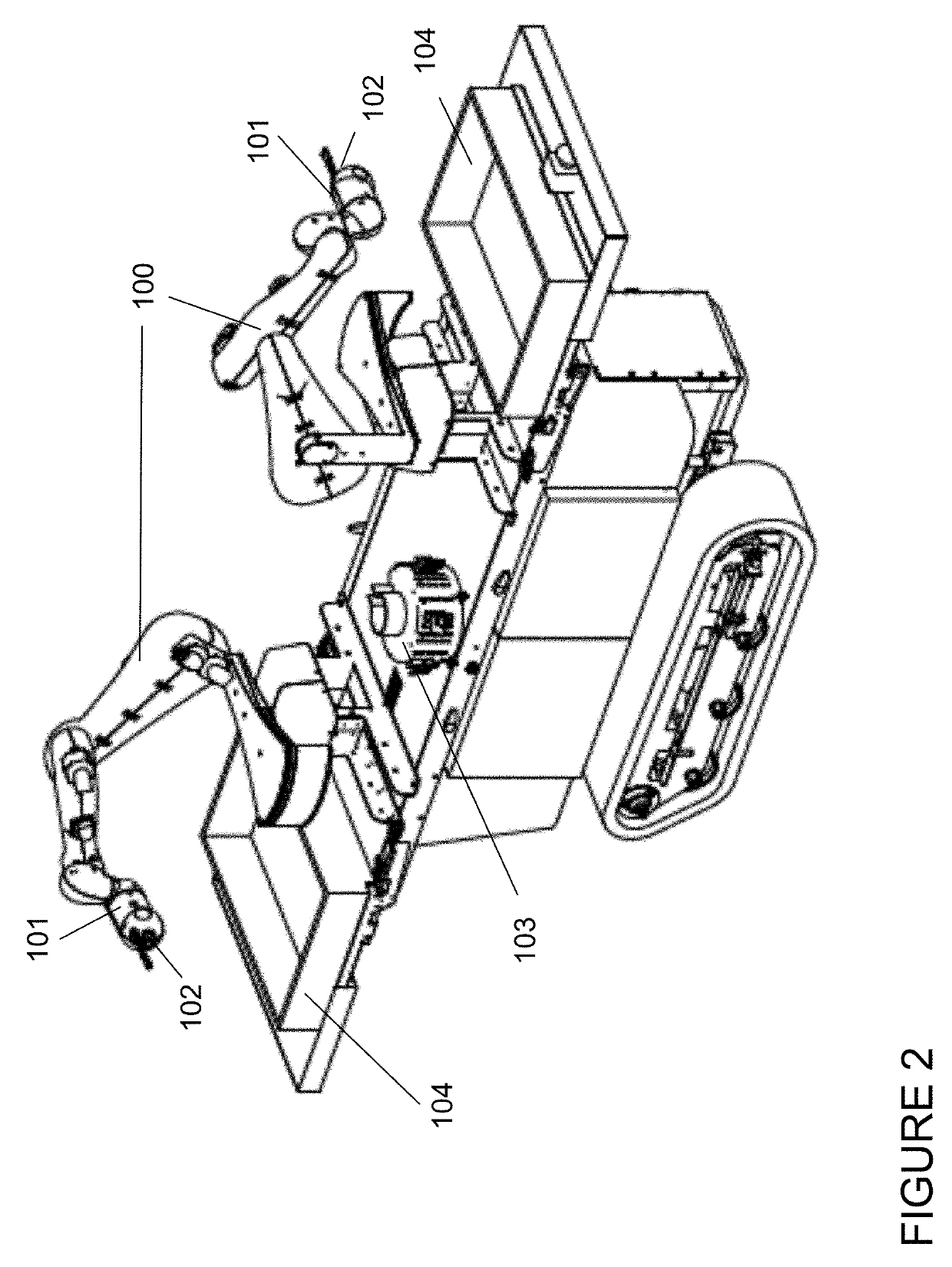

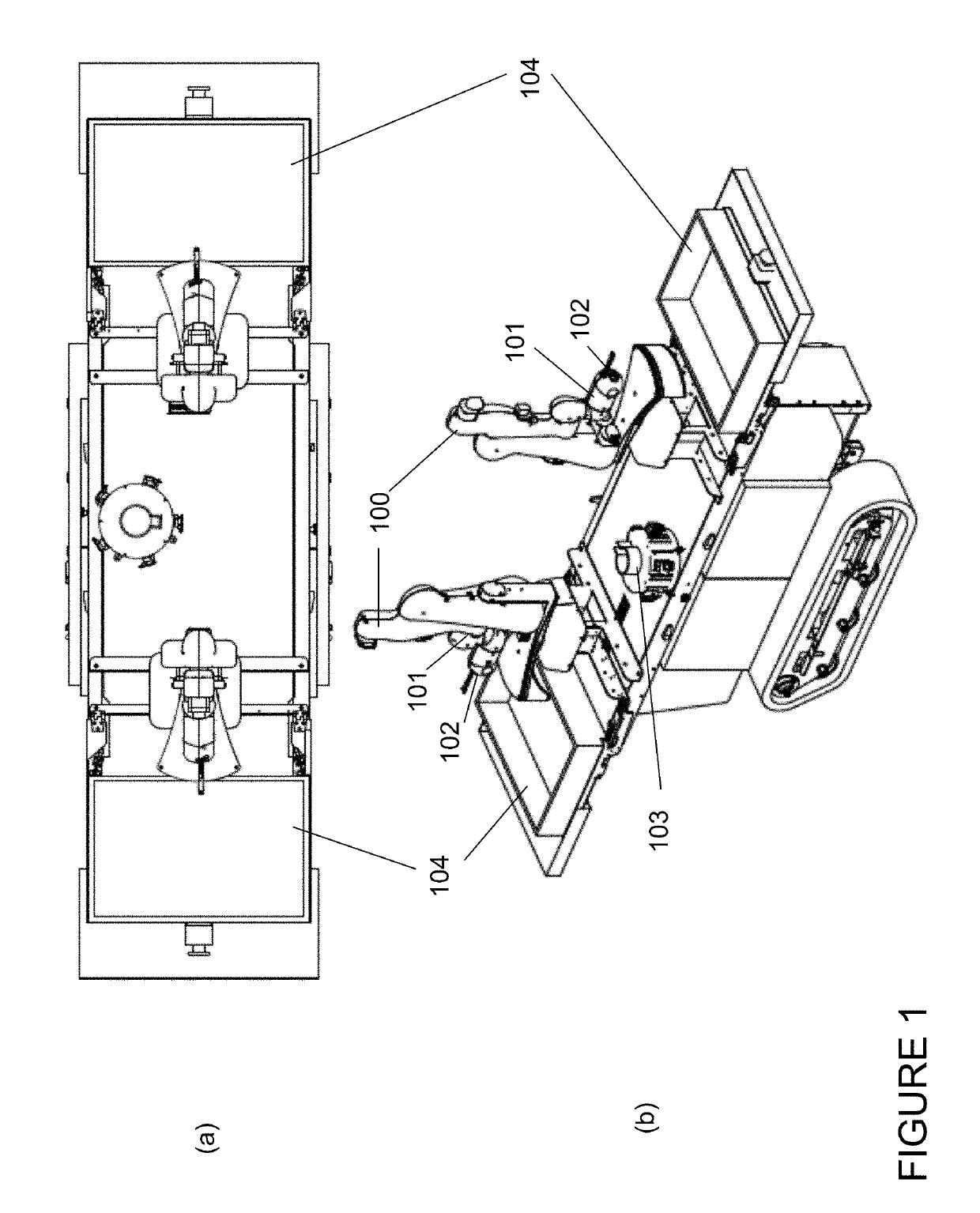

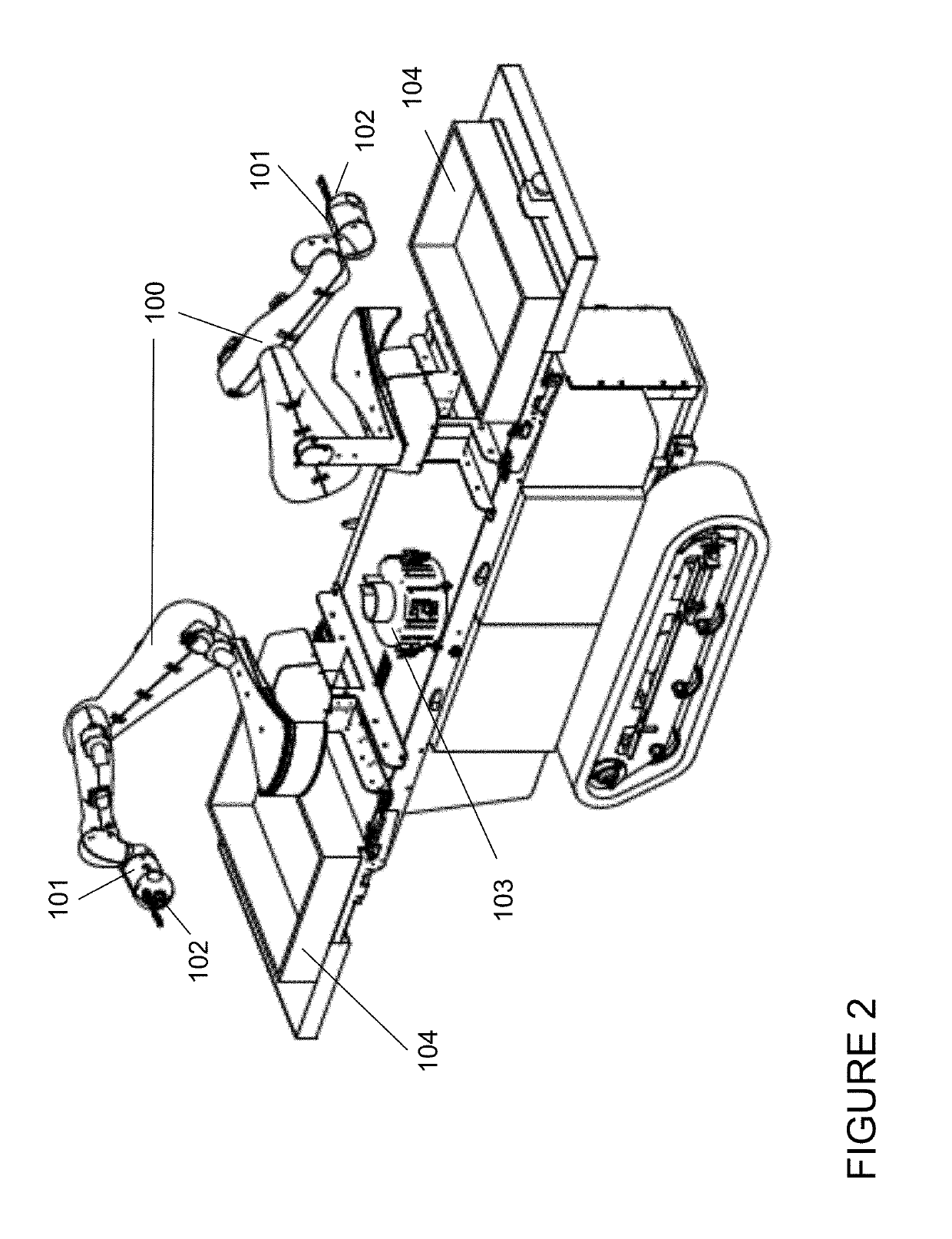

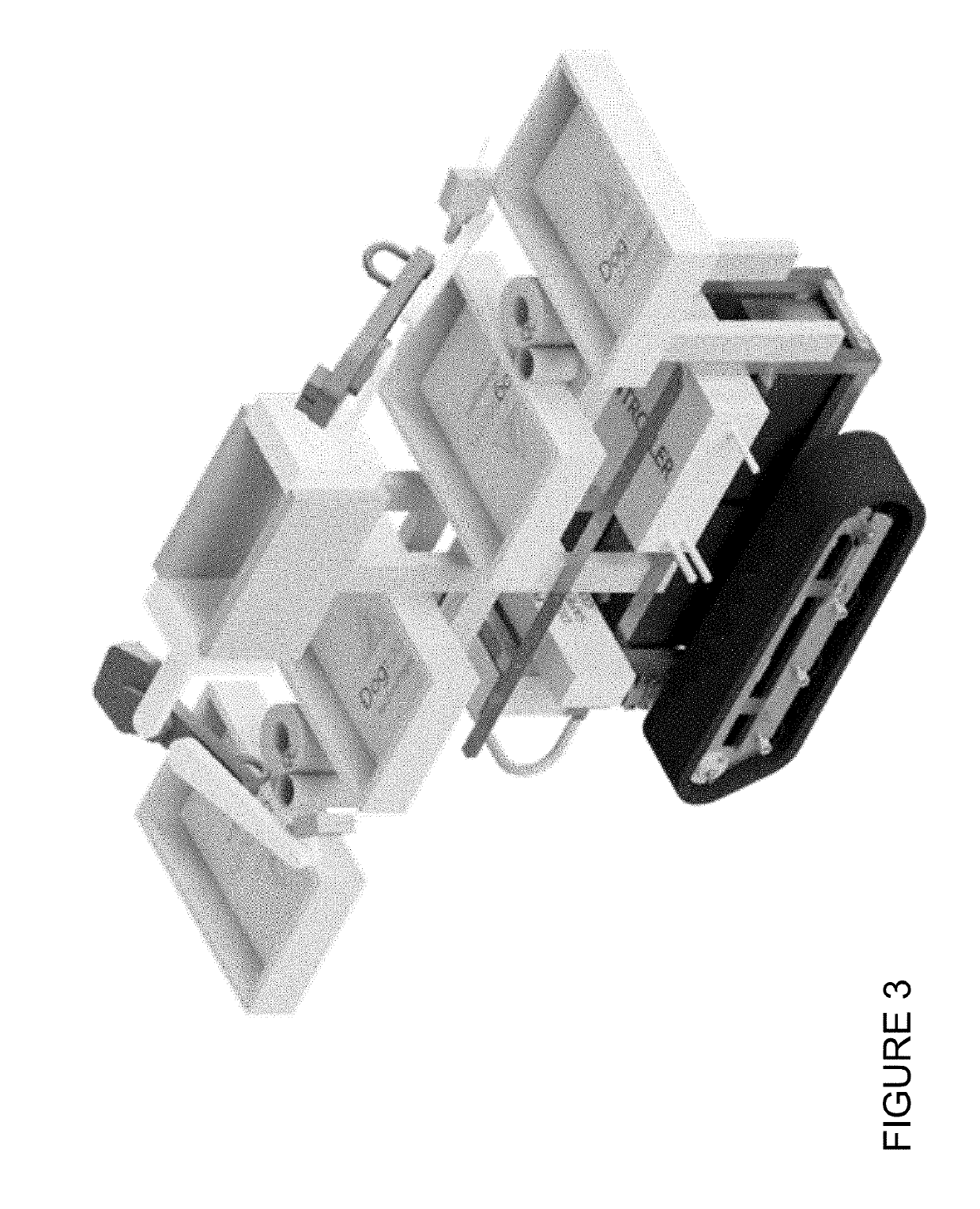

Robotic fruit picking system

A robotic fruit picking system includes an autonomous robot that includes a positioning subsystem that enables autonomous positioning of the robot using a computer vision guidance system. The robot also includes at least one picking arm and at least one picking head, or other type of end effector, mounted on each picking arm to either cut a stem or branch for a specific fruit or bunch of fruits or pluck that fruit or bunch. A computer vision subsystem analyses images of the fruit to be picked or stored and a control subsystem is programmed with or learns picking strategies using machine learning techniques. A quality control (QC) subsystem monitors the quality of fruit and grades that fruit according to size and / or quality. The robot has a storage subsystem for storing fruit in containers for storage or transportation, or in punnets for retail.

Owner:DOGTOOTH TECH LTD

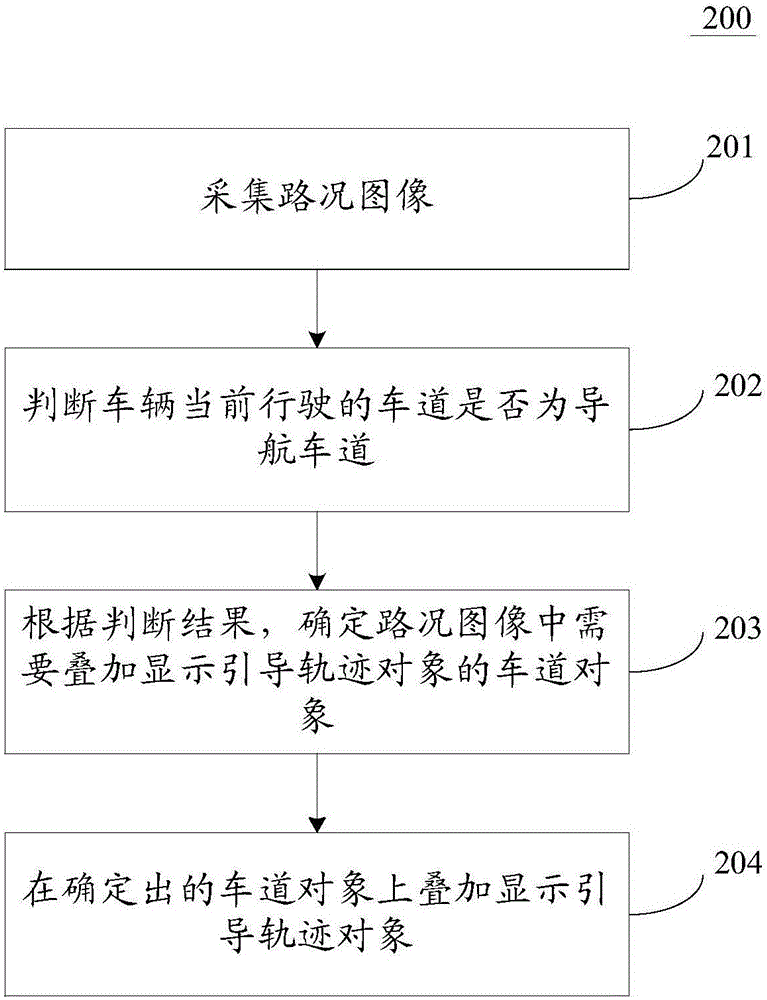

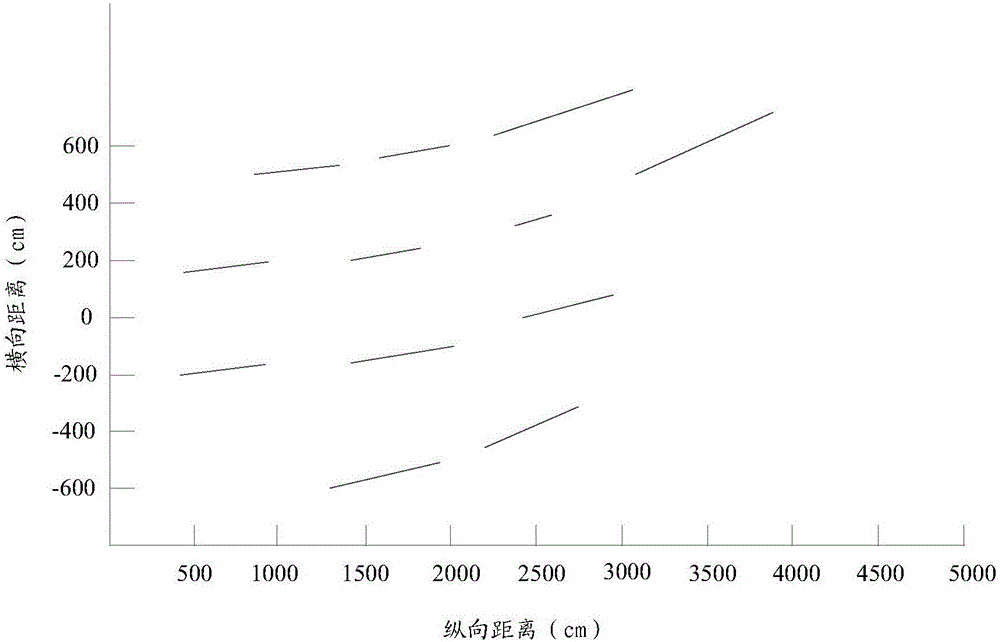

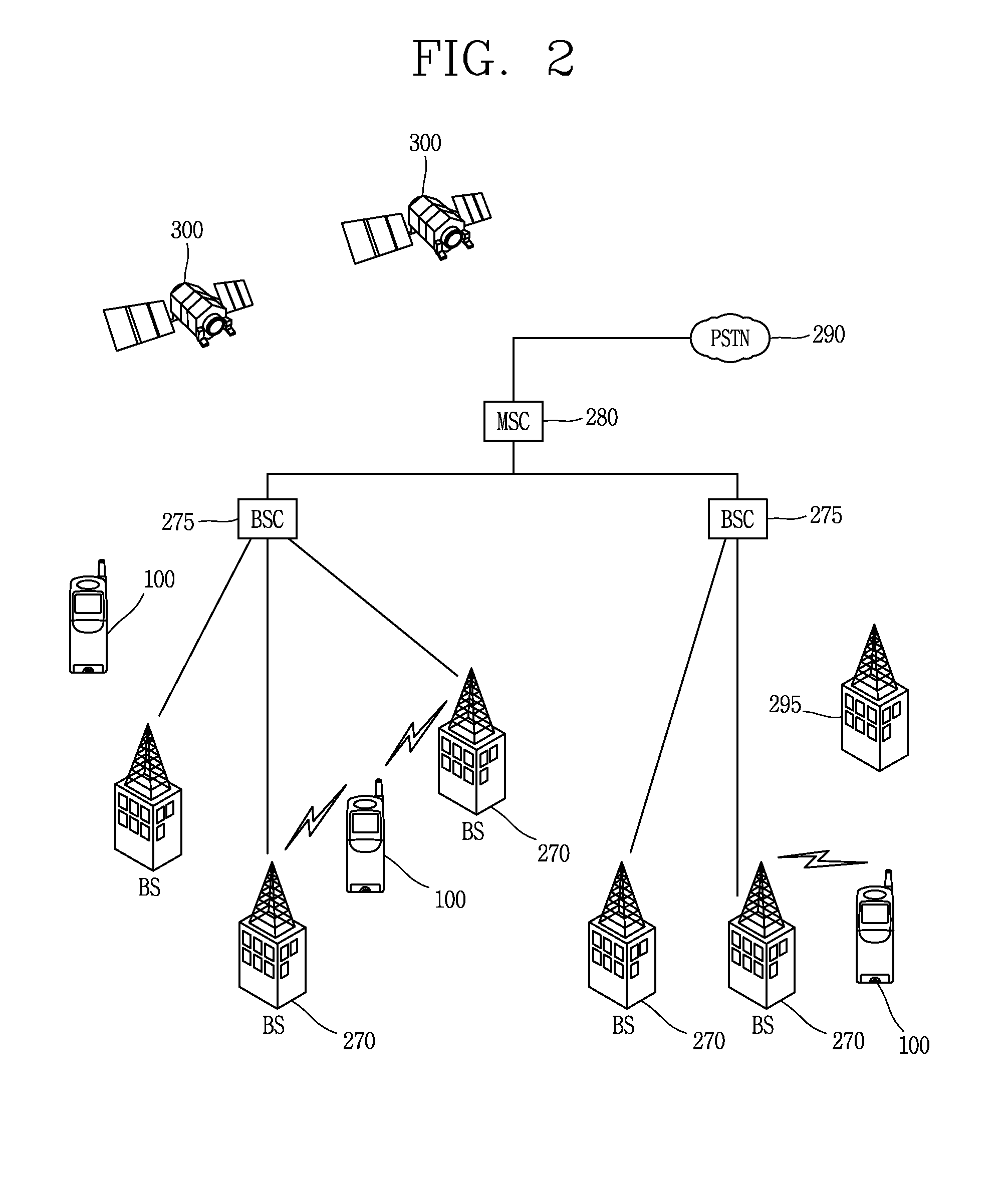

Vehicle navigation method and vehicle navigation apparatus

Active · CN106092121A · Precise Navigation · Instruments for road network navigation · Character and pattern recognition · Vehicle driving · Visually guided

The invention discloses a vehicle navigation method and a vehicle navigation apparatus. One concrete embodiment mode of the method comprises the following steps: a camera acquires traffic images; whether the vehicle's current driving lane is a navigation lane or not is judged, and the navigation lane is the vehicle's current driving lane defined in navigation information; lane objects with guiding locus objects to be displayed in a superposing manner are determined according to the judgment result, wherein the guiding locus objects are used for indicating a vehicle to drive in the current driving lane or to turn into the navigation lane; and the guiding locus objects are displayed on the determined lane object in a superposing manner. The method and the apparatus realize superposing display of the guiding locus objects in the lane of the vehicle driving road according to the current position and the navigation route of the vehicle, so a driver is visually guided to drive the vehicle in the right lane, and the vehicle is accurately navigated.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

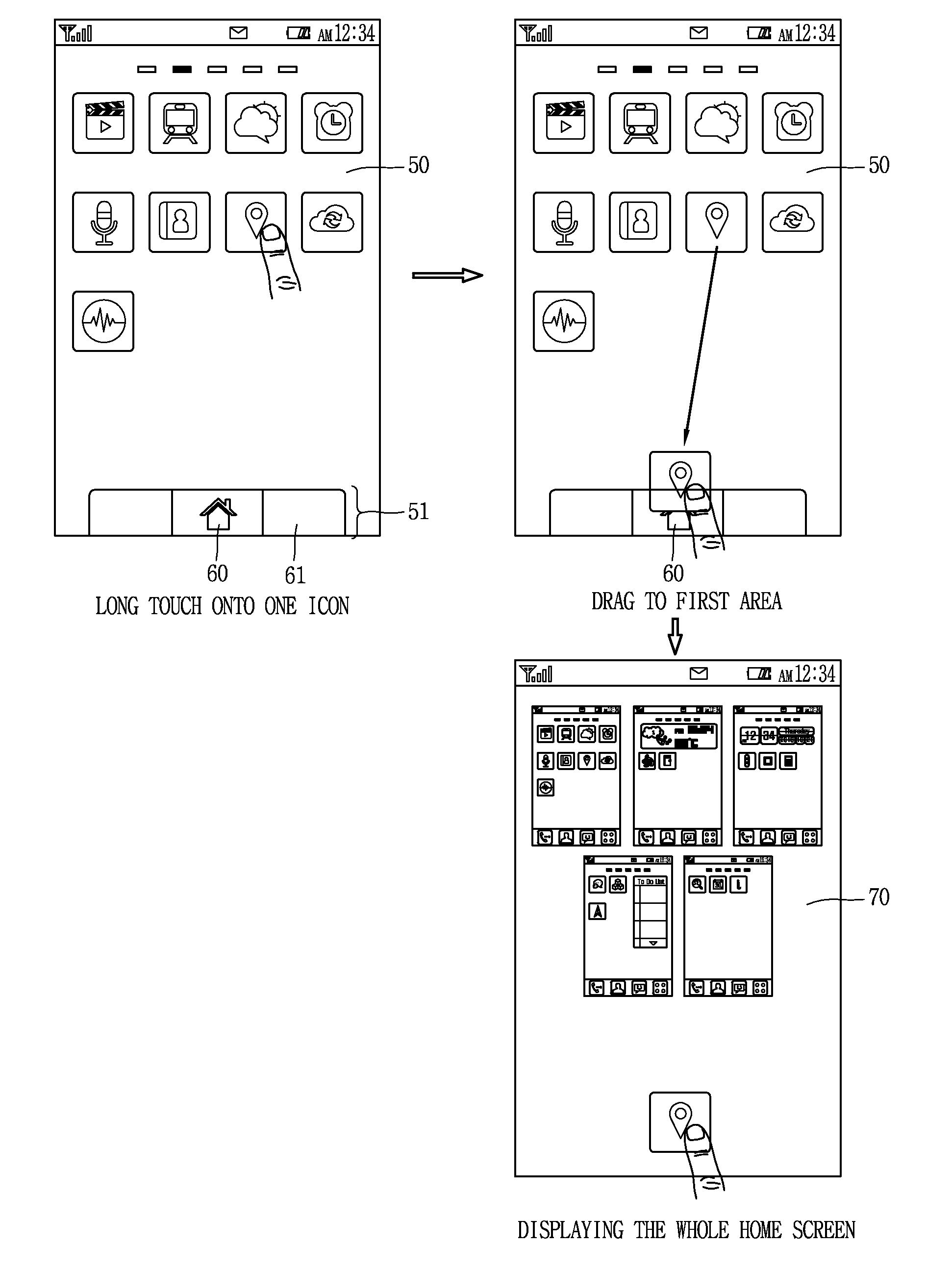

Mobile terminal and application icon moving method thereof

Active · US20140068477A1 · Simple to execute · Substation equipment · Transmission · Home screen · Human–computer interaction

A mobile terminal and an application icon moving method thereof are provided. When a predetermined application icon is selected from a menu screen including a plurality of application icons and moved to a control region, a default home screen or the whole home screen stored in a memory may be selectively displayed according to a moved position of the corresponding icon, and also an icon-insertable position may be visually guided on the displayed home screen. This may allow a user to execute the movement of the application icon in an easy, convenient manner.

Owner:LG ELECTRONICS INC

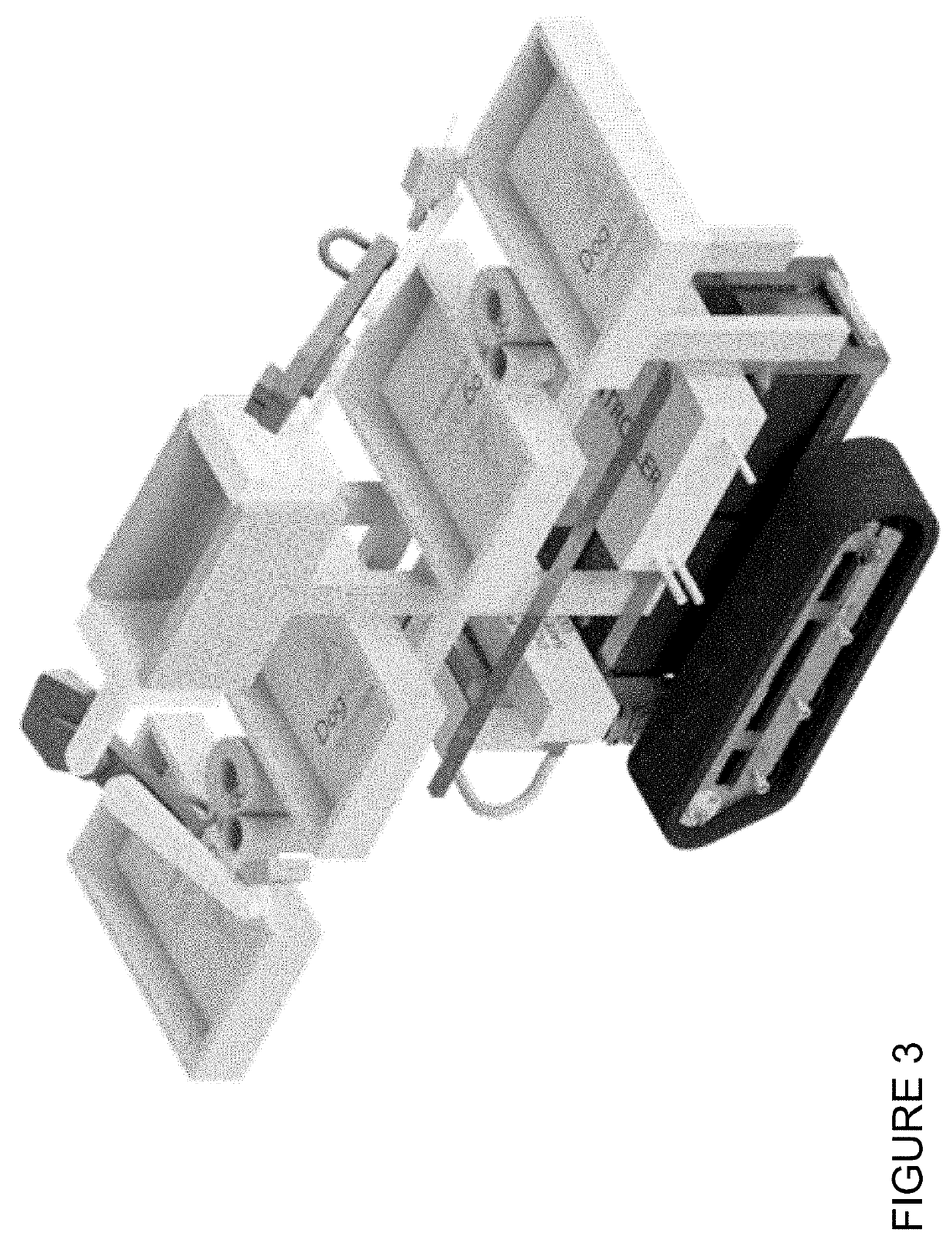

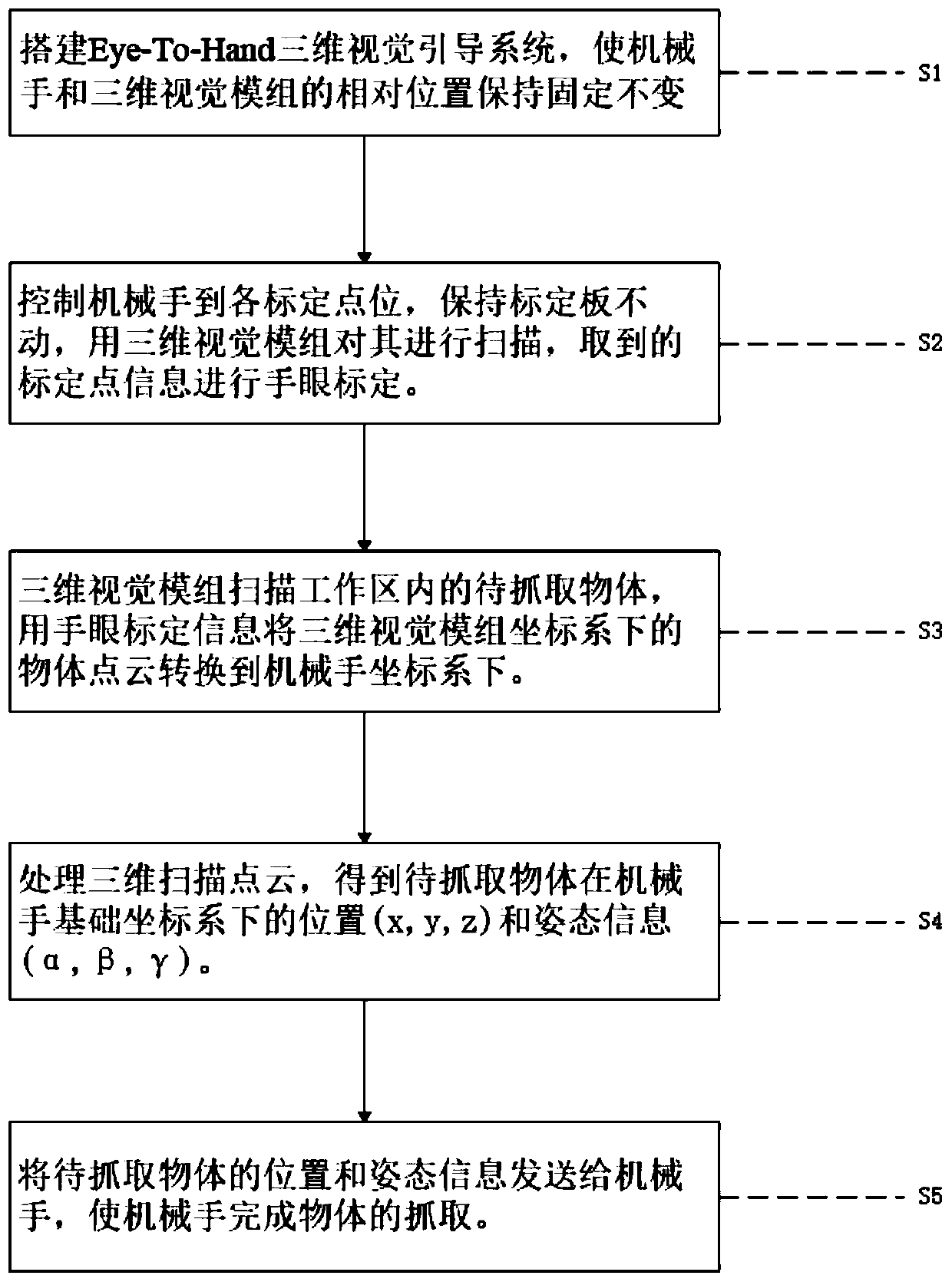

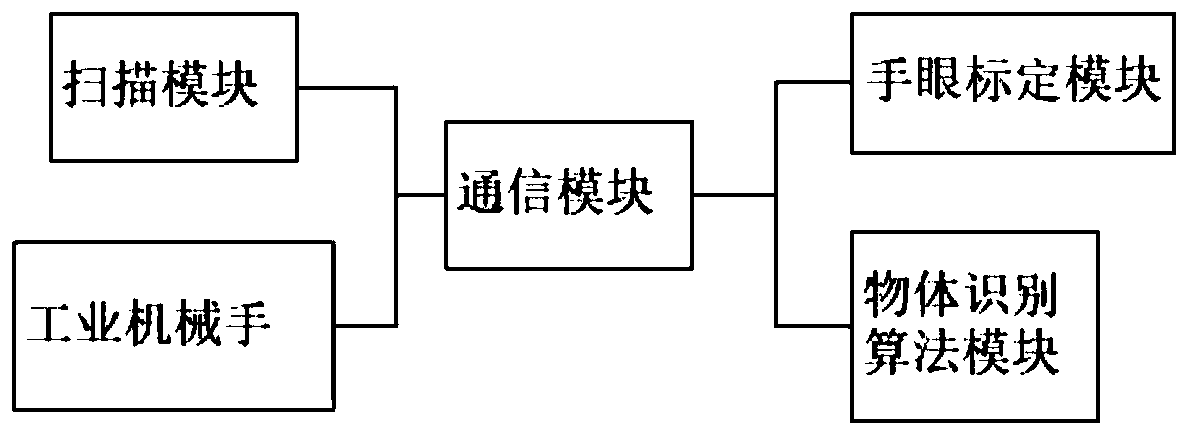

Method and system for guiding mechanical hand grabbing through three-dimensional visual guidance

Inactive · CN109927036A · Not easy to break away · Guaranteed working accuracy · Programme-controlled manipulator · Work task · Engineering

The invention provides a method and system for guiding mechanical hand grabbing through three-dimensional visual guidance. The whole system is fixed in space, the visual field does not change due to the movement of a mechanical arm, and a target object is not likely to be away from the observation visual field of a camera; by means of acquisition in an off calibration manner, a system model structure can be repeatedly calibrated, and therefore the work precision in long-time work tasks is guaranteed; and a robot can sense environments, by means of the 3D machine vision scanning and point cloud feature recognition and analysis technology, the robot is guided to complete some recognition and grabbing tasks of disorderly objects, and people are liberated from high-repeatability dangerous labor.

Owner:QINGDAO XIAOYOU INTELLIGENT TECH CO LTD

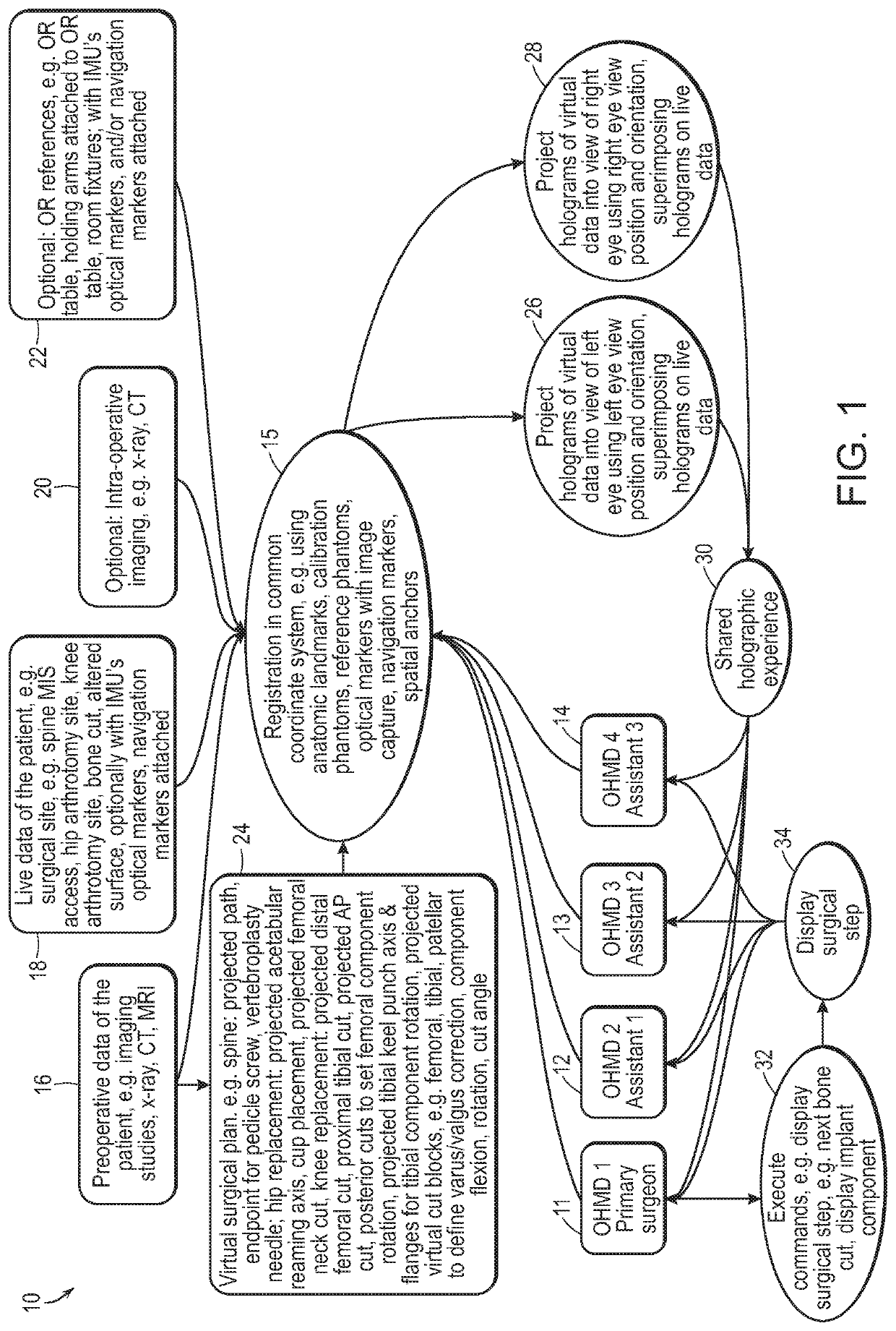

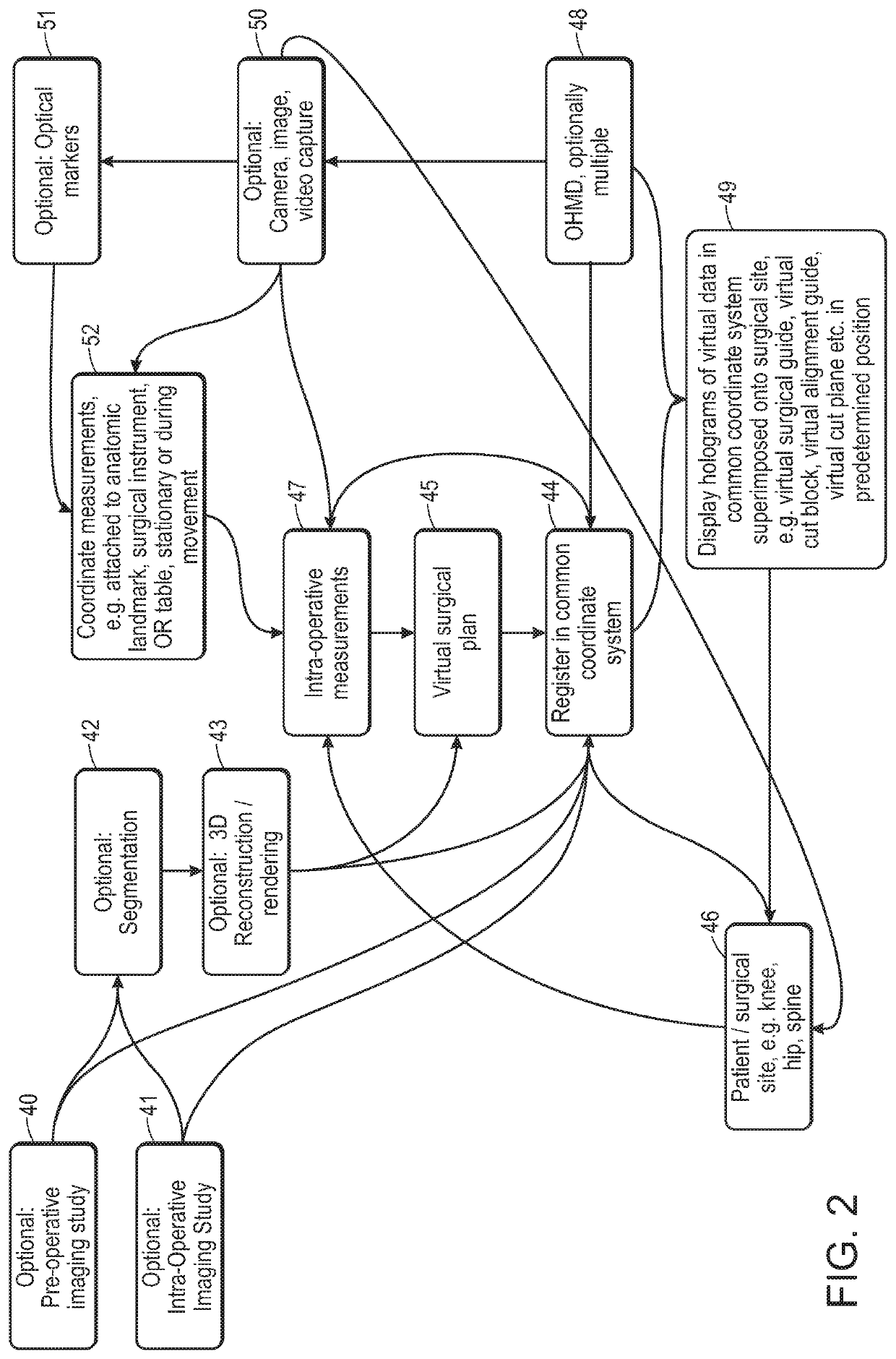

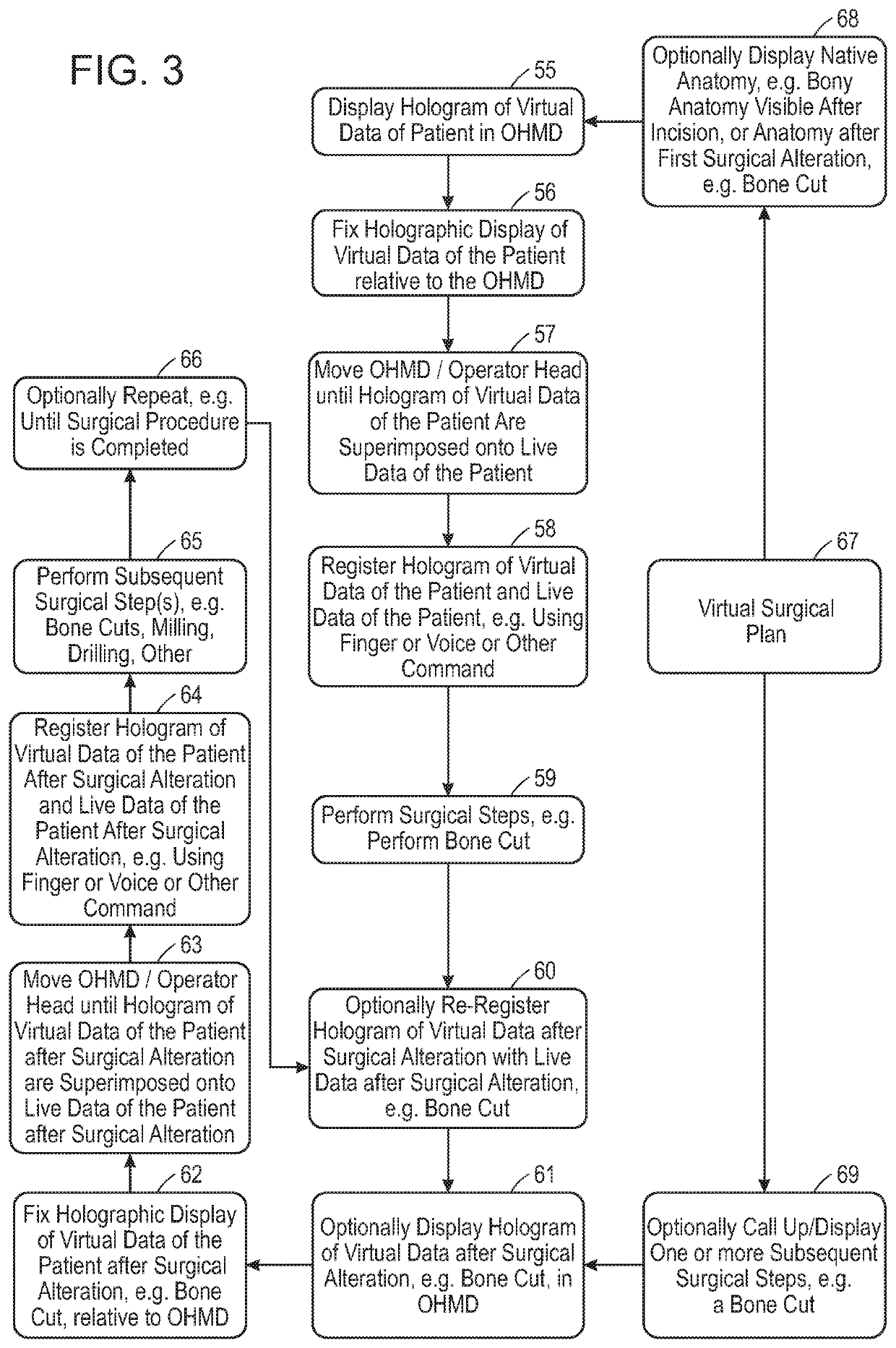

Augmented Reality System Configured for Coordinate Correction or Re-Registration Responsive to Spinal Movement for Spinal Procedures, Including Intraoperative Imaging, CT Scan or Robotics

Devices and methods for performing a surgical step or surgical procedure with visual guidance using an optical head mounted display are disclosed.

Owner:LANG PHILIPP K

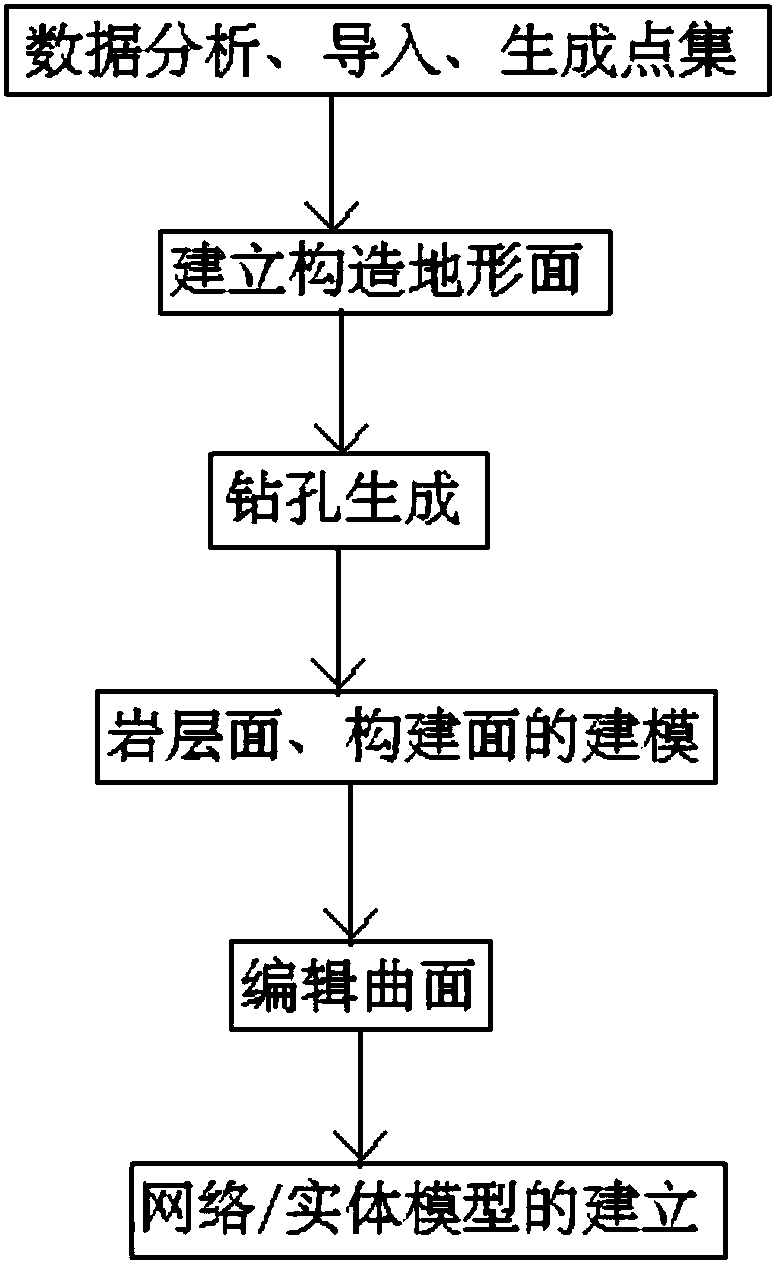

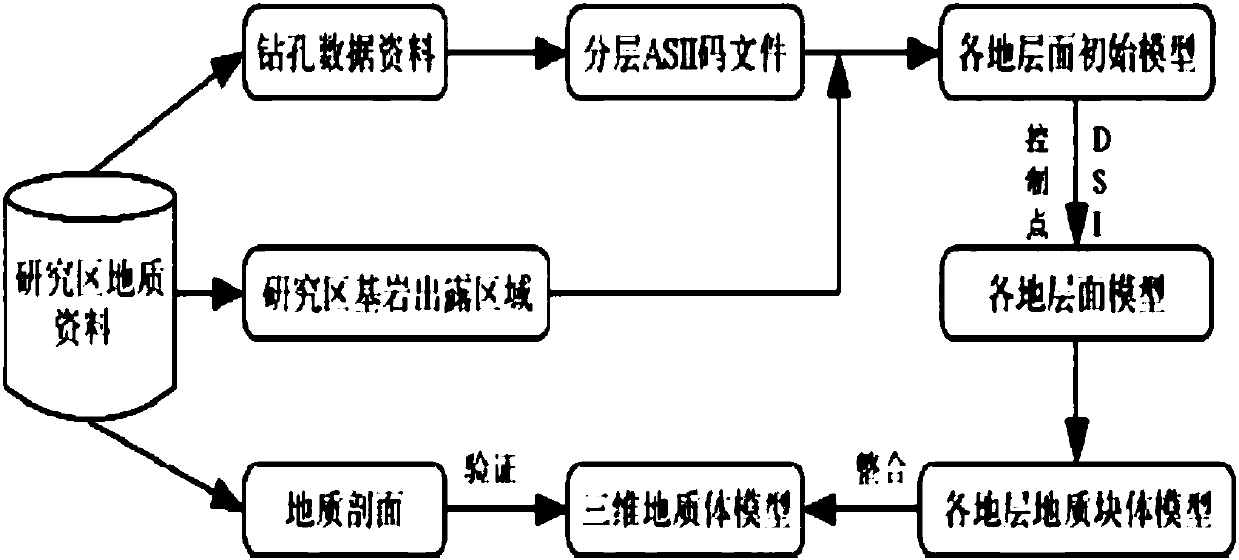

Three-dimensional geologic modeling method based on GOCAD (Geological Object Computer Aided Design)

The invention provides a three-dimensional geologic modeling method based on GOCAD (Geological Object Computer Aided Design). The method comprises the following processes: 1) carrying out data analysis and importing, and generating a point set; 2) establishing and constructing a topographic surface; 3) generating a borehole; 4) carrying out modeling on a rock stratum surface and a construction surface; 5) editing a hook face; and 6) establishing a grid / entity model. By use of the method, field actual measurement discrete geological prospecting data can be used for fitting and interpolation, a three-dimensional geologic model with information, including terrains simulated at a ratio, a stratum boundary, attributes and the like, is established, geologic structure information can be specifically embodied through three-dimensional visualization, coal mine production and water prevention and treatment work can be visually guided through the visualization, a new meaning and method is provided for the analysis and the research of the water prevention and treatment work, and the method has an effective guidance meaning for the analysis of a mine coal water prevention and treatment scheme.

Owner:中国煤炭地质总局水文地质局

Disorderly stacked workpiece grabbing system based on 3D vision and interaction method

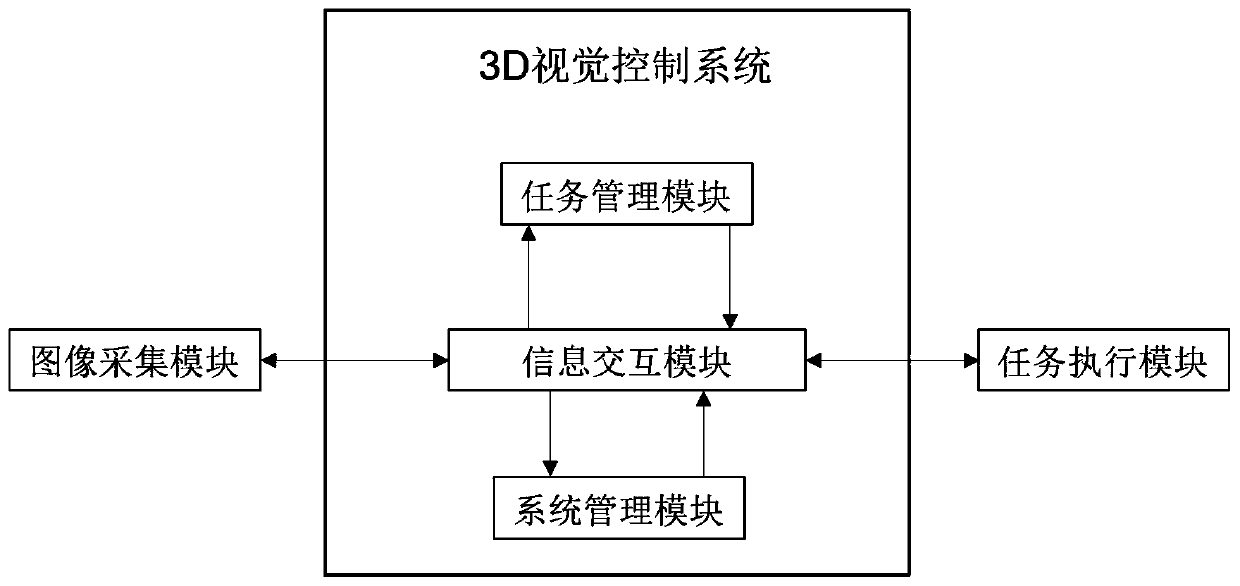

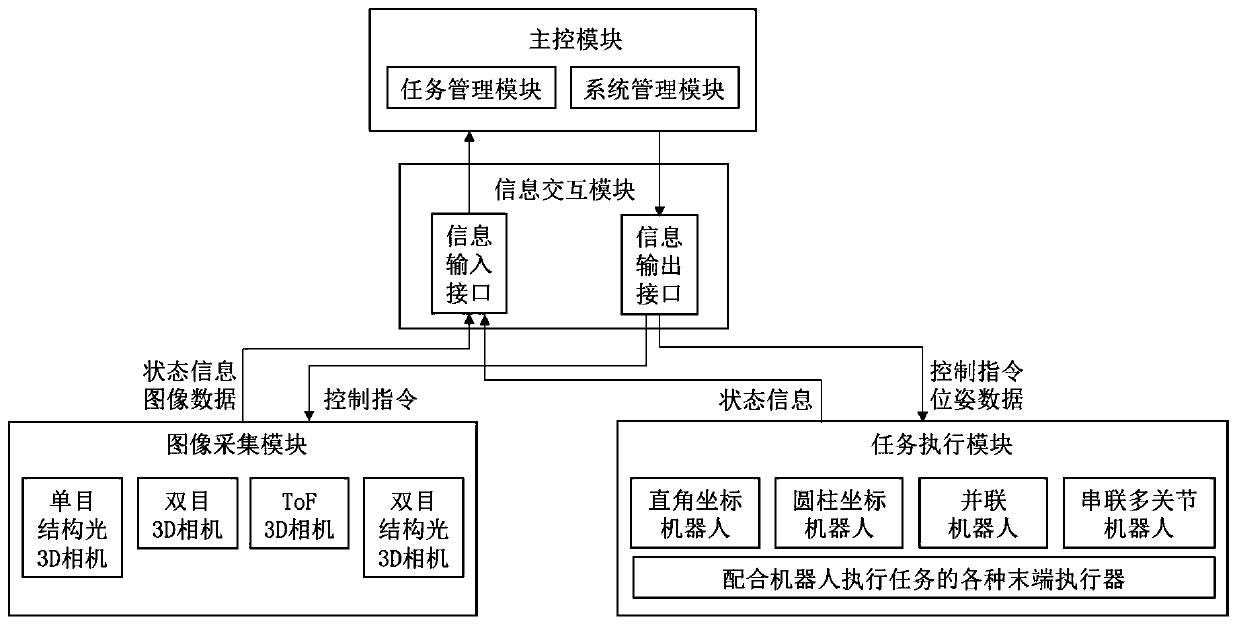

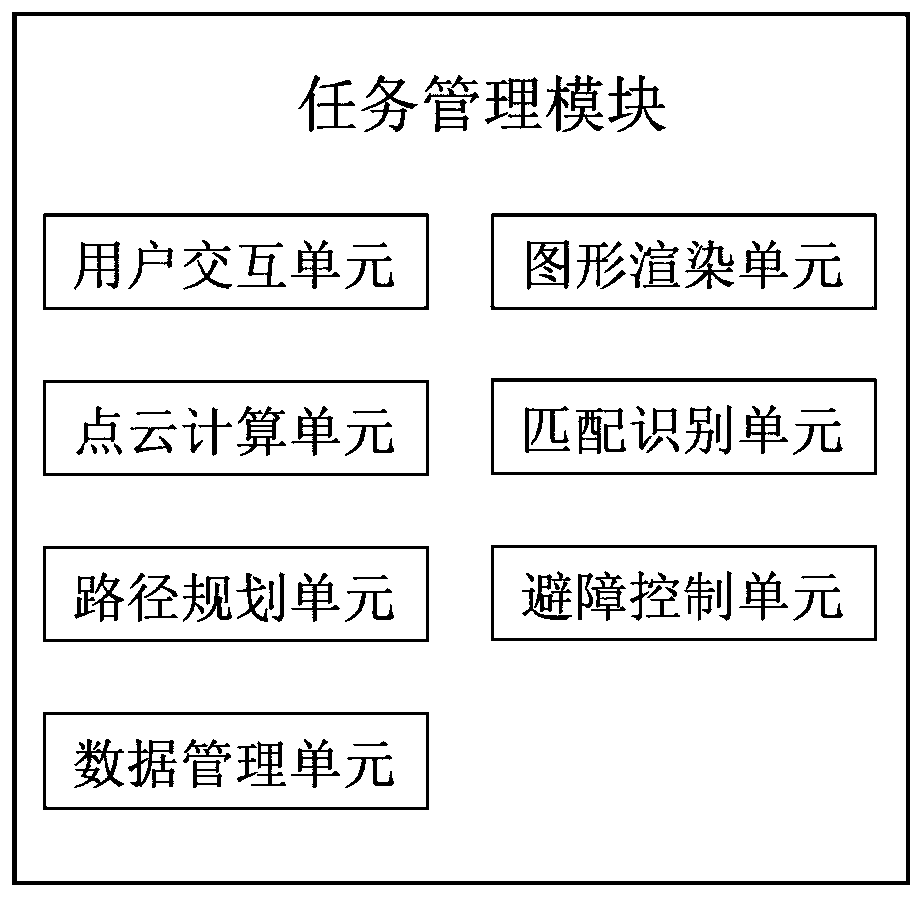

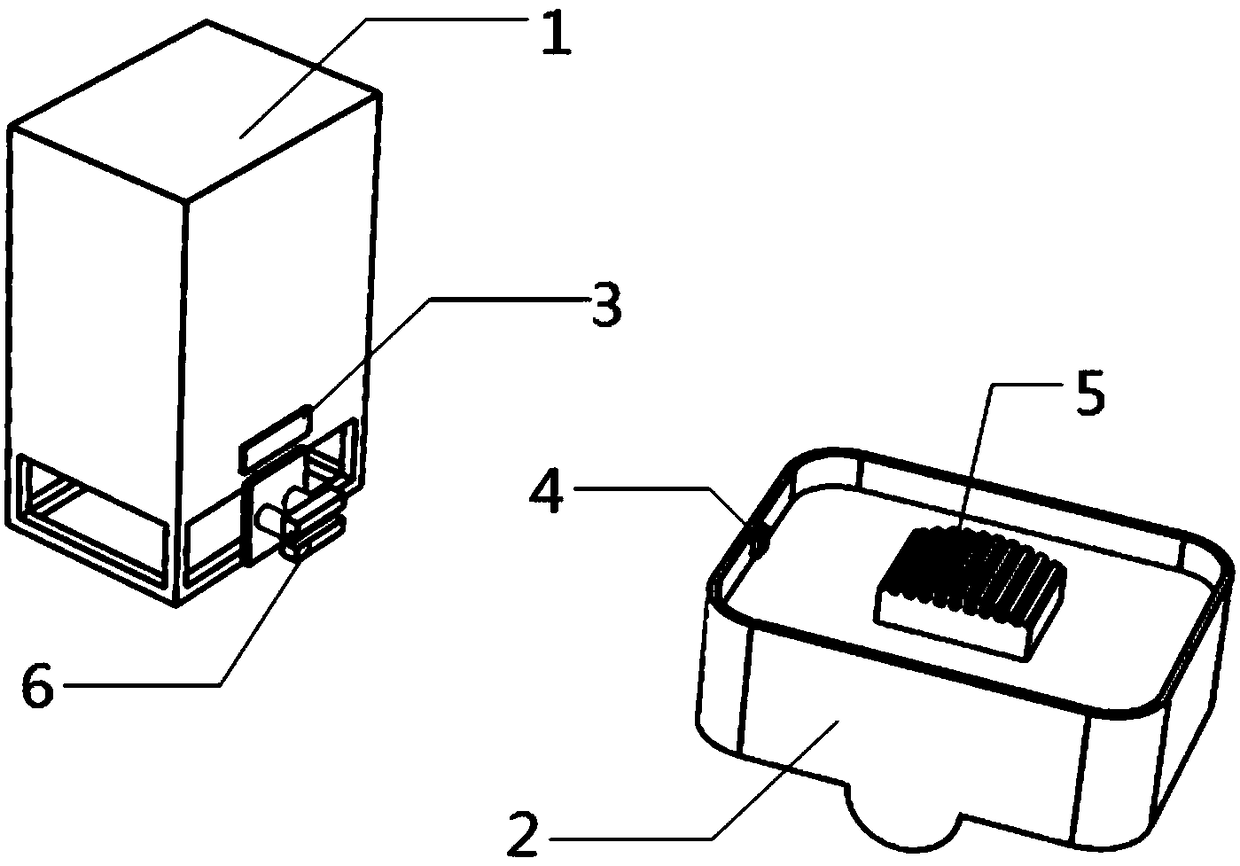

Active · CN111508066A · Lower barriers to use · Reduced commissioning time · Total factory control · Input/output processes for data processing · Visual technology · Systems management

The invention discloses a disorderly stacked workpiece grabbing system based on 3D vision and an interaction method, and relates to the technical field of 3D vision. The system comprises a task management module, an information interaction module, a system management module, an image acquisition module and a task execution module. According to the invention, a process-oriented task creation form is adopted for guiding workers to complete disordered workpiece grabbing task creation according to set steps; workers only need to grasp the specific implementation process of the disordered workpiece grabbing task; the technical details of workpiece point cloud model generation, workpiece matching recognition, grabbing pose calculation, path planning and the like do not need to be paid attention to, the usability of a visual software system is improved, the use threshold and learning cost of a terminal user are reduced, the debugging time of a 3D visual guidance robot for executing a grabbing task is shortened, and the production efficiency is improved.

Owner:北京迁移科技有限公司

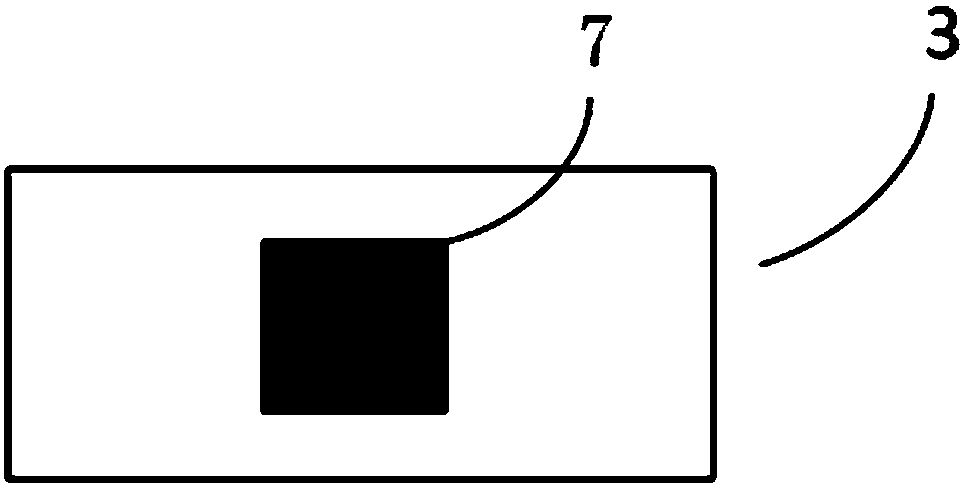

Alignment system and method for automatic charging of robots based on vision

Active · CN108508897A · No wear · Improve real-time performance · Batteries circuit arrangements · Electric power · Signal-to-noise ratio (imaging) · Vision based

The invention discloses an alignment system and method for automatic charging of robots based on vision. An active visual guidance device is installed on a charging base, a common camera is installed at the charging end of a mobile robot, the position opposite to the charging base and an approximate angle of the robot are calculated according to the position of a guidance marker in an image captured by the camera and visual characteristics, and then the robot is accurately aligned to the charging base by adopting an alignment motion control algorithm. Positioning markers such as magnetic bars laid on the ground or two-dimensional codes are not needed, so that the problem does not exist that failure is caused by manual or mechanical abrasion. The calculation of the relative position of the mobile robot can be up to the precision of image pixel level, real-timeliness is good, and the precision is high. Compared with an image identification scheme of a charging base body, the active visual guidance device actively transmits marking signals, is simple in identification, has higher signal to noise ratio and can be obviously different from a background, and therefore guidance and positioning are more stable and reliable.

Owner:HANGZHOU LANXIN TECH CO LTD

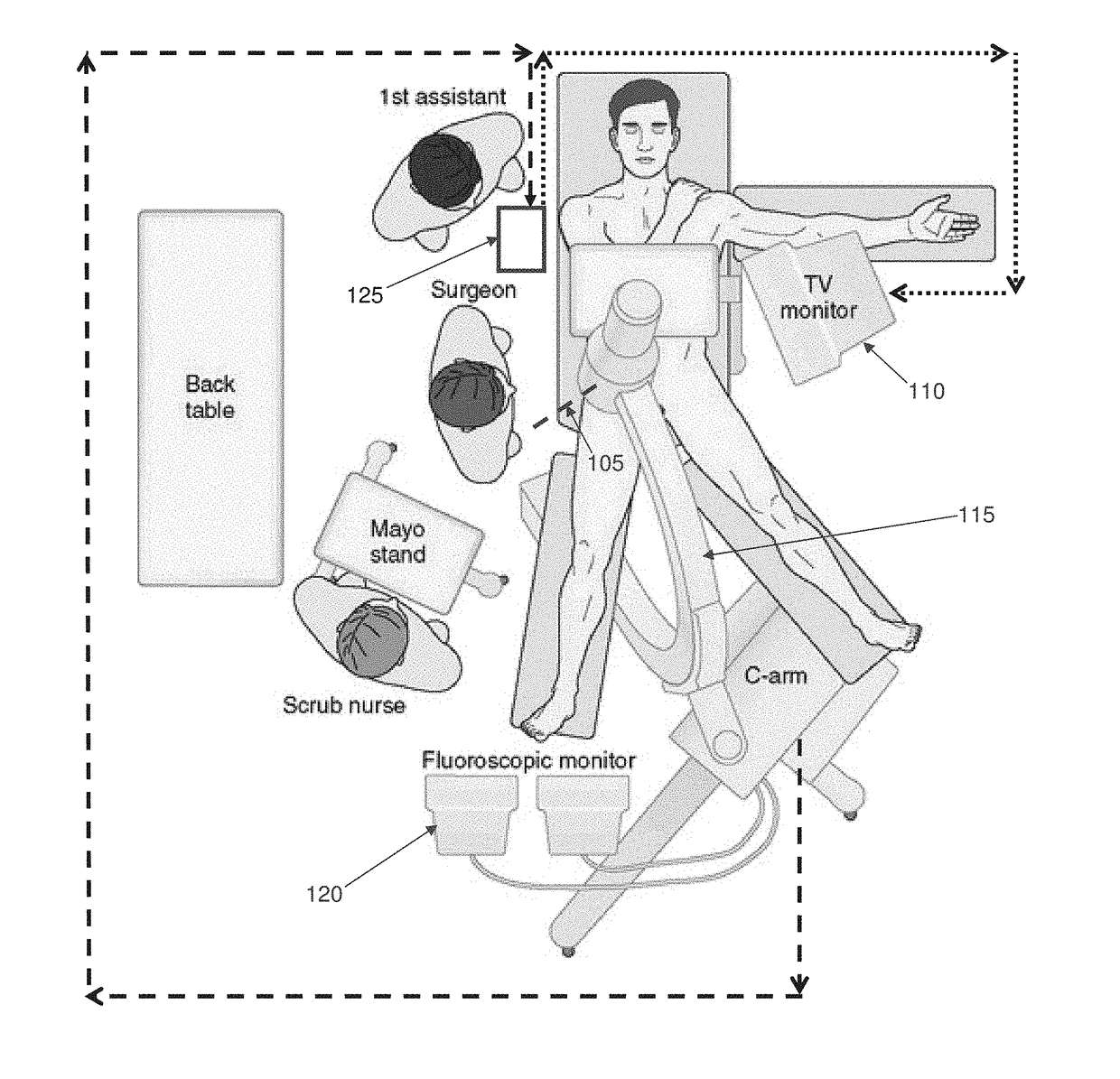

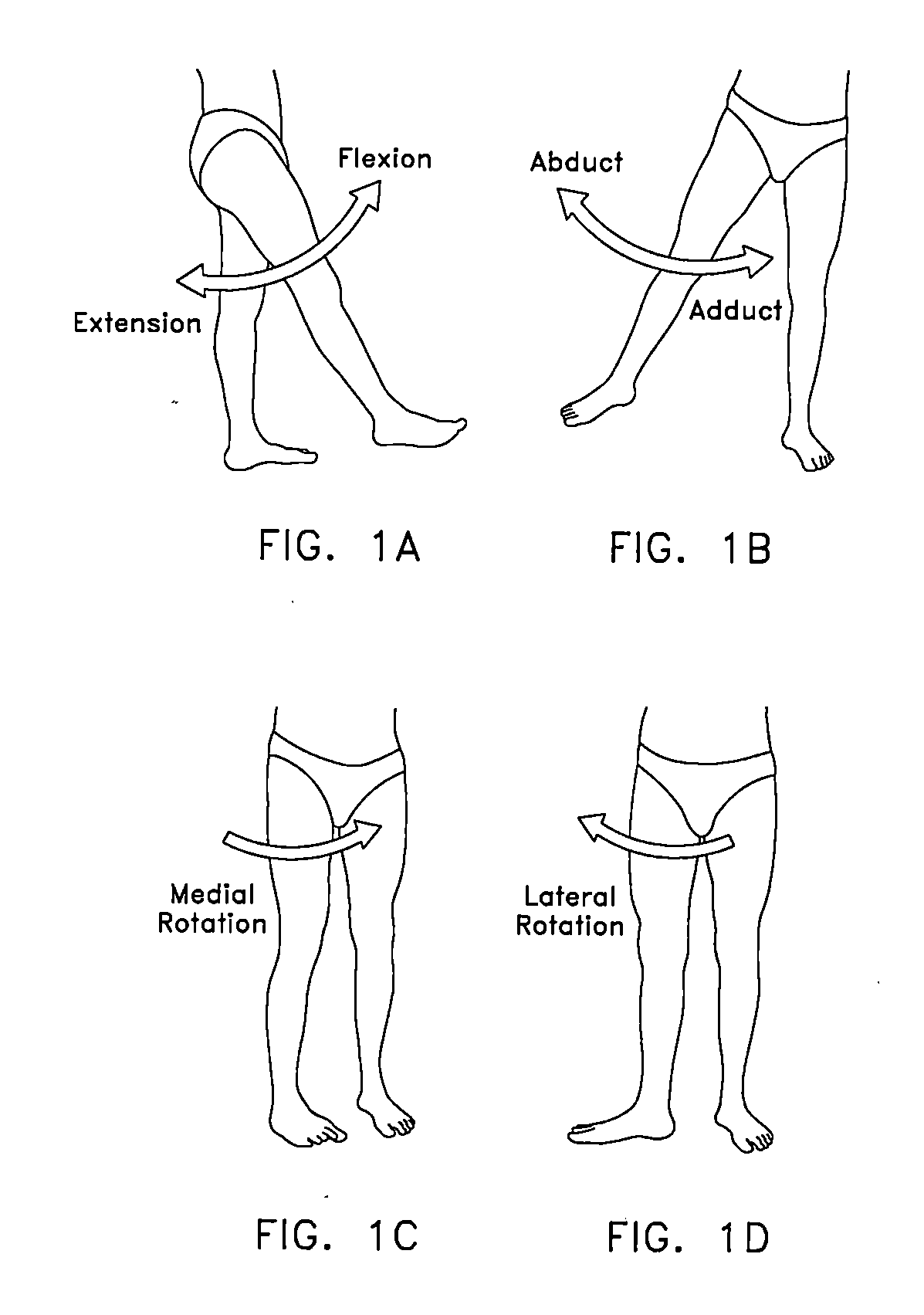

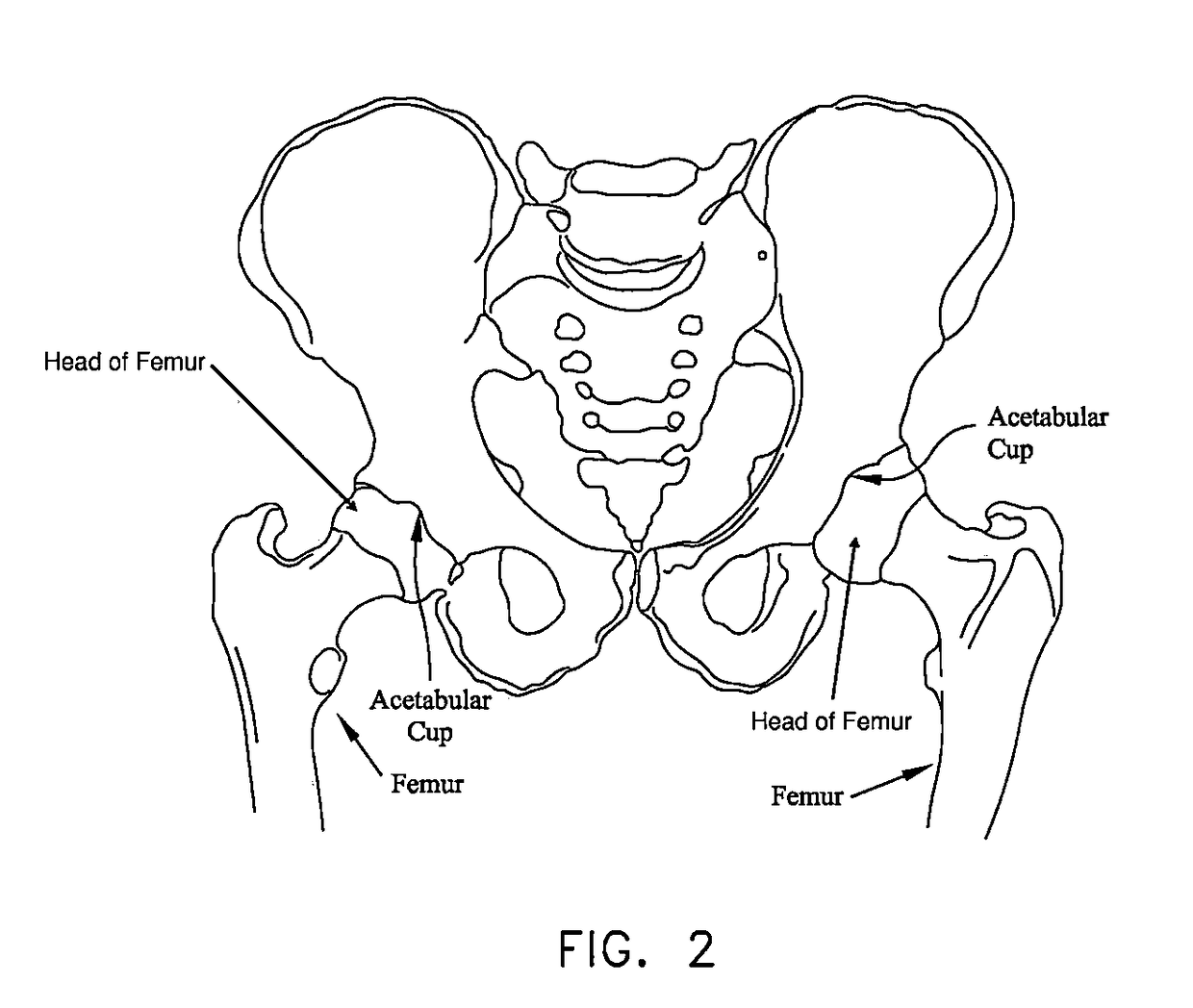

Method and apparatus for treating a joint, including the treatment of cam-type femoroacetabular impingement in a hip joint and pincer-type femoroacetabular impingement in a hip joint

A computer visual guidance system for guiding a surgeon through an arthroscopic debridement of a bony pathology, wherein the computer visual guidance system is configured to: (i) receive a 2D image of the bony pathology from a source; (ii) automatically analyze the 2D image so as to determine at least one measurement with respect to the bony pathology; (iii) automatically annotate the 2D image with at least one annotation relating to the at least one measurement determined with respect to the bony pathology so as to create an annotated 2D image; and (iv) display the annotated 2D image to the surgeon so as to guide the surgeon through the arthroscopic debridement of the bony pathology.

Owner:STRYKER CORP

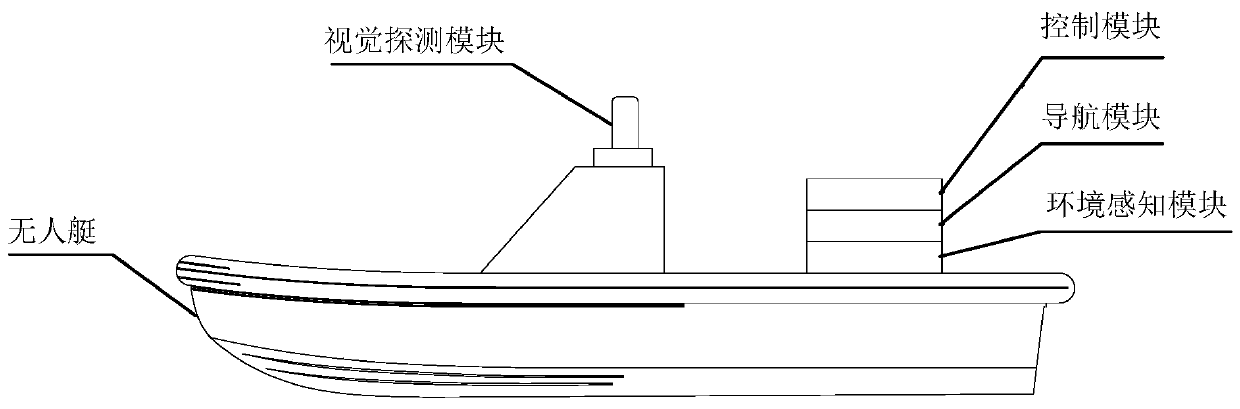

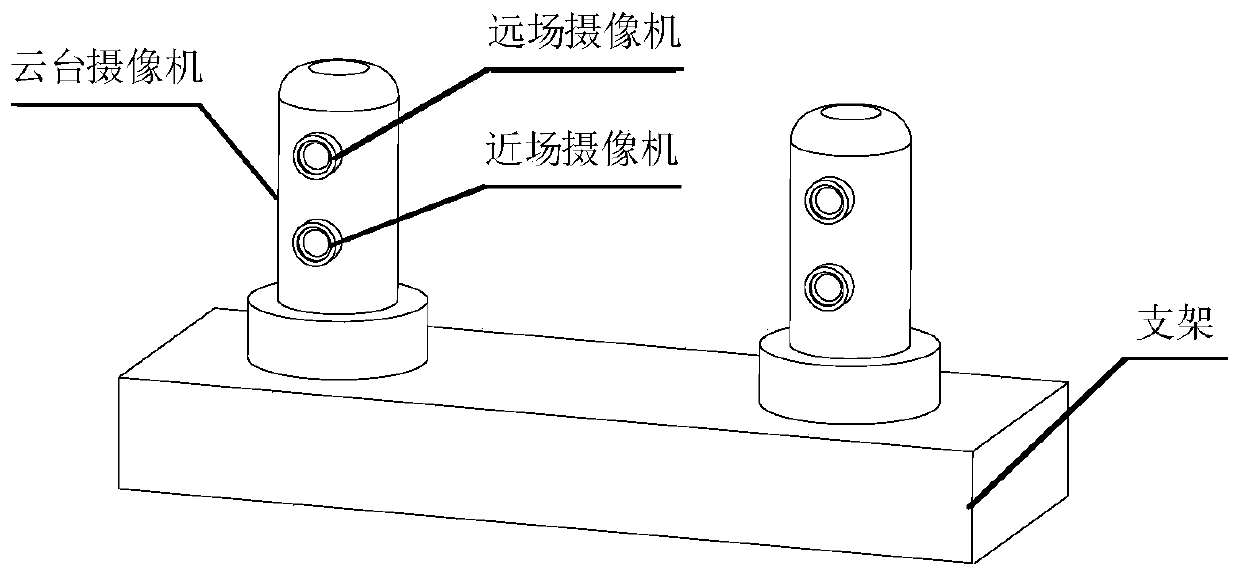

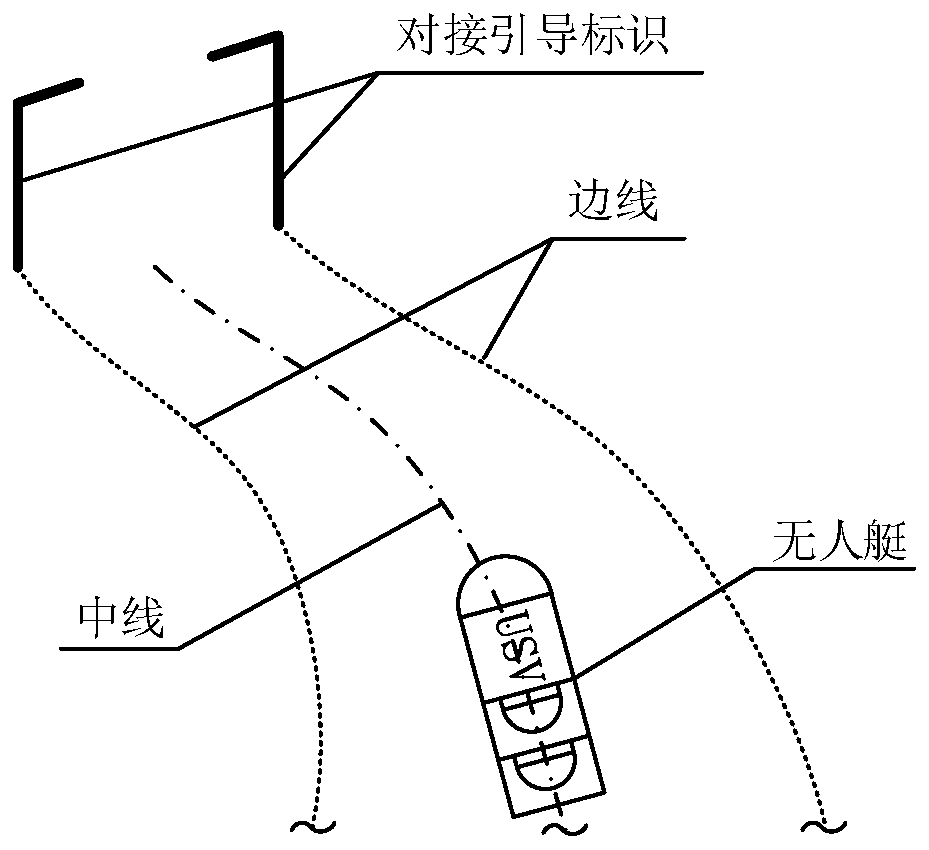

Visual guidance device and method for unmanned surface vehicle recycling

Inactive · CN110162042A · Overcoming difficulties in recycling guide positioning · Improve accuracy · Waterborne vessels · Unmanned surface vessels · Closed loop · Vision sensor

The invention discloses a visual guidance device and method for unmanned surface vehicle recycling. The method comprises the following steps: measuring a relative distance with a mother ship target, a direction and a heading in real time through a pan and tilt camera, thereby realizing the positioning of the mother ship target, combining the relative locations of the unmanned surface vehicle with two sidelines in a sailing channel and the offset of the central line, controlling the displacement, direction and deviation angle of the automatic driving of the unmanned surface vehicle in a closed-loop way, and maintaining center line driving state in the unmanned surface vehicle cycling process. If the unmanned surface vehicle deviates from two sidelines of the sailing channel in the sailing, the condition indicates that the unmanned surface vehicle misses the sailing channel, a navigation module of the unmanned surface vehicle re-plans an auxiliary lead line of the unmanned surface vehicle. The method disclosed by the invention is small in external interference and wide in signal detection range, and the acquired target information is complete, and the target motion parameters in the whole field of view of the visual sensor can be dynamically measured.

Owner:CHINA SHIP DEV & DESIGN CENT

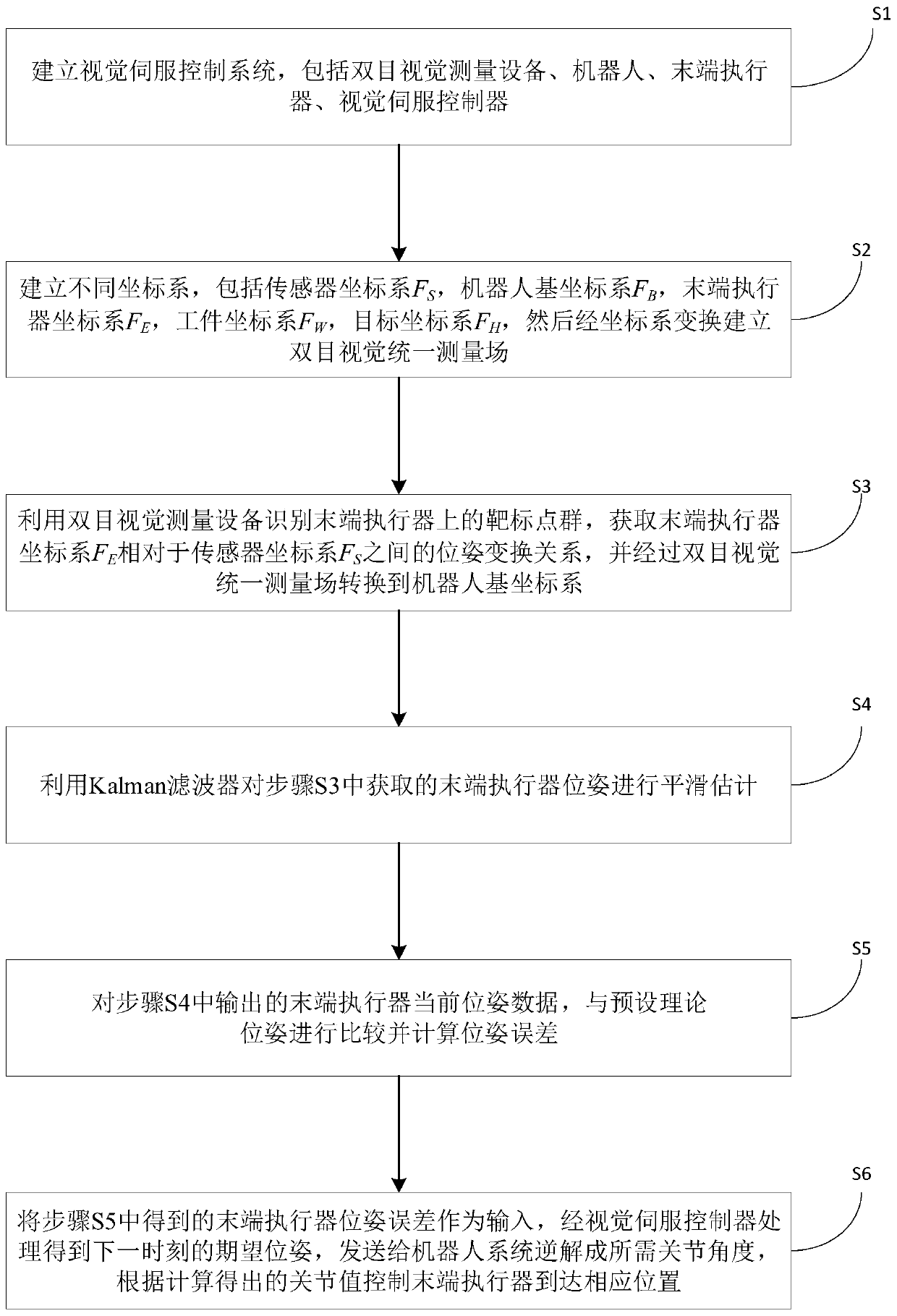

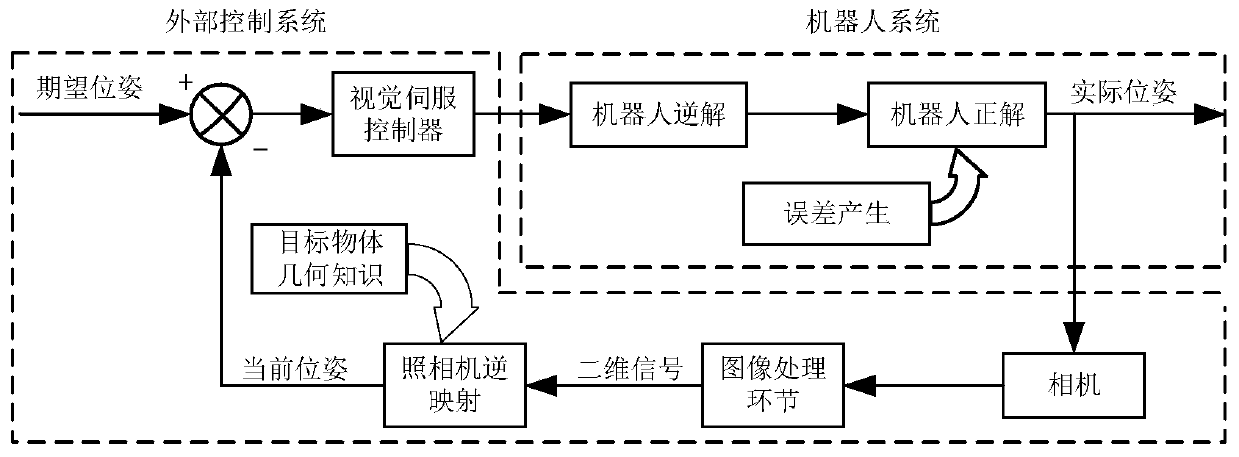

Robot trajectory tracking control method based on visual guidance

Active · CN111590594A · Solve poor trajectory accuracy · Optimize layout · Programme-controlled manipulator · Vision based · Vision sensor

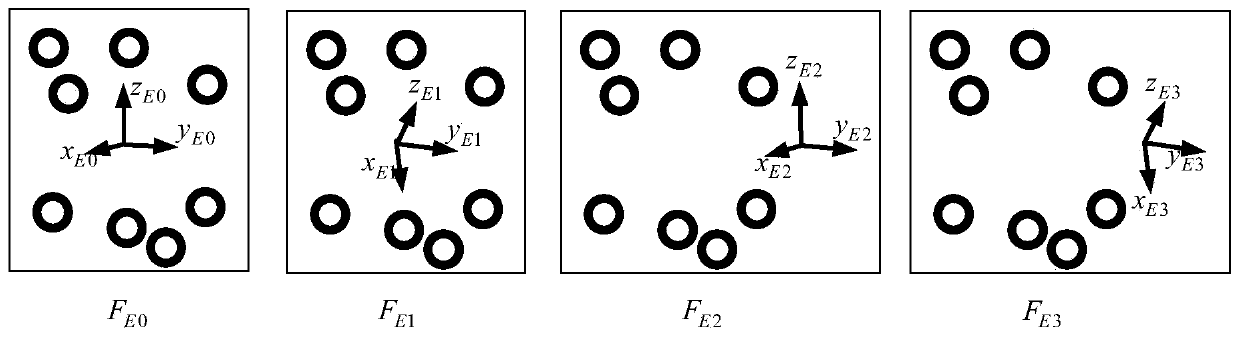

The invention relates to a robot trajectory tracking control method based on visual guidance. The method comprises the following steps: establishing a robot visual servo control system; establishing a binocular vision unified measurement field; carrying out observing by using a binocular vision device to obtain a pose transformation relationship between an end effector coordinate system and a measurement coordinate system, and converting the pose transformation relationship to a robot base coordinate system through the binocular vision measurement field; carrying out smooth estimation on the observed pose of an end effector by utilizing a Kalman filter; calculating the pose error of the end effector; and designing a visual servo controller based on fuzzy PID, processing the pose error to obtain an expected pose at the next moment, and sending the expected pose to a robot system to control the end effector to move. The technical scheme is oriented to the field of flexible machining of aerospace large parts and the application requirements of robot high-precision machining equipment, the pose of the end effector is sensed in real time through a visual sensor, so that a closed-loop feedback system is formed, and the trajectory motion precision of a six-degree-of-freedom series robot is greatly improved.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
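
The pipeline in the abstract above (smooth the observed pose with a Kalman filter, compute the pose error, pass it through a PID-type controller to get the expected pose at the next moment) can be illustrated on a single pose axis. This is a simplified sketch under stated assumptions: it uses a scalar constant-state Kalman filter and a plain PID controller in place of the fuzzy PID named in the patent, and every class name, gain, and noise value is hypothetical.

```python
class ScalarKalman:
    """1-D Kalman filter with a constant-state model (an assumption for this sketch)."""
    def __init__(self, x0, p0=1.0, q=1e-4, r=1e-2):
        self.x = x0  # state estimate
        self.p = p0  # estimate variance
        self.q = q   # process noise variance
        self.r = r   # measurement noise variance

    def update(self, z):
        self.p += self.q                    # predict: state unchanged, uncertainty grows
        gain = self.p / (self.p + self.r)   # Kalman gain
        self.x += gain * (z - self.x)       # correct with the measurement residual
        self.p *= 1.0 - gain
        return self.x

class PID:
    """Plain PID controller; stands in for the fuzzy PID of the abstract."""
    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = 0.0

    def step(self, error):
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def track_axis(target, measurements, dt=0.01):
    """Return the commanded pose for each measurement on one axis."""
    kf = ScalarKalman(x0=measurements[0])
    pid = PID(kp=0.8, ki=0.1, kd=0.0, dt=dt)
    commands = []
    for z in measurements:
        estimate = kf.update(z)
        # Expected pose at the next moment = smoothed pose + controller correction.
        commands.append(estimate + pid.step(target - estimate))
    return commands
```

A real six-degree-of-freedom controller would run this per pose component (or on a full state vector) and would adapt the PID gains with fuzzy rules, which this sketch does not attempt.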

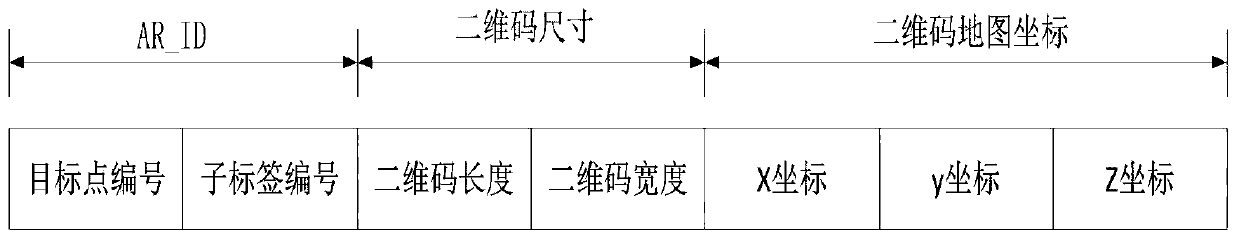

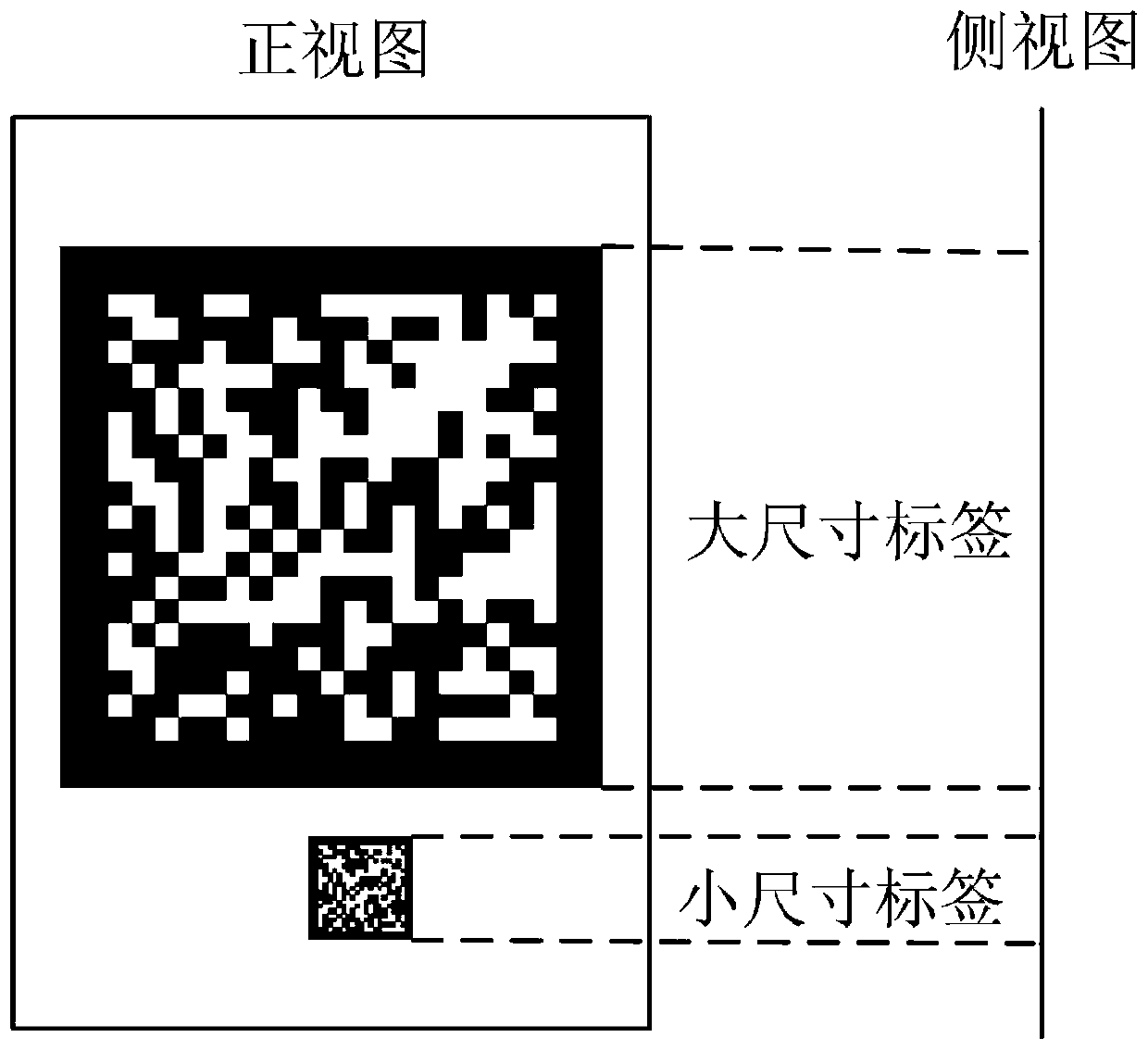

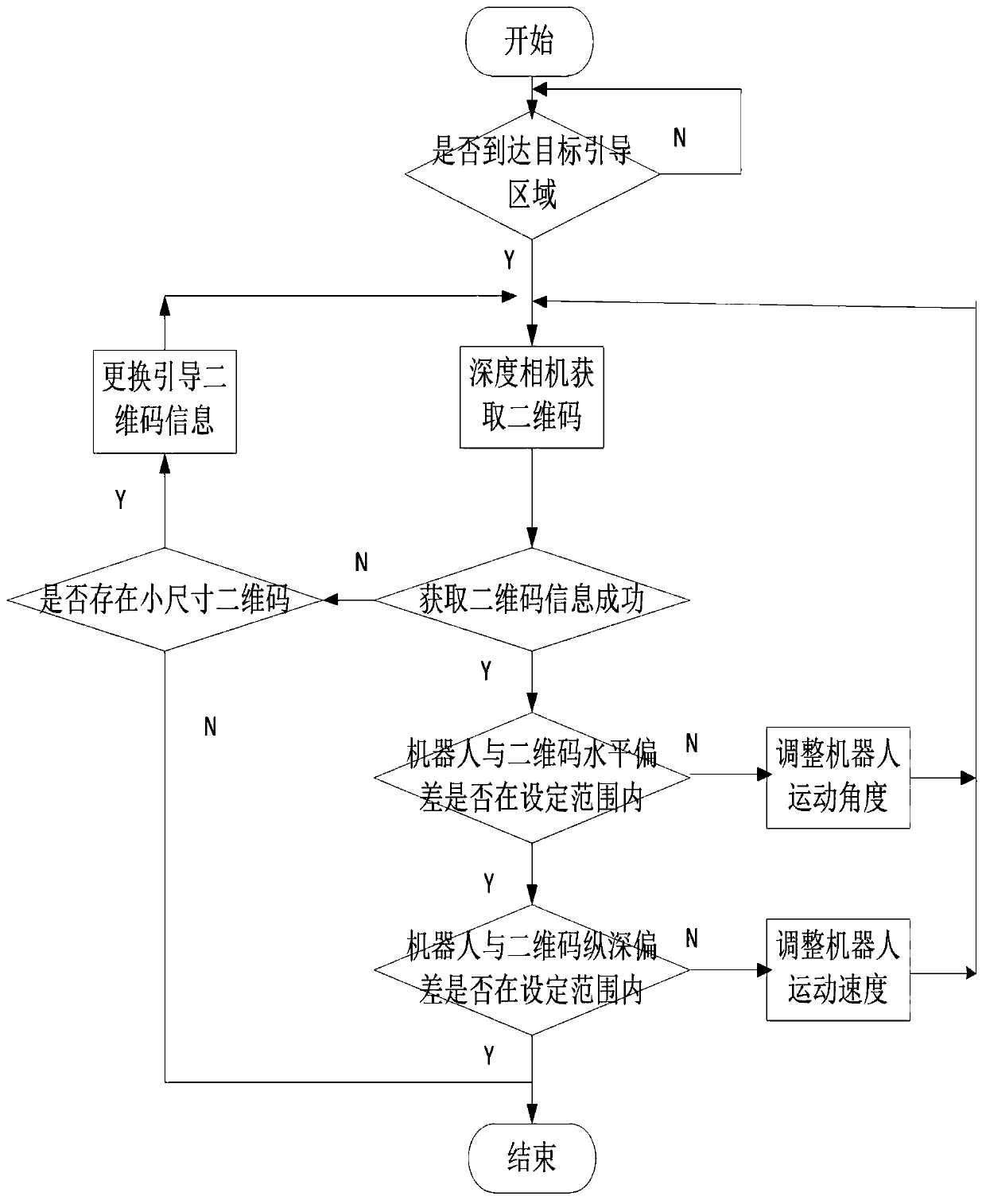

Two-dimensional code guidance control method of autonomous mobile robot

Pending · CN110673612A · Solve the positioning accuracy · Solve problems such as path planning · Position/course control in two dimensions · Guidance control · Engineering

The invention relates to a two-dimensional code guidance control method of an autonomous mobile robot, and belongs to the technical field of guidance control of mobile robots. The method comprises the steps of: marking a guidance target position on a constructed map by using a plurality of two-dimensional codes based on two-dimensional code vision and in combination with real-time positioning and composition of the mobile robot; moving, by the autonomous mobile robot, to a two-dimensional code area to complete rough positioning; and then, identifying, by the mobile robot, the two-dimensional code, calculating a three-dimensional coordinate point and pose coordinates of the point, adjusting the forward speed and direction of the mobile robot according to the identified posture of the two-dimensional code and a spatial position of the two-dimensional code relative to a camera, so that the robot moves to the guidance target position to complete target guidance. The two-dimensional code guidance control method provided by the invention has the advantages that a two-dimensional code label is used to cooperate with the camera to perform visual guidance, so that the posture characteristics are obvious, the processing is fast, the hardware cost is low, and the guidance accuracy is high.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
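
The final step in the abstract above adjusts forward speed and direction from the code's position relative to the camera. A minimal sketch, assuming the code's position is already expressed in a camera frame with x forward and y to the left, and assuming a simple proportional law that the patent does not specify; `guidance_command` and its gains are hypothetical.

```python
import math

def guidance_command(tag_x, tag_y, stop_distance=0.3, k_lin=0.5, k_ang=1.5, v_max=0.4):
    """Return (forward_speed, turn_rate) that drives the robot toward the code.

    tag_x, tag_y: code position in the camera frame, in metres (x forward, y left).
    """
    distance = math.hypot(tag_x, tag_y)
    if distance <= stop_distance:
        return 0.0, 0.0                      # close enough: guidance complete
    bearing = math.atan2(tag_y, tag_x)       # angle to the code; positive means left
    forward_speed = min(v_max, k_lin * (distance - stop_distance))
    turn_rate = k_ang * bearing
    return forward_speed, turn_rate
```

The identified posture (orientation) of the code, which the abstract also uses, is left out here for brevity.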

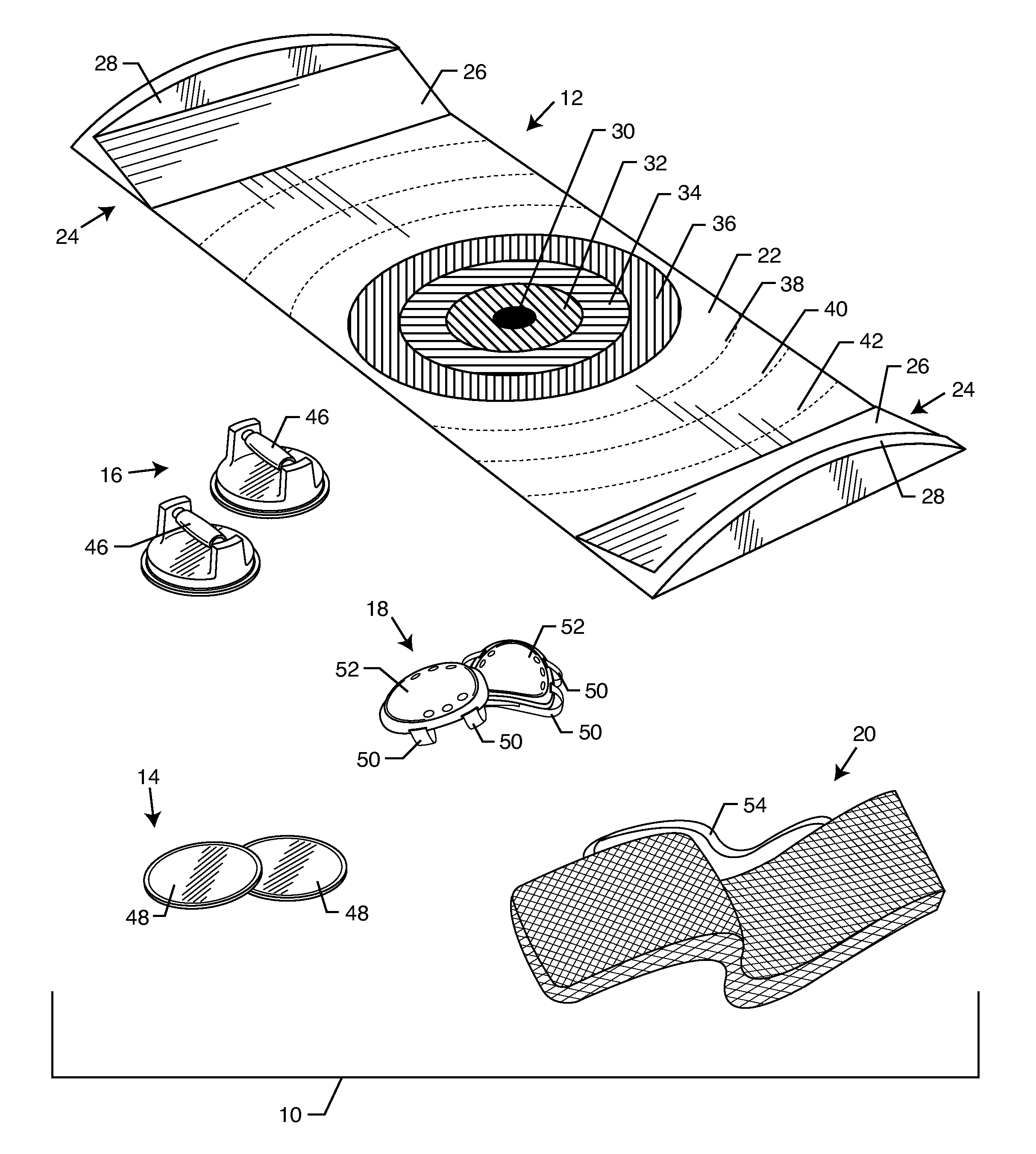

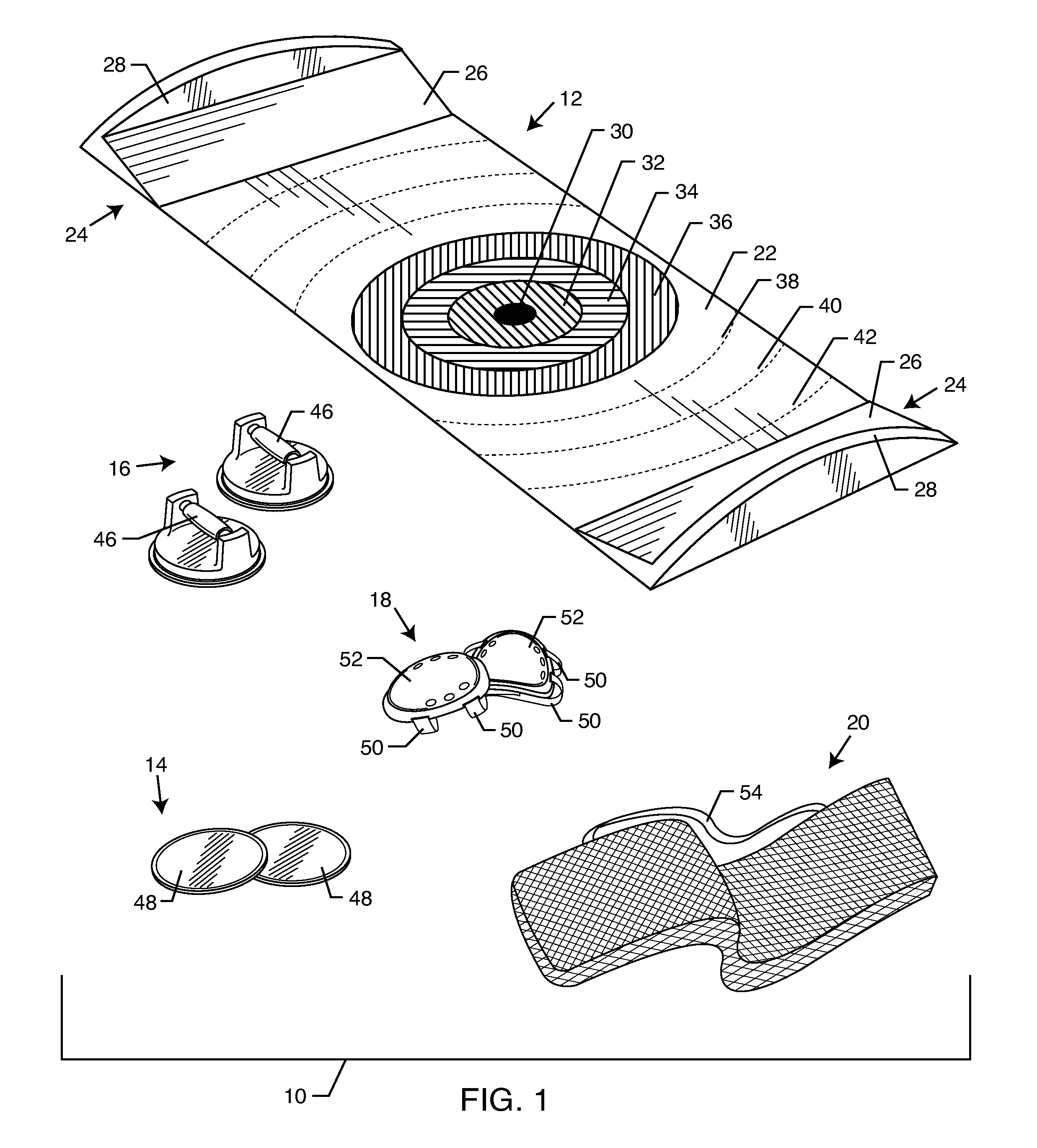

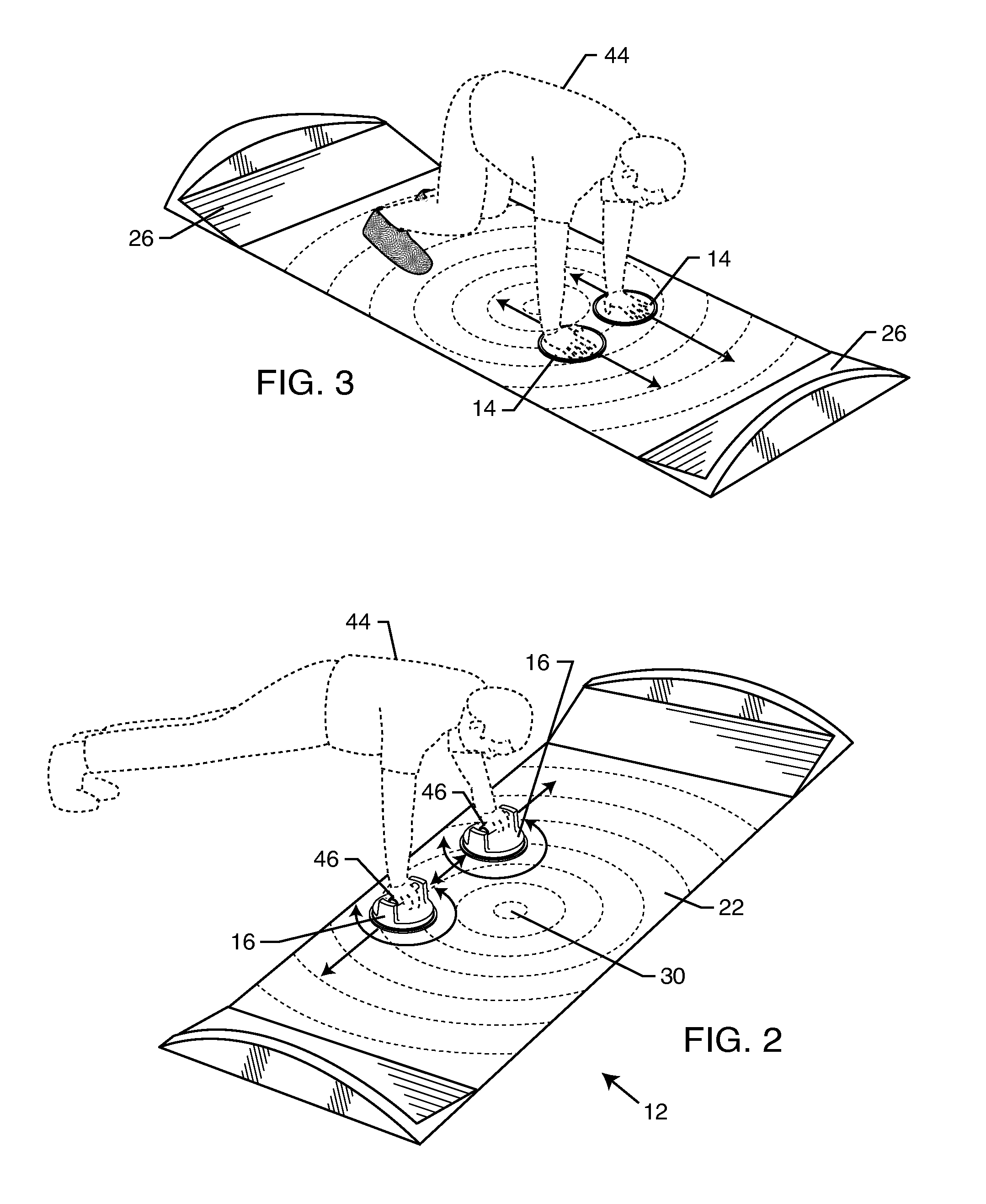

Multi-exercise slide board

Inactive · US20110230312A1 · Easy to slide · Facilitates rotating movement · Stilts · Muscle exercising devices · Push ups · Visual perception

The multi-exercise trainer includes a portable workout board having a low-friction upper surface. The low-friction upper surface includes a set of indicia concentrically spaced from one another to provide visual guidance for performing exercises thereon. The multi-exercise trainer further includes a hand pad slidable and rotatable over the low-friction upper surface. The indicia designate defined distances on the low-friction upper surface so a user may selectively position and slide the hand pad thereon while simultaneously performing push-ups.

Owner:BORG UNLTD

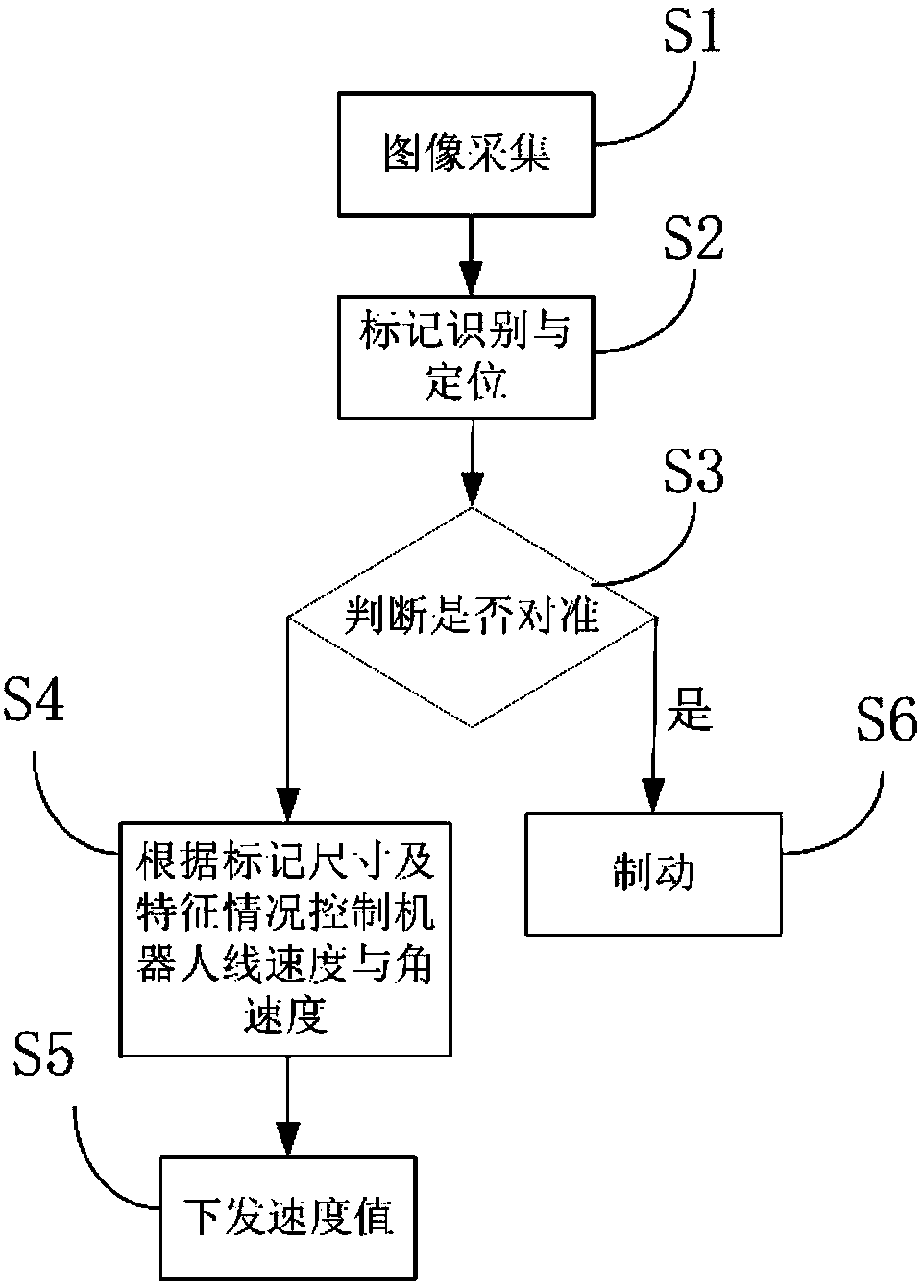

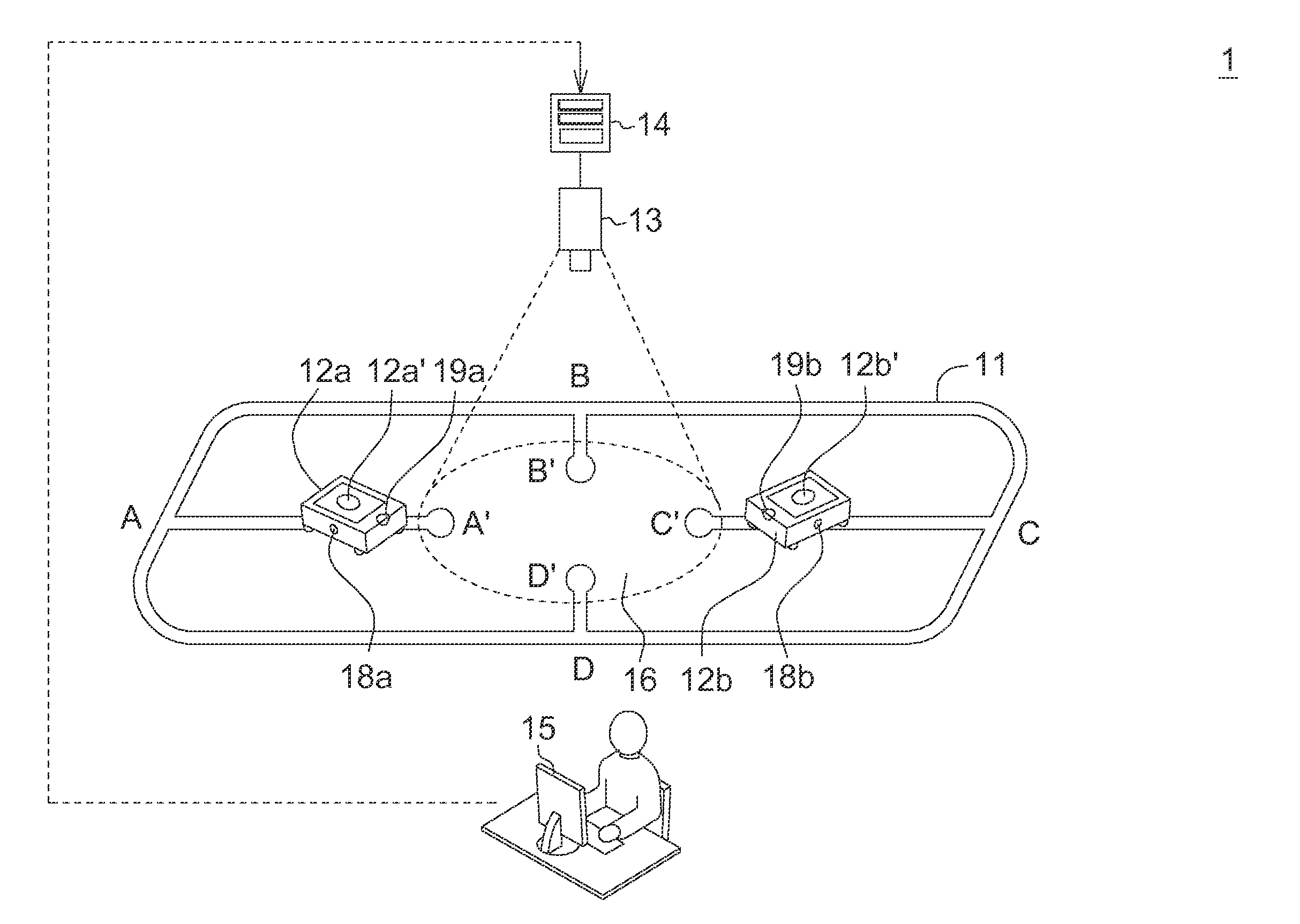

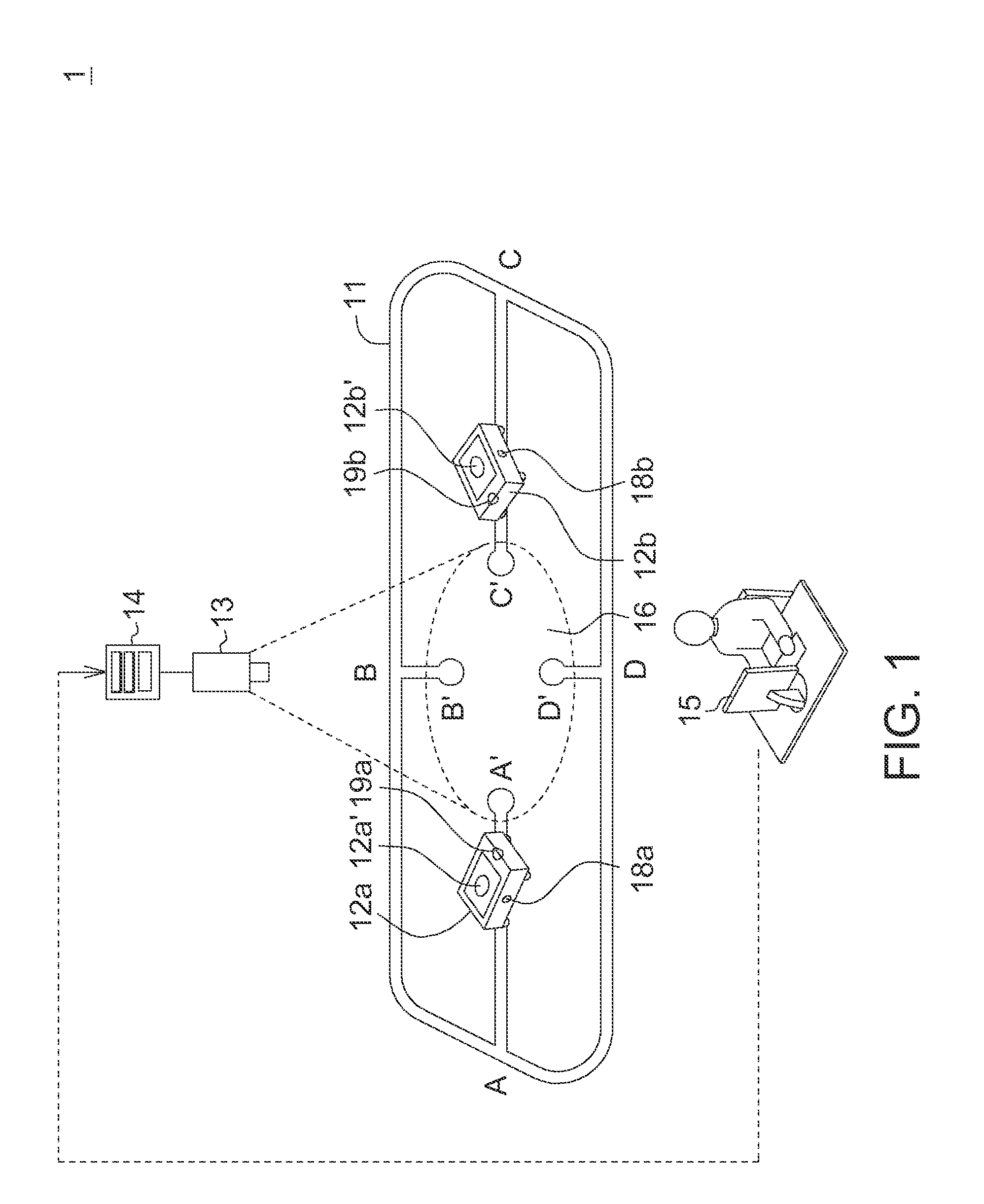

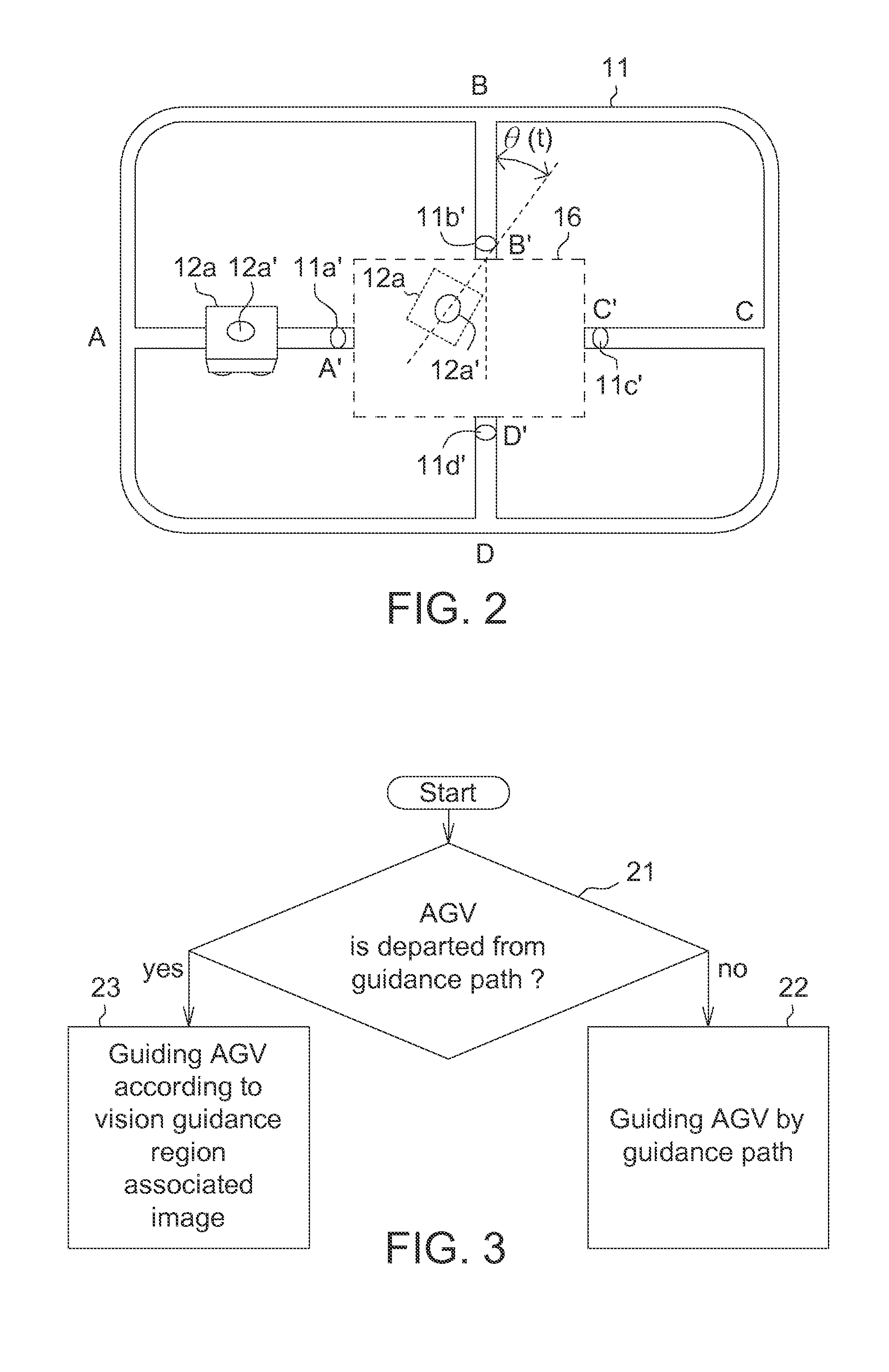

System and method for guiding automated guided vehicle

Active · US20130158773A1 · Position/course control in two dimensions · Distance measurement · Control engineering · Automated guided vehicle

A system for guiding an automated guided vehicle (AGV) is provided. The system includes a guidance path, an AGV, an image capturing apparatus and an operation unit. The guidance path guides the AGV. The AGV moves on the guidance path and is guided by the guidance path. The AGV moves in a vision guidance region after departing from the guidance path. The image capturing apparatus captures a vision guidance region associated image. The vision guidance region associated image at least includes an image of the vision guidance region. The operation unit determines whether the AGV departs from the guidance path, and calculates position information of the AGV in the vision guidance region. When the AGV departs from the guidance path, the operation unit guides the AGV according to the vision guidance region associated image.

Owner:IND TECH RES INST

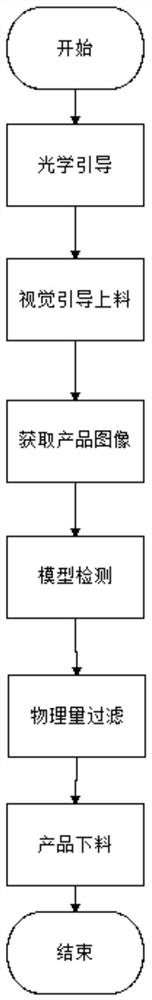

Visual detection method for appearances

Active · CN111951237A · Reduce generation · Reduce time complexity · Image enhancement · Image analysis · Pattern recognition · Image detection

The invention provides a visual detection method for appearances which comprises the following steps: an optical guiding step: calculating the similarity of images to be compared by taking a reference image as a standard, so as to ensure the optical imaging consistency of the images acquired by the same batch of products on different machines; and in the visual guidance step, when small part products need to reach preset high precision, otherwise, indicating that a mechanical arm cannot conduct normal feeding; acquiring the deviation angle, the X position and the Y position of a product through a visual guidance algorithm before product feeding, and notifying the machines to conduct adjustment to ensure the product feeding precision. According to the invention, a deep learning and traditional image processing machine vision detection method is adopted, so that the requirement of similarity comparison in an image acquisition stage exists, and the invention is used for ensuring that consistent images are acquired, thereby ensuring accuracy of subsequent data for depth model detection and the accuracy of image detection.

Owner:上海微亿智造科技有限公司 +1

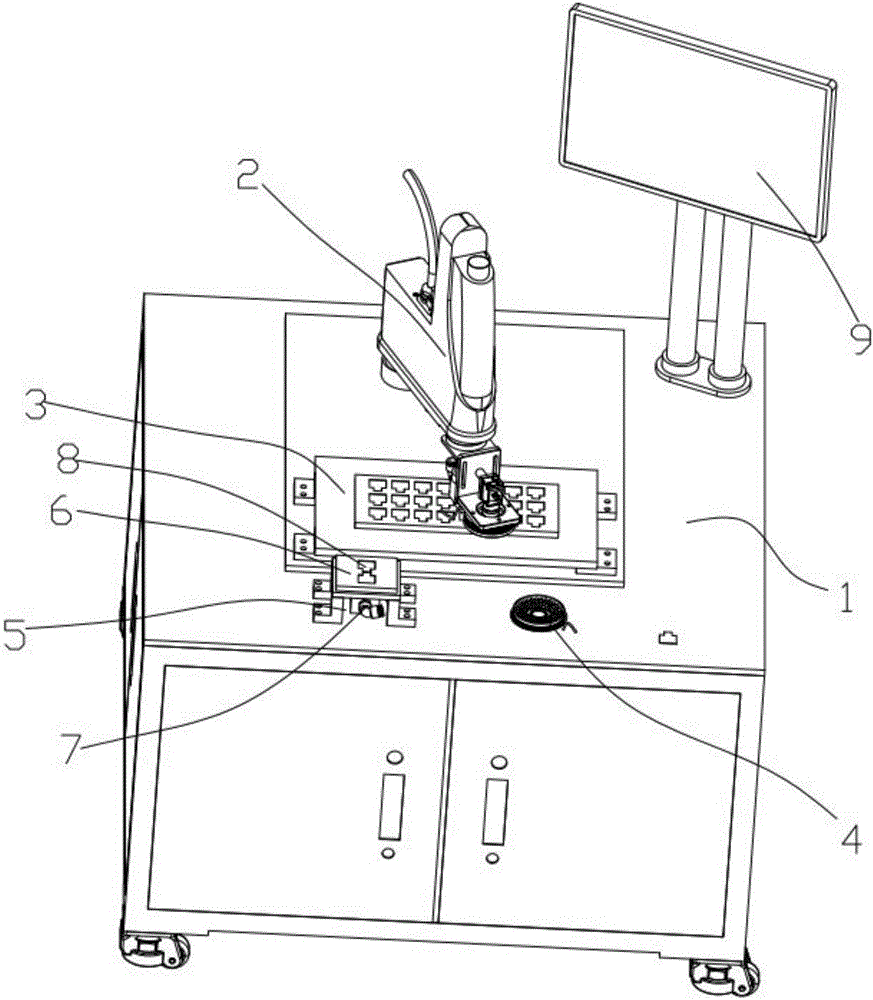

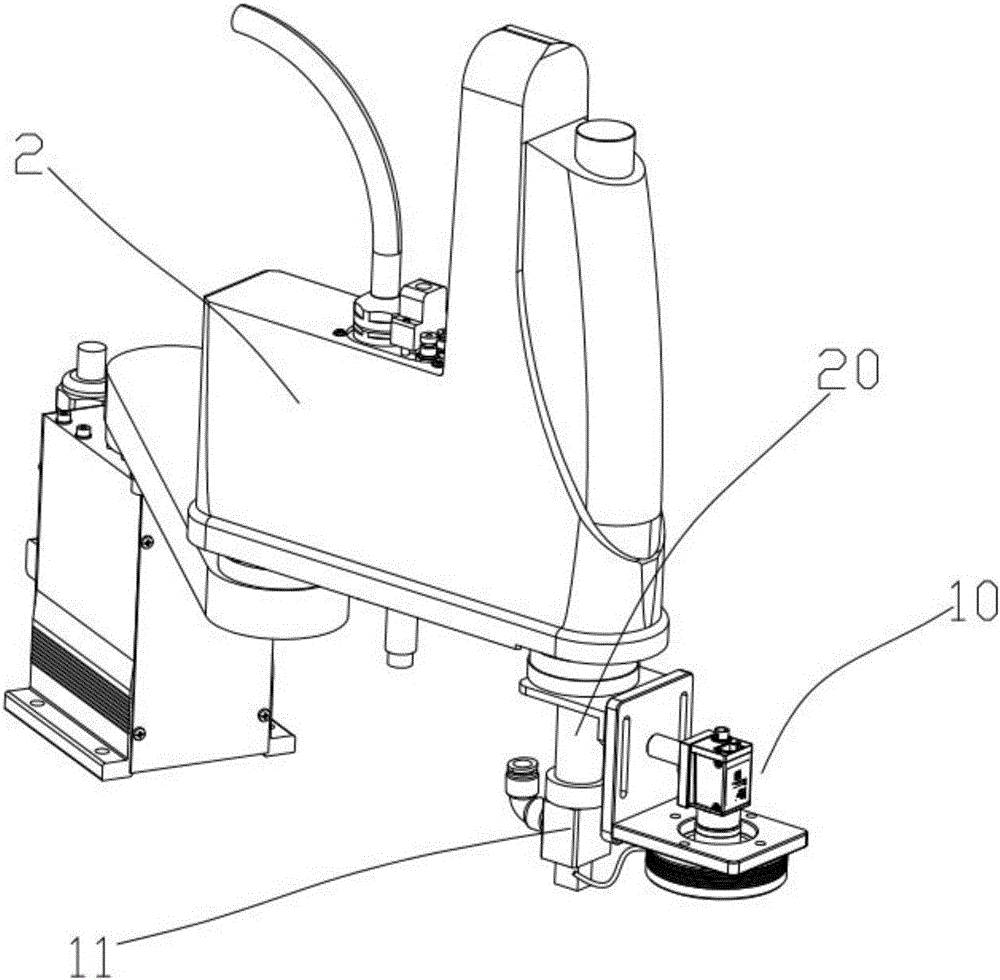

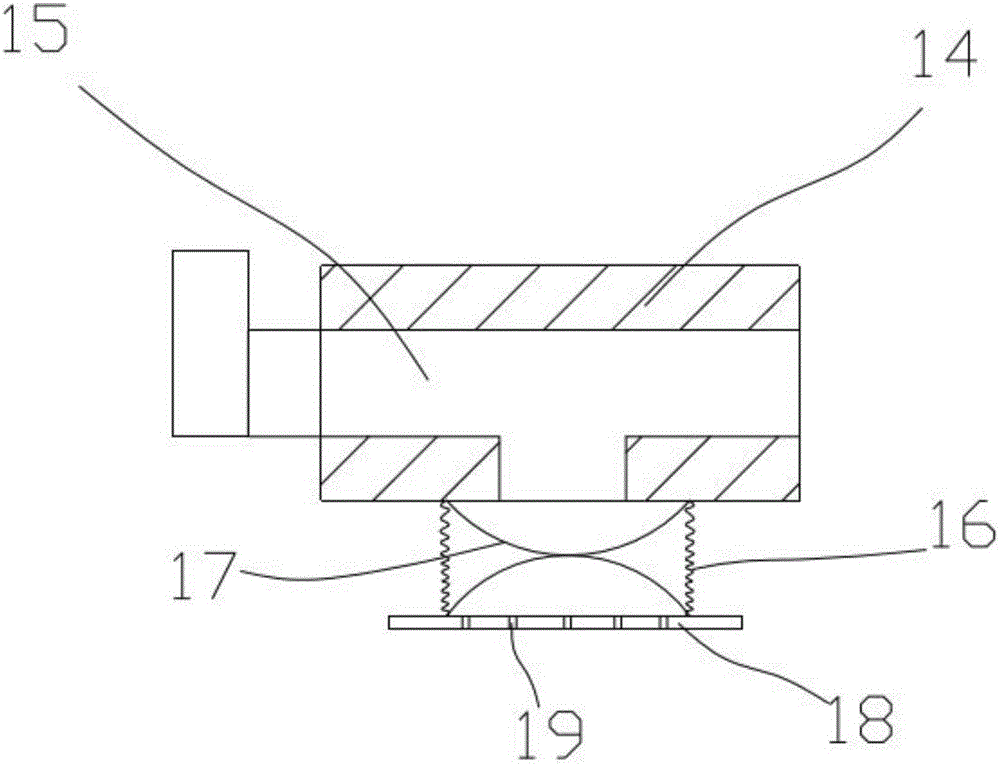

FPC precision alignment machine

Pending · CN106817851A · Solve efficiency problems · Solve visual · Printed circuit assembling · Engineering · Visually guided

The invention provides an FPC precision aligning machine, which comprises a working table. The working table is provided with a four-axis robot, a material loading plate, an alignment loading table and a first visual camera. The output end of the four-axis robot is connected with a rotating shaft. The rotating shaft is connected with a second visual camera and a grabbing mechanism. The first visual camera is arranged upwards. The alignment loading table is composed of a supporting seat and the supporting seat is provided with a loading plate. The loading plate is provided with two symmetrically arranged material loading levels. The lower part of the material loading levels is communicated with a first vacuum suction disc. Based on the visual guidance, the precise alignment is conducted. Therefore, the problems of low personnel efficiency and visual fatigue can be solved. Moreover, the high precision is achieved based on the visual guidance. When a soft FPC board is changed, a new product can be realized only through relearning the visual program. The utilization rate of equipment is improved, and the flexibility is high. The diversified demands of customers are well satisfied.

Owner:苏州华天视航智能装备技术有限公司

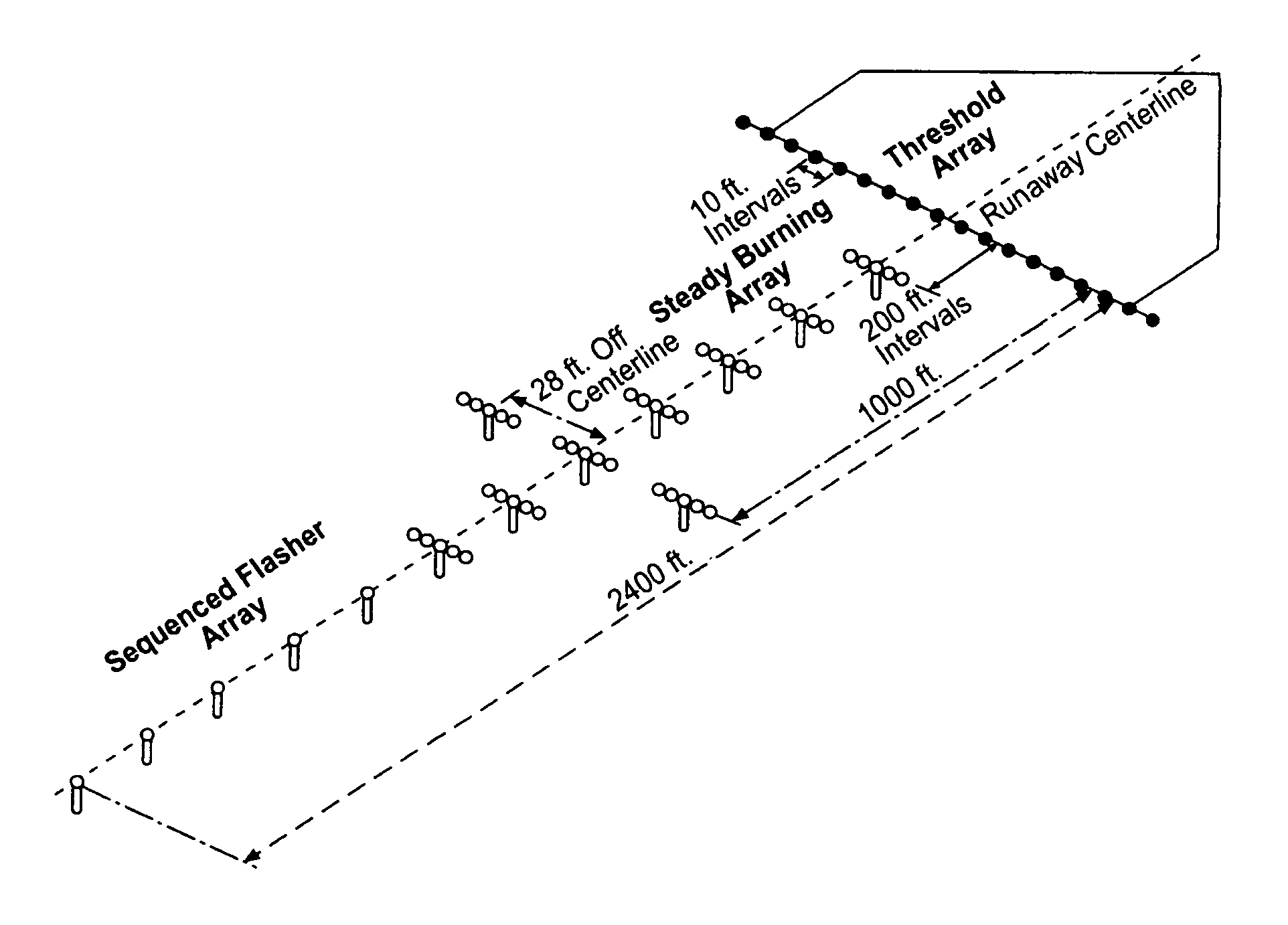

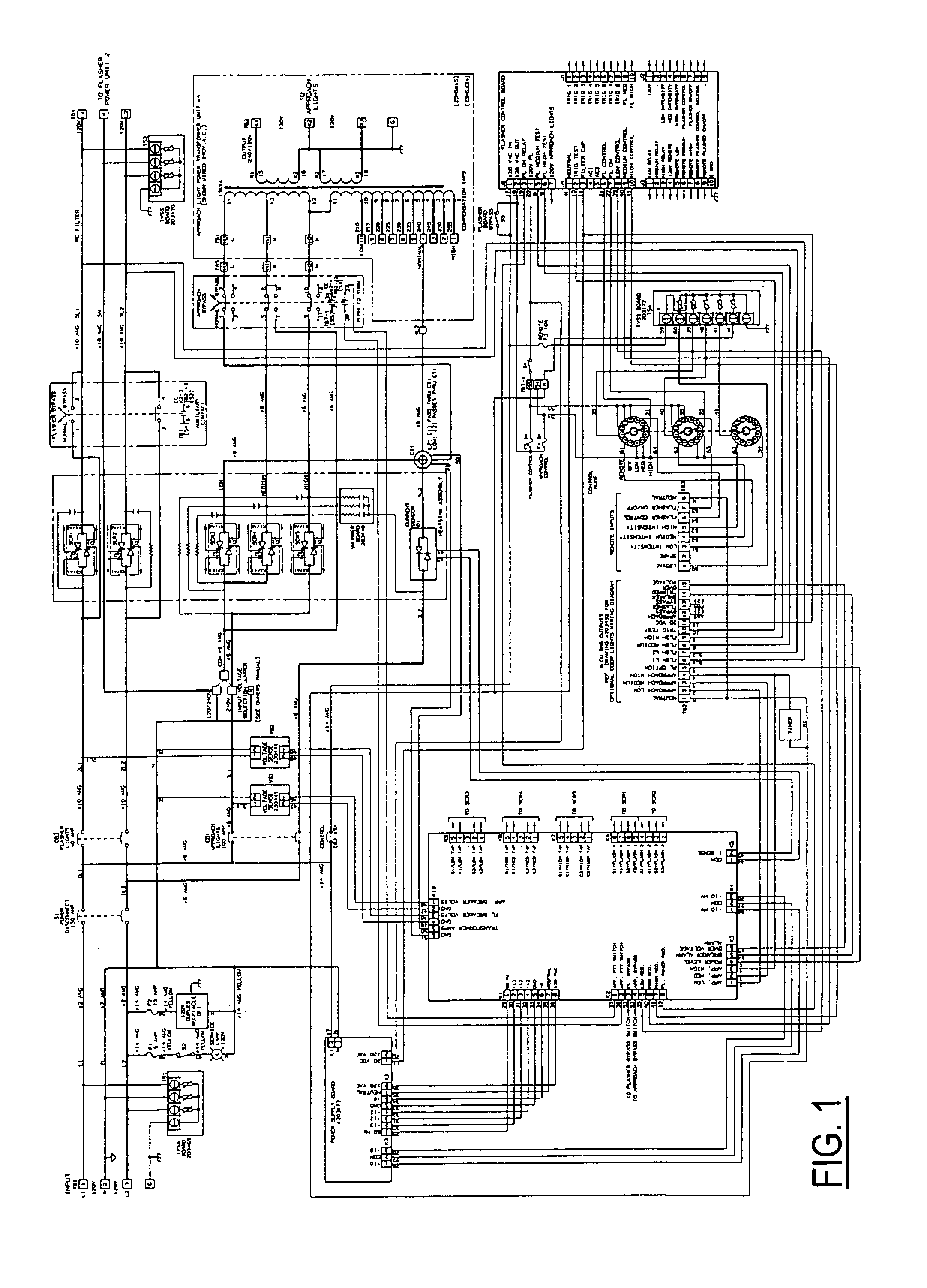

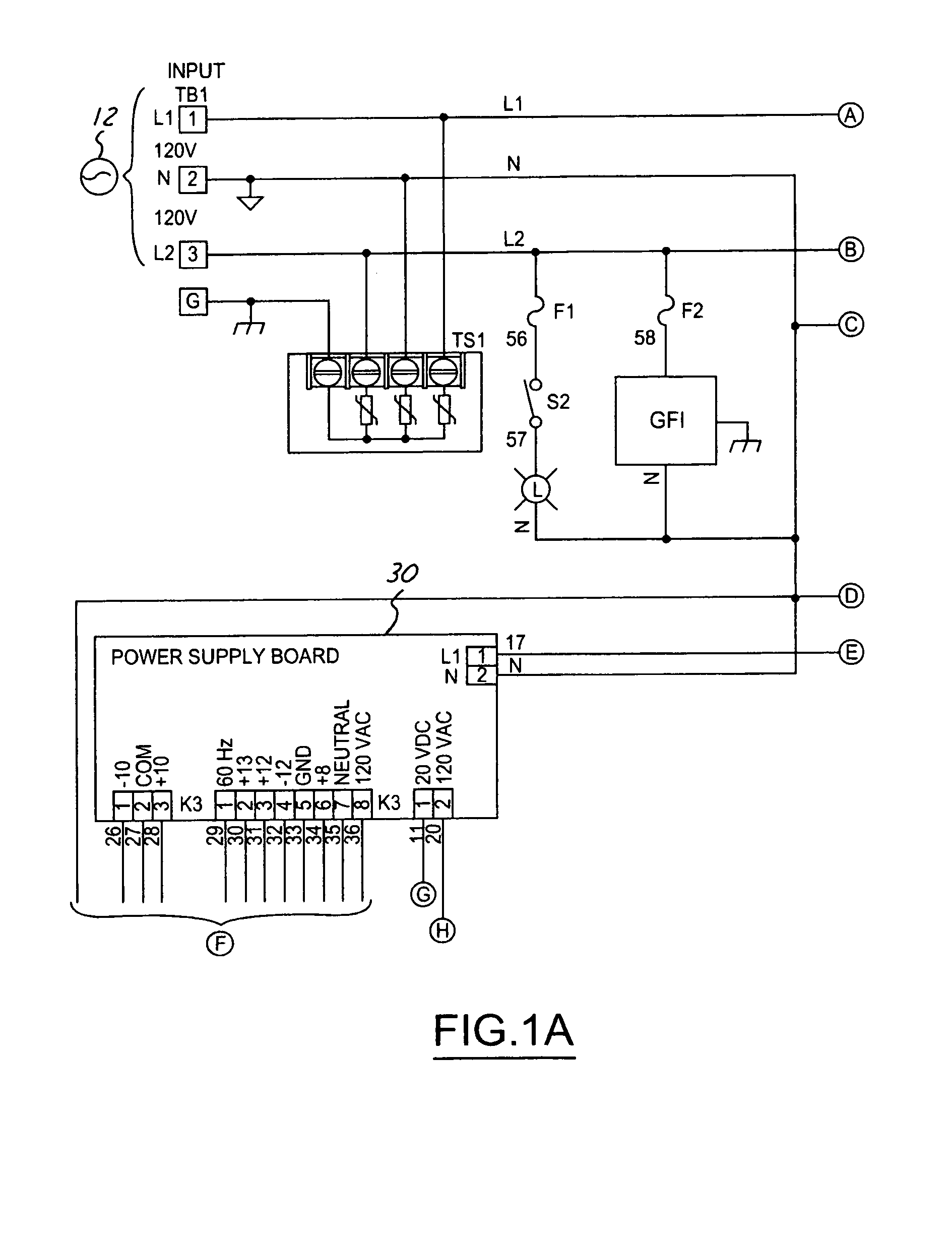

Runway approach lighting system and method

Inactive · US7088263B1 · Minimizing transformer excitation · Reduce operating expenses · Helicopter landing platform · Electrical apparatus · Effect light · Engineering

A method and system for visually guiding an aircraft in its landing approach to a runway having an approach area equipped with approach lights operable in off, low, medium, and high intensity states. The lights are communicated with a secondary side of a transformer, which has a primary side with low, medium, and high taps that correspond to the light states. Off, low, medium, and high lighting intensity requests correspond to the low, medium, and high states of the plurality of lights. AC power is switched between the transformer taps in response to a request for an increase in lighting intensity. Power is sequentially applied to the taps by supplying the power to the low tap for a first predetermined time interval before supplying the power to the medium tap.

Owner:CONTROLLED POWER
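
The sequential tap switching in the abstract above (power dwells on the low tap for a predetermined interval before moving to the medium tap) can be sketched as a small schedule generator. The state names come from the abstract; `tap_sequence`, the `dwell_s` value, and the direct-switch behaviour for decreases are assumptions made for illustration.

```python
TAPS = ["off", "low", "medium", "high"]  # ordered intensity states from the abstract

def tap_sequence(current, requested, dwell_s=1.0):
    """Return a list of (tap, dwell_seconds) steps to reach the requested intensity.

    On an increase, every intermediate tap is energised for `dwell_s` seconds
    before stepping up. On a decrease or no change, the sketch switches
    directly (an assumption; the abstract only describes increases).
    """
    i, j = TAPS.index(current), TAPS.index(requested)
    if j <= i:
        return [(requested, 0.0)]
    steps = [(tap, dwell_s) for tap in TAPS[i + 1:j]]  # intermediate taps
    steps.append((requested, 0.0))                      # final tap: hold indefinitely
    return steps
```

For example, a request to go from "off" to "high" yields a dwell on "low", then on "medium", before settling on "high".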

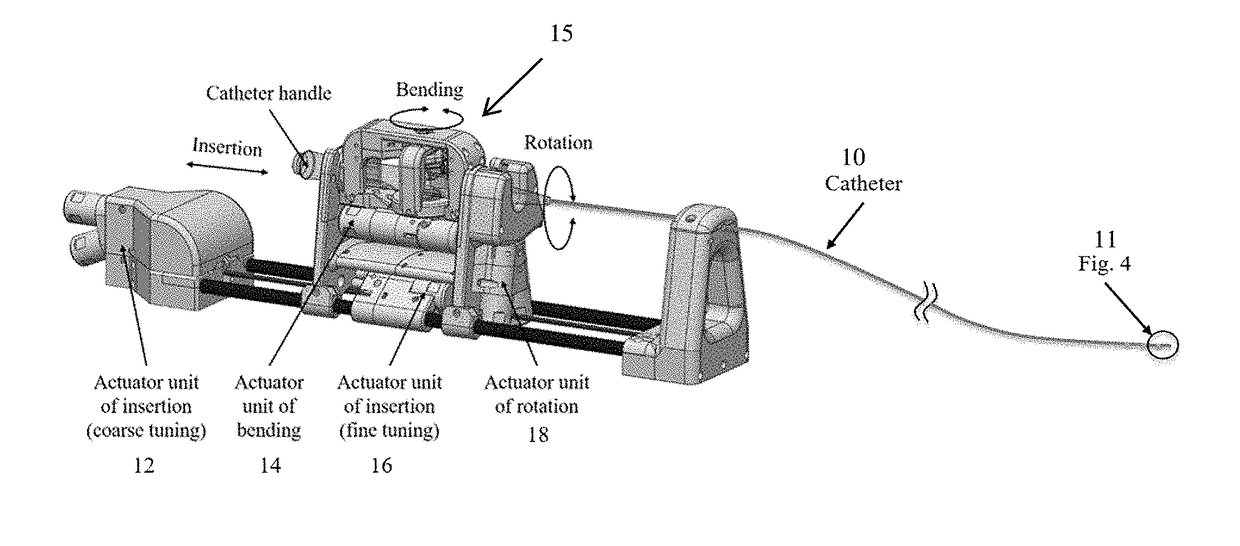

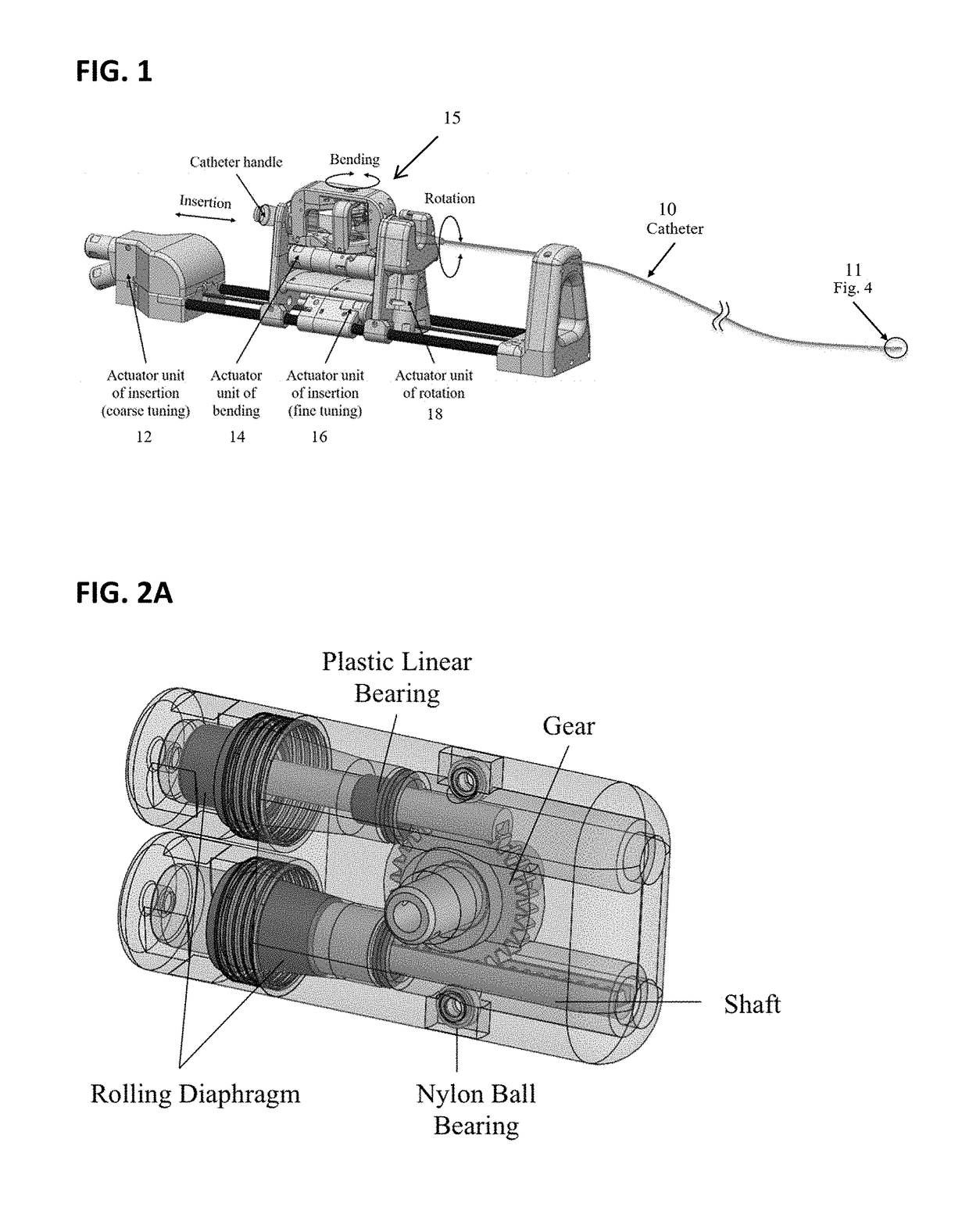

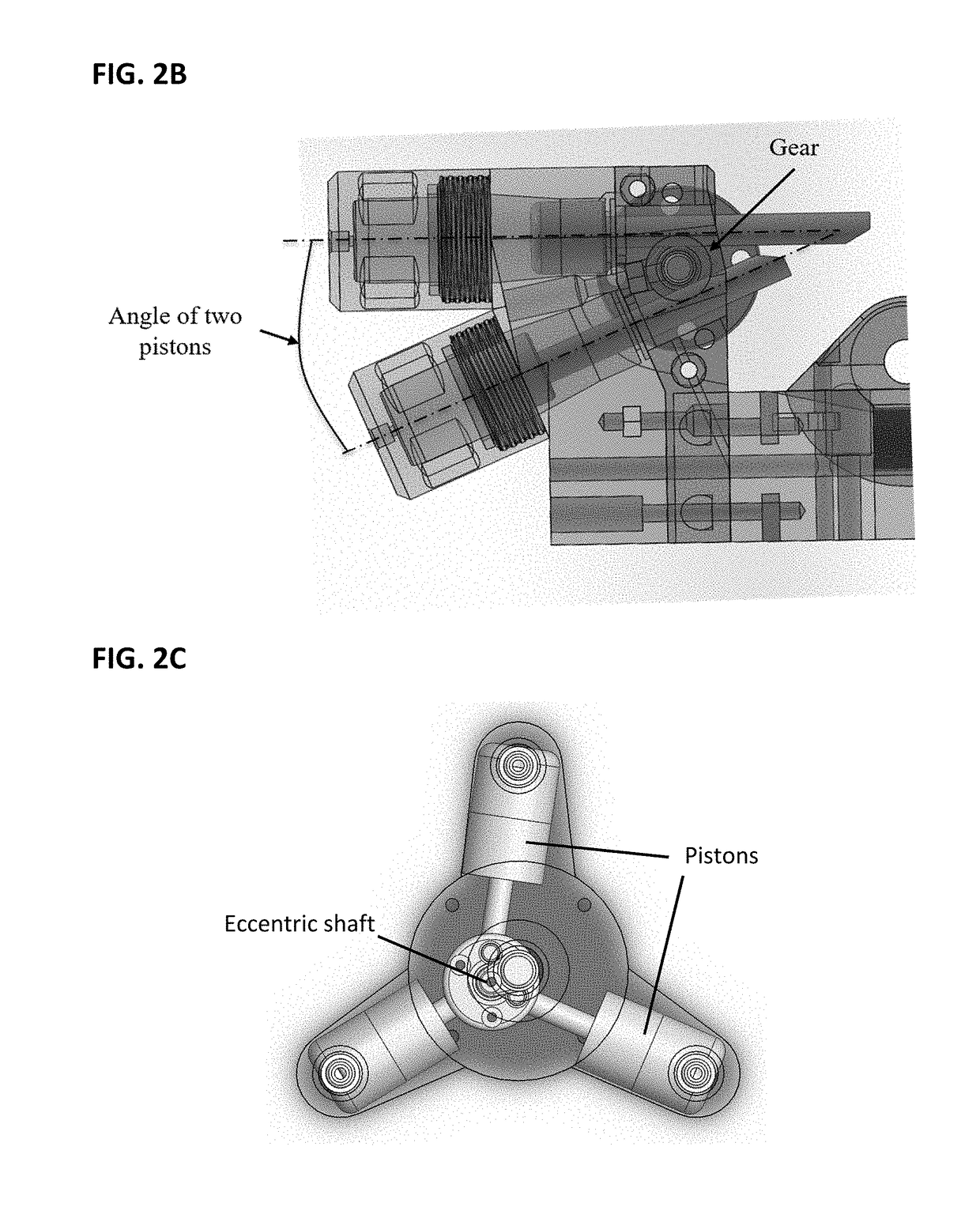

Robotic catheter system for mri-guided cardiovascular interventions

Active · US20170367776A1 · High rate position sampling · Lower latency · Surgical instrument details · Diagnostic recording/measuring · MRI guided · Visually guided

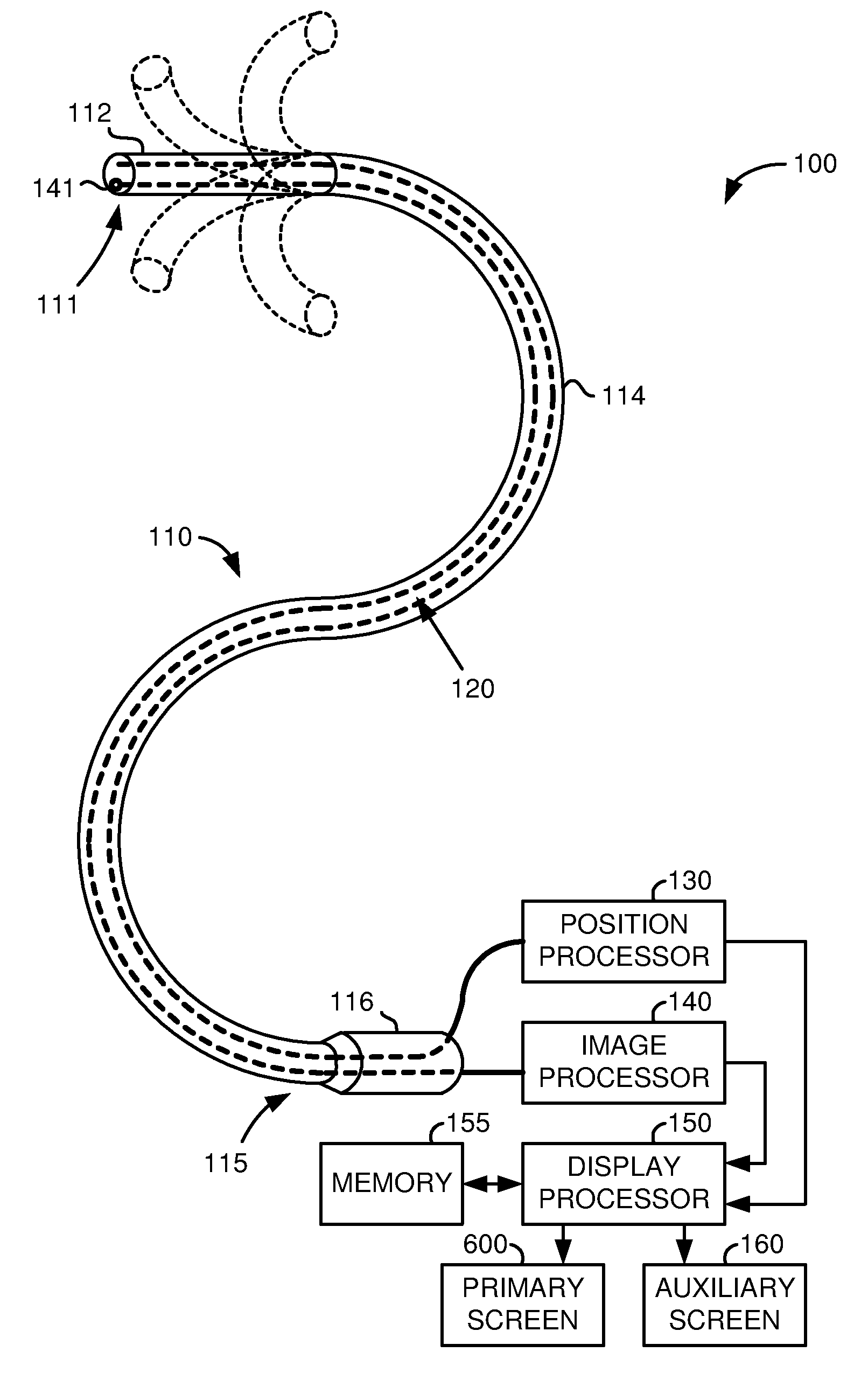

MRI-guided robotics offers possibility for physicians to perform interventions remotely on confined anatomy. While the pathological and physiological changes could be visualized by high-contrast volumetric MRI scan during the procedure, robots promise improved navigation with added dexterity and precision. In cardiac catheterization, however, maneuvering a long catheter (1-2 meters) to the desired location and performing the therapy are still challenging. To meet this challenge, this invention presents an MRI-conditional catheter robotic system that integrates intra-op MRI, MR-based tracking units and enhanced visual guidance with catheter manipulation. This system differs fundamentally from existing master / slave catheter manipulation systems, of which the robotic manipulation is still challenging due to the very limited image guidance. This system provides a means of integrating intra-operative MR imaging and tracking to improve the performance of tele-operated robotic catheterization.

Owner:VERSITECH LTD

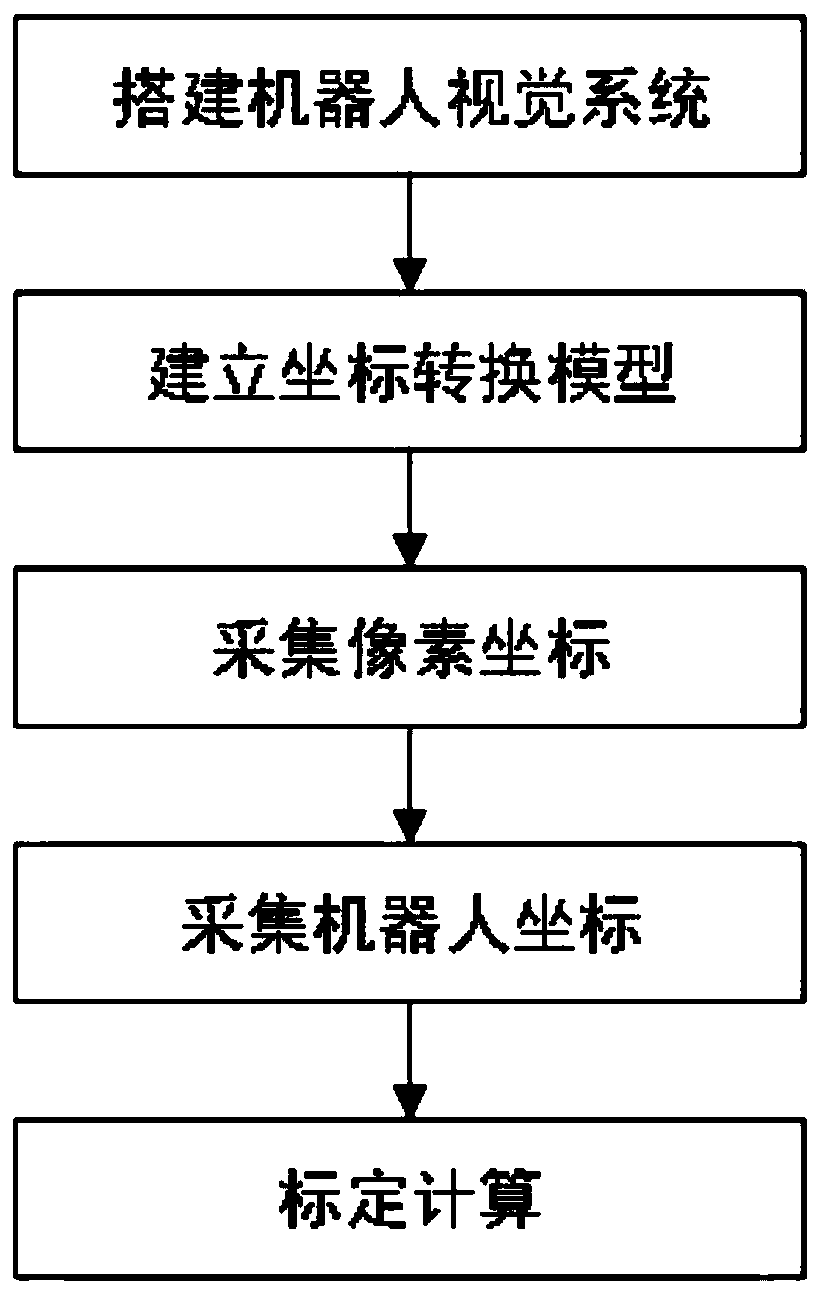

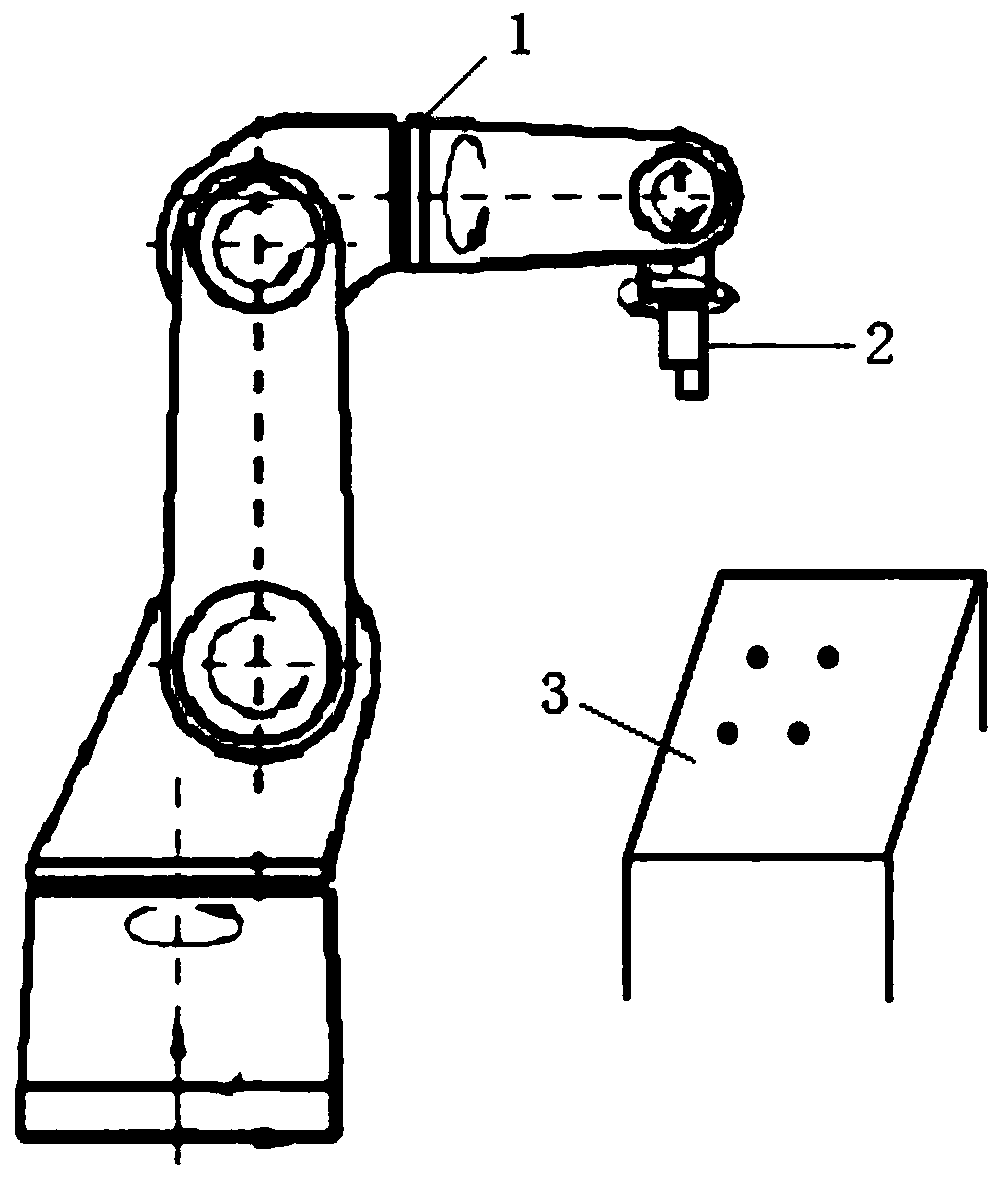

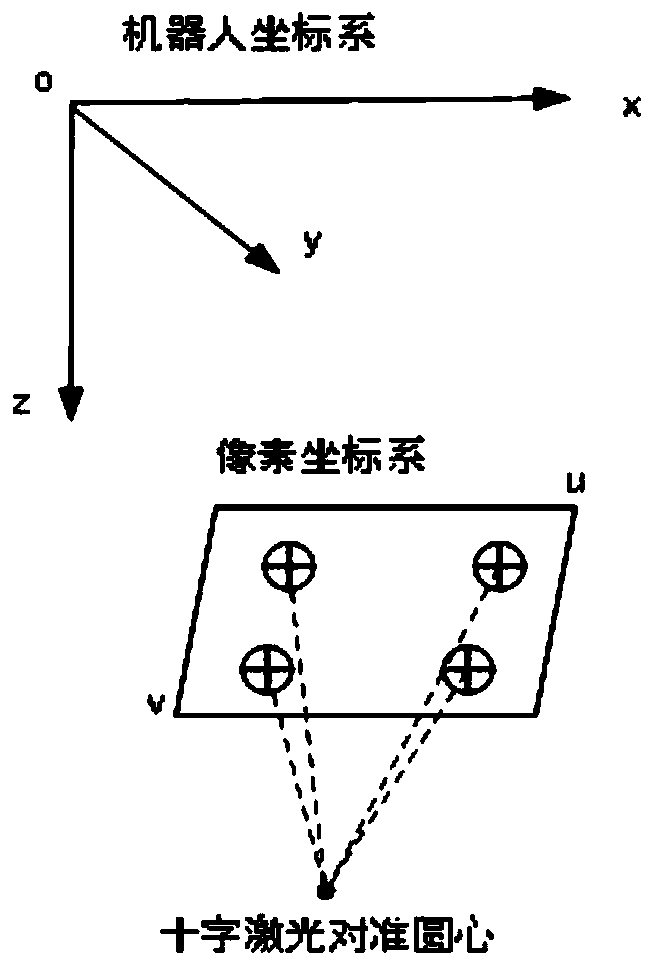

Robot visual calibrating method based on perspective transformation model

Active · CN110666798A · Improve calibration accuracy · Low cost · Programme-controlled manipulator · Pattern recognition · Computer graphics (images)

The invention discloses a robot visual calibrating method based on a perspective transformation model. The relation between a pixel coordinate system and a robot coordinate system is built according to a perspective transformation principle between plane coordinate systems; and four sets of pixel coordinates and robot coordinates are acquired by using four non-collinear marking points to calibrate and calculate coordinate conversion model parameters for visual guide robot positioning. The method can be used for calibrating fixed cameras or terminal cameras mounted on robots without considering the depth direction, and is low in cost, high in calibrating precision and suitable for visual positioning requirements of industrial scene robots.

Owner:HUAZHONG UNIV OF SCI & TECH

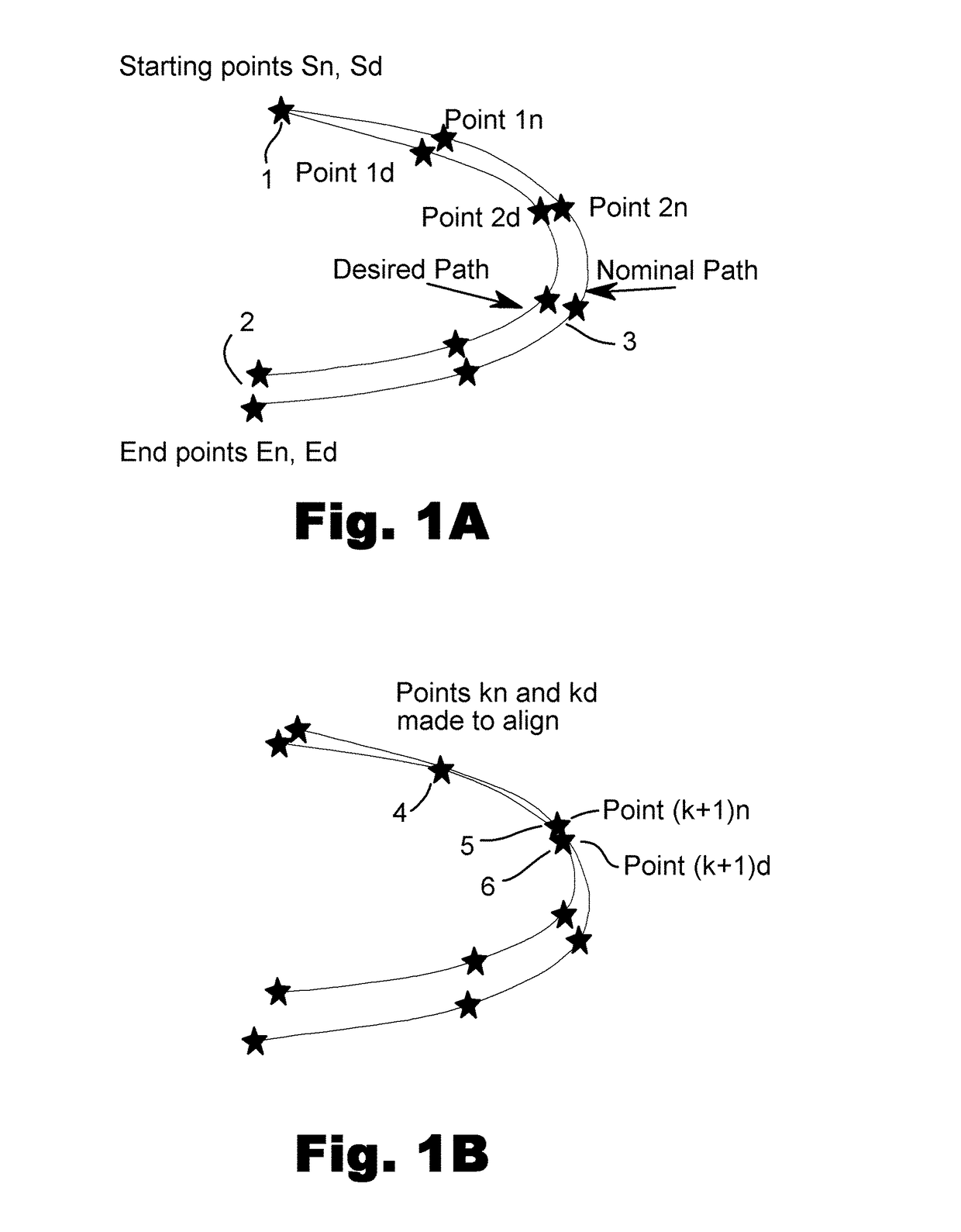

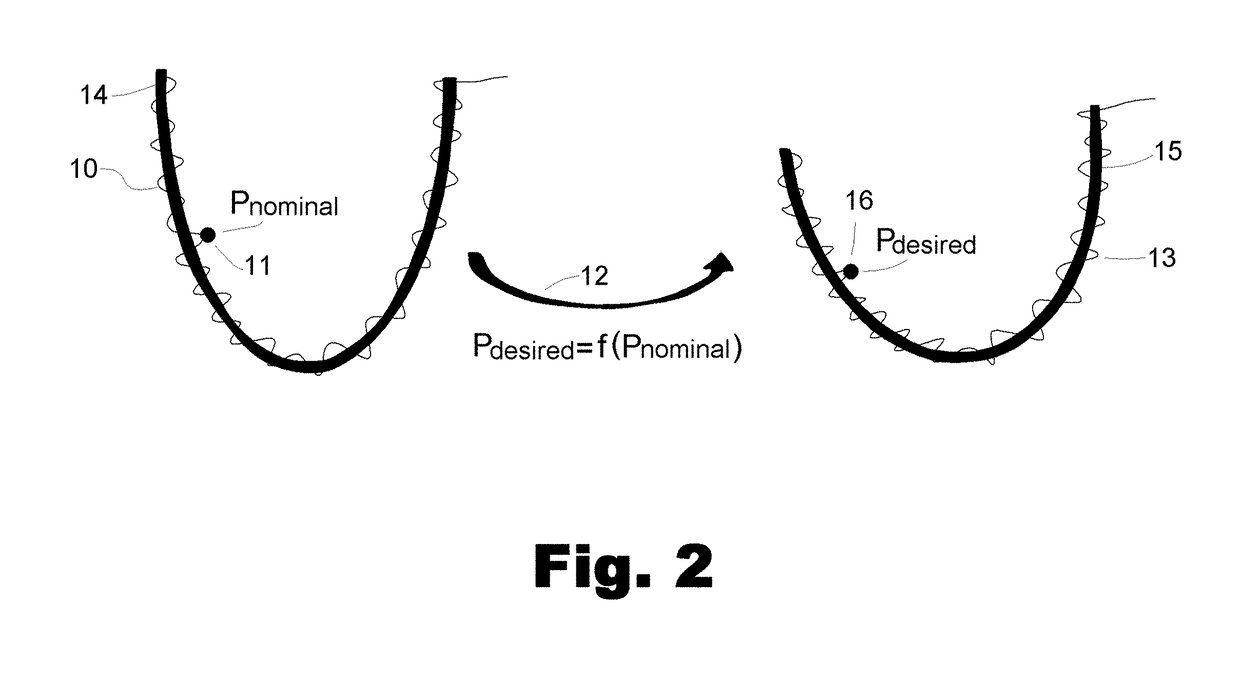

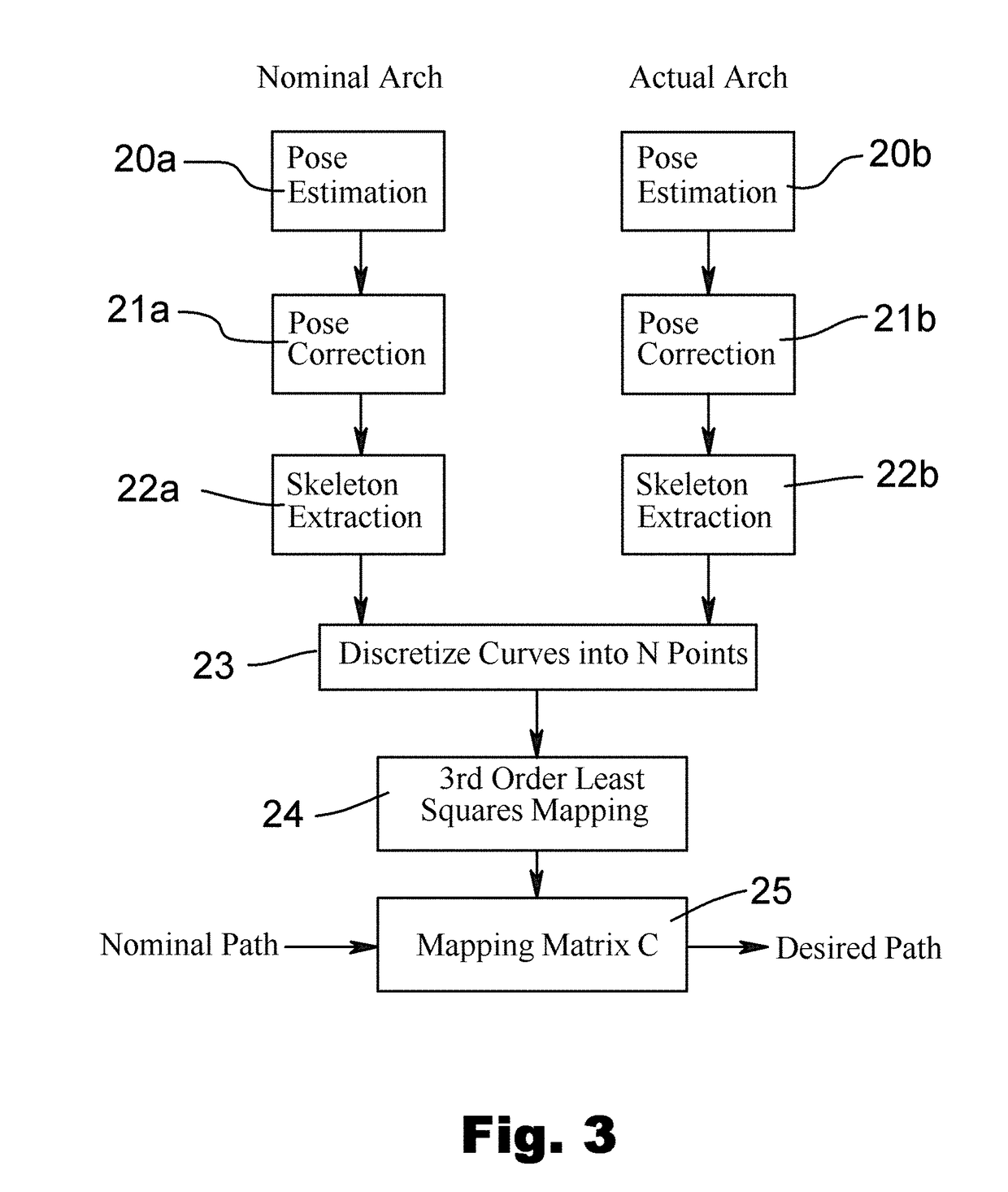

Vision guided robot path programming

Active · US20190047145A1 · Eliminating human involvement · Overcome problems · Programme control · Programme-controlled manipulator · Reference image · Workspace

A method to automate the process of teaching by allowing the robot to compare a live image of the work space along the desired path with a reference image of a similar workspace associated with a nominal path approximating the desired path, the robot teaches itself by comparing the reference image to the live image and generating the desired path at the desired location, hence eliminating human involvement in the process with most of its shortcomings. The present invention also overcomes the problem of site displacement or distortion by monitoring its progress along the path by sensors and modifying the path to conform to the desired path.

Owner:BRACHIUM

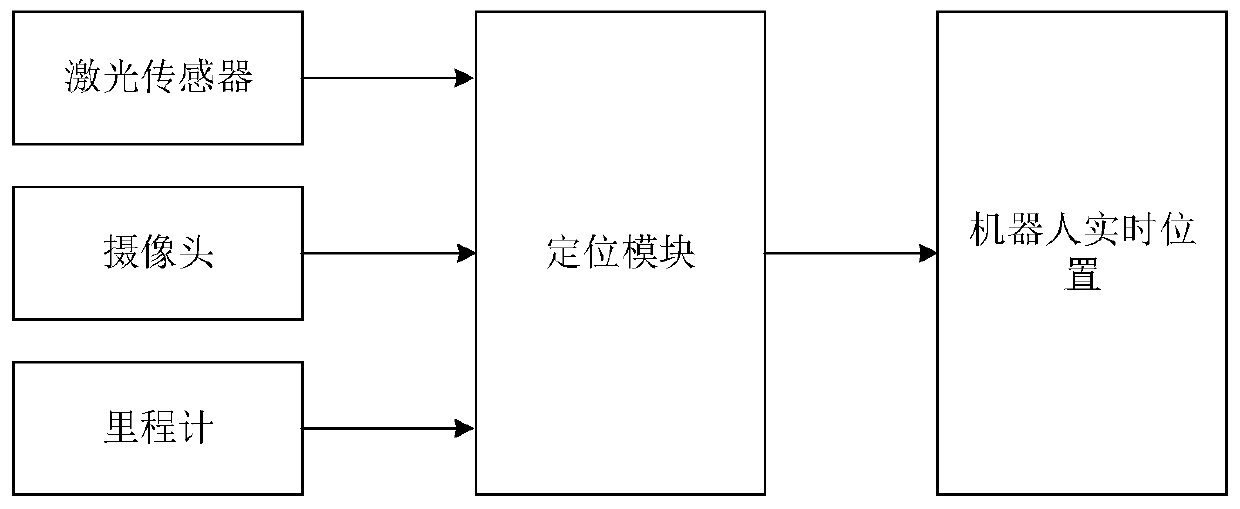

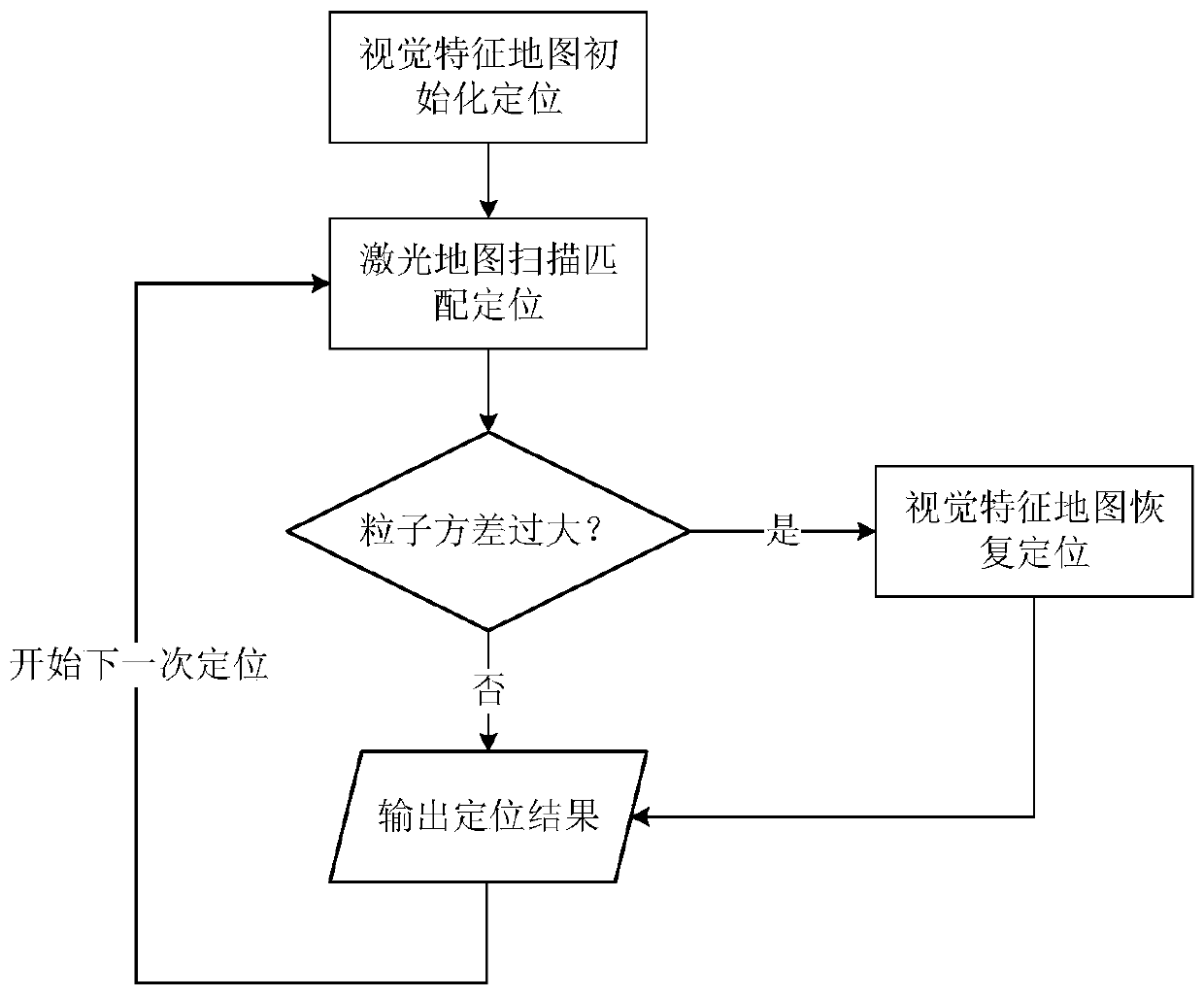

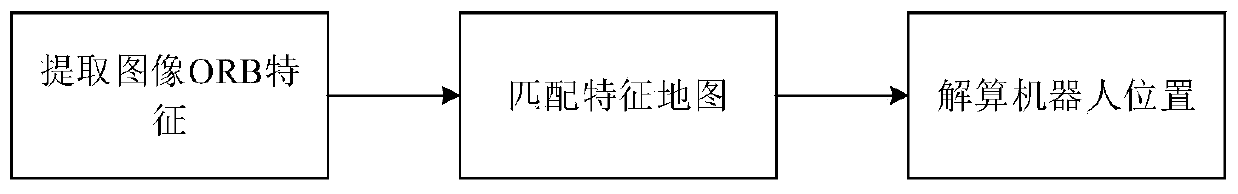

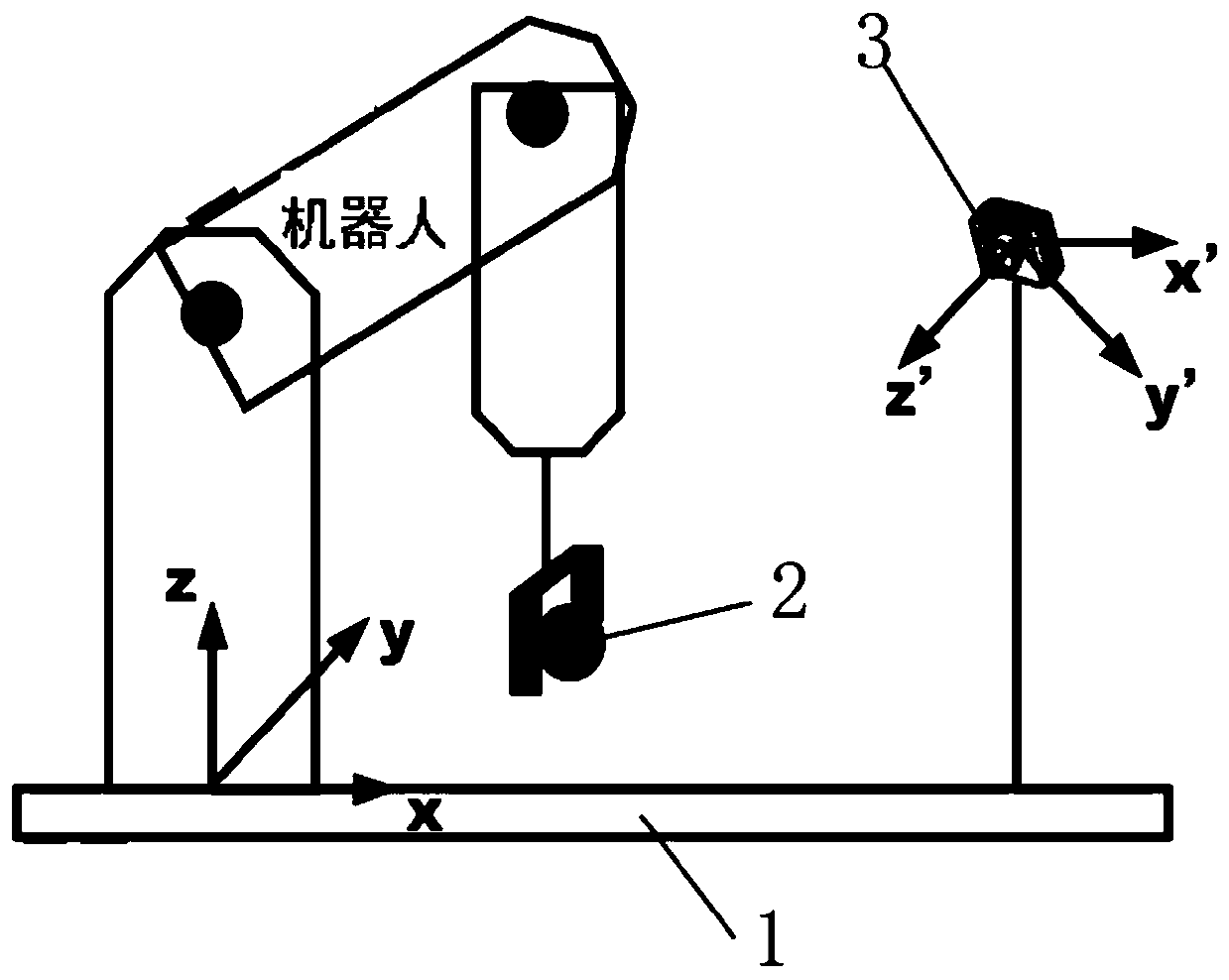

Mobile robot positioning method based on visual guidance laser repositioning

Active · CN111337943A · Precise positioning · Positioning does not affect · Electromagnetic wave reradiation · Laser scanning · Engineering

The invention relates to a mobile robot positioning method based on visual guidance laser repositioning, and the method comprises the following steps: carrying out the initialization positioning of the position of a robot according to a visual feature map, and mapping the position of the robot to a laser map; adopting an adaptive particle filtering method, and obtaining accurate positioning of the robot on a laser map according to a laser scanning matching result; judging whether the particle variance positioned in the positioning process of the self-adaptive particle filtering method exceeds a set threshold value or not: if so, performing visual repositioning by utilizing a visual feature map, outputting a positioning result of the robot, and re-initializing the current particle, namely, performing error recovery; if not, outputting a positioning result of the robot. Compared with the prior art, the method has the advantages that the robot can quickly recover accurate positioning by means of the repositioning function of the visual feature map during initialization or after being bound, so the stability and reliability of positioning are guaranteed.

Owner:TONGJI UNIV
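
The decision rule in the abstract above (if the particle variance exceeds a threshold, fall back to visual relocalization and re-initialize the particles; otherwise output the particle-filter result) can be sketched as follows. Assumptions: particles are 2D `(x, y)` tuples, the variance measure is the sum of per-axis variances, and `visual_relocalize` is a hypothetical callback that returns a pose from the visual feature map.

```python
from statistics import fmean, pvariance

def particle_spread(particles):
    """Sum of the per-axis population variances of the particle positions."""
    return pvariance([p[0] for p in particles]) + pvariance([p[1] for p in particles])

def localize_step(particles, visual_relocalize, threshold=0.25):
    """Return (pose, particles, recovered) for one localization cycle."""
    if particle_spread(particles) > threshold:
        # Filter has diverged: recover from the visual feature map and
        # re-initialize every particle at the recovered pose (simplification:
        # a real filter would add noise around it).
        pose = visual_relocalize()
        return pose, [pose] * len(particles), True
    # Filter is consistent: report the mean particle position.
    pose = (fmean(p[0] for p in particles), fmean(p[1] for p in particles))
    return pose, particles, False
```

The laser scan matching and the adaptive resampling of the particle filter itself are outside the scope of this sketch.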

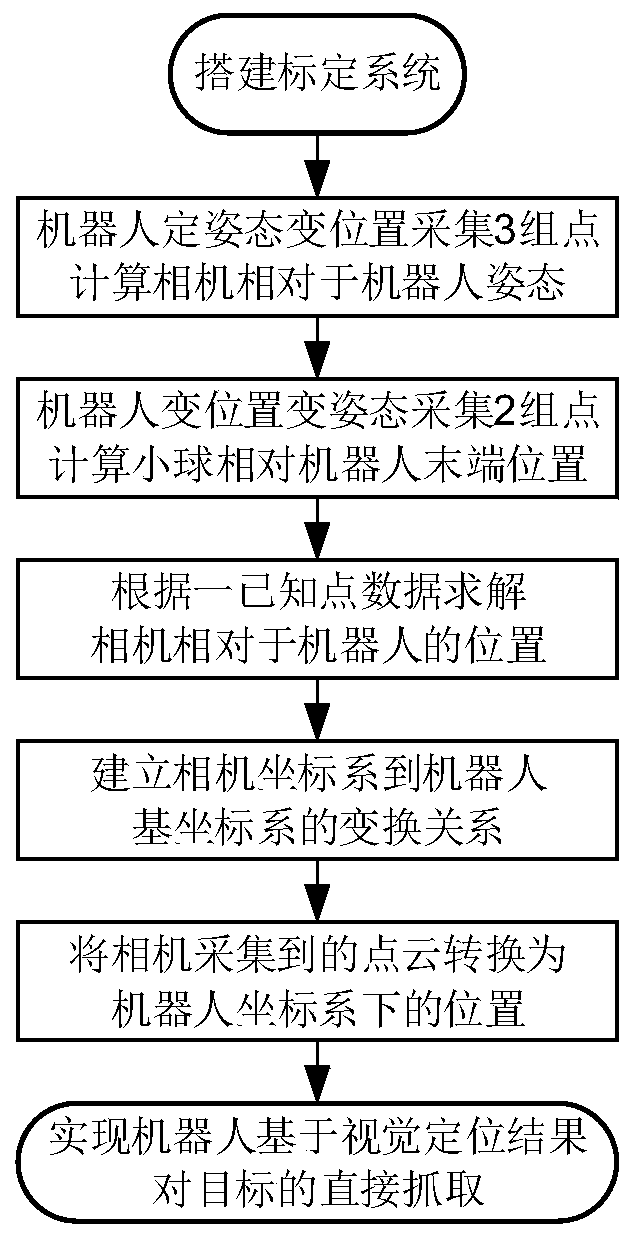

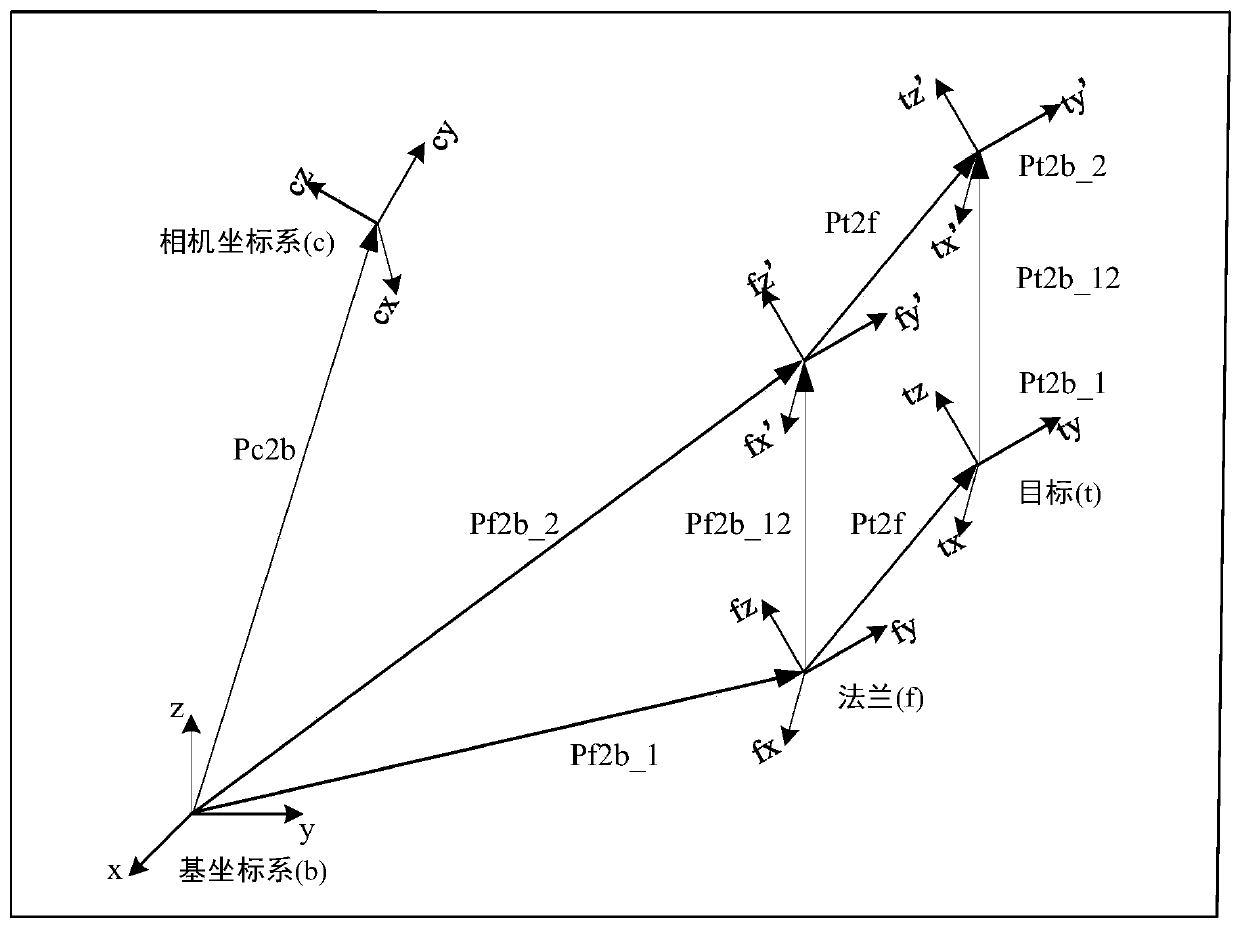

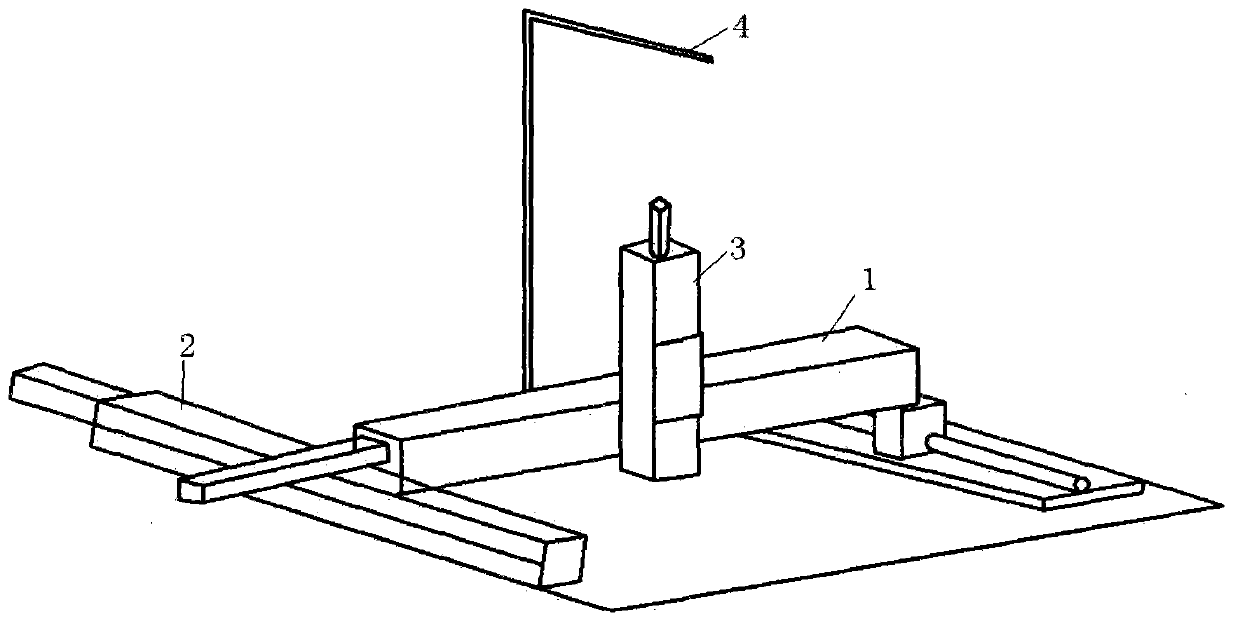

Camera pose calibration method based on spatial point location information

The invention discloses a camera pose calibration method based on spatial point location information. For a robot vision system with an independently-installed camera, a sphere is placed at the tail end of a robot to serve as a calibration object, then the robot is operated to change the position and posture of the sphere to move to different point locations, images and point clouds of table tennis balls at the tail end of the robot are collected; the sphere center is fitted to serve as a spatial point, and meanwhile the corresponding position and posture of the robot are recorded; then a transformation relation between a camera coordinate system and a robot base coordinate system is calculated by searching an equation relation between specific point position changes; the points collected under a camera coordinate system are converted into points under a robot base coordinate system, and directly achieving target grabbing of the robot based on visual guidance. According to the invention, the sphere is used as a calibration object, the operation is simple, flexible and portable, the tedious calibration process is simplified, and compared with a method for conversion by means of a calibration plate or a calibration intermediate coordinate system, the method has higher precision, and does not introduce an intermediate transformation relationship and excessive error factors.

Owner:EUCLID LABS NANJING CORP LTD
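For readers who want a concrete picture of a camera-to-base transformation computed from matched spatial points, here is a minimal sketch. It swaps in the standard SVD-based least-squares rigid alignment (the Kabsch method) rather than the equation derived in the patent. It also assumes that the sphere-center positions are already known in both the camera frame and the robot base frame, which the abstract does not state. The function name `estimate_rigid_transform` is hypothetical, and NumPy is required.

```python
import numpy as np


def estimate_rigid_transform(points_cam, points_base):
    """Least-squares R, t such that points_base ≈ R @ points_cam + t.

    Both inputs are (N, 3) arrays of matched points, N >= 3 and not collinear.
    """
    P = np.asarray(points_cam, dtype=float)
    Q = np.asarray(points_base, dtype=float)
    cp, cq = P.mean(axis=0), Q.mean(axis=0)

    # Cross-covariance of the centred point sets.
    H = (P - cp).T @ (Q - cq)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cq - R @ cp
    return R, t


if __name__ == "__main__":
    # Synthetic check: a 30 degree rotation about z plus a translation.
    a = np.deg2rad(30.0)
    R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                       [np.sin(a), np.cos(a), 0.0],
                       [0.0, 0.0, 1.0]])
    t_true = np.array([0.4, -0.1, 0.8])
    cam = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
    base = cam @ R_true.T + t_true
    R, t = estimate_rigid_transform(cam, base)
    print(np.allclose(R, R_true), np.allclose(t, t_true))
```

Once `R` and `t` are estimated, a point `p_cam` measured by the camera maps to the base frame as `R @ p_cam + t`, which corresponds to the conversion step the abstract describes.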

Three-dimensional positioning device based on visual guidance and dispensing equipment

Inactive | CN103272739A | Avoid the problem of lower precision | Low cost | Liquid surface applicators | Coatings | Engineering | Motion system

The invention relates to a three-dimensional positioning device based on visual guidance and to dispensing equipment. The three-dimensional positioning device comprises: an X axis motion system; a Y axis motion system, wherein the X axis motion system is movably supported on the Y axis motion system, and the Y axis motion system drives the X axis motion system to move along the axial direction of the Y axis motion system; a Z axis motion system, wherein the Z axis motion system is installed on the Y axis motion system, and the X axis motion system drives the Z axis motion system to move along the axial direction of the X axis motion system; an image obtaining unit installed above the Z axis motion system and used for obtaining the height position information and the plane center position information of a target position; and a controller electrically connected with the X axis motion system, the Y axis motion system, the Z axis motion system and the image obtaining unit, and used for driving the three motion systems to move according to the height position information and the plane center position information. The three-dimensional positioning device provided by the invention improves the positioning effect of the system.

Owner:WUHAN HUAZHUO BENTENG SCI & TECH

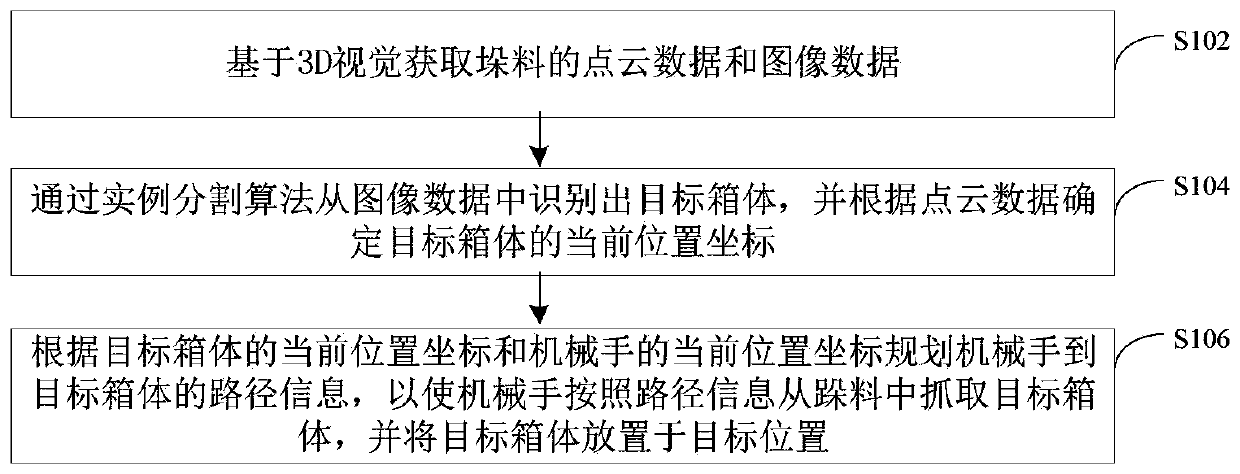

3D visual guidance based unstacking method and system

Active | CN111439594A | Improve work efficiency | Improve accuracy | Control devices for conveyors | Character and pattern recognition | Point cloud | Logistics management

The invention provides a 3D visual guidance based unstacking method and system, and relates to the technical field of logistics and warehousing. The method comprises the following steps: point cloud data and image data of stacked materials are acquired on the basis of 3D vision; a target box body is recognized from the image data with an instance segmentation algorithm, and the current position coordinates of the target box body are determined according to the point cloud data; a path from a manipulator to the target box body is planned according to the current position coordinates of the target box body and the current position coordinates of the manipulator; the manipulator grabs the target box body from the stacked materials according to the path information; and the target box body is placed in the target position. Unstacking is completed in place of manual work: working efficiency is high, the system does not tire, performance is relatively stable, and the accuracy rate of goods unstacking is high.

Owner:BLUESWORD INTELLIGENT TECH CO LTD
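The target selection and path planning steps in the abstract above might look something like the sketch below. It is a simplified illustration under assumptions the abstract does not confirm: the instance segmentation and point cloud stages are taken as already done, each box is reduced to a center point in the robot base frame, the topmost box is chosen as the target, and the path is a simple lift, traverse and descend sequence. The names `Box`, `pick_target` and `plan_waypoints` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    label: str
    center: tuple  # (x, y, z) in the robot base frame, metres (assumed)


def pick_target(boxes):
    """Choose the box with the highest center, i.e. the top of the stack."""
    return max(boxes, key=lambda b: b.center[2])


def plan_waypoints(gripper, target, clearance=0.25):
    """Lift to a safe height, move above the target, then descend onto it."""
    safe_z = max(gripper[2], target[2]) + clearance
    return [
        (gripper[0], gripper[1], safe_z),
        (target[0], target[1], safe_z),
        target,
    ]


if __name__ == "__main__":
    stack = [
        Box("lower-left", (0.5, 0.25, 0.5)),
        Box("top", (0.5, 0.5, 1.0)),
        Box("lower-right", (0.75, 0.25, 0.5)),
    ]
    target = pick_target(stack)
    for waypoint in plan_waypoints((0.0, 0.0, 0.5), target.center):
        print(waypoint)
```

Picking the highest box first is a common heuristic for avoiding collisions with the rest of the stack; a production planner would also check reachability and obstacles along the traverse.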

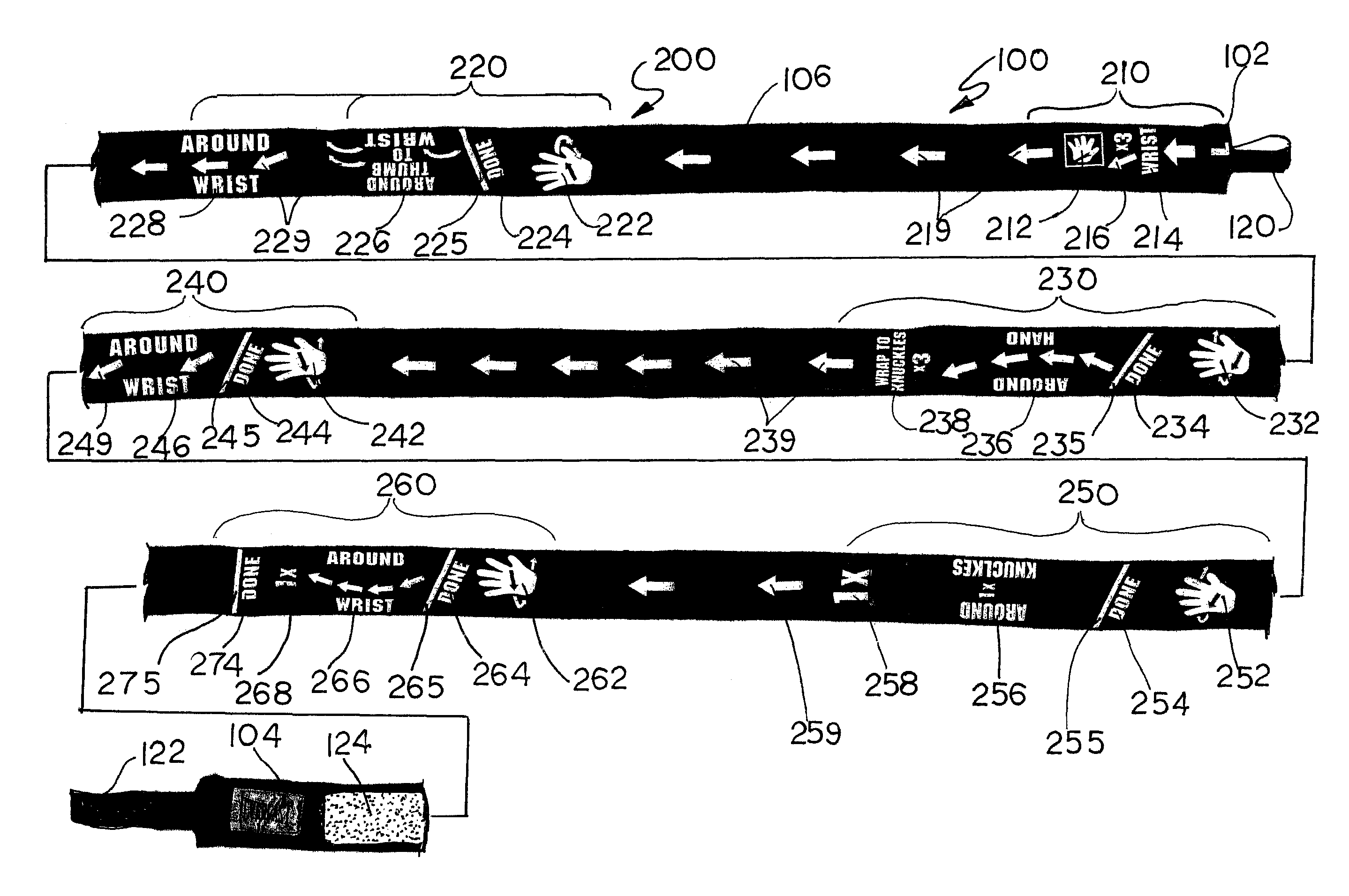

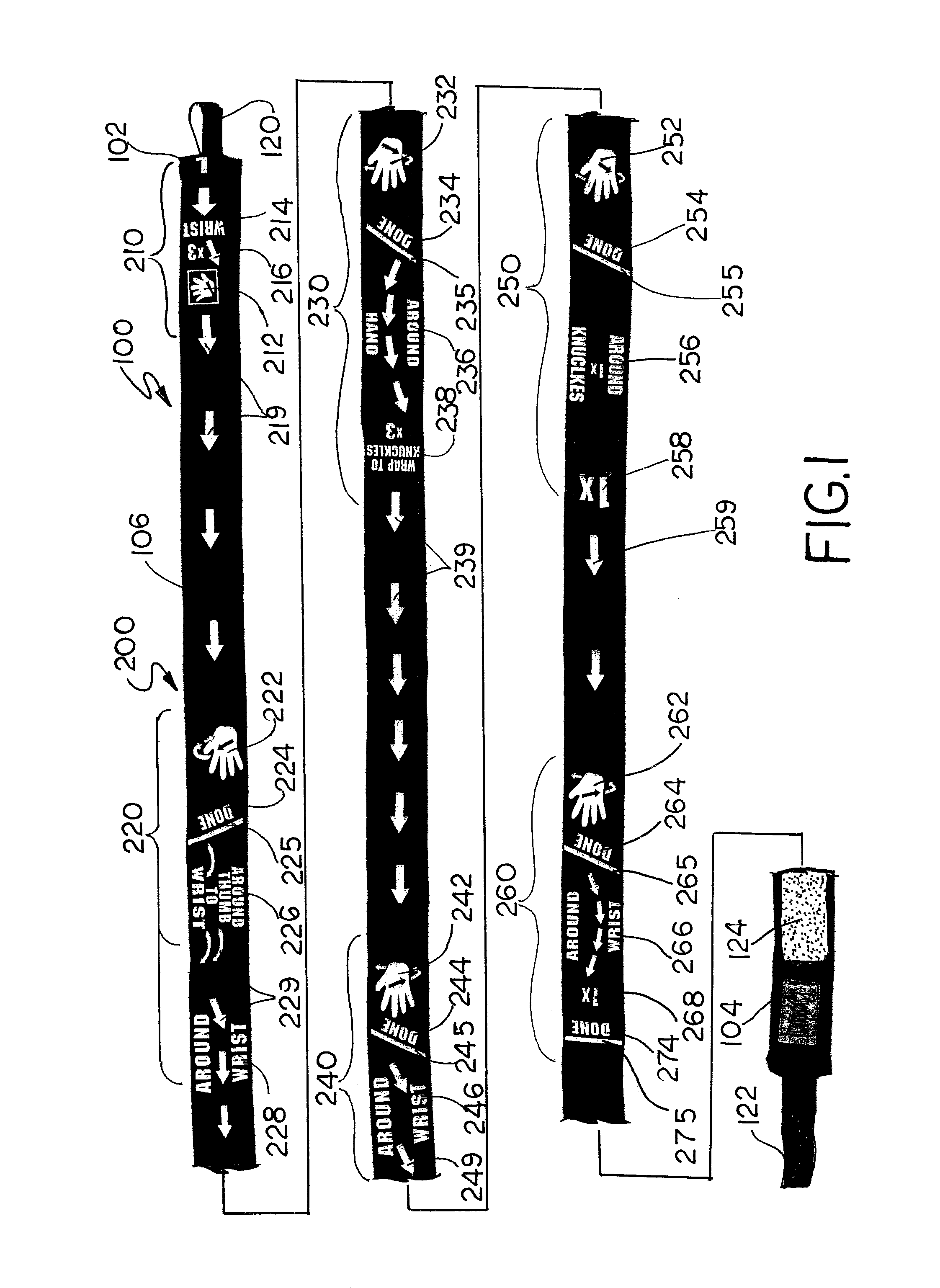

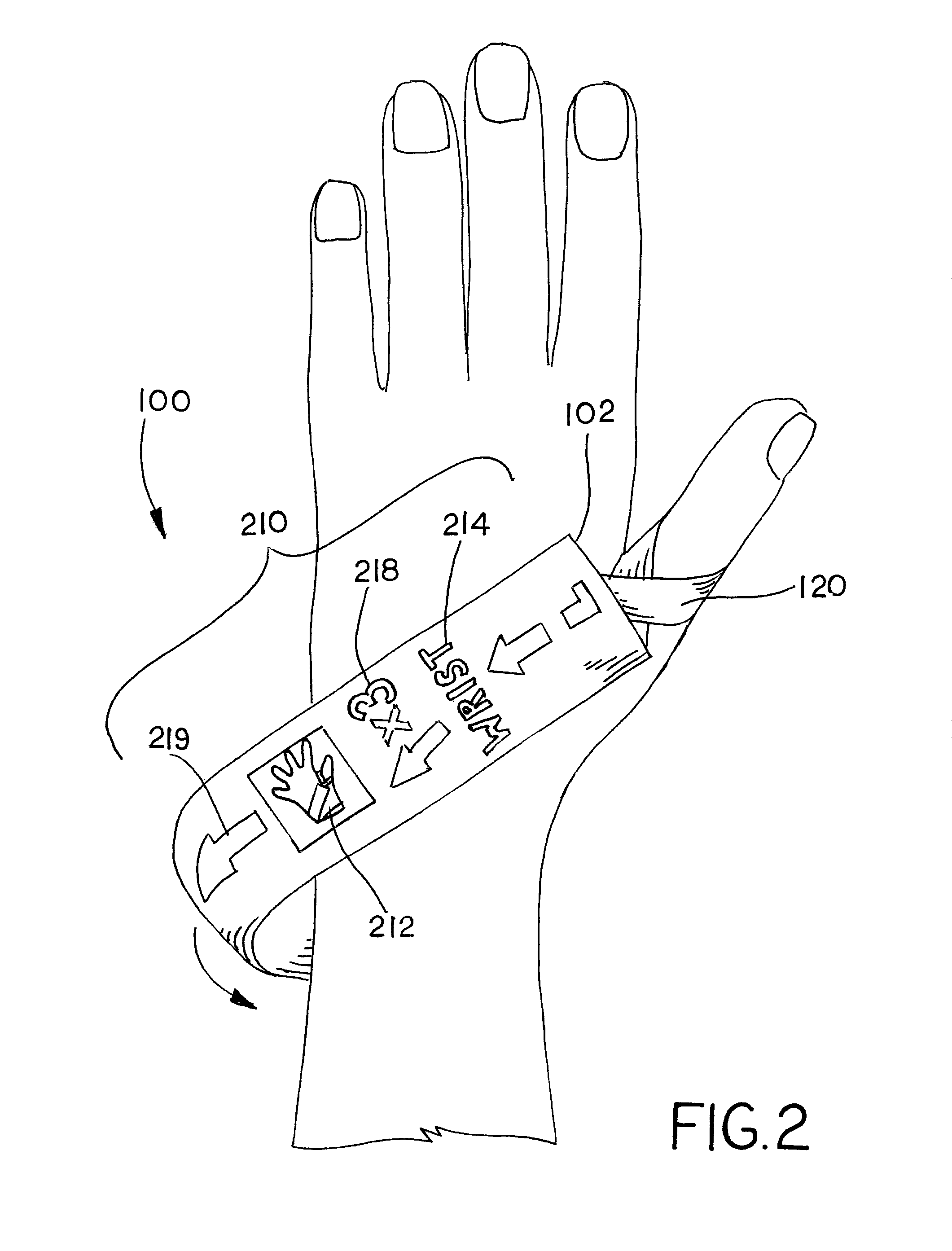

Wrap for human appendage

An appendage wrap having a sequential set of graphic instruction steps printed across the length of the wrap on one or both sides, explaining and illustrating how a user is to apply the wrap to a specific appendage. Each individual instruction step is readily visible to the user and is easily discernible as the wrap is unrolled and wound around the appendage. Text instructions provide concise directions to the user. Simple illustrations of the appendage (hand graphics) provide a visual reference to the user for the direction and location where the wrap will be wound. Arrows are used to visually guide the user as the wrap is unrolled and wound around the wrist.

Owner:MCGUIN AARON
