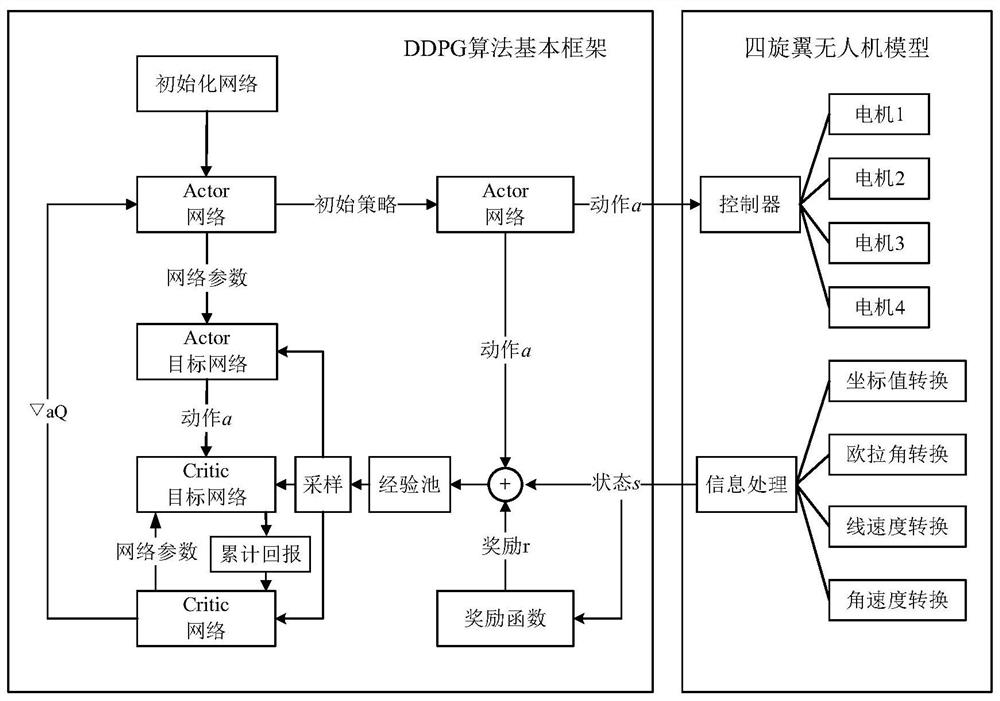

A Quadrotor UAV Route Following Control Method Based on Deep Reinforcement Learning

A technology in the field of quadrotor unmanned aerial vehicles and reinforcement learning, applied to three-dimensional position/course control, vehicle position/route/height control, attitude control, and similar tasks. It addresses problems such as an unstable learning process, low control accuracy, and the inability to achieve continuous control.


AI Technical Summary

Problems solved by technology

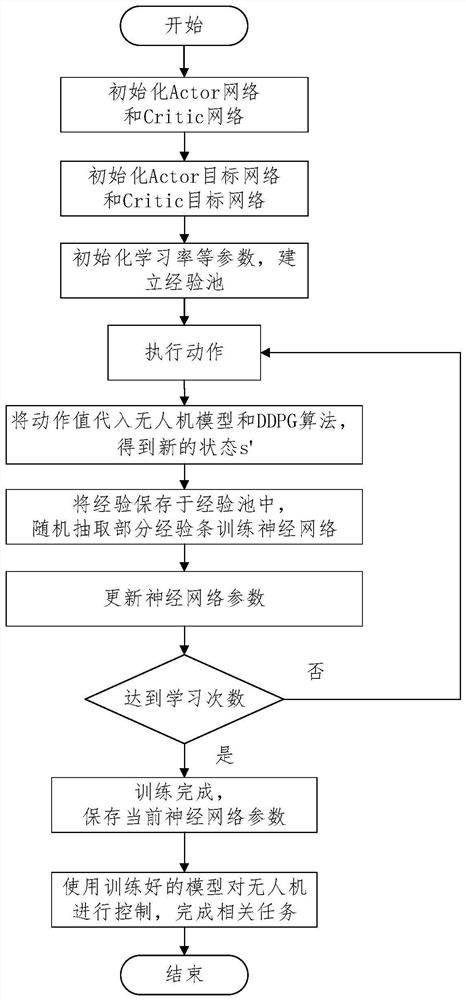

Method used


Examples

Embodiment

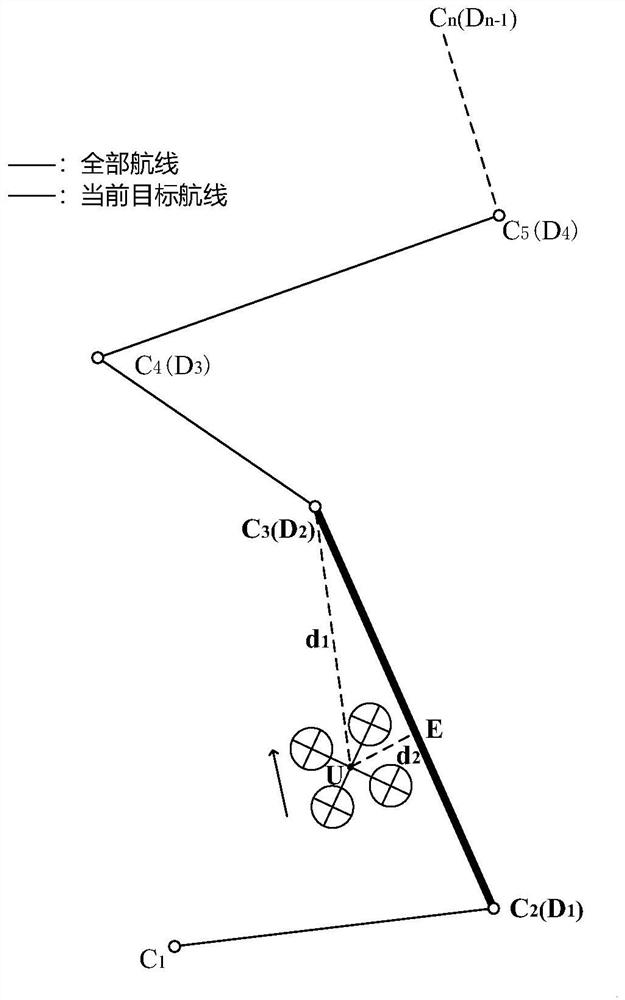

[0129] In this embodiment, the quadrotor UAV autonomously flies along randomly generated routes. The UAV mass is set to m = 0.62 kg and the gravitational acceleration to g = 9.81 m/s². The drone starts in a hover and flies from the starting coordinates (0, 0, 0) to perform the mission. When the drone completes the target route and reaches its end, the system automatically generates a new target route. The flight of the drone performing the route-following task is shown in Figure 2.
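The route-refresh behaviour described in [0129] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented method: the route shape (a straight segment to a random waypoint inside a ±10 m cube), the 0.5 m arrival tolerance, and the helper names `random_route`, `route_finished`, and `maybe_refresh` are all hypothetical. Only m and g come from the embodiment; `hover_thrust` simply evaluates the total hover thrust T = m g, about 6.08 N.

```python
import math
import random

# Parameters stated in the embodiment
MASS_KG = 0.62
GRAVITY = 9.81

# Hypothetical assumptions (not from the source): each route is a straight
# segment to a random waypoint inside a cube, and the end of a route counts
# as reached once the UAV is within a fixed distance of it.
BOUND_M = 10.0
REACH_TOL_M = 0.5


def hover_thrust(mass=MASS_KG, g=GRAVITY):
    """Total thrust (N) needed to hover: T = m * g."""
    return mass * g


def random_route(start, rng):
    """Hypothetical route: (start point, random end point inside the cube)."""
    end = tuple(rng.uniform(-BOUND_M, BOUND_M) for _ in range(3))
    return (tuple(start), end)


def route_finished(position, route, tol=REACH_TOL_M):
    """True once the UAV is within tol metres of the route's end point."""
    return math.dist(position, route[1]) <= tol


def maybe_refresh(position, route, rng):
    """Return a new route starting at the UAV's position if the current one is done."""
    if route_finished(position, route):
        return random_route(position, rng)
    return route
```

For example, starting from `random_route((0.0, 0.0, 0.0), random.Random(0))` and calling `maybe_refresh` at every step keeps the same route until the UAV comes within the tolerance of its end point, then returns a new route that begins where the old one ended.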

[0130] The initial roll angle φ, pitch angle θ, and yaw angle ψ are all 0°, as identified by the drone's sensors. To ease neural-network processing, the roll, pitch, and yaw angles are converted to their cosines before being included in the state. The single-step movement time of the UAV is set to Δt = 0.05 s, the thrust coefficient of the quadrotor UAV to c_T = 0.00003, and the arm length to d = 0.23 m.
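The cosine state encoding and rotor parameters in [0130] can be illustrated with a short sketch. The cosine encoding follows the text; the quadratic rotor model T_i = c_T ω_i², the use of rad/s for ω, and the roll-torque expression for a "+" rotor layout are assumptions, since the excerpt does not state the rotor model. All function names are hypothetical.

```python
import math

# Parameters stated in the embodiment
C_T = 0.00003      # thrust coefficient c_T
ARM_M = 0.23       # arm length d (m)
MASS_KG = 0.62
GRAVITY = 9.81


def encode_attitude(phi_deg, theta_deg, psi_deg):
    """Cosine-encode roll, pitch, and yaw (given in degrees) for the network state."""
    return [math.cos(math.radians(a)) for a in (phi_deg, theta_deg, psi_deg)]


def rotor_thrust(omega):
    """Assumed quadratic rotor model: T_i = c_T * omega_i**2 (omega in rad/s)."""
    return C_T * omega ** 2


def hover_rotor_speed(mass=MASS_KG, g=GRAVITY):
    """Speed at which four identical rotors together produce m * g of thrust."""
    return math.sqrt(mass * g / (4.0 * C_T))


def roll_torque(omega_left, omega_right):
    """Assumed '+'-layout roll torque: d * (T_left - T_right)."""
    return ARM_M * (rotor_thrust(omega_left) - rotor_thrust(omega_right))
```

Under these assumptions, `hover_rotor_speed()` gives roughly 225 rad/s, and the initial attitude of 0° encodes to `[1.0, 1.0, 1.0]`. Note that cos φ = cos(-φ), so the cosine alone does not distinguish the sign of an angle; the excerpt does not say whether other terms are added to the state to resolve this.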

[0131] Solve for the position r of the drone in the inertial coordinate system ...

