Multi-target tracking method and face recognition method

A multi-target tracking technology applied in the field of computer vision. It addresses the problems that feature information F cannot be repaired, that errors cannot be corrected, and that matching accuracy is low. The effects are simple calculation, improved matching accuracy, and a balance between calculation efficiency and matching accuracy.

Embodiment Construction

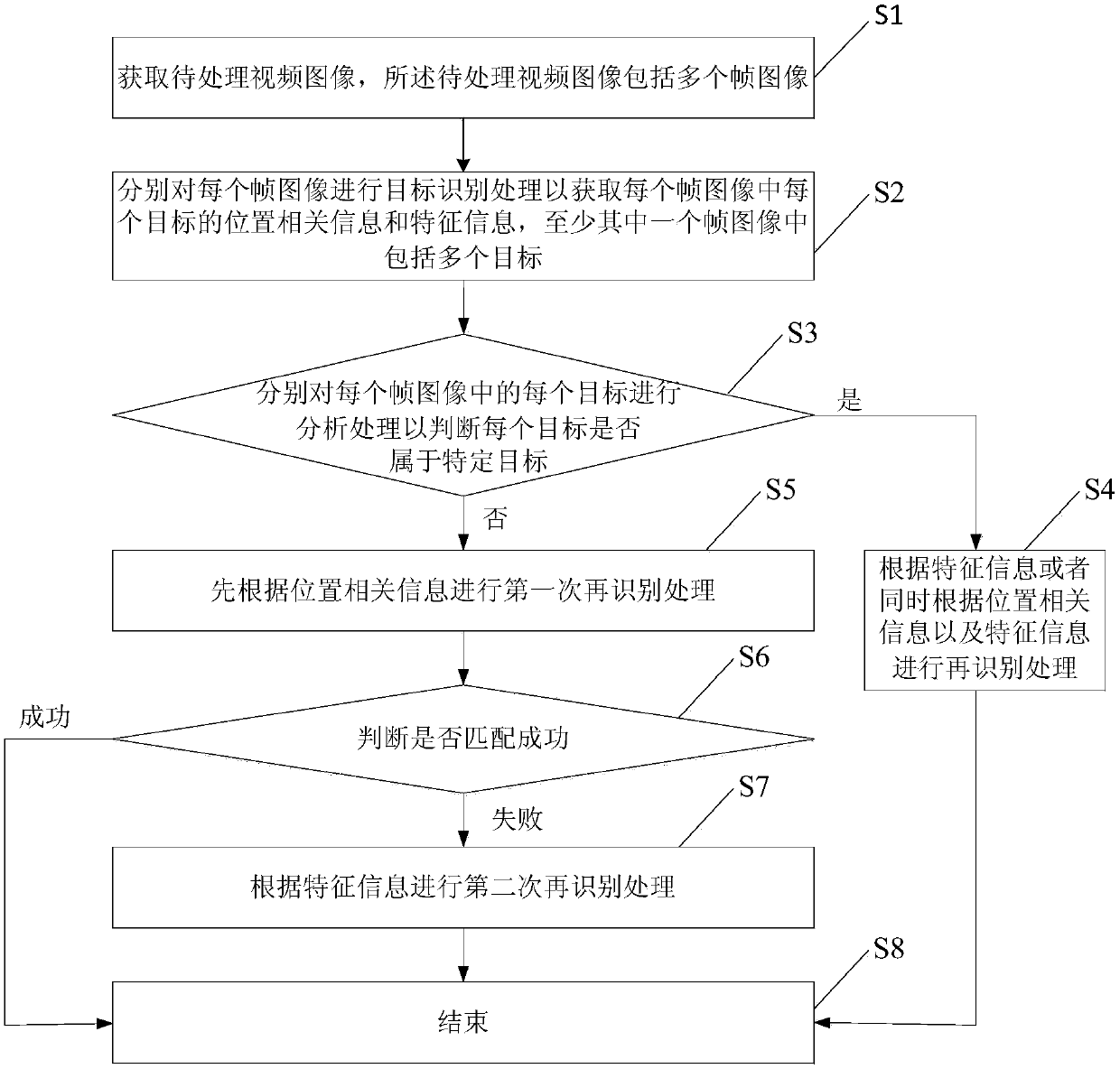

[0036] As mentioned in the background technology section, the ReID processing logic in the prior art is fixed. After target recognition is performed on the frame image, position-related information is used to perform a first ReID, and a second ReID is then started for the feature information that was not successfully matched. Because the time and place of image capture are random, the lighting, angle, and posture vary, and the target is easily affected by factors such as detection accuracy and occlusion, the matching accuracy of this method is relatively low.
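The fixed two-stage logic described in [0036] can be illustrated with a minimal sketch. This is not the method of the patent or of any specific prior-art system: the use of box IoU as the "position-related information", cosine similarity for the second ReID, greedy one-to-one assignment, the thresholds, and all function and field names (`two_stage_reid`, `box`, `feat`, and so on) are assumptions made only for illustration.

```python
from math import sqrt


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cosine(u, v):
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(u, v))
    nu = sqrt(sum(x * x for x in u))
    nv = sqrt(sum(y * y for y in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def greedy_match(scores, threshold):
    """Greedy one-to-one assignment over {(track_id, det_index): score}."""
    matches, used_dets = {}, set()
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    for (track_id, det_index), score in ranked:
        if score < threshold:
            break  # every remaining pair scores lower still
        if track_id in matches or det_index in used_dets:
            continue
        matches[track_id] = det_index
        used_dets.add(det_index)
    return matches


def two_stage_reid(tracks, detections, iou_thr=0.5, sim_thr=0.7):
    """Hypothetical fixed pipeline: position first, appearance second."""
    # First ReID: match on position-related information (assumed here: IoU).
    stage1 = greedy_match(
        {(t, d): iou(tr["box"], det["box"])
         for t, tr in tracks.items()
         for d, det in enumerate(detections)},
        iou_thr,
    )
    # Second ReID: only for whatever the first stage left unmatched.
    matched_dets = set(stage1.values())
    left_tracks = [t for t in tracks if t not in stage1]
    left_dets = [d for d in range(len(detections)) if d not in matched_dets]
    stage2 = greedy_match(
        {(t, d): cosine(tracks[t]["feat"], detections[d]["feat"])
         for t in left_tracks for d in left_dets},
        sim_thr,
    )
    return stage1, stage2


# Usage: track 2 has moved far from its last box, so only the second stage finds it.
tracks = {
    1: {"box": (0, 0, 10, 10), "feat": [1.0, 0.0]},
    2: {"box": (50, 50, 60, 60), "feat": [0.0, 1.0]},
}
detections = [
    {"box": (1, 1, 11, 11), "feat": [1.0, 0.0]},
    {"box": (200, 200, 210, 210), "feat": [0.0, 1.0]},
]
stage1, stage2 = two_stage_reid(tracks, detections)
```

Because the order of the two stages is hard-coded, a wrong position-based match in the first stage is never revisited by the second, which is one way the low matching accuracy noted above can arise.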

[0037] In order to make the above objects, features and advantages of the present invention more comprehensible, specific embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings.

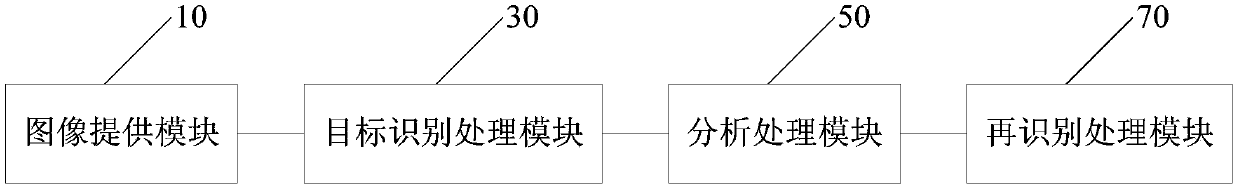

[0038] The method in this embodiment is mainly implemented by computer equipment; the computer equipment includes, but is not limited to, network equipment and user ...
