Gesture action recognition method based on Kinect

A gesture action recognition technology applied in the fields of virtual reality and human-computer interaction, which addresses problems such as existing methods not accounting for differences in gesture speed and distance.


Embodiment Construction

[0083] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0084] The technical scheme adopted by the present invention is a Kinect-based gesture action recognition method, implemented according to the following steps:

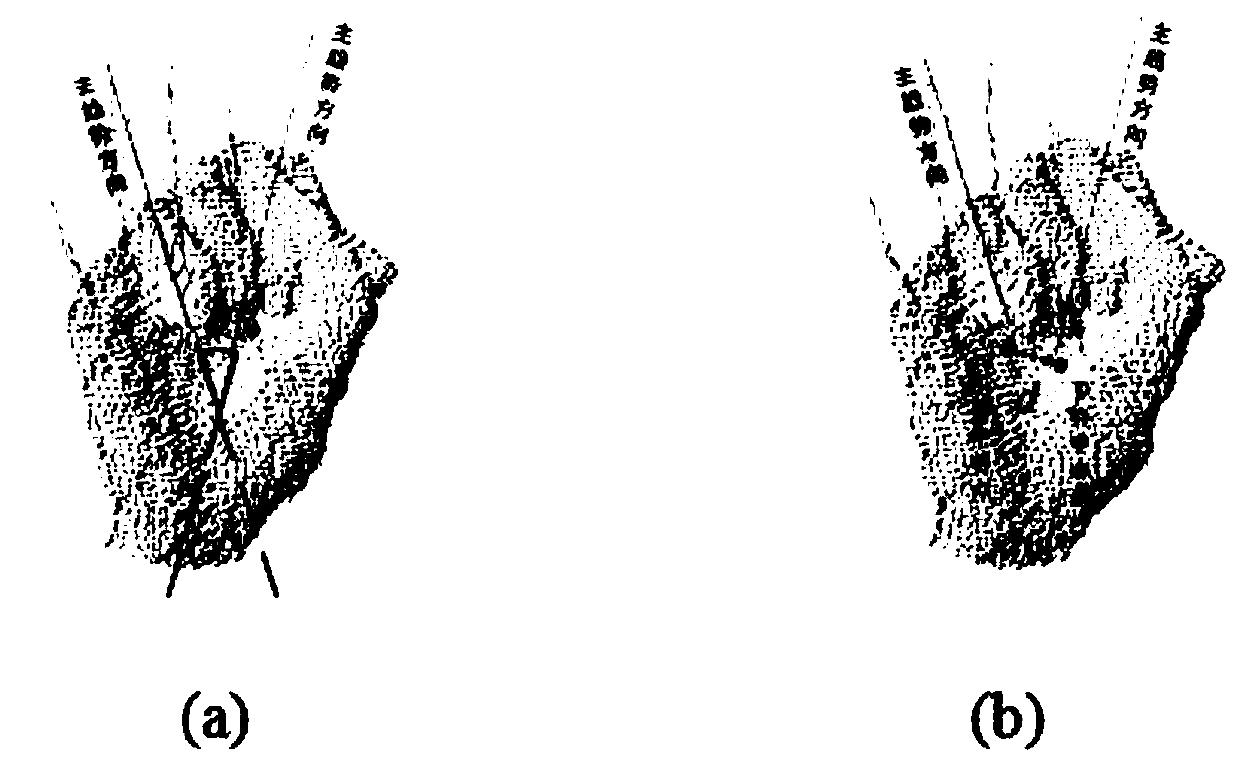

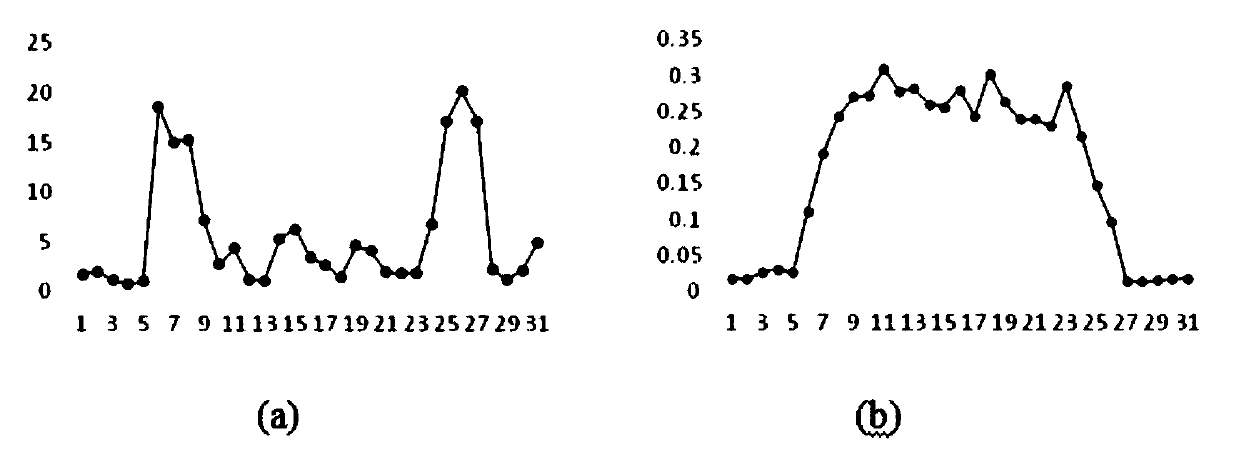

[0085] Step 1. Use the gesture main trend to characterize gesture orientation and gesture posture, and use it to measure the difference in orientation and posture between adjacent frames; use the distance between the gesture center points of adjacent frames to measure the motion speed of the gesture; and thereby extract the keyframes of each independent gesture sequence; specifically:

[0086] Step 1.1: Take the wrist joint point as the initial seed coordinate, recursively traverse its neighboring pixels to extract the gesture region, and convert that region into gesture point cloud data; specifically:

[0087] Step 1.1.1, obtain the human w...
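Step 1.1 can be sketched as region growing over the depth image from the wrist seed, followed by pinhole back-projection of the grown region. This is a minimal sketch under stated assumptions, not the patented implementation: the depth frame is assumed to be a NumPy array in millimetres with the wrist joint already mapped to a depth-pixel `seed`; the 4-connected neighbourhood, the growth thresholds `max_step` and `max_depth_range`, and the camera intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical placeholders, as are the helper names `grow_hand_region` and `mask_to_point_cloud`. An explicit queue stands in for the recursion described in the text; it visits the same pixels but avoids Python's recursion limit on large hand regions.

```python
from collections import deque

import numpy as np


def grow_hand_region(depth, seed, max_step=15.0, max_depth_range=150.0):
    """Region-grow the gesture area outward from the wrist seed pixel.

    depth: 2-D array of depth values in millimetres (0 means no reading).
    seed: (row, col) of the wrist joint in depth-image coordinates.
    A 4-connected neighbour joins the region if its depth differs from the
    current pixel by less than max_step and from the seed by less than
    max_depth_range (both thresholds are hypothetical, in millimetres).
    """
    h, w = depth.shape
    r0, c0 = seed
    seed_depth = float(depth[r0, c0])
    mask = np.zeros((h, w), dtype=bool)
    if seed_depth <= 0:  # no valid depth at the seed: nothing to grow
        return mask
    mask[r0, c0] = True
    queue = deque([(r0, c0)])  # explicit queue instead of recursion
    while queue:
        r, c = queue.popleft()
        d = float(depth[r, c])
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr, nc]:
                nd = float(depth[nr, nc])
                if (nd > 0 and abs(nd - d) < max_step
                        and abs(nd - seed_depth) < max_depth_range):
                    mask[nr, nc] = True
                    queue.append((nr, nc))
    return mask


def mask_to_point_cloud(depth, mask, fx, fy, cx, cy):
    """Back-project masked depth pixels to an (N, 3) point cloud in metres
    using a pinhole model with hypothetical intrinsics fx, fy, cx, cy.
    y follows the image-row direction (pointing down)."""
    rows, cols = np.nonzero(mask)
    z = depth[rows, cols].astype(float) / 1000.0  # millimetres -> metres
    x = (cols - cx) * z / fx
    y = (rows - cy) * z / fy
    return np.column_stack((x, y, z))


if __name__ == "__main__":
    depth = np.full((8, 8), 2000.0)   # background at 2 m
    depth[2:5, 2:5] = 800.0           # synthetic 3x3 "hand" patch at 0.8 m
    mask = grow_hand_region(depth, seed=(3, 3))
    cloud = mask_to_point_cloud(depth, mask, fx=365.0, fy=365.0, cx=4.0, cy=4.0)
    print(int(mask.sum()), cloud.shape)  # 9 (9, 3)
```

In the synthetic frame the 1.2 m depth jump at the patch border stops the growth, so only the nine "hand" pixels are kept. A real Kinect frame would need thresholds tuned to its noise level.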

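The keyframe criterion of Step 1 can be sketched as follows, assuming each frame's gesture is already available as an (N, 3) point cloud. The visible text does not define the "gesture main trend", so this sketch assumes it is the principal axis obtained by PCA and that the gesture center point is the centroid of the cloud. The rule of accumulating orientation change and center displacement until either passes a threshold (`angle_thresh`, `dist_thresh`) is likewise a hypothetical choice, and all function names and threshold values are illustrative.

```python
import numpy as np


def main_trend(points):
    """Assumed 'main trend': unit principal axis of an (N, 3) cloud via PCA."""
    centred = points - points.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(centred.T))
    return eigvecs[:, np.argmax(eigvals)]  # eigenvector of largest eigenvalue


def axis_angle(a, b):
    """Angle in radians between two axes; the sign of each axis is ignored."""
    cos = abs(float(np.dot(a, b))) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(min(cos, 1.0)))


def extract_keyframes(clouds, angle_thresh=np.deg2rad(15.0), dist_thresh=0.025):
    """Indices of keyframes in a list of per-frame (N, 3) gesture clouds.

    Orientation change (angle between adjacent main trends) and centre
    displacement (distance between adjacent centroids, in metres) are
    accumulated; a keyframe is emitted when either passes its threshold.
    """
    if not clouds:
        return []
    trends = [main_trend(c) for c in clouds]
    centres = [c.mean(axis=0) for c in clouds]
    keyframes = [0]  # the first frame always starts the sequence
    acc_angle = acc_dist = 0.0
    for i in range(1, len(clouds)):
        acc_angle += axis_angle(trends[i - 1], trends[i])
        acc_dist += float(np.linalg.norm(centres[i] - centres[i - 1]))
        if acc_angle >= angle_thresh or acc_dist >= dist_thresh:
            keyframes.append(i)
            acc_angle = acc_dist = 0.0
    return keyframes


if __name__ == "__main__":
    # Elongated synthetic "hand" along x, translated 1 cm per frame.
    base = np.array([[x, y, 0.0]
                     for x in np.linspace(-0.05, 0.05, 11)
                     for y in (-0.005, 0.005)])
    clouds = [base + np.array([0.01 * i, 0.0, 0.0]) for i in range(10)]
    print(extract_keyframes(clouds))  # [0, 3, 6, 9]
```

Because change is accumulated rather than compared frame by frame, a fast gesture crosses a threshold in fewer frames and receives denser keyframes, while a slow gesture receives sparser ones. This is one way to take the speed and distance differences targeted by the method into account.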