Smart campus-oriented distributed machine learning model parameter aggregation method

The method relates to machine learning models and smart campus technology and applies to the fields of machine learning, computing models, and instruments. It addresses the problem of distributed machine learning training becoming trapped in local optima, and aims to maximize utilization efficiency, reduce communication volume, and improve training accuracy.

Embodiment Construction

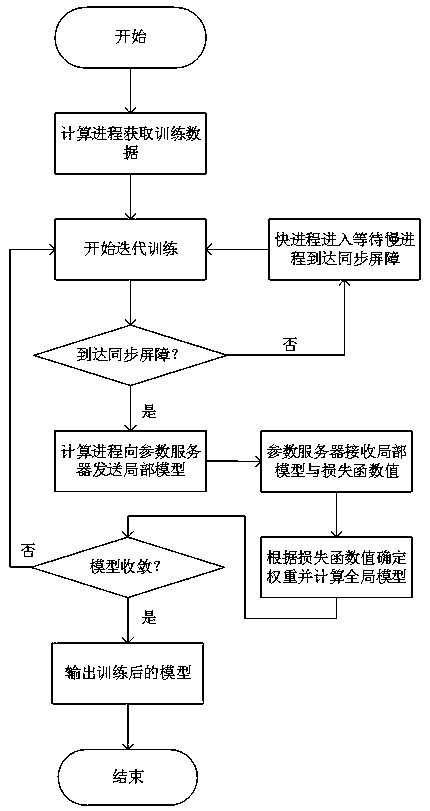

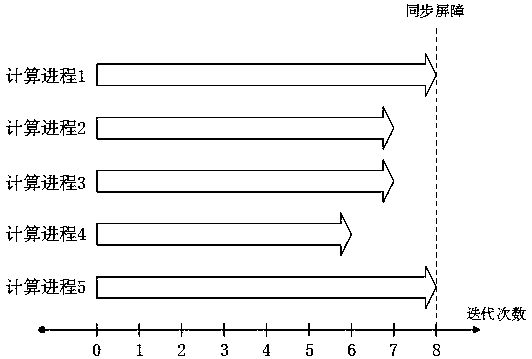

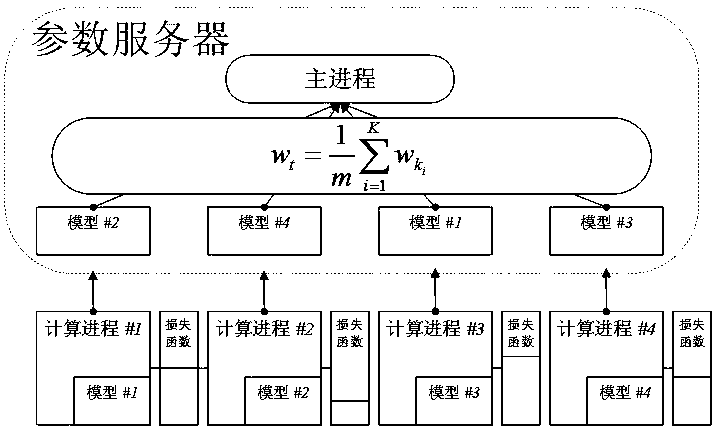

[0023] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings. The specific steps are shown in Figure 1:

[0024] Step 1: Clean and transform the data generated by the daily behavior of teachers, students, and other staff, and store it in a memory-mapped database for training.
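A minimal sketch of Step 1 in Python, assuming each cleaned behavior event can be reduced to a fixed-size binary record. The field layout (`person_id`, `hour`, `location`, `label`), the cleaning rules, and the helper names `clean`, `write_store`, and `MappedStore` are hypothetical illustrations, not taken from the patent. A real pipeline would more likely use a dedicated memory-mapped store such as LMDB; Python's standard `mmap` module stands in for it here to show the idea that records are written once and then read by offset without loading the whole file into memory.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical fixed-size record: person_id (uint32), hour (uint8),
# location code (uint16), label (uint8); "<" means little-endian, no padding.
RECORD = struct.Struct("<IBHB")
FIELDS = ("person_id", "hour", "location", "label")


def clean(raw_rows):
    """Drop rows with missing fields or an out-of-range hour; cast the rest to ints."""
    cleaned = []
    for row in raw_rows:
        if any(row.get(k) in (None, "") for k in FIELDS):
            continue  # incomplete record
        hour = int(row["hour"])
        if not 0 <= hour <= 23:
            continue  # invalid timestamp
        cleaned.append((int(row["person_id"]), hour, int(row["location"]), int(row["label"])))
    return cleaned


def write_store(path, records):
    """Serialize cleaned records back to back into a flat binary file."""
    with open(path, "wb") as f:
        for rec in records:
            f.write(RECORD.pack(*rec))


class MappedStore:
    """Read-only random access to fixed-size records through a memory map.

    Note: mmap cannot map an empty file, so at least one record must exist.
    """

    def __init__(self, path):
        self._file = open(path, "rb")
        self._map = mmap.mmap(self._file.fileno(), 0, access=mmap.ACCESS_READ)

    def __len__(self):
        return len(self._map) // RECORD.size

    def __getitem__(self, i):
        if not 0 <= i < len(self):
            raise IndexError(i)
        # Decode one record directly from the mapped bytes at its offset.
        return RECORD.unpack_from(self._map, i * RECORD.size)

    def close(self):
        self._map.close()
        self._file.close()


if __name__ == "__main__":
    raw = [
        {"person_id": "17", "hour": "8", "location": "301", "label": "1"},
        {"person_id": "18", "hour": "", "location": "12", "label": "0"},    # dropped: missing hour
        {"person_id": "19", "hour": "25", "location": "12", "label": "0"},  # dropped: invalid hour
    ]
    path = os.path.join(tempfile.mkdtemp(), "campus_behavior.bin")
    write_store(path, clean(raw))
    store = MappedStore(path)
    print(len(store), store[0])  # 1 (17, 8, 301, 1)
    store.close()
```

Fixed-size records keep lookup by index a simple offset calculation, which is what lets training processes read random samples cheaply from the mapped file.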

[0025] Step 2: The main process reads the configuration file, which contains the training parameters and the model network. The training parameters mainly include the initial learning rate, the learning rate adjustment policy, the momentum value, the maximum number of iterations, and so on; the model network is described layer by layer in a network file in prototxt format. Each computing process randomly selects its local training data from the full training set by sampling without replacement, so that every process ends up with the same number of distinct samples. The training data consists of formatted, labeled images.
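The configuration handling and data split in Step 2 can be sketched as follows. This is an illustration under stated assumptions, not the patent's implementation: the field names mirror Caffe's `solver.prototxt` conventions (`net`, `base_lr`, `lr_policy`, `gamma`, `stepsize`, `momentum`, `max_iter`), the hypothetical `parse_solver` helper only understands flat `key: value` lines, and only the `fixed` and `step` learning-rate policies are modeled. The split assumes "distinct samples" means the shards of different processes do not overlap, so a single seeded draw without replacement is dealt out in equal shards.

```python
import random
from dataclasses import dataclass


@dataclass
class SolverConfig:
    # Subset of Caffe-style solver fields; defaults are illustrative only.
    net: str = ""
    base_lr: float = 0.01
    lr_policy: str = "fixed"
    gamma: float = 0.1
    stepsize: int = 1
    momentum: float = 0.9
    max_iter: int = 10000


def parse_solver(text):
    """Parse flat `key: value` lines (no nested messages), ignoring # comments."""
    cfg = SolverConfig()
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if hasattr(cfg, key):
            current = getattr(cfg, key)
            # Cast to the declared field type (str, float, or int).
            setattr(cfg, key, type(current)(value.strip('"')))
    return cfg


def learning_rate(cfg, it):
    """Learning rate at iteration `it` for the 'fixed' and 'step' policies."""
    if cfg.lr_policy == "fixed":
        return cfg.base_lr
    if cfg.lr_policy == "step":
        return cfg.base_lr * cfg.gamma ** (it // cfg.stepsize)
    raise ValueError(f"unsupported lr_policy: {cfg.lr_policy}")


def partition_without_replacement(num_samples, num_workers, seed=0):
    """Draw equal, non-overlapping shards of sample indices, one per worker.

    The remainder (num_samples % num_workers) is left out so every worker
    receives the same number of samples.
    """
    per_worker = num_samples // num_workers
    rng = random.Random(seed)
    # random.sample draws without replacement, so no index appears twice.
    drawn = rng.sample(range(num_samples), per_worker * num_workers)
    return [drawn[w * per_worker:(w + 1) * per_worker] for w in range(num_workers)]


if __name__ == "__main__":
    solver_text = """
net: "campus_net.prototxt"  # hypothetical network file
base_lr: 0.01
lr_policy: "step"
gamma: 0.1
stepsize: 1000
momentum: 0.9
max_iter: 5000
"""
    cfg = parse_solver(solver_text)
    print(cfg.lr_policy, cfg.momentum, cfg.max_iter)  # step 0.9 5000
    shards = partition_without_replacement(10, 3, seed=42)
    print([len(s) for s in shards])  # [3, 3, 3]
```

Drawing once and dealing out shards guarantees equally sized, non-overlapping local sets; if each process instead drew independently without replacement, the same sample could still land in several processes.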

[0026] S...
