Memory network video summarization method based on multi-channel features

A memory-network video technology, applied in image communication, selective content distribution, electrical components, and related fields, that addresses the lack of real-time video, the difficulty of finding content quickly, and the inability to retain information in long-term memory.


Embodiment Construction

[0029] The technical solutions in the embodiments of the present invention are described clearly and completely below with reference to the accompanying drawings.

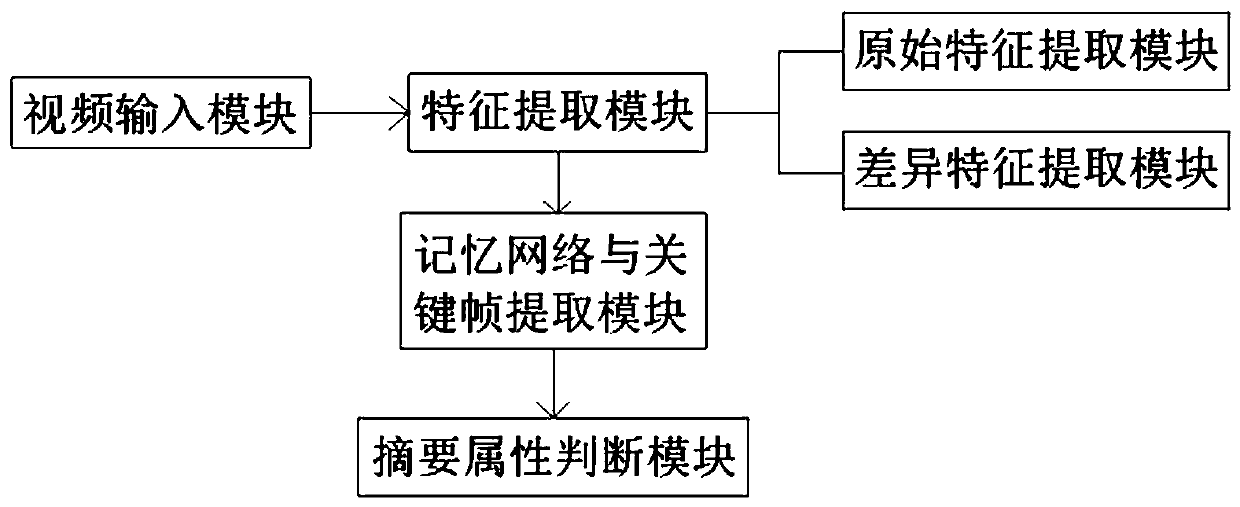

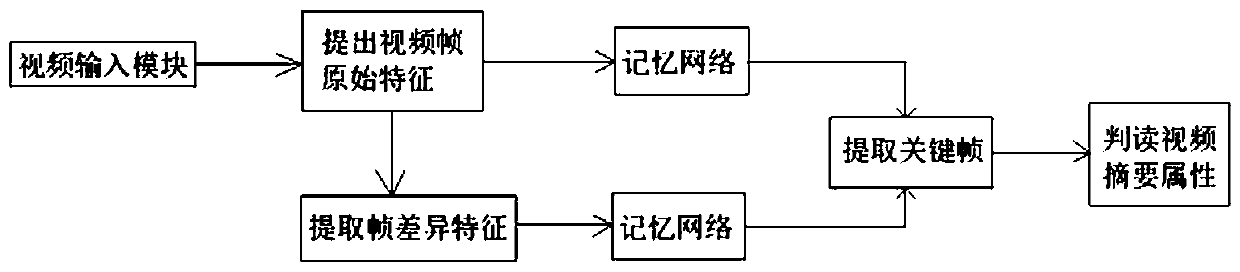

[0030] As shown in Figures 1-2, the present invention provides a technical solution: a memory network video summarization method based on multi-channel features, comprising:

[0031] A video input module, which records the video frames to be processed;

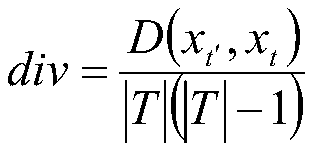

[0032] A feature extraction module, which extracts the original feature x_t from the video images, so that each video is represented by a K*1024-dimensional vector. The difference between two video frames is used as the difference feature x_d, and the difference feature and the original feature are then input into the RNN memory network at the same time. Due to the ability of RNN to capture long-term dependencies in the video frame, only the temporal memory ...
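The two feature paths described in [0032] can be illustrated with a minimal sketch. It assumes the K frame features are already available as a K*1024 array, and that the difference feature is taken between consecutive frames with the first row zero-padded; the patent text states only that the difference between two video frames is used. The function names `difference_features` and `two_path_inputs` are hypothetical and do not come from the patent.

```python
import numpy as np


def difference_features(x: np.ndarray) -> np.ndarray:
    """Return frame-to-frame differences x_d for per-frame features x.

    x has shape (K, D), one D-dimensional feature per frame (D = 1024 in the
    text). Assumption: x_d[t] = x[t] - x[t-1], and x_d[0] is zero because the
    first frame has no predecessor.
    """
    x_d = np.zeros_like(x)
    x_d[1:] = x[1:] - x[:-1]
    return x_d


def two_path_inputs(x: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Pair the original features with their difference features.

    Both arrays keep shape (K, D), so they can be fed to a recurrent
    network step by step as two parallel input paths.
    """
    return x, difference_features(x)


if __name__ == "__main__":
    K, D = 5, 1024
    rng = np.random.default_rng(0)
    x_t = rng.standard_normal((K, D))  # stand-in for extracted frame features
    x_orig, x_diff = two_path_inputs(x_t)
    print(x_orig.shape, x_diff.shape)
```

Keeping both arrays at the same shape means each time step has one original feature and one difference feature; how the two paths are combined inside the RNN memory network is not specified in the visible text and is left open here.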
