Training method and device of interpolation filter, video image encoding and decoding method, and codec

An interpolation filter and training method technology, applied in the field of video encoding and decoding, that addresses the poor encoding and decoding performance and accuracy of video images. It improves encoding and decoding performance, reduces the code stream, and improves prediction accuracy.


Embodiment 1

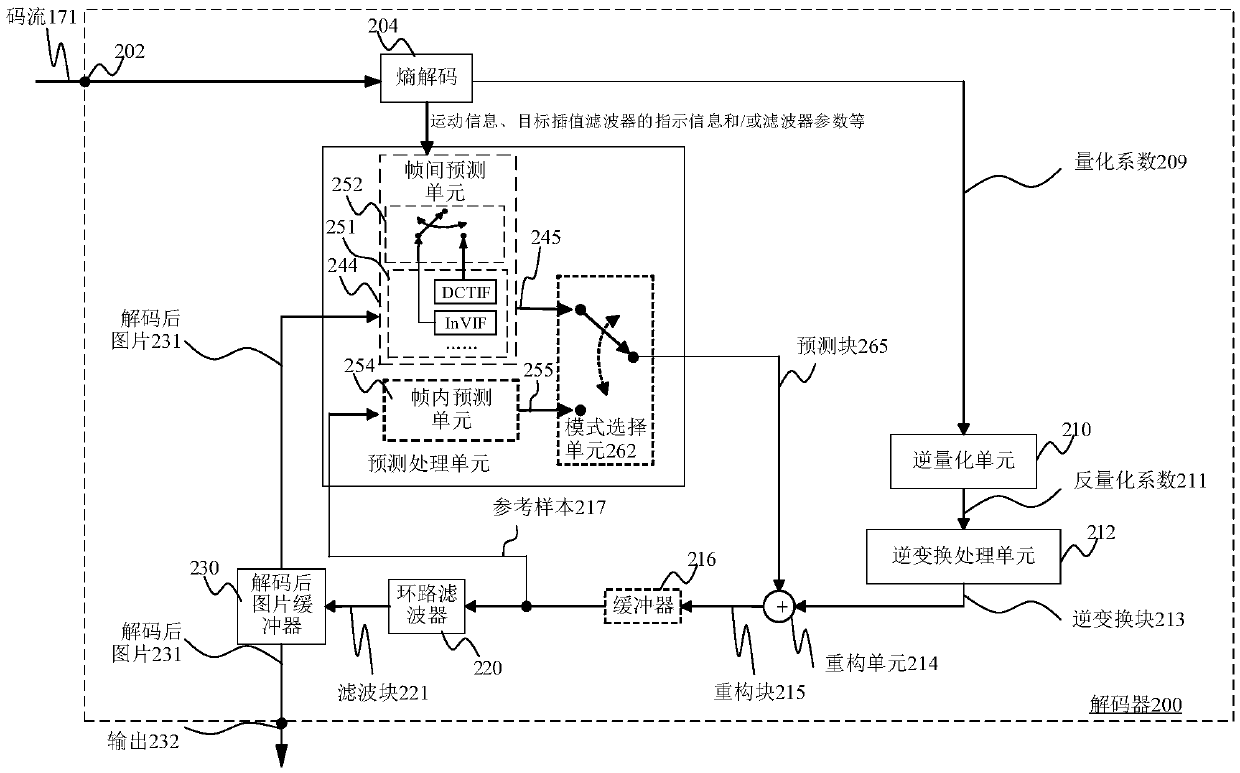

[0449] In a non-target inter prediction mode (such as a non-merge mode), the entropy decoding unit 1601 is specifically configured to parse the index of the motion information of the image block to be decoded from the code stream;

[0450] The inter prediction unit 1602 is further configured to determine the motion information of the currently decoded image block based on the index of the motion information of the currently decoded image block and the candidate motion information list of the currently decoded image block.
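The index-based lookup described in [0449] and [0450] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the `MotionInfo` type, its fields, and the `select_motion_info` function are hypothetical names, and the candidate motion information list is assumed to have been built already.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class MotionInfo:
    """Hypothetical container for one candidate's motion information."""
    mv: Tuple[int, int]  # motion vector (x, y); units are an assumption
    ref_idx: int         # reference picture index (assumed field)


def select_motion_info(candidates: List[MotionInfo], index: int) -> MotionInfo:
    """Return the candidate addressed by the index parsed from the code stream."""
    if not 0 <= index < len(candidates):
        raise ValueError(
            f"index {index} out of range for {len(candidates)} candidates"
        )
    return candidates[index]


# Illustrative values only; a real decoder derives the list from
# neighbouring blocks and parses the index from the code stream.
candidates = [MotionInfo((4, -2), 0), MotionInfo((0, 8), 1)]
chosen = select_motion_info(candidates, 1)
```

The range check stands in for the conformance handling a real decoder would need when a parsed index does not address a valid candidate.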

Embodiment 2

[0452] In a non-target inter prediction mode (such as a non-merge mode), the entropy decoding unit 1601 is specifically configured to: parse, from the code stream, the index of the motion information and the motion vector difference of the image block to be decoded;

[0453] The inter prediction unit 1602 is further configured to: determine the motion vector predictor of the currently decoded image block based on the index of the motion information of the currently decoded image block and the candidate motion information list of the currently decoded image block; and obtain the motion vector of the currently decoded image block based on the motion vector predictor and the motion vector difference.
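As a rough sketch of the two steps in [0452] and [0453], the motion vector can be recovered by selecting a predictor from the candidate list and adding the parsed difference to it. The function name `reconstruct_motion_vector`, the tuple representation of vectors, and the component-wise addition are assumptions made for illustration; the text above only states that the motion vector is obtained from the predictor and the difference.

```python
from typing import List, Tuple

MotionVector = Tuple[int, int]  # (x, y); units are an assumption


def reconstruct_motion_vector(
    candidate_mvs: List[MotionVector],
    index: int,
    mvd: MotionVector,
) -> MotionVector:
    """Pick the predictor by index, then add the motion vector difference."""
    if not 0 <= index < len(candidate_mvs):
        raise ValueError(
            f"index {index} out of range for {len(candidate_mvs)} candidates"
        )
    mvp_x, mvp_y = candidate_mvs[index]  # motion vector predictor
    # Assumed combination rule: component-wise sum of predictor and difference.
    return (mvp_x + mvd[0], mvp_y + mvd[1])


# Illustrative values only.
mv = reconstruct_motion_vector([(4, -2), (0, 8)], index=0, mvd=(1, 3))
```

With the predictor (4, -2) and the difference (1, 3), the sketch yields the motion vector (5, 1).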

Embodiment 3

[0454] In a non-target inter prediction mode (such as a non-merge mode), the entropy decoding unit 1601 is specifically configured to parse, from the code stream, the index of the motion information and the motion vector difference of the image block to be decoded;

[0455] The inter prediction unit 1602 is further configured to determine the motion vector predictor of the currently decoded image block based on the index of the motion information of the currently decoded image block and the candidate motion information list of the currently decoded image block, and further to obtain the motion vector of the currently decoded image block based on the motion vector predictor and the motion vector difference.

[0456] In a possible implementation of the embodiments of this application, if the target filter is the second interpolation filter obtained by any of the training methods, described in Figures 6A-6D above, of the interpolation fi...
