Data parallel processing method, system, device, and storage medium

A data parallel processing method and system, applied in the fields of data parallel processing methods, systems, devices, and storage media. It addresses problems such as the inability to fully utilize the computing resources of the CPU and of computing cards.


Examples

Embodiment 1

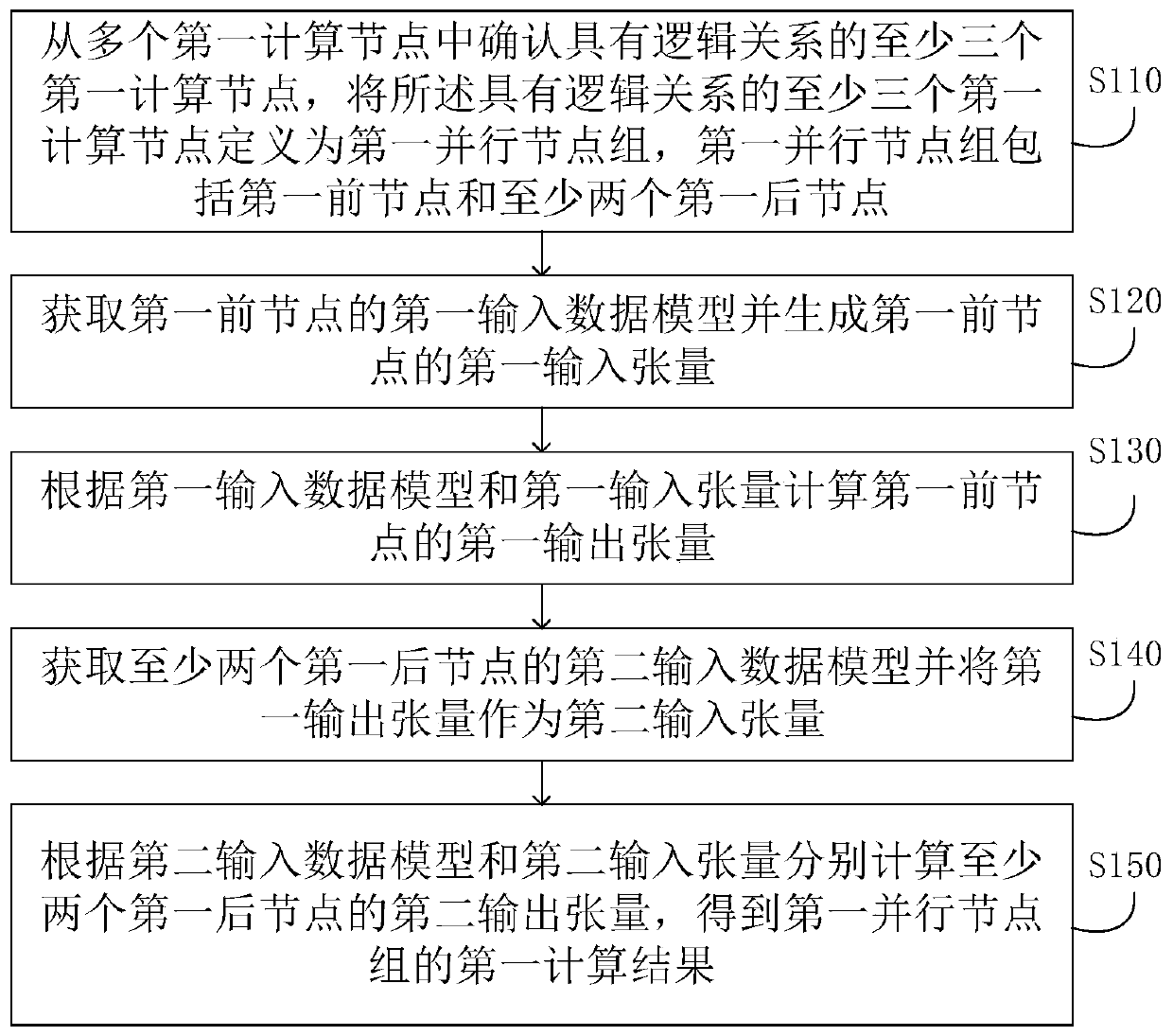

[0031] Figure 1 is a flow chart of a data parallel processing method provided by Embodiment 1 of the present invention. This embodiment is applicable to graph inference involving multiple logical relationships, and the method can be executed by a host. As shown in Figure 1, the data parallel processing method includes steps S110 to S150.

[0032] S110. Identify, from a plurality of first computing nodes, at least three first computing nodes having a logical relationship, and define these nodes as a first parallel node group, where the first parallel node group includes one first front node and at least two first rear nodes.

[0033] In this embodiment, "at least three first computing nodes having a logical relationship" means that these nodes include at least one first front node located upstream in the logical relationship and at least two nodes directly connected to that first front node...
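A minimal sketch of how step S110 could be carried out on a computation graph, assuming the logical relationships are given as a list of hypothetical `(upstream, downstream)` edge pairs. Any node with at least two direct successors yields a candidate first parallel node group (one front node plus two or more rear nodes). The function name and input format are illustrative only and are not taken from the patent.

```python
from collections import defaultdict


def find_parallel_node_groups(edges):
    """Return (front_node, [rear_nodes]) for every node with >= 2 direct successors.

    `edges` is a hypothetical list of (upstream, downstream) pairs describing
    the logical relationships between computing nodes.
    """
    successors = defaultdict(list)
    for upstream, downstream in edges:
        # Record each direct successor once, even if an edge is repeated.
        if downstream not in successors[upstream]:
            successors[upstream].append(downstream)

    groups = []
    for front, rears in successors.items():
        # One front node plus at least two directly connected rear nodes
        # gives the "at least three" computing nodes of a parallel group.
        if len(rears) >= 2:
            groups.append((front, sorted(rears)))
    return groups


# Usage: a node "conv1" feeding two branches that later merge.
edges = [
    ("conv1", "branch_a"),
    ("conv1", "branch_b"),
    ("branch_a", "concat"),
    ("branch_b", "concat"),
]
print(find_parallel_node_groups(edges))  # [('conv1', ['branch_a', 'branch_b'])]
```

A purely linear chain such as `a -> b -> c` produces no group, since no node has two direct successors.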

Embodiment 2

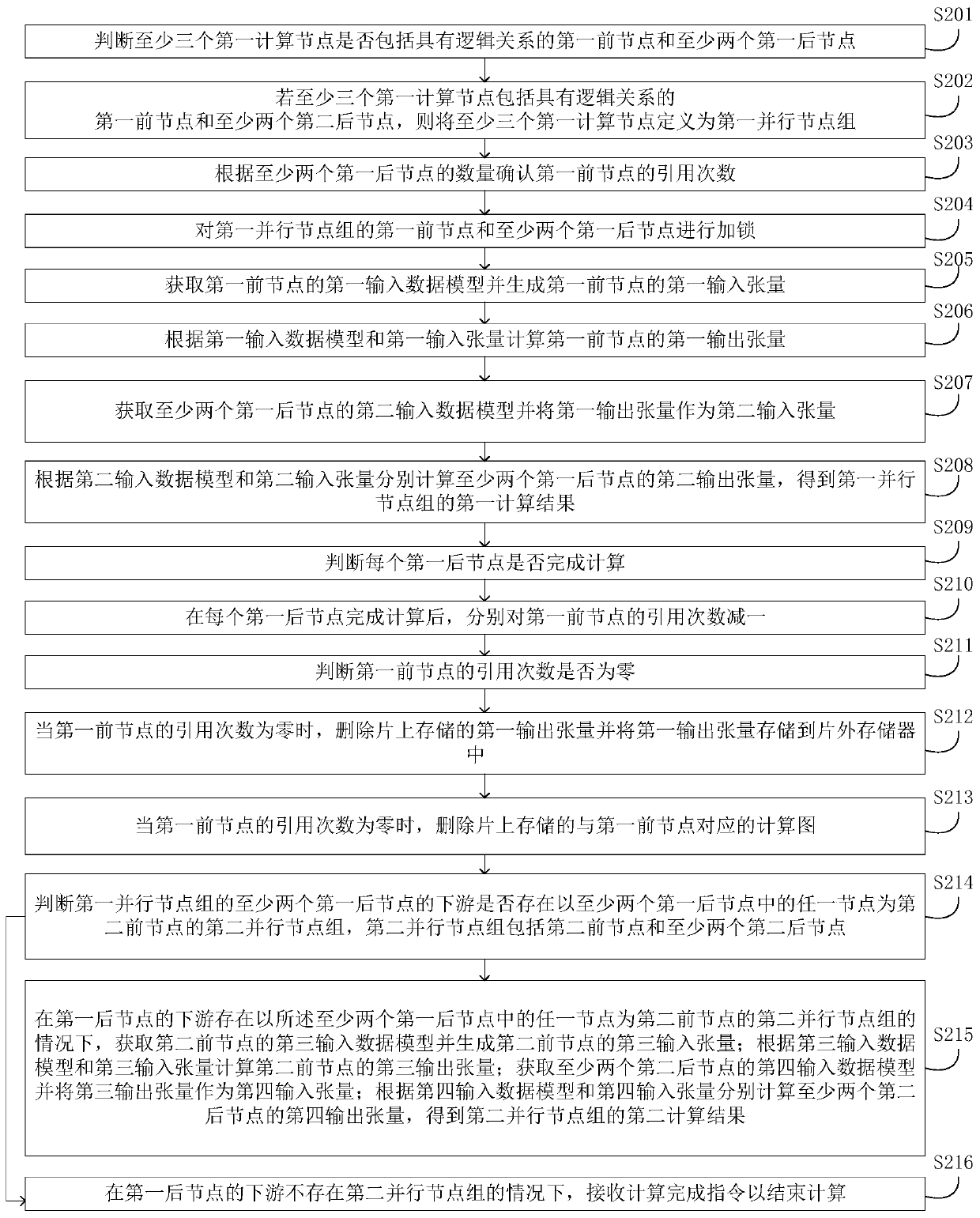

[0045] Embodiment 2 of the present invention is an optional embodiment based on Embodiment 1. Figure 2 is a flow chart of a data parallel processing method provided by Embodiment 2 of the present invention. As shown in Figure 2, the data parallel processing method in this embodiment includes steps S201 to S216.

[0046] S201. Determine whether at least three first computing nodes include a first front node and at least two first rear nodes having a logical relationship.

[0047] In this embodiment, after multiple first computing nodes are received, at least three of them are selected and checked for a logical relationship, that is, whether they include a first front node and at least two first rear nodes. Taking the computing nodes of a neural network as an example, a neural network generally has multiple layers, that is, multiple logically connected computing nodes; the first pos...
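The check in step S201 can be illustrated with a small sketch, assuming the graph edges are available as hypothetical `(upstream, downstream)` pairs and that the selected candidates are given by node name. The example uses a neural-network-style graph in which one layer feeds two output heads; the layer names and the helper function are assumptions made for illustration.

```python
def is_parallel_node_group(candidates, edges):
    """Check whether `candidates` (at least three node names) contain one
    front node and at least two rear nodes directly downstream of it.

    Returns (front, sorted_rears) if such a structure exists, otherwise None.
    """
    if len(candidates) < 3:
        return None
    members = set(candidates)
    for front in candidates:
        # Direct successors of `front` that are also among the candidates.
        rears = sorted({d for u, d in edges if u == front and d in members})
        if len(rears) >= 2:
            return front, rears
    return None


# Usage: "input" -> "conv", then "conv" feeds two hypothetical heads.
layer_edges = [
    ("input", "conv"),
    ("conv", "head_cls"),
    ("conv", "head_box"),
]
print(is_parallel_node_group(["conv", "head_cls", "head_box"], layer_edges))
# ('conv', ['head_box', 'head_cls'])
print(is_parallel_node_group(["input", "conv", "head_cls"], layer_edges))
# None
```

The second call returns `None` because the selected nodes form a chain: each node has only one direct successor among the candidates.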

Embodiment 3

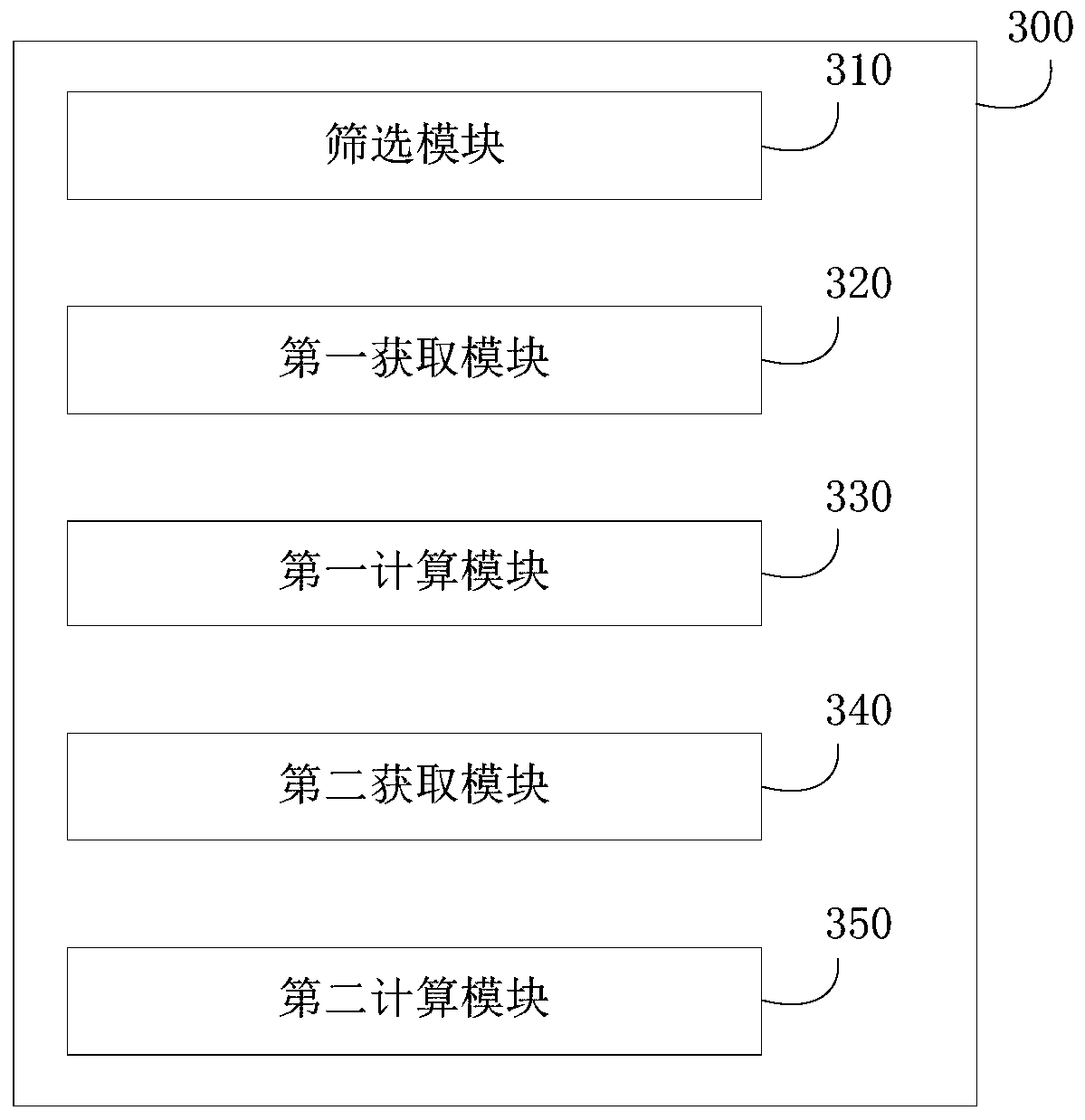

[0080] Figure 3 is a schematic structural diagram of a data parallel processing system provided by Embodiment 3 of the present invention. As shown in Figure 3, the data parallel processing system 300 of this embodiment includes a screening module 310, a first acquisition module 320, a first calculation module 330, a second acquisition module 340, and a second calculation module 350.

[0081] The screening module 310 is configured to identify, from a plurality of first computing nodes, at least three first computing nodes having a logical relationship and to define them as a first parallel node group, where the first parallel node group includes a first front node and at least two first rear nodes;

[0082] The first acquisition module 320 is configured to obtain the first input data model of the first front node and to generate the first input tensor of the first front node;
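The following is a hedged sketch of how a parallel node group might be exercised once the front node's input tensor exists. It rests on several assumptions that are not in the text above: the "input data model" is modeled as a hypothetical `InputDataModel` holding only a shape and a fill value, tensors are plain nested lists rather than a real tensor library, and the rear nodes are dispatched concurrently with Python's `concurrent.futures.ThreadPoolExecutor`. The concurrent dispatch is one common way to exploit independent rear nodes and is not the patent's stated implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class InputDataModel:
    """Hypothetical description of a node's input: a shape and a fill value."""
    shape: tuple
    fill: float = 0.0


def make_input_tensor(model):
    """Build a nested-list tensor of the given shape, filled with `model.fill`."""
    def build(dims):
        if not dims:
            return model.fill
        return [build(dims[1:]) for _ in range(dims[0])]
    return build(list(model.shape))


def run_group(front_fn, rear_fns, model):
    """Run the front node once, then submit each rear node to a thread pool.

    `rear_fns` maps rear-node names to callables that take the front output.
    """
    front_out = front_fn(make_input_tensor(model))
    with ThreadPoolExecutor(max_workers=len(rear_fns)) as pool:
        futures = {name: pool.submit(fn, front_out) for name, fn in rear_fns.items()}
        return {name: f.result() for name, f in futures.items()}


# Usage: a front node that sums a 2x3 tensor of ones, and two rear nodes.
model = InputDataModel(shape=(2, 3), fill=1.0)
results = run_group(
    lambda t: sum(sum(row) for row in t),
    {"double": lambda x: x * 2, "neg": lambda x: -x},
    model,
)
print(results)  # {'double': 12.0, 'neg': -6.0}
```

Threads are used here only to show the dispatch pattern; in CPython, pure-Python rear nodes gain little from threads, whereas nodes that call into native kernels or device runtimes that release the GIL can overlap in practice.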

[0083] The first calculation module 330 i...
