Novel multi-head attention mechanism

An attention and multi-head technique, applied in the fields of computing models, machine learning, and computing. It addresses the problems of high space complexity, destruction of the sequence's continuity structure, and large computing-space consumption, with the effect of reducing storage consumption, reducing model complexity, and improving the degree of parallelism.


Embodiment Construction

[0028] The present invention will be further described below.

[0029] A novel multi-head attention mechanism, comprising the following steps:

[0030] a) Concatenate the equal-dimensional vectors input to the multi-head attention mechanism into a matrix E, where E_{i,j} denotes the entry in row i and column j of the matrix, 1≤i≤l, l is the sequence length, 1≤j≤d, and d is the dimension of each vector;

[0031] b) Set the model hyperparameters k, h, and m, all positive integers: k is the length range over which the multi-head attention mechanism establishes context, h is the number of heads, and m is the vector dimension of the hidden layer processed by each head;

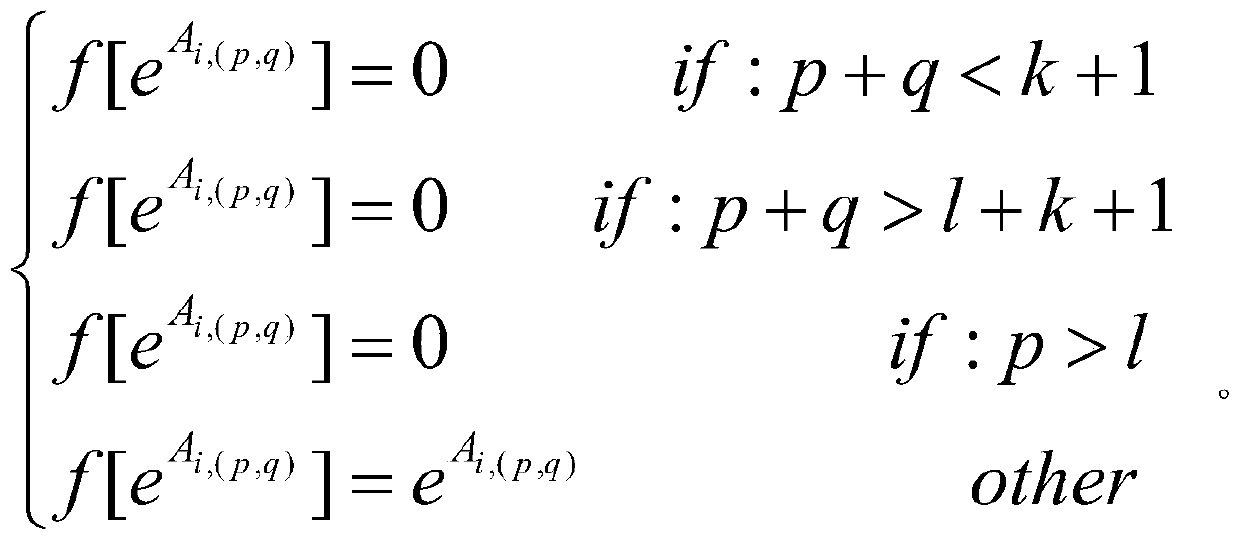

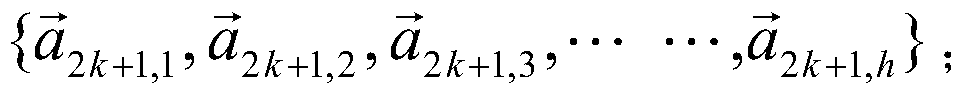

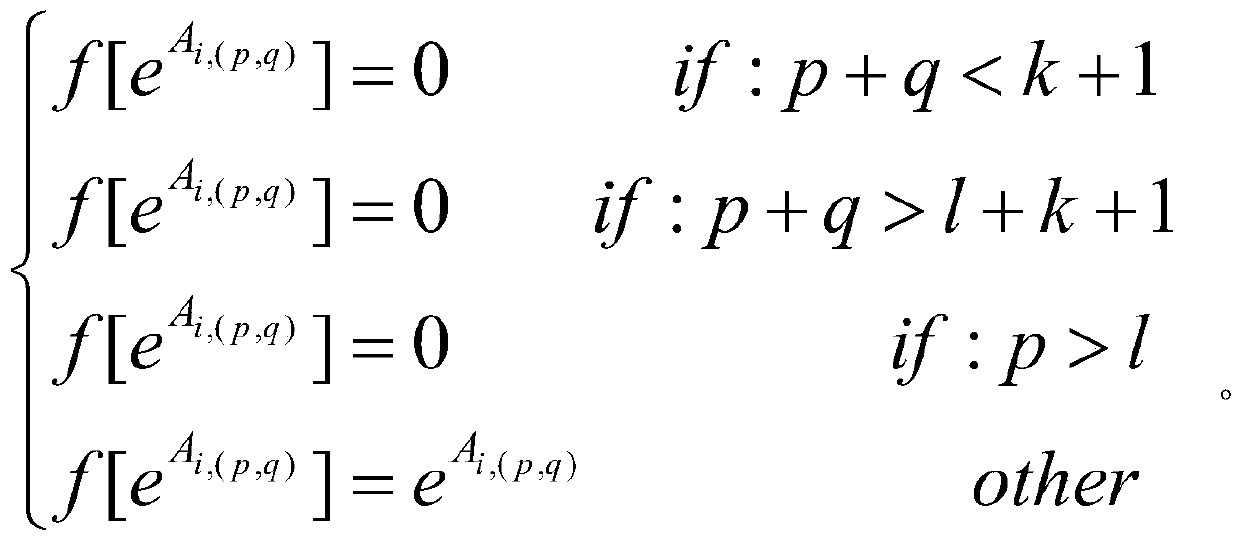

[0032] c) Initialize the sets of parameter matrices separately. Each set contains h parameter matrices with d rows and m columns; for the i-th matrix in a set, 1≤i≤h, fo...
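Steps a) to c) above can be illustrated with a minimal sketch in plain Python. It only covers what the text states: stacking l vectors of dimension d into the matrix E, checking that k, h, and m are positive integers, and creating one set of h parameter matrices with d rows and m columns. The function names, the Gaussian initialization with standard deviation 0.02, and the fixed seed are assumptions made for illustration; the source does not say how the matrices are initialized, and the names and number of the parameter sets are not recoverable from the text above. Note that the code uses 0-based indices, whereas E_{i,j} in the description is 1-based.

```python
import random


def build_input_matrix(vectors):
    """Step a): stack l vectors of equal dimension d into an l-by-d matrix E."""
    if not vectors:
        raise ValueError("need at least one input vector")
    d = len(vectors[0])
    if any(len(v) != d for v in vectors):
        raise ValueError("all input vectors must have the same dimension d")
    return [list(v) for v in vectors]


def check_hyperparameters(k, h, m):
    """Step b): k, h and m must all be positive integers."""
    for name, value in (("k", k), ("h", h), ("m", m)):
        if isinstance(value, bool) or not isinstance(value, int) or value <= 0:
            raise ValueError(f"{name} must be a positive integer")


def init_parameter_set(h, d, m, rng):
    """Step c): one set of h parameter matrices, each with d rows and m columns.

    The Gaussian initialization is an assumption, not taken from the source.
    """
    return [
        [[rng.gauss(0.0, 0.02) for _ in range(m)] for _ in range(d)]
        for _ in range(h)
    ]


# Hypothetical example values: l = 2 vectors of dimension d = 3.
E = build_input_matrix([[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]])
l, d = len(E), len(E[0])

k, h, m = 2, 4, 8
check_hyperparameters(k, h, m)

# One parameter set; the description initializes several such sets.
W = init_parameter_set(h, d, m, random.Random(0))
```

Each further parameter set named in step c) would be created by another call to `init_parameter_set` with the same h, d, and m.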
