35 results about how to "Increase parallelism" patented technology

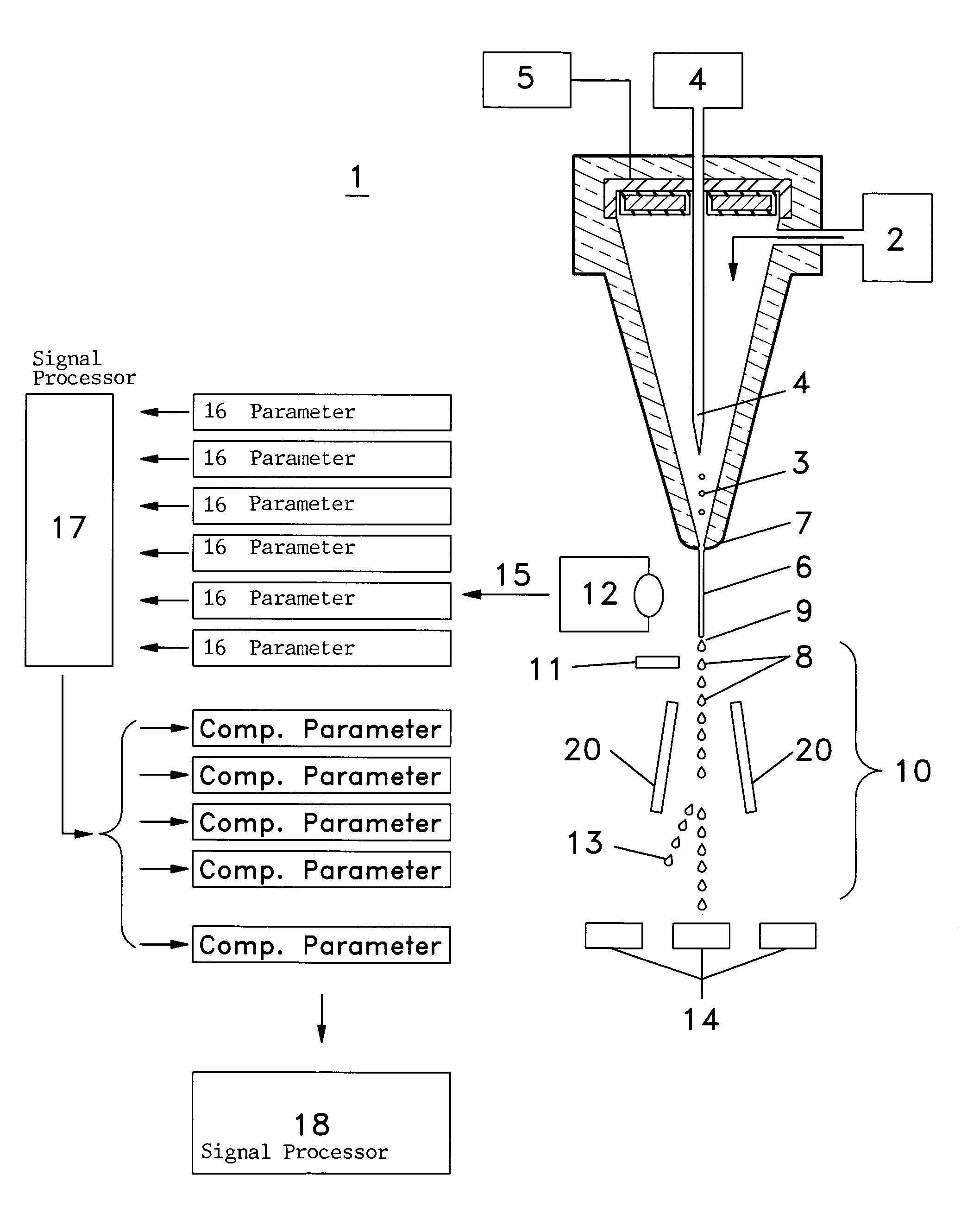

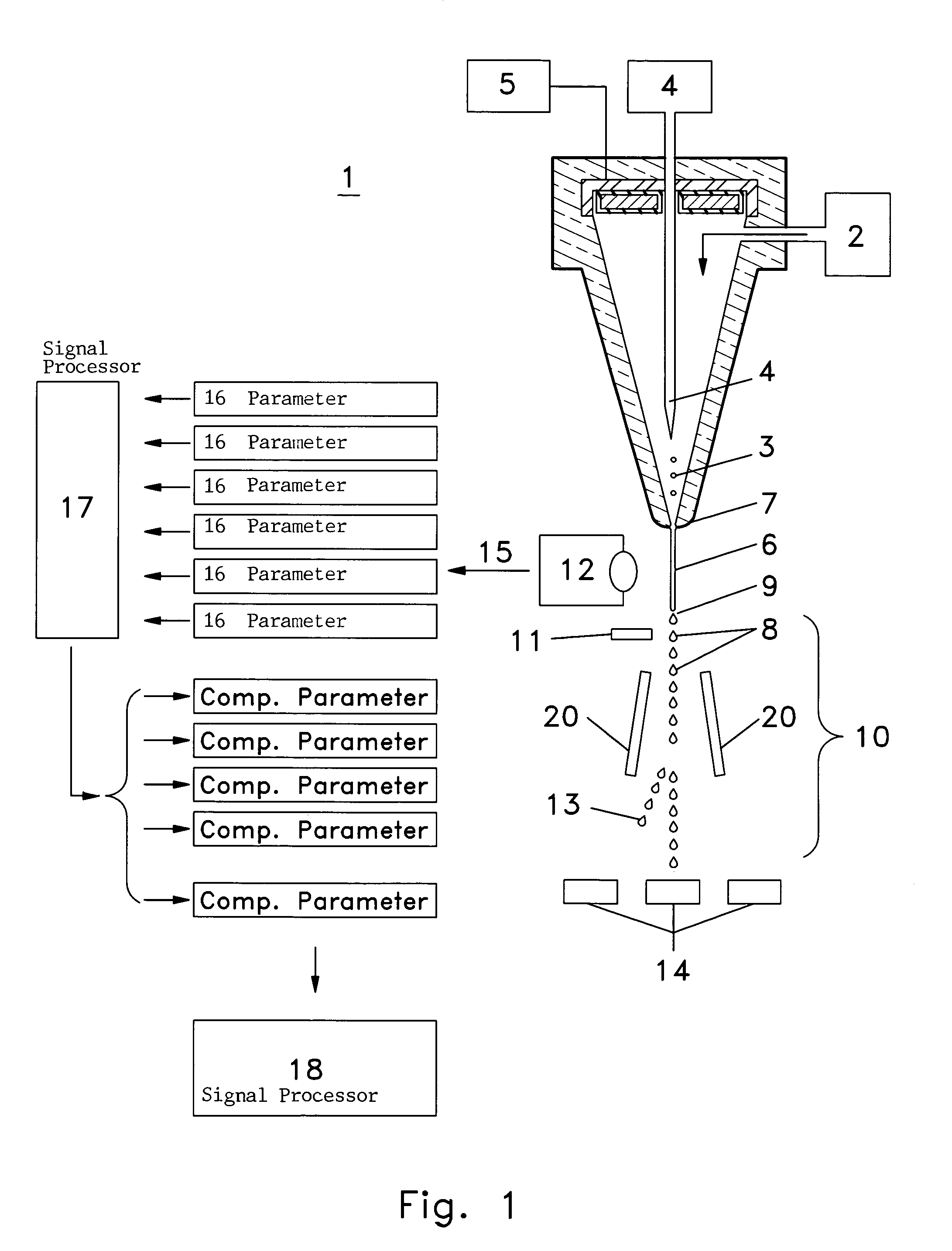

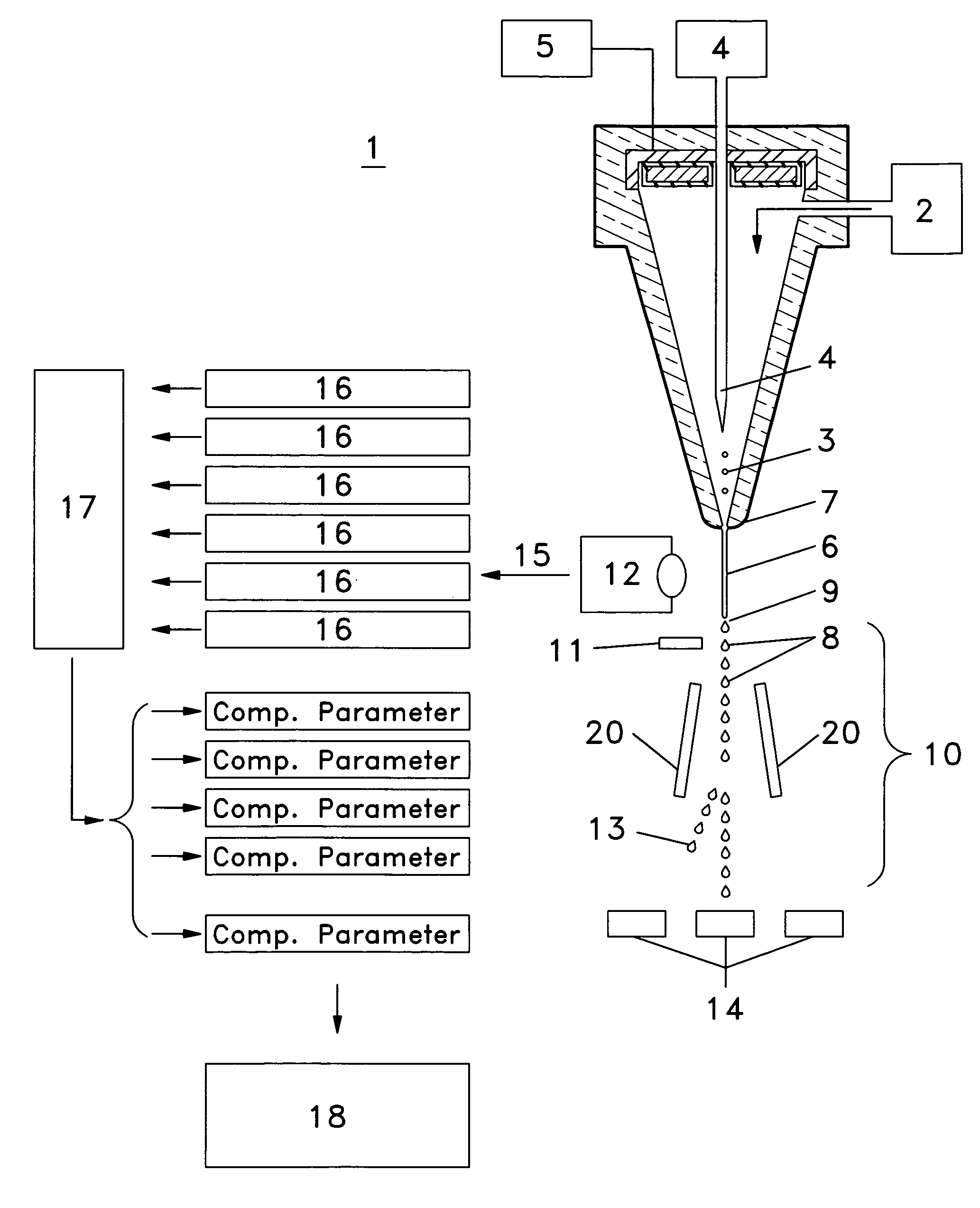

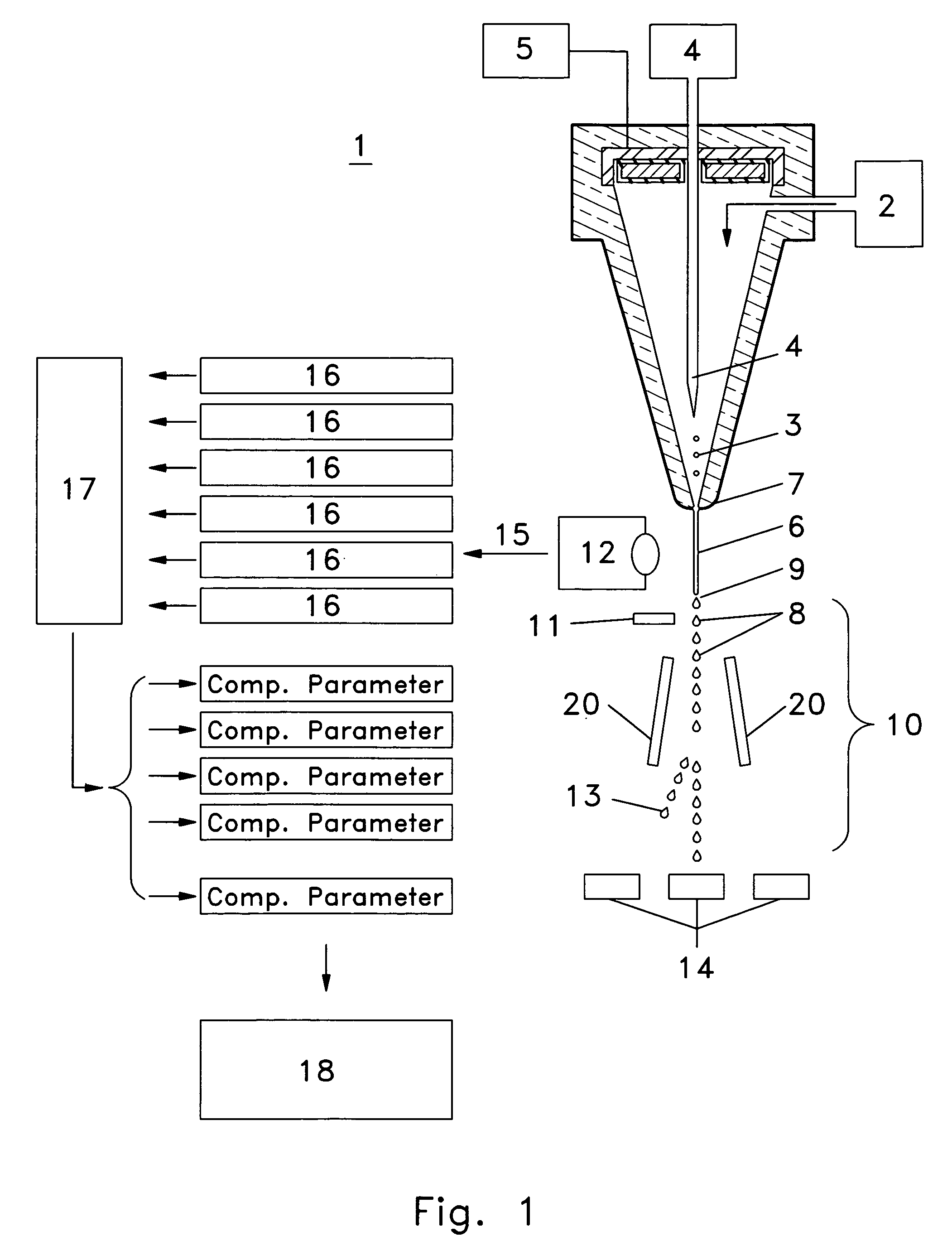

Transiently dynamic flow cytometer analysis system

Inactive · US7024316B1 · Good compensation · Short transition time · Flow properties · Volume/mass flow measurement · High rate · Computer science

A flow cytometry apparatus and methods to process information incident to particles or cells entrained in a sheath fluid stream, allowing assessment, differentiation, assignment, and separation of such particles or cells even at high rates of speed. A first signal processor, individually or in combination with at least one additional signal processor, applies a compensation transformation to data from a signal. The compensation transformation can involve complex operations on data from at least one signal to compensate for one or numerous operating parameters. Compensated parameters can be returned to the first signal processor to provide information upon which to define and differentiate particles from one another.

Owner:BECKMAN COULTER INC

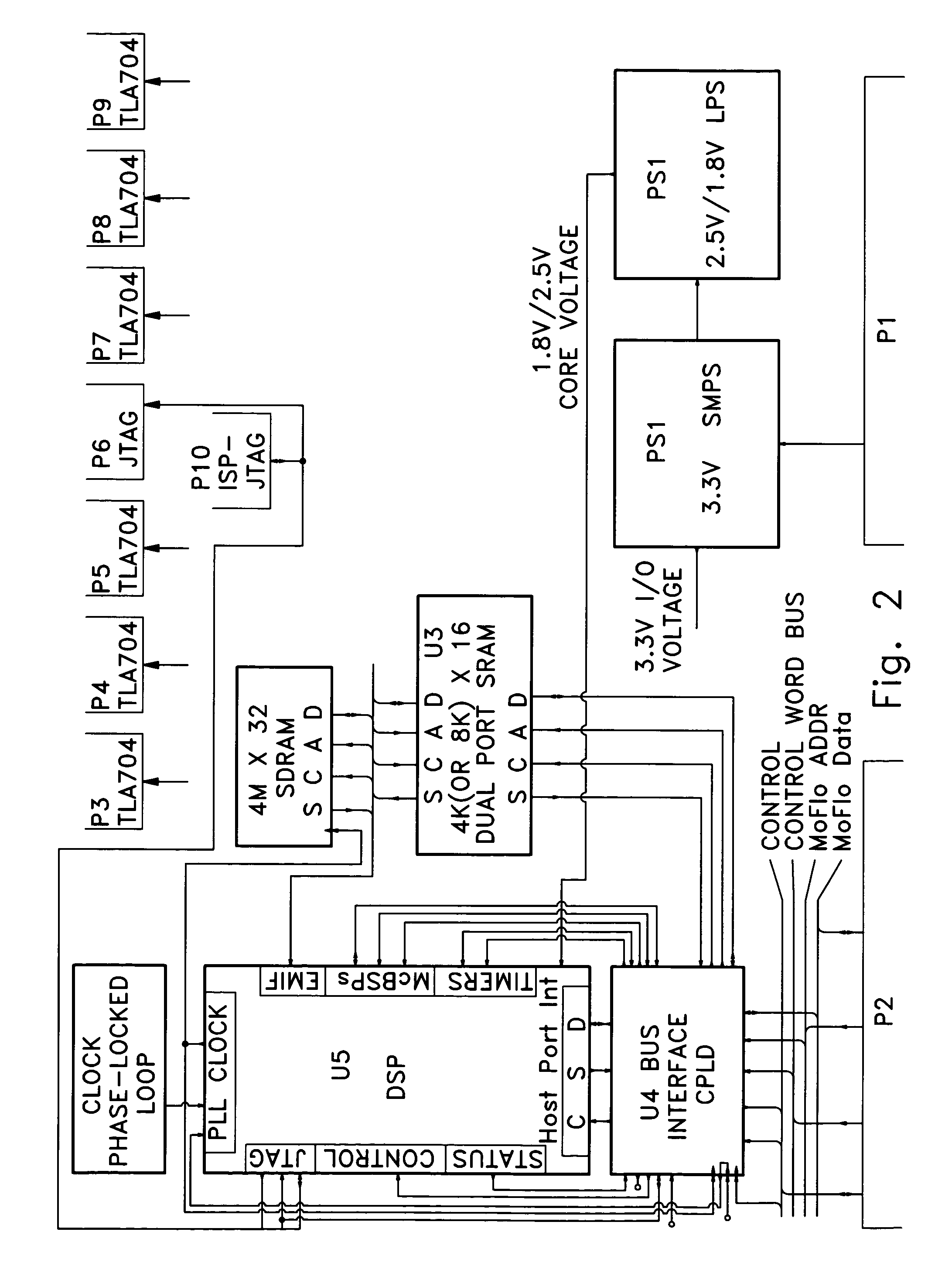

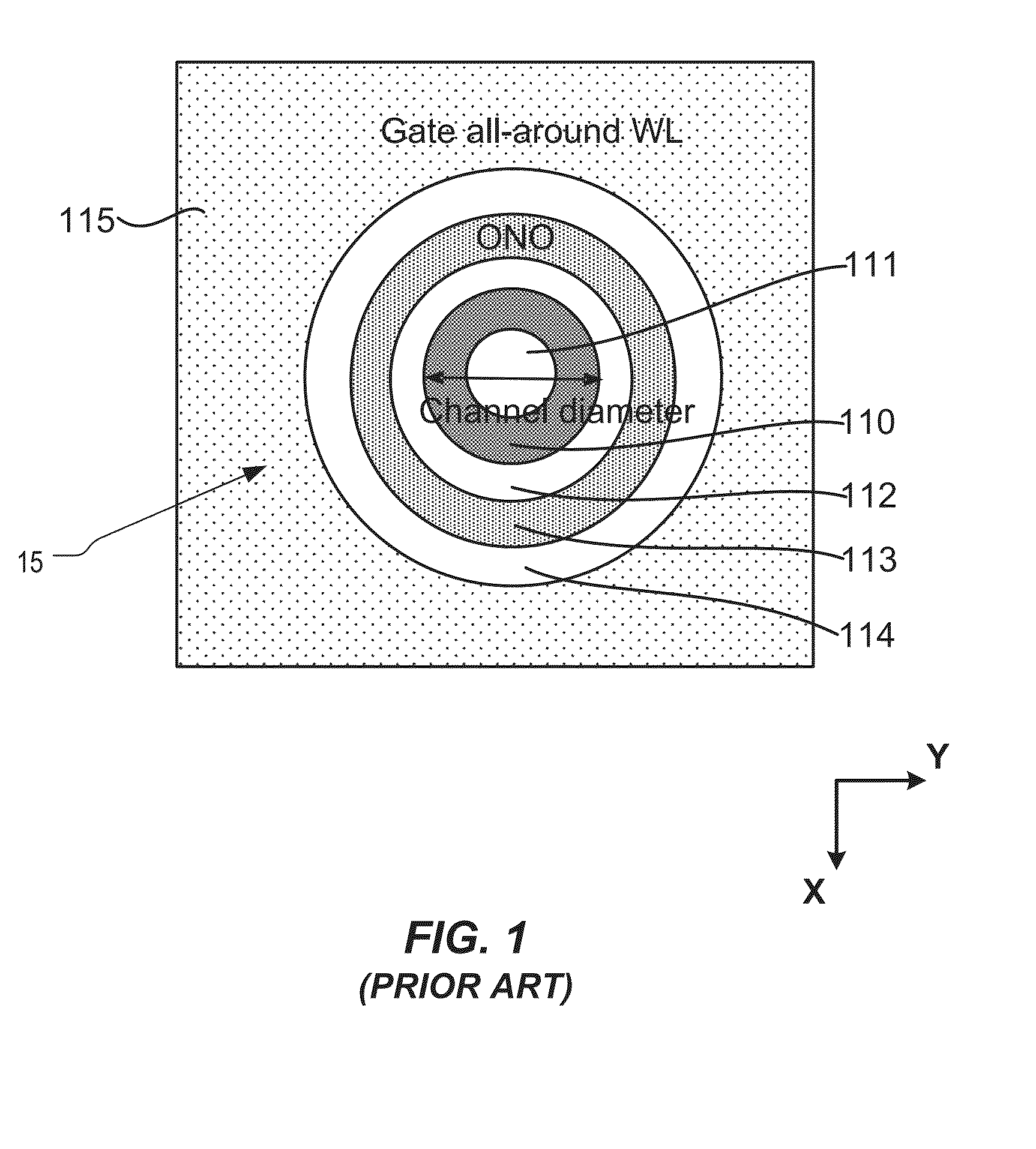

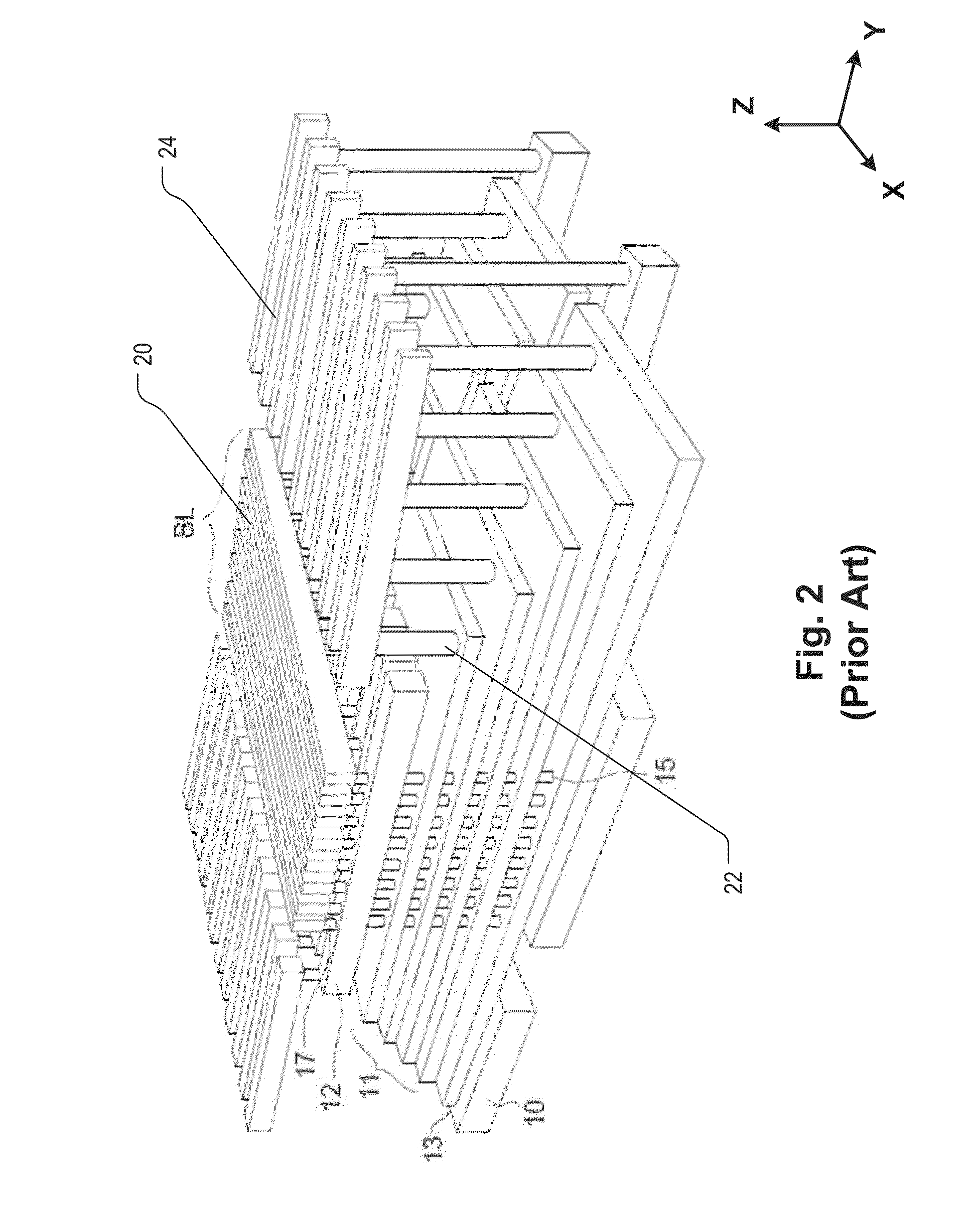

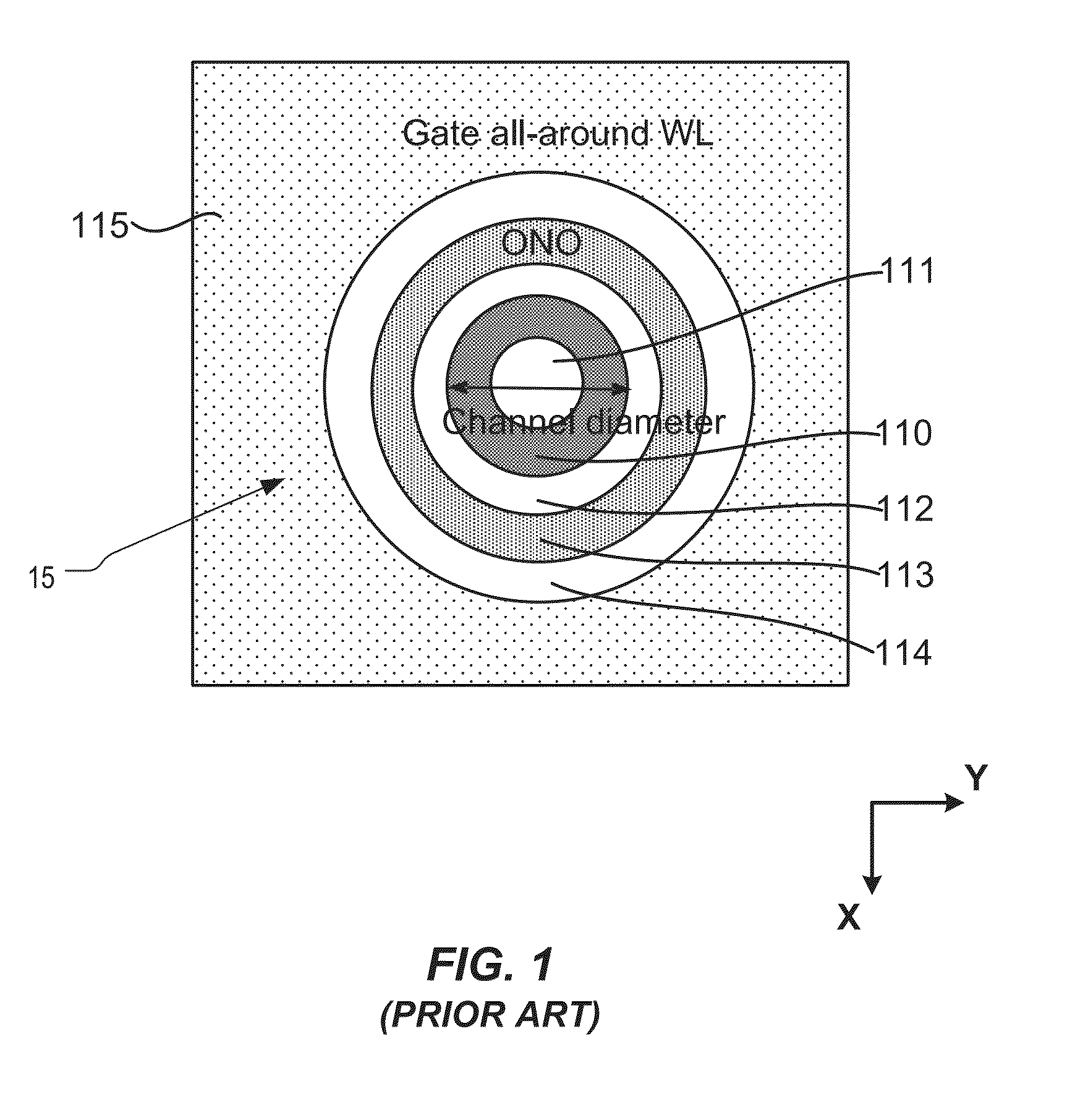

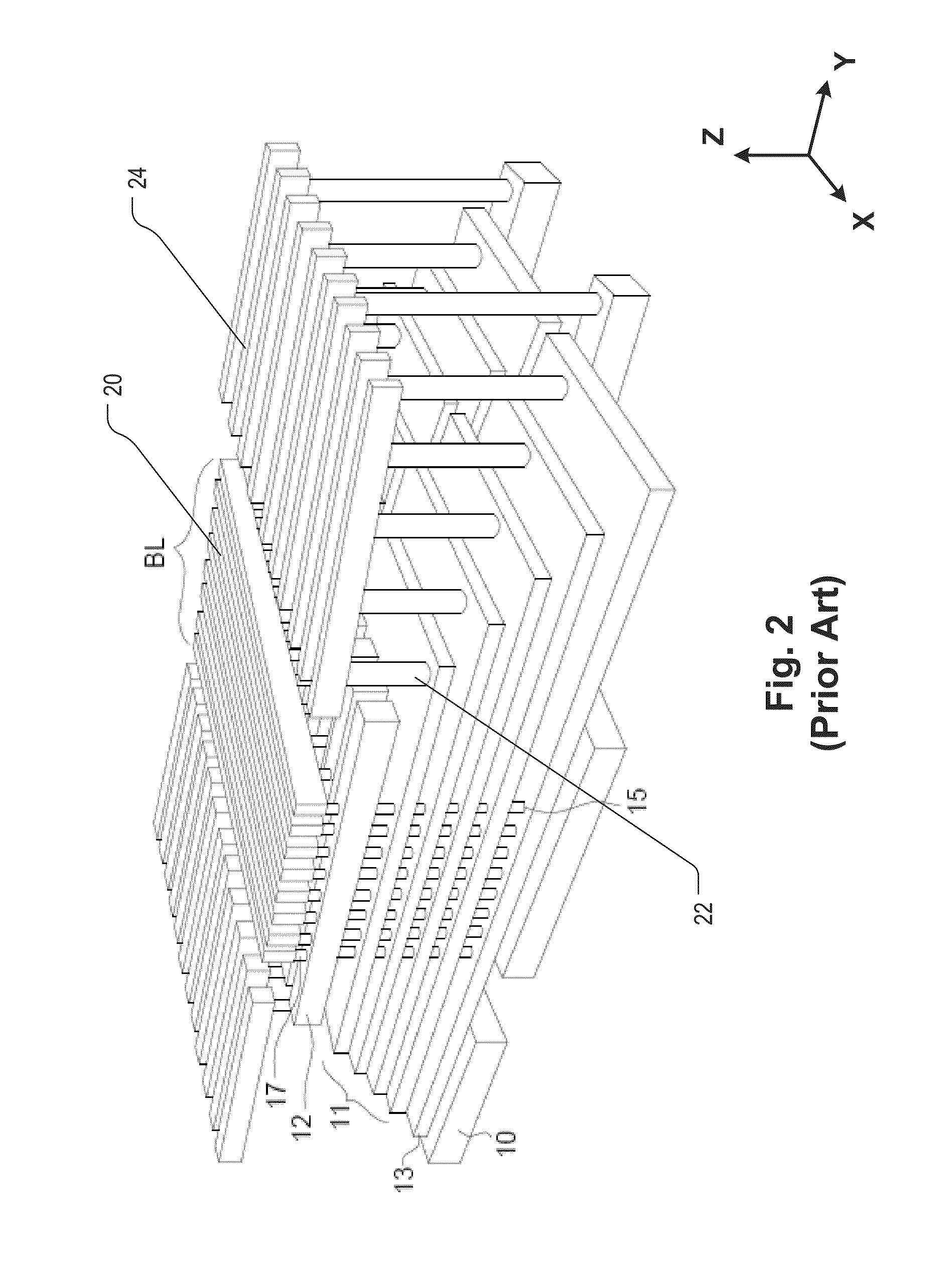

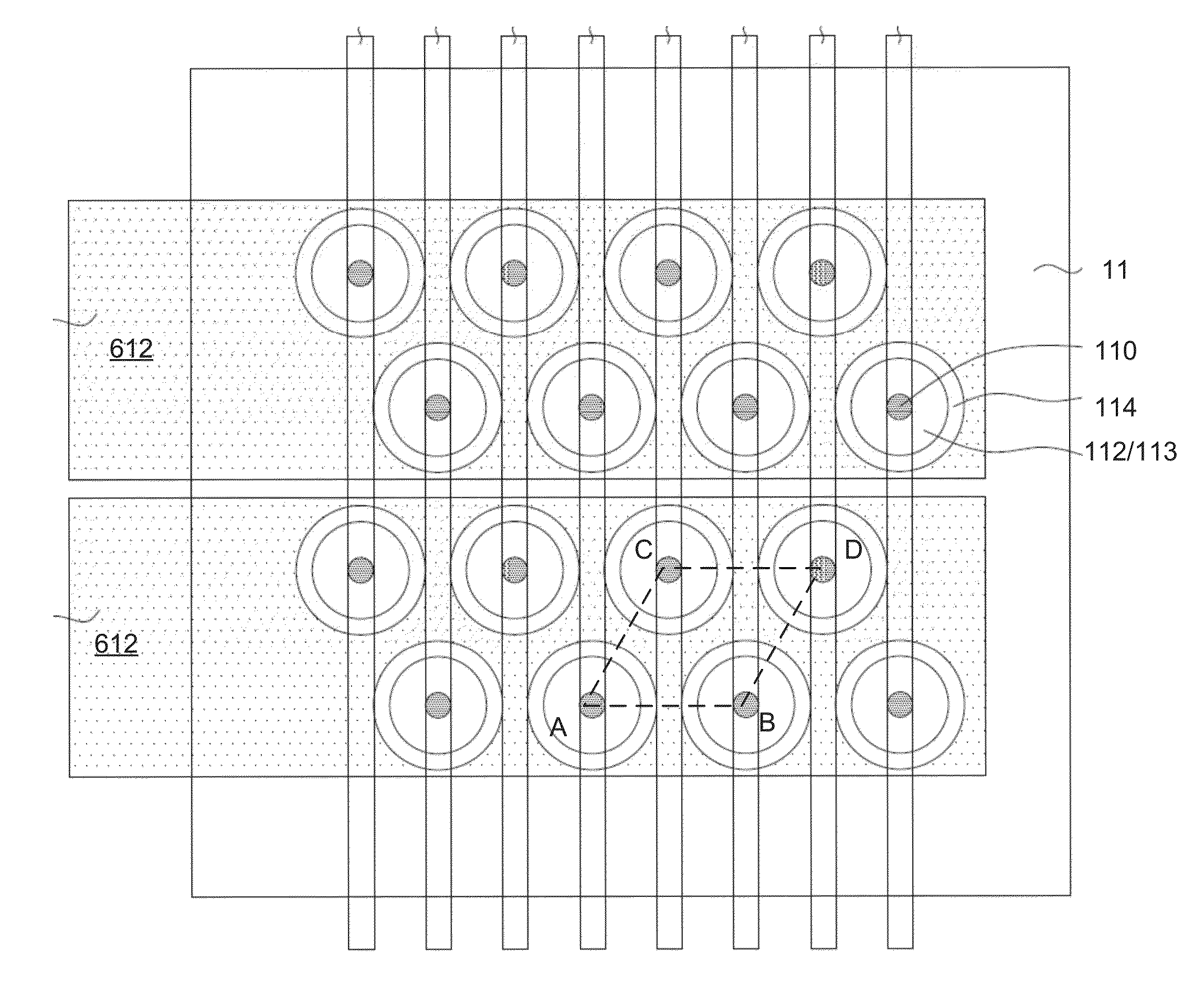

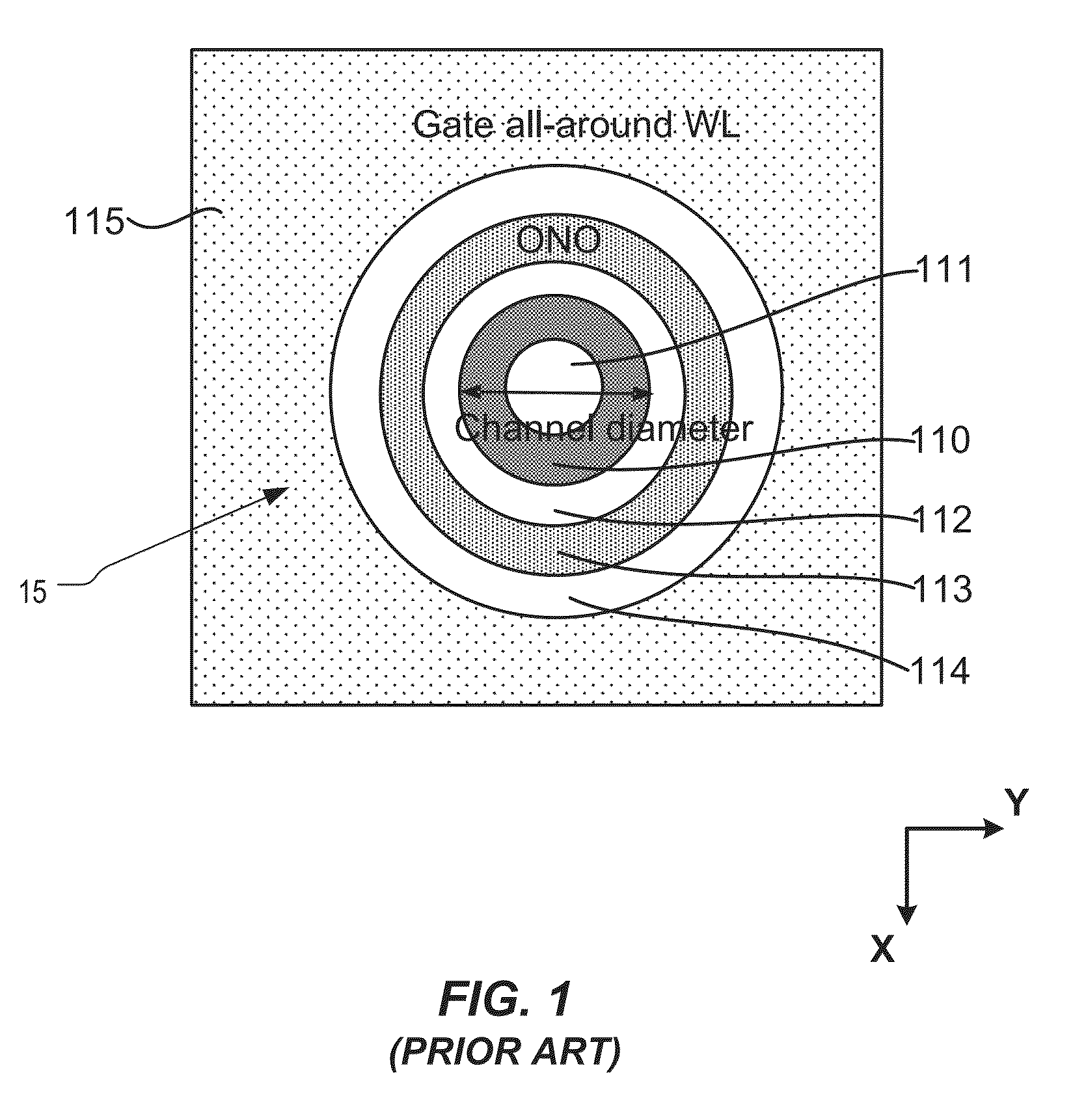

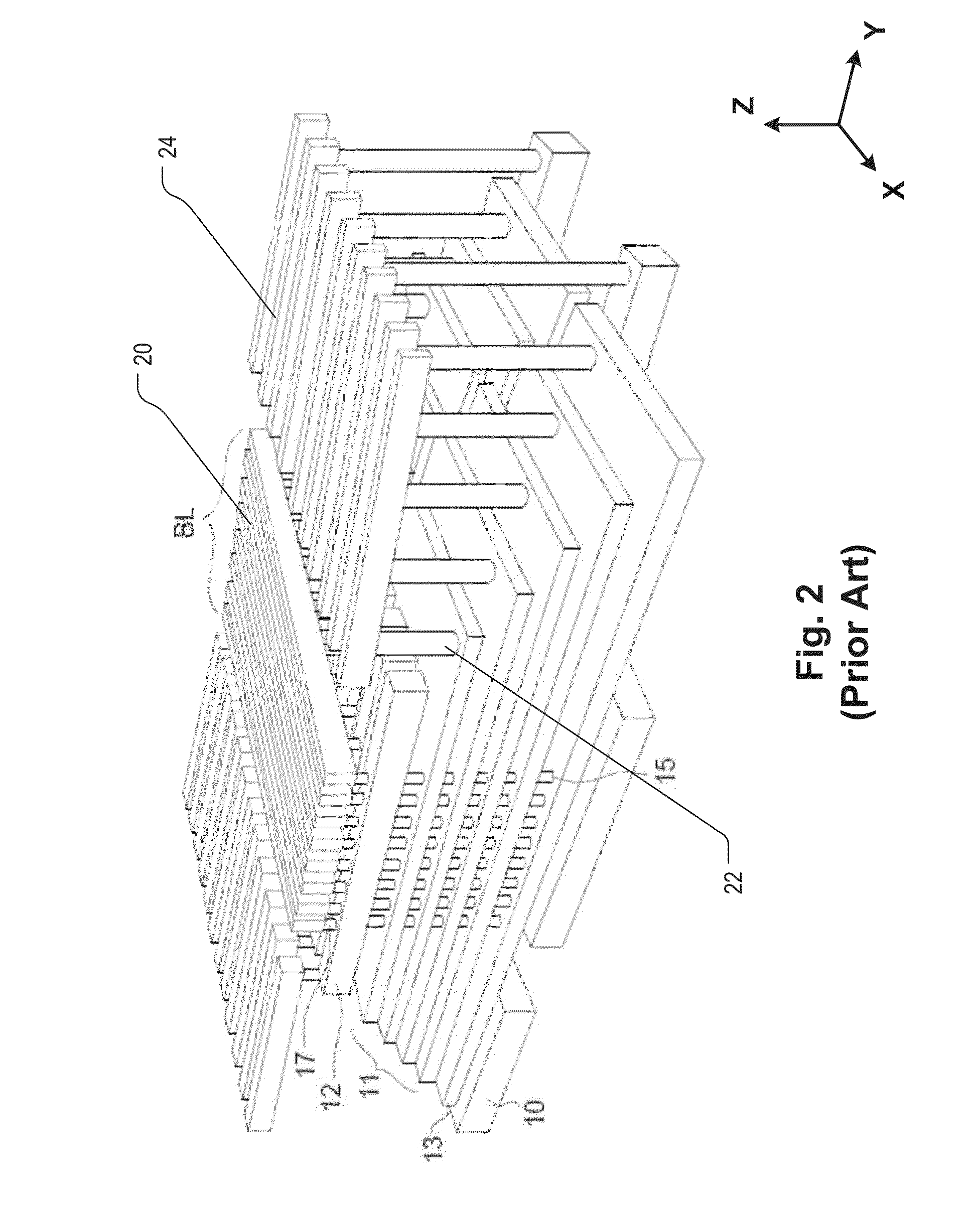

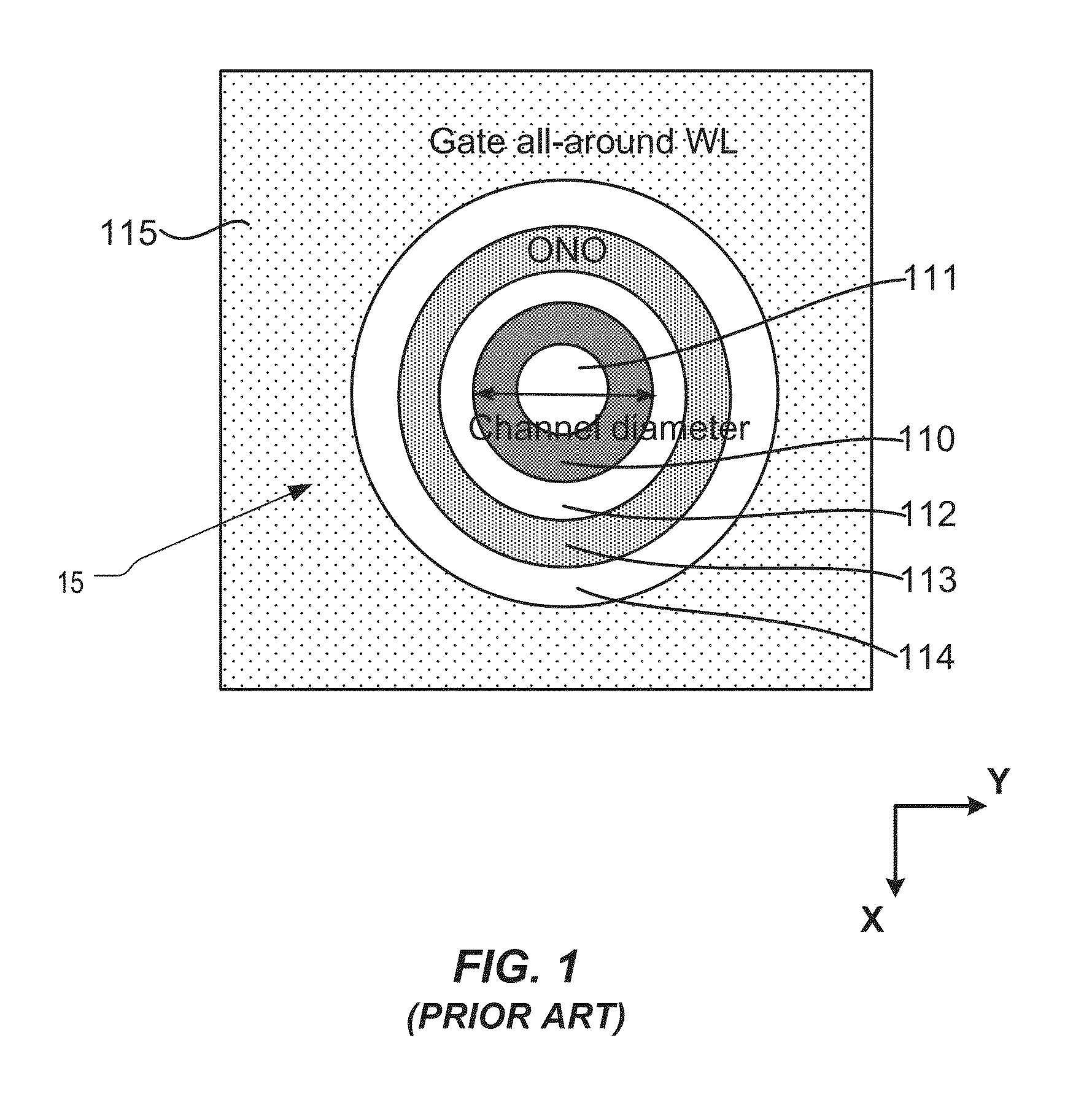

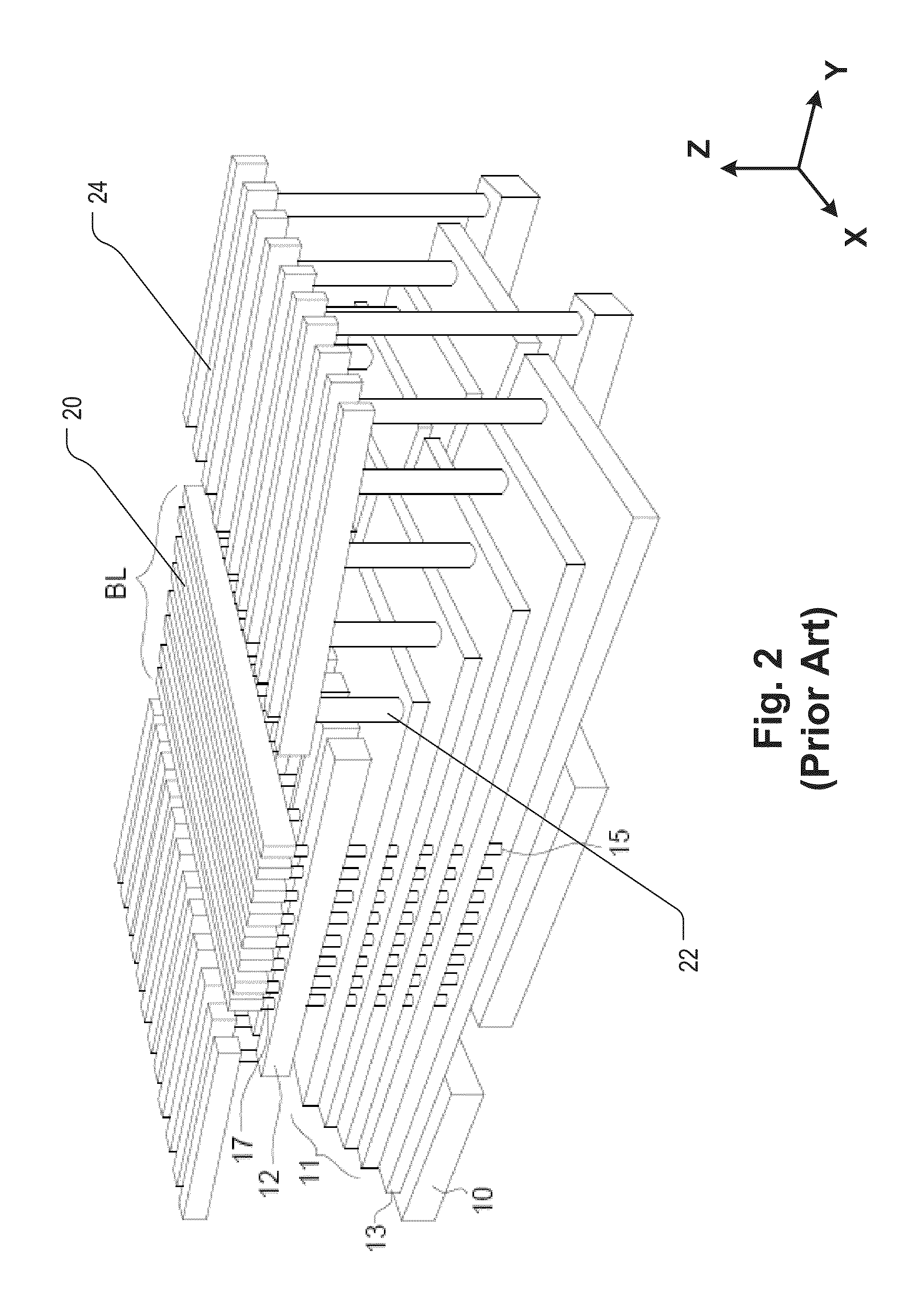

Parallelogram cell design for high speed vertical channel 3D NAND memory

Active · US20150206898A1 · Increase bit density · Reduce distractions · Solid-state devices · Semiconductor devices · Capacitance · Bit line

Roughly described, a memory device has a multilevel stack of conductive layers. Pillars oriented orthogonally to the substrate each include series-connected memory cells at cross-points between the pillars and the conductive layers. String select lines (SSLs) are disposed above the conductive layers, and bit lines are disposed above the SSLs. The pillars are arranged on a regular grid having a unit cell which is a non-rectangular parallelogram. The pillars may be arranged so as to define a number of parallel pillar lines, each having an acute angle θ > 0° relative to the bit line conductors, each line of pillars having n > 1 pillars intersecting a common one of the SSLs. The arrangement permits higher bit line density, a higher data rate due to increased parallelism, and a smaller number of SSLs, thereby reducing disturbance, reducing power consumption and reducing unit cell capacitance.

Owner:MACRONIX INT CO LTD

Systems for transiently dynamic flow cytometer analysis

Inactive · US20060259253A1 · "Cross talk" can be eliminated or minimized · Increase differentiation · Flow properties · Fluid pressure measurement by mechanical elements · High rate · Computer science

A flow cytometry apparatus and methods to process information incident to particles or cells entrained in a sheath fluid stream, allowing assessment, differentiation, assignment, and separation of such particles or cells even at high rates of speed. A first signal processor, individually or in combination with at least one additional signal processor, applies a compensation transformation to data from a signal. The compensation transformation can involve complex operations on data from at least one signal to compensate for one or numerous operating parameters. Compensated parameters can be returned to the first signal processor to provide information upon which to define and differentiate particles from one another.

Owner:BECKMAN COULTER INC

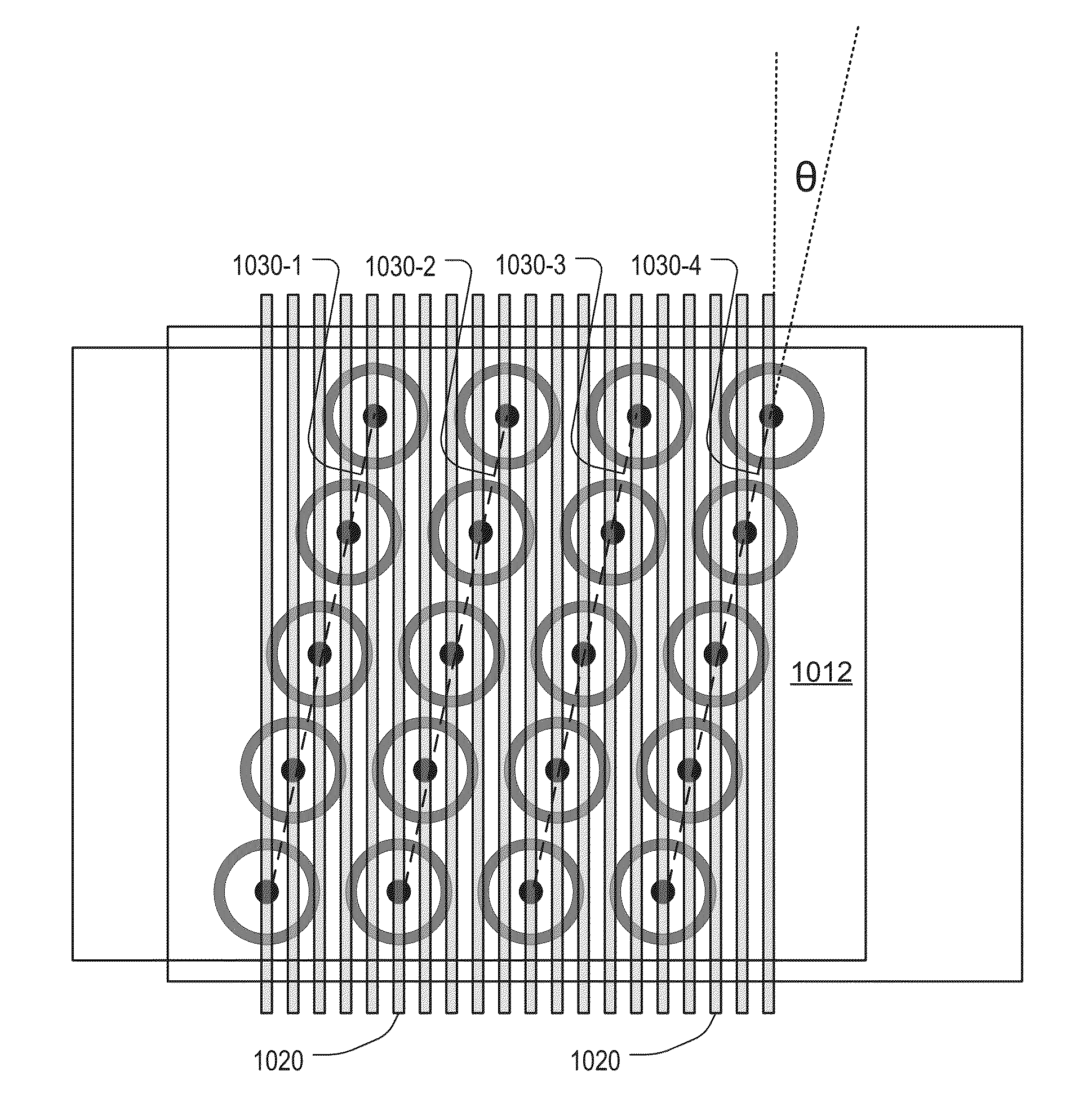

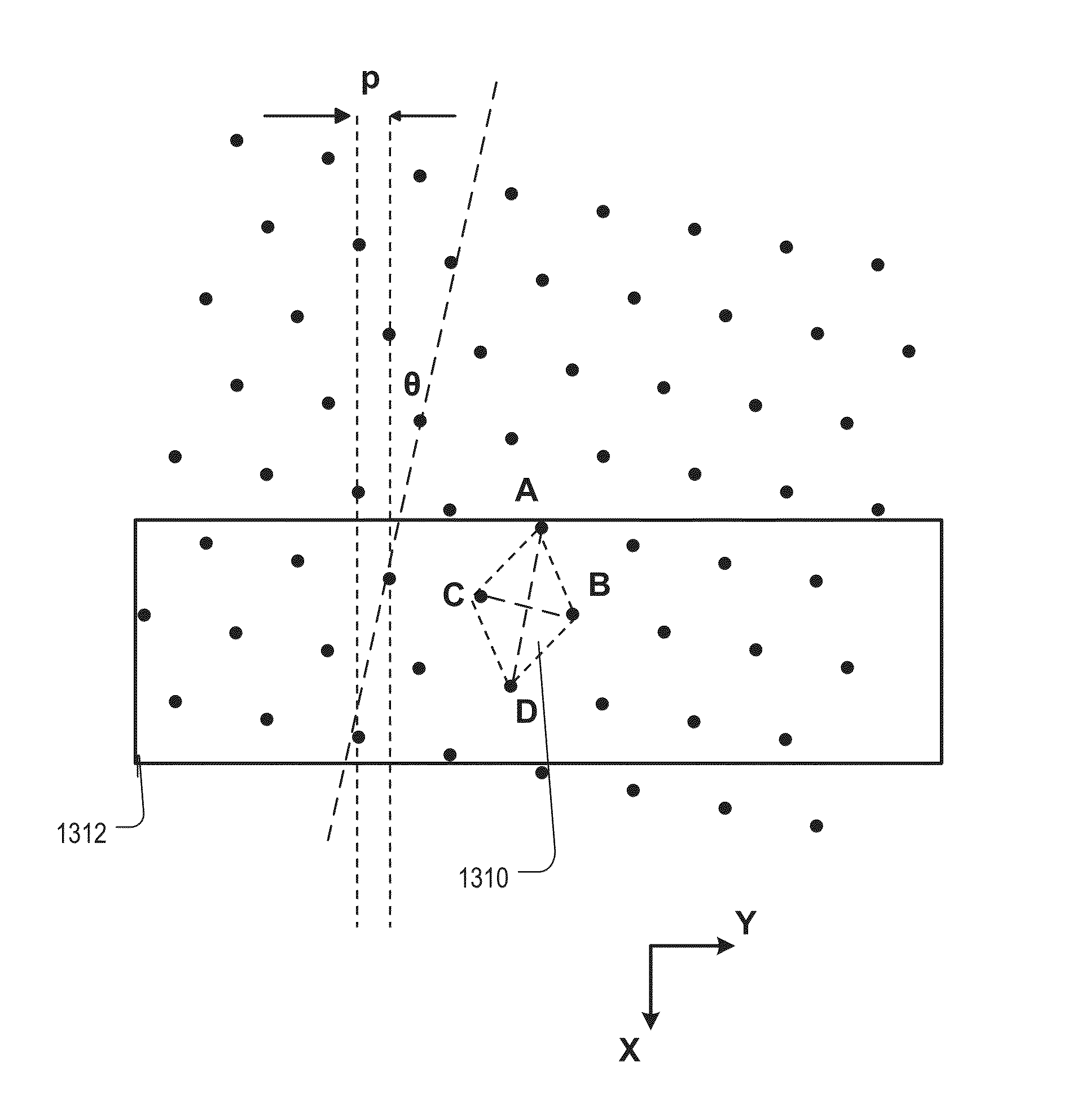

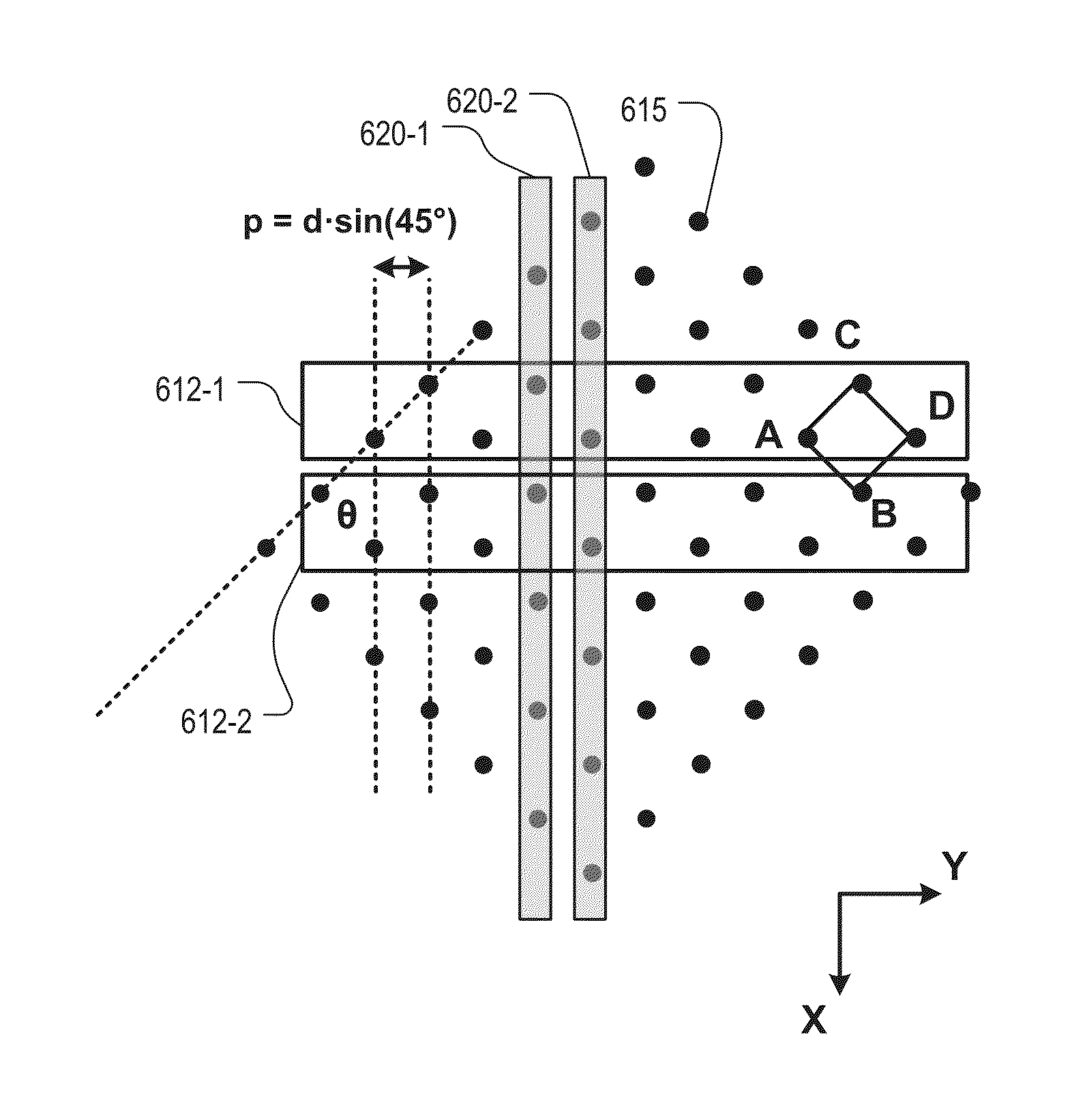

Twisted array design for high speed vertical channel 3D NAND memory

Active · US20150206899A1 · High bit density · High data rate · Semiconductor/solid-state device details · Solid-state devices · Physics · Electrically conductive

Roughly described, a memory device has a multilevel stack of conductive layers. Vertically oriented pillars each include series-connected memory cells at cross-points between the pillars and the conductive layers. SSLs run above the conductive layers, each intersection of a pillar and an SSL defining a respective select gate of the pillar. Bit lines run above the SSLs. The pillars are arranged on a regular grid which is rotated relative to the bit lines. The grid may have a square, rectangle or diamond-shaped unit cell, and may be rotated relative to the bit lines by an angle θ where tan(θ)=±X / Y, where X and Y are co-prime integers. The SSLs may be made wide enough so as to intersect two pillars on one side of the unit cell, or all pillars of the cell, or sufficiently wide as to intersect pillars in two or more non-adjacent cells.

Owner:MACRONIX INT CO LTD
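
The rotation rule in the abstract above, tan(θ) = ±X / Y with co-prime integers X and Y, can be illustrated with a small sketch. The function names, the unit pitch and the square unit cell are assumptions made for illustration; the patent itself does not prescribe any code.

```python
import math


def rotation_angle_deg(x: int, y: int) -> float:
    """Return the angle θ in degrees with tan(θ) = x / y.

    x and y are assumed to be positive, co-prime integers, as in the abstract.
    """
    if x <= 0 or y <= 0:
        raise ValueError("x and y must be positive integers")
    if math.gcd(x, y) != 1:
        raise ValueError("x and y must be co-prime")
    return math.degrees(math.atan2(x, y))


def rotated_grid(x: int, y: int, rows: int, cols: int, pitch: float = 1.0):
    """Pillar centres of a square grid rotated by θ relative to the bit lines.

    The square unit cell and the pitch value are illustrative assumptions.
    """
    theta = math.radians(rotation_angle_deg(x, y))
    c, s = math.cos(theta), math.sin(theta)
    return [
        (pitch * (i * c - j * s), pitch * (i * s + j * c))
        for i in range(rows)
        for j in range(cols)
    ]
```

For example, `rotation_angle_deg(1, 2)` gives an angle of roughly 26.57 degrees, and `rotated_grid(1, 2, 4, 4)` lists sixteen pillar positions on the rotated grid.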

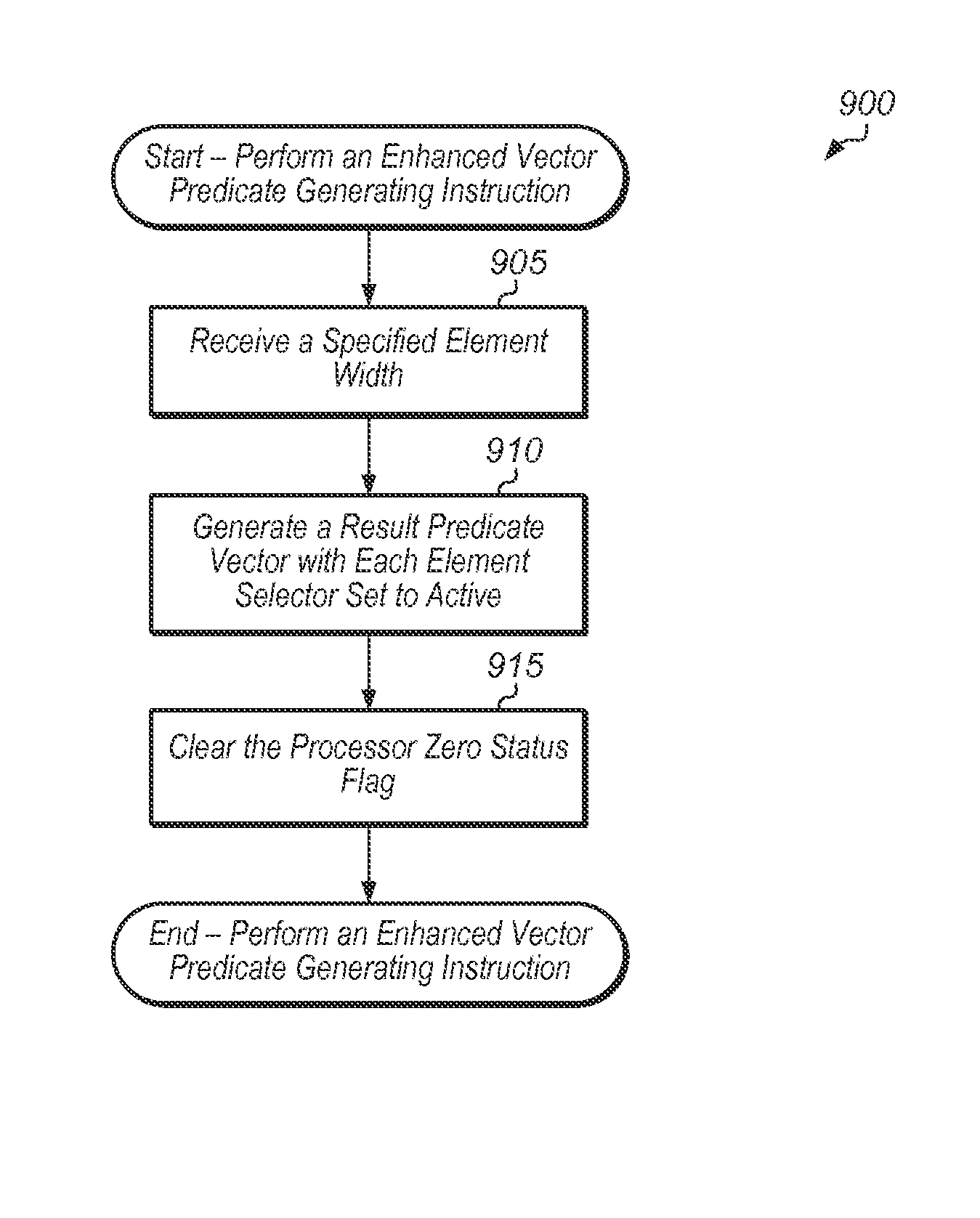

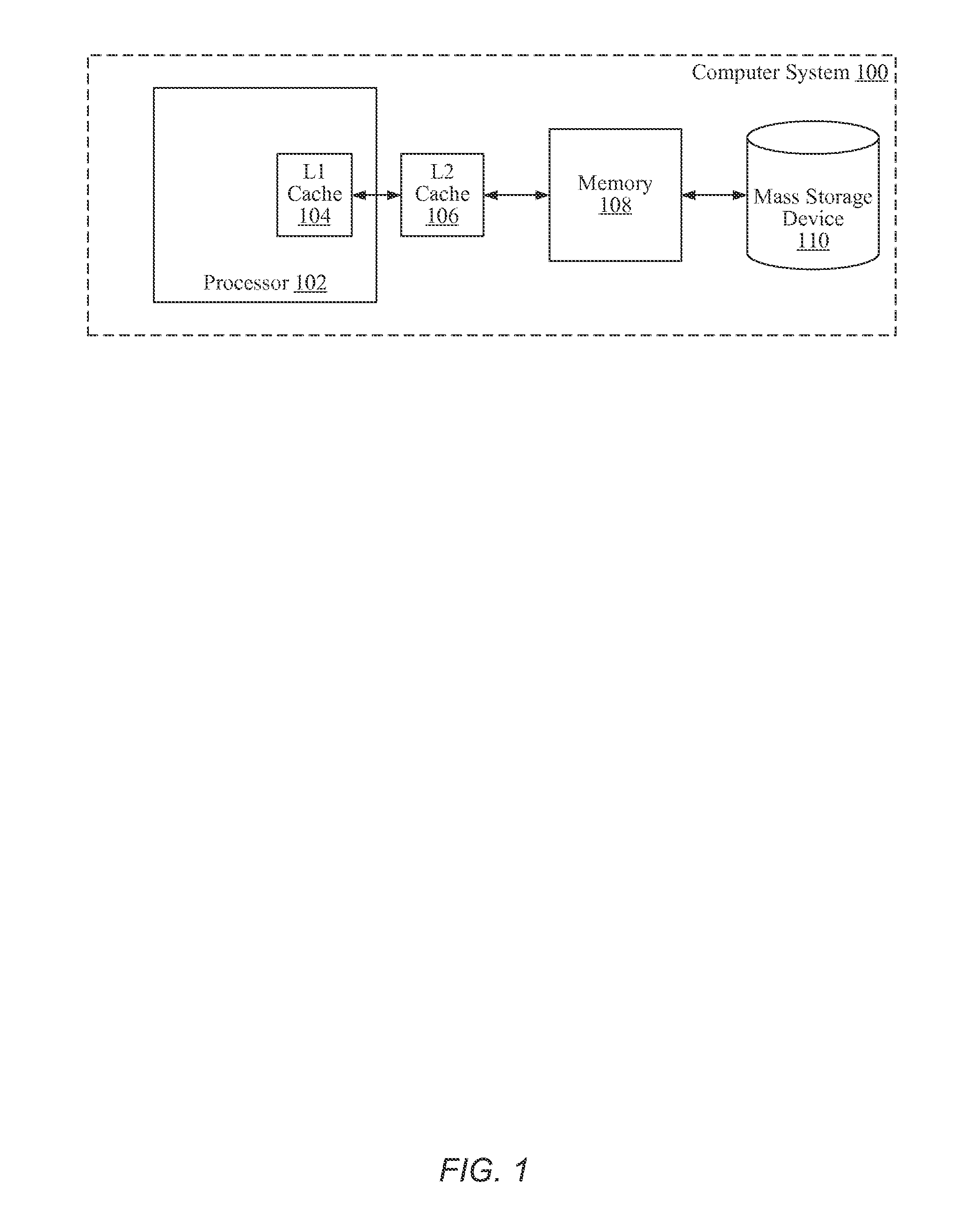

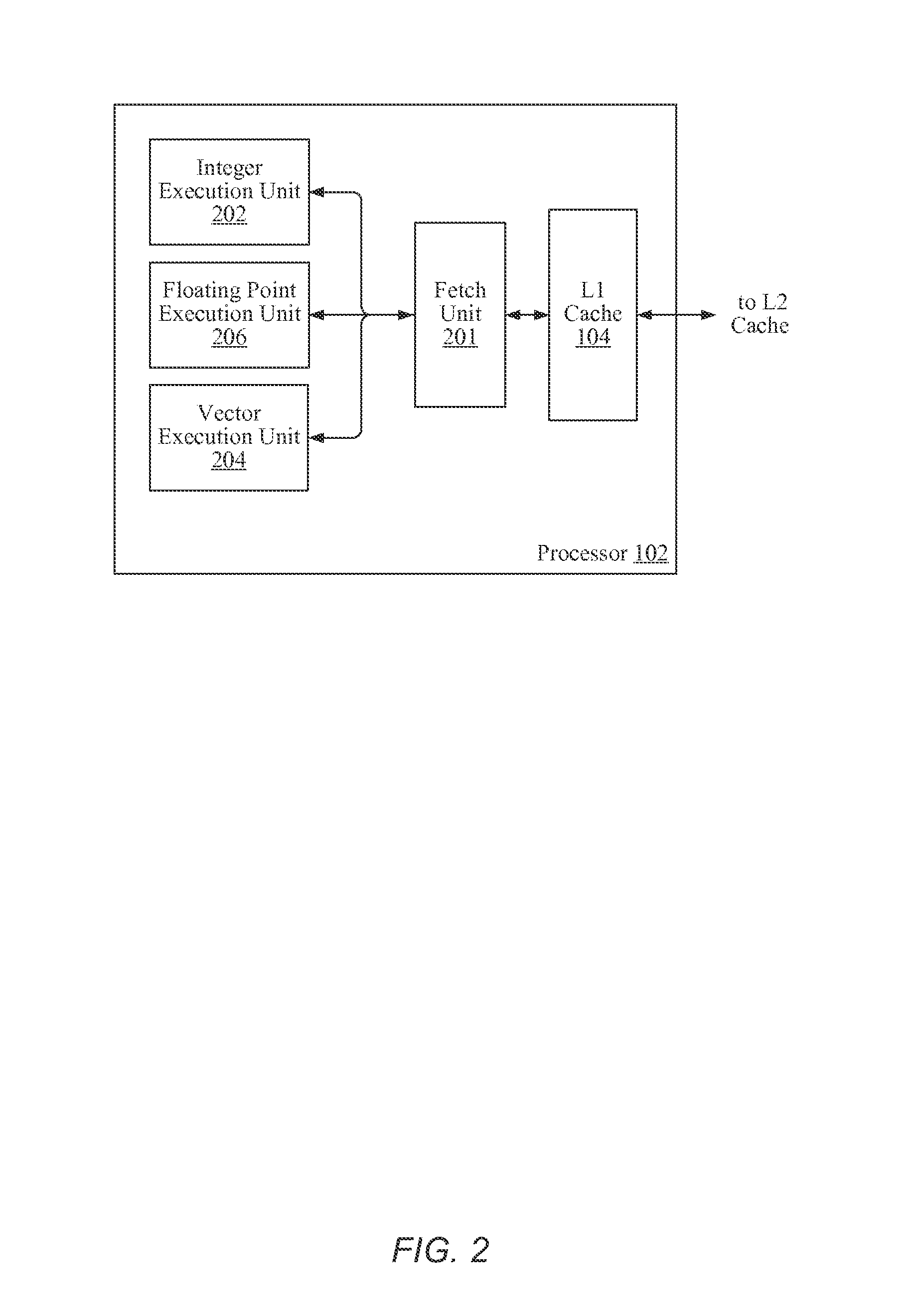

Enhanced vector true/false predicate-generating instructions

Inactive · US20140289502A1 · Increase parallelism · Easy to operate · Digital computer details · Specific program execution arrangements · Vector size · Euclidean vector

Systems, apparatuses and methods for utilizing enhanced vector true/false instructions. The enhanced vector true/false instructions generate enhanced predicates that correspond to the requested element width and/or vector size. A vector true instruction generates an enhanced predicate in which all elements supported by the processing unit are active. A vector false instruction generates an enhanced predicate in which all elements supported by the processing unit are inactive. The enhanced predicate specifies the requested element width in addition to designating the element selectors.

Owner:APPLE INC

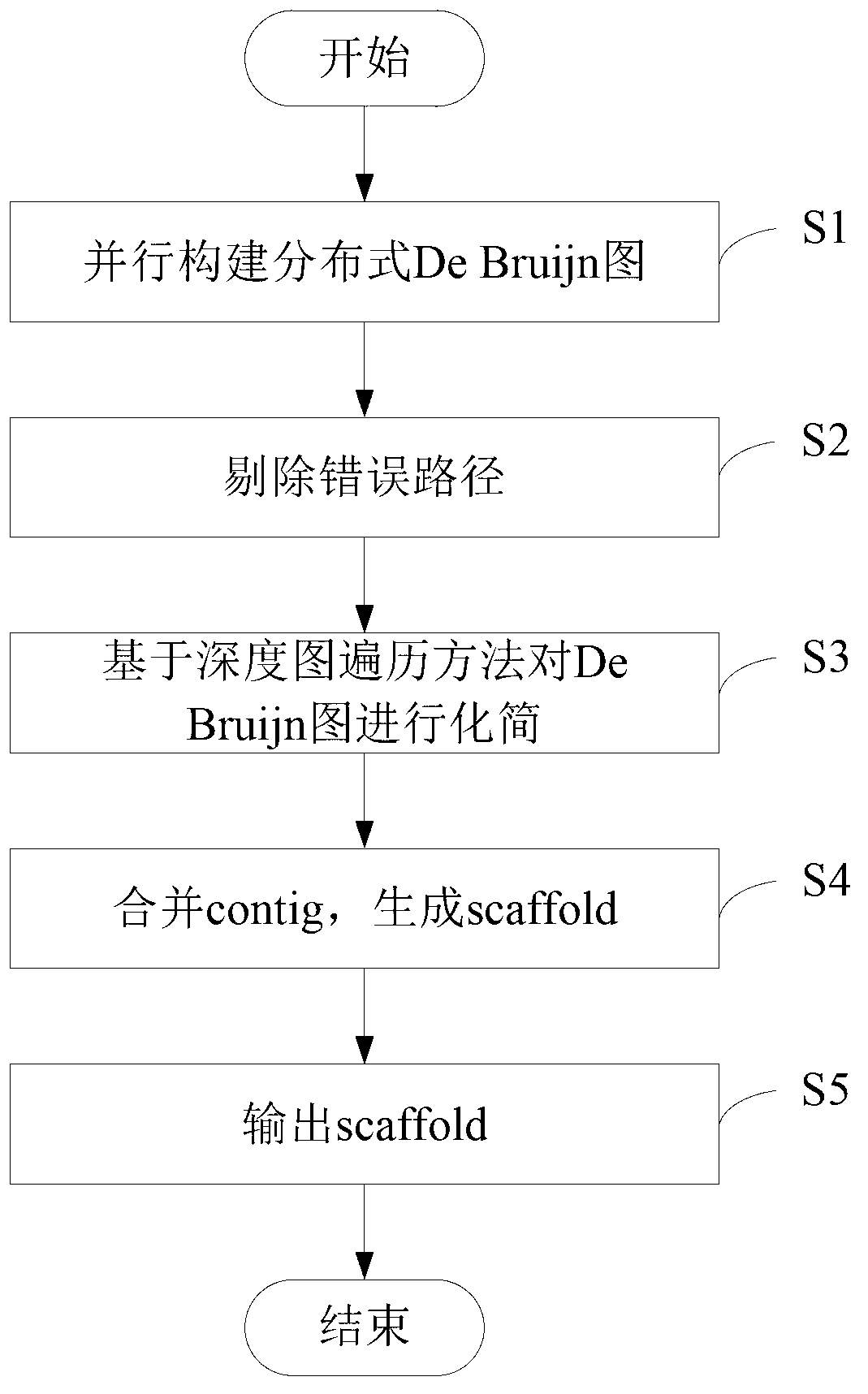

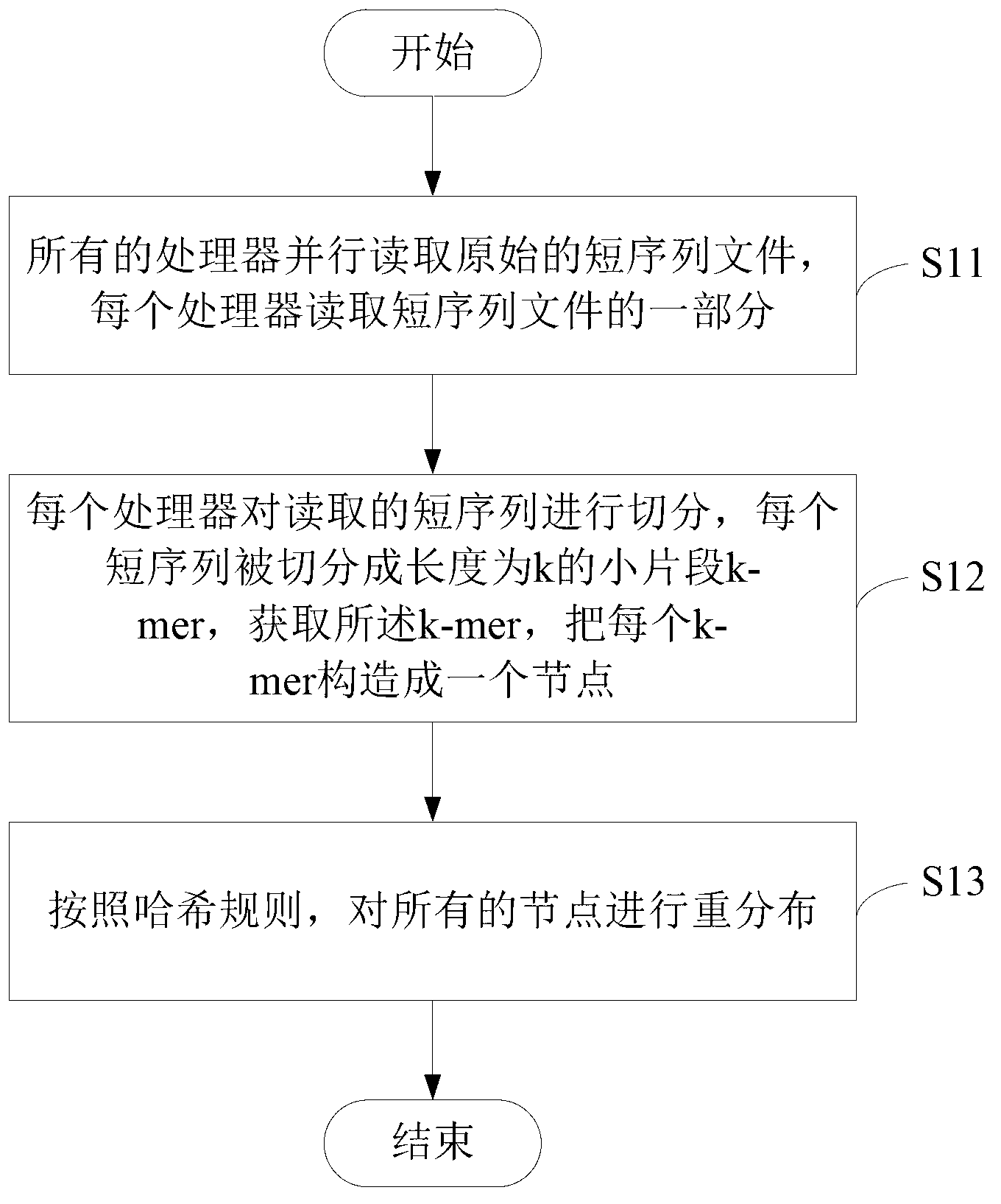

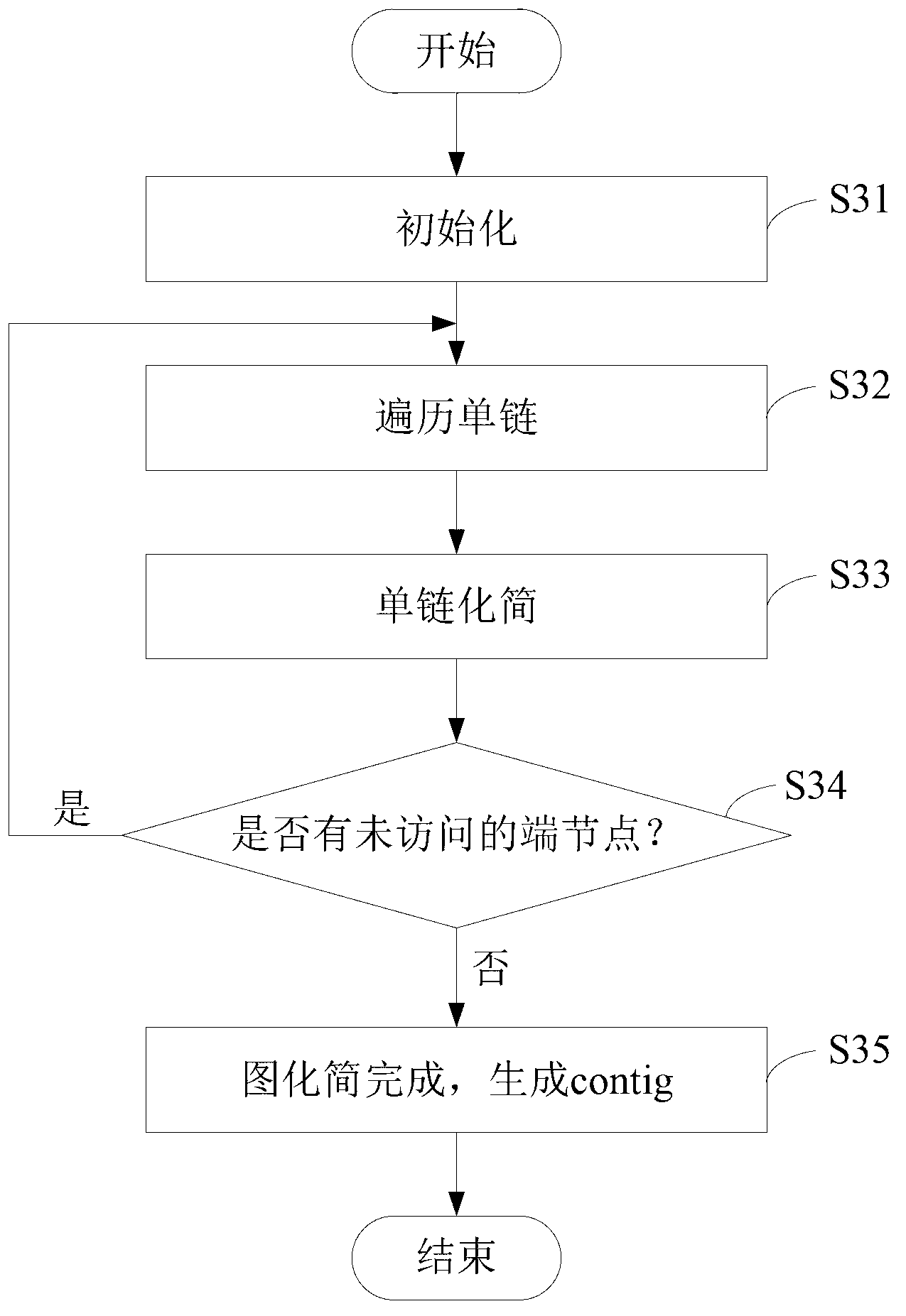

Parallel gene splicing method based on De Bruijn graph

Active · CN103258145A · The simplification process is simple · Increase parallelism · Special data processing applications · Degree of parallelism · Graph traversal

The invention relates to the technical field of gene sequencing and provides a parallel gene splicing method based on a De Bruijn graph. The method comprises the following steps: S1, a distributed De Bruijn graph is built in parallel; S2, error paths are removed; S3, the De Bruijn graph is simplified on the basis of a depth graph traversal method; S4, contigs are combined and scaffolds are generated; S5, the scaffolds are output. The method runs on a cluster system and builds the De Bruijn graph in parallel, which solves the problem that traditional single-machine serial gene splicing algorithms cannot build the graph or carry out further processing when the data volume of a large genome is too large. In the simplification stage, parallel simplification based on depth graph traversal is carried out, so the graph simplification process is simple, the degree of parallelism is high, and the splicing speed is high.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
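
Step S1 above can be sketched as follows. This is a minimal illustration, not the patented procedure: the round-robin split of reads, the function names and the in-process merge are all assumptions, and a real cluster implementation would build each partial graph on a separate node.

```python
from collections import defaultdict


def build_de_bruijn(reads, k):
    """Build a De Bruijn graph: nodes are (k-1)-mers, each k-mer adds one edge."""
    graph = defaultdict(list)
    for read in reads:
        for i in range(len(read) - k + 1):
            kmer = read[i:i + k]
            graph[kmer[:-1]].append(kmer[1:])  # prefix -> suffix
    return graph


def build_distributed(reads, k, workers):
    """Split the reads into `workers` chunks, build partial graphs, then merge.

    Each partial graph depends only on its own chunk, so the partial builds
    are independent and could run on different machines (assumed here).
    """
    chunks = [reads[w::workers] for w in range(workers)]
    partials = [build_de_bruijn(chunk, k) for chunk in chunks]
    merged = defaultdict(list)
    for partial in partials:
        for node, successors in partial.items():
            merged[node].extend(successors)
    return merged
```

Because every k-mer contributes exactly one edge regardless of which chunk holds its read, the merged graph contains the same edges as a single-machine build.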

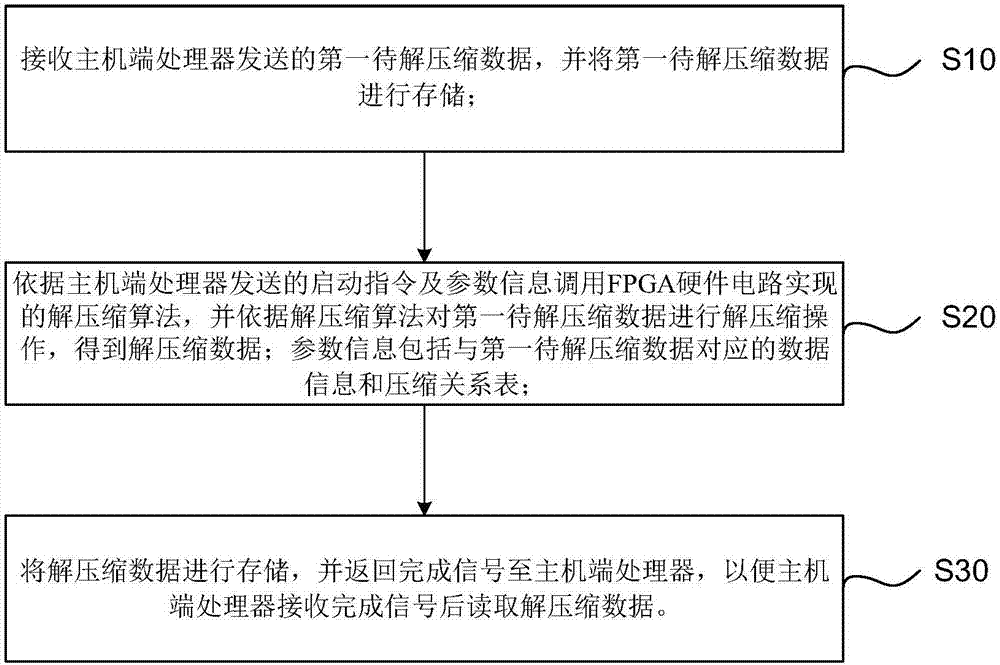

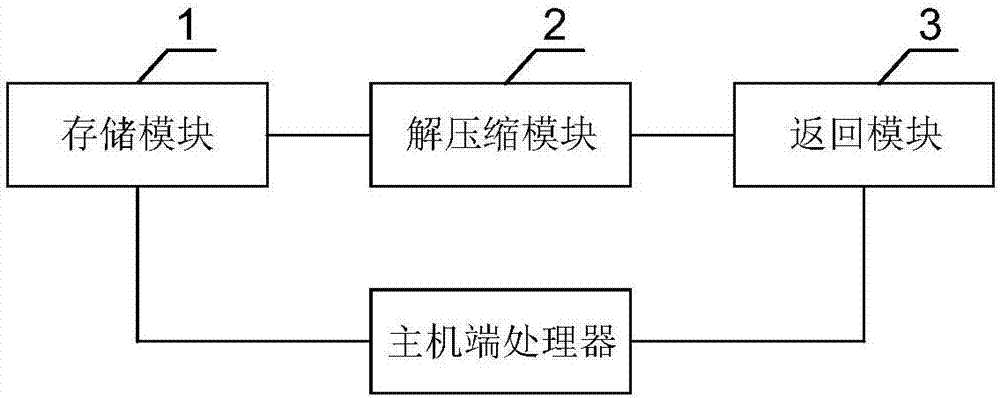

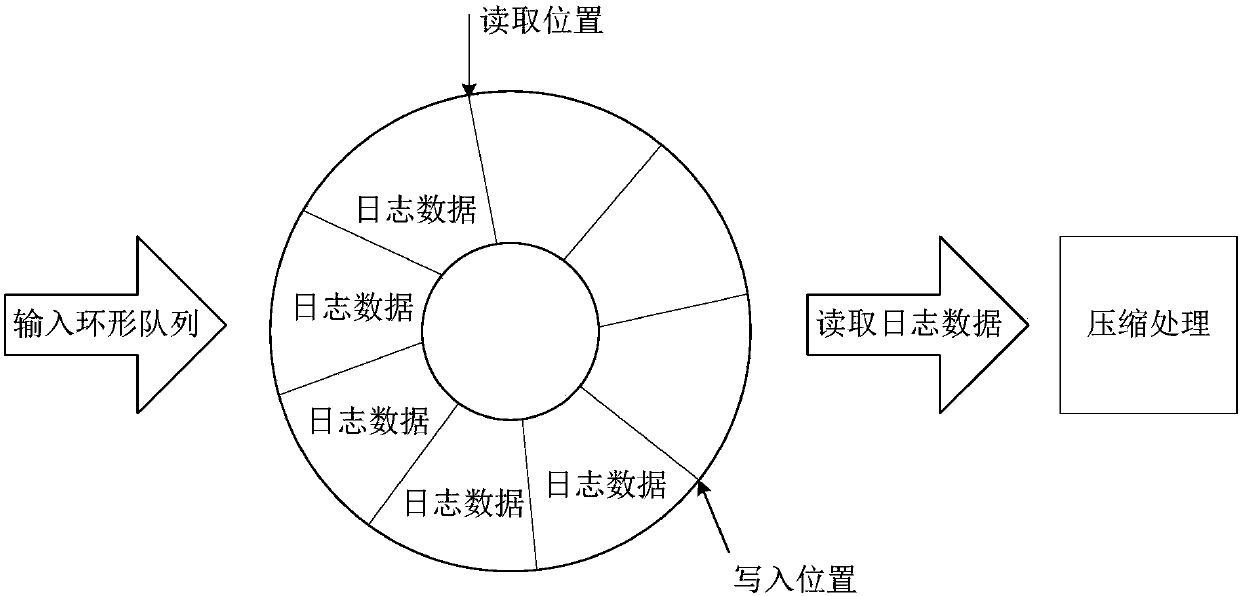

Decompression method, device and system for FPGA heterogeneous acceleration platform

Inactive · CN107027036A · Increase parallelism · Accelerate · Digital video signal modification · Selective content distribution · Data information · Hardware circuits

The embodiment of the invention discloses a decompression method, device and system for an FPGA heterogeneous acceleration platform. The method comprises the following steps: receiving first data to be decompressed sent by a host side processor and storing the first data to be decompressed; calling a decompression algorithm implemented by an FPGA hardware circuit according to a start-up instruction and parameter information sent by the host side processor, and decompressing the first data to be decompressed based on the decompression algorithm to obtain decompressed data, wherein the parameter information contains data information corresponding to the first data to be decompressed and a compression relation table; and storing the decompressed data, and returning a completion signal to the host side processor to ensure that the host side processor receives the completion signal and then reads the decompressed data. According to the decompression method, device and system disclosed by the invention, the decompression speed can be increased during use, and the power consumption required during decompression can be reduced.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

Parallelogram cell design for high speed vertical channel 3D NAND memory

Active · US9219073B2 · Increase bit density · Increase data rate · Solid-state devices · Semiconductor devices · Capacitance · Electrical conductor

Owner:MACRONIX INT CO LTD

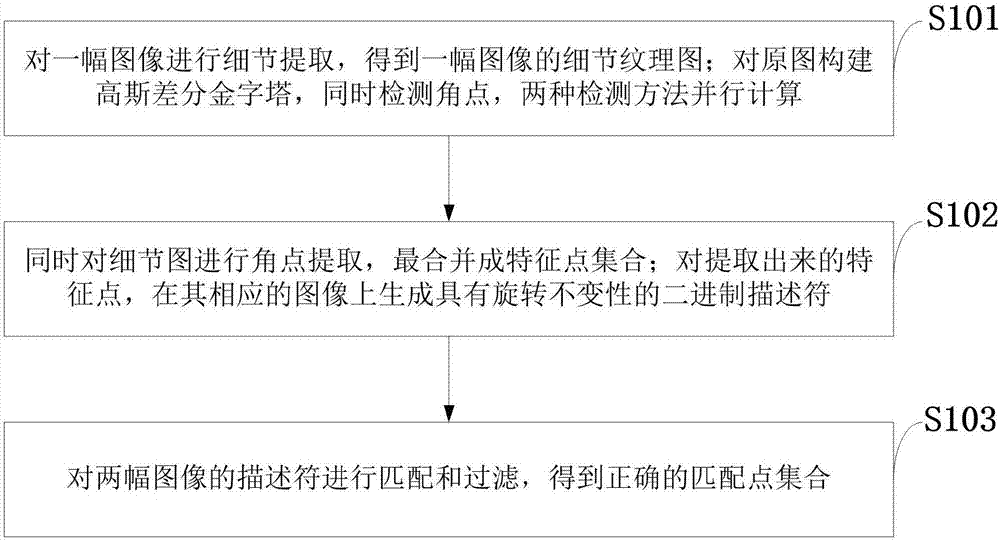

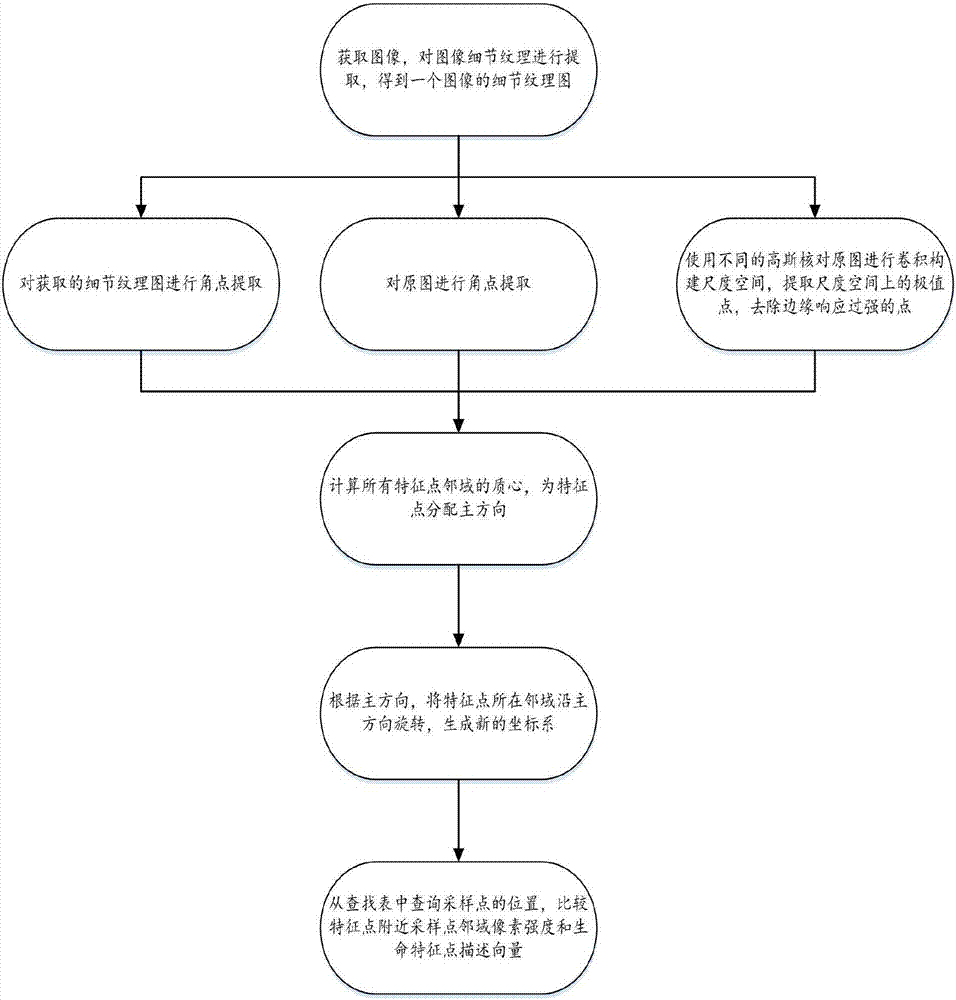

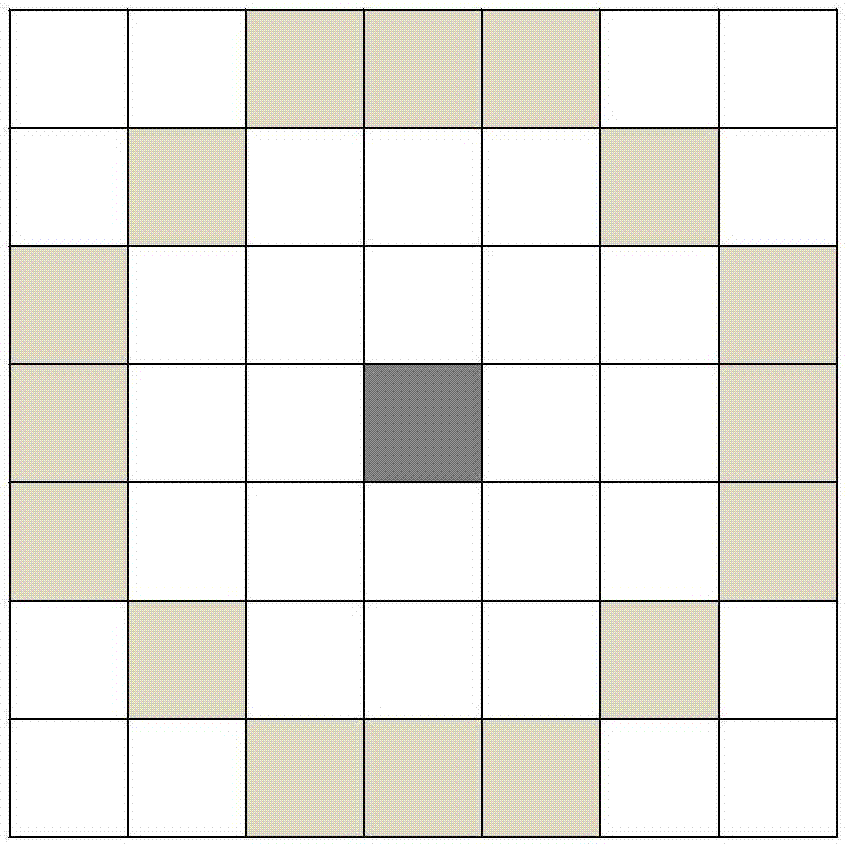

Method for increasing number of feature points in weak texture region of image

Active · CN106960451A · Extraction of optimized features · Increase parallelism · Image analysis · Pyramid · Rotational invariance

The invention belongs to the technical field of computer vision and discloses a method for increasing the number of feature points in a weak texture region of an image. The method comprises the steps of: carrying out detail extraction on an image to obtain its detail texture map; building a Gaussian difference pyramid for the original image and detecting corners; computing the detail extraction and the Gaussian difference pyramid in parallel, extracting corners from the detail map, and merging the results into a feature point set; generating a binary descriptor with rotation invariance for the extracted feature points on the corresponding image; and matching and filtering the descriptors of two images to obtain a correct matching point set. The method employs a binary operator and can generate a description vector through simple summation and comparison. It also computes corner detection and spatial extreme point detection in parallel, which greatly improves the stability of the feature points. The calculation steps are loosely coupled and highly parallel, so the method is well suited to hardware implementation.

Owner:XIDIAN UNIV +1

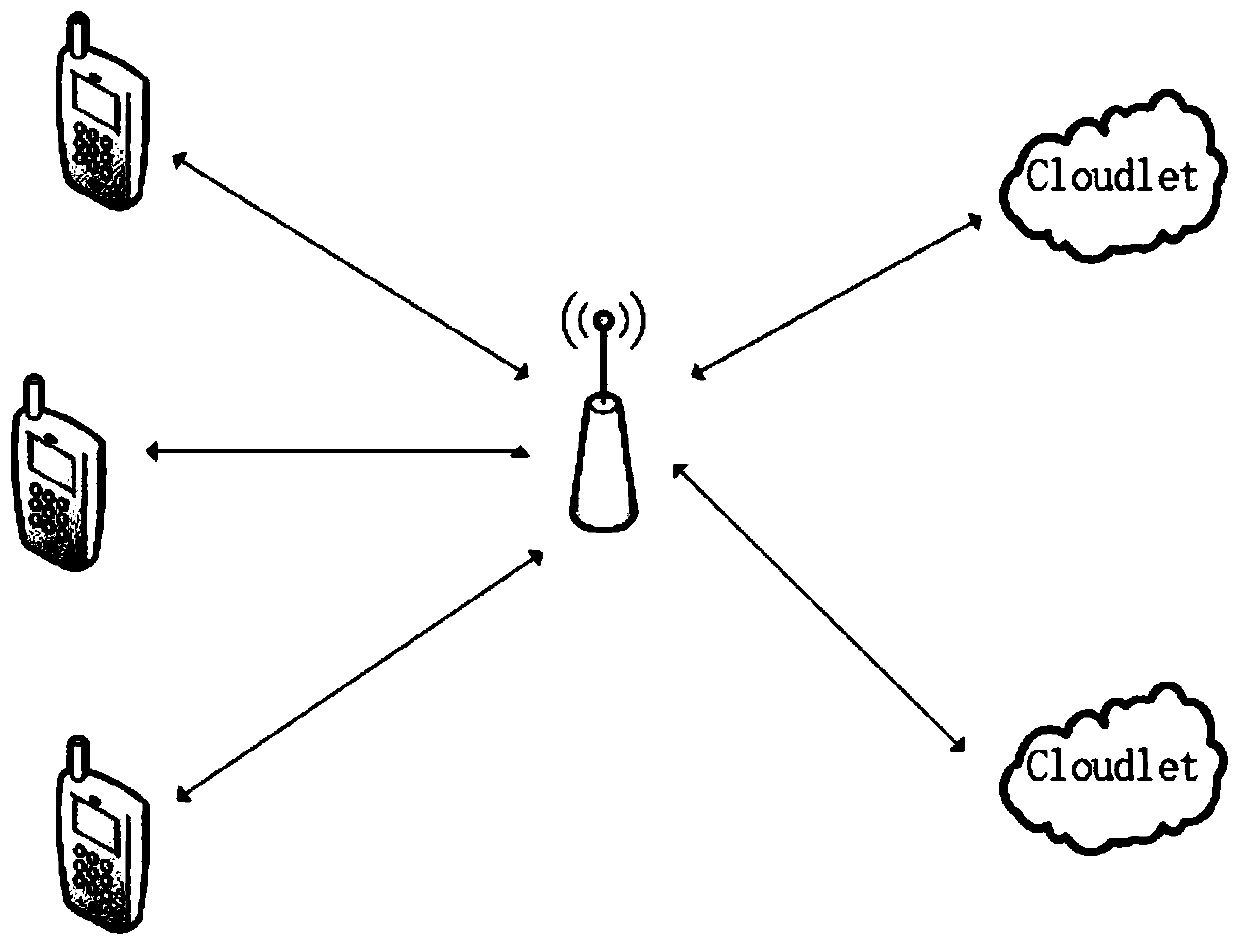

A computing migration method based on task dependency in a mobile cloud environment

Active · CN109840154A · Reduce data transfer · Increase parallelism · Resource allocation · Energy efficient computing · Data transmission · Improved algorithm

The invention discloses a computing migration method based on task dependency in a mobile cloud environment. It provides an improved algorithm, based on the idea of a general genetic algorithm, for solving the problem of migrating multiple dependent computing tasks in the mobile cloud environment, thereby obtaining a migration scheme with good overall response time and terminal energy consumption. Task types are subdivided so that the computing power of different microclouds can be expressed in a refined manner, and a calculation task model and a calculation resource model are given. Calculation tasks have both time-sequence and data dependencies; under these constraints, data transmission between tasks is reduced as much as possible and task parallelism is improved. On this basis, a utility function for evaluating a migration scheme is given and expressed in mathematical form. A final migration scheme is then obtained by using the improved genetic algorithm, and the scheme has relatively good response time and terminal energy consumption.

Owner:NANJING UNIV OF POSTS & TELECOMM

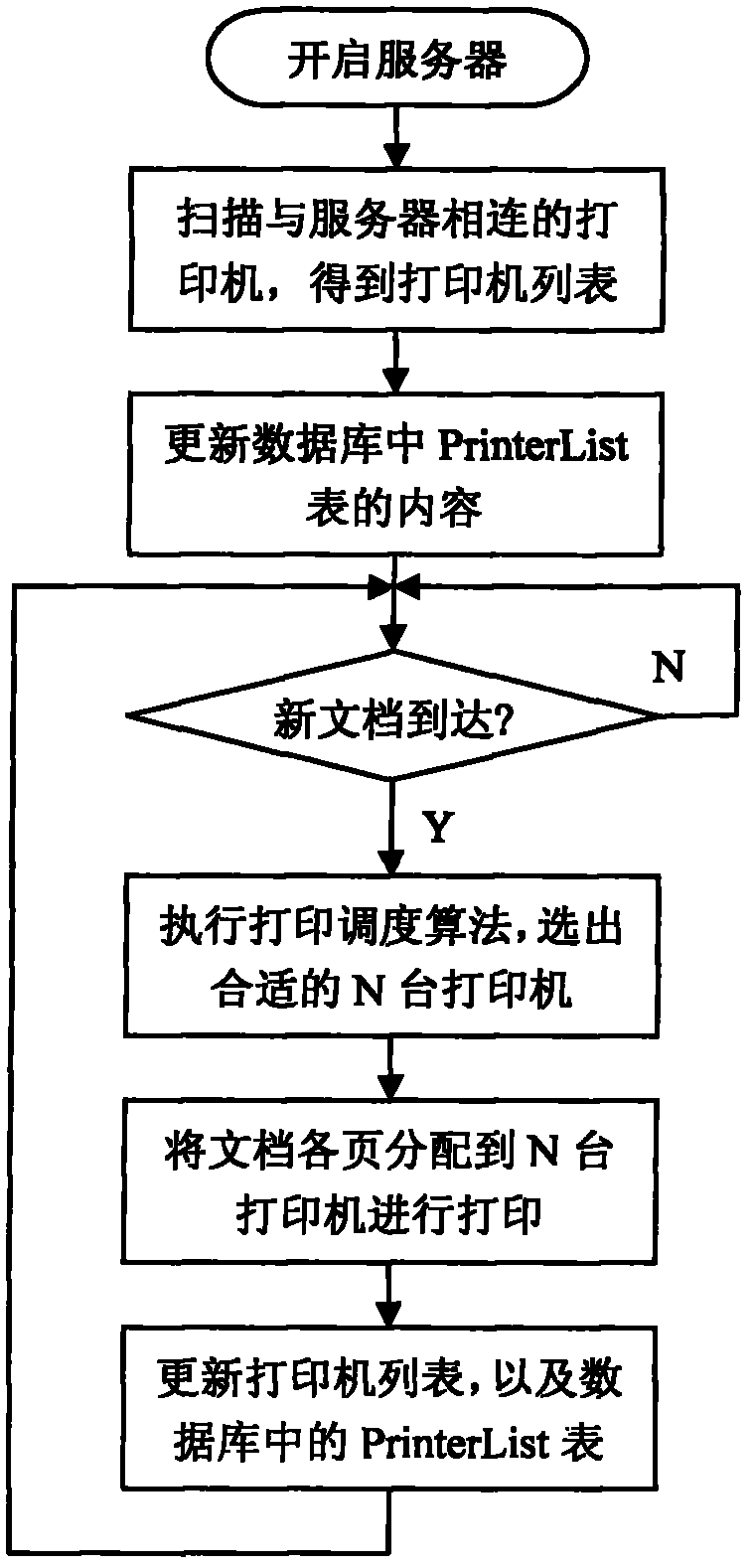

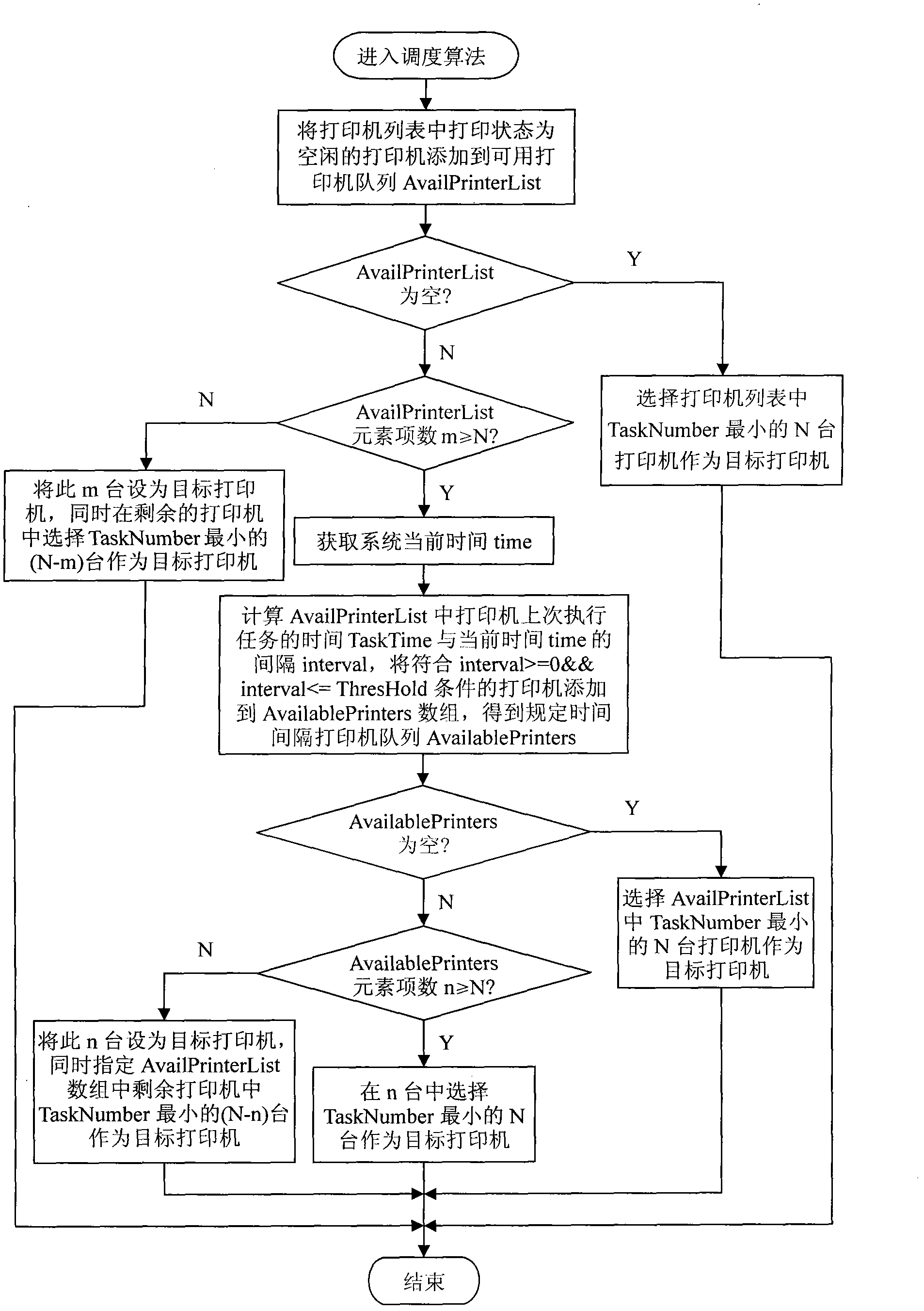

Novel method and system for parallel printing dispatching

Inactive · CN102508626A · Increase parallelism · Improve print output speed · Digital output to print units · Client-side · Print server

The invention discloses a novel method and system for parallel printing dispatching. A host of a printing server is connected with a plurality of usable printers, and maintains a printer list; and the list is updated when the host is rebooted or some new printers are added. For realizing parallel printing of a document, a client side transmits a message, containing the number N of the printers applied for, to the server before printing; and then, the client side segments the document to be printed into print pages, and transmits the print pages to the printing server. After receiving the print pages transmitted by the client side, based on the number N of the applied printers, the printing server takes the page as a unit, and adds the printing tasks to a printing queue of the N dispatched printers by a certain strategy. The technical scheme of the invention is featured by making full use of the printing resource, being convenient for unified management and having high efficiency.

Owner:XIDIAN UNIV
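
The page-level dispatch described above can be sketched as follows. The abstract only says pages are queued "by a certain strategy", so the round-robin rule, the function name and the choice of the first N printers are assumptions made for illustration.

```python
from collections import deque


def dispatch_pages(pages, printers, n):
    """Assign pages to the queues of n printers, one page at a time.

    Round-robin assignment is an assumed strategy; the source does not
    specify which strategy the print server uses.
    """
    if n < 1 or n > len(printers):
        raise ValueError("n must be between 1 and the number of usable printers")
    chosen = printers[:n]  # assumed: take the first n printers from the list
    queues = {printer: deque() for printer in chosen}
    for index, page in enumerate(pages):
        # Keep the page index so the output can be collated afterwards.
        queues[chosen[index % n]].append((index, page))
    return queues
```

Keeping the page index with each queued page lets the client put the printed sheets back in order once all N printers have finished.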

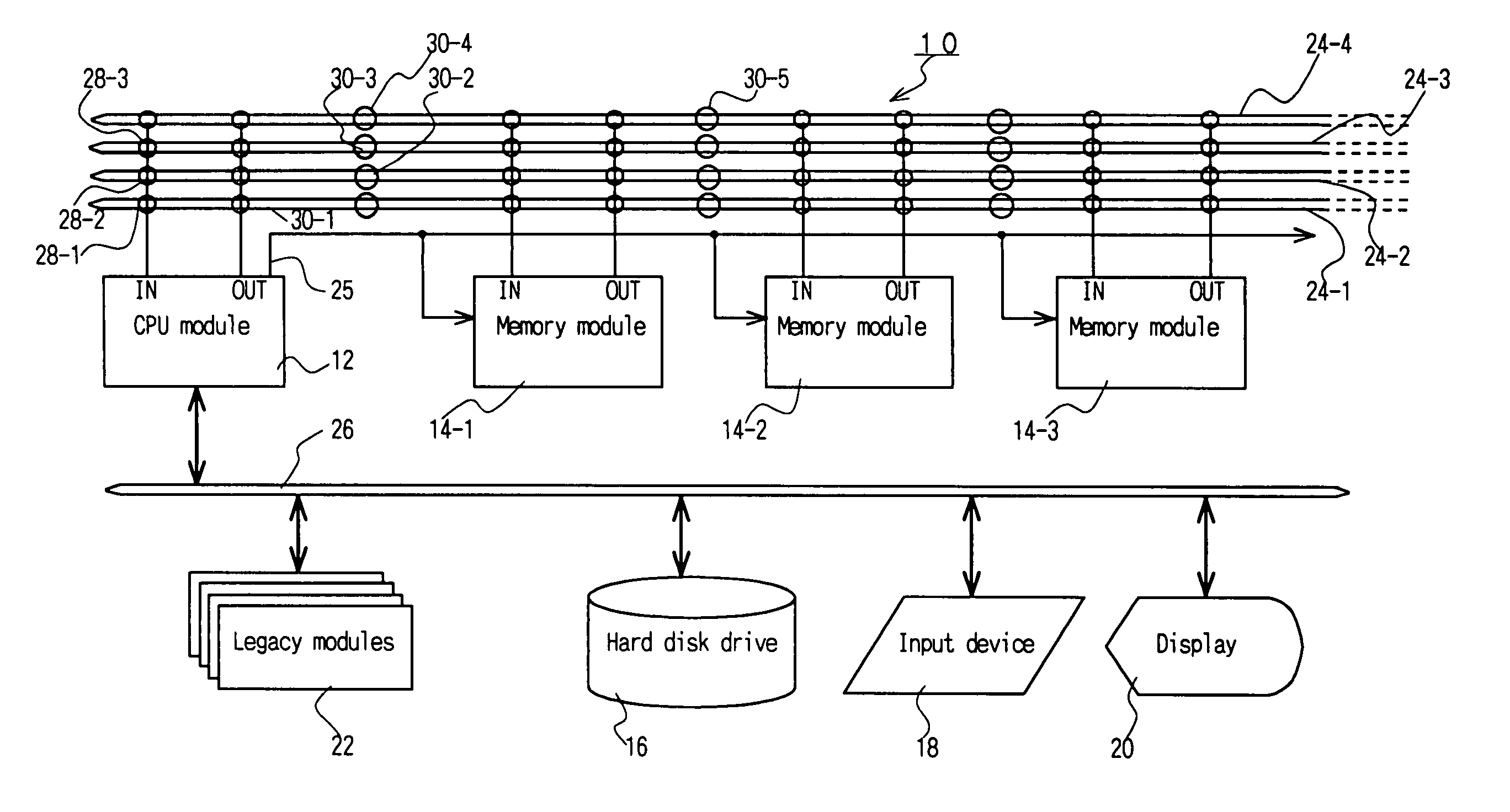

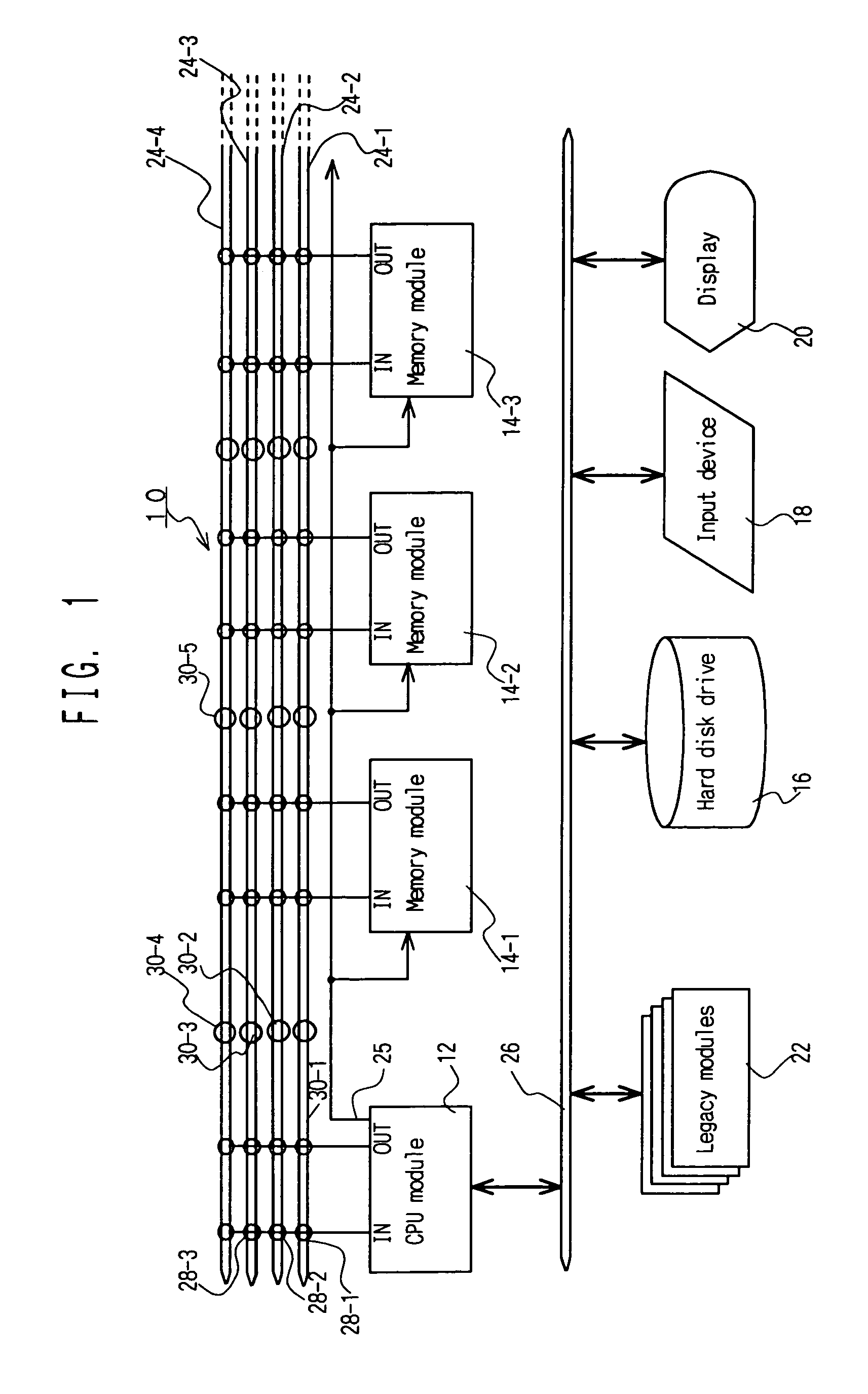

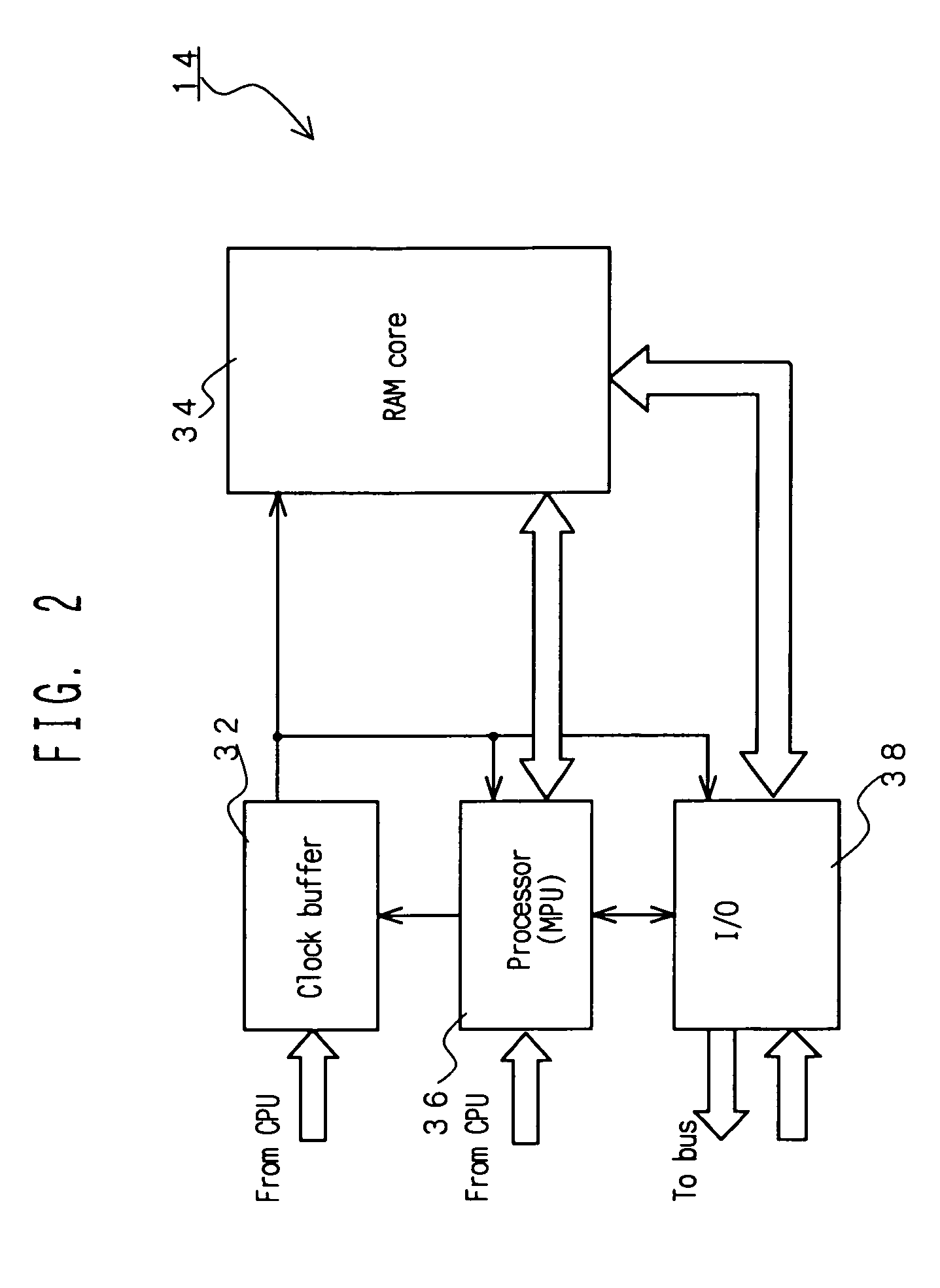

Architecture of a parallel computer and an information processing unit using the same

Inactive · US7185179B1 · Increase parallelism · Effectively use · Memory addressing/allocation/relocation · Multiple digital computer combinations · Logical address · Memory module

A computer system provides a distributed-memory computer architecture achieving extremely high-speed parallel processing. It includes a CPU module; a plurality of memory modules, each having a processor and a RAM core; and a plurality of sets of buses making connections between the CPU and the memory modules and/or among the memory modules, so that the various memory modules operate on an instruction given by the CPU. A series of data having a stipulated relationship is given a space ID, and each memory module manages a table containing at least the space ID, the logical address of the portion of the series of data it manages, the size of that portion, and the size of the series of data. The processor of each memory module determines whether the portion of the series of data it manages is involved in a received instruction and performs processing on the data stored in the RAM core.

Owner:TURBO DATA LAB INC

Twisted array design for high speed vertical channel 3D NAND memory

Active · US9373632B2 · Increase bit density · Increase data rate · Semiconductor/solid-state device details · Solid-state devices · Bit line · Regular grid

Roughly described, a memory device has a multilevel stack of conductive layers. Vertically oriented pillars each include series-connected memory cells at cross-points between the pillars and the conductive layers. SSLs run above the conductive layers, each intersection of a pillar and an SSL defining a respective select gate of the pillar. Bit lines run above the SSLs. The pillars are arranged on a regular grid which is rotated relative to the bit lines. The grid may have a square, rectangle or diamond-shaped unit cell, and may be rotated relative to the bit lines by an angle θ where tan(θ)=±X / Y, where X and Y are co-prime integers. The SSLs may be made wide enough so as to intersect two pillars on one side of the unit cell, or all pillars of the cell, or sufficiently wide as to intersect pillars in two or more non-adjacent cells.

Owner:MACRONIX INT CO LTD

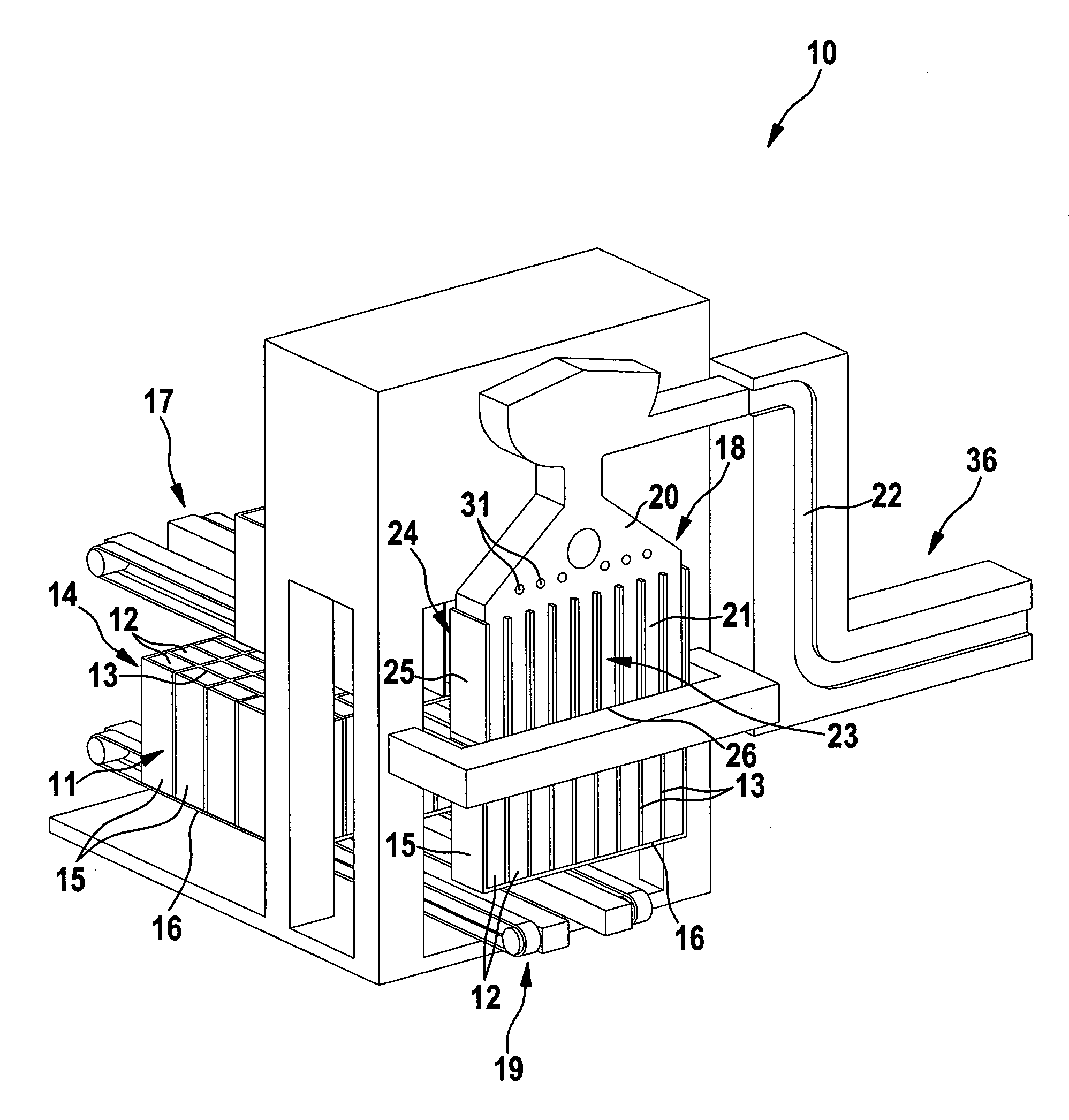

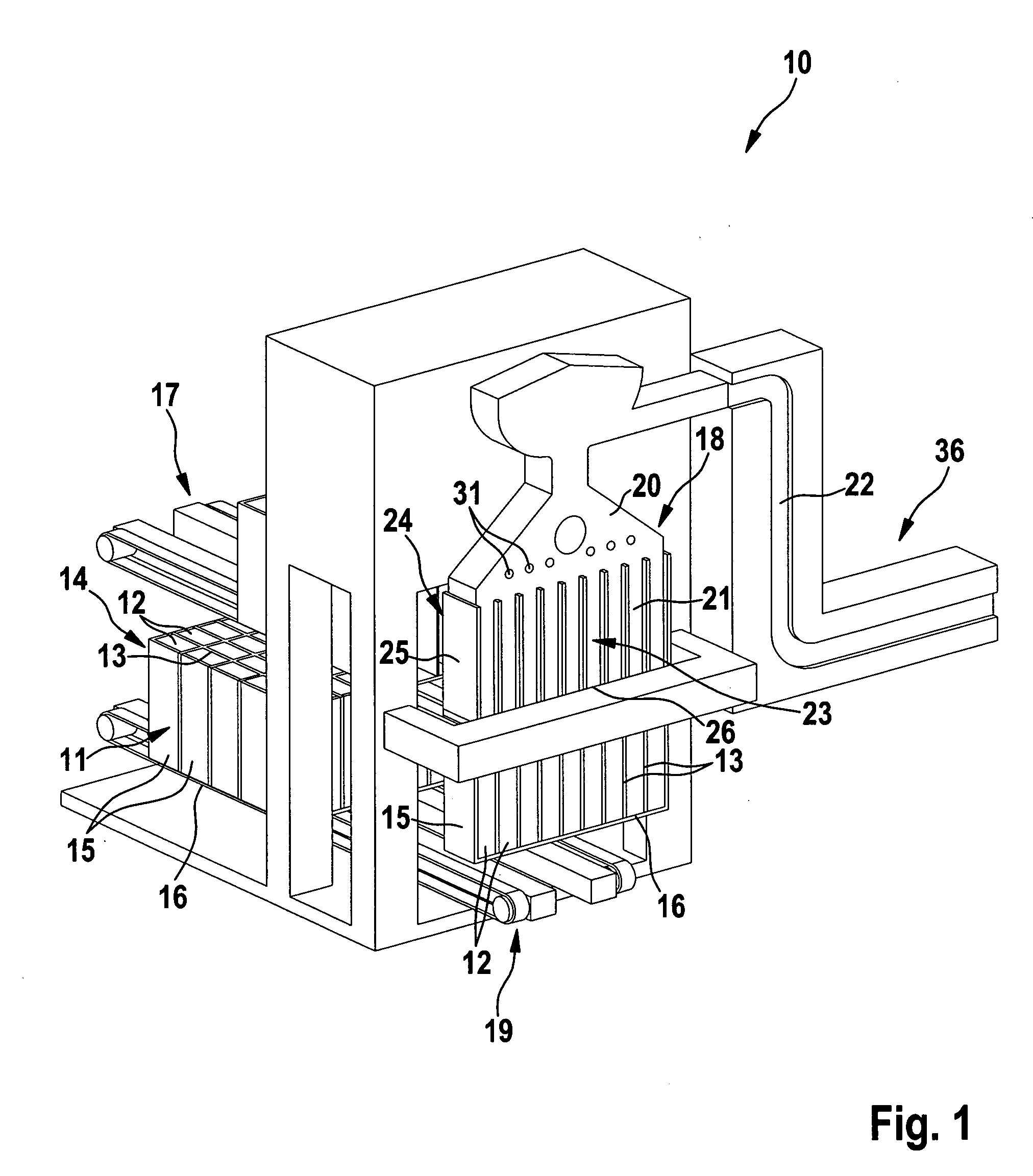

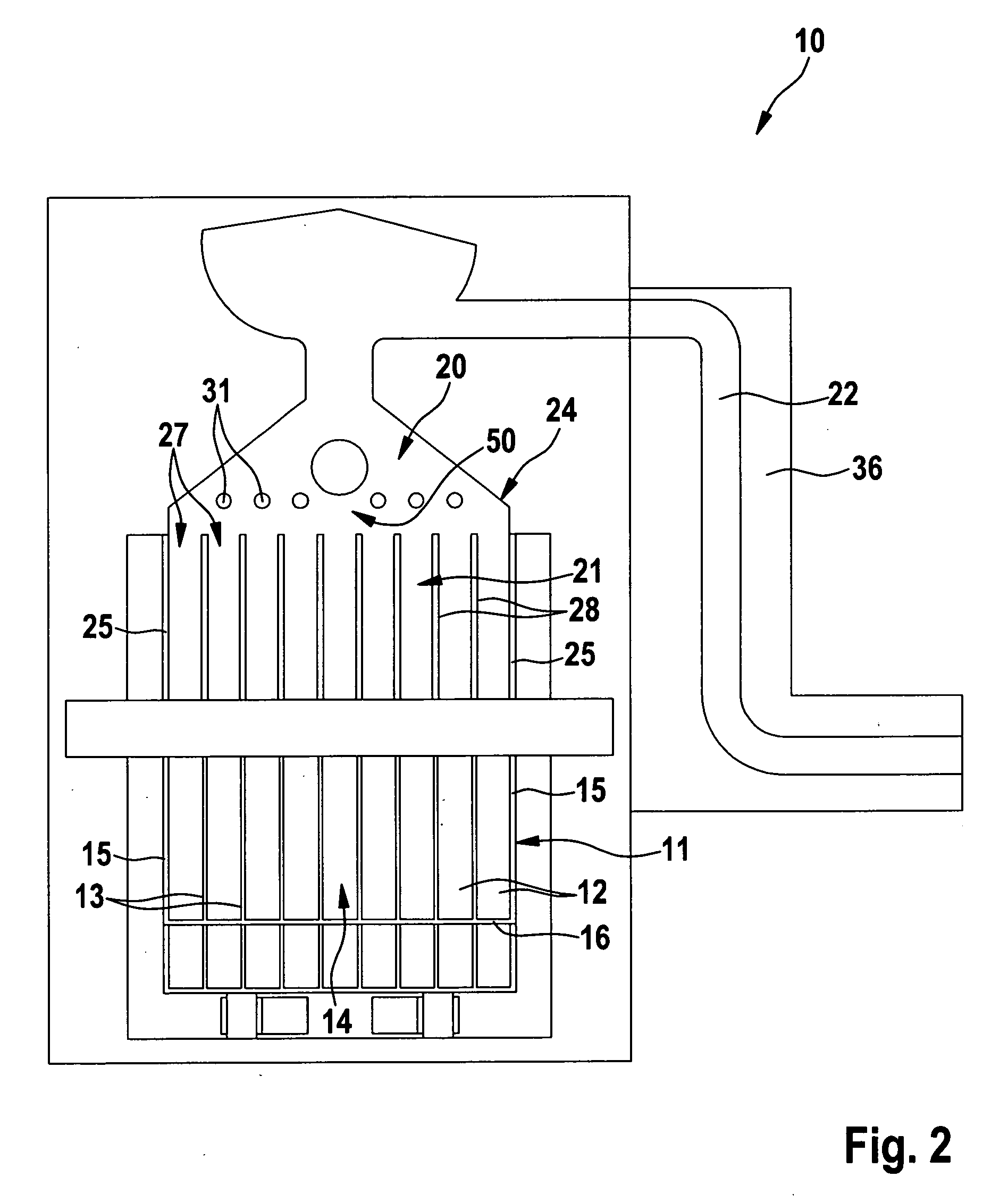

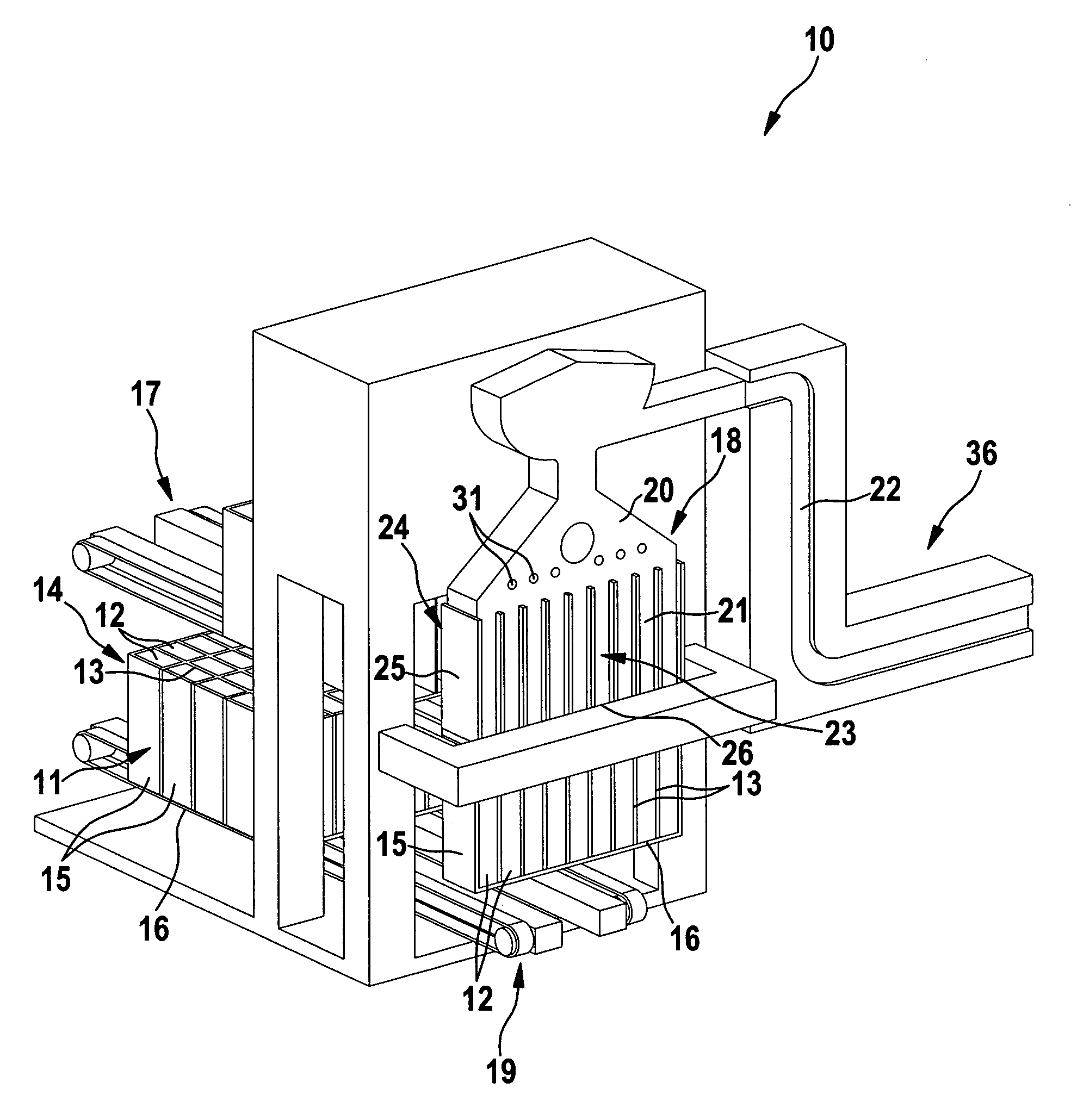

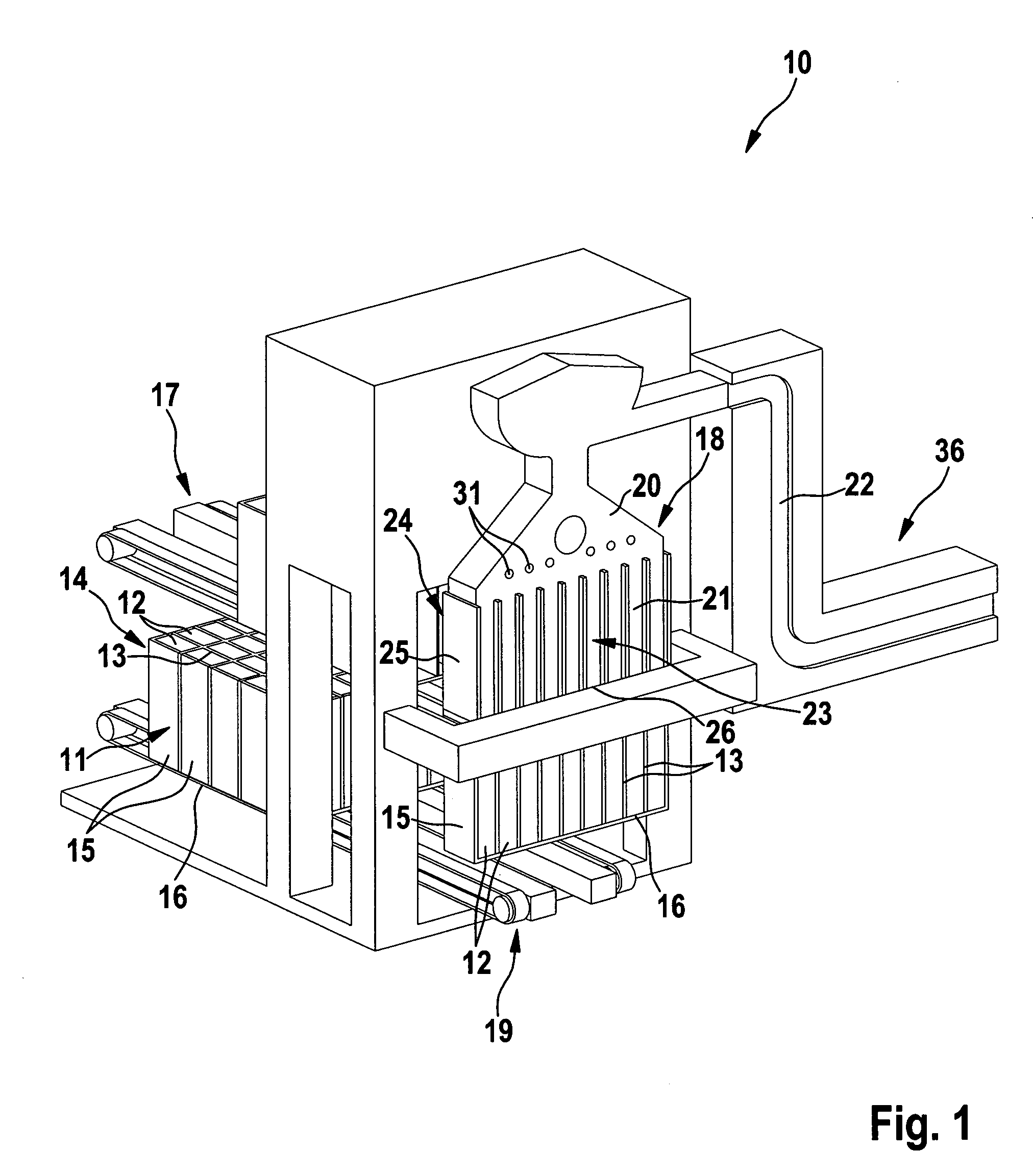

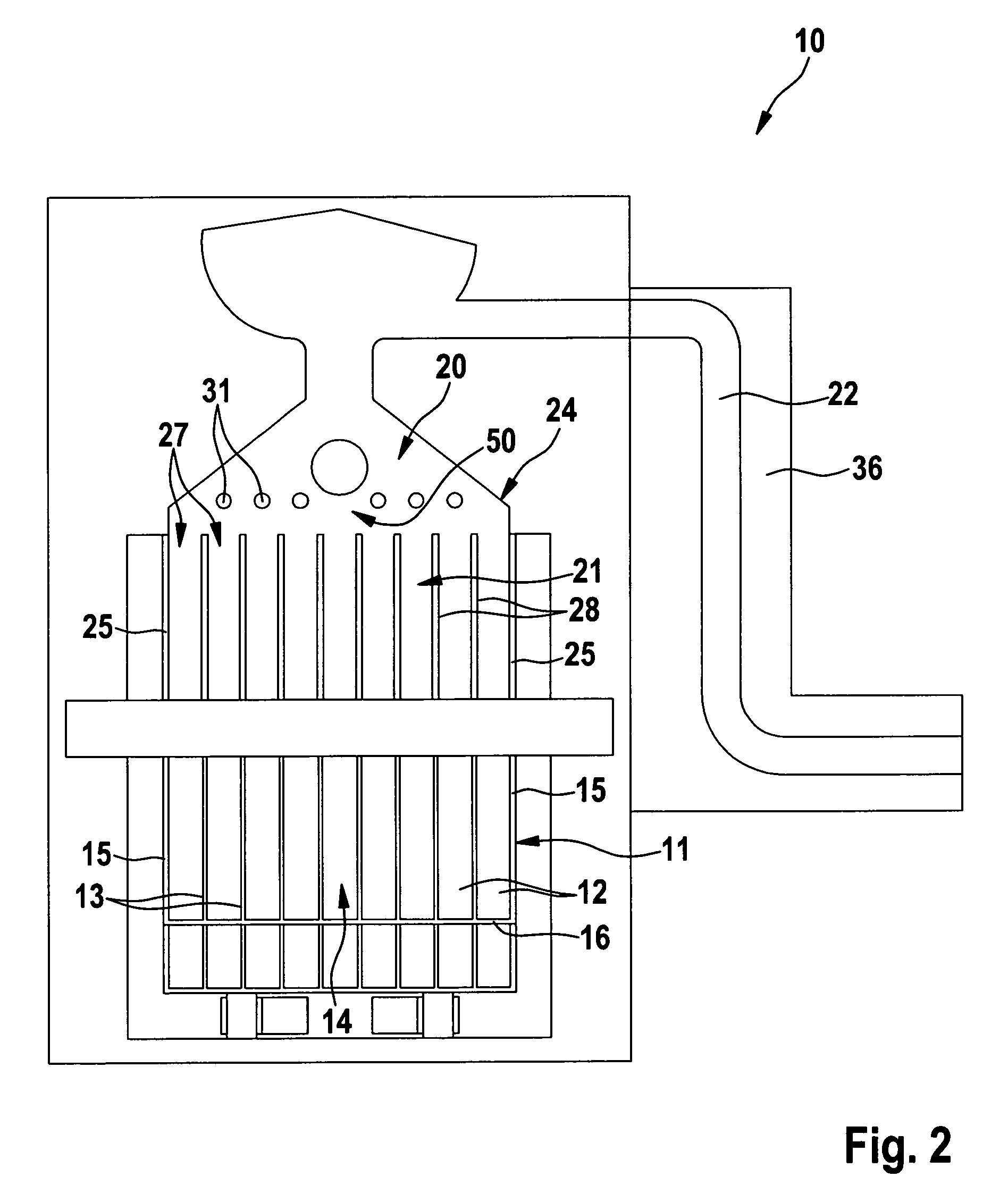

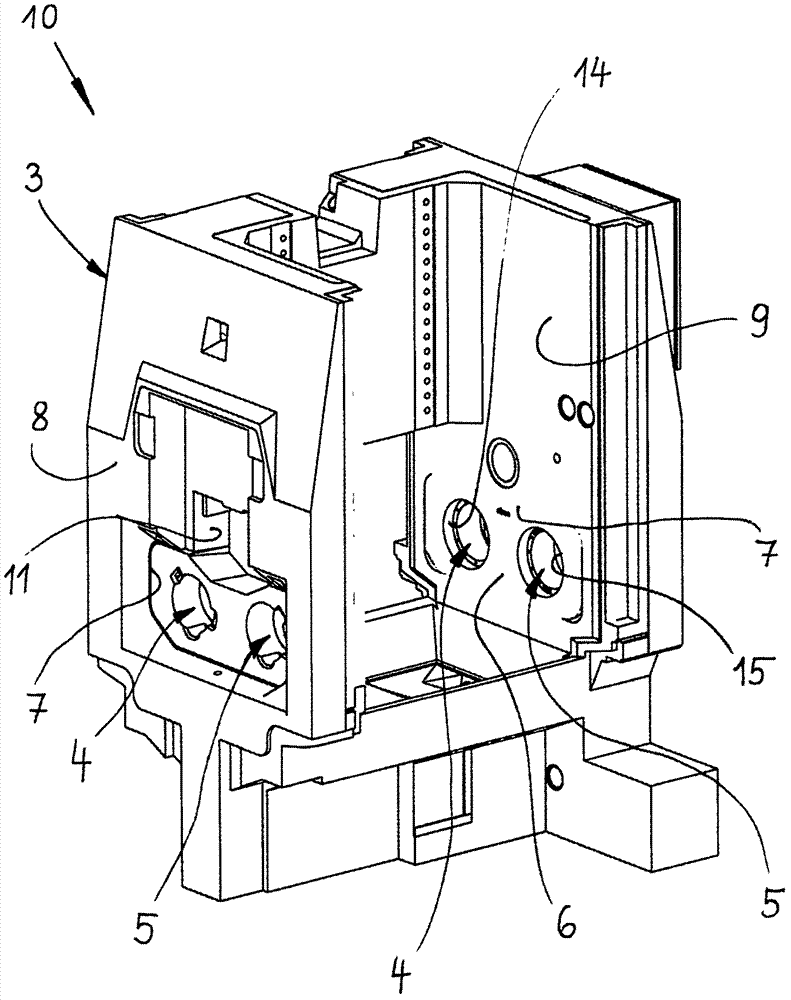

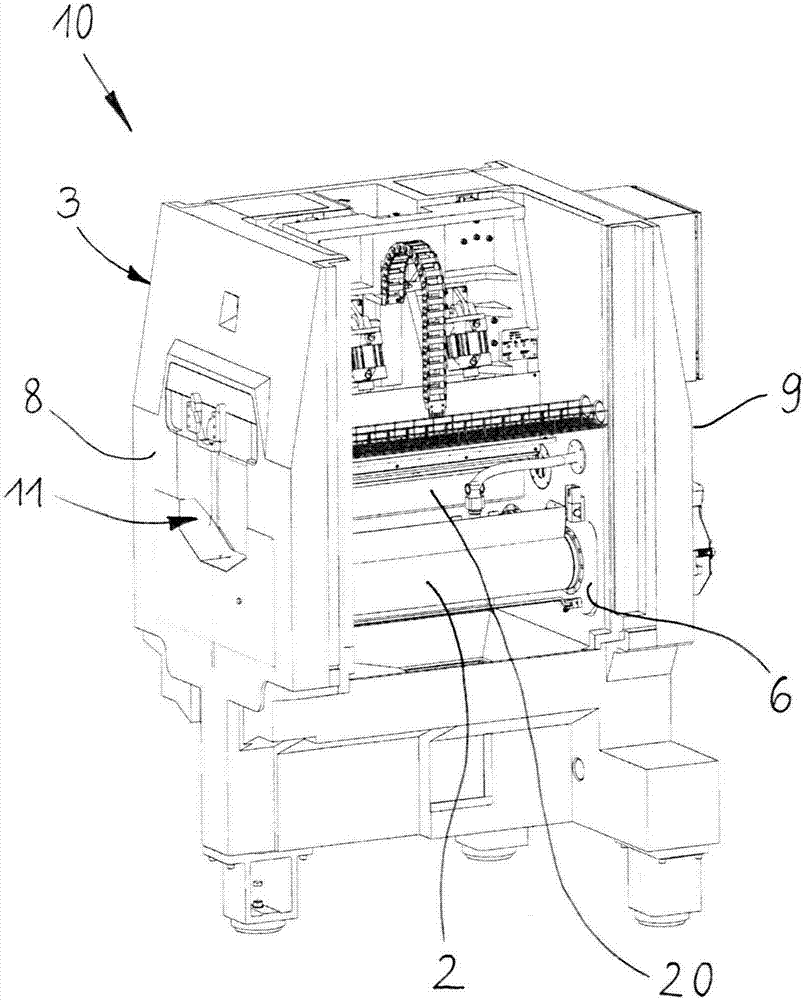

Apparatus and method for filling containers with rod-shaped products

Inactive · US20080190074A1 · Improve efficiency · Compact apparatus · Packaging cigarette · Cigarette manufacture · Engineering · Mechanical engineering

An apparatus is provided for filling an empty shaft tray with rod-shaped products. The shaft tray has shaft walls which form a plurality of shafts. The apparatus includes a filling hopper having a receiving region for a mass flow composed of the products, and a storage region for products comprising a front wall, a rear wall, side walls and a bottom wall. The storage region includes partitions that form a plurality of shafts adjacent to each other, wherein the partitions substantially extend over a full height of the storage region, and wherein the rear wall of the storage region includes openings for passage of the shaft walls of the shaft tray. A delivery element delivers the empty shaft tray into the receiving region of the filling hopper to be filled with the products. A removal element removes a filled shaft tray from the filling hopper.

Owner:HAUNI MASCHINENBAU AG

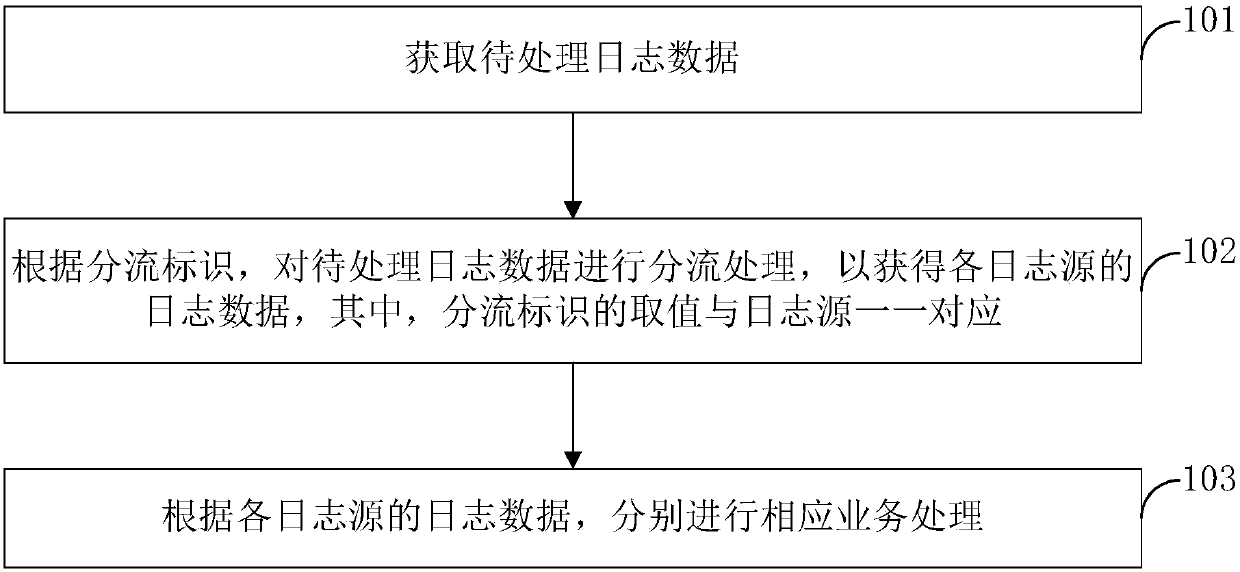

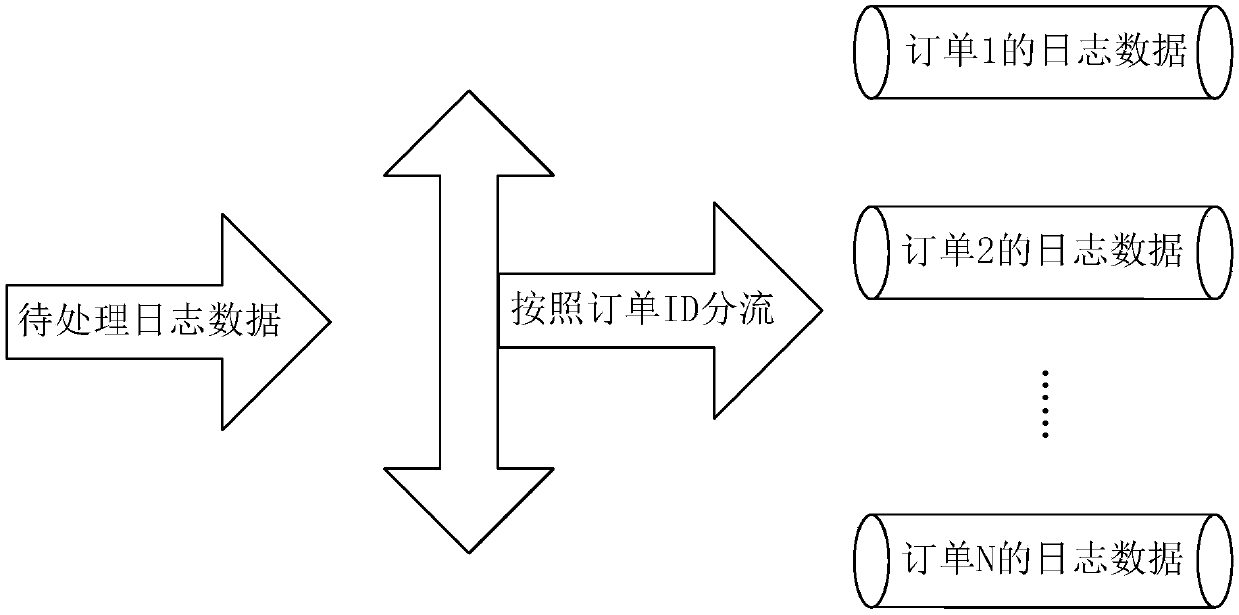

Log data processing method and device, and business system

Inactive · CN107918621A · Increase parallelism · Improve throughput · Special data processing applications · Data processing · Data mining

The invention provides a log data processing method, a log data processing device and a business system. The log data processing method comprises the steps of: acquiring to-be-processed log data; distributing the to-be-processed log data according to a distribution identifier to obtain the log data of each log source, wherein each value of the distribution identifier corresponds to one log source; and processing the corresponding business for each log source according to that source's log data. With the method, device and business system, businesses can be conducted on the basis of the log data, the parallelism of the businesses is improved, and the throughput of the log system is enhanced.

Owner:ALIBABA GRP HLDG LTD
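
The distribute-then-process flow in the abstract above can be sketched as follows. The record layout, the `source` field used as the distribution identifier, the handler signature and the use of a thread pool are all illustrative assumptions.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def distribute(records, key="source"):
    """Group log records by the value of the distribution identifier."""
    by_source = defaultdict(list)
    for record in records:
        by_source[record[key]].append(record)
    return by_source


def process_all(records, handler, key="source"):
    """Run handler(source, logs) once per log source, with sources in parallel."""
    by_source = distribute(records, key)
    with ThreadPoolExecutor() as pool:
        futures = {
            source: pool.submit(handler, source, logs)
            for source, logs in by_source.items()
        }
        # Collect results while the pool is still open; result() blocks
        # until the handler for that source has finished.
        return {source: future.result() for source, future in futures.items()}
```

Since each source's records stay together and in arrival order, a handler that needs per-source ordering keeps it, while independent sources no longer wait on one another.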

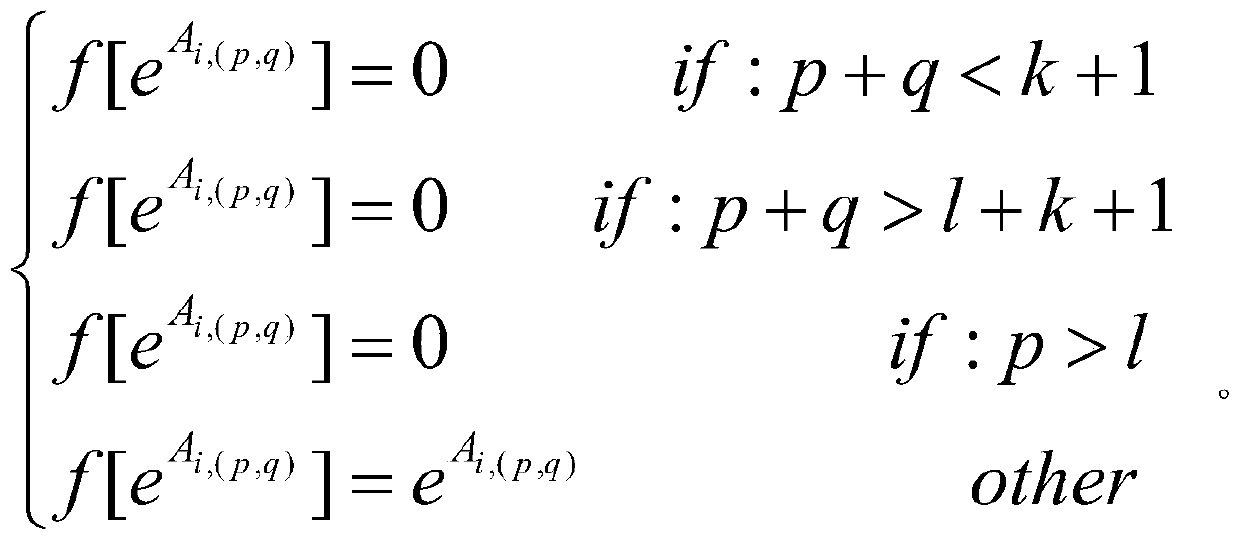

Novel multi-head attention mechanism

Inactive · CN111199288A · Reduce model complexity · Reduce storage consumption · Machine learning · Round complexity · Model complexity

The invention discloses a novel multi-head attention mechanism. The method employs local attention; compared with the global attention adopted by a traditional multi-head attention mechanism, the complexity of the model is reduced. The sizes of all matrices in the operation process are only directly proportional to the length of the sequence, whereas the traditional attention mechanism uses a matrix proportional to the square of the sequence length, so the storage space consumption of the model is greatly reduced. In the calculation process, unlike the solution in Transformer-XL, the sequence is not processed in blocks, so the sequence characteristics of the original sequence are largely preserved. Softmax is used to establish global semantics, and compared with the cross-block connection mode used in Transformer-XL, the degree of parallelism of the model is improved.

Owner:SHAN DONG MSUN HEALTH TECH GRP CO LTD
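
The storage claim above (matrices proportional to the sequence length rather than its square) can be illustrated with a generic sliding-window attention sketch. This is not the patented mechanism, whose details the abstract does not give; the window parameter, the function name and the single-head, pure-Python form are assumptions.

```python
import math


def local_attention(queries, keys, values, window):
    """Single-head attention where position i attends only to i-window..i+window.

    Each position stores at most 2*window + 1 scores, so the score storage
    grows linearly with the sequence length instead of quadratically.
    """
    n = len(queries)
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs = []
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        scores = [
            scale * sum(q * k for q, k in zip(queries[i], keys[j]))
            for j in range(lo, hi)
        ]
        peak = max(scores)  # subtract the maximum for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w * values[j][d] for w, j in zip(weights, range(lo, hi))) / total
            for d in range(len(values[0]))
        ])
    return outputs
```

Every output row depends only on its own window, so the rows can be computed independently of one another, which is where the parallelism comes from in this simplified form.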

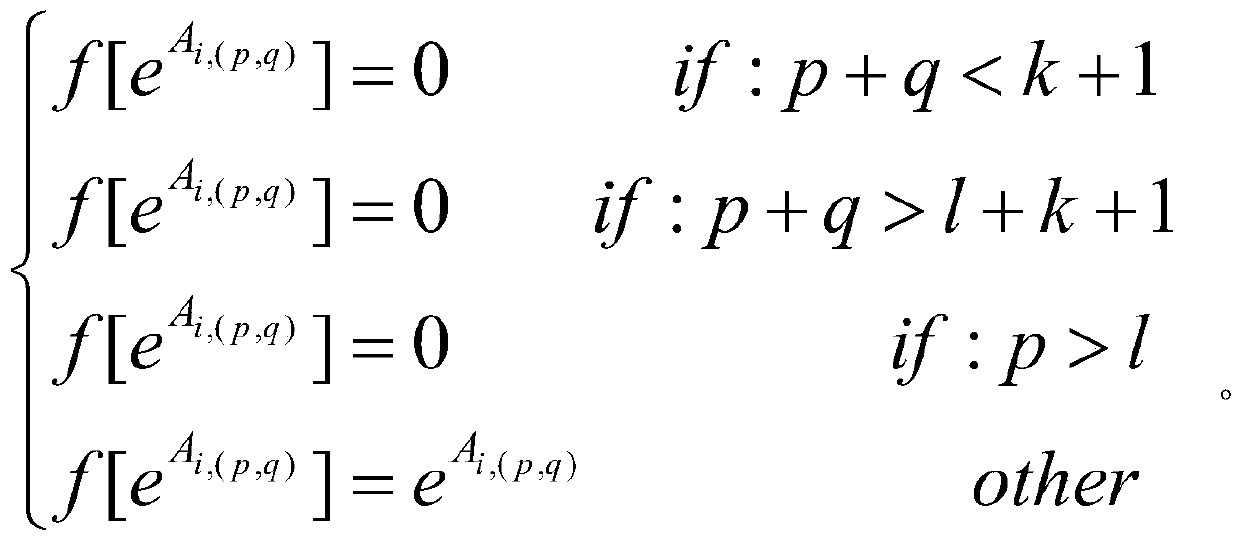

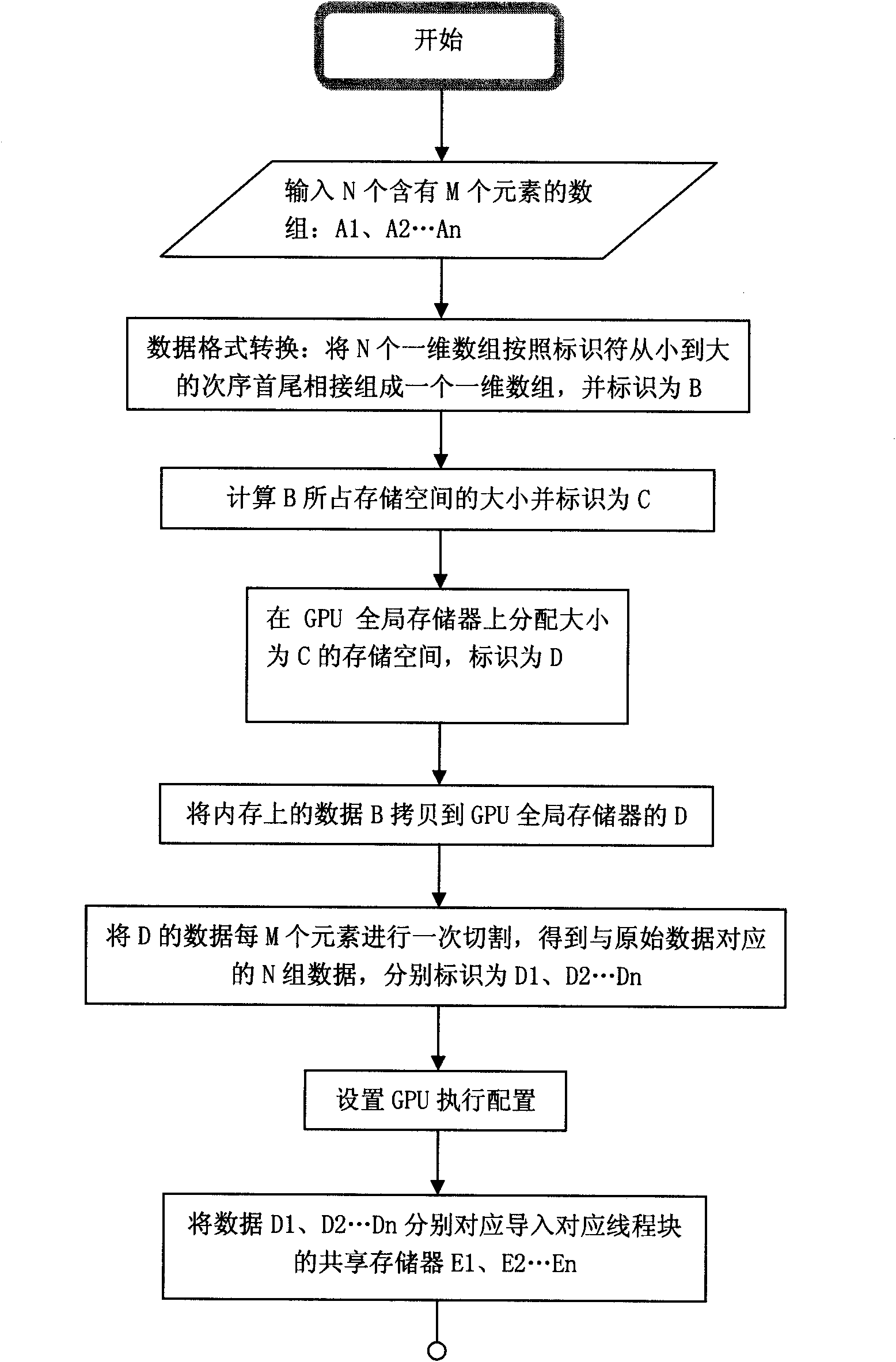

FFT (Fast Fourier Transform) paralleling method based on GPU (Graphics Processing Unit) multi-core platform

Inactive · CN101937422A · Increase parallelism · Improve operation accuracy · Digital computer details · Complex mathematical operations · Fast Fourier transform · Graphics processing unit

The invention discloses an FFT (Fast Fourier Transform) parallelization method based on a GPU (Graphics Processing Unit) multi-core platform. On the storage side, the method follows the principle of communicating once and computing in bulk: a single communication completes the FFT operation of N M points, which greatly reduces the communication overhead. By using the high-speed cache inside each thread block, i.e. the shared memory, the communication time is further reduced and the operating efficiency is enhanced. Through careful overall arrangement, the invention processes the data in parallel on hundreds of processing cores, thereby maximizing the degree of parallelism, completing the operation efficiently and enhancing the operation accuracy.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

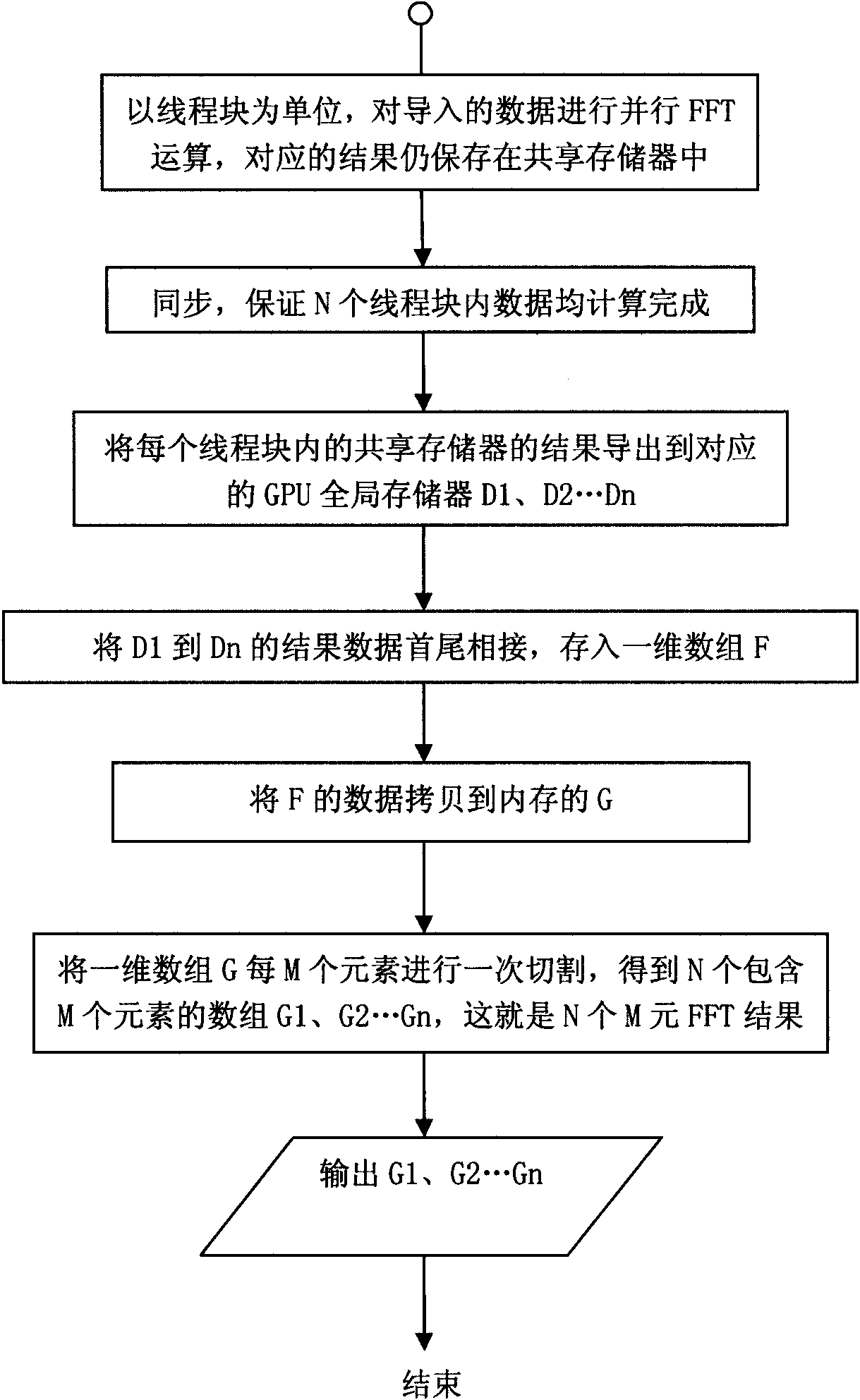

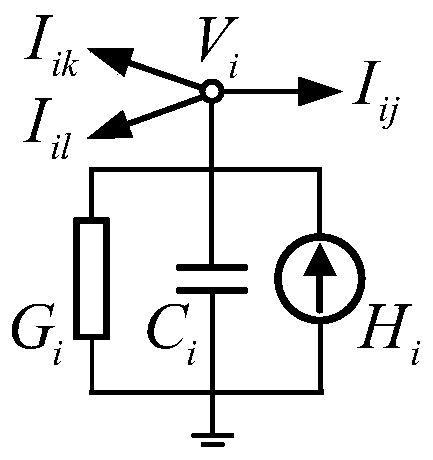

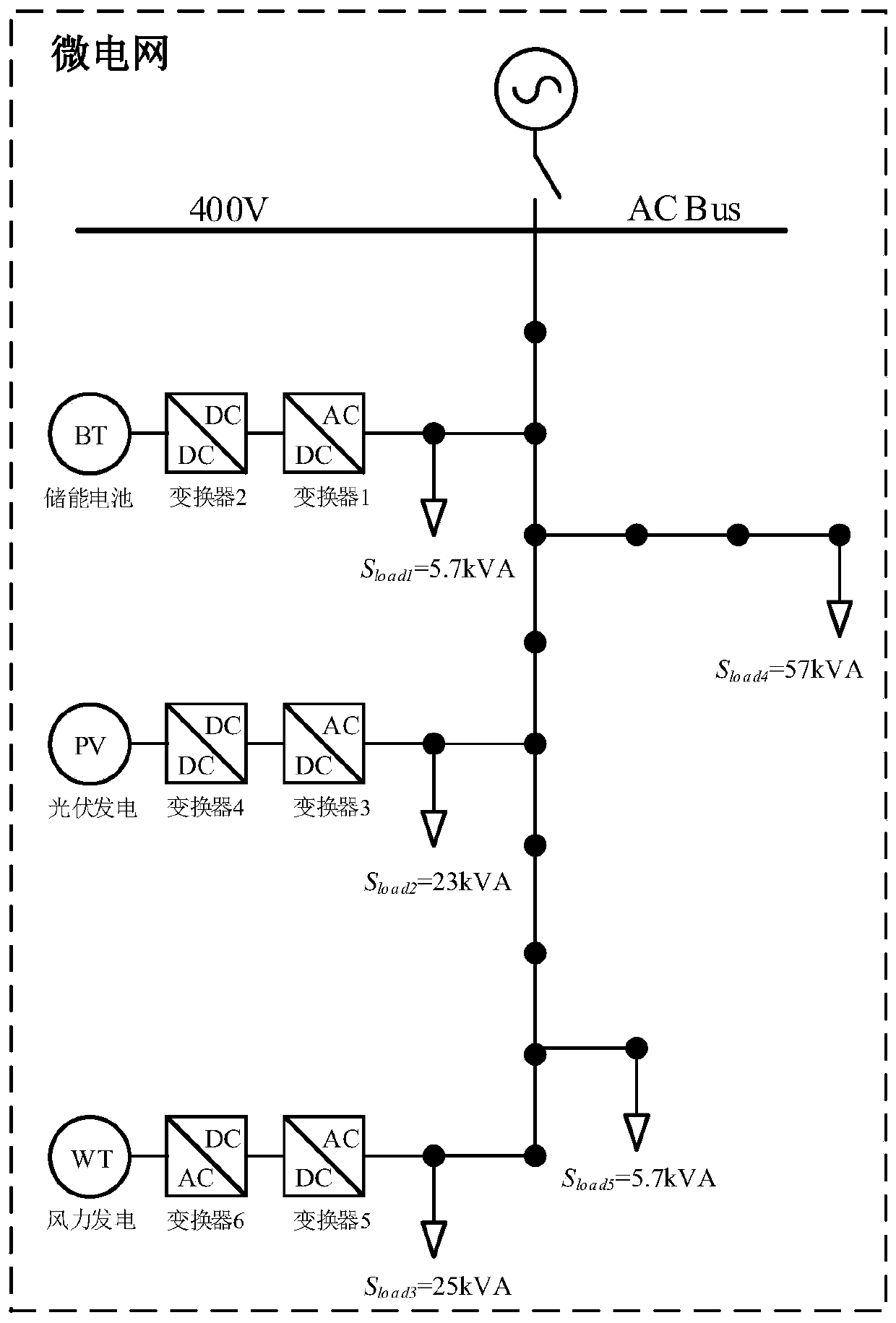

Hybrid electromagnetic transient simulation method suitable for microgrid real-time simulation

Active · CN110569558A · Increase parallelism · Calculation speed · Data processing applications · Special data processing applications · Power grid · Real-time simulation

The invention discloses a hybrid electromagnetic transient simulation method suitable for microgrid real-time simulation. The method is characterized in that a traditional node analysis method (NAM) and a highly-parallelized delay insertion method (LIM) are combined; therefore, the micro-grid is segmented from a filter of the distributed power generation system; a delay insertion (LIM) network including a distribution line and a plurality of node analysis (NAM) networks including a distributed power generation system are respectively formed. The NAM network is simulated by adopting a traditional node analysis method; the LIM network is simulated by adopting a delay insertion method; in an initialization phase, an incidence matrix and four diagonal matrixes containing line parameters used for LIM network simulation are formed according to micro-grid line topology and parameters; in a simulation main body cycle, the LIM network and a plurality of NAM networks can be solved at the same time, the parallelism degree of microgrid simulation is improved, in addition, diagonal matrix multiplication is mainly adopted for calculation in the LIM network simulation solving process, the calculation burden generated when a node analysis method is used for solving a large-scale network equation is avoided, and the simulation efficiency is improved.

Owner:SHANGHAI JIAO TONG UNIV

Apparatus and method for filling containers with rod-shaped products

Inactive · US7757465B2 · Improve efficiency · Compact apparatus · Packaging cigarette · Cigarette manufacture · Engineering · Mechanical engineering

An apparatus is provided for filling an empty shaft tray with rod-shaped products. The shaft tray has shaft walls which form a plurality of shafts. The apparatus includes a filling hopper having a receiving region for a mass flow composed of the products, and a storage region for products comprising a front wall, a rear wall, side walls and a bottom wall. The storage region includes partitions that form a plurality of shafts adjacent to each other, wherein the partitions substantially extend over a full height of the storage region, and wherein the rear wall of the storage region includes openings for passage of the shaft walls of the shaft tray. A delivery element delivers the empty shaft tray into the receiving region of the filling hopper to be filled with the products. A removal element removes a filled shaft tray from the filling hopper.

Owner:HAUNI MASCHINENBAU AG

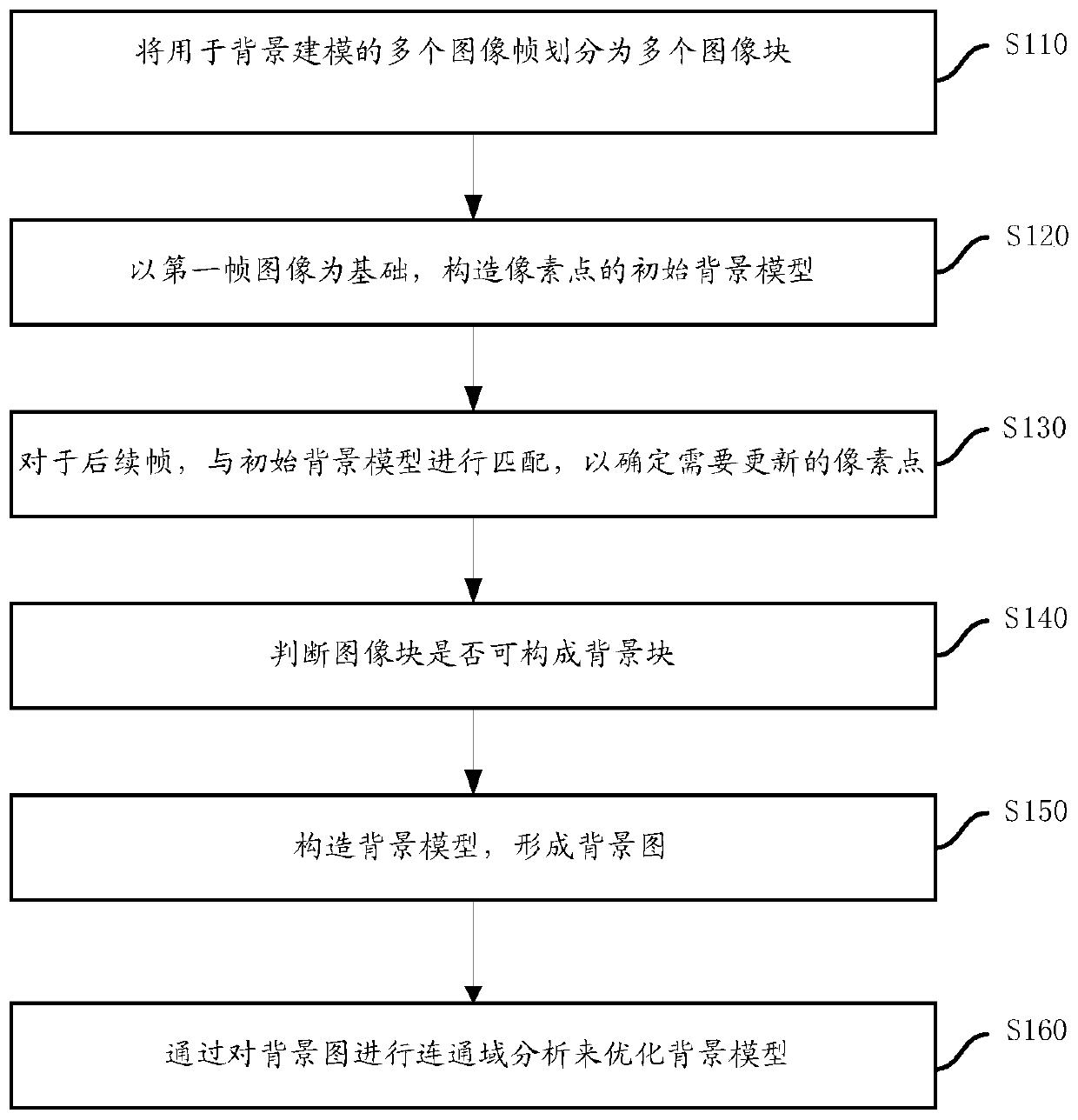

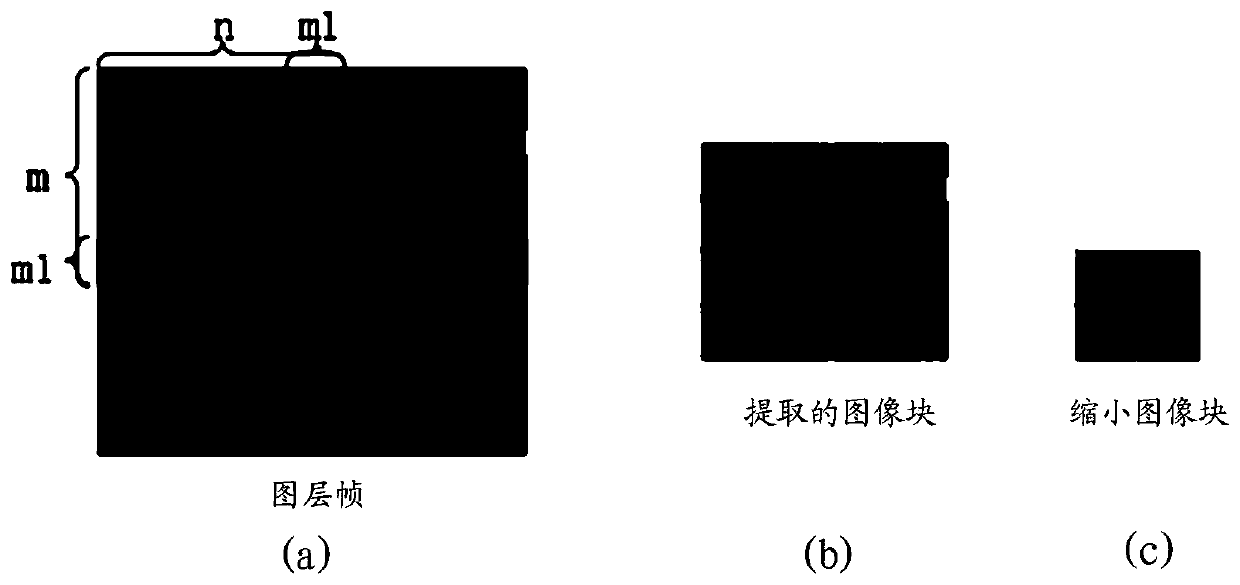

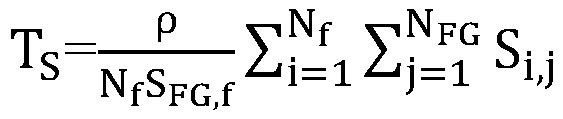

A background modeling method of a video image

The invention provides a background modeling method of a video image. The method comprises the steps of: for a plurality of video image frames, dividing each frame into a plurality of image blocks; establishing an initial background model according to the first frame of the plurality of video image frames, wherein the initial background model stores a corresponding sample set for each background point; and, for each frame after the first frame, constructing a background model for the plurality of image blocks by matching against the initial background model to form a background image. According to the method, the background model can be quickly and accurately constructed.

Owner:北京中科晶上超媒体信息技术有限公司

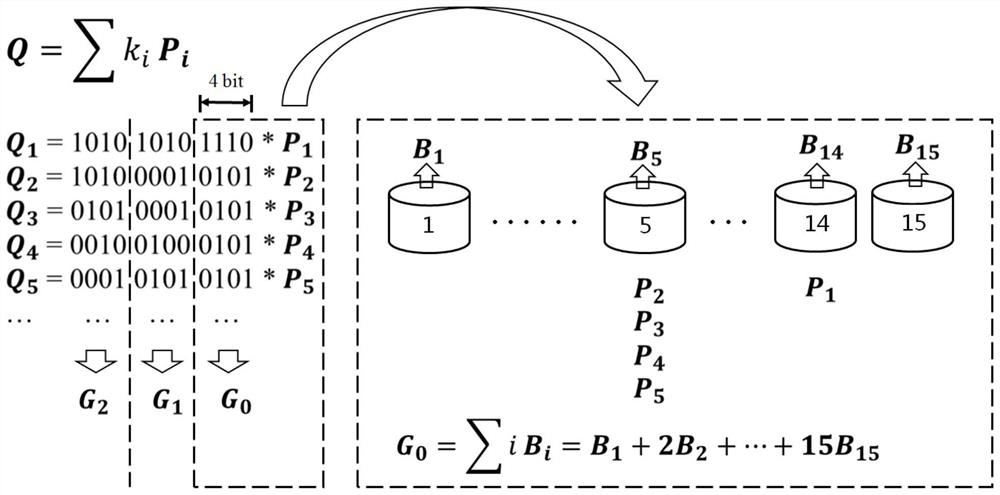

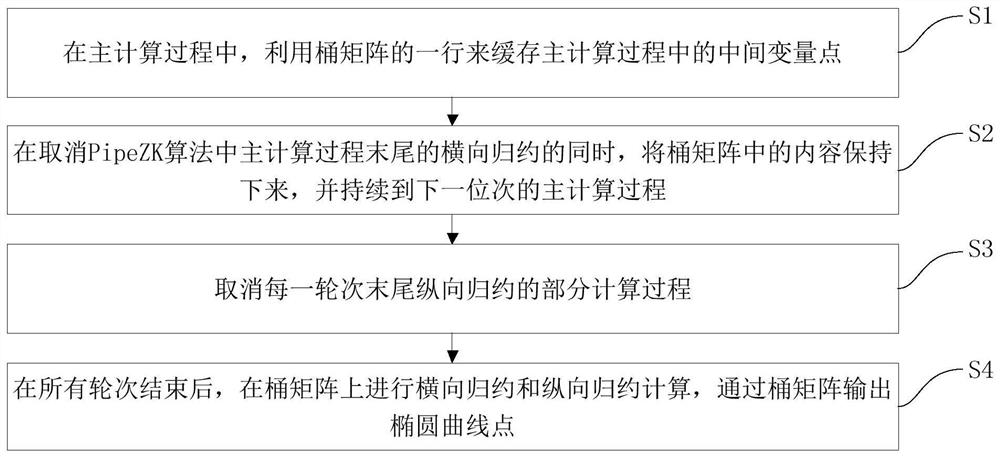

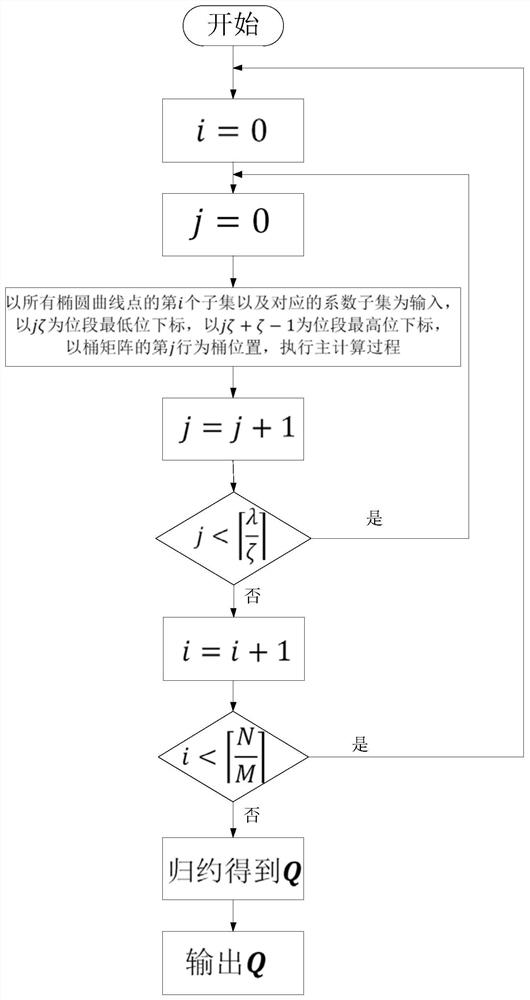

Elliptic curve multi-scalar point multiplication calculation optimization method and optimization device

Pending · CN113504895A · Inhibit output · Extended working hours · Computations using residue arithmetic · Algorithm · Parallel computing

The invention discloses an elliptic curve multi-scalar point multiplication calculation optimization method and optimization device. The method comprises the following steps: designing a bucket matrix to cache the intermediate variable points of the main calculation process, which avoids outputting Pippenger intermediate quantities; running the calculation continuously in a pipelined manner until all final calculations are finished; and then performing transverse reduction and longitudinal reduction, which reduces the number of serial calculations from thousands to one. Most of the overhead of serial-to-parallel conversion, synchronous locking and the like is thereby eliminated, and the continuous working time of the pipeline is effectively prolonged, so the overall performance is improved.

Owner:深圳市智芯华玺信息技术有限公司

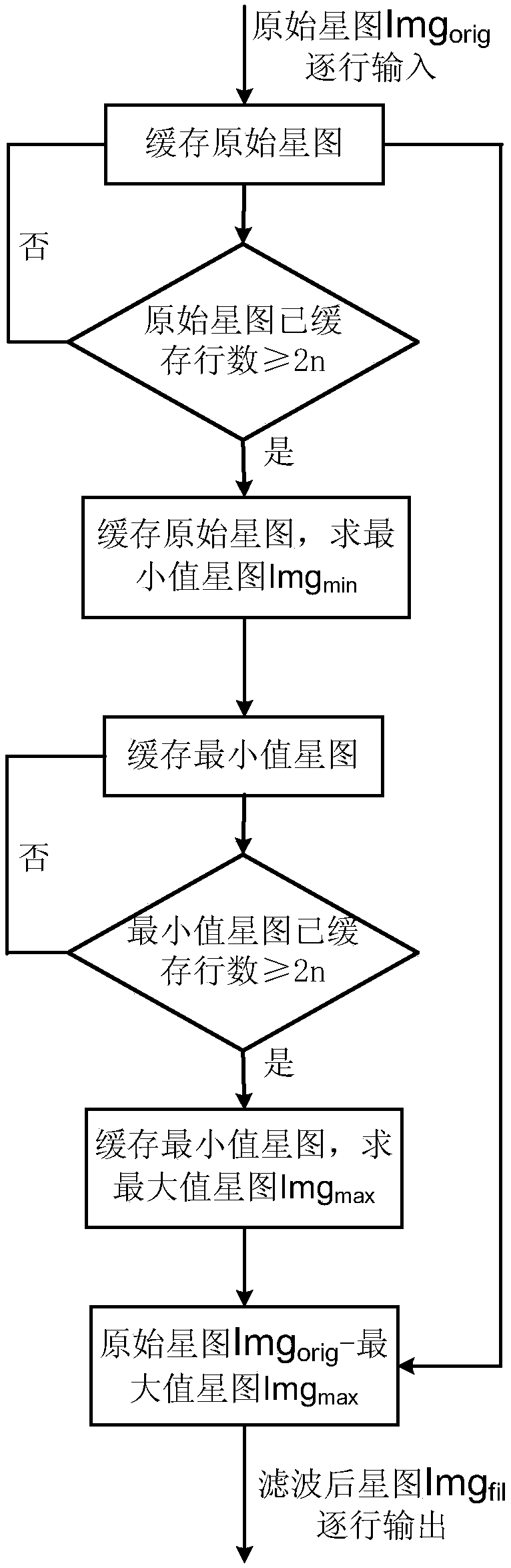

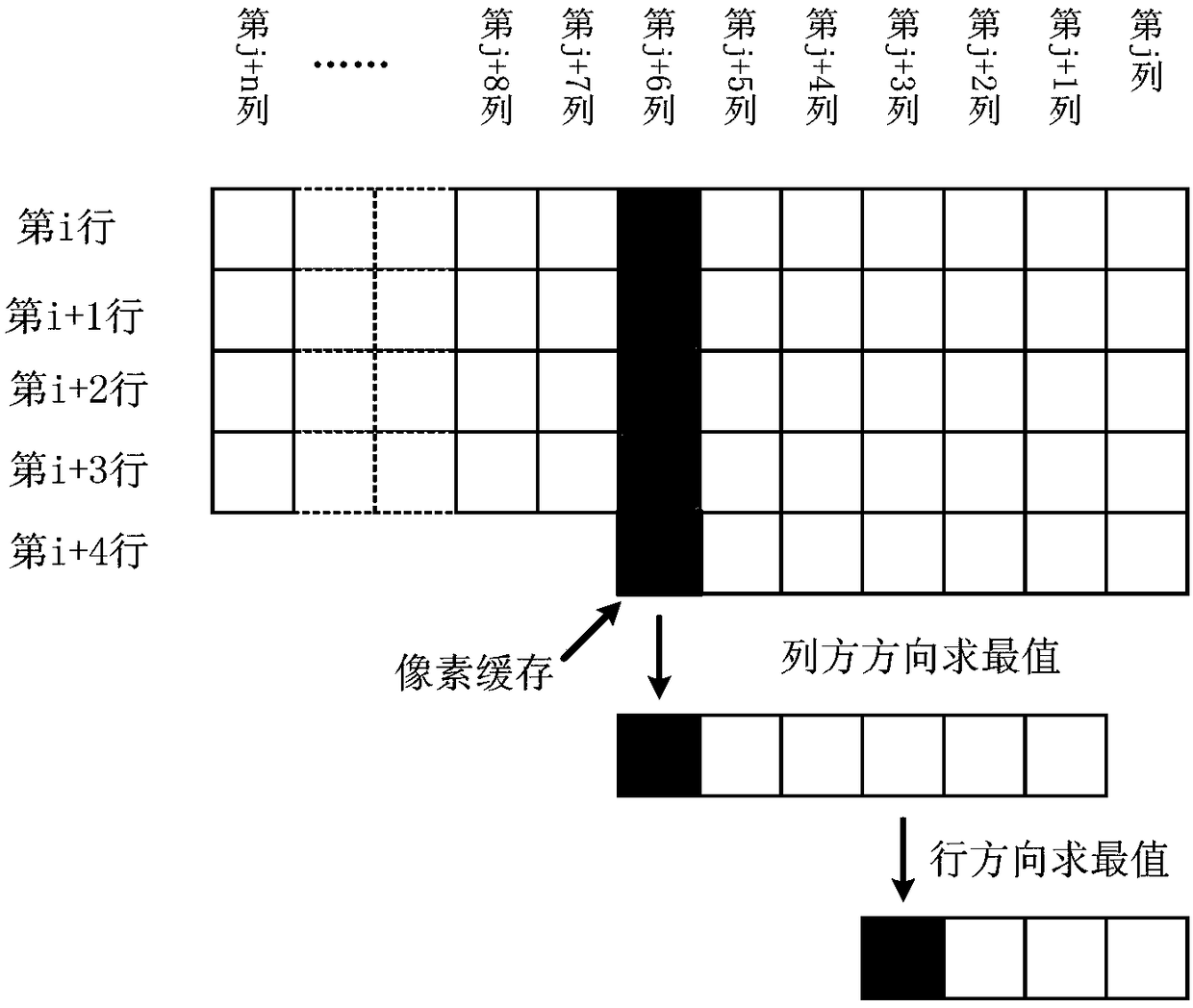

Real-time star atlas background filtering method in daytime environment

Active · CN108154462A · Calculation rules are simple · Increase parallelism · Image enhancement · Processor architectures/configuration · Atlas data · Method of undetermined coefficients

The invention provides a real-time star atlas background filtering method for a daytime environment. A finite number of rows (2n+1) of star atlas data is filtered in real time, taking a (2n+1) × (2n+1) structuring element as the basic unit: a minimal star atlas and a maximal star atlas are solved in sequence, and a difference is obtained to complete the filtering. The whole process is carried out in a pipelined manner. The calculation rules are simple and highly parallel, the process is implemented in hardware, and star atlas collection and filtering are carried out in one pipeline, so the speed and real-time performance are high and the method has high practical value. In particular, the extreme values of the star atlas are solved in a parallel, staged pipeline: extreme values are solved first in the column direction and then in the row direction, each over (2n+1) data values, so the calculation is highly parallel, low in computational complexity and fast.

Owner:BEIJING INST OF CONTROL ENG

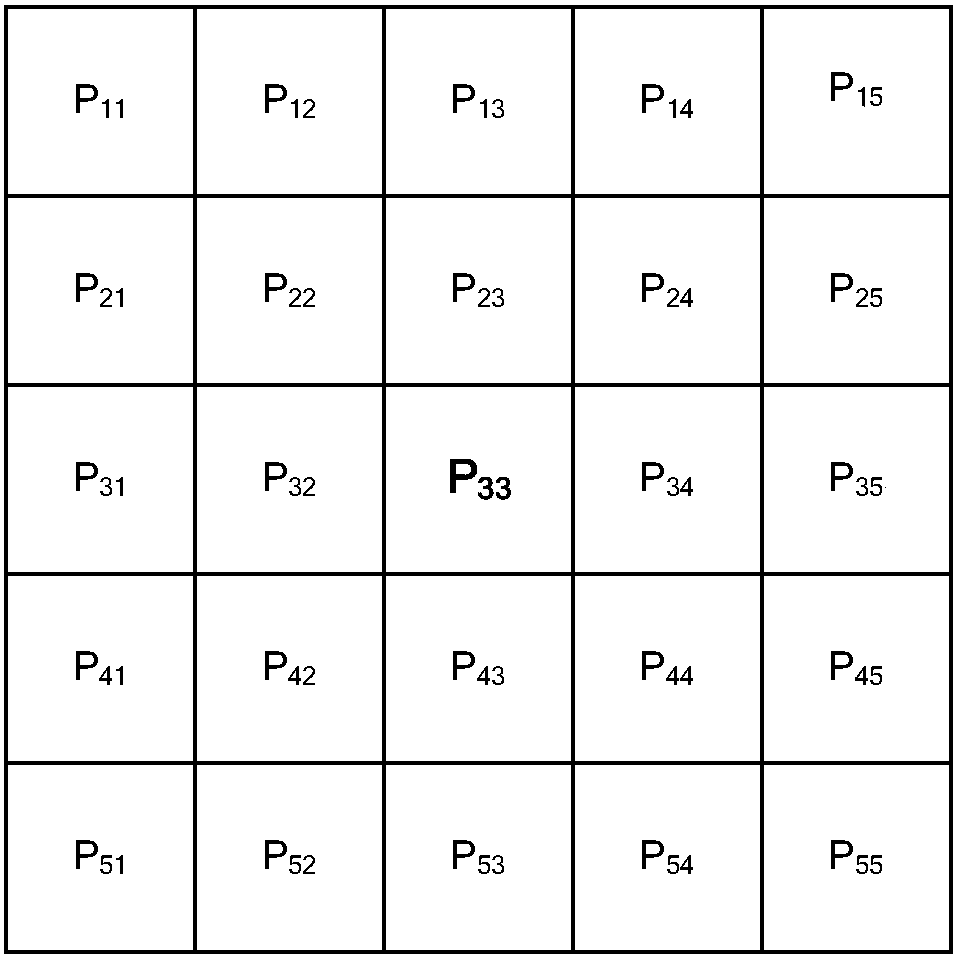

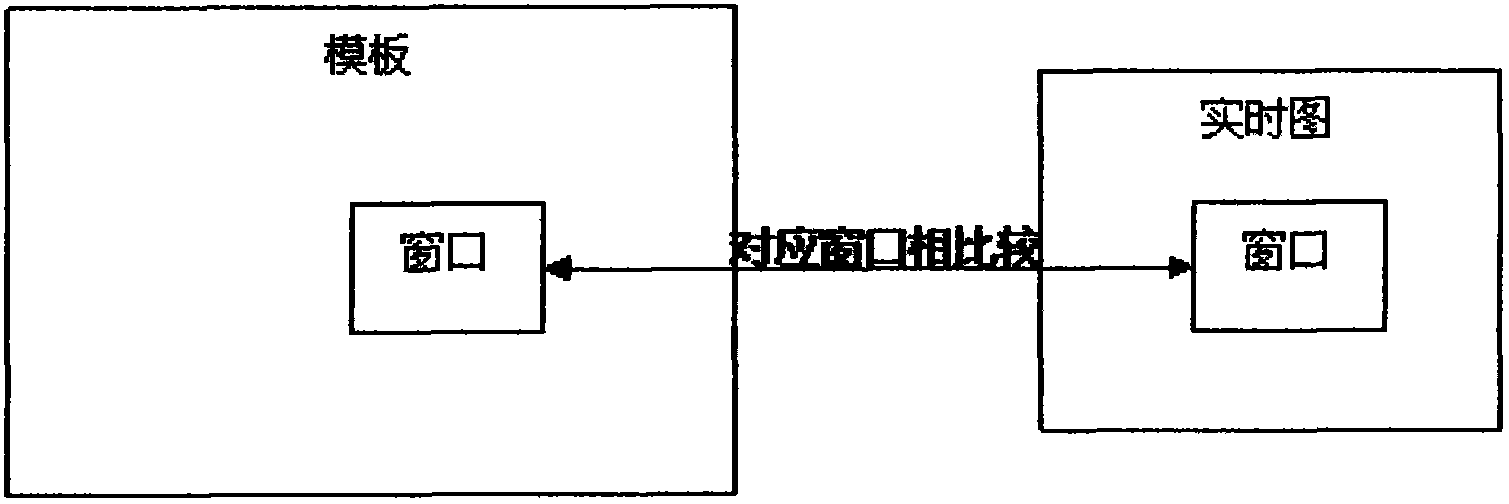

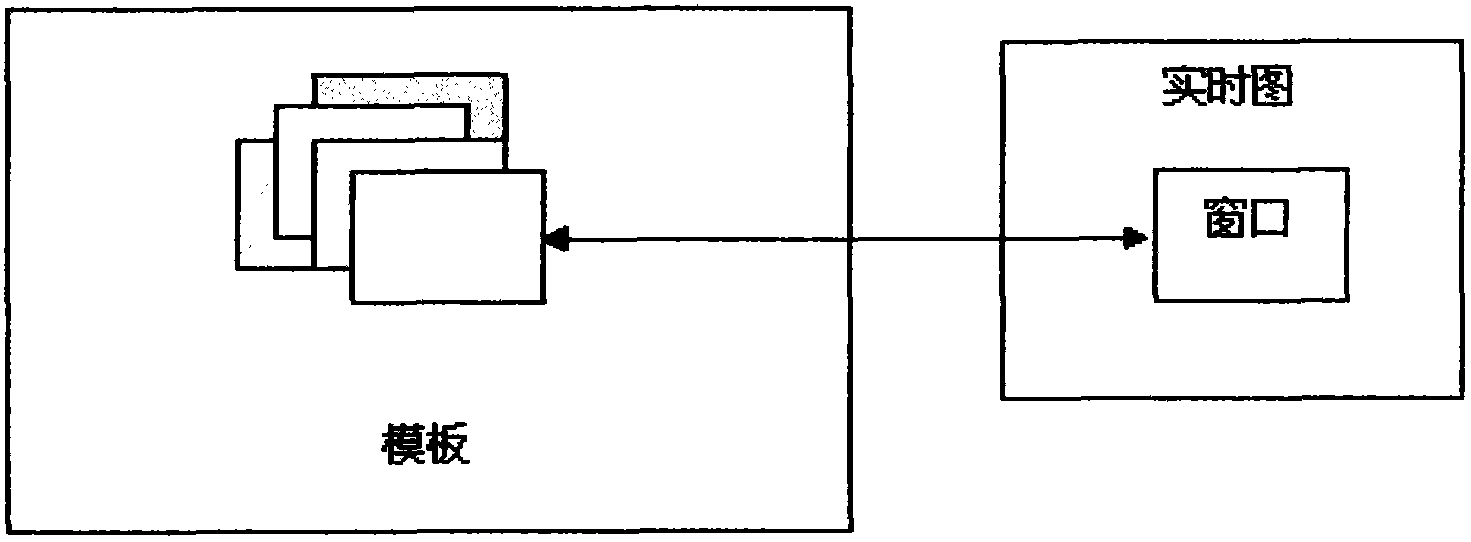

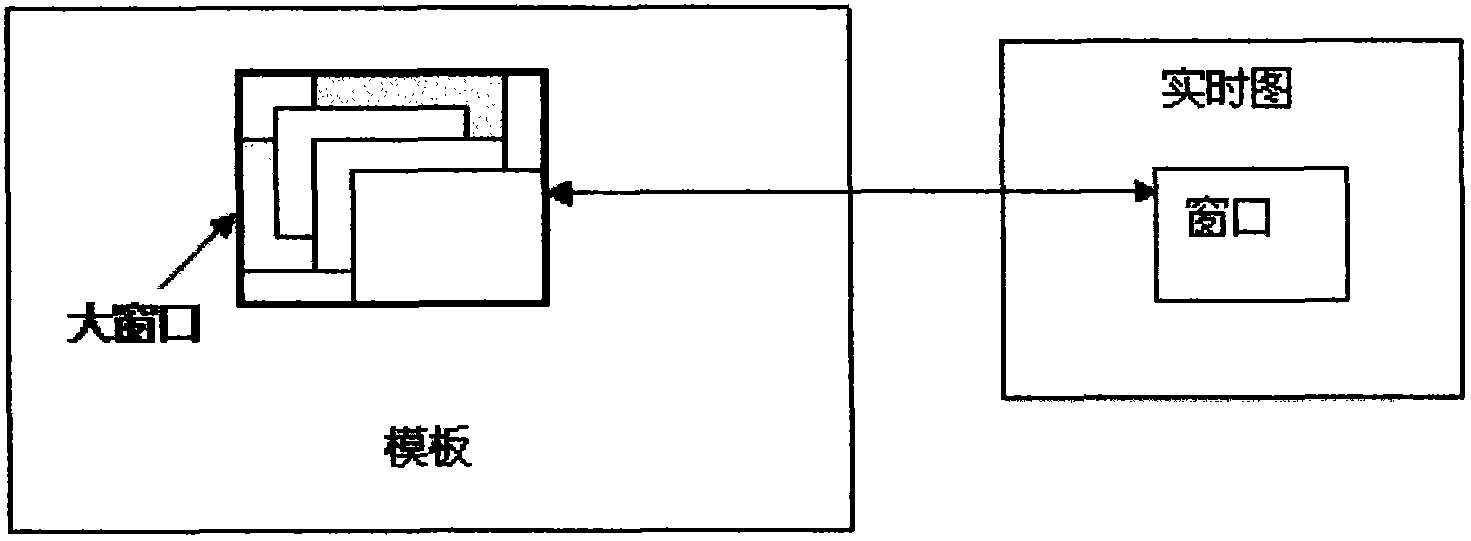

Hardware implementation method of related parallel computation of groups in image recognition

Inactive · CN102842048A · Lower requirement · Increase parallelism · Character and pattern recognition · Hardware implementations · Data path

The invention discloses a hardware implementation method of related parallel computation of groups in image recognition. The method comprises the following steps: 1) setting a large window on the template, wherein the large window includes all windows in the template; 2) transmitting the data read out over the template memory data path to all arithmetic units; 3) having each arithmetic unit judge whether the data belongs to the window that the unit is responsible for; if so, the unit adds the data to its operation, and if not, the unit ignores the data and waits for the arrival of data that does belong to its window. According to the method, the degree of parallelism of the computation is greatly improved without adding memory access paths, and the computation speed is also improved.

Owner:SUZHOU GALAXY ELECTRONICS TECH
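
The three steps above can be modelled in software as follows. The window representation (inclusive `top, left, bottom, right` bounds), the function name and the use of a running sum as each unit's operation are assumptions for illustration; in hardware the arithmetic units would evaluate the membership test concurrently.

```python
def grouped_window_sums(template, windows):
    """Stream the template once and let every unit accumulate its own window.

    template: 2D list of numbers.
    windows: list of (top, left, bottom, right) tuples with inclusive bounds,
             one per arithmetic unit (hypothetical representation).
    """
    # Step 1: the large window is the bounding box of all windows.
    top = min(w[0] for w in windows)
    left = min(w[1] for w in windows)
    bottom = max(w[2] for w in windows)
    right = max(w[3] for w in windows)

    sums = [0] * len(windows)
    # Step 2: a single read path delivers each value to all units.
    for r in range(top, bottom + 1):
        for c in range(left, right + 1):
            value = template[r][c]
            # Step 3: each unit keeps the value only if it lies in its window.
            for unit, (t, l, b, rt) in enumerate(windows):
                if t <= r <= b and l <= c <= rt:
                    sums[unit] += value
    return sums
```

Each template value is read exactly once even when windows overlap, which mirrors the abstract's point that parallelism rises without adding memory access paths.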

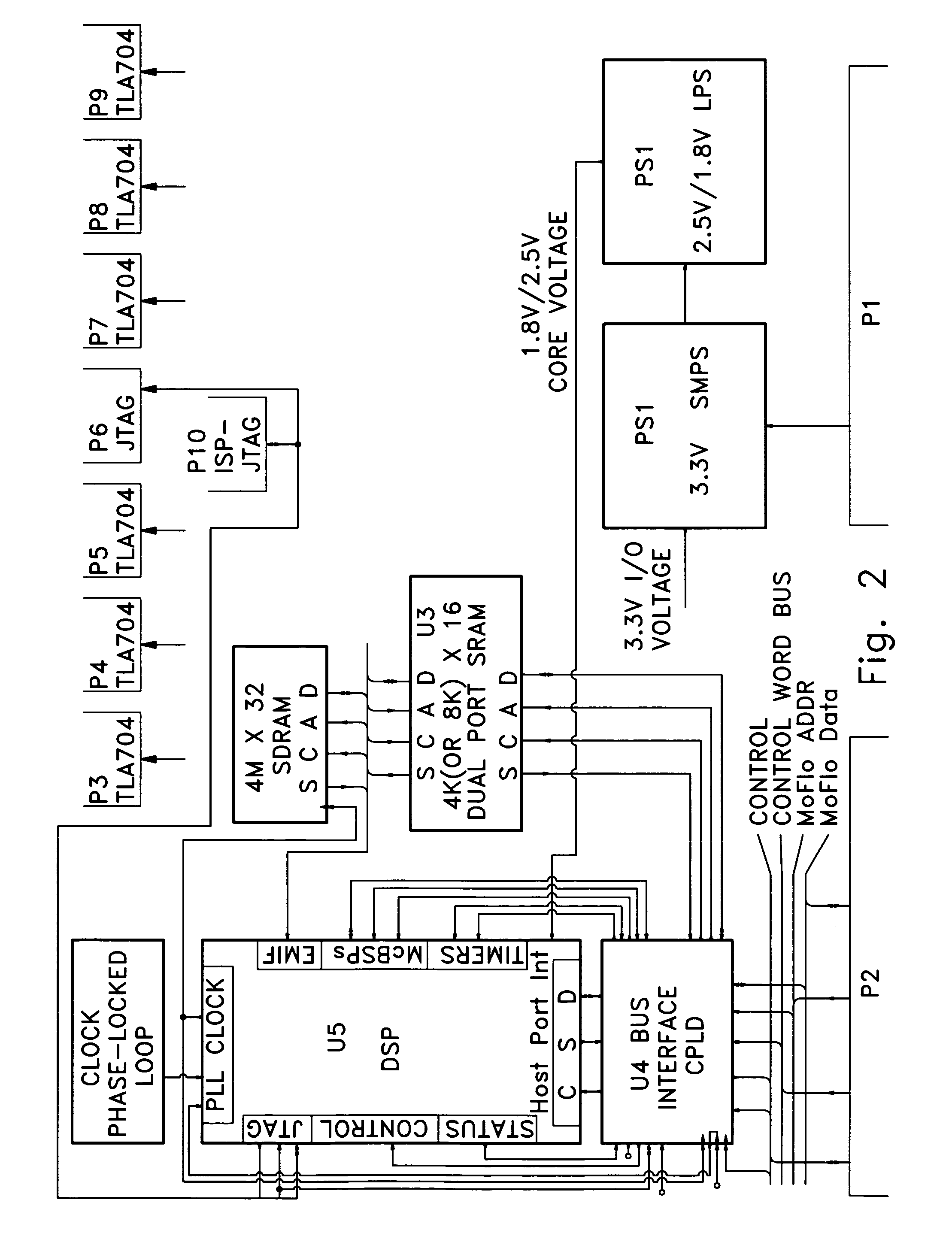

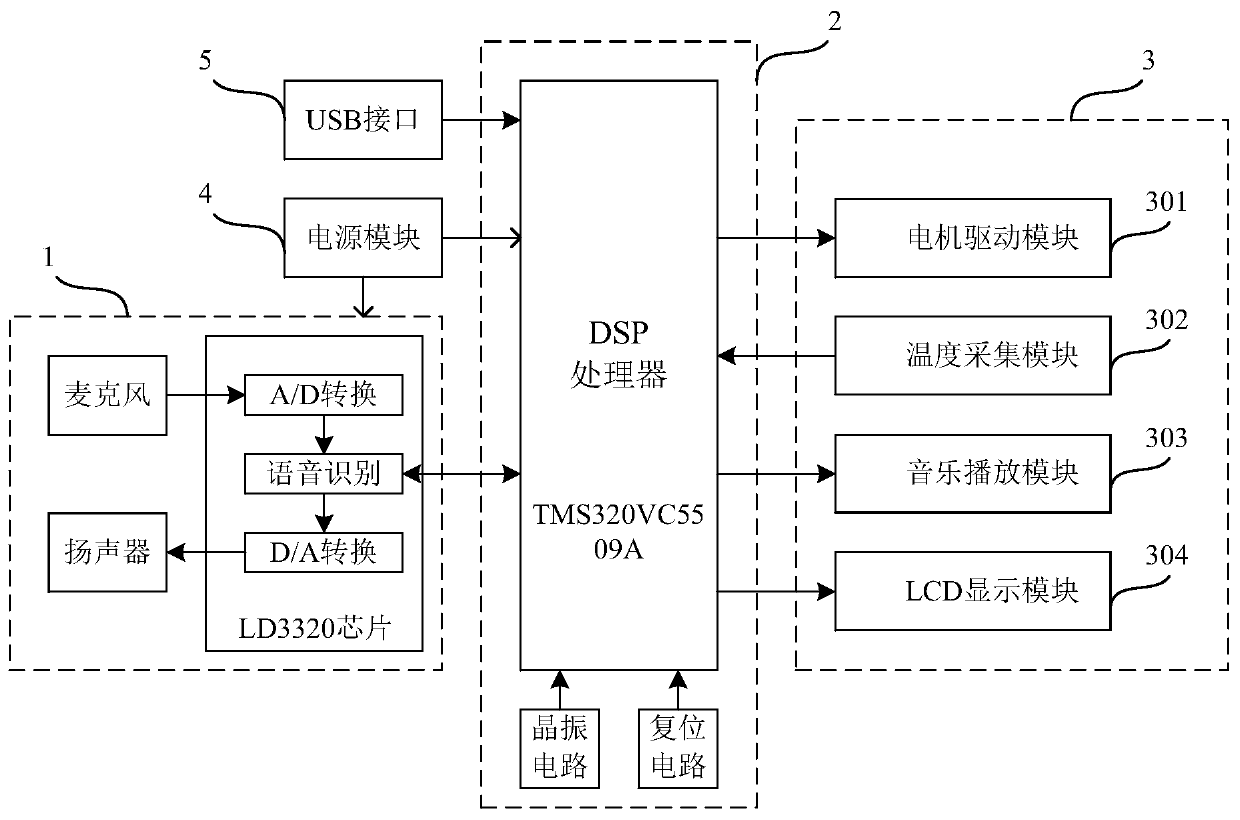

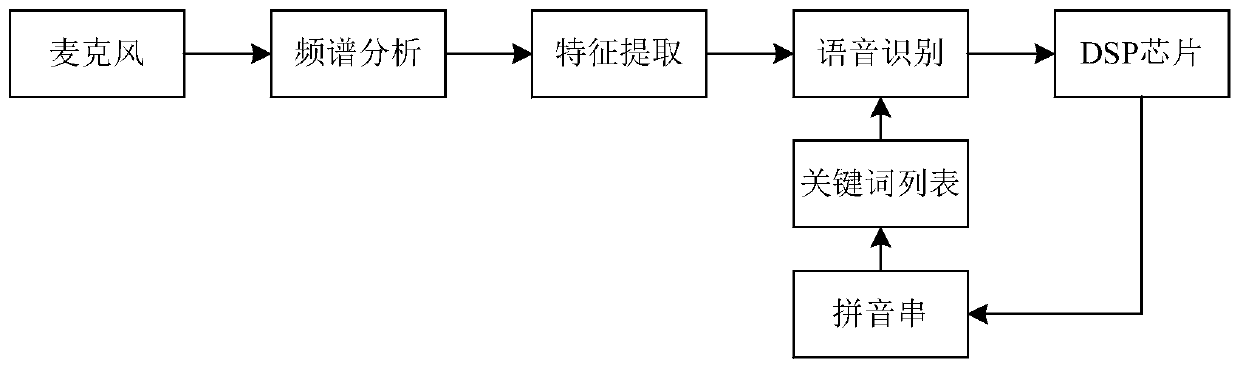

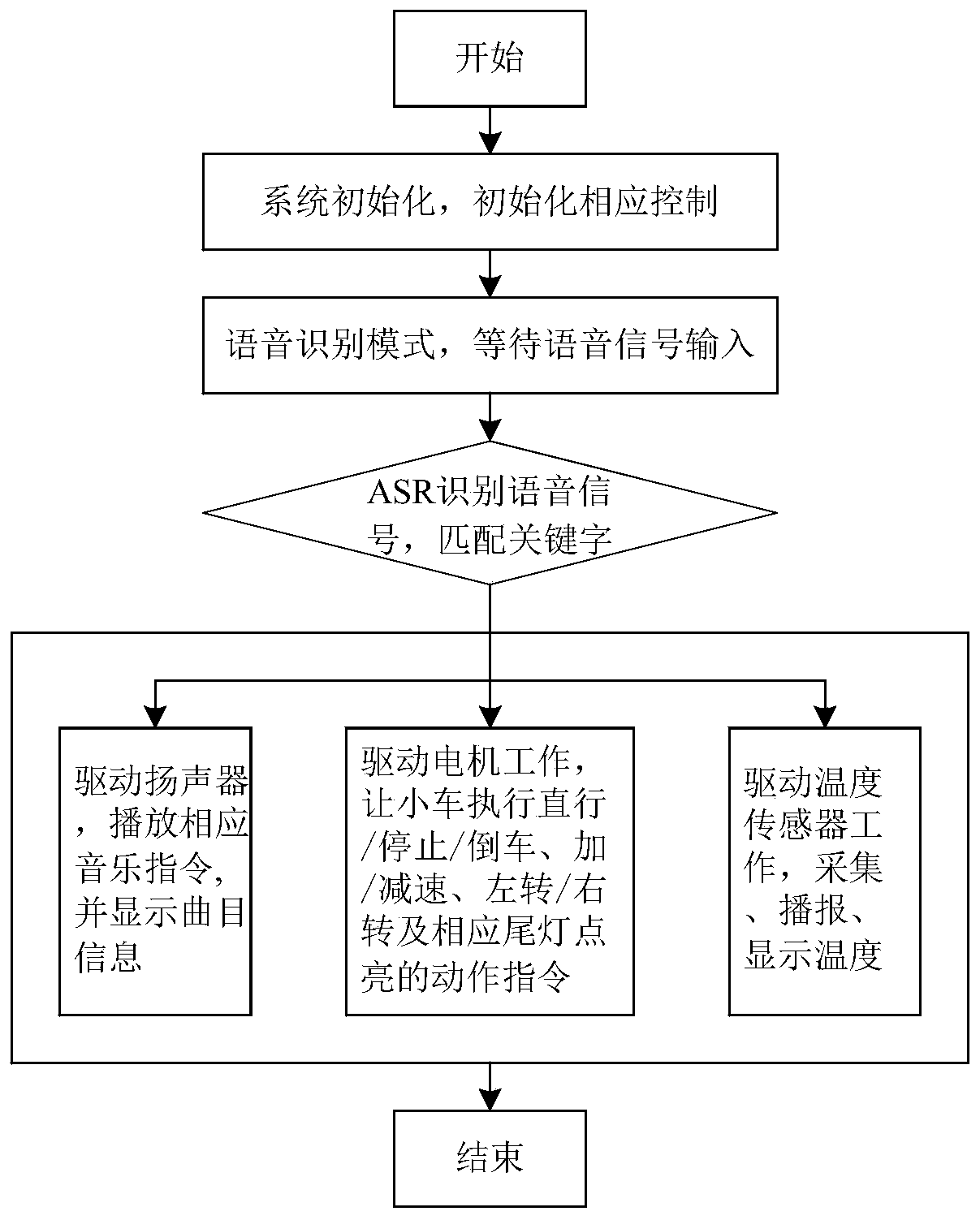

Voice control intelligent toy car system based on TMS320VC5509A

Pending · CN111001167A · Increase parallelism · Reduce energy dissipation · Speech recognition · Remote-control toys · Speech control · DSP processor

The invention relates to the field of voice signal processing and intelligent control, in particular to a voice control intelligent toy car system based on the TMS320VC5509A. The system is distinguished as follows. It comprises a voice recognition module, which is an LD3320 chip with preset template keywords. The voice recognition module performs spectral analysis on input voice signals through ASR voice recognition technology to extract parameters representing the voice features, compares the parameters with the template keywords, and outputs the keyword with the highest matching degree as the voice recognition signal. The system also comprises a DSP processor, which is a TMS320VC5509A DSP chip whose input end is connected to the voice recognition module. The DSP processor converts the voice recognition signal output by the voice recognition module into a control signal and sends the control signal to a control object of the toy car so as to execute the corresponding action command. The system overcomes the defects of the man-machine interaction of existing toy cars, achieves voice control, and offers high performance with low power consumption.

Owner:NANJING COLLEGE OF INFORMATION TECH

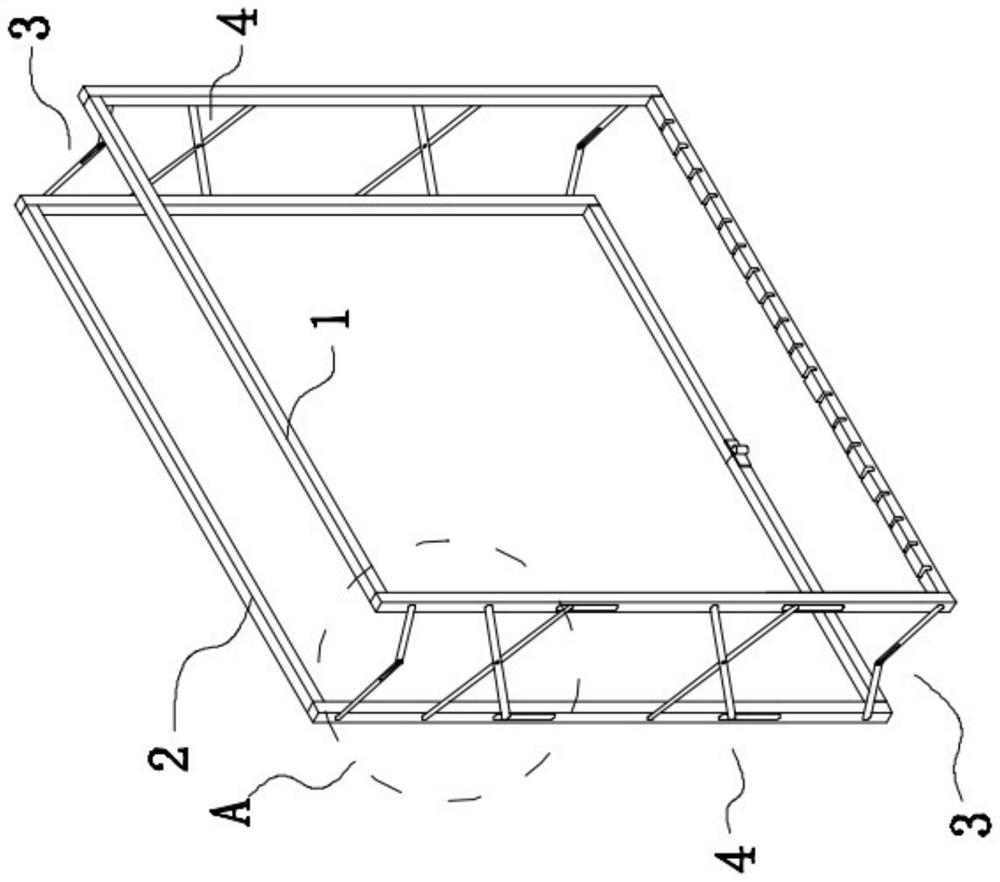

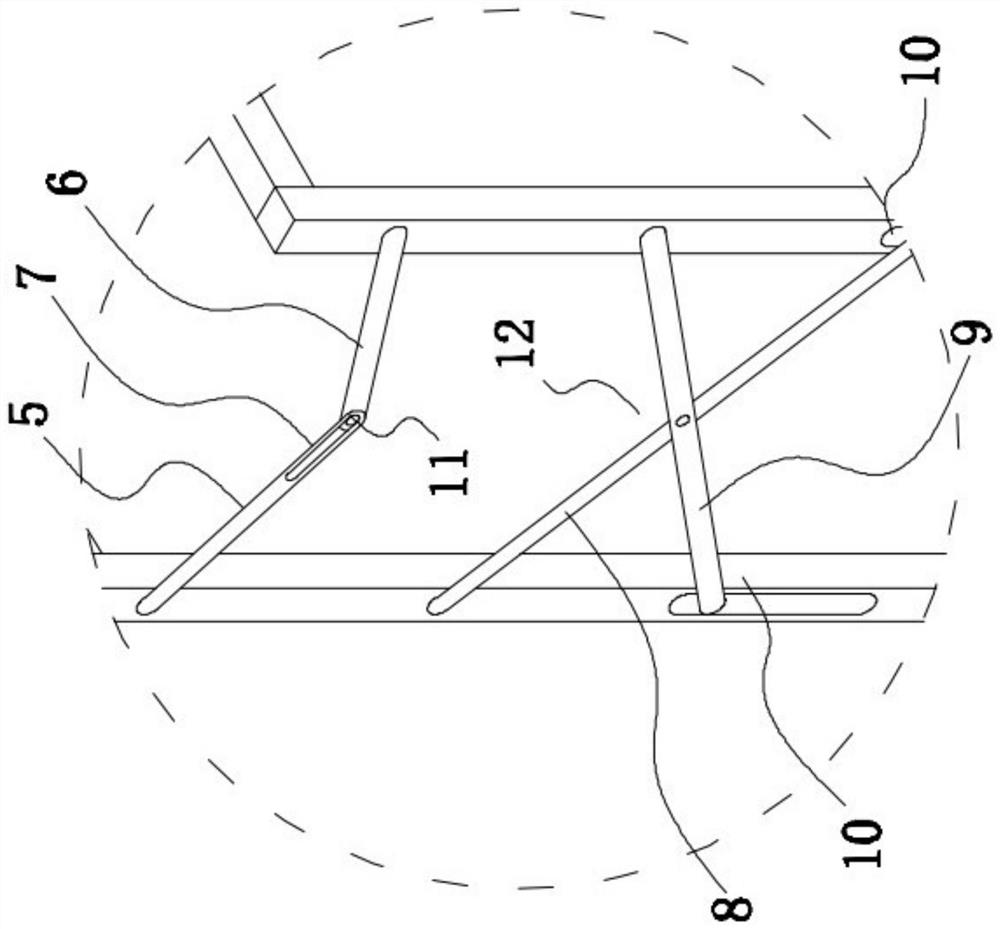

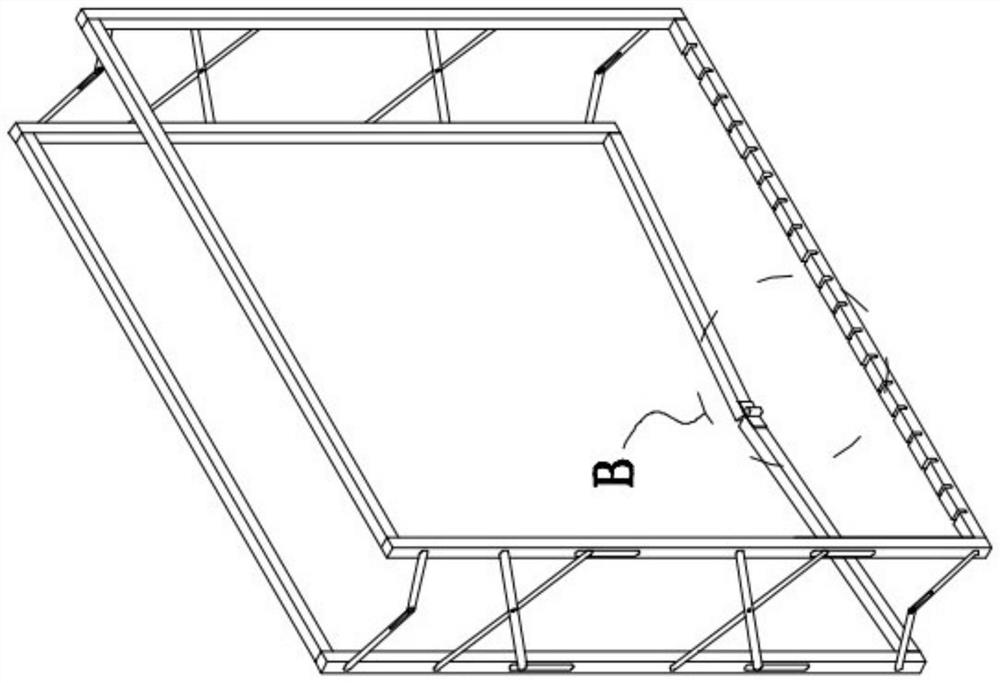

Protective window with escape function

Pending · CN113445887A · Fast and reliable response · Play a role in unlocking · Shutters/movable grilles · Building rescue · Engineering · Structural engineering

Owner:昆山麦途文化传媒有限公司

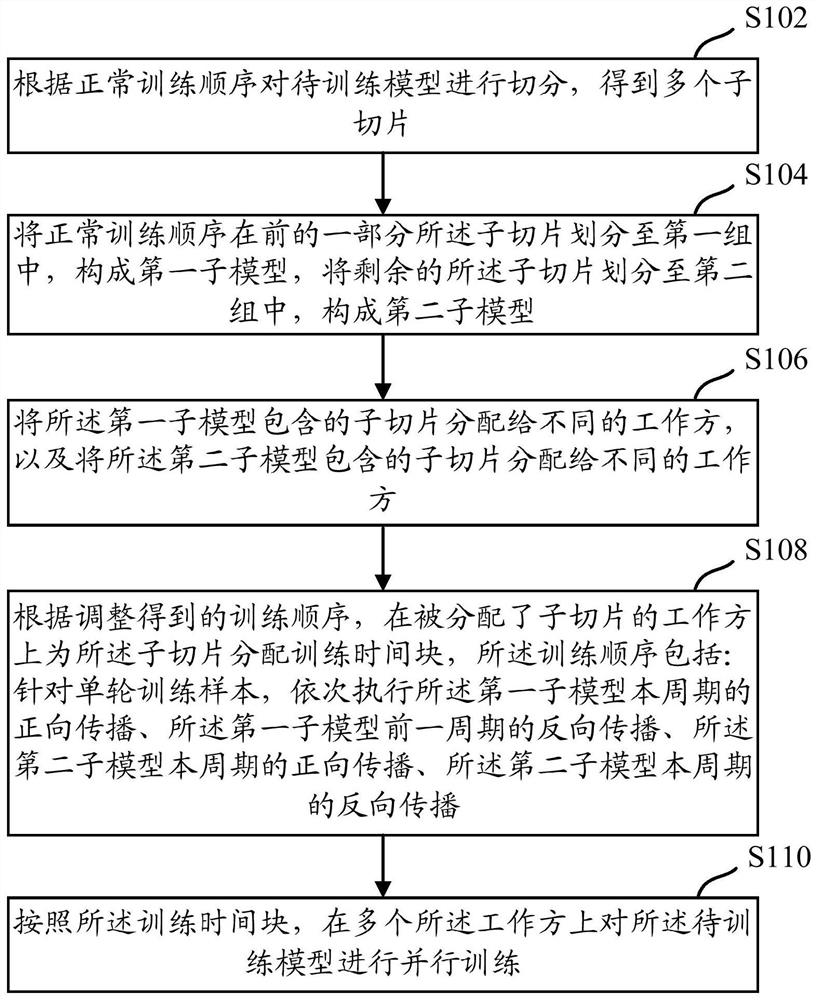

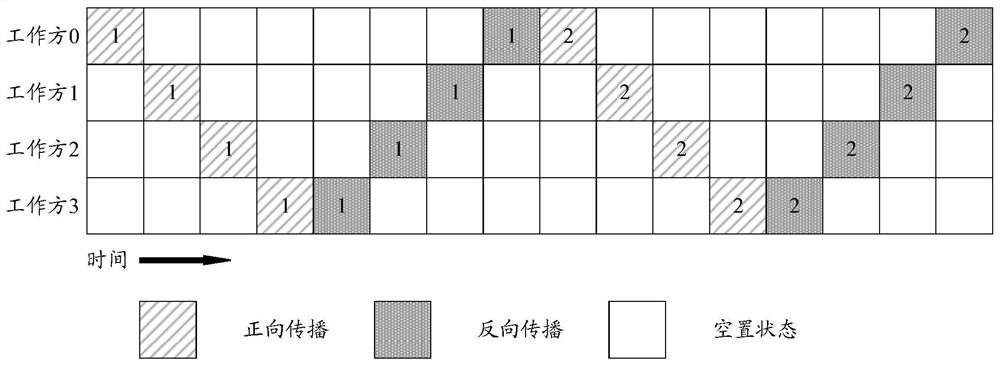

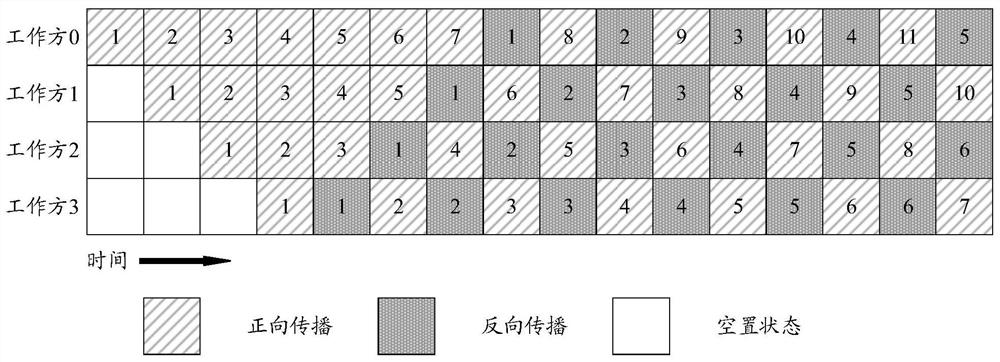

Model training method, device and equipment based on pipeline parallelism

Active · CN113177632A · Guaranteed training effect · Increase parallelism · Neural architectures · Neural learning methods · Forward propagation · Algorithm

The embodiment of the invention discloses a model training method based on pipeline parallelism. The model training method comprises the steps of segmenting a to-be-trained model according to a normal training sequence to obtain a plurality of sub-slices; dividing a part of sub-slices with the normal training sequence in the front into a first group to form a first sub-model, and dividing the remaining sub-slices into a second group to form a second sub-model; distributing the sub-slices contained in the first sub-model to different working parties, and distributing the sub-slices contained in the second sub-model to different working parties; according to the training sequence obtained through adjustment, distributing training time blocks to the sub-slices on the working party to which the sub-slices are distributed, wherein the training sequence comprises the following steps: for a single-round training sample, sequentially executing the forward propagation of the first sub-model in the current period, the backward propagation of the first sub-model in the previous period, the forward propagation of the second sub-model in the current period and the backward propagation of the second sub-model in the current period; and according to the training time block, carrying out parallel training on the to-be-trained model on the plurality of working parties.

Owner:ALIPAY (HANGZHOU) INFORMATION TECH CO LTD

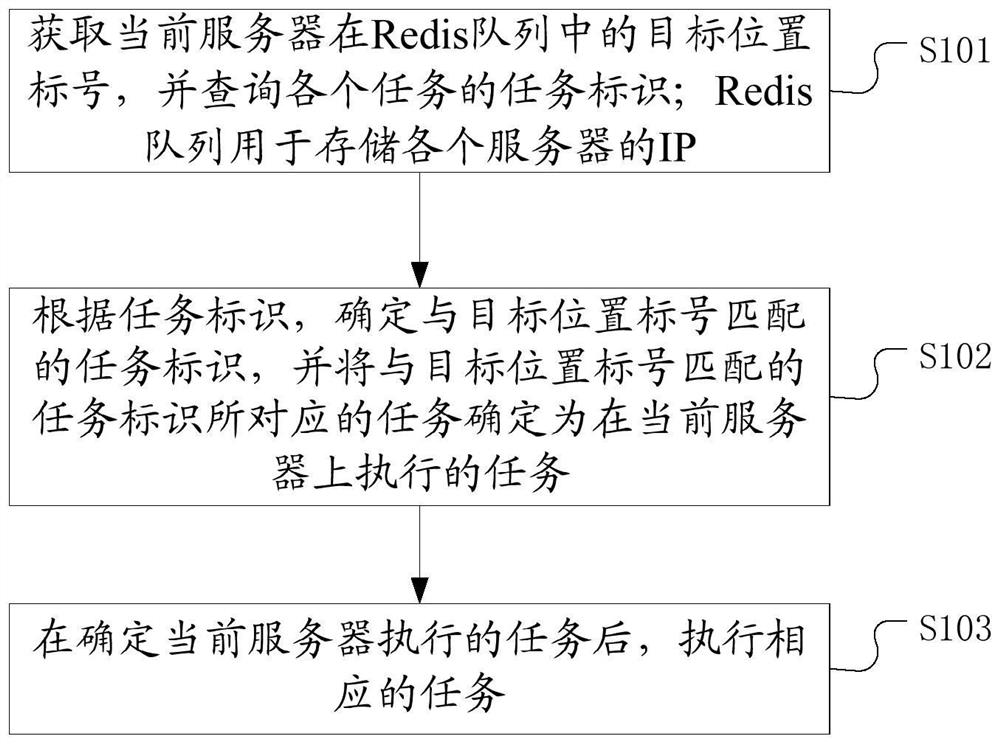

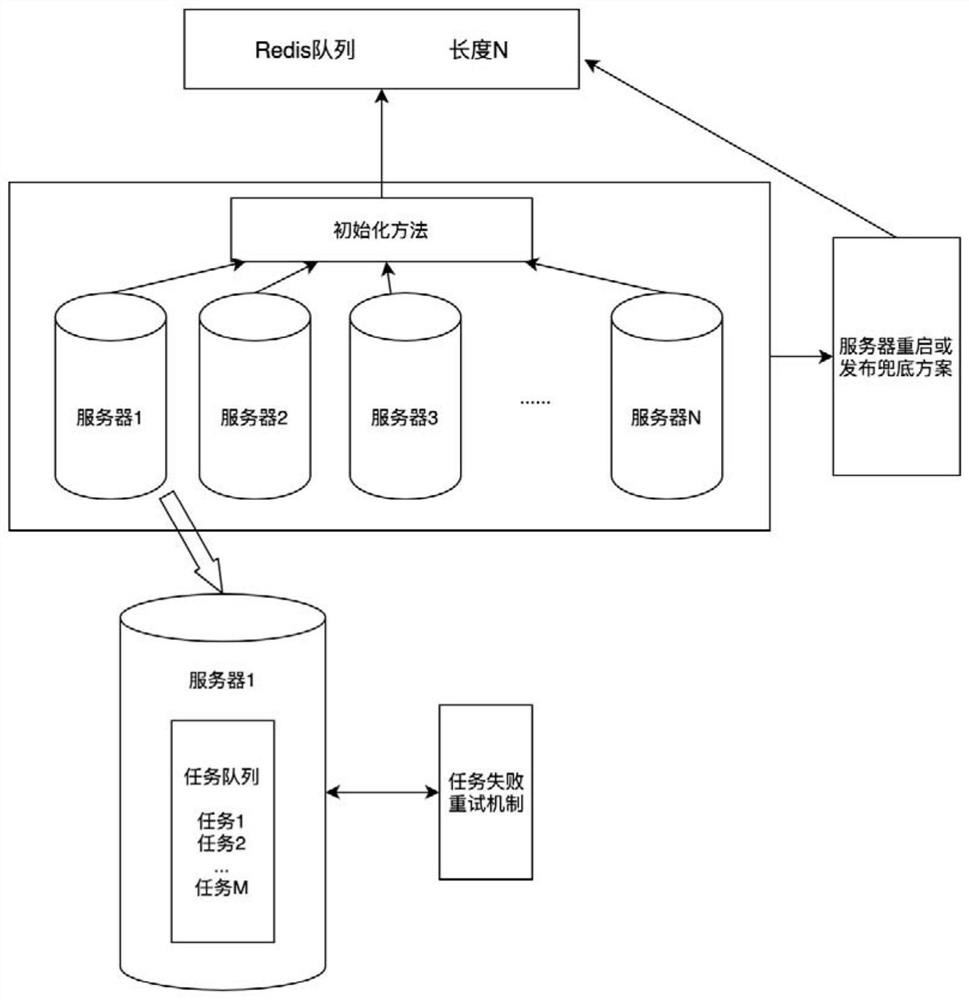

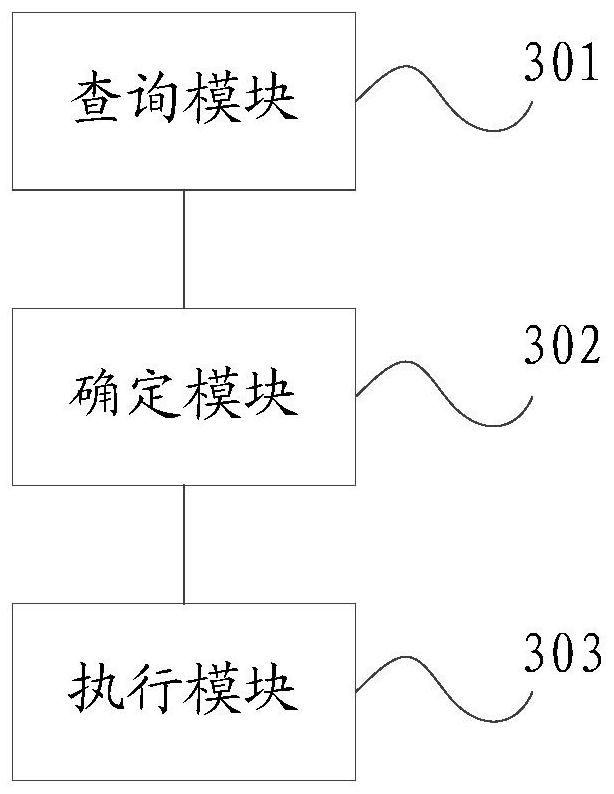

Task execution method and device, electronic equipment and storage medium

Pending · CN113127172A · Increase parallelism · Improve execution efficiency · Program initiation/switching · Resource allocation · Business logic · Server

The invention discloses a task execution method which comprises the following steps: collecting a target position label of a current server in a Redis queue, and querying a task identifier of each task; the Redis queue is used for storing the IP of each server; determining a task identifier matched with the target position label according to the task identifier, and determining a task corresponding to the task identifier matched with the target position label as a task executed on the current server; and after the task executed by the current server is determined, executing the corresponding task. According to the method, the service logic that some tasks need to be executed in the same server can be ensured, and the parallel tasks can be executed in multiple servers at the same time, so that the service logic is not influenced, and the task execution efficiency can be improved. The invention also provides a task execution device, electronic equipment and a computer readable storage medium, which have the above beneficial effects.

Owner:上海销氪信息科技有限公司
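A minimal sketch of the matching step described in the abstract above. A plain Python list stands in for the Redis queue of server IPs. The abstract does not specify the matching rule, so the modulo rule here (task identifier modulo the number of servers) is an assumption, and the function names are hypothetical.

```python
from typing import List


def position_label(server_ips: List[str], current_ip: str) -> int:
    """Return the position of the current server in the queue of server IPs."""
    return server_ips.index(current_ip)


def tasks_for_server(task_ids: List[int], server_ips: List[str], current_ip: str) -> List[int]:
    """Select the tasks whose identifier matches this server's position label.

    Assumed rule: a task matches when task_id % number_of_servers == label.
    """
    label = position_label(server_ips, current_ip)
    server_count = len(server_ips)
    return [task_id for task_id in task_ids if task_id % server_count == label]
```

Under this rule a given task identifier always maps to the same server for a fixed queue, while tasks with different labels run on different servers at the same time.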

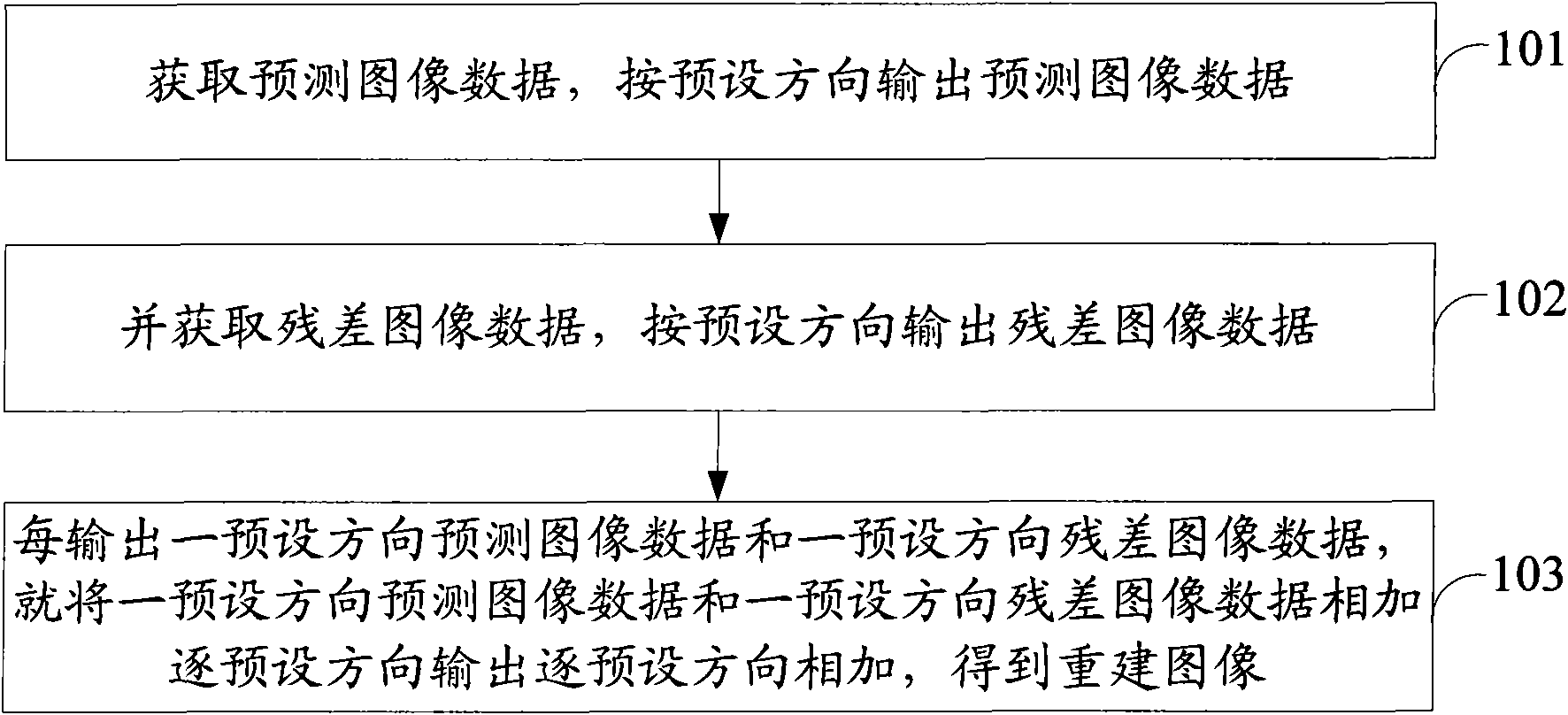

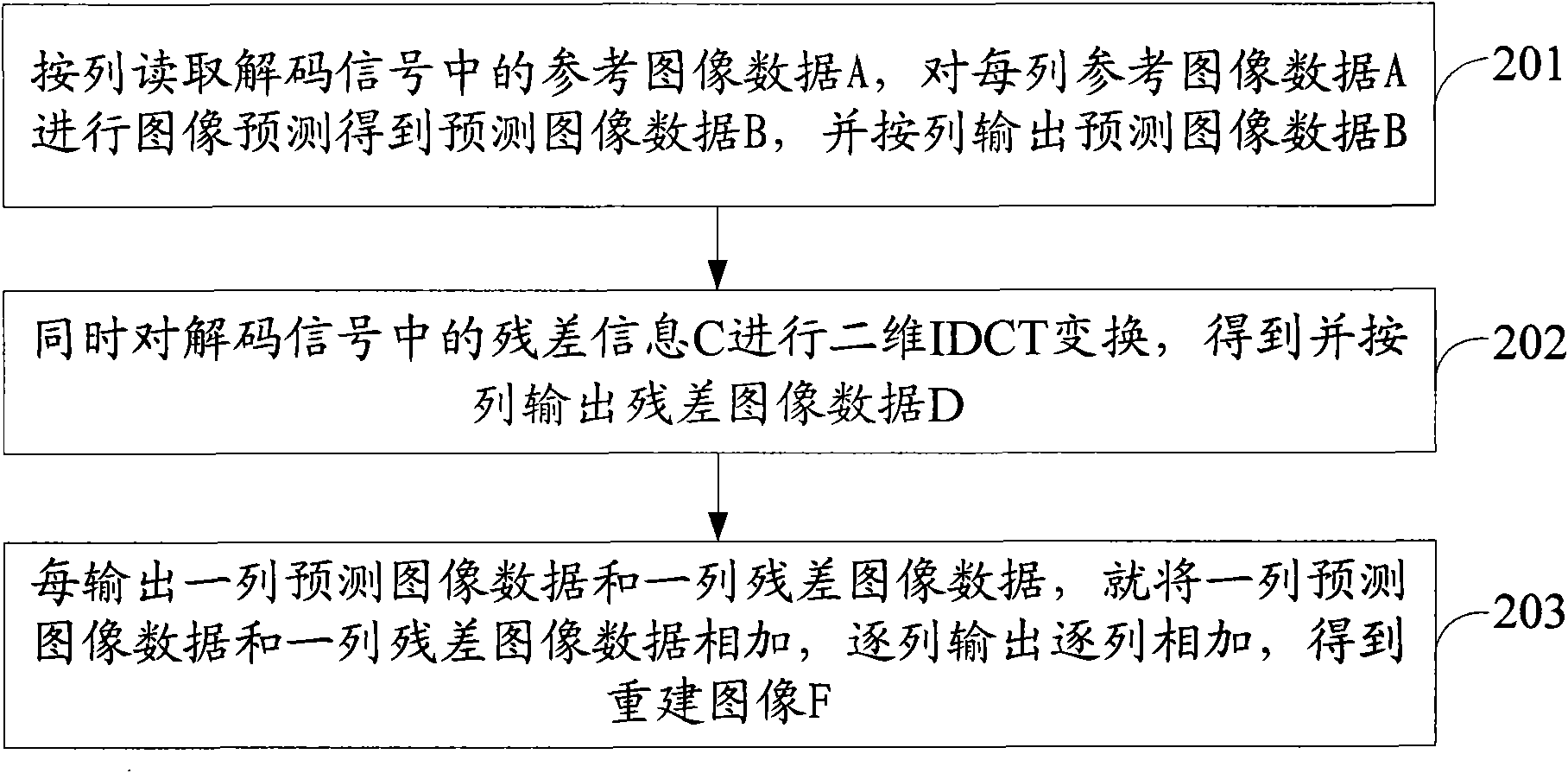

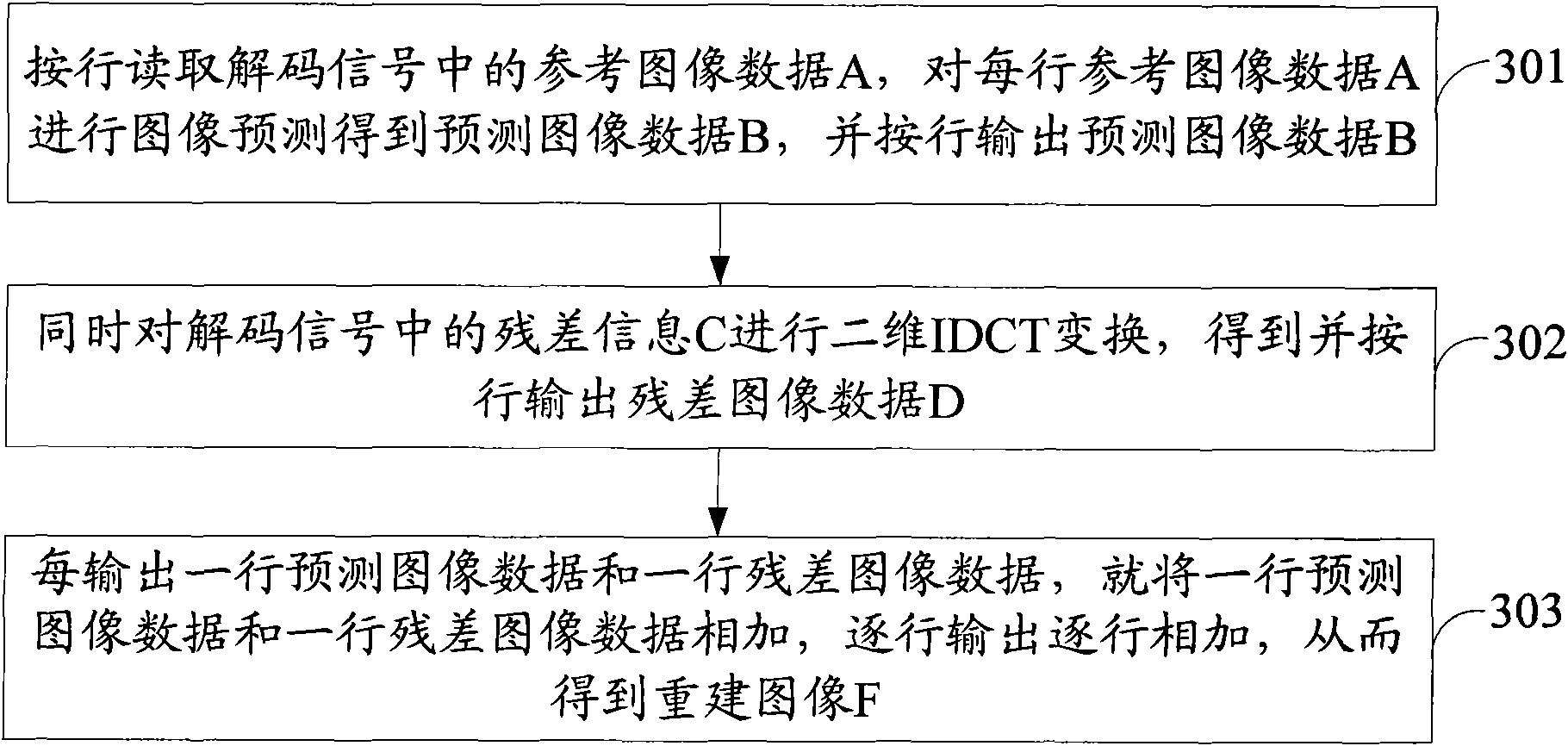

Method and device for rebuilding image

Inactive · CN101867806A · Increase parallelism · Shorten the time · Television systems · Digital video signal modification · Image acquisition · Imaging data

The invention discloses a method and a device for rebuilding an image, and belongs to the field of digital image processing. The method comprises the following steps: acquiring predicted image data and outputting it according to a preset direction; acquiring residual image data and outputting it according to the preset direction; and, while the predicted image data and the residual image data in each preset direction are being output, adding the predicted image data in each preset direction to the residual image data in that direction to acquire a rebuilt image. The device comprises a predicted image data output module, a residual image data output module and a rebuilt image acquisition module. Because both kinds of data are output according to the preset direction and are added as they are output, the degree of parallelism of image rebuilding is improved, the image rebuilding time is reduced and the image rebuilding speed is improved.

Owner:TELEGENE TECH
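The addition step in the abstract above can be illustrated with a short sketch. It assumes that the preset direction is row by row and that samples are clipped to an 8-bit range. Neither detail is stated in the abstract, and the names `rows` and `rebuild` are hypothetical.

```python
from typing import Iterator, List

Row = List[int]


def rows(image: List[Row]) -> Iterator[Row]:
    """Output image data in the preset direction (assumed here: one row at a time)."""
    for row in image:
        yield row


def rebuild(predicted: List[Row], residual: List[Row], max_value: int = 255) -> List[Row]:
    """Add predicted and residual data row by row as both streams are output."""
    rebuilt: List[Row] = []
    for p_row, r_row in zip(rows(predicted), rows(residual)):
        # Each row is independent of the others, so rows could be processed in parallel.
        rebuilt.append([min(max(p + r, 0), max_value) for p, r in zip(p_row, r_row)])
    return rebuilt
```

For example, `rebuild([[100, 250], [0, 10]], [[5, 10], [-3, 0]])` returns `[[105, 255], [0, 10]]` under the assumed clipping.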

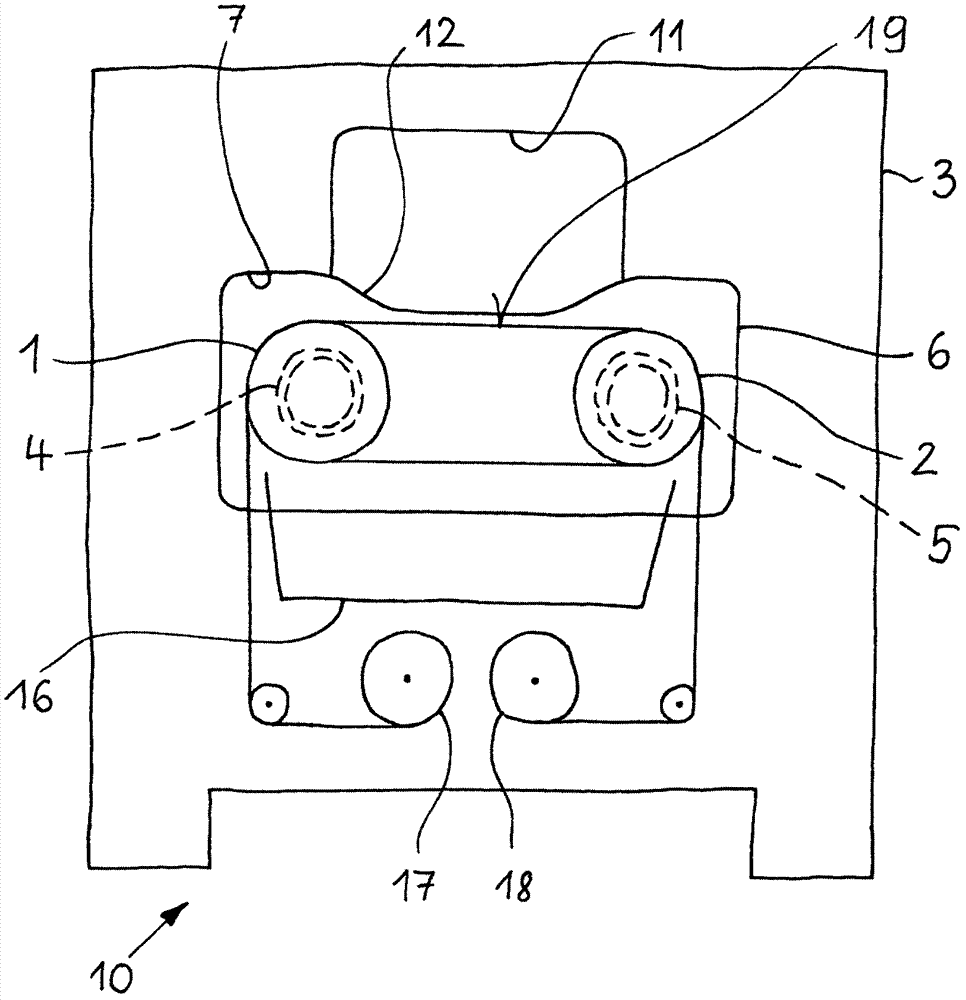

Wire saw

Active · CN107984024A · Better and more even absorption capacity · Reduce weight · Metal sawing devices · Young's modulus · Wire saw

The invention relates to a wire saw (10) for cutting material blocks, the wire saw comprising a first wire guide roller (1) and a second wire guide roller (2) for supporting a cutting field (19) formed from cutting wires; and a frame (3) supporting the wire guide rollers (1, 2) and made of a first material, wherein the wire guide rollers (1, 2) are rotatably mounted to the frame (3) via bearings (4, 5). The invention is characterized in that the wire saw (10) comprises at least one common bearing block (6), in which a first bearing (4) supporting the first wire guide roller (1) and a second bearing (5) supporting the second wire guide roller (2) are accommodated, wherein the at least one common bearing block (6) is made of a second material and is mounted to the frame (3), and wherein the Young's modulus of the second material is higher, preferably at least 3 times higher, than the Young's modulus of the first material.

Owner:PRECISION SURFACING SOLUTIONS GMBH

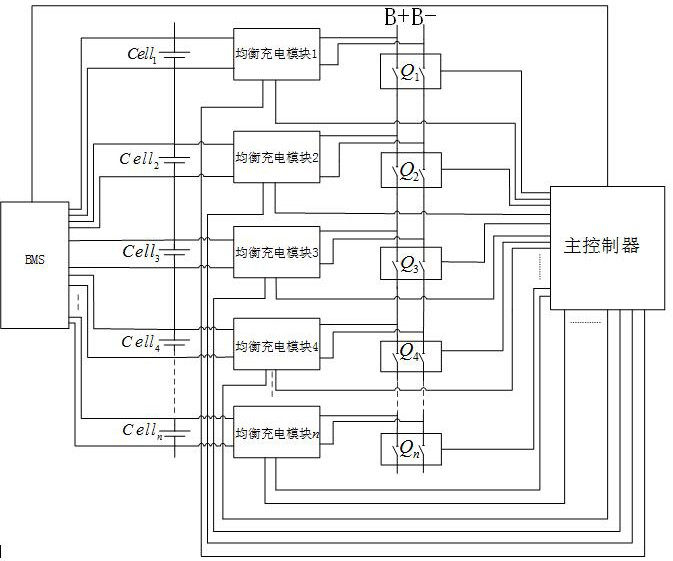

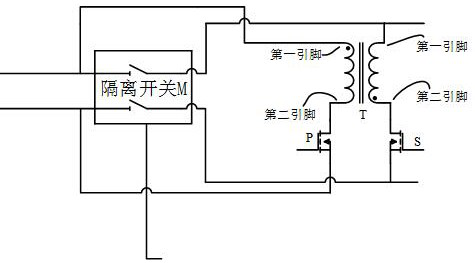

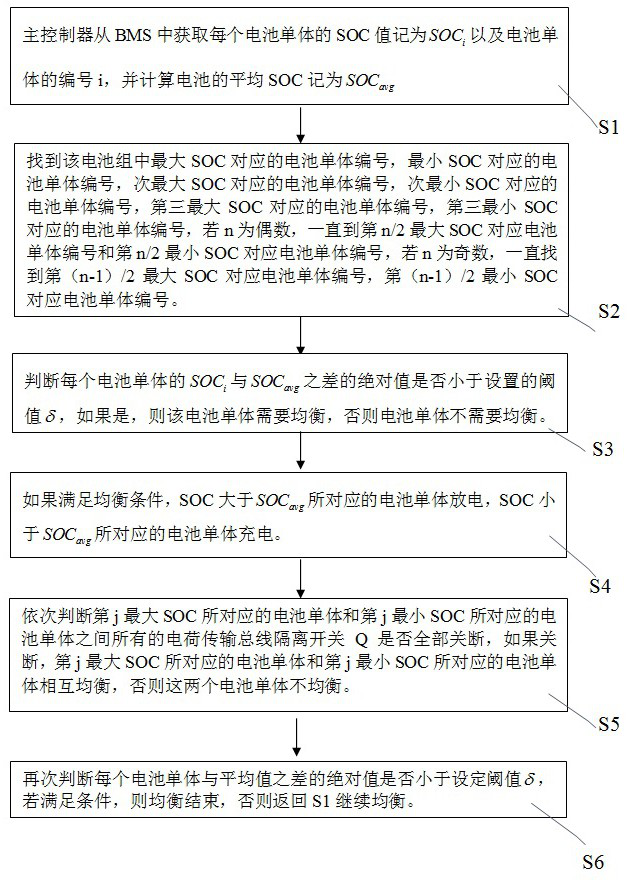

Active equalization charging device and method of power battery

Active · CN112644335A · Balanced Parallel Rate · Shorten charge and discharge cycle · Charge equalisation circuit · Electric power · Power battery · Control theory

The invention provides an active equalization charging device and method for a power battery, and aims to solve the problem that an active equalization device cannot perform bidirectional equalization charging, grouped equalization charging, and equalization charging between both adjacent and non-adjacent batteries at the same time. The device comprises a main controller, a battery management system (BMS), n equalization charging modules, n battery cells, n transmission bus isolation switches, and two charge transmission buses B+ and B-. The device realizes grouped parallel equalization of battery cells; bidirectional, adjacent and non-adjacent charge transmission; and leap-type energy transmission, without any equalization overlap problem. The equalization effect is good, and the circuit is easy to expand and to implement. In addition, direct non-adjacent charge transfer maintains the service life of the intermediate battery by reducing its charge-discharge period and minimizing the loss caused by the number of charge transfer steps, and the energy transfer efficiency of the equalization process is also improved.

Owner:HANGZHOU DIANZI UNIV


© 2025 PatSnap. All rights reserved.