Multi-task named entity recognition method combining text classification

A named entity recognition technique that incorporates text classification. It is applied in fields such as neural learning methods, character and pattern recognition, and instruments, and it addresses problems such as poorly performing neural network models.

Examples

Embodiment

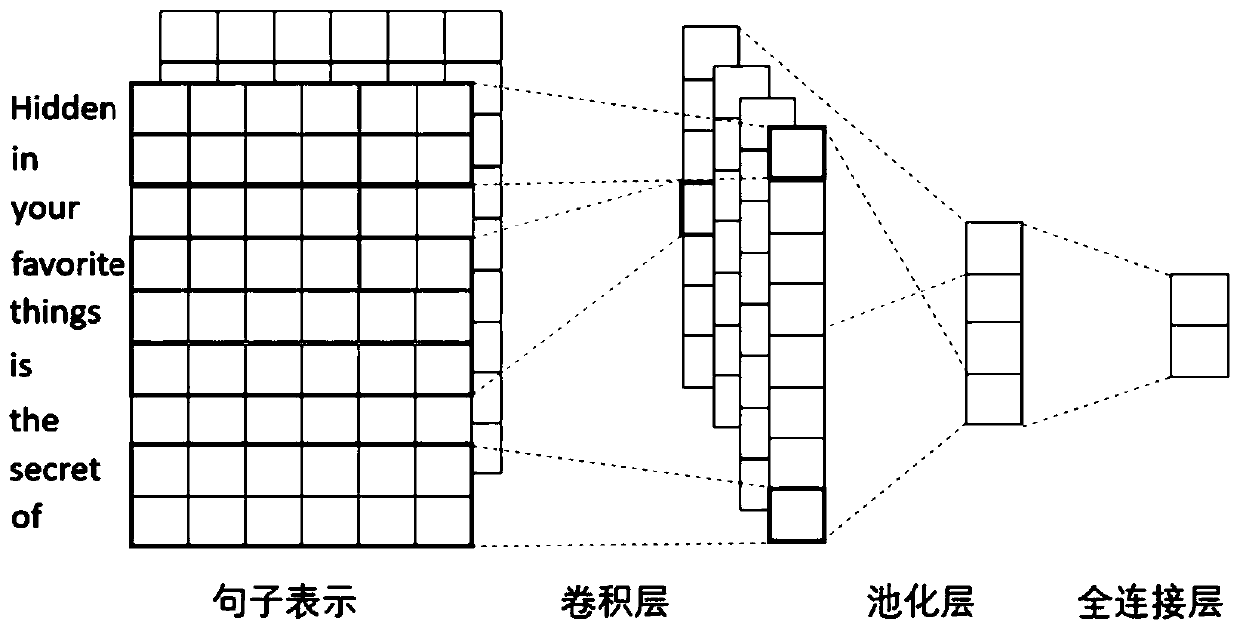

[0140] Taking three public biomedical data sets of the cell component group (BioNLP13CG, BioNLP13PC and CRAFT) as examples, the above method is applied to each data set for named entity recognition. The specific parameters and methods of each step are as follows. Training the text classifier:

[0141] 1. Each word in the input sentence is converted into a word vector with a dimension of 128 through the word embedding module. A sentence of length n can be expressed as $x_{1:n} = [x_1; x_2; \ldots; x_n]$;

[0142] 2. Convolution kernels of three sizes (3, 4, and 5) are used, with 100 kernels of each size, and the feature constructed from a sentence of length n is denoted as c;

[0143] 3. In the pooling layer, the maximum value of the feature is selected

[0144] 4. All the features are concatenated and fed into a fully connected network, and the Softmax function is used for classification, which yields the text classifier. When training the text classifier, the batch size is 64, t...
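Steps 1 to 4 can be illustrated with a minimal forward-pass sketch in plain Python. It is not the patented implementation: the weights are random and untrained, and training (including the batch size of 64) is not covered. The word-vector dimension, kernel sizes, and kernel count come from the text above, while the ReLU activation, the vocabulary size, the number of classes, and all function names are assumptions made for illustration only.

```python
import math
import random

EMBED_DIM = 128           # word-vector dimension (step 1)
KERNEL_SIZES = (3, 4, 5)  # convolution kernel sizes (step 2)
NUM_KERNELS = 100         # kernels per size (step 2)
NUM_CLASSES = 3           # assumption: hypothetical number of text classes
VOCAB_SIZE = 50           # assumption: toy vocabulary size

rng = random.Random(0)


def rand_matrix(rows, cols, scale=0.1):
    """Return a rows x cols matrix of small random values."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


# Randomly initialised, untrained parameters (illustration only).
embedding = rand_matrix(VOCAB_SIZE, EMBED_DIM)
# Each kernel of size k is stored as a flat vector of length k * EMBED_DIM.
kernels = {k: rand_matrix(NUM_KERNELS, k * EMBED_DIM) for k in KERNEL_SIZES}
fc_weights = rand_matrix(NUM_CLASSES, NUM_KERNELS * len(KERNEL_SIZES))
fc_bias = [0.0] * NUM_CLASSES


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pooled_features(token_ids):
    """Steps 1-3: embed the sentence, convolve, and max-pool each feature."""
    x = [embedding[t] for t in token_ids]  # x_{1:n}
    pooled = []
    for k in KERNEL_SIZES:
        # Flatten every window of k consecutive word vectors once per size.
        windows = [[v for vec in x[i:i + k] for v in vec]
                   for i in range(len(x) - k + 1)]
        for w in kernels[k]:
            # Feature c: kernel response at each window (ReLU is an assumption).
            c = [max(0.0, sum(a * b for a, b in zip(w, win))) for win in windows]
            pooled.append(max(c))  # step 3: keep the maximum value of c
    return pooled


def classify(token_ids):
    """Step 4: concatenated features -> fully connected layer -> Softmax."""
    feats = pooled_features(token_ids)
    logits = [sum(w * f for w, f in zip(row, feats)) + b
              for row, b in zip(fc_weights, fc_bias)]
    return softmax(logits)


# Token ids are hypothetical; the sentence must have at least 5 tokens.
probs = classify([1, 5, 7, 2, 9, 11, 3, 4])
```

With three kernel sizes and 100 kernels each, the concatenated feature vector has 300 entries, and `probs` holds one probability per class. A practical version would use a deep learning framework and learn the parameters from labelled sentences.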
