Neural network recognition model training method and device, server and storage medium

A technology for neural network recognition and model training

Examples

Embodiment 1

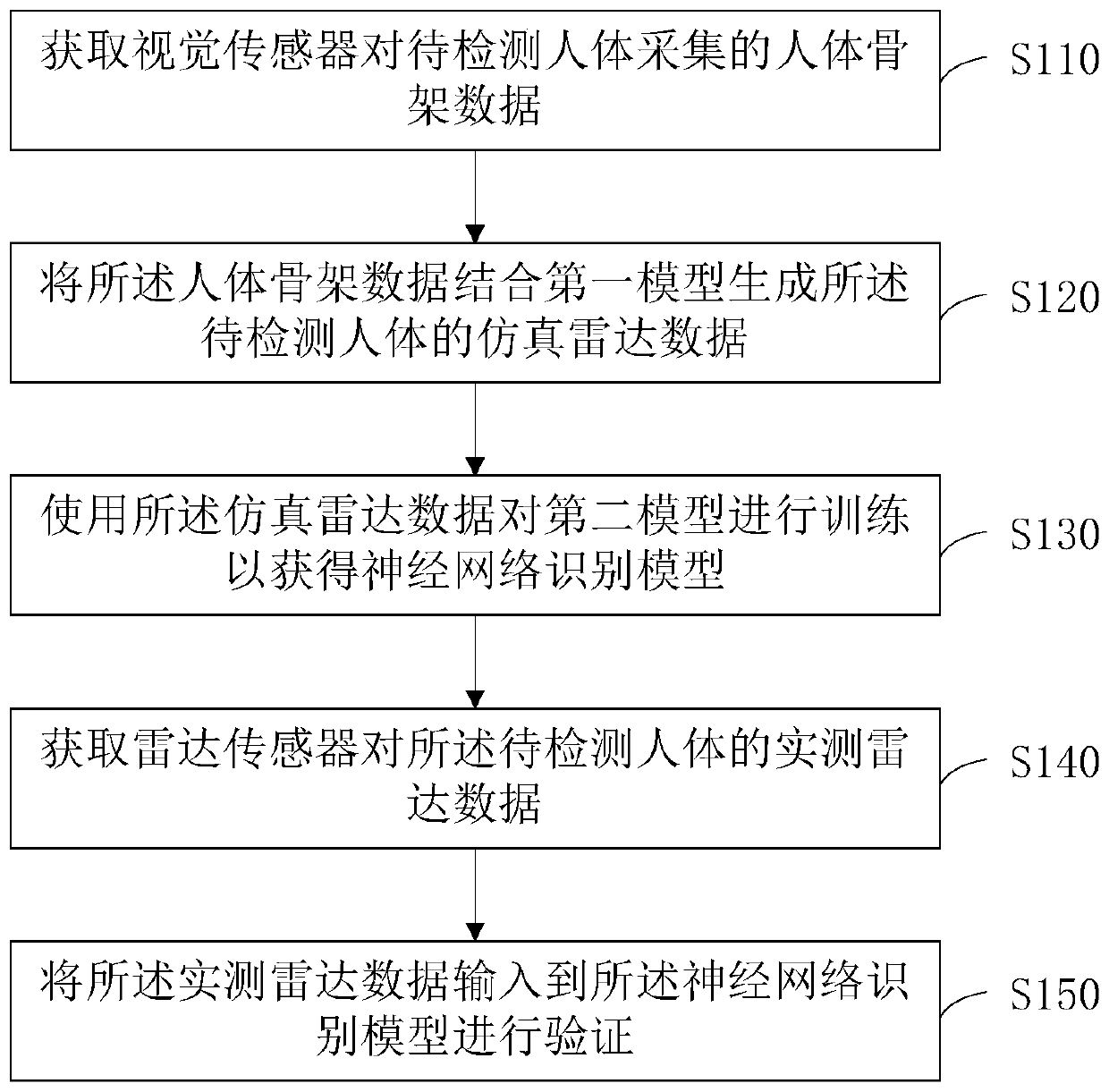

[0032] Figure 1 is a flow chart of the neural network recognition model training method provided by Embodiment 1 of the present invention. This embodiment is applicable to training a neural network recognition model. The method specifically includes the following steps:

[0033] S110. Obtain human skeleton data collected by the visual sensor from the human body to be detected;

[0034] In this embodiment, the visual sensor is an instrument that uses optical elements and imaging devices to obtain image information about the external environment; its performance is usually described by its image resolution. The visual sensor of this embodiment, the Kinect V2, uses not only optical elements but also a depth sensor, an infrared emitter, and similar components to obtain depth information. For example, the Kinect V2 sensor is a 3D somatosensory camera that provides functions such as real-time motion capture, image recognition, microphon...

Embodiment 2

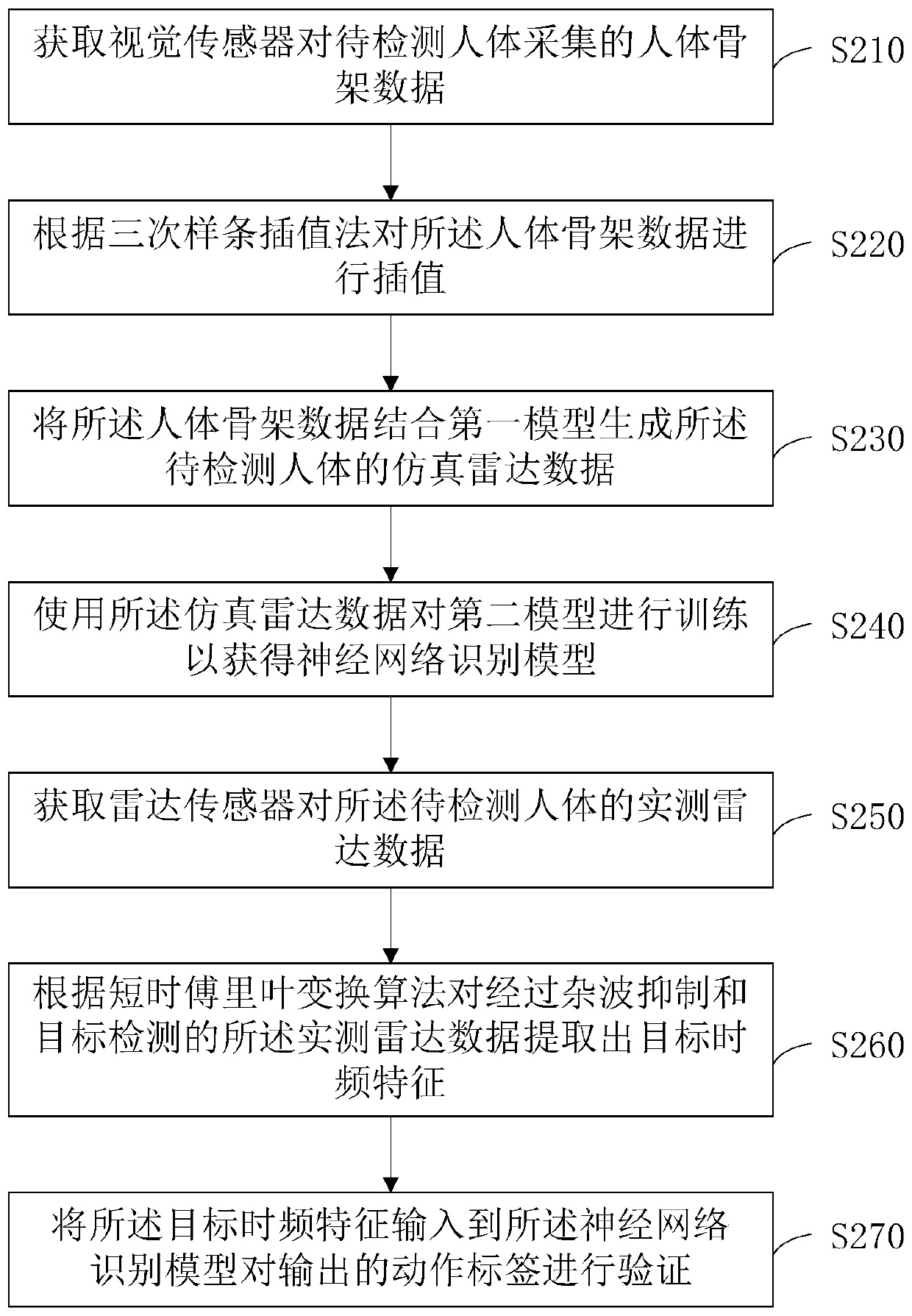

[0045] Figure 2 is a flow chart of the neural network recognition model training method provided by Embodiment 2 of the present invention. This embodiment is a further optimization of the above embodiments. The method specifically includes:

[0046] S210. Acquire human skeleton data collected by the visual sensor from the human body to be detected;

[0047] In this embodiment, the visual sensor is an instrument that uses optical elements and imaging devices to obtain image information about the external environment; its performance is usually described by its image resolution. The visual sensor of this embodiment, the Kinect V2, uses not only optical elements but also a depth sensor, an infrared emitter, and similar components to obtain depth information. It provides functions such as real-time motion capture, image recognition, microphone input, voice recognition, social interaction, and skeleton tracking. The computer can use vision tec...

Embodiment 3

[0071] Figure 4 is a schematic structural diagram of the neural network recognition model training device 300 provided by Embodiment 3 of the present invention. This embodiment is applicable to training a neural network recognition model, and the specific structure is as follows:

[0072] A human skeleton data acquisition module 310, configured to obtain the human skeleton data collected by the visual sensor from the human body to be detected;

[0073] A simulation data generation module 320, configured to combine the human skeleton data with the first model to generate simulated radar data of the human body to be detected;

[0074] A recognition model training module 330, configured to use the simulated radar data to train a second model to obtain a neural network recognition model;

[0075] A measured data acquisition module 340, configured to acquire measured radar data collected by the radar sensor from the human body to be detected;

[0076] The recognition mo...
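The data flow through modules 310 to 340 listed above can be sketched in Python as follows. This is only an illustrative sketch, not the patented implementation: the class `TrainingDevice`, the type aliases, and the helpers `toy_first_model`, `toy_trainer`, and `build_toy_device` are all hypothetical names introduced here. The text above does not specify the first model, the second model, or any data layout, so the stand-ins below are simple placeholders (joint range from the origin, and a per-feature mean). Because the description of what happens to the measured radar data is cut off, the sketch only acquires that data and returns it.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical data layouts; the source text does not define them.
Joint = Tuple[float, float, float]   # (x, y, z) position of one joint
SkeletonFrame = List[Joint]          # one frame of skeleton data
RadarSample = List[float]            # one radar feature vector


@dataclass
class TrainingDevice:
    """Illustrative stand-in for device 300; every callable is an assumption."""
    acquire_skeleton: Callable[[], List[SkeletonFrame]]        # module 310
    first_model: Callable[[SkeletonFrame], RadarSample]        # used by module 320
    train_second_model: Callable[[List[RadarSample]], object]  # module 330
    acquire_measured: Callable[[], List[RadarSample]]          # module 340

    def run(self):
        frames = self.acquire_skeleton()
        # Module 320: combine skeleton data with the first model.
        simulated = [self.first_model(frame) for frame in frames]
        # Module 330: train the second model on simulated radar data.
        model = self.train_second_model(simulated)
        # Module 340: acquire measured radar data (its later use is not shown).
        measured = self.acquire_measured()
        return model, simulated, measured


def toy_first_model(frame: SkeletonFrame) -> RadarSample:
    # Placeholder only: distance of each joint from a sensor at the origin.
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in frame]


def toy_trainer(samples: List[RadarSample]) -> List[float]:
    # Placeholder "model": the per-feature mean of the simulated samples.
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def build_toy_device() -> TrainingDevice:
    # Hard-coded toy inputs in place of real sensor readings.
    return TrainingDevice(
        acquire_skeleton=lambda: [
            [(0.0, 0.0, 2.0), (3.0, 4.0, 0.0)],
            [(0.0, 0.0, 4.0), (0.0, 3.0, 4.0)],
        ],
        first_model=toy_first_model,
        train_second_model=toy_trainer,
        acquire_measured=lambda: [[2.1, 5.2]],
    )
```

Calling `build_toy_device().run()` returns the placeholder model together with the simulated and measured samples. Passing the four stages in as callables keeps the sketch independent of any particular sensor SDK or network architecture, neither of which is specified in the text above.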
