Multi-modal fusion method and device based on normalized mutual information, medium and equipment

A multi-modal fusion method, applied in the field of data processing, that addresses problems such as difficult training, difficult inference, and the lack of a mature processing method for cross-modal composite data.


Examples

Embodiment 1

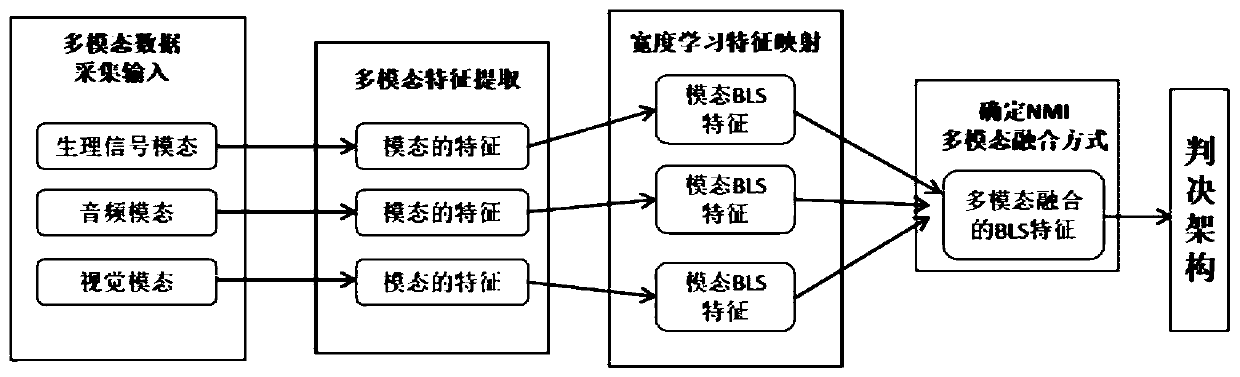

[0063] This embodiment provides a multimodal fusion method based on normalized mutual information which, as shown in Figure 1, includes the following steps:

[0064] Step S1: Acquire multiple modal data sets of the human body, in which every item of data in each modal data set is labeled. Across the modal data sets, the total amount of data is the same and the label classes and their division are the same; only the data modality differs.
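The consistency conditions in Step S1 can be checked before any training. The following is a minimal sketch, not the patent's implementation: the function name `check_modal_datasets`, the dictionary layout, and the modality names are hypothetical, and "the same label classes and division" is interpreted here as identical per-class sample counts in every modality.

```python
from collections import Counter


def check_modal_datasets(datasets):
    """Validate a dict mapping modality name -> (samples, labels).

    Assumed interpretation of Step S1: every modality must have the
    same number of samples per label class (hence the same total).
    Returns the shared total number of samples.
    """
    if len(datasets) < 2:
        raise ValueError("expected at least two modalities")

    reference_name = None
    reference_counts = None
    for name, (samples, labels) in datasets.items():
        # Every sample in a modality must carry a label.
        if len(samples) != len(labels):
            raise ValueError(f"{name}: samples and labels differ in length")
        counts = Counter(labels)
        if reference_counts is None:
            reference_name, reference_counts = name, counts
        elif counts != reference_counts:
            raise ValueError(
                f"{name}: label distribution differs from {reference_name}"
            )
    return sum(reference_counts.values())
```

A call such as `check_modal_datasets({"modality_a": (xs_a, ys_a), "modality_b": (xs_b, ys_b)})` returns the shared sample count, or raises `ValueError` when the modalities are not comparable.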

[0065] Step S2: Preprocess each modal data set, then perform feature extraction on each preprocessed data set to obtain the corresponding feature data that is useful for deciding the labels;

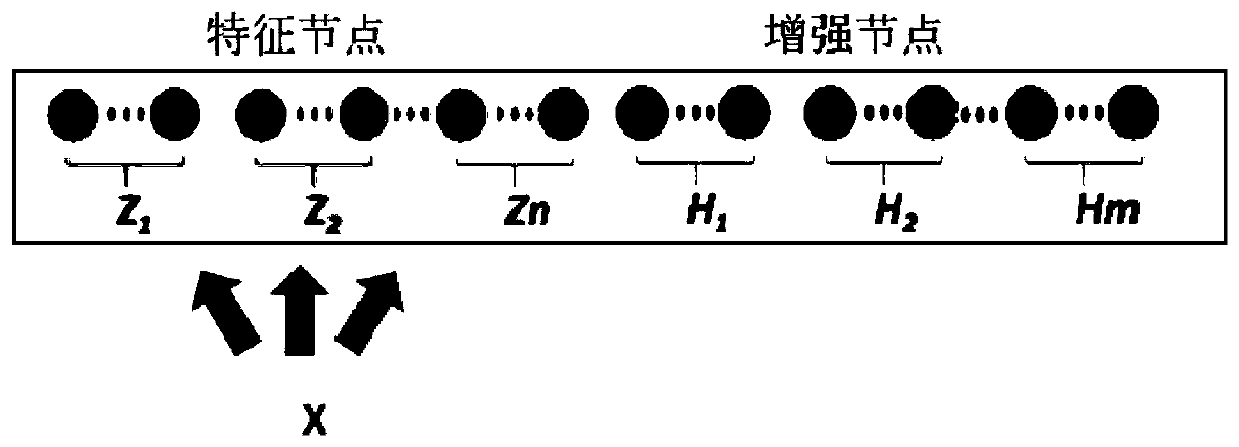

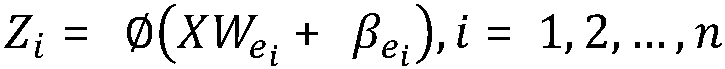

[0066] Step S3: Obtain the width learning feature map of each modal data set through the width learning system (broad learning system, BLS); determine the multi-modal fusion method based on normalized mutual information; use the feature data of the modal data sets to train and test the width learning system, to obtain the discriminant archit...
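For reference, normalized mutual information between two discrete sequences can be computed as sketched below. This is an illustration under stated assumptions rather than the patent's procedure: the visible text does not say which normalization is used or which quantities are compared, so the sketch assumes the arithmetic-mean variant NMI(X, Y) = 2·I(X; Y) / (H(X) + H(Y)), and the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(values):
    """Shannon entropy (natural log) of a discrete sequence."""
    n = len(values)
    return -sum((c / n) * math.log(c / n) for c in Counter(values).values())


def mutual_information(x, y):
    """Mutual information I(X; Y) estimated from paired discrete samples."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    # Sum over observed (a, b) pairs of p(a,b) * log(p(a,b) / (p(a) p(b))).
    return sum(
        (c / n) * math.log((c * n) / (px[a] * py[b]))
        for (a, b), c in pxy.items()
    )


def normalized_mutual_information(x, y):
    """NMI in [0, 1], assuming arithmetic-mean normalization."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    hx, hy = entropy(x), entropy(y)
    if hx == 0.0 or hy == 0.0:
        # A constant sequence carries no information to share.
        return 0.0
    return 2.0 * mutual_information(x, y) / (hx + hy)
```

Under this definition the score does not depend on how the classes are named: two sequences that induce the same partition score 1, and statistically independent sequences score 0.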

Embodiment 2

[0113] To implement the multimodal fusion method based on normalized mutual information described in Embodiment 1, this embodiment provides a multimodal fusion device based on normalized mutual information, including:

[0114] A multi-modal data set acquisition module, used to acquire multiple modal data sets collected from the human body, in which every item of data in each modal data set is labeled. Across the modal data sets, the total amount of data is the same and the label classes and their division are the same, but the data modalities differ;

[0115] A feature extraction module, used to preprocess each modal data set and to perform feature extraction on each preprocessed data set, so as to obtain the corresponding feature data that is useful for deciding the labels;

[0116] A width learning training and testing module, used to obtain the width learning feature map of each modal data set through the width learning system; determ...

Embodiment 3

[0119] This embodiment provides a storage medium that stores a computer program; when the computer program is executed by a processor, the processor executes the multimodal fusion method based on normalized mutual information.
