Adversarial defense method against adversarial attacks based on an artificial immune algorithm

This is an artificial immune algorithm and adversarial sample technology applied in the field of adversarial attacks based on artificial immune algorithms. It addresses the poor defense effect and low recognition accuracy of classifiers, and it achieves strong diversity and a strong defense effect.

Embodiment Construction

[0052] The present invention will be further described in detail below with reference to the accompanying drawings and embodiments. It should be noted that the following embodiments are intended to facilitate the understanding of the present invention, but do not limit it in any way.

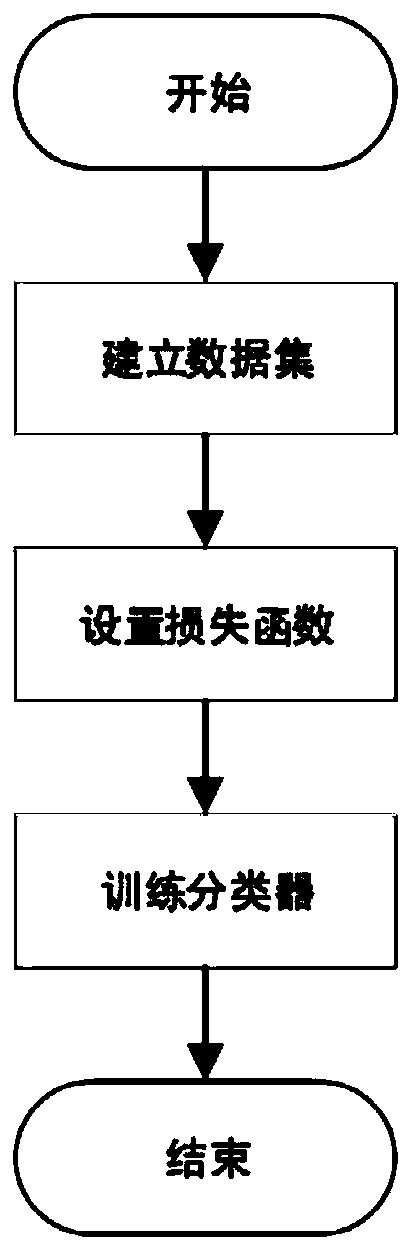

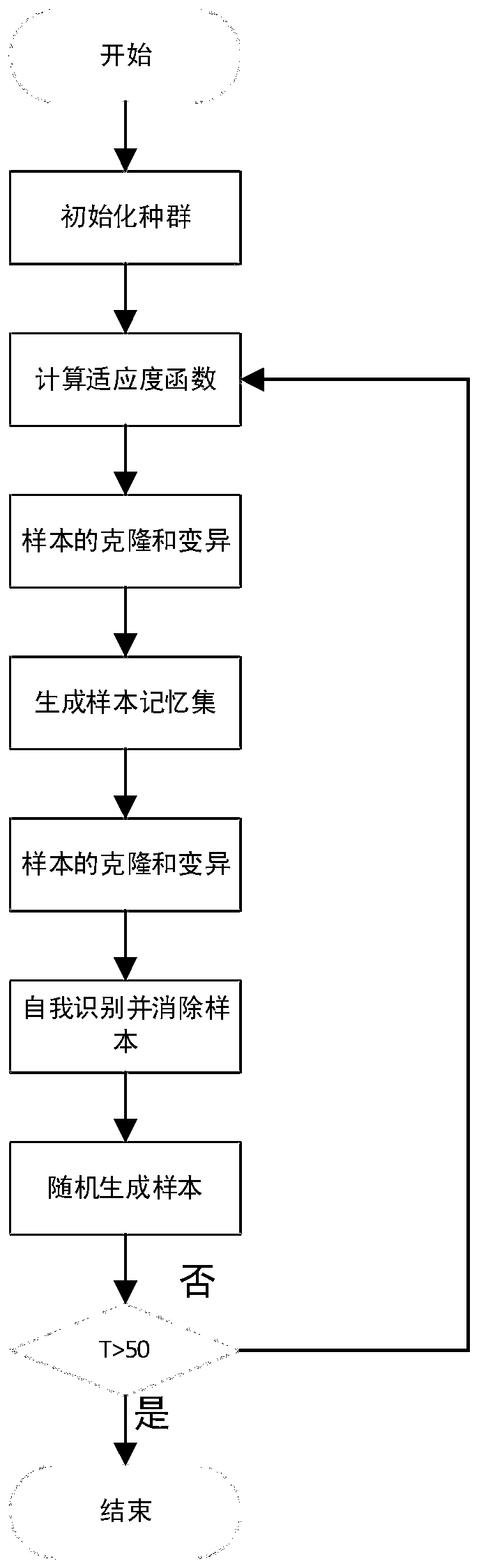

[0053] As shown in Figure 1 and Figure 2, this embodiment provides an adversarial defense method against adversarial attacks generated with an artificial immune algorithm. The method comprises the following steps:

[0054] 1) Establish the data set, which consists of a training set and a test set. The specific process is as follows:

[0055] 1.1) Select the CIFAR-10 data set as the normal (clean) sample data set. It consists of a training set of 50,000 images and a test set of 10,000 images.

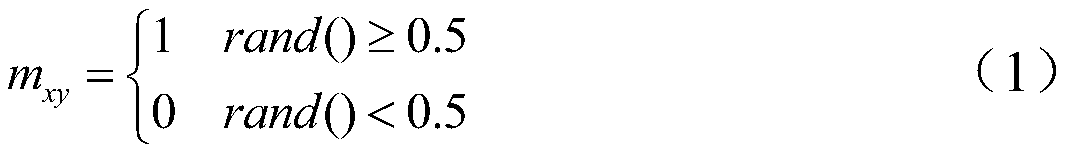

[0056] 1.2) Initialize the population: randomly add perturbation blocks to normal image samples to form N (N = 25 to 50) different adversarial sa...
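The population-initialization step (1.2) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented procedure. Images are assumed to be 32 by 32 RGB arrays scaled to [0, 1], which is the CIFAR-10 image format. The function name `init_population` and its `block_size`, `n_blocks` and `epsilon` parameters are hypothetical, because the excerpt does not give the size, number or magnitude of the perturbation blocks. Only the population size range N = 25 to 50 comes from the text.

```python
import numpy as np


def init_population(image, n, block_size=4, n_blocks=3, epsilon=8 / 255, rng=None):
    """Create n candidate adversarial samples from one clean image.

    Hypothetical sketch: each candidate receives `n_blocks` randomly placed
    square blocks of uniform noise in [-epsilon, epsilon]. block_size,
    n_blocks and epsilon are illustrative assumptions, not patent values.
    `image` is assumed to be an H x W x C float array scaled to [0, 1].
    """
    rng = np.random.default_rng() if rng is None else rng
    image = np.asarray(image, dtype=np.float32)
    h, w, c = image.shape
    population = np.empty((n, h, w, c), dtype=np.float32)
    for i in range(n):
        # Perturbation for this individual. Overlapping blocks overwrite
        # each other instead of accumulating, so |delta| <= epsilon everywhere.
        delta = np.zeros_like(image)
        for _ in range(n_blocks):
            top = rng.integers(0, h - block_size + 1)
            left = rng.integers(0, w - block_size + 1)
            delta[top:top + block_size, left:left + block_size, :] = rng.uniform(
                -epsilon, epsilon, size=(block_size, block_size, c)
            )
        # Keep pixel values inside the valid [0, 1] range.
        population[i] = np.clip(image + delta, 0.0, 1.0)
    return population


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random stand-in for one 32 x 32 RGB CIFAR-10 image.
    clean = rng.random((32, 32, 3), dtype=np.float32)
    n = int(rng.integers(25, 51))  # population size N drawn from [25, 50]
    population = init_population(clean, n, rng=rng)
    print(population.shape)
```

Overlapping blocks overwrite each other rather than being summed. This keeps every pixel's perturbation within `epsilon`. Each block's position and noise are drawn independently, so every individual in the population carries a different perturbation pattern. This is one simple way to obtain the population diversity the method relies on.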
