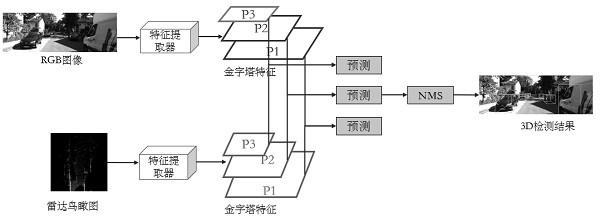

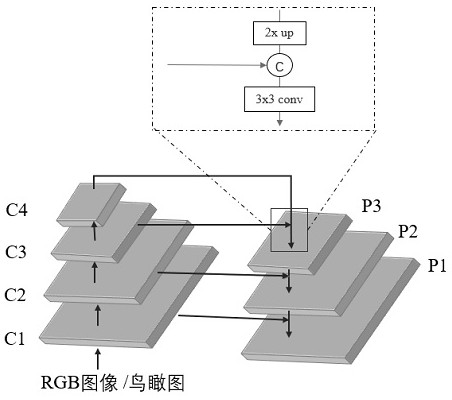

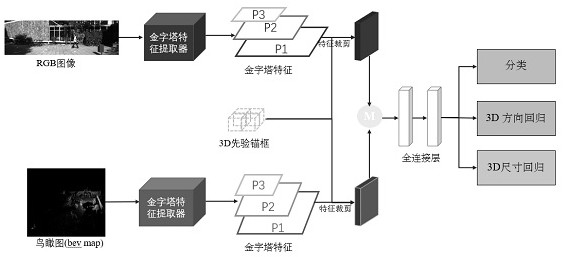

Multi-scale three-dimensional target detection method based on feature pyramid network

A technology of feature pyramid and detection method, applied in the field of computer vision

Embodiment Construction

[0015] The implementation of the method is described in further detail below:

[0016] 1. Convert the point cloud into a six-channel bird's-eye view using a voxel grid with 0.1 m resolution. First, filter the point cloud: following the point cloud coordinate system defined by the KITTI benchmark, only points within [0, 70] × [-40, 40] × [0, 2.5] m on the X, Y, and Z axes, respectively, are kept. The grid is then divided evenly into 5 slices along the Z axis, corresponding to the first five channels of the bird's-eye view; each cell of a slice is encoded with the maximum height of all points that fall in it. The sixth channel holds the point density of each cell in the XY plane over the whole point cloud. It is given by a formula (not reproduced in this text) in which N is the number of points in the cell. This yields a bird's-eye view with dimensions (800, 700, 6). Because the 3D point cloud is represented as a regular bird's-eye view, a mature image feature extractor can be applied to it directly to obtain effective an...
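The encoding in step 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the patent's own implementation: the function name `point_cloud_to_bev` and its parameters are hypothetical, the Y extent is mapped to the 800-cell axis and the X extent to the 700-cell axis so that the output matches (800, 700, 6), and empty cells are assumed to be encoded as 0. Because the density formula is missing from the text, the sketch substitutes min(1, log(N + 1) / log(16)), a normalized-log form used in other bird's-eye-view encodings; both that form and the constant 16 are assumptions.

```python
import numpy as np

# Ranges and resolution as stated in the text (meters).
X_RANGE = (0.0, 70.0)
Y_RANGE = (-40.0, 40.0)
Z_RANGE = (0.0, 2.5)
RES = 0.1
N_SLICES = 5


def point_cloud_to_bev(points, density_norm=16.0):
    """Encode an (N, 3+) point array (x, y, z, ...) as an (800, 700, 6) map.

    Channels 0-4: maximum point height per cell in each of the 5 Z slices.
    Channel 5: per-cell density. The formula used here is an ASSUMPTION,
    since the source text omits it: min(1, log(N + 1) / log(density_norm)).
    """
    pts = np.asarray(points, dtype=np.float64)[:, :3]

    # Keep only the points inside the stated ranges.
    keep = (
        (pts[:, 0] >= X_RANGE[0]) & (pts[:, 0] < X_RANGE[1])
        & (pts[:, 1] >= Y_RANGE[0]) & (pts[:, 1] < Y_RANGE[1])
        & (pts[:, 2] >= Z_RANGE[0]) & (pts[:, 2] < Z_RANGE[1])
    )
    pts = pts[keep]

    n_rows = int(round((Y_RANGE[1] - Y_RANGE[0]) / RES))  # 800
    n_cols = int(round((X_RANGE[1] - X_RANGE[0]) / RES))  # 700

    # Cell and slice index of every remaining point.
    rows = np.floor((pts[:, 1] - Y_RANGE[0]) / RES).astype(int)
    cols = np.floor((pts[:, 0] - X_RANGE[0]) / RES).astype(int)
    rows = np.clip(rows, 0, n_rows - 1)
    cols = np.clip(cols, 0, n_cols - 1)
    slice_height = (Z_RANGE[1] - Z_RANGE[0]) / N_SLICES
    slices = np.floor((pts[:, 2] - Z_RANGE[0]) / slice_height).astype(int)
    slices = np.clip(slices, 0, N_SLICES - 1)

    bev = np.zeros((n_rows, n_cols, N_SLICES + 1), dtype=np.float32)

    # Height channels: unbuffered maximum over all points in a (cell, slice).
    heights = (pts[:, 2] - Z_RANGE[0]).astype(np.float32)
    np.maximum.at(bev, (rows, cols, slices), heights)

    # Density channel: count points per XY cell, then normalize (assumed form).
    counts = np.zeros((n_rows, n_cols), dtype=np.float64)
    np.add.at(counts, (rows, cols), 1.0)
    bev[:, :, N_SLICES] = np.minimum(1.0, np.log(counts + 1.0) / np.log(density_norm))

    return bev
```

Calling `point_cloud_to_bev(scan[:, :3])` on a point array `scan` would return an array that a 2D convolutional feature extractor can consume directly. `np.maximum.at` and `np.add.at` are used instead of plain fancy-index assignment because several points usually fall into the same cell, and buffered assignment would keep only one of them.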
